EME? CDM? DRM? CENC? IDK! What you need to build your own video player in the browser

What do all these abbreviations mean? What does it take to develop an open source player that can play videos from Amazon, Sky, and other platforms, whatever the provider? Sebastian Golasch explained how video streaming works at the HolyJS 2018 Piter conference. Below the cut: the video and a translation of his talk.

Sebastian Golasch is currently a developer at Deutsche Telekom. He worked with Java and PHP for a long time, then switched to JS, Python, and Rust. For the past seven years he has been working on the Qivicon smart home platform.

A bit about the history of streaming video

First, let's look at the history of the web and how we got from QuickTime to Netflix in 25 years. It all started in the 90s, when Apple created QuickTime. It came into use on the Internet in 1993-1994. At that time, the player could show video at a resolution of 156 × 116 pixels and 10 FPS, without hardware acceleration (using only the CPU). The format was aimed at 9600-baud dial-up connections, that is, 9600 bps including protocol overhead.

This was the era of the Netscape browser. Video in the browser did not look great, because it was not native to the web. Playback relied on external software (QuickTime again) with its own interface, embedded into the page with the embed tag.

Things got a little better when Macromedia released Shockwave Player (after Adobe acquired Macromedia, it became Adobe Flash Player). The first version of Shockwave Player came out in 1997, but video playback was added only in 2002.

It used the Sorenson Spark codec, a variant of H.263, optimized for low resolutions and small file sizes. What does that mean? For example, a 43-second video used to test Shockwave Player weighed only 560 KB. Of course, a film in that quality would not be pleasant to watch, but the technology was interesting for its time. However, as with QuickTime, Shockwave Player required installing additional software. The player had many security problems, but most importantly, video was still an add-on to the browser.

In 2007, Microsoft released Silverlight, which was somewhat like Flash. We won't dig deep, but all these solutions had one thing in common: they were a “black box”. Every player worked as a browser add-on, and you had no idea what was going on inside.

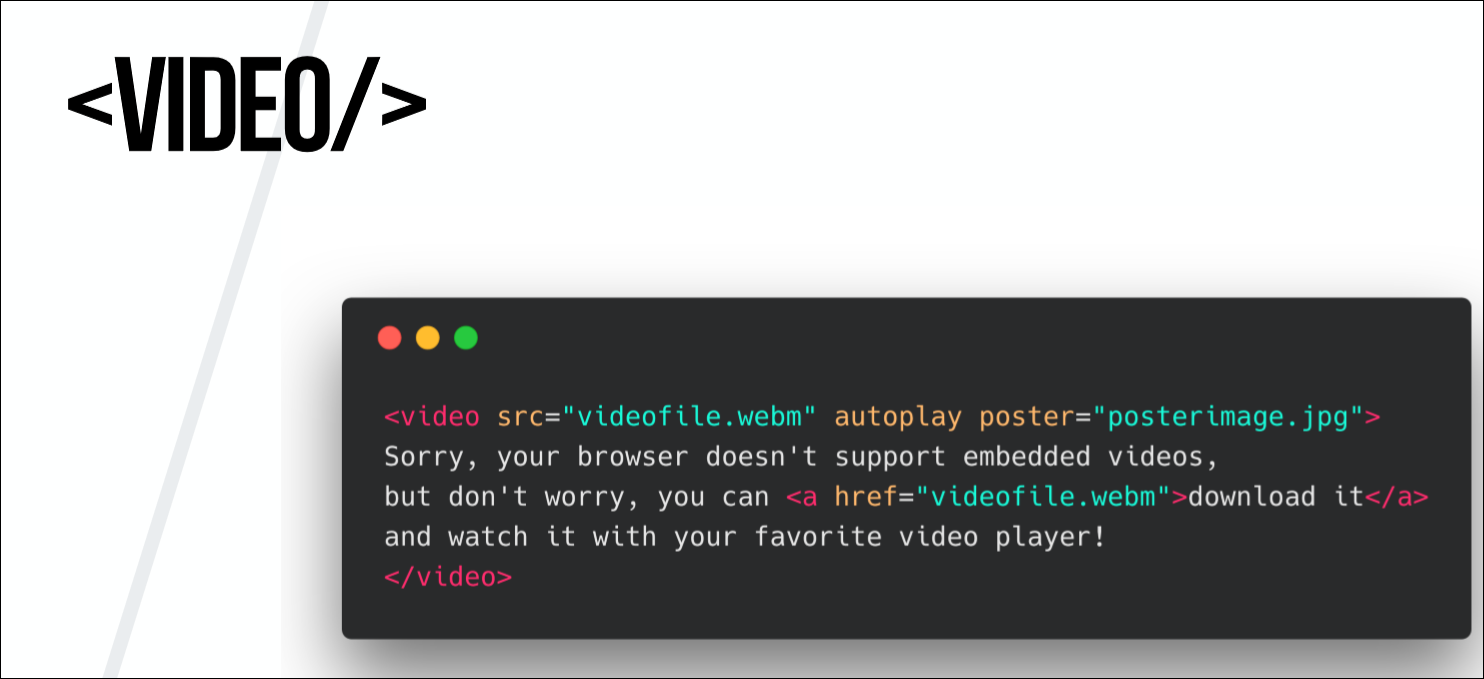

Element <

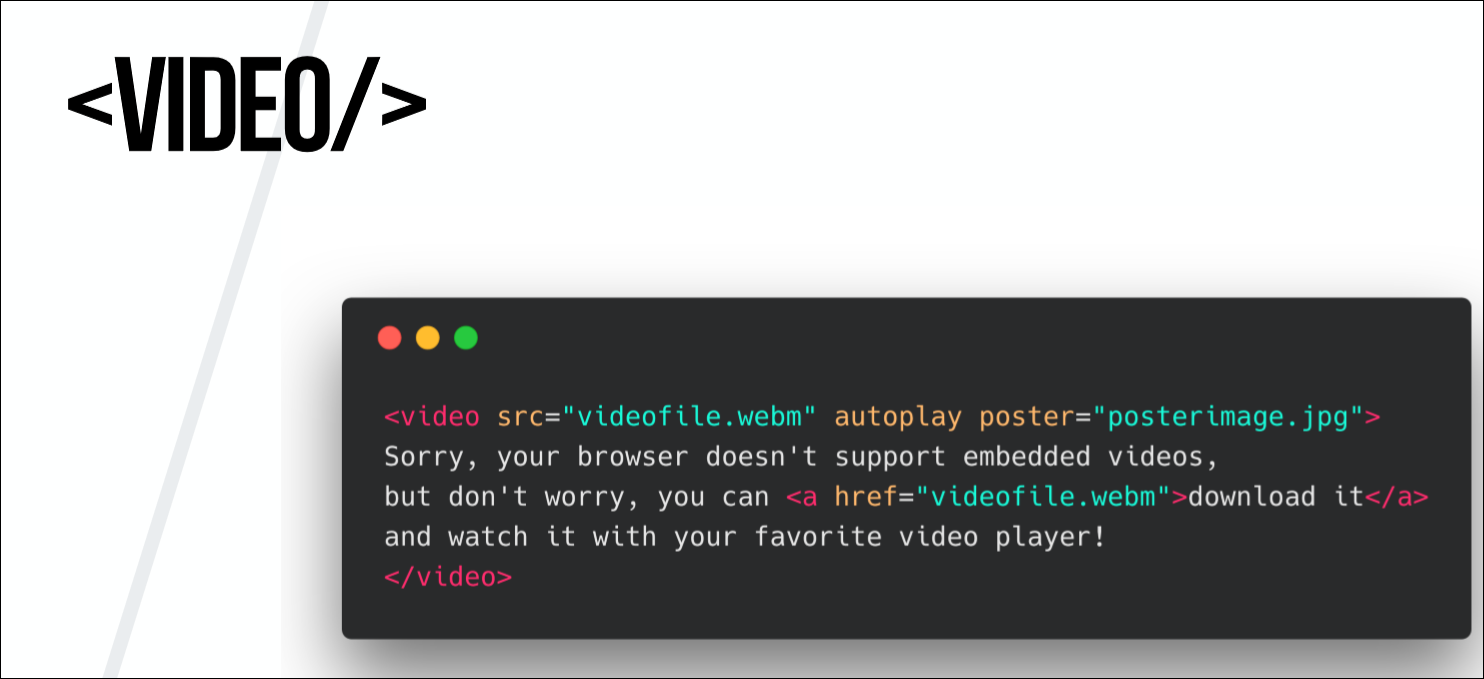

In 2007, Opera offered to use the <

A tag

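DRM

However, you can't just right-click a video on Netflix and choose “Save As”. The reason is DRM (Digital Restrictions Management). It is not a single technology or a single application that performs one task. It is an umbrella term for concepts such as:

- User authentication and encryption

- Content-specific encryption

- Definition of rights and enforcement of restrictions

- Revocation and renewal

- Output control and link protection

- Forensics and tracing of violators

- Key and license management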

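To understand what DRM is, we need to look at its ecosystem, that is, figure out which parties are involved. They are:

- Content owners sit at the top of the ecosystem: for example, Disney, MGM, or FIFA. These companies produce the content and hold the rights to it.

- DRM cores are the companies that provide DRM technology (for example, Google, Apple, and Microsoft). There are currently about 7-8 DRM technologies from different companies.

- Service providers develop the server software that encrypts video.

- Browsers, which are effectively the players.

- Content providers are companies such as Netflix, Amazon, and Sky. As a rule, they do not own the rights to the content; they license and distribute it.

- Chip and device vendors are also part of the ecosystem, because DRM is not only a software technology. Some companies (mostly Chinese) develop chips that encode and decode video.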

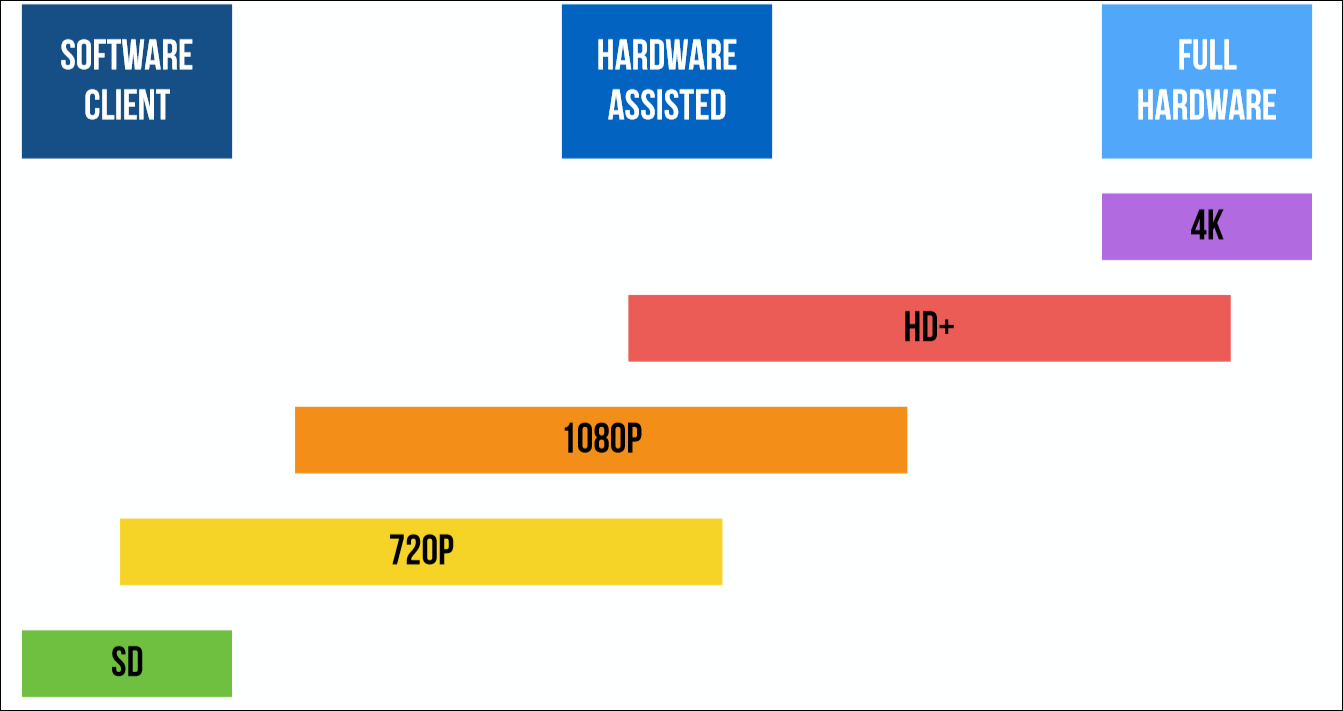

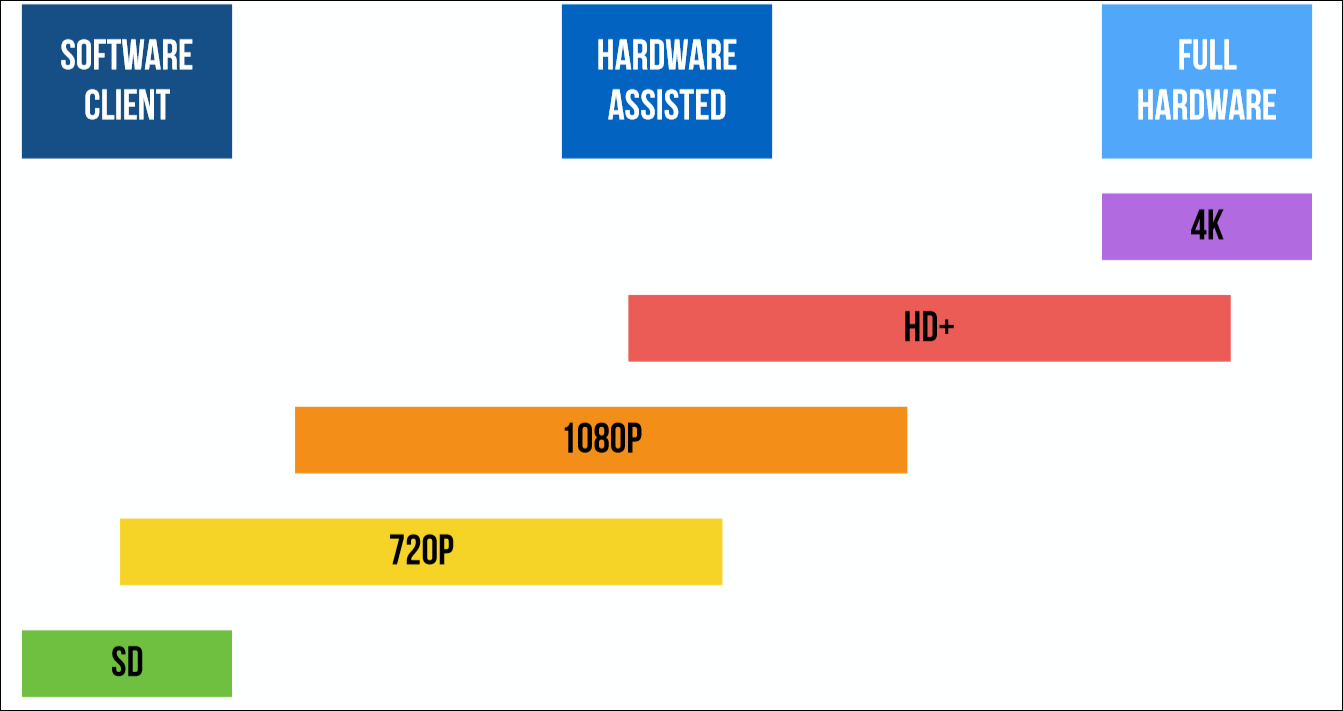

Have you ever wondered why Netflix video in a browser plays at a fairly low resolution (SD), while the same content on an Apple TV or an Android TV box plays in Full HD or 4K? DRM is responsible for this too. Content producers are always afraid of pirates stealing their content. So the less protected the environment in which the video is decoded, the lower the quality shown to the user. For example, if decoding is done in software (as in Chrome or Firefox), the video is shown in the lowest quality. In an environment that uses hardware for decoding (for example, when Android uses the GPU), there are fewer opportunities to copy content illegally, so the playback quality is higher. Finally,

In browsers, however, decoding is almost always done in software. Different browsers use different DRM systems. Chrome and Firefox use Widevine. Widevine is owned by Google, which licenses its DRM software. So, to decrypt video, Firefox downloads a DRM library from Google. In the browser you can see exactly where the download comes from.

Apple uses its own FairPlay system, created when the company introduced the first iPhones and iPads. Microsoft also uses its own technology, PlayReady, which is built right into Windows. Everywhere else, Widevine is used most often. It exists both as software and as a hardware solution: chips that decode video.

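CDM

CDM stands for Content Decryption Module. It is a piece of software or hardware that can work in several ways:

- Decrypt the video, after which it is rendered in the browser through the <Video/> tag.

- Decrypt and decode the video, then pass the raw video frames to the browser for playback.

- Decrypt and decode the video, then pass the raw video frames to the GPU for playback.

Despite GPU support, the second option is the one used most often (at least in Chrome and Firefox).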

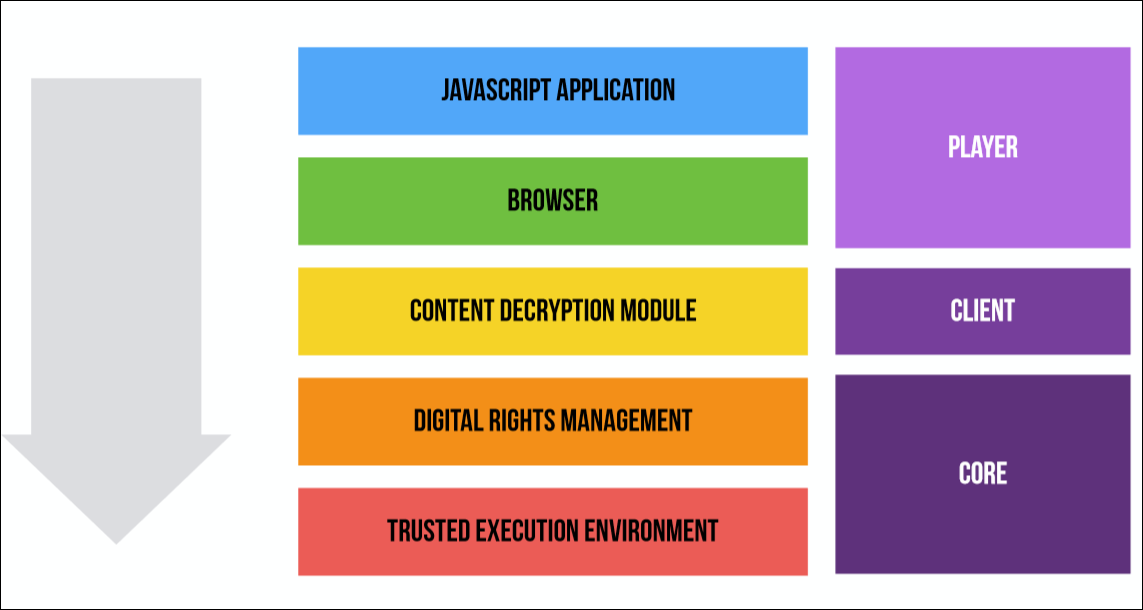

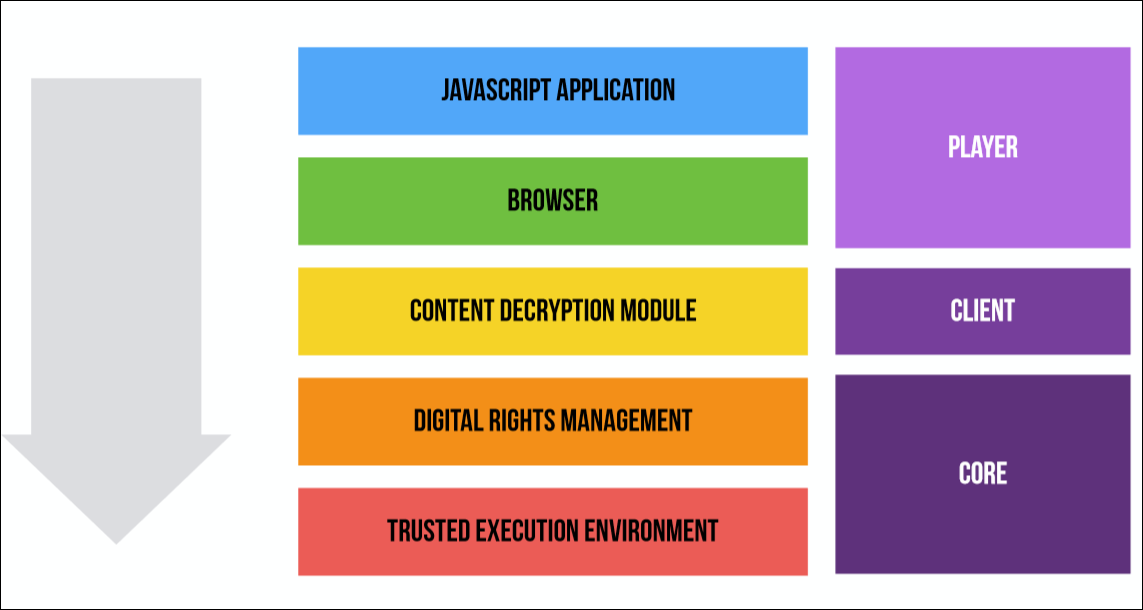

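Layers of decoding and decryption in the browser

So how does all this work together? To understand that, look at the decoding and decryption layers in the browser. They are:

- The JavaScript application, which tells the computer which video I want to watch.

- The browser, which is the player that plays the video content.

- The content decryption module.

- Digital rights management, which covers everything related to video decoding (I couldn't think of a better name, so that is what I called it).

- The trusted execution environment.

Here the first two components form the DRM player, the content decryption module is the DRM client, and the last two components are the DRM core.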

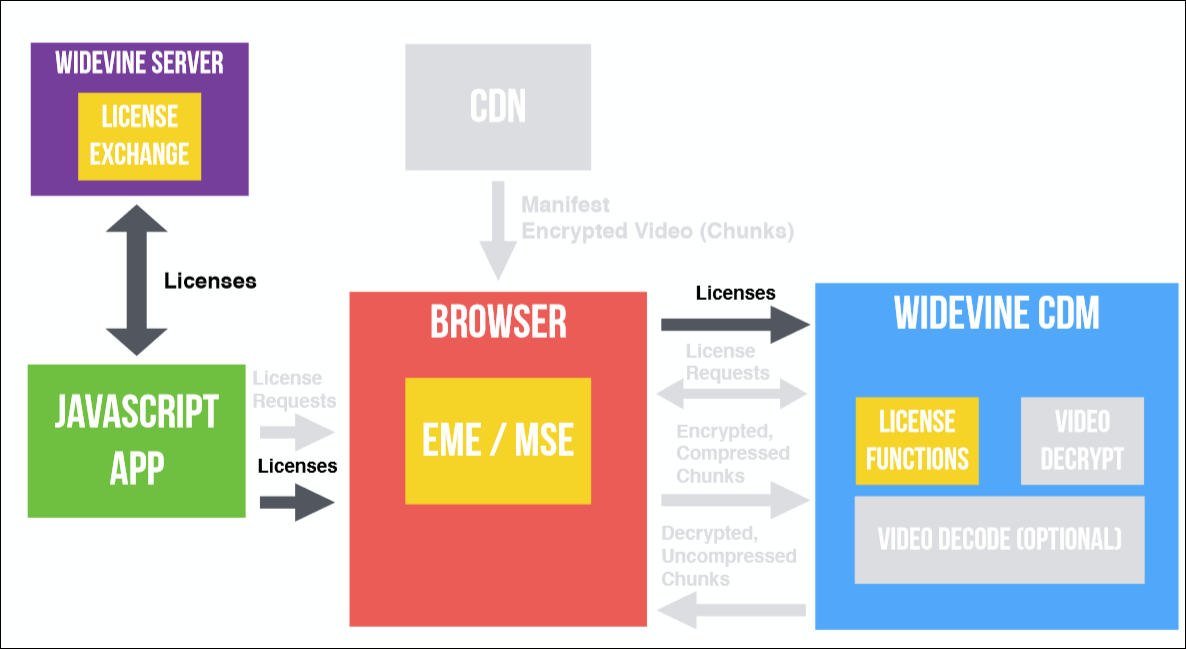

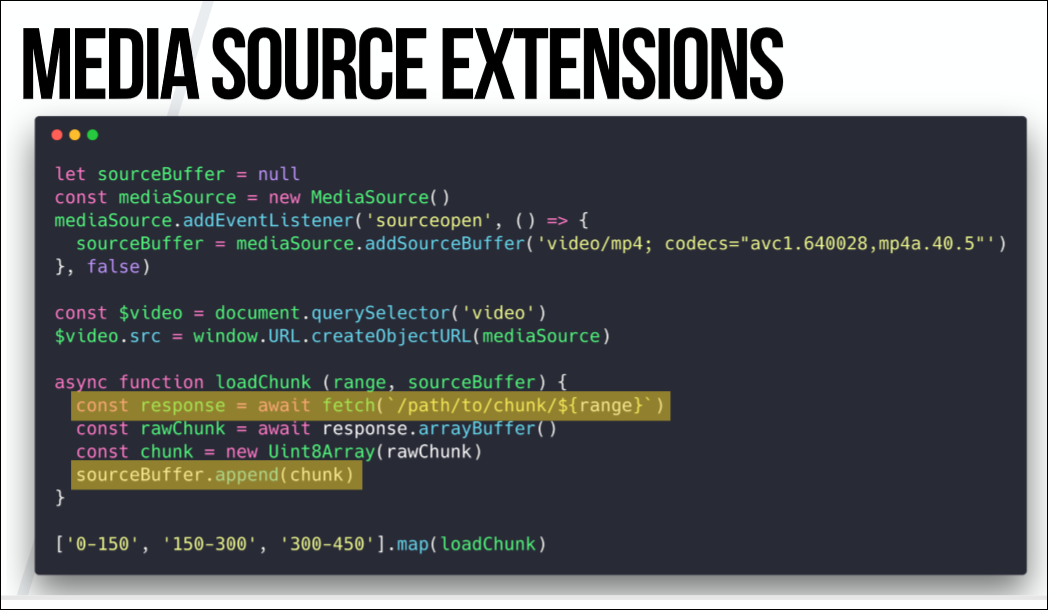

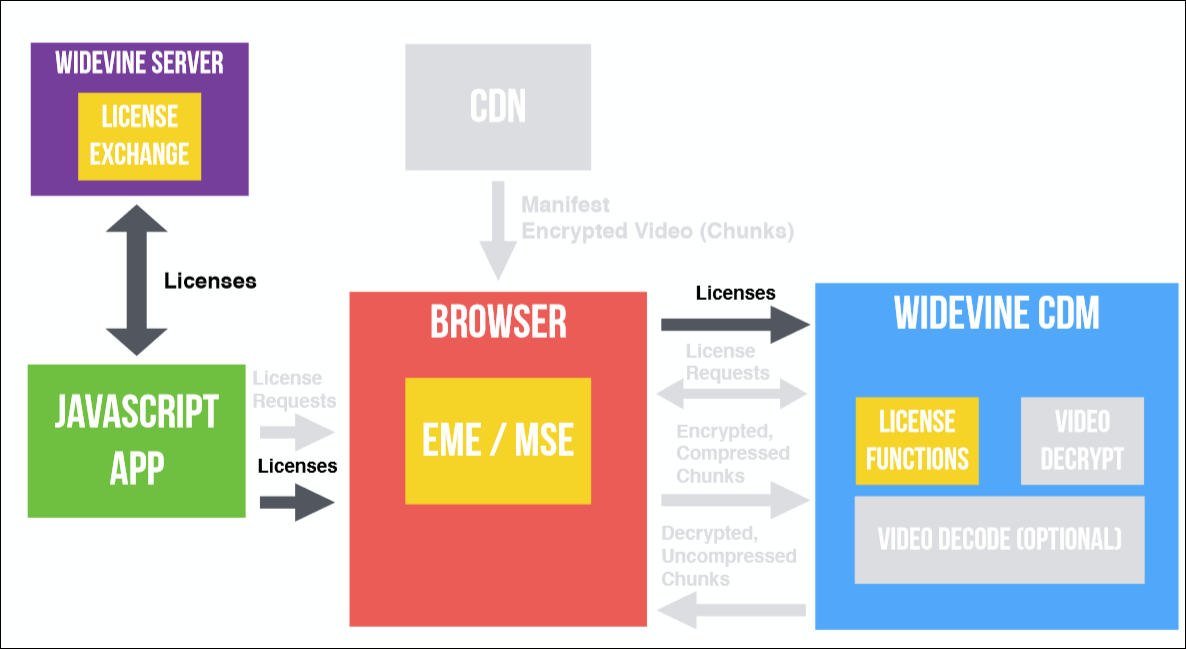

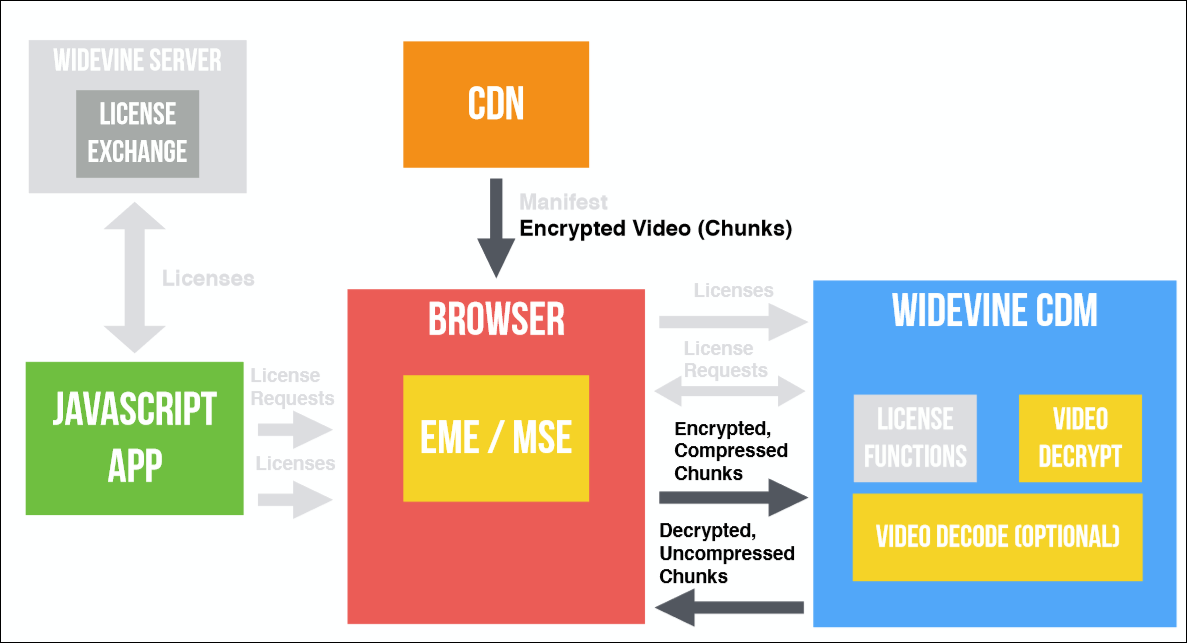

What happens when you play a video in the browser is shown in the image below. It is, admittedly, a little confusing: there are a lot of arrows and colors. I'll walk through it step by step, using real-life cases to make it clearer.

As an example, we will use Netflix. I wrote a debugging application.

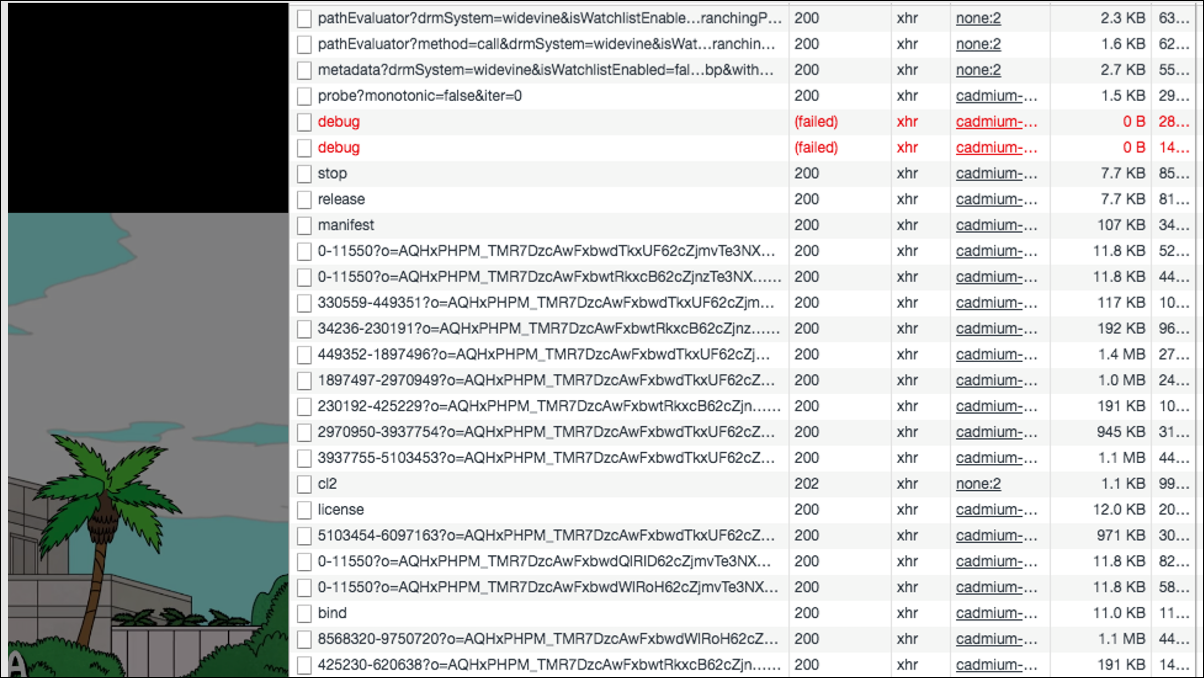

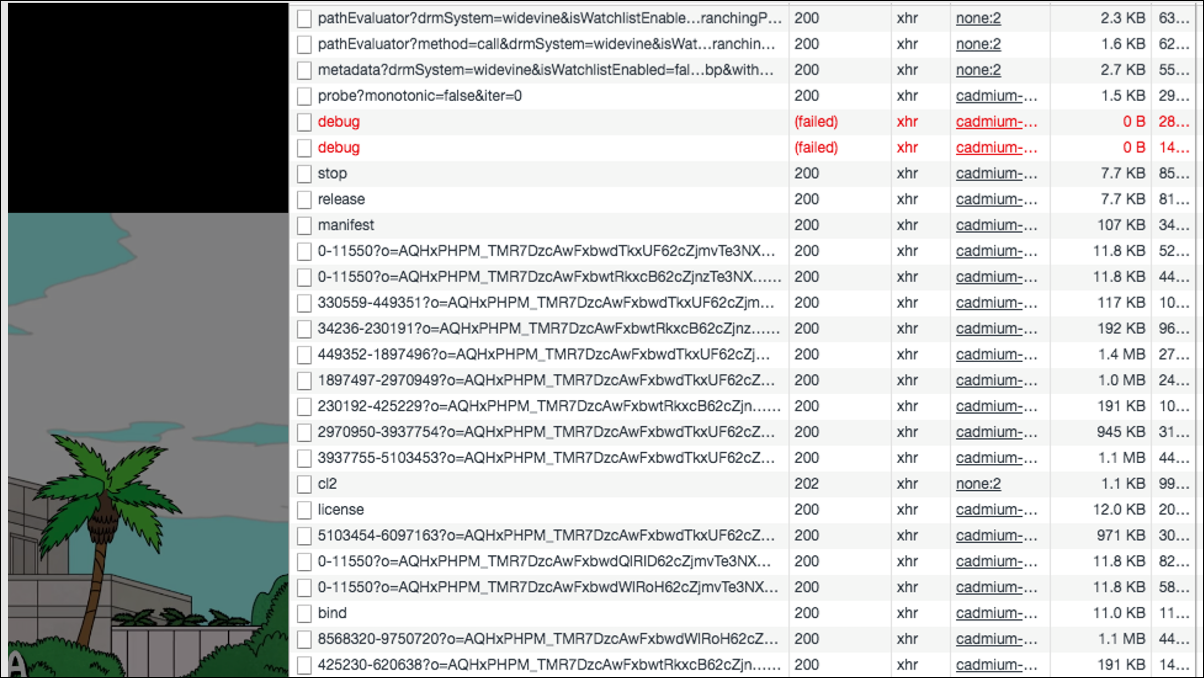

I started where I think any of you would have: I looked through the requests Netflix makes when I start a video, and saw a huge number of entries.

However, if you keep only the ones that are actually needed to play the video, there are just three: the manifest, the license, and the first video fragment.

The Netflix player is written in JavaScript and contains more than 76,000 lines of code, so of course I can't go through all of it. But I would like to show the main parts that are needed to play protected video.

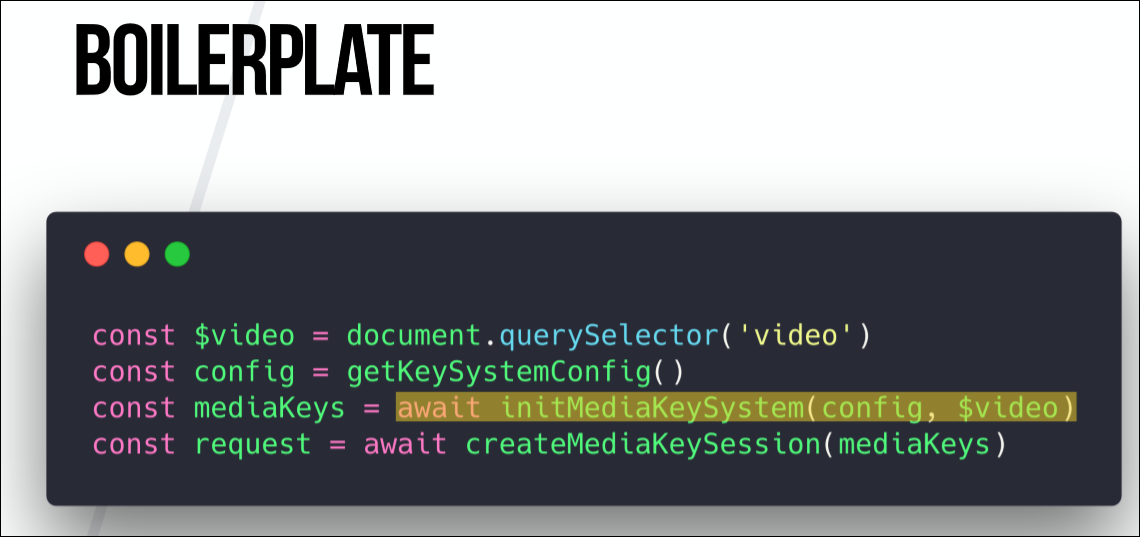

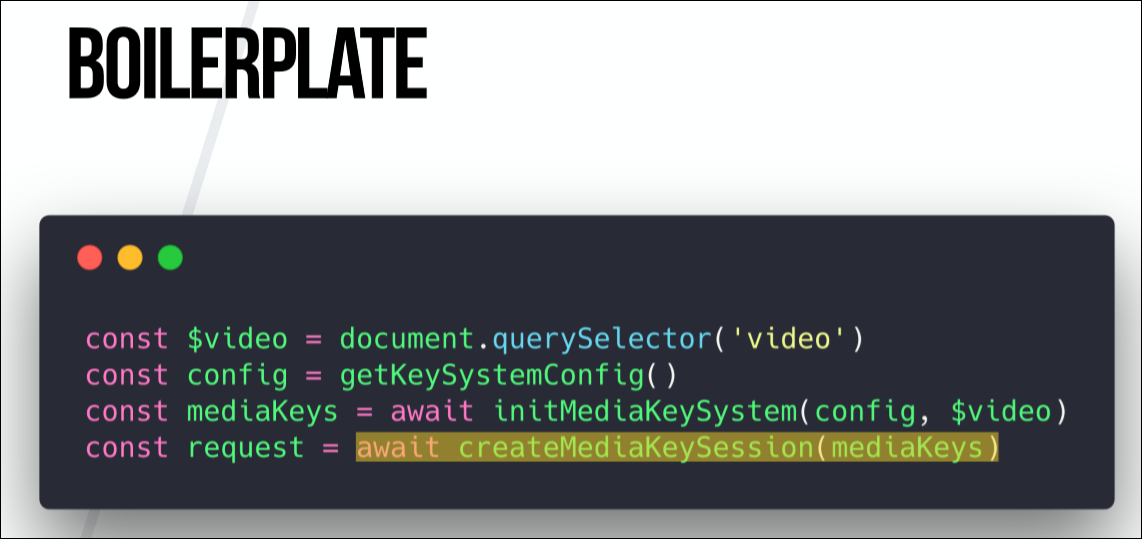

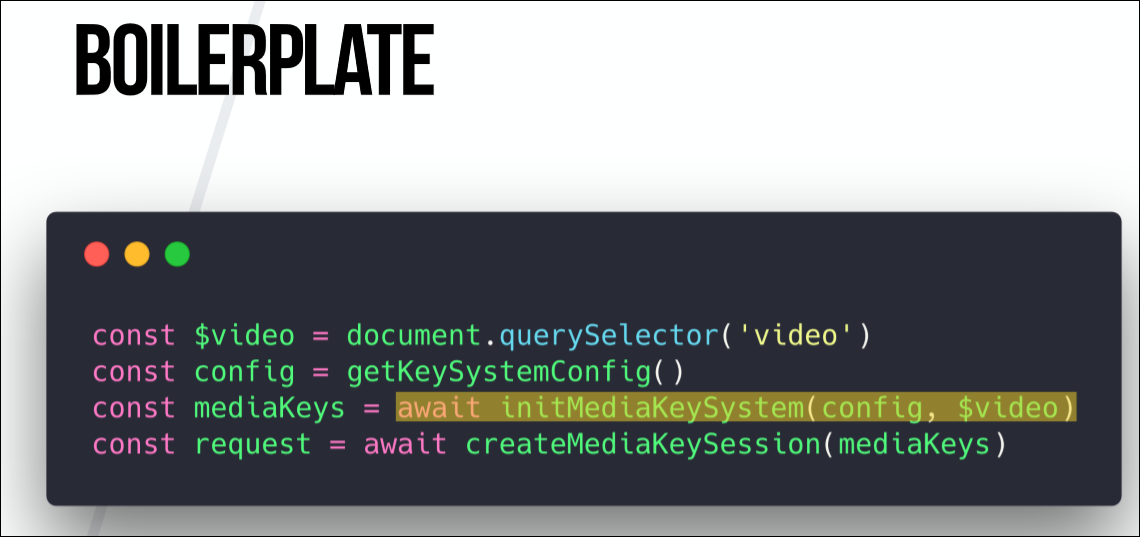

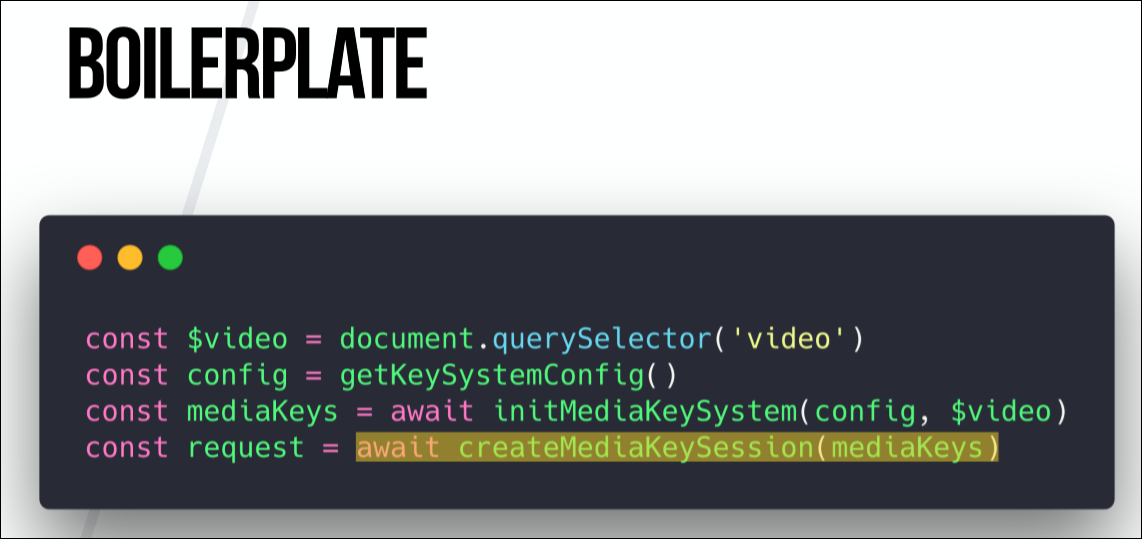

We will start with the template:

EME

But before we dive into the functions, we need to get to know one more technology: EME (Encrypted Media Extensions). EME does not decrypt or decode anything itself; it is just a browser API. It serves as the interface to the CDM, the key system, the license server, and the server that stores the content.

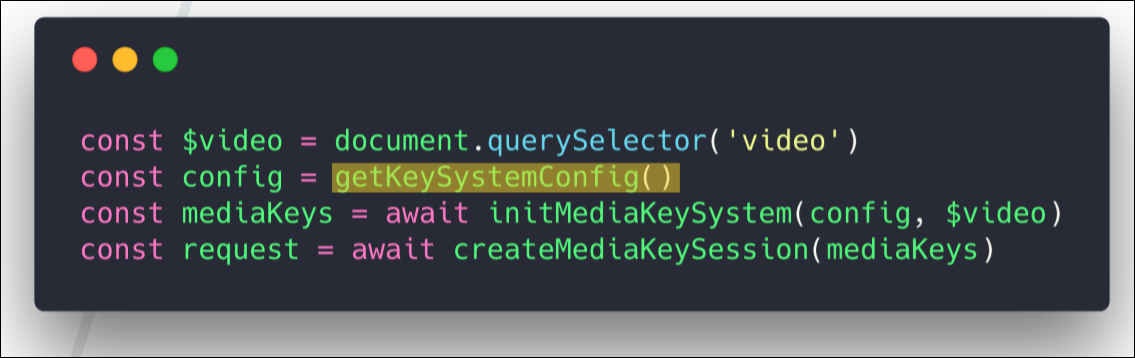

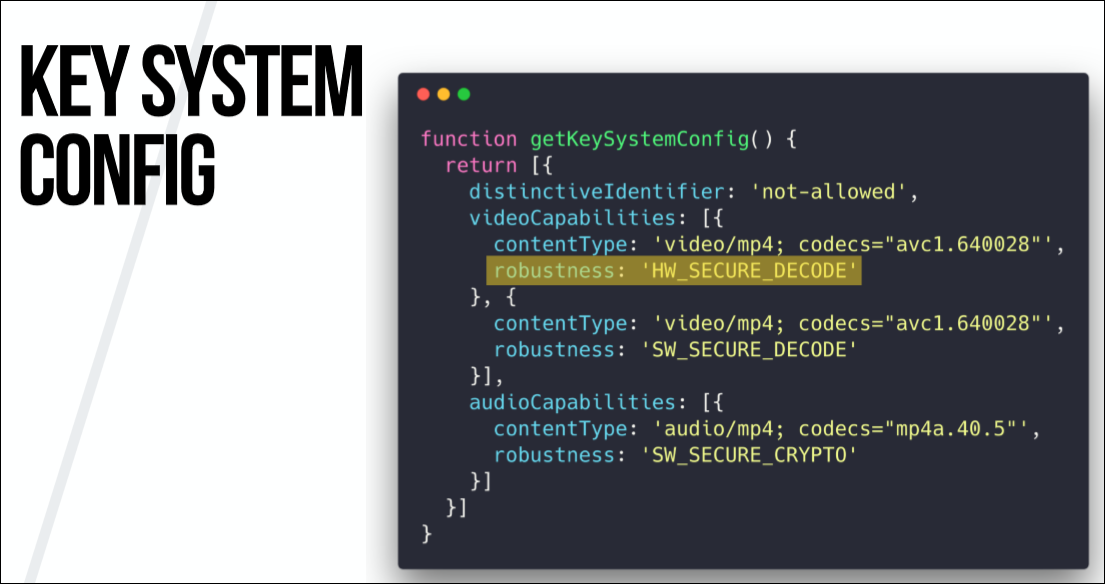

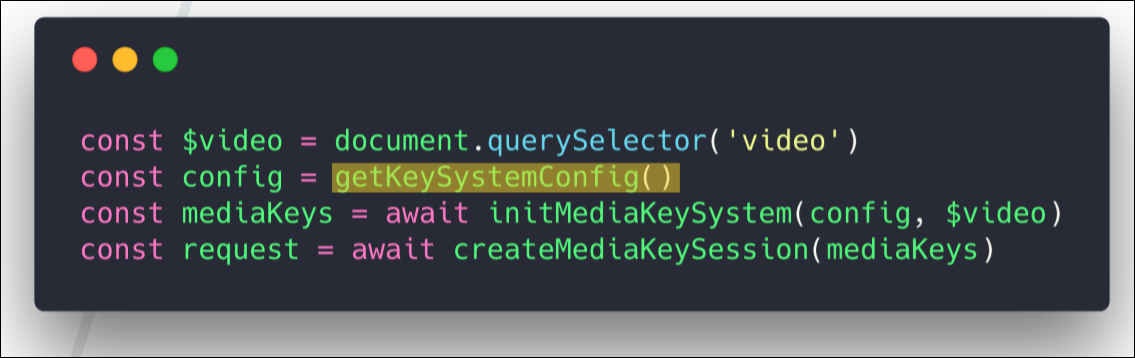

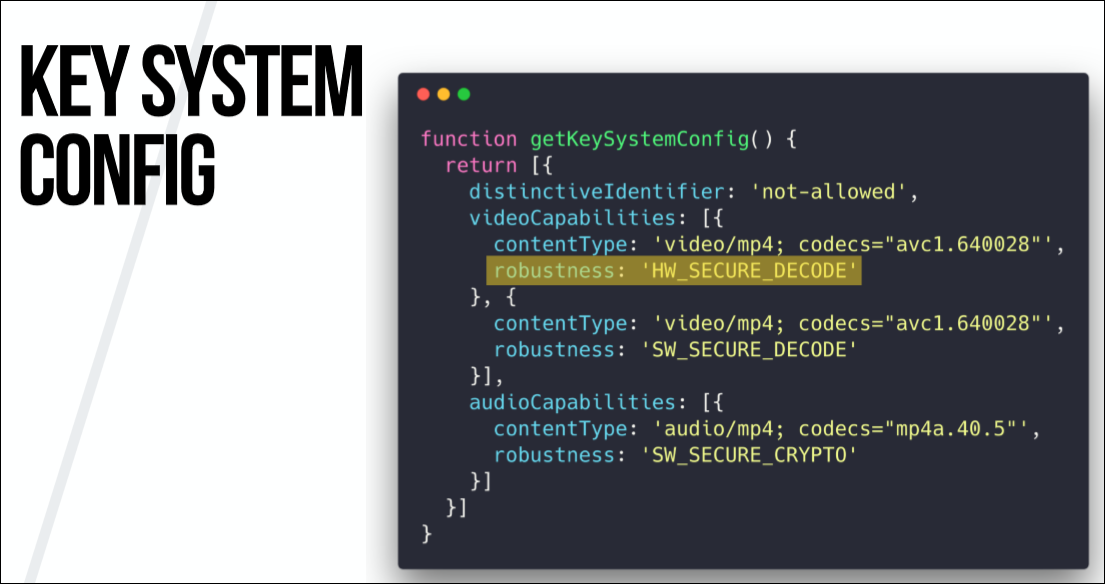

So let's start with getKeySystemConfig.

Keep in mind that it depends on the provider: the config I show here works for Netflix but would not work for, say, Amazon.

In this config we have to tell the backend what level of trusted execution environment we can offer: secure hardware decoding or secure software decoding. In other words, we tell the system which hardware and software will be used for playback, and this determines the quality of the content.
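As a rough illustration of what such a config can look like, here is a minimal sketch in the shape the EME API expects. The function name comes from the talk, but its `secureHardware` parameter, the codec strings, and the chosen robustness levels are assumptions made for illustration, not Netflix's actual values.

```javascript
// Hypothetical sketch: builds a list of MediaKeySystemConfiguration objects.
// `secureHardware` is an illustrative parameter, not part of any provider's API.
function getKeySystemConfig(secureHardware) {
  // Widevine robustness strings: hardware-backed vs software-only decoding.
  const videoRobustness = secureHardware ? 'HW_SECURE_DECODE' : 'SW_SECURE_DECODE';
  return [{
    initDataTypes: ['cenc'],
    persistentState: 'optional',
    distinctiveIdentifier: 'optional',
    sessionTypes: ['temporary'],
    videoCapabilities: [{
      contentType: 'video/mp4; codecs="avc1.640028"', // assumed codec string
      robustness: videoRobustness,
    }],
    audioCapabilities: [{
      contentType: 'audio/mp4; codecs="mp4a.40.2"', // assumed codec string
      robustness: 'SW_SECURE_CRYPTO',
    }],
  }];
}
```

The robustness value is what tells the other side how trustworthy the playback environment is, which is why it ends up determining the quality on offer.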

With the config ready, let's look at creating the initial MediaKeySystem.

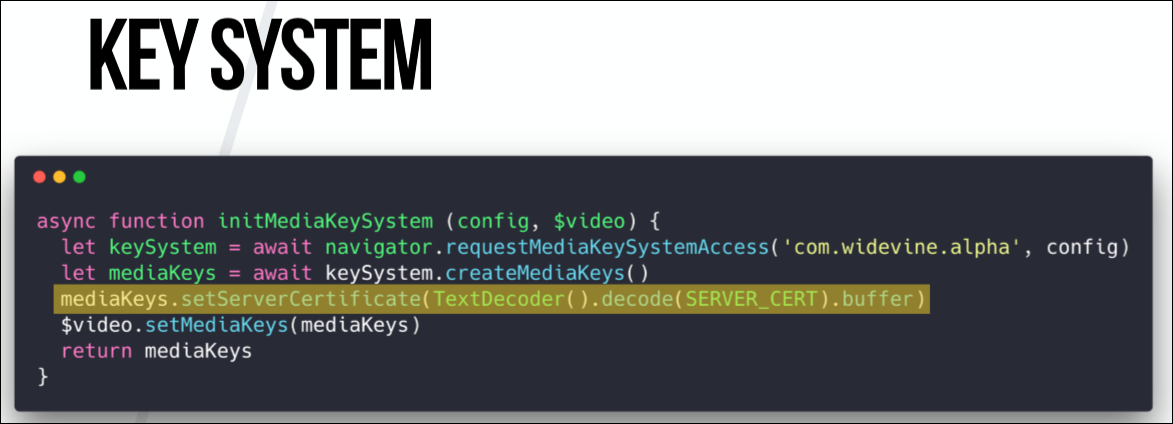

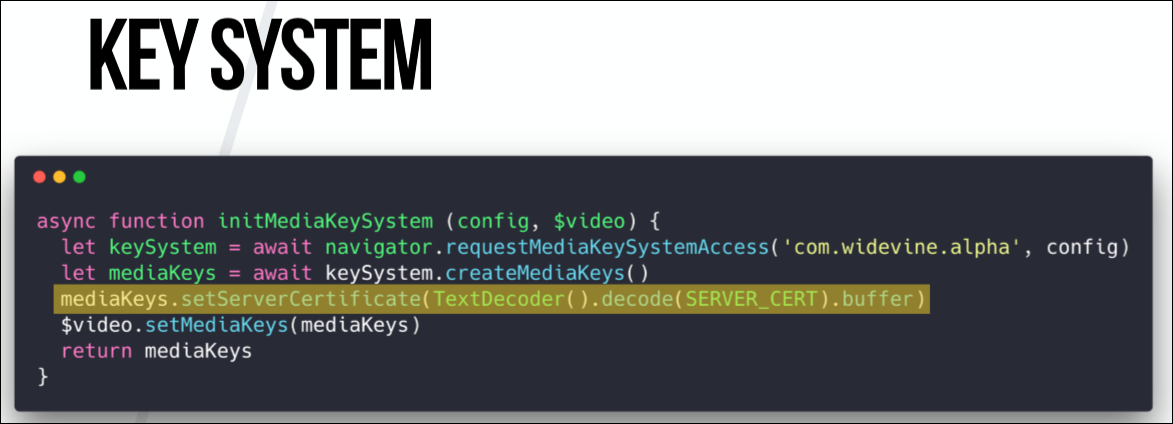

This is where the interaction with the content decryption module begins. You need to tell the API which DRM system, that is, which key system, you are using. In our case it is Widevine.
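In EME terms, this step might look like the sketch below. `navigator.requestMediaKeySystemAccess` and `createMediaKeys` are standard EME calls, and `com.widevine.alpha` is the Widevine key system string; the wrapper function and its `nav` parameter (passed in so the code can be exercised outside a browser) are assumptions.

```javascript
// Sketch: ask the browser for access to a key system, then create MediaKeys.
// `nav` is normally window.navigator; it is a parameter only for testability.
async function createMediaKeys(nav, keySystemConfig, keySystem = 'com.widevine.alpha') {
  // Rejects if the key system or every offered configuration is unsupported.
  const access = await nav.requestMediaKeySystemAccess(keySystem, keySystemConfig);
  return access.createMediaKeys();
}
```

In a page this would be called as `createMediaKeys(navigator, config)`, where `config` is the key system configuration array.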

The next step is not required by every system, but it is mandatory for Netflix; again, whether it is needed depends on the provider. We need to apply a server certificate to our mediaKeys. The server certificate is stored as plain text in Netflix's Cadmium.js file, where it can easily be copied. Once we apply it to mediaKeys, all communication between the license server and our browser is secured with this certificate.
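A minimal sketch of applying a certificate, assuming it is available as a base64 string. `setServerCertificate` is the standard EME method on MediaKeys; the helper names are made up for this example.

```javascript
// Decode a base64 string into bytes (atob exists in browsers and modern Node).
function base64ToBytes(base64) {
  return Uint8Array.from(atob(base64), (ch) => ch.charCodeAt(0));
}

// Sketch: install the provider's certificate on a MediaKeys object.
// The promise resolves to false when the CDM does not support certificates.
async function applyServerCertificate(mediaKeys, certificateBase64) {
  return mediaKeys.setServerCertificate(base64ToBytes(certificateBase64));
}
```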

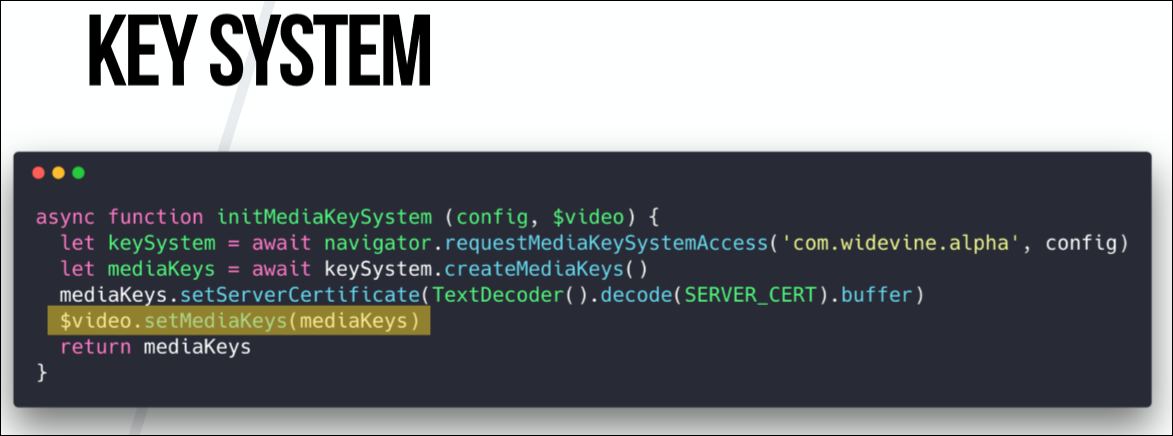

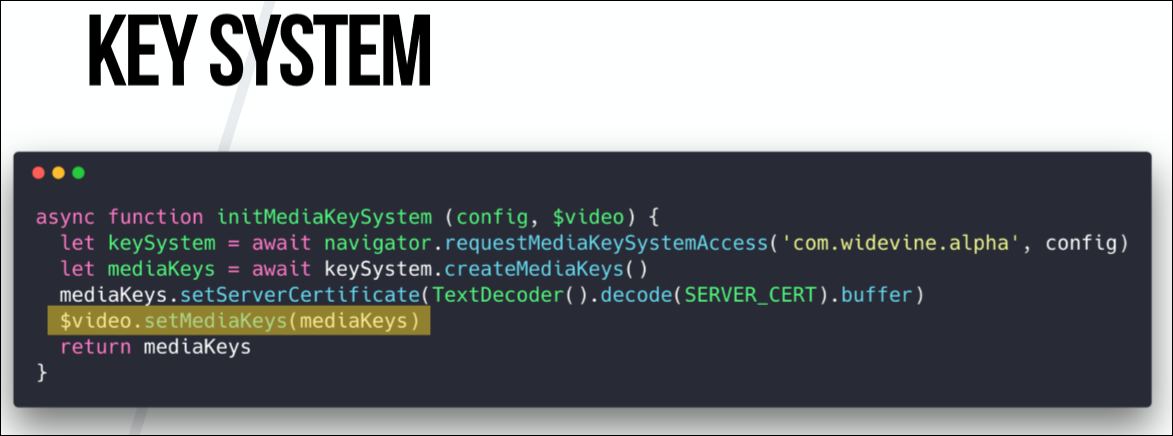

When this is done, we go back to the original video element and say: “OK, this is the key system we want to use, and this is the video tag. Let's bring you two together.”
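In code, bringing the two together is a single standard call, `setMediaKeys`, on the video element. The wrapper below is only an illustrative sketch.

```javascript
// Sketch: bind a MediaKeys object to a video element.
// Resolves with the same element once the keys are attached.
async function attachMediaKeys(video, mediaKeys) {
  await video.setMediaKeys(mediaKeys);
  return video;
}
```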

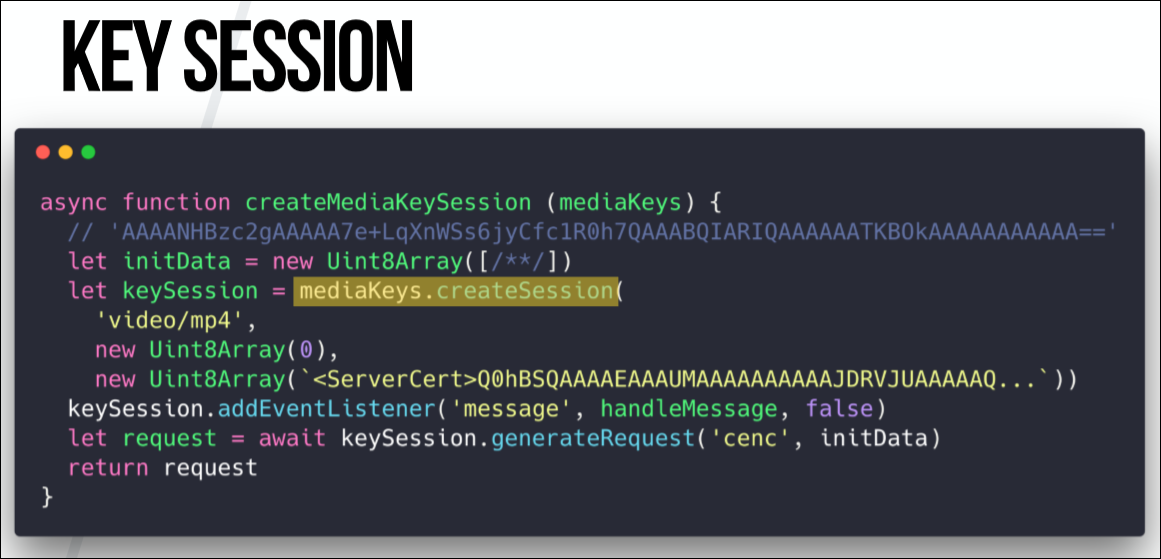

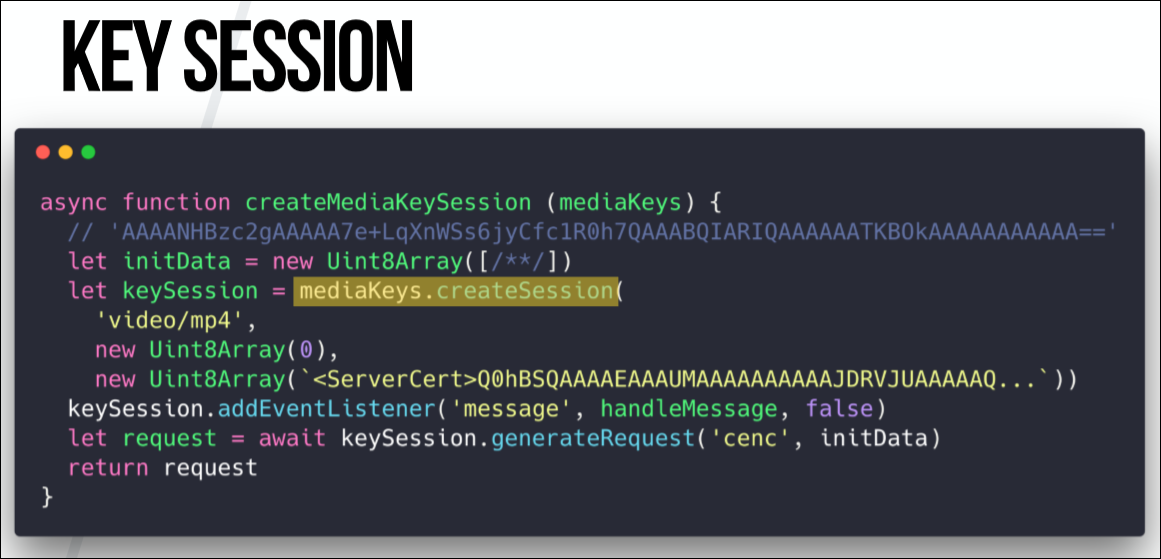

And here is the last function that is needed to configure the video system.

This is the DRM session, or MediaKeySession. It needs some data from the provider, which is passed to the decryption module that signs the requests. This data is also plain text; it is hidden behind several functions in the Netflix player file, and that is where I copied it from.

When we call createSession on the mediaKeys object, we need to say which kind of video we support; in this case it is mp4. This brings us back to exchanging messages with our CDM. Netflix also requires the server certificate to be supplied as base64, but again, all of this session configuration depends on the DRM system.

The last function here, keySession.generateRequest, makes the CDM build the license request in the background. The result is raw binary data that we have to send to the license server in order to get a valid license in return.

The interesting part here is cenc. It is an ISO encryption standard (Common Encryption) that defines the protection scheme for mp4 video. For WebM it is called differently, but it serves the same purpose.

handleMessage is the event listener that we registered. When the message event fires on keySession, we know we are ready to fetch a license from the server.

In this callback, we simply send a request to the license server, which returns some binary data (this too may differ from provider to provider). We use this data to update the current session, adding the license to it. That is, as soon as we have a valid license from the server, our CDM knows that we are allowed to decrypt and decode the video.
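The session and license exchange described above can be sketched as follows. `createSession`, `generateRequest`, the `message` event, and `update` are standard EME; `startLicenseSession` and the `requestLicense` callback are hypothetical names, and how the license server is actually called is provider-specific and deliberately left out.

```javascript
// Sketch of the license exchange. `requestLicense` is a hypothetical callback
// that sends the CDM's request bytes to the provider's license server and
// resolves with the license bytes.
function startLicenseSession(mediaKeys, initData, requestLicense) {
  const keySession = mediaKeys.createSession('temporary');

  // The CDM fires "message" once it has built the license request.
  keySession.addEventListener('message', async (event) => {
    const license = await requestLicense(event.message);
    // Hand the license to the CDM so it can decrypt the media.
    await keySession.update(license);
  });

  // 'cenc' is the init data type for MP4 (ISO Common Encryption).
  return keySession.generateRequest('cenc', initData).then(() => keySession);
}
```

A real player would also handle errors from the license request and react to key status changes; this sketch covers only the happy path.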

If we map this onto the diagram below, we get the following: we want to play a video, and the JavaScript application says, “Hello, browser! I want to play a video!” It then uses Encrypted Media Extensions to ask the license functions in the Widevine CDM for a license request. That request is returned to the browser, we exchange it for a valid license at the license server, and then we pass the license back to the CDM. This process was shown in the code above.

But note that we have not yet played a single second of video; all of this is needed just to be able to play some video later.

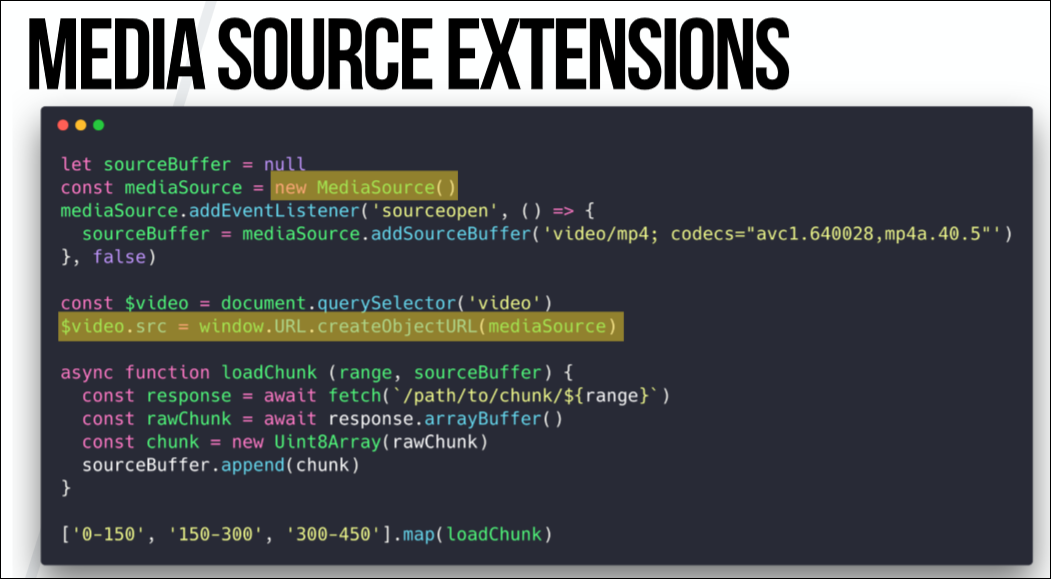

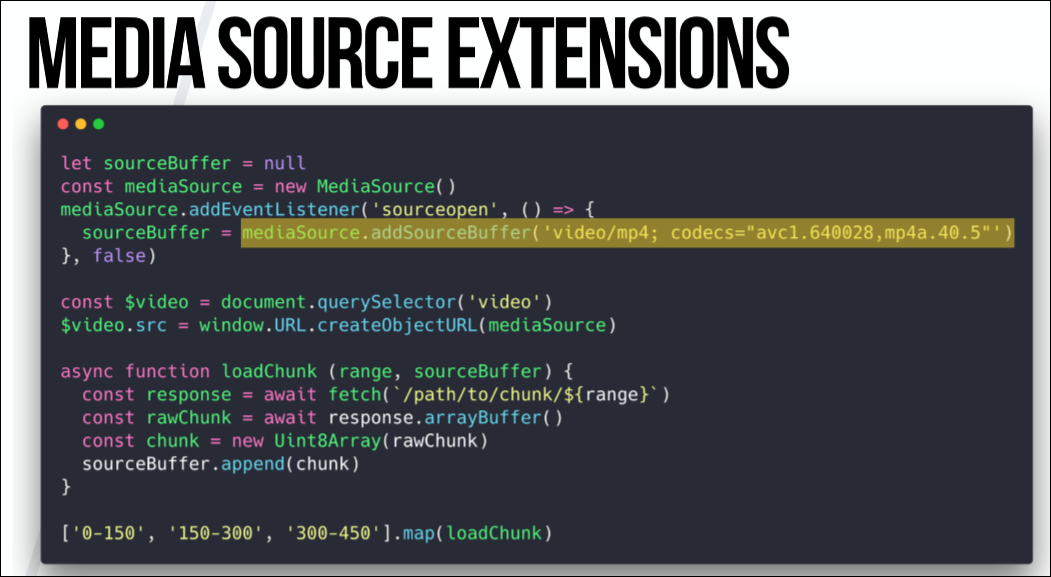

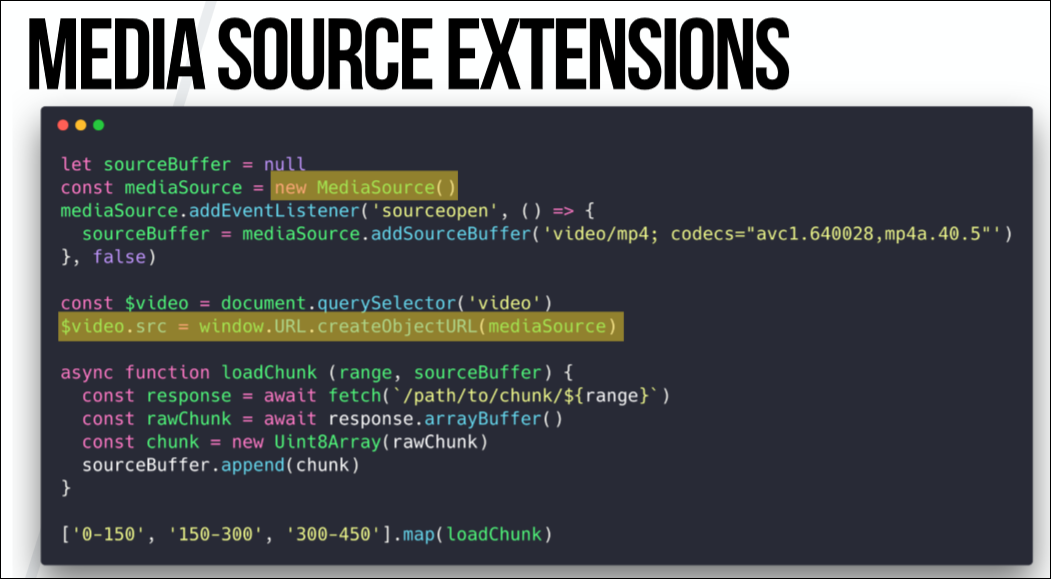

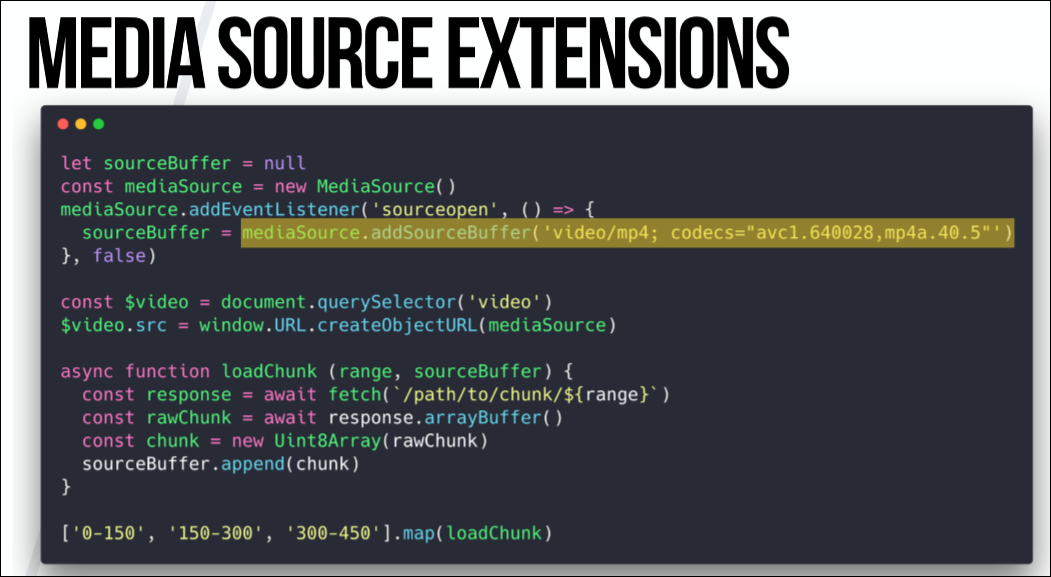

And another technology that we need to explore is MSE (Media Source Extensions, extensions for multimedia sources). She could be called EME's half-sister (Encrypted Media Extensions). This is also a browser API, and it has nothing to do with DRM. I see it as a software interface to <

So, we can use extensions for multimedia sources, instantiate and make access to the video, then load the video fragments in parts and apply them to the <

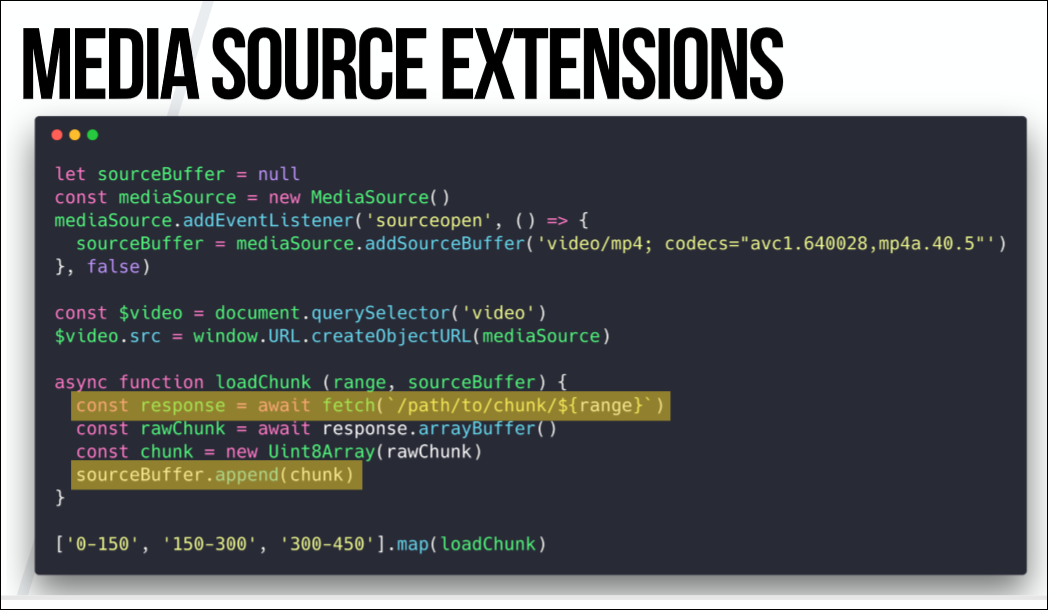

The point is that when you watch a two-hour video, you do not want to wait until it is fully loaded. Instead, you cut it into small fragments ranging in size from about 30 seconds to 2 minutes and alternately apply them to the <

Once our MediaSource buffer is ready and linked to the <
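A minimal sketch of this setup is shown below. `MediaSource`, `addSourceBuffer`, the `sourceopen` event, and `URL.createObjectURL` are standard browser APIs; the function name and the two trailing parameters (there only so the code can run without a browser) are assumptions.

```javascript
// Sketch: attach a MediaSource to a video element and create a SourceBuffer.
// `MediaSourceCtor` and `urlApi` default to the browser globals.
function openSourceBuffer(video, mimeType,
  MediaSourceCtor = globalThis.MediaSource, urlApi = globalThis.URL) {
  return new Promise((resolve, reject) => {
    const mediaSource = new MediaSourceCtor();
    mediaSource.addEventListener('sourceopen', () => {
      try {
        // mimeType is e.g. 'video/mp4; codecs="avc1.640028"'
        resolve(mediaSource.addSourceBuffer(mimeType));
      } catch (err) {
        reject(err); // thrown when the container or codec is unsupported
      }
    }, { once: true });
    // The video's src becomes a blob: URL backed by the MediaSource.
    video.src = urlApi.createObjectURL(mediaSource);
  });
}
```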

Finally, we can start fetching individual fragments and passing them to our SourceBuffer with its appendBuffer method, which gives the <Video/> element a dynamically assembled video. This can also serve other use cases, such as letting people combine different video pieces into their own videos, but I won't go into that here.
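Fetching and appending could be sketched like this. `appendBuffer` and the `updateend` event are standard MSE; the function names, the `fetchFn` parameter, and the assumption that the fragment URLs are already known are illustrative.

```javascript
// Append one chunk and wait until the SourceBuffer has finished processing it.
function appendChunk(sourceBuffer, chunk) {
  return new Promise((resolve, reject) => {
    const cleanup = () => {
      sourceBuffer.removeEventListener('updateend', onEnd);
      sourceBuffer.removeEventListener('error', onError);
    };
    const onEnd = () => { cleanup(); resolve(); };
    const onError = () => { cleanup(); reject(new Error('append failed')); };
    sourceBuffer.addEventListener('updateend', onEnd);
    sourceBuffer.addEventListener('error', onError);
    sourceBuffer.appendBuffer(chunk);
  });
}

// Fetch fragments in order and feed them to the SourceBuffer one at a time.
// `fetchFn` defaults to the global fetch.
async function streamFragments(sourceBuffer, urls, fetchFn = globalThis.fetch) {
  for (const url of urls) {
    const response = await fetchFn(url);
    if (!response.ok) throw new Error(`fragment request failed: ${response.status}`);
    await appendChunk(sourceBuffer, await response.arrayBuffer());
  }
}
```

Waiting for `updateend` before the next append matters because a SourceBuffer rejects `appendBuffer` calls while it is still processing the previous chunk.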

So this is almost the last step. You have a content delivery network and you have fragments; the browser sends the encrypted, compressed fragments to the CDM, where they are decrypted and possibly decoded. The decrypted, uncompressed frames are then sent back to the browser, where they are rendered and displayed.

Manifest

But there is one more thing. How do we know which fragments to load, where to get them, and when? This is the last missing piece: the manifest request. When we request a manifest from Netflix, the request needs a lot of data. If we just want to play a video, what matters is which DRM system we use, which video we want to watch (the Netflix ID, which can be copied from the URL), and the profiles. Profiles determine the resolution of the video we receive, the language of the audio tracks, their format (stereo, Dolby Digital, and so on), whether we use subtitles, and so on.

MPEG-DASH

The most commonly used manifest format is MPEG-DASH. Apple, however, uses a different format, HLS, which looks like a playlist from the old Winamp player. Widevine and Microsoft use MPEG-DASH. It is based on XML and defines everything: duration, buffer size, content types, which fragments are loaded when, fragments for different resolutions, and adaptive bitrate switching. The latter means that if the download speed drops while a user is watching, playback does not stop; the video quality simply goes down. This works because the manifest defines the same parts for different resolutions, with the same durations and the same indices.
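To make adaptive bitrate switching concrete, here is a minimal sketch of one possible selection rule: take the highest-bitrate variant that fits within the measured download speed. The function, the `id` and `bandwidth` field names, and the safety factor are assumptions for illustration; real players use more elaborate heuristics.

```javascript
// Sketch: pick the highest-bitrate representation that fits the measured
// bandwidth, falling back to the lowest one when nothing fits.
function pickRepresentation(representations, measuredBitsPerSecond, safetyFactor = 0.8) {
  const sorted = [...representations].sort((a, b) => a.bandwidth - b.bandwidth);
  // Leave some headroom so small speed dips do not stall playback.
  const budget = measuredBitsPerSecond * safetyFactor;
  let choice = sorted[0];
  for (const rep of sorted) {
    if (rep.bandwidth <= budget) choice = rep;
  }
  return choice;
}

const variants = [
  { id: '1080p', bandwidth: 5000000 },
  { id: '480p', bandwidth: 1000000 },
  { id: '720p', bandwidth: 2500000 },
];
console.log(pickRepresentation(variants, 4000000).id); // prints "720p"
```

Because every variant is cut into fragments with the same indices and durations, the player can apply a rule like this before each fragment request and switch quality at a fragment boundary.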

Here is the manifest for the film “Guardians of the Galaxy”. In it we can see that people get different video quality at different download speeds, and that there are audio tracks for people with hearing impairments. It also declares the available subtitles.

We have the duration and the time offset from which to start playing. This is used, for example, when you stop watching and later come back to the video, picking up where you left off.

Robustness appears here again; it says that this fragment can be played only if your system meets the requirements. In this case, that means hardware decoding with hardware secure codecs.

For the same part of the video, you can define as many fragments as you like with different resolutions.

And then you get the URL to download the fragment, and the range parameter shows the range of values in milliseconds.

This is the last part. Sometimes you get the manifest from a CDN as well. Some providers have a separate server for delivering fragments, but more often they come from the same machine that serves the manifest. Once we have downloaded the manifest, we know which fragments we need; we can request them and then have the CDM decrypt and decode them.

That, in general, is all. Everything described above is enough to develop an open source player for watching videos from Amazon, Sky, and other platforms, from almost any provider.

MSL

Netflix decided it was worth adding extra encryption to the messages between the browser and the server. They called it the Message Security Layer, or MSL. It does nothing to the video itself; it is just an extra layer of encryption. One of the reasons given for introducing MSL is that HTTPS is not secure enough. On the other hand, MSL is open source, so you can always see how it works. I won't go into this topic here; if you are interested, you can read about why Netflix built MSL on their blog. GitHub has detailed implementation documentation and working implementations in Java.

There is also a Python implementation, which my friends and I wrote. As far as I know, it is the only working open source client for Netflix. It works with Kodi Media Center. For rendering, you can use VLC Player or any other suitable software.

So, you have seen what it took to implement all this, and how often I mentioned the CDM, the “black box” downloaded from Google. We have put video back into a “black box”. The beautiful <Video/> element is hidden from us again. We have added third-party software that helps us but is still closed and beyond our control. It can do plenty of opaque things: tracking, analytics, sending data...

Here is what Tim Berners-Lee said about it: “So in summary, it is important to support EME as providing a relatively safe online environment in which to watch a movie, as well as the most convenient, and one which makes it a part of the interconnected discourse of humanity.”

But there are other opinions on this, in particular from the Electronic Frontier Foundation, which was a member of the W3C until DRM arrived there. This is what they say: “In 2013, EFF was disappointed to learn that the W3C had taken on the project of standardizing Encrypted Media Extensions, an API whose sole function was to provide a first-class role for DRM within the browser ecosystem. We will keep fighting to keep the web free and open. We will keep suing the US government to overturn the laws that make DRM so toxic, and we will keep bringing that fight to the world's legislatures.”

It's hard for me to say where I stand on this. On the one hand, I am always for an open and free Internet, where browsers contain no proprietary code that can send requests to who knows where. On the other hand, we need services like Netflix that charge for video. Perhaps they would build their own playback applications instead, and then the web would lose this kind of content.

Manifest

But there is one more thing. How do we know which fragments we need to load, where do we download them, and when? And this is the last part, the missing request from the manifest. When we make a request to Netflix for a manifest, it needs a lot of data. If we just want to play a video, then it matters for us which DRM system we use, which video we want to view (Netflix ID, which can be copied from the URL) and profiles. Profiles determine the resolution at which we receive video, as well as in what language we receive audio tracks, in what format (stereo, Dolby Digital, etc.), whether we use subtitles, etc.

MPEG-DASH

The most commonly used manifest format is MPEG-DASH. True, Apple uses a different format - HSL, which looks like a list of files in the old Winamp player. But Widevine and Microsoft use MPEG-DASH. It is based on XML, and it defines everything: duration, buffer size, content types, when which fragments are loaded, fragments for different resolutions, and adaptive bitrate switching. The latter means that if a user, for example, watches a video and the download speed drops, the playback does not stop, but the quality of the video simply deteriorates. This is due to the fact that the manifest defines the same parts for different permissions, they have the same duration and the same indices.

Here is the manifest for the film “Guardians of the Galaxy”. In it, we can see that people get video of different quality at different download speeds, and that there are audio tracks for people with hearing impairments. It also lists the available subtitles.

We have a duration and an indication of the time from which to start playing. This function is used, for example, when you interrupt viewing and then return to the video, starting from where you left off.
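As a rough illustration of resuming playback: if every fragment has the same duration, the fragment to request first follows from simple arithmetic. The function below is a hypothetical sketch; it assumes fixed-length fragments and zero-based indices, neither of which is spelled out for the manifest shown in the talk.

```javascript
// Map a resume position to the fragment that contains it.
// Assumes fixed-length fragments and zero-based fragment indices.
function fragmentForTime(positionSeconds, fragmentDurationSeconds) {
  if (!(fragmentDurationSeconds > 0)) {
    throw new RangeError("fragment duration must be positive");
  }
  const position = Math.max(0, positionSeconds);
  const index = Math.floor(position / fragmentDurationSeconds);
  // How far into that fragment playback should start.
  const offsetSeconds = position - index * fragmentDurationSeconds;
  return { index, offsetSeconds };
}

// Example: resuming at 125 s with 4-second fragments.
const resume = fragmentForTime(125, 4);
console.log(resume.index, resume.offsetSeconds);
```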

Robustness shows up again here. It says that this fragment can be played only if your system meets the requirements. In this case, that means hardware decoding using Hardware Secure Codecs.

For the same part of the video, you can define as many fragments as you like with different resolutions.

And then you get the URL to download the fragment, and the range parameter shows the range of values in milliseconds.

This is the last part. Sometimes you also get the manifest from a CDN. Some providers have a separate server for delivering fragments, but more often the fragments come from the same machine that serves the manifest. Once we have downloaded the manifest, we know which fragments we need, so we can request them and then have the CDM decrypt and decode them.

In general, that's all. Everything described above is enough to develop an open source player for watching videos from Amazon, Sky, and almost any other provider.

MSL

Netflix decided that it was worth adding extra encryption to the messages exchanged between the browser and the server. They called it the Message Security Layer, or MSL. It does nothing to the video directly; it is just an extra layer of encryption. One of the reasons given for introducing MSL is that HTTPS was considered not secure enough. On the other hand, MSL is open source, so you can always see how it works. I am not going to delve into this topic here; if you are interested, Netflix explains in their blog why they built MSL. GitHub has detailed implementation documentation and working implementations in Java.

There is also a Python implementation, which we wrote with friends. As far as I know, this is the only working open source client for Netflix. It works with Kodi Media Center. For playback, you can use VLC Player or any other suitable software.

And again the "black box"

So, you have seen what it took us to implement all this, and how often I mentioned the CDM, the “black box” that is downloaded from Google. In other words, we have put video back into a “black box”. The beautiful <Video /> element is hidden from us again. We have added third-party software that helps us, but it is still closed and we cannot control it. It can do a lot of obscure things: tracking, analytics, sending data ...

Here is what Tim Berners-Lee said on this occasion: "So, in general, it is important to maintain the EME as a relatively secure online environment where you can watch movies, as well as the most convenient and one that makes it part of the interrelated discourse of humanity."

But there are other opinions on this. In particular, from the Electronic Frontier Foundation, which was a member of the W3C until DRM was adopted there. This is what they say: “In 2013, the EFF was disappointed to learn that the W3C had taken on the EME standardization project - encrypted media extensions. In essence, we are talking about an API whose sole function was to provide DRM for a leading role in the browser ecosystem. We will continue to fight to ensure that the Internet is free and open. We will continue to sue the US government to repeal laws that make DRM so toxic, and we will continue to fight at the level of global law.”

It's hard for me to say how to feel about this. On the one hand, I am always for an open and free Internet, where browsers contain no proprietary code that can send requests to who knows where. On the other hand, we need services like Netflix that charge for video. Perhaps they could build their own playback applications, and then the web would simply do without this kind of content.

A month from now, on November 24-25, HolyJS 2018 Moscow will take place, where Sebastian will give the talk The Universal Serial Web, a detailed look at the new WebUSB standard, which lets the browser work with USB devices. All of it comes with live demos, so it should be interesting and clear.

P.S. On November 1, the final ticket prices will be set. Anyone who pays for their own ticket gets a 50% discount off the standard price.