Great overview testing of programming languages

Recently, I once again worked with second-year students of the 2-semester discipline "Algorithmic Languages". Reviewed a few dozen programming languages. One of the students, Vadim Shukalyuk, wanted to get to know them better, to get a clearer picture of each of them. He advised him to do a little research. Than and carried away. I offer my report on the work done over several months with him.

Each programming language has its own advantages and disadvantages. One of the most important characteristics of a translator from any language is the speed of program execution. It is very difficult or even impossible to get an accurate estimate of this speed of execution. Resource http://benchmarksgame.alioth.debian.org/offers a game form to test this speed on different tasks. But the number of languages represented on this resource is rather small. The maximum capacity of the stack, the critical value for recursive calculations is easier to check, but it can vary in different versions of the translator and be dependent on system settings.

The following translators were tested: C (gcc, clang, icc), assembler (x86, x86-64), Java (OpenJDK), Pascal (fpc), JavaScript (Google Chrome, Mozilla Firefox), Lisp (sbcl, clisp), Erlang, Haskell (ghc, hugs), dino [1], auk (gawk, mawk, busybox), lua, ruby, basic (gambas, libre office), python-2, p-hi-p, postscript (gs), prolog ( swipl, gprolog), pearl, metapost, T E X, tickle, bash. We investigated both the actual speed of execution of several small, but time-consuming algorithms, and:

As the first task, on which all translators were tested, the calculation of the Fibonacci number by double recursion was chosen according to the definition: the numbers with numbers 1 and 2 are units, and the subsequent ones are the sum of the two previous ones. This algorithm has several attractive features:

The first feature allows for a short time to test translators, the speed of which varies hundreds of thousands of times. The second feature allows you to quickly check the accuracy of the calculations. The third feature theoretically allows you to investigate the stack capacity, but due to the fact that the calculation at n> 50 becomes very slow even on a supercomputer, it is practically impossible to use this feature.

In the following table 1, in the second column, the name of the language, the name of the compiler and its version and, if used, the optimization option of the generated code are indicated. The third column shows the relative calculation time on the AMD Phenom II x4 3.2 GHz processor. Tests were conducted on the AMD FX-6100 at the same frequency, but their results do not differ much from those given. The unit takes the computation time in the bash language, so erlange calculation is about 20,000 times faster than the bash. The 4th column shows the relative calculation time on the Intel Core i3-2100 3.1 GHz processor. Since the comparison of processors was not the aim of the study, some translators were not tested on the Intel platform. Fifth, an upper estimate (10% accuracy) of the maximum number of recursive calls supported by the translator in calculating ack (1,1, n) on a computer with 8 GB of RAM with a system stack size (ulimit -s) of 8192 KB. Some translators use their own settings that determine the size of the stack used - the default values for the selected version of the translator are always used. The measurements were carried out on a Linux system, but their results should not change when moving to another OS. Data is sorted by column 3. All sources can be viewedhere .

Table 1.

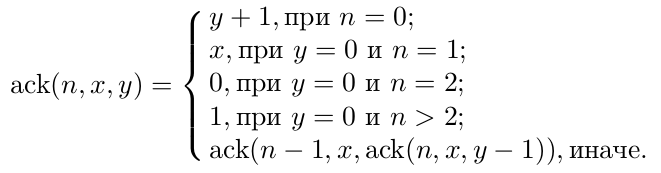

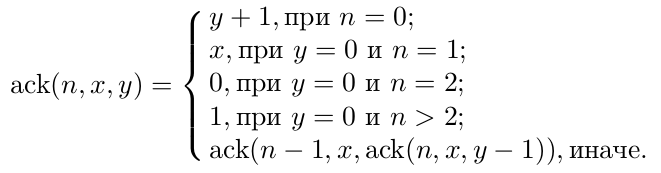

As the second problem, the Akkerman function was chosen in the form when all arithmetic operations are reduced to it, i.e., ack (1, x, y) = x + y, ack (2, x, y) = x * y, ack ( 3, x, y) = x y , ack (4, x, y) is the tetration of x and y, etc.

This function grows very rapidly with increasing n (the number ack (5,5,5) is so large that the number of digits in the order of this number is many times greater than the number of atoms in the observed part of the Universe), but it is considered very slow. The latter property is theoretically convenient for testing performance. However, the calculation of this function requires a significant number of recursive calls, and most of the languages tested were not able to support them for calculations that have a noticeable duration. It is known that the calculation of this function cannot be reduced to iteration. The calculation for this problem allowed us to study the maximum capacity of the stack of the languages studied: the calculation of ack (1,1, n-1) requires the nth nesting of calls and is very fast. The following table 2 presents the results of calculating the pentation ack (5,2,3), for those languages whose stack was able to withstand it (nesting of calls 65539). For the unit of speed, the operating time of gcc with the -O5 option is selected, i.e. php is about 420 times slower.

Table 2.

The idea to use the above two tasks was borrowed from the work of B. V. Kernigan and R. Pike “Unix - a universal programming environment”, where it was used to test the hoc language [2].

Of course, with more complex calculations, using mainly the means of standard libraries, the difference in the speed of the translators would be much smaller.

Time was measured by the standard time command, and then, when it was impossible (javascript, office basic), tools built into the language were used.

According to the results of the study, the following conclusions were made, some of which were somewhat unexpected:

In conclusion, a few words from a student beginning to master the art of programming.

To write programs for the required calculations in any language, you first need to understand how variables are declared in a particular language, how to build an if-else type construct, and how to organize recursion. I started my work with the simple language Pascal, because at that time I knew it better than anyone. After Pascal, I took on C, Java and Dino, as their syntaxes are roughly similar. C turned out to be quite interesting, simple, and at the same time with intuitive operators. Java seemed less convenient than C / C ++ - you need to write a lot of irrelevant things, one that could be taken by default. I also strained the moment of the need for the same class and file names. Haskell left only positive emotions. Convenient, clear and powerful. PHP, a language for developing web applications, is very similar to C: you can simply paste the C code with minimal changes and everything will work as it should. Erlang is similar in syntax to Haskell and a bit to Prolog. It is also quite a pleasant and understandable language, no difficulties arose. JavaScript syntax is similar to Java or C. Visual Basic in both office and GAMBAS versions has somewhat angular and inconvenient syntax, but in general, it was not very difficult. Then, after acquiring knowledge of the basic syntax of C and Java, it turned out to write Python code pretty quickly, since Python is similar to C. No problems arose with Lua and its rather powerful and flexible constructions. Awk also has a similar structure to C, and quickly managed to overpower it. Some difficulties arose with Lisp, as in a person who had previously studied C-like languages, for example, with a basic understanding of the prefix notation. Which, after low development costs, seemed very convenient, logical and beautiful. After, I switched to the logical programming language Prolog, which turned out to be specific, but very interesting and fundamental. Ruby - a language with powerful support for object-oriented programming and with a very beautiful bright red ruby on the icon turned out to be an excellent language: no extra brackets, semicolons and other unnecessary characters. One of the most remembered. Although python, with the exception of OOP designs, is no less concise. Perl - although it bears the name "pearl", the camel is the symbol of the tongue, which apparently is a reference to the fact that the camel is not a very beautiful, but very hardy animal that can perform hard work. Putting dollars, brackets and semicolons again after Ruby was not very nice. The syntax in some places is similar to the syntax of the terminal shell language Bash. Then I took up the assembler. There were certain difficulties and the need to understand the processor and its registers. Surprise knew no bounds when it turned out that C handles calculations faster than assembler, machine code! There were no problems with Bash, although there you need to bet a lot of dollars, and with calculations and brackets. The Metapost / Metafont language caused some problems - only numbers are supported there, not more than 4096. Although its syntax is quite traditional. Tickle (TCL) also has a fairly traditional syntax, but line-oriented - this and bash-like semantics were very confusing at first. PostScript seemed the most difficult. In this language, the syntax is very specific and without preparation, you won’t be able to write anything intuitively, therefore, I had to study the relevant literature and start training with the simplest programs. PostScript was a real test: writing double recursion postfix only using the stack, after getting used to all the tools and features of Ruby and C was problematic. Writing and testing Ackerman's function on a postscript is like trying to paint a wall with a toothbrush. But the first place in complexity definitely takes TE X. I have not seen anything more difficult. And without the direct help of a teacher, it would not be possible to overcome this language.

Curious were the data on the size of the language stack. The larger the stack of the language, the greater the likelihood that it will be able to cope with the function of Ackerman. But if a program in some language could not cope with the calculation of ack (5,2,3), this does not mean that the language is bad and uncomfortable. It is likely that this language could be created for other useful purposes such as Metapost or Postscript.

In general, the work seemed to me very interesting and super-cognitive, for example, writing the same logical turn in 20 different ways. Also, understanding the principle of operation of processor registers and writing a double recursive function only with the help of the stack and three operations: adding, deleting and scrolling the stack greatly expanded my horizons.

Some of the conclusions of his student seemed too categorical to the teacher, but he decided to keep them as more recent than his own.

[1] - A programming language developed in Russia . Now its author is an employee of Red Hat, working to improve the collection of gcc compilers. The latest version of dino was released in 2007.

[2] - This language was also tested, showing a speed result between mawk and ghc.

Each programming language has its own advantages and disadvantages. One of the most important characteristics of a translator from any language is the speed of program execution. It is very difficult or even impossible to get an accurate estimate of this speed of execution. Resource http://benchmarksgame.alioth.debian.org/offers a game form to test this speed on different tasks. But the number of languages represented on this resource is rather small. The maximum capacity of the stack, the critical value for recursive calculations is easier to check, but it can vary in different versions of the translator and be dependent on system settings.

The following translators were tested: C (gcc, clang, icc), assembler (x86, x86-64), Java (OpenJDK), Pascal (fpc), JavaScript (Google Chrome, Mozilla Firefox), Lisp (sbcl, clisp), Erlang, Haskell (ghc, hugs), dino [1], auk (gawk, mawk, busybox), lua, ruby, basic (gambas, libre office), python-2, p-hi-p, postscript (gs), prolog ( swipl, gprolog), pearl, metapost, T E X, tickle, bash. We investigated both the actual speed of execution of several small, but time-consuming algorithms, and:

- quality optimization of some translators;

- features when working with Intel and AMD processors;

- limit number of recursive calls (stack capacity).

As the first task, on which all translators were tested, the calculation of the Fibonacci number by double recursion was chosen according to the definition: the numbers with numbers 1 and 2 are units, and the subsequent ones are the sum of the two previous ones. This algorithm has several attractive features:

- If the calculation time of the nth number is t, then the (n + 1) th is t * φ, where φ is the golden ratio equal to (√5 + 1) / 2;

- The self-calculated ne is equal to the value rounded up to the nearest integer φ n / √5;

- Calculating fib (n + 1) requires the nth nesting of calls.

The first feature allows for a short time to test translators, the speed of which varies hundreds of thousands of times. The second feature allows you to quickly check the accuracy of the calculations. The third feature theoretically allows you to investigate the stack capacity, but due to the fact that the calculation at n> 50 becomes very slow even on a supercomputer, it is practically impossible to use this feature.

In the following table 1, in the second column, the name of the language, the name of the compiler and its version and, if used, the optimization option of the generated code are indicated. The third column shows the relative calculation time on the AMD Phenom II x4 3.2 GHz processor. Tests were conducted on the AMD FX-6100 at the same frequency, but their results do not differ much from those given. The unit takes the computation time in the bash language, so erlange calculation is about 20,000 times faster than the bash. The 4th column shows the relative calculation time on the Intel Core i3-2100 3.1 GHz processor. Since the comparison of processors was not the aim of the study, some translators were not tested on the Intel platform. Fifth, an upper estimate (10% accuracy) of the maximum number of recursive calls supported by the translator in calculating ack (1,1, n) on a computer with 8 GB of RAM with a system stack size (ulimit -s) of 8192 KB. Some translators use their own settings that determine the size of the stack used - the default values for the selected version of the translator are always used. The measurements were carried out on a Linux system, but their results should not change when moving to another OS. Data is sorted by column 3. All sources can be viewedhere .

Table 1.

| N | Tongue | AMD | Intel | Stack |

|---|---|---|---|---|

| 1 | C / C ++ (gcc 4.7.2, -O5) | 354056 | 493533 | 790000 |

| 2 | C / C ++ (clang 3.0-6.2, -O3) | 307294 | 270000 | |

| 3 | C / C ++ (icc 14.0.3, -fast) | 250563 | 232665 | 530000 |

| 4 | Assembler x86-64 | 243083 | 271443 | 350,000 |

| 5 | Assembler x86 | 211514 | 301603 | 700,000 |

| 6 | Java (OpenJDK 1.7.0_25) | 186401 | 239659 | 8000 |

| 7 | Pascal (fpc 2.6.0, -O3) | 170604 | 186401 | 180,000 |

| 8 | C / C ++ (gcc 4.7.2, -O0) | 159672 | 173261 | 180,000 |

| 9 | C / C ++ (clang 3.0-6.2, -O0) | 146726 | 110000 | |

| 10 | C / C ++ (icc 14.0.3, -O0) | 136862 | 156602 | 530000 |

| eleven | Javascript (Mozilla Firefox 25) | 121979 | 4200 | |

| 12 | Javascript (Google Chrome 31) | 92850 | 10,000 | |

| thirteen | Lisp (sbcl 1.0.57) | 54925 | 51956 | 31000 |

| 14 | Erlang (5.9.1) | 19845 | 18589 | no limit |

| fifteen | Haskell (ghc 7.4.1, -O) | 18589 | 22946 | 260,000 |

| 16 | Awk (mawk 1.3.3) | 6621 | 6306 | 44000 |

| 17 | Lua (5.2) | 6420 | 7075 | 150,000 |

| 18 | Ruby (1.9.3) | 5297 | 6969 | 6600 |

| 19 | Dino (0.55) | 5024 | 6420 | 190000 |

| 20 | Basic (Gambas 3.1.1) | 3968 | 4373 | 26000 |

| 21 | Python (2.7.3) | 3678 | 4013 | 1000 |

| 22 | PHP (5.4.4) | 2822 | 3720 | no limit |

| 23 | Awk (gawk 4.0.1) | 2648 | 2547 | no limit |

| 24 | Postscript (gs 9.05) | 2355 | 3246 | 5000 |

| 25 | Prolog (swipl 5.10.4) | 1996 | 2407 | 2,300,000 |

| 26 | Perl (5.14.2) | 1516 | 1670 | no limit |

| 27 | Prolog (gprolog 1.3.0) | 1116 | 1320 | 120,000 |

| 28 | Lisp (clisp 2.49) | 998 | 1023 | 5500 |

| 29th | Awk (busybox 1.20.2) | 981 | 1113 | 18000 |

| thirty | T E X (3.1415926) | 239 | 333 | 3400 |

| 31 | Metapost (1.504) | 235 | 470 | <4100 |

| 32 | Tcl (8.5) | 110 | 123 | 1000 |

| 33 | Haskell (hugs 98.200609.21) | 82 | 121 | 17000 |

| 34 | Basic (LibreOffice 3.5.4.2) | 20 | 35 | 6500 |

| 35 | bash (4.2.37) | 1 | 0.77 | 600 |

As the second problem, the Akkerman function was chosen in the form when all arithmetic operations are reduced to it, i.e., ack (1, x, y) = x + y, ack (2, x, y) = x * y, ack ( 3, x, y) = x y , ack (4, x, y) is the tetration of x and y, etc.

This function grows very rapidly with increasing n (the number ack (5,5,5) is so large that the number of digits in the order of this number is many times greater than the number of atoms in the observed part of the Universe), but it is considered very slow. The latter property is theoretically convenient for testing performance. However, the calculation of this function requires a significant number of recursive calls, and most of the languages tested were not able to support them for calculations that have a noticeable duration. It is known that the calculation of this function cannot be reduced to iteration. The calculation for this problem allowed us to study the maximum capacity of the stack of the languages studied: the calculation of ack (1,1, n-1) requires the nth nesting of calls and is very fast. The following table 2 presents the results of calculating the pentation ack (5,2,3), for those languages whose stack was able to withstand it (nesting of calls 65539). For the unit of speed, the operating time of gcc with the -O5 option is selected, i.e. php is about 420 times slower.

Table 2.

| gcc -O5 | 1 |

| asm x86 | 2.15 |

| icc -fast | 2.18 |

| asm x86-64 | 2.36 |

| clang -O3 | 2.76 |

| fpc -O3 | 4.44 |

| gcc -O0 | 7.75 |

| icc -O0 | 8.36 |

| clang -O0 | 9.64 |

| Erlang | 18.51 |

| ghc -O | 50.18 |

| lua | 122.55 |

| php | 423.64 |

| gawk | 433.82 |

| swipl | 766.55 |

| dino | 915.64 |

The idea to use the above two tasks was borrowed from the work of B. V. Kernigan and R. Pike “Unix - a universal programming environment”, where it was used to test the hoc language [2].

Of course, with more complex calculations, using mainly the means of standard libraries, the difference in the speed of the translators would be much smaller.

Time was measured by the standard time command, and then, when it was impossible (javascript, office basic), tools built into the language were used.

According to the results of the study, the following conclusions were made, some of which were somewhat unexpected:

- The speed of assembler programs can be more than 50% slower than C / C ++ programs compiled with maximum optimizations;

- The speed of a virtual Java machine with bytecode often exceeds the speed of the hardware with codes received by translators from high-level languages. The Java machine is inferior in speed only to assembler and the best optimizing translators;

- The speed of compilation and execution of programs for javascript in popular browsers is only 2-3 times inferior to the best translators and surpasses even some high-quality compilers, certainly far (more than 10 times) overtaking most translators of other scripting languages and the like in terms of program execution speed;

- The speed of the codes generated by Intel's C language compiler turned out to be noticeably slower than the GNU compiler and sometimes LLVM;

- The speed of assembler x86-64 codes may be less than the corresponding x86 codes by about 10%;

- Code optimization works better on an Intel processor;

- Execution speed on the Intel processor was almost always higher, with the exception of Lisp, Erlang, Auk (gawk, mawk) and Bash languages. The difference in the speed in the cache is most likely caused by different environment settings on the systems under test, and not by the actual translator or hardware. Intel's advantage is particularly noticeable on 32-bit codes;

- The stack of most tested languages, in particular Java and JavaScript, support only a very limited number of recursive calls. Some translators (gcc, icc, ...) allow you to increase the size of the stack by changing the variables of the runtime or parameter;

- In the considered versions of gawk, php, perl, bash, a dynamic stack is implemented that allows you to use all the computer's memory. But perl and, especially, bash use the stack so extensively that 8-16 GB is not enough to calculate ack (5,2,3). In version 5.4.20 php, the stack was limited to approximately 200,000 calls.

In conclusion, a few words from a student beginning to master the art of programming.

To write programs for the required calculations in any language, you first need to understand how variables are declared in a particular language, how to build an if-else type construct, and how to organize recursion. I started my work with the simple language Pascal, because at that time I knew it better than anyone. After Pascal, I took on C, Java and Dino, as their syntaxes are roughly similar. C turned out to be quite interesting, simple, and at the same time with intuitive operators. Java seemed less convenient than C / C ++ - you need to write a lot of irrelevant things, one that could be taken by default. I also strained the moment of the need for the same class and file names. Haskell left only positive emotions. Convenient, clear and powerful. PHP, a language for developing web applications, is very similar to C: you can simply paste the C code with minimal changes and everything will work as it should. Erlang is similar in syntax to Haskell and a bit to Prolog. It is also quite a pleasant and understandable language, no difficulties arose. JavaScript syntax is similar to Java or C. Visual Basic in both office and GAMBAS versions has somewhat angular and inconvenient syntax, but in general, it was not very difficult. Then, after acquiring knowledge of the basic syntax of C and Java, it turned out to write Python code pretty quickly, since Python is similar to C. No problems arose with Lua and its rather powerful and flexible constructions. Awk also has a similar structure to C, and quickly managed to overpower it. Some difficulties arose with Lisp, as in a person who had previously studied C-like languages, for example, with a basic understanding of the prefix notation. Which, after low development costs, seemed very convenient, logical and beautiful. After, I switched to the logical programming language Prolog, which turned out to be specific, but very interesting and fundamental. Ruby - a language with powerful support for object-oriented programming and with a very beautiful bright red ruby on the icon turned out to be an excellent language: no extra brackets, semicolons and other unnecessary characters. One of the most remembered. Although python, with the exception of OOP designs, is no less concise. Perl - although it bears the name "pearl", the camel is the symbol of the tongue, which apparently is a reference to the fact that the camel is not a very beautiful, but very hardy animal that can perform hard work. Putting dollars, brackets and semicolons again after Ruby was not very nice. The syntax in some places is similar to the syntax of the terminal shell language Bash. Then I took up the assembler. There were certain difficulties and the need to understand the processor and its registers. Surprise knew no bounds when it turned out that C handles calculations faster than assembler, machine code! There were no problems with Bash, although there you need to bet a lot of dollars, and with calculations and brackets. The Metapost / Metafont language caused some problems - only numbers are supported there, not more than 4096. Although its syntax is quite traditional. Tickle (TCL) also has a fairly traditional syntax, but line-oriented - this and bash-like semantics were very confusing at first. PostScript seemed the most difficult. In this language, the syntax is very specific and without preparation, you won’t be able to write anything intuitively, therefore, I had to study the relevant literature and start training with the simplest programs. PostScript was a real test: writing double recursion postfix only using the stack, after getting used to all the tools and features of Ruby and C was problematic. Writing and testing Ackerman's function on a postscript is like trying to paint a wall with a toothbrush. But the first place in complexity definitely takes TE X. I have not seen anything more difficult. And without the direct help of a teacher, it would not be possible to overcome this language.

Curious were the data on the size of the language stack. The larger the stack of the language, the greater the likelihood that it will be able to cope with the function of Ackerman. But if a program in some language could not cope with the calculation of ack (5,2,3), this does not mean that the language is bad and uncomfortable. It is likely that this language could be created for other useful purposes such as Metapost or Postscript.

In general, the work seemed to me very interesting and super-cognitive, for example, writing the same logical turn in 20 different ways. Also, understanding the principle of operation of processor registers and writing a double recursive function only with the help of the stack and three operations: adding, deleting and scrolling the stack greatly expanded my horizons.

Some of the conclusions of his student seemed too categorical to the teacher, but he decided to keep them as more recent than his own.

[1] - A programming language developed in Russia . Now its author is an employee of Red Hat, working to improve the collection of gcc compilers. The latest version of dino was released in 2007.

[2] - This language was also tested, showing a speed result between mawk and ghc.