Custom project optimization in PHP

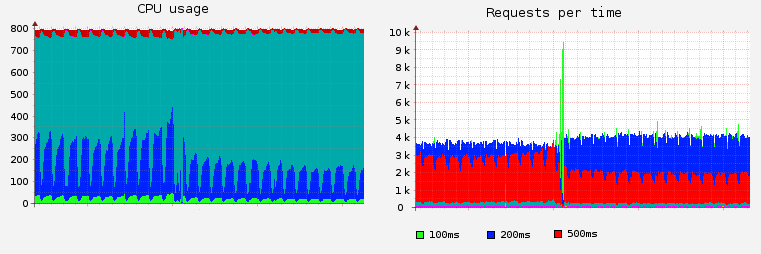

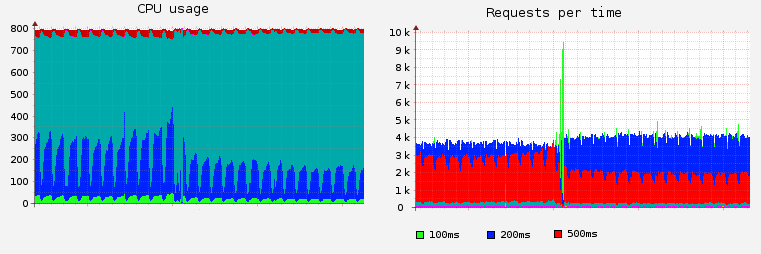

In this article I want to describe how a few unconventional methods let us reduce the load on our servers and cut page processing time severalfold.

The traditional methods are, I think, familiar to everyone:

- Optimizing SQL queries;

- Finding and fixing bottlenecks;

- Moving frequently used data to Memcache;

- Installing an opcode cache such as APC or XCache;

- Client-side optimization: CSS sprites and so on.

We had done all of this in our project, yet page processing was still slow: the average page processing time was around 500 ms. At some point we decided to analyze which resources a request consumes and where they go.

The analysis identified the following main resources to monitor:

- CPU time;

- RAM;

- Time spent waiting for other resources (MySQL, memcache);

- Time spent waiting for the disk.

Our project hardly ever writes to disk, so we ruled out the fourth item straight away.

Then the search for bottlenecks began.

Stage 1

The first thing we tried was profiling MySQL queries and looking for slow ones. This did not help much: we optimized several queries, but the average page processing time barely changed.

Stage 2

Next we tried profiling the code with XHProf. This sped up the bottlenecks and reduced the load by about 10-15%, but it did not solve the main problem either. (If there is interest, I can write a separate article on optimizing with XHProf. Just let me know in the comments.)

Stage 3

In the third stage we decided to look at how much memory it takes to process a request. This turned out to be the problem: a simple request could require loading up to 20 MB of code into RAM. The reason was not clear, since this was a simple page load with no database queries and no large files.
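PHP's built-in memory functions are enough to get this number for a request. A minimal sketch, assuming you can add a few lines to the entry point of the application; the helper name `formatBytes` is hypothetical and not taken from our project:

```php
<?php
// Hypothetical helper: converts a byte count to a readable string.
function formatBytes($bytes)
{
    return number_format($bytes / 1048576, 2) . ' MB';
}

// The callback runs at the very end of the request.
register_shutdown_function(function () {
    // Peak amount of memory the script has used, in bytes.
    error_log('Peak memory: ' . formatBytes(memory_get_peak_usage()));
});
```

Writing the value to the error log keeps it out of the page output; any other destination would work just as well.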

We decided to write an analyzer to find out how much memory each PHP file requires when it is included.

The analyzer is very simple: the project already had an autoloader that loads the required file based on the class name. We simply added two lines to it: one records memory usage before the file is loaded, the other after.

Code example:

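    // Record memory usage before the file is loaded
    Profiler::startLoadFile($fileName);
    include $fileName;
    // Record the difference and add the file to the load list
    Profiler::endLoadFile($fileName);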

The first call records how much memory was in use at the start; the last call computes the difference and adds it to the list of loaded files. The profiler also saves the chain of file loads (a backtrace), so you can see which file caused another one to be loaded.
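The two profiler calls could be backed by something like the following. This is a minimal sketch rather than our actual profiler: only the method names `startLoadFile` and `endLoadFile` come from the article, while the internal stack, the record format, `getRecords()` and the `classes/` directory layout are assumptions made for illustration.

```php
<?php
// Hypothetical sketch of the profiler. Only startLoadFile() and
// endLoadFile() are names from the article; the rest is assumed.
class Profiler
{
    // Files that are currently being loaded (innermost last).
    private static $stack = array();

    // Finished measurements, in the order the loads completed.
    private static $records = array();

    public static function startLoadFile($fileName)
    {
        self::$stack[] = array('file' => $fileName, 'before' => memory_get_usage());
    }

    public static function endLoadFile($fileName)
    {
        $entry = array_pop(self::$stack);
        // Whatever is still on the stack triggered this load.
        $parent = end(self::$stack);
        self::$records[] = array(
            'file' => $fileName,
            'bytes' => memory_get_usage() - $entry['before'],
            'loadedBy' => $parent ? $parent['file'] : null,
        );
    }

    public static function getRecords()
    {
        return self::$records;
    }
}

// Hypothetical autoloader: maps a class name to a file under classes/.
spl_autoload_register(function ($className) {
    $fileName = __DIR__ . '/classes/' . $className . '.php';
    if (is_file($fileName)) {
        Profiler::startLoadFile($fileName);
        include $fileName;
        Profiler::endLoadFile($fileName);
    }
});
```

In this sketch the number recorded for a file includes the memory of any files it pulls in while loading, which is why the `loadedBy` field is kept alongside it.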

Once all the code has run, we output a panel with the collected information:

    echo Profiler::showPanel();
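What the panel prints is up to you. A minimal sketch of one possible text output, assuming the profiler has collected a list of records with `file` and `bytes` keys; the function name `renderPanel` and the record format are hypothetical:

```php
<?php
// Hypothetical panel renderer. $records is assumed to be a list of
// array('file' => string, 'bytes' => int) entries from the profiler.
function renderPanel(array $records)
{
    // Heaviest files first.
    usort($records, function ($a, $b) {
        return $b['bytes'] - $a['bytes'];
    });

    $lines = array();
    foreach ($records as $record) {
        $lines[] = sprintf('%8.1f KB  %s', $record['bytes'] / 1024, $record['file']);
    }

    return implode("\n", $lines) . "\n";
}

echo renderPanel(array(
    array('file' => 'SysPage.php', 'bytes' => 4096),
    array('file' => 'Page.php', 'bytes' => 204800),
));
```

Sorting by size puts the files worth looking at on top, which is usually all the panel needs to do.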

The analysis revealed some very interesting reasons for excessive memory use. After we went through all the pages and stopped loading unnecessary files, a page required less than 8 MB of memory. Pages loaded faster, the load on the servers dropped, and the same machines could serve more clients.

What follows is a list of the things we changed. Note that the functionality itself stayed the same; only the code structure changed.

All examples are deliberately simplified, since we cannot share the original project code.

Example 1: Loading large parent classes that are never used

Example:

    class SysPage extends Page {
        static function helloWorld() {
            echo "Hello World";
        }
    }

The file itself is tiny, but it pulls in at least one other file (the parent class), which can be quite large.

There are two possible solutions:

- Remove inheritance where not needed;

- Make sure that all parent classes are minimal in size.
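The first option can be sketched as follows. The sketch assumes that `helloWorld()` uses nothing from `Page`; it also returns the string instead of echoing it, purely so that the result is easy to check.

```php
<?php
// Before: "class SysPage extends Page" made the autoloader load Page
// (and Page's own parents) even though helloWorld() used none of it.

// After: a standalone class, so calling it loads only this one file.
final class SysPage
{
    public static function helloWorld()
    {
        return "Hello World";
    }
}

echo SysPage::helloWorld(), "\n";

// get_parent_class() returns false for a class without a parent.
var_dump(get_parent_class('SysPage'));
```

If other code relies on `SysPage` being a `Page` (type checks, inherited methods), this option does not apply and the second one is the way to go.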

Example 2: Using large classes when only a small part is needed

This is very similar to the previous case. Example:

    class Page {
        public static function isActive() {}

        // 1000-2000 lines of code follow here
    }

Naturally, PHP does not know that you need only one function. As soon as the class is accessed, the entire file is loaded.

Solution:

If you have classes like this, move the functionality that is used everywhere into a separate small class. The existing class can delegate to the new one for backward compatibility.
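A minimal sketch of such a split. The class name `PageStatus` and the placeholder body of `isActive()` are hypothetical; only `Page::isActive()` comes from the example.

```php
<?php
// New small class that holds only the widely used functionality.
// The name PageStatus is an assumption made for this sketch.
class PageStatus
{
    public static function isActive()
    {
        // Placeholder logic; the real check would live here.
        return true;
    }
}

// The original large class keeps its public API and delegates,
// so existing callers of Page::isActive() keep working.
class Page
{
    public static function isActive()
    {
        return PageStatus::isActive();
    }

    // ... the remaining 1000-2000 lines stay here ...
}

// New code calls the small class directly and never loads Page.
var_dump(PageStatus::isActive());
```

Callers can then be migrated to the small class gradually, one place at a time.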

Example 3: Loading a large class for constants only

Example:

    $CONFIG['PAGES'] = array(
        'news' => Page::PAGE_TYPE1,
        'about' => Page::PAGE_TYPE2,
    );

Or:

    if (get('page') == Page::PAGE_TYPE1) {}

That is, this case is similar to the previous one, except that here the class is loaded for the sake of a constant. The solution is the same as in the previous case.
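Applied to constants, the same idea might look like this. The class name `PageType` and the constant values are hypothetical; `Page::PAGE_TYPE1` and `Page::PAGE_TYPE2` are kept so that old references still work.

```php
<?php
// Hypothetical small class that holds only the constants.
final class PageType
{
    const TYPE1 = 1; // values are assumed for the sketch
    const TYPE2 = 2;
}

// The large class re-exports them for backward compatibility.
class Page
{
    const PAGE_TYPE1 = PageType::TYPE1;
    const PAGE_TYPE2 = PageType::TYPE2;

    // ... the rest of the large class ...
}

// Configs now reference the small class and no longer load Page.
$CONFIG['PAGES'] = array(
    'news'  => PageType::TYPE1,
    'about' => PageType::TYPE2,
);
```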

Example 4: Creating objects in the constructor that may never be used

Example:

    class Action {
        public $handler;

        public function __construct() {
            $this->handler = new Handler();
        }

        public function handle1() {}
        public function handle2() {}
        public function handle3() {}
        public function handle4() {}
        public function handle5() {}
        public function handle6() {}
        public function handle7() {}
        public function handle8() {}
        public function handle9() {}

        public function handle10() {
            $info = $this->handler->getInfo();
        }
    }

If Handler is used often, there is no problem. It is a different matter if it is used in only one method out of 20.

Solution:

Remove the object creation from the constructor and switch to lazy loading, either through the magic __get method or, for example, like this:

    public function handle10() {
        $info = $this->handler()->getInfo();
    }

    public function handler() {
        // Create the Handler on first use only
        if ($this->handler === null) {
            $this->handler = new Handler();
        }
        return $this->handler;
    }
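The `__get` variant mentioned above could look roughly like this. It is a sketch under assumptions: the `Handler` class here is a stand-in with a creation counter added only to show when the object is built, and the private `$lazy` array is a hypothetical storage choice.

```php
<?php
// Stand-in for the real Handler; the counter exists only so the
// sketch can show when the object is actually created.
class Handler
{
    public static $created = 0;

    public function __construct()
    {
        self::$created++;
    }

    public function getInfo()
    {
        return 'info';
    }
}

class Action
{
    // Deliberately no declared $handler property: __get is called
    // only for properties that are inaccessible or do not exist.
    private $lazy = array();

    public function __get($name)
    {
        if ($name === 'handler') {
            if (!isset($this->lazy['handler'])) {
                $this->lazy['handler'] = new Handler();
            }
            return $this->lazy['handler'];
        }
        trigger_error("Undefined property: $name", E_USER_NOTICE);
        return null;
    }

    public function handle1()
    {
        return 'no handler needed';
    }

    public function handle10()
    {
        // Looks like a plain property access, but goes through __get.
        return $this->handler->getInfo();
    }
}
```

The advantage over an explicit `handler()` method is that existing code using `$this->handler` does not have to change; the price is that the magic is less visible to the reader.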

Example 5: Loading unnecessary language files / configs

Example: you have a large file with settings for all pages and all menu items. The file may contain many arrays.

If some of the data is used rarely and some often, the file is a candidate for splitting.

That is, these settings should be loaded only when they are needed.

An example of memory usage from our project:

A 16 KB file containing nothing but a data array requires 100 KB of memory or more.
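One way to load settings on demand is to keep each section in its own file and read it on first access. A minimal sketch; the `Config` class, the section names and the one-file-per-section layout are all assumptions, not our project's actual structure:

```php
<?php
// Hypothetical loader: each config section lives in its own file
// that returns an array, for example <dir>/menu.php.
class Config
{
    private $dir;
    private $loaded = array();

    public function __construct($dir)
    {
        $this->dir = rtrim($dir, '/');
    }

    public function get($section)
    {
        // Read the file on first access only; later calls use the cache.
        // $section must come from trusted code, never from user input.
        if (!array_key_exists($section, $this->loaded)) {
            $path = $this->dir . '/' . $section . '.php';
            $this->loaded[$section] = include $path;
        }
        return $this->loaded[$section];
    }

    public function loadedSections()
    {
        return array_keys($this->loaded);
    }
}

// Demo with two throwaway section files in a temporary directory.
$dir = sys_get_temp_dir() . '/config_demo_' . uniqid();
mkdir($dir);
file_put_contents($dir . '/menu.php', '<?php return array("home" => "/");');
file_put_contents($dir . '/rarely_used.php', '<?php return array("big" => "data");');

$config = new Config($dir);
$menu = $config->get('menu'); // only menu.php has been read so far
```

A page that never asks for `rarely_used` never pays for it in memory.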

Example 6: Using serialize / unserialize

We stored some frequently changing settings in a file in serialized form. We found that this loads both the CPU and RAM, and it turned out that in PHP 5.3.x the unserialize function leaks a lot of memory.

After the optimization we got rid of these functions wherever we could. We decided to store the data in files as an array saved with var_export and to load it back with include / require. This way the data files are ordinary PHP files and can be cached by APC.

Unfortunately, for data stored in memcache, this approach does not work.
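The var_export round trip can be sketched like this. The settings array, the file location and the write-then-rename step are assumptions added for illustration:

```php
<?php
// Hypothetical settings array and file location for the sketch.
$settings = array(
    'items_per_page' => 20,
    'languages' => array('en', 'ru'),
);
$file = sys_get_temp_dir() . '/settings_' . uniqid() . '.php';

// Save: var_export() with true as the second argument returns
// valid PHP source code for the value instead of printing it.
$code = '<?php return ' . var_export($settings, true) . ';' . "\n";

// Write to a temporary name and rename it into place, so that
// a reader does not include a half-written file.
file_put_contents($file . '.tmp', $code, LOCK_EX);
rename($file . '.tmp', $file);

// Load: a plain include, which an opcode cache can serve from memory.
$loaded = include $file;
```

This works most naturally for arrays of scalars and nested arrays, which is what settings usually are.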

Summary

All of these cases are easy to find and very easy to fix without changing the project structure much. We adopted a rule: “any file that requires more than 100 KB should be checked to see whether its loading can be optimized.” We also looked at situations where several files were loaded from the same branch of the class hierarchy, and checked whether the whole branch could be skipped. The key idea was: “everything we load should be there for a reason. If there is any way not to load something, it is better not to load it.”

Conclusion

After we eliminated everything described above, a single request to the server required about half as much RAM. This roughly halved the CPU load without any server reconfiguration and reduced the average page load time severalfold.

Future plans

If there is interest, I plan to describe ways of profiling code and finding bottlenecks with XHProf.