SDN: new flow control features in mesh networks

Hello, dear readers. We should say right away that this article is not about what is good or bad in SDN or other network technologies. We are not zealous advocates of software-defined networks. We simply want to share the solutions we arrived at while developing industrial mesh networks as part of building industrial wireless communication systems, and to talk about the opportunities that arise where technologies meet, letting you rely on well-proven solutions while keeping up with the times.

The efficiency of data networks is largely determined by the network-layer algorithms they implement (routing, load limiting, distribution of control information). There are many such algorithms, reflecting the variety of information-exchange requirements, the characteristics of the transmitted traffic flows, and the capabilities of the hardware and software. Moreover, every protocol has its own advantages and disadvantages, and an ideal routing algorithm apparently does not and cannot exist.

This fully applies to mesh networks, where two approaches to routing prevail: proactive (for example, the OLSR protocol) and reactive (for example, the AODV protocol). Highly effective hybrid schemes also exist.

OLSR (Optimized Link State Routing) [1] is based on collecting and disseminating control information about the state of the network. By processing this information, each node builds a model of the current network state in the form of a graph whose vertices correspond to network nodes and whose edges (or arcs) correspond to communication links. With this graph, any node can compute the "lengths" of the shortest paths to all destinations in the network and select the "optimal" route to any particular node.
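To make the last step concrete, here is a minimal Python sketch of the computation a node can run once it holds the link-state graph: Dijkstra's algorithm returning the path length and the first hop towards every destination. The graph format, node names and link costs are hypothetical; real OLSR (RFC 3626) builds its graph from HELLO and TC messages and uses hop count as the metric, which corresponds to setting every cost to 1.

```python
import heapq


def shortest_paths(graph, source):
    """Dijkstra over a link-state graph (illustrative sketch).

    graph: dict mapping node -> {neighbor: link cost}, costs assumed non-negative.
    Returns (dist, next_hop): the path length to each reachable node and the
    neighbor of `source` through which that node is reached.
    """
    dist = {source: 0}
    next_hop = {}
    heap = [(0, source, None)]  # (distance so far, node, first hop on the path)
    visited = set()
    while heap:
        d, node, first = heapq.heappop(heap)
        if node in visited:
            continue  # stale entry, a shorter path was already settled
        visited.add(node)
        if first is not None:
            next_hop[node] = first
        for neighbor, cost in graph.get(node, {}).items():
            nd = d + cost
            if neighbor not in dist or nd < dist[neighbor]:
                dist[neighbor] = nd
                # Leaving the source, the first hop is the neighbor itself.
                hop = neighbor if node == source else first
                heapq.heappush(heap, (nd, neighbor, hop))
    return dist, next_hop


# Hypothetical four-node topology with symmetric link costs.
graph = {
    "A": {"B": 1, "C": 4},
    "B": {"A": 1, "C": 1, "D": 5},
    "C": {"A": 4, "B": 1, "D": 1},
    "D": {"B": 5, "C": 1},
}
dist, next_hop = shortest_paths(graph, "A")
```

Here A reaches C and D more cheaply through B than over its direct or long links, so every route from A leaves via B, and D is at distance 3 (A, B, C, D).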

This algorithm copes well with many unforeseen events, primarily:

- spontaneous failures and recovery of nodes and links;

- damage to and repair of network nodes;

- hostile effects of the "external environment" that block individual elements of the system;

- nodes and links connecting and disconnecting as subscribers relocate during operation.

OLSR is most effective in networks with a high density of nodes where irregular, bursty traffic predominates. Its cost is that it constantly consumes part of the bandwidth for its control traffic.

Alongside OLSR, which computes routes between all pairs of subscribers within the subnet in advance (proactively), mesh networks implement another protocol, the reactive AODV (Ad hoc On-Demand Distance Vector) [2]. AODV searches for and sets up routes only when they are needed and tears them down after use.

AODV consumes fewer resources than OLSR because its control messages are relatively small and require less bandwidth to maintain routes and routing tables. On the other hand, it works well only when nodes join and leave the network infrequently.
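The on-demand behaviour of AODV can be illustrated with a simplified simulation: a route request floods outwards from the source, each node remembers where it first heard the request from, and the reply retraces that reverse path, installing a next hop at every node. This is only a sketch under strong assumptions: it omits the sequence numbers, hop counts, error messages and route lifetimes of the real protocol (RFC 3561), and it models "destruction after use" as an explicit teardown rather than timer expiry. All function names are hypothetical.

```python
from collections import deque


def discover_route(neighbors, source, dest):
    """Simplified AODV-style route discovery.

    neighbors: dict node -> iterable of radio neighbors.
    The route request (RREQ) is flooded breadth-first; each node records the
    neighbor it first heard the request from (the reverse path) and drops
    duplicates. Returns the path source..dest, or None if dest is unreachable.
    """
    came_from = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == dest:
            break
        for nb in neighbors.get(node, ()):
            if nb not in came_from:  # duplicate RREQs are ignored
                came_from[nb] = node
                queue.append(nb)
    if dest not in came_from:
        return None
    path = [dest]
    while path[-1] != source:  # follow the reverse path back to the source
        path.append(came_from[path[-1]])
    return path[::-1]


def install_route(tables, path):
    """Route reply (RREP) phase: each node on the path learns its next hop."""
    dest = path[-1]
    for node, nxt in zip(path, path[1:]):
        tables.setdefault(node, {})[dest] = nxt


def tear_down(tables, path):
    """Remove the on-demand entries once the route is no longer needed."""
    dest = path[-1]
    for node in path[:-1]:
        tables.get(node, {}).pop(dest, None)
```

Nodes not on the discovered path (for example, a neighbor that merely rebroadcast the request) end up with no route entry, which is where AODV saves table space and bandwidth compared with OLSR.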

Nevertheless, taken together, both protocols satisfy to some extent the basic requirements that an “ideal algorithm” must meet [3]. These requirements can be stated and commented on as follows:

- Correctness. Both algorithms are workable and contain no logical contradictions.

- Computational simplicity. The AODV algorithm requires minimal processor resources. The OLSR algorithm, by contrast, is fairly complex.

- Stability. Both algorithms converge to a definite solution without sharp oscillations when the state of the radio links changes slightly.

- Fairness. At first glance, both algorithms provide equal service to all network users. However, depending on their position in the network topology, individual users can capture a larger share of the resources than they need.

- Adaptability to changes in traffic and topology. Both algorithms can determine a new set of routes when the network topology changes. However, both are static with respect to external traffic and do not balance load.

The last drawback is characteristic of all known decentralized strategies except the "shortest queue plus bias" strategies [3], in which the route weight is a linear function of two parameters: the number of hops to the destination and the lengths of the output queues on the links along the route (a classic example is the Internet [3]). Mesh network protocols have no such strategies, and apparently never will, because such a strategy would require complex, time-consuming computations on every node and would hardly be stable: insignificant load fluctuations could trigger route recalculation.

Centralized strategies are free from these shortcomings. They rely on the global distribution of control information from an external "smart" device (the controller) to all nodes and, in the opposite direction, from all nodes to the controller, and can therefore adapt to the changes reported in the control messages.
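The decentralized shortest-queue strategy with a bias term discussed above, where the route weight is a linear function of hop count and queue lengths, can be sketched as a shortest-path search in which each link costs a fixed per-hop bias plus a multiple of its current output queue. The coefficients `hop_bias` and `queue_weight` and the queue figures are hypothetical; the example also shows the instability concern, since changing queue lengths alone is enough to switch the chosen route.

```python
import heapq


def sqb_route(links, source, dest, hop_bias=1.0, queue_weight=1.0):
    """Choose a route by a 'shortest queue plus bias' weight (illustrative).

    links: dict node -> {neighbor: current output queue length on that link}.
    Each link costs hop_bias + queue_weight * queue_length, so a route's total
    weight = hop_bias * hops + queue_weight * (sum of queues along the route).
    Returns (weight, path) for the lightest route, or None if unreachable.
    """
    best = {source: 0.0}
    heap = [(0.0, source, [source])]
    while heap:
        weight, node, path = heapq.heappop(heap)
        if node == dest:
            return weight, path
        if weight > best.get(node, float("inf")):
            continue  # stale heap entry
        for nb, queue_len in links.get(node, {}).items():
            w = weight + hop_bias + queue_weight * queue_len
            if w < best.get(nb, float("inf")):
                best[nb] = w
                heapq.heappush(heap, (w, nb, path + [nb]))
    return None


# Hypothetical snapshot: the short route A-B-D has 8 packets queued on B->D.
links = {
    "A": {"B": 0, "C": 0},
    "B": {"D": 8},
    "C": {"E": 0},
    "E": {"D": 0},
    "D": {},
}
```

With the queues taken into account, `sqb_route(links, "A", "D")` prefers the longer but idle route A, C, E, D (weight 3.0); with `queue_weight=0.0` it falls back to plain hop count and picks A, B, D (weight 2.0). Every node would have to rerun this search whenever its queue measurements change.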

In the simplest case, the controller computes routing tables with any method for optimal flow distribution, given the known topology and the external traffic. Among the possible approaches, two are worth noting: the controller periodically distributes the tables it has computed to all nodes, or it uses the virtual-circuit approach, computing an individual route for each pair of subscribers. The second approach can be very useful, and even necessary, for real-time data transmission.
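As an illustration of the virtual-circuit approach, the sketch below lets a controller route each subscriber pair in turn and produce per-node tables keyed by (source, destination). In place of a true optimal flow-distribution method it uses a simple greedy heuristic, which is our assumption rather than the authors' algorithm: a link's cost grows with the traffic already assigned to it, so later pairs are steered around loaded links. The function name, the single shared link capacity and the demand format are hypothetical.

```python
import heapq


def route_demands(adj, capacity, demands):
    """Greedy, load-aware per-pair routing computed centrally (sketch).

    adj: dict node -> list of neighbors (links usable in both directions).
    capacity: link capacity, assumed equal for all links (same unit as rates).
    demands: list of (src, dst, rate) tuples, routed one after another.
    Returns {node: {(src, dst): next_hop}}, the tables to push to the nodes.
    """
    load = {}    # (u, v) -> traffic already assigned to this link direction
    tables = {}
    for src, dst, rate in demands:
        # Dijkstra where each link costs 1 plus its current relative load.
        best = {src: 0.0}
        prev = {}
        heap = [(0.0, src)]
        while heap:
            d, u = heapq.heappop(heap)
            if u == dst:
                break
            if d > best[u]:
                continue  # stale heap entry
            for v in adj.get(u, ()):
                cost = 1.0 + load.get((u, v), 0.0) / capacity
                if d + cost < best.get(v, float("inf")):
                    best[v] = d + cost
                    prev[v] = u
                    heapq.heappush(heap, (d + cost, v))
        if dst not in best:
            continue  # unreachable pair: left unrouted in this sketch
        path = [dst]
        while path[-1] != src:
            path.append(prev[path[-1]])
        path.reverse()
        # Reserve the bandwidth and install one entry per hop.
        for u, v in zip(path, path[1:]):
            load[(u, v)] = load.get((u, v), 0.0) + rate
            tables.setdefault(u, {})[(src, dst)] = v
    return tables
```

For example, with links S-X, X-T, S-Y, Y-T and R-S, capacity 10 and demands `[("S", "T", 10), ("R", "T", 10)]`, the first pair is routed S, X, T; the second then costs less via Y, so node S ends up forwarding the two flows to the same destination T through different neighbors. Periodic table distribution would correspond to recomputing and pushing these tables on a timer.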

The advantage of centralized strategies is that intelligent computation is removed from all nodes except the controller. The main drawback is that the reliability of the network is determined by the reliability of the controller and of the secure channels used to control the managed nodes. If the controller fails, the network either stops functioning altogether or functions inefficiently, because dynamic routing turns into static routing. In addition, any centralized strategy assumes that control information can be delivered to every node of the network, which is not always possible when the network is deployed and reconfigured in a hostile or degrading environment. The controller's response time to events at the managed nodes is also critical. This response time includes assessing the situation, making a decision, generating control actions and delivering them to the nodes. If it is longer than the interval between control events, that is, if the controller cannot keep up with the rate at which events occur, the network will inevitably degrade.
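The timing condition at the end of the previous paragraph can be written compactly. The notation is introduced here for illustration: the four terms are the times spent assessing the situation, making a decision, generating control actions and delivering them, and $\lambda$ is the average rate of control events, so $1/\lambda$ is the mean interval between them. As a rough, average-case requirement, the controller keeps up only if

```latex
T_{\text{resp}} = t_{\text{assess}} + t_{\text{decide}} + t_{\text{gen}} + t_{\text{deliver}},
\qquad
T_{\text{resp}} < \frac{1}{\lambda}
\quad\Longleftrightarrow\quad
\lambda \, T_{\text{resp}} < 1 .
```

For example, a controller that needs 200 ms per event cannot keep up with events arriving on average every 100 ms ($\lambda = 10$ per second), since $\lambda T_{\text{resp}} = 2 > 1$. Bursts of events can overload the controller even when the average condition holds.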

A compromise is also possible: combining centralized and decentralized strategies. Under favorable conditions, the centralized strategy operates and the controller ensures the optimal distribution of the most important flows; when the controller fails, route computation and other intelligent calculations are performed by the available decentralized algorithms.
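One way to picture this switch-over on a single node is the sketch below. It assumes, purely for illustration, that the node treats the controller as alive while periodic heartbeat messages keep arriving within a timeout; while it is alive, routes installed by the controller take precedence, and otherwise, or for destinations the controller did not cover, the node uses the table built by its own decentralized protocol such as OLSR. The class, method names and the 3-second timeout are hypothetical.

```python
import time


class HybridForwarder:
    """Choose between controller-installed and locally computed routes (sketch).

    The controller counts as alive while heartbeats arrive at most `timeout`
    seconds apart. The clock is injectable so the failover can be tested.
    """

    def __init__(self, local_routes, timeout=3.0, clock=time.monotonic):
        self.local_routes = local_routes    # dst -> next hop from OLSR/AODV
        self.controller_routes = {}         # dst -> next hop pushed by controller
        self.timeout = timeout
        self.clock = clock
        self.last_heartbeat = None

    def on_heartbeat(self):
        """Called whenever a heartbeat from the controller is received."""
        self.last_heartbeat = self.clock()

    def controller_alive(self):
        return (self.last_heartbeat is not None
                and self.clock() - self.last_heartbeat <= self.timeout)

    def next_hop(self, dst):
        # Centralized decision while the controller is reachable...
        if self.controller_alive() and dst in self.controller_routes:
            return self.controller_routes[dst]
        # ...otherwise fall back to the decentralized routing table.
        return self.local_routes.get(dst)
```

In this design the controller's entries are kept but ignored while it is silent, so centralized routing resumes as soon as heartbeats return; a real system would also need to decide when such entries become too stale to reuse.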

This approach can also be implemented in mesh networks, but it requires specialized routing and address tables that map packet forwarding directions not only to destination addresses but also to more specific attributes, for example, selected source-destination pairs. Standard network protocols lack such capabilities, but the OpenFlow protocol solves this task easily.
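The difference from an ordinary destination-based table can be shown in a few lines. In this hypothetical sketch, entries for selected source-destination pairs are consulted first, and everything else follows the usual per-destination route, so two flows towards the same destination can leave a node through different neighbors.

```python
def lookup(pair_table, dst_table, src, dst):
    """Forwarding lookup with source-destination entries (hypothetical sketch).

    pair_table: {(src, dst): next_hop} for selected source-destination pairs.
    dst_table:  {dst: next_hop}, the ordinary destination-based routes.
    A matching pair entry wins; otherwise the destination route is used,
    or None if the destination is unknown.
    """
    if (src, dst) in pair_table:
        return pair_table[(src, dst)]
    return dst_table.get(dst)


# Hypothetical node: traffic from S1 to D is pinned to neighbor Y,
# all other traffic to D follows the ordinary route via X.
pair_table = {("S1", "D"): "Y"}
dst_table = {"D": "X"}
```

Here `lookup(pair_table, dst_table, "S1", "D")` returns `"Y"`, while the same destination from `"S2"` returns `"X"`.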

One inconspicuous but highly significant feature of this protocol is that a "flow" can mean almost anything: you can create your own flow classifier adapted to a specific network task. For example, in the first version of the OpenFlow protocol, a TCP flow can be defined by the values of 10 fields. OpenFlow thus makes it possible to find a compromise between standard solutions and custom algorithms, which can coexist in the same environment without "interfering" with each other.

The essence of such a compromise is as follows:

- mechanisms are built into the standard protocol stack that split the served traffic into parts: one part can be handled by the standard TCP/IP protocols and the rest by custom procedures;

- to classify packets, the header fields of received packets can be used, such as the source and destination IP addresses, the corresponding MAC addresses, port numbers, etc.;

- to route "non-standard" flows, flow tables can be used that map packet forwarding directions not only to destination addresses but also according to more complex dynamic logic: source-destination pairs, access rights, server load, service priorities, etc.
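The three points above can be sketched as a small flow table in the spirit of OpenFlow: each rule matches a subset of header fields (missing fields act as wildcards), rules are tried in priority order, and packets that match nothing are handed back to the standard TCP/IP processing. The field names, action strings and example addresses are hypothetical, and this is not the OpenFlow wire format; the `"normal"` fallback only loosely mirrors OpenFlow's reserved NORMAL port.

```python
from dataclasses import dataclass, field

# Header fields a rule may match on (hypothetical subset of classic match fields).
FIELDS = ("src_mac", "dst_mac", "src_ip", "dst_ip", "ip_proto", "src_port", "dst_port")


@dataclass
class FlowRule:
    priority: int
    match: dict   # field -> required value; fields not listed are wildcards
    action: str   # e.g. "out:Y" for a custom route

    def matches(self, packet):
        return all(packet.get(f) == v for f, v in self.match.items())


@dataclass
class FlowTable:
    rules: list = field(default_factory=list)

    def add(self, rule):
        unknown = set(rule.match) - set(FIELDS)
        if unknown:
            raise ValueError(f"unknown match fields: {sorted(unknown)}")
        self.rules.append(rule)
        # Highest priority first; equal priorities keep insertion order.
        self.rules.sort(key=lambda r: r.priority, reverse=True)

    def classify(self, packet):
        """Return the action of the highest-priority matching rule.
        Unmatched packets go to the standard TCP/IP stack ("normal")."""
        for rule in self.rules:
            if rule.matches(packet):
                return rule.action
        return "normal"


table = FlowTable()
# A selected source-destination pair gets its own route.
table.add(FlowRule(10, {"src_ip": "10.0.0.1", "dst_ip": "10.0.0.9"}, "out:Y"))
# A hypothetical real-time UDP stream is separated out with higher priority.
table.add(FlowRule(20, {"ip_proto": 17, "dst_port": 5004}, "out:Z"))
```

With this table, TCP traffic from 10.0.0.1 to 10.0.0.9 is sent to `"out:Y"`, UDP packets to port 5004 go to `"out:Z"` even between the same hosts, and any other packet is left to the standard protocols.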

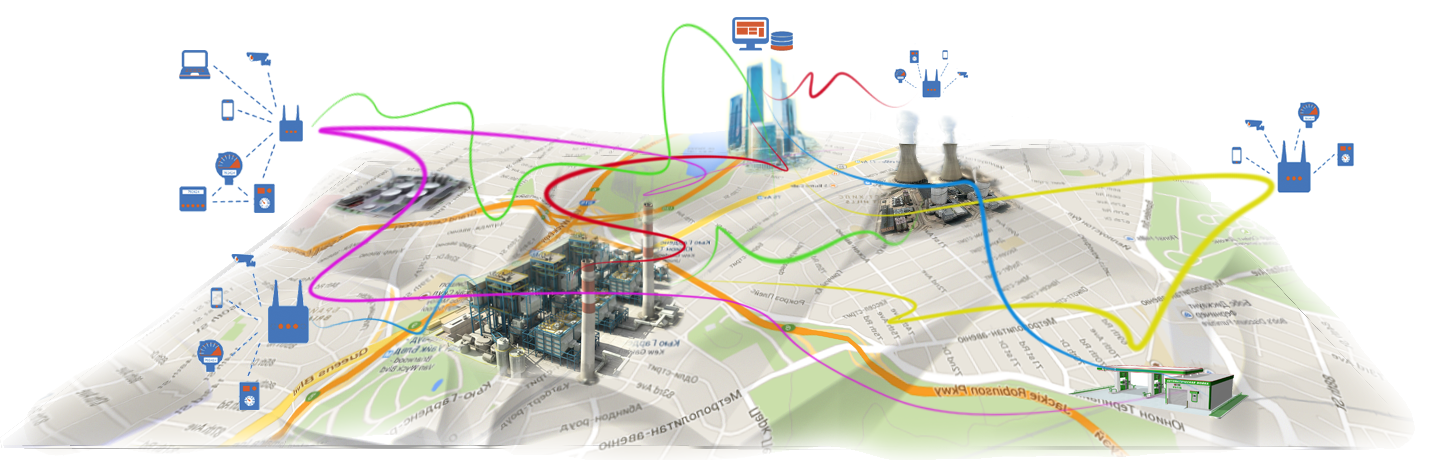

In conclusion, the hybrid approach shows its advantages most clearly in networks that operate with limited resources and under harsh conditions. Such networks include industrial communication networks, special-purpose networks, rapidly deployable communication systems, and wireless mesh networks.

If this article interests the community, we will be glad to write a sequel describing how the hybrid approach is implemented in industrial wireless mesh networks.

Thank you for your attention!