Muddled thoughts on the future of FPGA technology

While working on my next (educational) FPGA game project for the Mars rover2 board, I ran into the fact that I clearly did not have enough room on the chip. The project does not seem very complicated, but my implementation needs a lot of logic. In principle, this is nothing unusual, an everyday problem: if you really need more, you can pick an FPGA with a larger capacity. My project is the game of Life, implemented in an FPGA in the Verilog HDL language.

I will not describe the rules of the game itself; enough has already been written about them.

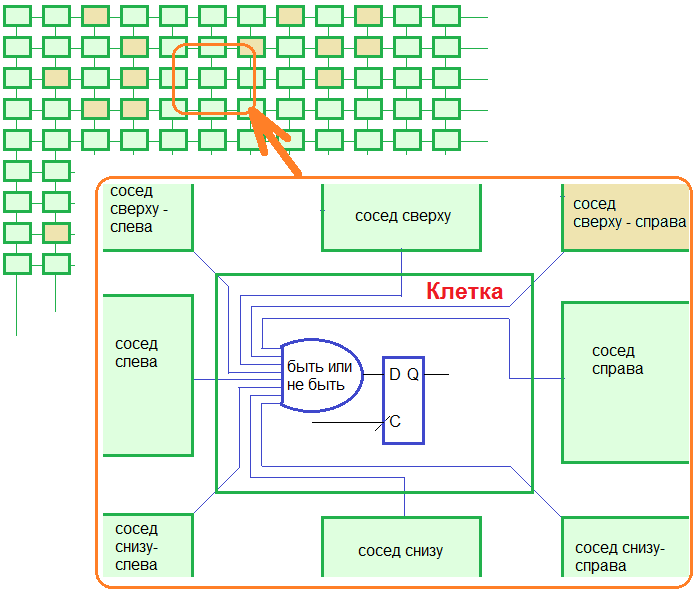

The idea of the project is this: each cell of the playing field is an independent computing unit. Each unit has its own logic function and its own register, which stores the current state of the cell (alive / dead). The whole space in which the cells live is a two-dimensional array of these units, connected into a single network. All units work synchronously, since the same clock is fed to every register. The figure above should make the design clearer.

So, my board carries an Altera Cyclone III EP3C10E144C8 FPGA with 10 thousand logic elements. At first I thought I could build a two-dimensional array of 128x64 = 8192 cells. It did not fit. 64x64 = 4096 did not fit into the chip either. How could that be? I managed to fit only 32x16 = 512 cells into the FPGA. Sad...

These reflections led me to the idea that FPGA technology may one day grow into something more than programmable logic. Here I would like to describe this vision. I'll warn the sophisticated reader right away that much of what follows is just a figment of my imagination and may even be nonsense.

However...

Currently, FPGA technology is used by a fairly narrow circle of specialists. FPGAs are mainly used for prototyping chips and for small-volume products, where manufacturing an ASIC is not economically justified. I don't think there are very many FPGA experts: the technology is quite complex and requires a lot of specialized knowledge. On the other hand, FPGAs are perhaps the most suitable technology for high-speed parallel computing.

We know, for example, that Altera offers and promotes OpenCL technology.

Simply put, it is a C-like language for describing parallel computations on an FPGA accelerator. By the way, Nvidia also supports parallel computing with OpenCL on its graphics cards.

So Altera is clearly thinking about how to bring "ordinary" C-like parallel computing on FPGAs to the masses. Let's see what boards for FPGA parallel computing cost. Take the Terasic DE5-Net board.

Price: 8 thousand bucks. 25 users like this (just kidding).

There have been other attempts to bring FPGAs to the masses: Altera and Intel released the E6x5C series chip, in which an Atom processor and an Arria II FPGA are combined in a single package. A good attempt, but the technology does not seem to have found a mass market.

Apparently, existing FPGA chips do not fit well into the modern programming paradigm.

And what is our current paradigm of “normal” programming?

It seems to me that it goes something like this:

- several processes and threads are always running on a computer at the same time;

- the state of processes and threads is kept in memory; a process can request as much memory from the OS as it needs, even more than physically exists, and it can actually get this virtual memory;

- computations are done by the processor, which often has many cores. A process or thread usually does not know (or care) which core it is currently running on;

- a higher-priority task can preempt a lower-priority one. Preempting means saving the memory pages of an idle process to disk, switching to the higher-priority process, and giving it a larger time slice.

Clearly, the more memory and the more processor cores there are, the faster all processes in the system run.

Memory and processor cores are the computer's resources, which the operating system distributes among processes.

Now imagine that in the future we get computers in which, alongside memory and processor cores, there are also FPGA-like logic blocks. By its nature, this “FPGA-like” resource sits somewhere between the processor and memory. On the one hand, an FPGA-like block is a computing unit, which makes it closer to the processor; on the other hand, it is also memory, because a lot of information is stored in its registers. Saving the state of an “FPGA-like” unit in the task_struct structure on a task context switch would be rather difficult. But perhaps the state of logic blocks could be pushed out to a swap file, like virtual memory, and the OS could temporarily load other logic blocks for the active thread...

By the way, both Altera and Xilinx have long offered FPGAs that can reconfigure individual regions, or logic blocks, separately (Partial Reconfiguration), so in principle they are already moving in this direction...

A programmer could allocate as much FPGA-like logic for a process as needed. Literally, a function lalloc (logic alloc), similar to malloc (memory alloc). Then you read the “FPGA firmware” from a file, and it comes alive and works. By and large, we programmers should not have to think about whether the system has this “FPGA-like” memory/logic or not; that is not our concern. Yes, with limited resources things may sometimes be very slow, but don't we know how video games lag on weak graphics cards? Users know they will have to pay for powerful hardware, but it would be nice if even the smallest system already had “FPGA-like” logic blocks available to programs.

Of course, in reality I suspect such a technology is unlikely to appear soon; it is all too complicated and not yet feasible. At the very least, existing FPGA chips are not well suited for this, if only because they are configured through slow serial interfaces such as JTAG. But maybe one of us will make it happen someday?

PS: by the way, this is my game of Life in an FPGA, this small, 32x16... well, I don't have virtual FPGA logic.

PS2: all the sources and a detailed description of the Life project are available here.