Kubernetes 1.12: a review of major innovations

Today is September 27, which means that during working hours (in US time zones) we can expect the next Kubernetes release, 1.12 (although its official announcement is sometimes delayed). In other words, it is time to continue our glorious tradition and talk about the most significant changes, based on public information from the project: the Kubernetes features tracking table, CHANGELOG-1.12, numerous issues, pull requests, and design proposals. So what's new in K8s 1.12?

Storage

If one thing had to be singled out as the most frequently mentioned across all the issues related to the Kubernetes 1.12 release, it would probably be the Container Storage Interface (CSI), which we wrote about just the other day. So let's start with the changes in storage support.

CSI plugins as such remain in beta and are expected to be declared stable in the next Kubernetes release (1.13). So what is new in CSI support?

In February of this year, work began on the concept of topology in the CSI specification itself. In short, topology is information about how a cluster is segmented (for example, into racks for on-premise installations or into regions and zones for cloud environments) that orchestration systems need to know about and take into account. Why? Volumes allocated by storage providers are not necessarily equally accessible throughout the cluster, so knowledge of the topology is needed to plan resources effectively and to make provisioning decisions.

Topology was accepted into the CSI specification on June 1, and as a result it is supported in Kubernetes 1.12:

- core support (registering the volume topology information reported by the CSI driver): alpha;

- topology support in dynamic provisioning (see the design proposal "Volume Topology-aware Scheduling" for details): straight to beta;

- GCE PD topology support: alpha;

- AWS EBS topology support: beta.
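To make the idea of topology more concrete, here is a minimal Python sketch of how an orchestrator might use it: a volume reports the topology segments from which it is accessible, and only nodes whose labels match one of those segments are considered. The label keys (`example.com/zone`, `example.com/rack`) and function names are hypothetical; real CSI drivers define their own keys, and this is an illustration of the concept rather than the Kubernetes implementation.

```python
from typing import Dict, List

# A topology segment is a set of key/value labels, e.g. {"example.com/zone": "zone-a"}.
# The key names used here are hypothetical; real CSI drivers define their own.
Segment = Dict[str, str]


def node_in_segment(node_labels: Dict[str, str], segment: Segment) -> bool:
    """A node belongs to a segment if it carries every key/value pair of that segment."""
    return all(node_labels.get(key) == value for key, value in segment.items())


def nodes_that_can_reach(volume_topology: List[Segment],
                         nodes: Dict[str, Dict[str, str]]) -> List[str]:
    """Return names of nodes from which a volume with this accessible topology is usable."""
    return [name for name, labels in nodes.items()
            if any(node_in_segment(labels, seg) for seg in volume_topology)]


nodes = {
    "node-1": {"example.com/zone": "zone-a"},
    "node-2": {"example.com/zone": "zone-b"},
    "node-3": {"example.com/zone": "zone-a", "example.com/rack": "r7"},
}
# A volume provisioned in zone-a is only usable from zone-a nodes.
print(nodes_that_can_reach([{"example.com/zone": "zone-a"}], nodes))  # prints ['node-1', 'node-3']
```

With topology-aware dynamic provisioning, the same kind of check can run in the other direction: the scheduler picks a node first (delayed binding, the StorageClass `volumeBindingMode: WaitForFirstConsumer` setting), and the volume is then provisioned in a segment that node belongs to.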

But the CSI-related updates don't end there. Another important innovation in Kubernetes 1.12 is snapshot support for CSI (currently in alpha). Volume snapshots as such appeared in K8s 1.8. Their main implementation, which consists of a controller and a provisioner (two separate binaries), was moved to an external repository. Since then, support has been added for GCE PD, AWS EBS, OpenStack Cinder, GlusterFS, and Kubernetes hostPath volumes. The new design proposal is intended to "continue this initiative by adding support for CSI Volume Drivers" (snapshot support in the CSI specification is described here). Since Kubernetes follows the principle of keeping only a minimal set of features in the core API, this implementation (like the snapshots in the Volume Snapshot Controller) uses CRDs (CustomResourceDefinitions).

And a couple of new features for CSI drivers:

- alpha: the ability of a driver to register itself with the Kubernetes API (to make it easier for users to find the drivers installed in the cluster and to let drivers influence how Kubernetes interacts with them);

- alpha: the ability of a driver to receive information about the pod that requested the volume through NodePublish.

Dynamic maximum volume count per node, a mechanism introduced in the previous Kubernetes release, has advanced from alpha to beta and gained... you guessed it, support for CSI, as well as for Azure.
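The check behind a per-node volume limit is essentially counting: before placing a pod, make sure the volumes it needs, added to those already attached, do not exceed what the node reports it can attach. Below is a minimal Python sketch under that assumption. The grouping keys are modeled on the `attachable-volumes-*` resources a node can report, but treat the exact names, and the function itself, as illustrative rather than the scheduler's actual code.

```python
from typing import Dict, Set


def fits_volume_limits(attached: Dict[str, Set[str]],
                       requested: Dict[str, Set[str]],
                       limits: Dict[str, int]) -> bool:
    """Return True if the node can take the pod's volumes without exceeding any limit.

    attached:  volume IDs already attached to the node, grouped by limit key
    requested: volume IDs the new pod needs, grouped the same way
    limits:    maximum number of attachable volumes the node reports per key
    """
    for key, volumes in requested.items():
        limit = limits.get(key)
        if limit is None:
            continue  # the node reports no limit for this volume type
        # A volume already attached to the node (e.g. shared with another pod) counts once.
        if len(attached.get(key, set()) | volumes) > limit:
            return False
    return True
```

Taking the union rather than summing counts is the design choice that matters here: a pod that reuses an already attached volume should not be rejected for it.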

Finally, mount namespace propagation, which allows a volume to be mounted as rshared (so that all mounts made in the container's directories are visible on the host) and which was beta in K8s 1.10, has been declared stable.

Scheduler

The Kubernetes 1.12 scheduler gets a performance boost from an alpha mechanism that limits the search for feasible nodes in the cluster. Previously, on every attempt to schedule each pod, kube-scheduler checked the feasibility of all nodes and passed them all on for scoring; now it finds only a certain number of feasible nodes and then stops searching. The mechanism makes sure that nodes are selected from different regions and zones, and that different nodes are examined in different scheduling cycles (rather than the first 100 nodes on every run). The decision to implement it was based on an analysis of scheduler performance data: while the 90th percentile of scheduling time was 30 ms per pod, the 99th percentile was already 60 ms.
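The three ideas in the paragraph above (stop after enough feasible nodes, spread the search across zones, resume from a different place each cycle) can be sketched in Python as follows. All names are hypothetical, and the policy in `nodes_to_find` (a percentage of the cluster with a floor of 100 nodes, echoing the figure above) is an assumption for illustration, not the exact kube-scheduler logic.

```python
from itertools import zip_longest
from typing import Callable, Dict, List, Tuple


def nodes_to_find(total_nodes: int, percentage: int, minimum: int = 100) -> int:
    """How many feasible nodes to collect before stopping (hypothetical policy)."""
    if total_nodes <= minimum:
        return total_nodes
    return max(minimum, total_nodes * percentage // 100)


def interleave_by_zone(nodes: List[Tuple[str, str]]) -> List[str]:
    """Order (name, zone) pairs round-robin across zones so that even a
    partial scan visits every zone early."""
    by_zone: Dict[str, List[str]] = {}
    for name, zone in nodes:
        by_zone.setdefault(zone, []).append(name)
    ordered: List[str] = []
    for group in zip_longest(*by_zone.values()):
        ordered.extend(name for name in group if name is not None)
    return ordered


class PartialNodeSearch:
    def __init__(self, nodes: List[Tuple[str, str]], num_to_find: int):
        self.order = interleave_by_zone(nodes)
        self.num_to_find = num_to_find
        self.next_start = 0  # where the next scheduling cycle resumes scanning

    def find_feasible(self, is_feasible: Callable[[str], bool]) -> List[str]:
        n = len(self.order)
        if n == 0:
            return []
        found: List[str] = []
        checked = 0
        while checked < n and len(found) < self.num_to_find:
            node = self.order[(self.next_start + checked) % n]
            checked += 1
            if is_feasible(node):
                found.append(node)
        # Resume after the last node examined, so the next cycle looks at different nodes.
        self.next_start = (self.next_start + checked) % n
        return found
```

Only the nodes returned by `find_feasible` would then be passed on for scoring, which is where the time savings come from in large clusters.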

In addition, the following scheduler features have matured to beta:

- Taint Node by Condition, which appeared in K8s 1.8 and lets a node be marked with a certain status (for further action) when certain events occur: now the node lifecycle controller creates taints automatically, and the scheduler checks taints instead of conditions;

- scheduling DaemonSet pods with kube-scheduler (instead of the DaemonSet controller), which is now also enabled by default;

- specifying a priority class in ResourceQuota.
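For Taint Node by Condition, the division of labor can be sketched in a few lines of Python: one function plays the node lifecycle controller (conditions in, taint keys out), the other plays the scheduler (the pod fits only if it tolerates every taint). The taint keys are a subset we believe matches the well-known `node.kubernetes.io/*` keys; tolerations are simplified to plain key matching, whereas real tolerations also have an operator, value, and effect.

```python
from typing import Dict, List

# (condition type, condition status) -> taint key applied by the node lifecycle controller.
# An illustrative subset; real taints also carry an effect such as NoSchedule.
CONDITION_TAINTS = {
    ("Ready", "False"): "node.kubernetes.io/not-ready",
    ("Ready", "Unknown"): "node.kubernetes.io/unreachable",
    ("MemoryPressure", "True"): "node.kubernetes.io/memory-pressure",
    ("DiskPressure", "True"): "node.kubernetes.io/disk-pressure",
    ("NetworkUnavailable", "True"): "node.kubernetes.io/network-unavailable",
}


def taints_for(conditions: Dict[str, str]) -> List[str]:
    """Controller side: translate a node's conditions (type -> status) into taint keys."""
    return [key for (ctype, status), key in CONDITION_TAINTS.items()
            if conditions.get(ctype) == status]


def tolerates_all(node_taints: List[str], pod_tolerations: List[str]) -> bool:
    """Scheduler side, simplified to key matching: the pod fits only
    if it tolerates every taint on the node."""
    return all(taint in pod_tolerations for taint in node_taints)
```

The point of the change is visible in the split: the scheduler no longer needs to know which conditions matter, because that knowledge now lives in one place, the controller's mapping.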

Cluster nodes

An interesting innovation is the appearance (as an alpha) of RuntimeClass, a new cluster-level resource for describing the parameters of a container runtime. RuntimeClasses are assigned to pods through the runtimeClassName field in PodSpec, which makes it possible to use multiple runtimes within a single cluster or node. Why? “Interest in using different runtimes within a cluster is growing. At the moment, the main motivation for this is sandboxes and the desire of Kata Containers and gVisor to integrate with Kubernetes. In the future, other runtime models, such as Windows containers or even remote runtimes, will also need support. RuntimeClass offers a way to choose among the different runtimes configured in a cluster and to change their properties (both by the cluster and by the user).”
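The lookup RuntimeClass enables can be sketched as follows: a pod names a RuntimeClass, the RuntimeClass names a runtime handler configured in the CRI implementation, and that handler string is what reaches the runtime. The `RuntimeClass` dataclass here is a simplified, hypothetical stand-in for the real API object, the "empty name means default handler" rule is modeled as an empty string, and raising an error for an unknown class is this sketch's choice rather than a claim about kubelet behavior.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class RuntimeClass:
    """Simplified stand-in for the cluster-scoped RuntimeClass resource."""
    name: str
    runtime_handler: str  # handler configured in the CRI runtime, e.g. "kata-runtime"


class RuntimeClassNotFound(Exception):
    pass


def resolve_handler(runtime_class_name: Optional[str],
                    classes: Dict[str, RuntimeClass]) -> str:
    """Map a pod's runtimeClassName to the handler string passed to the CRI.

    An empty or missing name selects the runtime's default handler, modeled here as "".
    """
    if not runtime_class_name:
        return ""
    try:
        return classes[runtime_class_name].runtime_handler
    except KeyError:
        raise RuntimeClassNotFound(runtime_class_name) from None


classes = {"kata": RuntimeClass("kata", "kata-runtime")}
print(resolve_handler("kata", classes))  # prints kata-runtime
```

The indirection is the design point: pod authors refer to a stable cluster-level name, while administrators decide which actual runtime configuration that name maps to on the nodes.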

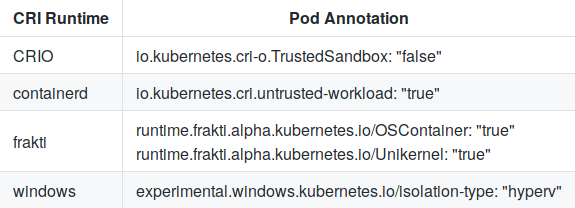

To choose among predefined configurations, a RuntimeHandler is passed through the CRI (Container Runtime Interface); it is intended to replace the pod annotation currently used for this purpose.

The corresponding containerd configuration for kata-runtime looks like this:

```toml
[plugins.cri.containerd.kata-runtime]
  runtime_type = "io.containerd.runtime.v1.linux"
  runtime_engine = "/opt/kata/bin/kata-runtime"
  runtime_root = ""
```

The criterion for RuntimeClass in alpha is successfully passing the CRI validation tests. In addition, the kubelet's mechanism for registering local plugins (including CSI) and shareProcessNamespace (which is now enabled by default) have been promoted to beta.

Network

The main networking news in Kubernetes is alpha support for SCTP (Stream Control Transmission Protocol). This telecommunications protocol is now supported in Pod, Service, Endpoint, and NetworkPolicy alongside TCP and UDP. “With the new feature, applications that require SCTP as the L4 protocol for their interfaces can be deployed to Kubernetes clusters more easily; for example, they will be able to use service discovery based on kube-dns, and their interaction will be controlled through NetworkPolicy.” Implementation details are available in this document.

Two networking capabilities introduced in K8s 1.8 have also reached stable status: support for egress traffic policies (EgressRules) in the NetworkPolicy API, and applying source/destination CIDR rules through ipBlockRule.

Scaling

Improvements in the Horizontal Pod Autoscaler include:

- an updated algorithm that reaches the correct size faster (alpha and beta at once), described in a new section of the documentation;

- further development of custom metrics, whose second beta version received a revised API and support for label selectors.
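At the heart of the Horizontal Pod Autoscaler is a proportional rule: scale the replica count by the ratio of the observed metric to its target, and ignore small deviations to avoid flapping. Here is a minimal Python sketch of that basic rule; the default tolerance of 0.1 is, to our knowledge, the controller's default, while the `min_replicas`/`max_replicas` defaults are hypothetical. The refinements of the updated 1.12 algorithm (such as the handling of pods that are not yet ready or have missing metrics) are deliberately not modeled.

```python
import math


def desired_replicas(current_replicas: int, current_value: float, target_value: float,
                     tolerance: float = 0.1, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Core HPA rule: scale the replica count by the ratio of the observed metric
    to its target, ignoring small deviations inside the tolerance band."""
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: do nothing
    else:
        desired = math.ceil(current_replicas * ratio)
    # Keep the result inside the bounds configured on the autoscaler (illustrative defaults).
    return max(min_replicas, min(max_replicas, desired))


# 4 pods at 90% average CPU with a 50% target: 4 * 1.8 = 7.2, rounded up to 8.
print(desired_replicas(4, 90, 50))  # prints 8
```

Rounding up rather than to the nearest integer errs on the side of capacity, which is why 7.2 becomes 8.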

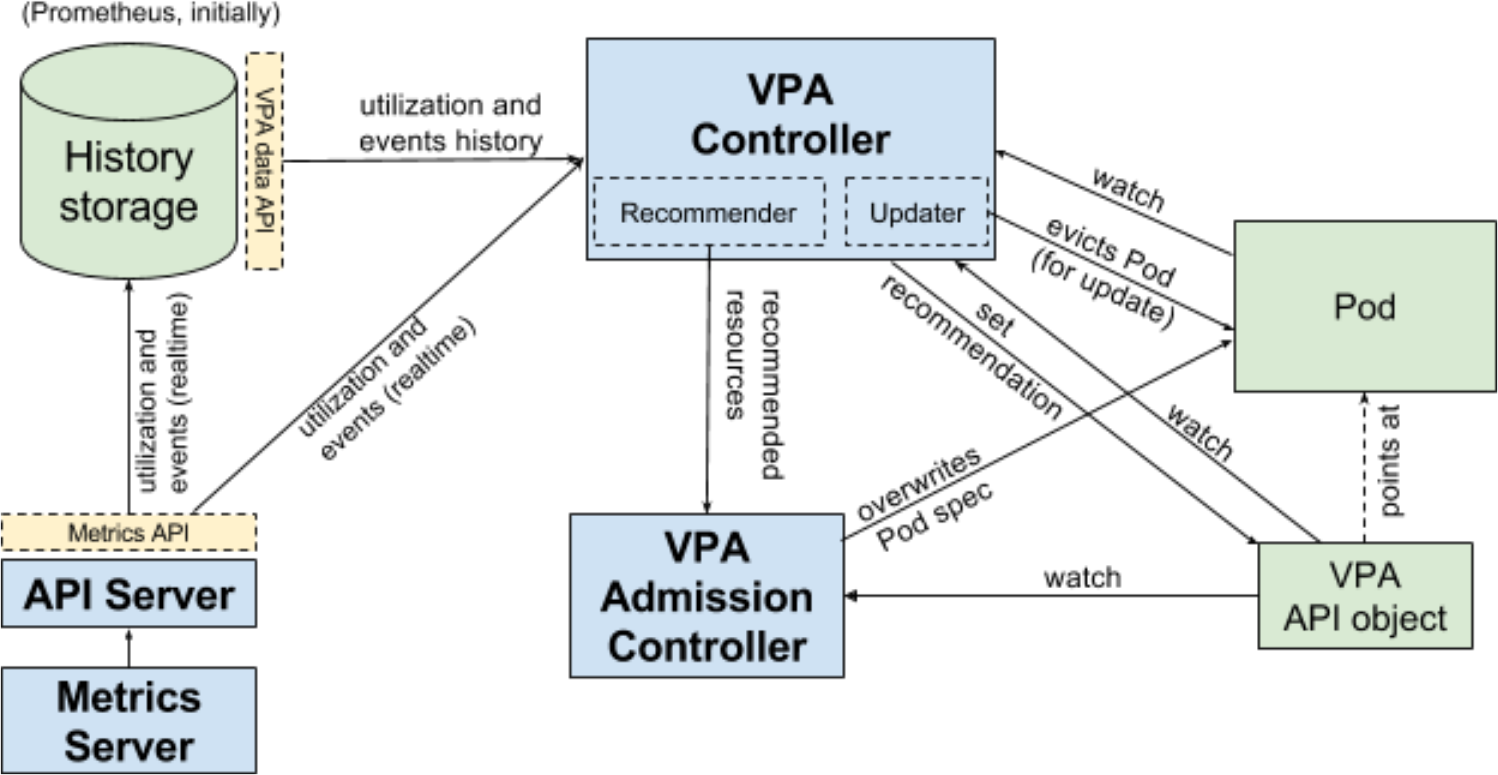

Vertical pod autoscaling is not standing still either: before reaching beta, it lacked user testing. The authors now consider that testing sufficient for the K8s 1.12 release and remind us that this feature is an add-on to Kubernetes rather than part of its core. All development takes place in a separate repository, where a beta release will be timed to coincide with the Kubernetes release.

Vertical Pod Autoscaler (VPA) workflow in Kubernetes

Finally, K8s 1.12 includes (as an alpha) the results of almost two years of work on “simplifying installation with ComponentConfig” (within sig-cluster-lifecycle). Unfortunately, for some unknown reason (a simple oversight?), the design proposal with the details is closed to anonymous users.

Other changes

API

Two new features are implemented in the api-machinery group:

- a dry-run parameter for the apiserver (alpha), which simulates request validation and processing;

- a Resource Quota API change (straight to beta) that allows resources to be treated as limited by default (instead of the current behavior, where resource consumption is unlimited if no quota is set).

Azure

Declared stable:

Added first implementations (alpha versions):

- Azure Availability Zones support;

- support for cross resource group (RG) nodes and unmanaged (on-prem) nodes.

Kubectl

- An alpha version of the updated plugin mechanism has been implemented, which allows both adding new commands and overriding existing subcommands at any nesting level. It works similarly to Git, looking for executables whose names start with kubectl- in the user's $PATH. See the design proposal for details.

- A beta version of extracting the pkg/kubectl/genericclioptions package from kubectl into an independent repository has been implemented.

- Server-side printing has been declared stable.
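The Git-style plugin lookup can be modeled in Python as a longest-prefix match over `kubectl-`-prefixed executables on a PATH-style search path: `kubectl foo bar baz` tries `kubectl-foo-bar-baz`, then `kubectl-foo-bar`, then `kubectl-foo`, and the remaining words become the plugin's arguments. This is a simplified, hypothetical model of the mechanism (for instance, it stops collecting command words at the first flag), not kubectl's own code.

```python
import os
from typing import List, Optional, Tuple


def find_executable(name: str, search_path: str) -> Optional[str]:
    """Return the first executable called `name` in a PATH-style string, or None."""
    for directory in search_path.split(os.pathsep):
        candidate = os.path.join(directory, name)
        if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
            return candidate
    return None


def resolve_plugin(args: List[str], search_path: str) -> Optional[Tuple[str, List[str]]]:
    """Map `kubectl <args>` to a plugin using longest-prefix matching.

    `kubectl foo bar baz` tries kubectl-foo-bar-baz, then kubectl-foo-bar, then
    kubectl-foo; whatever is left over is passed to the plugin as arguments.
    Collecting command words stops at the first flag.
    """
    words: List[str] = []
    for arg in args:
        if arg.startswith("-"):
            break
        words.append(arg)
    for i in range(len(words), 0, -1):
        plugin = find_executable("kubectl-" + "-".join(words[:i]), search_path)
        if plugin is not None:
            return plugin, args[i:]
    return None
```

For example, `resolve_plugin(["foo", "bar"], os.environ.get("PATH", ""))` would report which executable, if any, would handle `kubectl foo bar` for the current user.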

Other

- An alpha version of the new TTL-after-finished mechanism has been introduced, designed to limit the lifetime of Jobs and Pods that have finished executing. Once the specified TTL expires, the objects are cleaned up automatically, without the need for user intervention.

- Generation of a private key and CSR by the kubelet for cluster-level certificate signing (TLS bootstrap) has been declared stable.

- Rotation of the kubelet's server TLS certificate has moved to beta.
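The TTL-after-finished rule itself is simple arithmetic: an object becomes eligible for deletion once its finish time plus the TTL has passed (for Jobs, the alpha feature uses the `ttlSecondsAfterFinished` field of the Job spec). A minimal Python sketch of that rule follows; the function names are hypothetical, and the actual controller additionally watches objects and schedules the cleanup.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def cleanup_deadline(finished_at: Optional[datetime],
                     ttl_seconds: Optional[int]) -> Optional[datetime]:
    """Moment a finished object becomes eligible for deletion.

    Returns None if the object has not finished yet or has no TTL set,
    in which case it is never cleaned up automatically."""
    if finished_at is None or ttl_seconds is None:
        return None
    return finished_at + timedelta(seconds=ttl_seconds)


def should_delete(finished_at: Optional[datetime], ttl_seconds: Optional[int],
                  now: datetime) -> bool:
    deadline = cleanup_deadline(finished_at, ttl_seconds)
    return deadline is not None and now >= deadline


# Example: a Job that finished at noon UTC with a 100-second TTL.
done = datetime(2018, 9, 27, 12, 0, tzinfo=timezone.utc)
print(should_delete(done, 100, done + timedelta(seconds=30)))   # False: TTL not expired yet
print(should_delete(done, 100, done + timedelta(seconds=100)))  # True: eligible for cleanup
```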
