Testing layout of a news site with responsive design

One of the most noteworthy challenges that EastBanc Technologies QA has ever faced is building an automated testing system for www.washingtonpost.com. The site is an online newspaper, implemented as an information and news portal.

The main reason for the need to create an automated testing system was that the application planned to switch to a new CMS (the so-called PageBuilder), which should replace several other CMS that were previously used to publish content in various sections of the site. With this kind of migration, it is very important not to make mistakes so that the content published through the new CMS on various pages looks appropriate.

We are not faced with the task of checking all pages for compliance with our tests. Our task is to identify the bugs of PageBuilder, to check the reliability of the layout of pages created by a freshly baked PageBuilder, to draw the attention of Washingtonpost editors to the nuances of filling a specific page with content that could lead to potential problems in displaying pages.

The creation of a testing system is under active development, but some interesting points, in our opinion, can already be presented to the general public.

Before we do this, it is necessary to note one feature of the project: all testing with us takes place “outside”. Those. we, like any other user, use a combat version of the site for testing.

After exploring the Internet, we settled on the following approaches and tools. To test the page frame, we adopted the Galen framework , which we later integrated with testNG.

Naturally, the passed Galen test for the page frame does not mean the validity of the layout. In addition to the location of the blocks, you also need to check the display of various elements inside the block. We decided to test the internal content of the blocks by comparing screenshots.

Various logos, buttons, some blocks with a specific display fall to the mercy of screenshot tests — everything that Galen does not reach and that it is difficult / impossible to verify with functional tests.

Azure - tested by Galen, flooded with green - screenshot tests:

Caution! Big picture

Galen and Screenshot tests can successfully replace some functional tests, sometimes winning in visibility, sometimes in speed, and sometimes in both. The choice of the testing method for a particular case is carried out by us through a collective discussion of the test case for each type of page based on performance criteria, ease of support, completeness of test coverage and visibility.

For example, there are 2 blocks for the verification of which we originally wrote functional tests: Most Read and Information Block. Now we check the first with screenshots, and the second with the galen test.

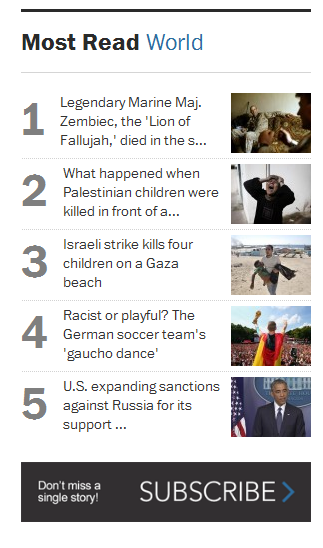

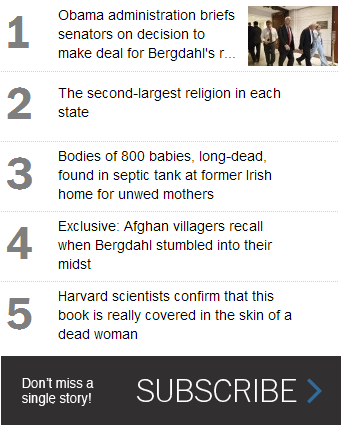

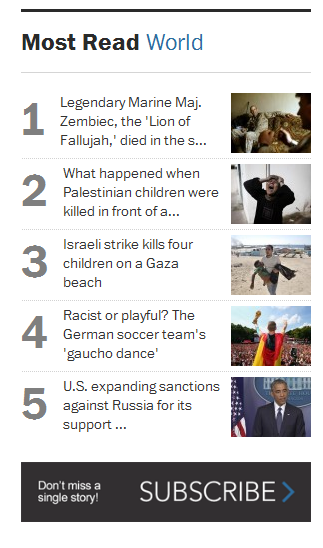

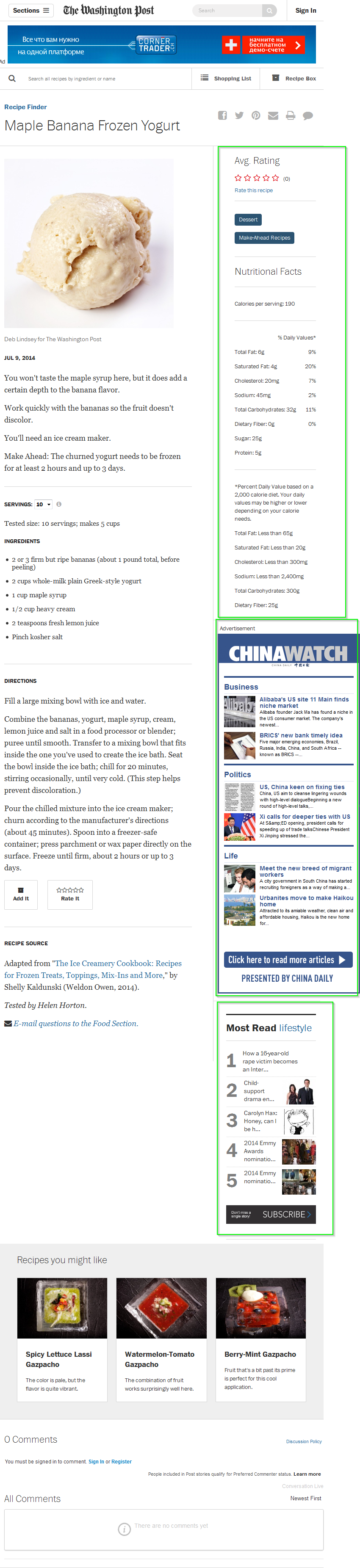

MostRead Block, verification by screenshot test:

Regarding the functional test: the lines of code have become significantly less, the completeness of the test coverage has increased, and updating the test when changing the appearance of this block on the page will not take much time.

Testing of this block is discussed in the chapter on the screenshot method.

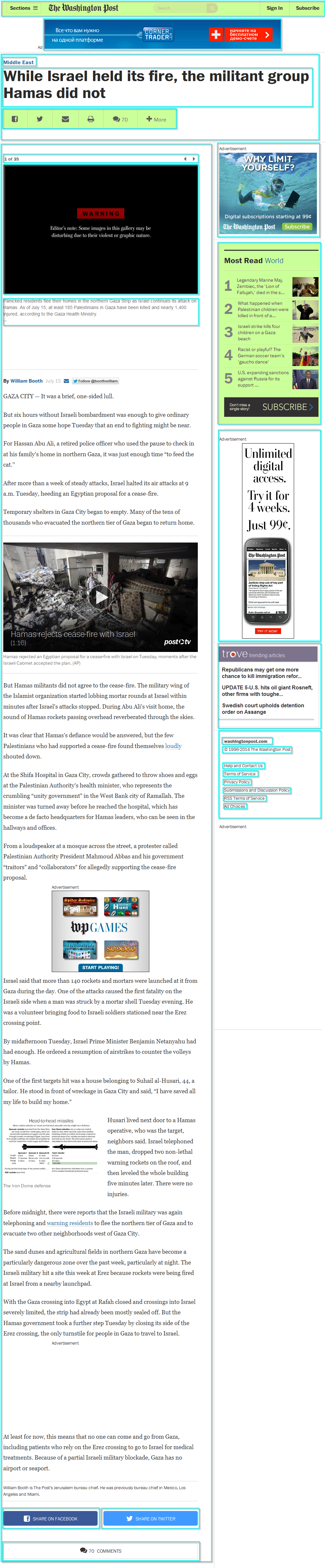

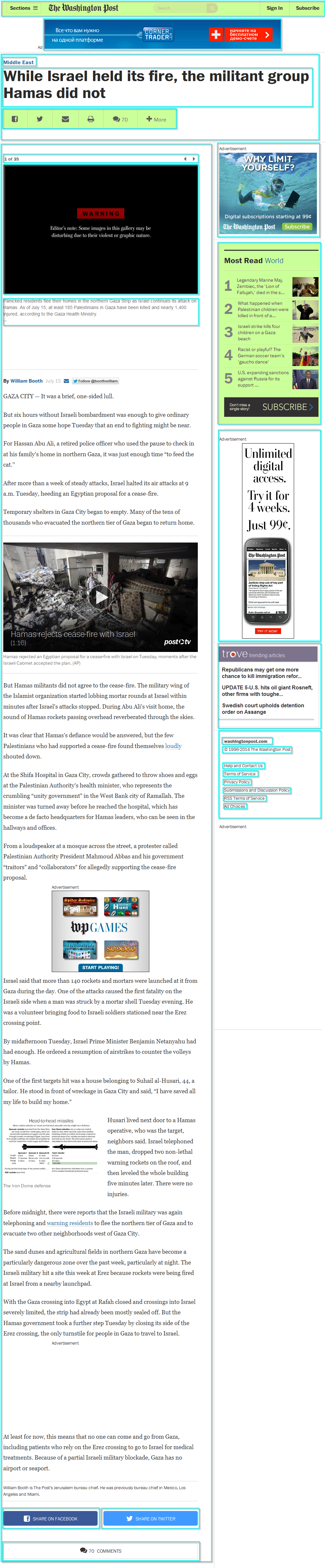

WaPo Information Block:

Galen copes with checking the correspondence of the text and links of this block without any problems: the links themselves are set in the locator, and the correspondence of the text with the internal galen check. Regarding the functional test, the completeness of the test coverage has not changed, but due to the fact that the tests are carried out as part of a single test, we significantly save time.

Galen test code .

Our automated testing system uses: Java, Maven, TestNG, Selenium WebDriver, Selenium Grid, Galen Framework .

In creating Screenshot-based tests, the cross-platform set of ImageMagick utilities actively helps us .

I would like to note right away that we write test code in Java using the PageObject pattern and the framework from Yandex - HTML Elements . To run the tests, maven and testNG are used.

To facilitate the launch of tests, view the history of test launches, view reports without involving highly qualified specialists, we are developing a separate application - Dashboard.

It will be useful to emphasize that now we are still at the stage of research on how to properly organize the entire testing process, and not all approaches have been fully mastered and studied

The Galen Framework has many obvious advantages: it is a flexible, easy-to-use tool with extensive testing capabilities for responsive design. In addition, it is well documented and is actively developing at the moment.

Galen Framework has already been described in sufficient detail in one of the articles. If you briefly describe the principle of working with Galen, it looks like this: you write a page specification (the so-called spec file) using a special, well-documented and intuitive syntax. The spec file describes the relative position, size, indentation, nesting of the page elements and some other parameters and conditions that the page layout should comply with, you can even check the consistency of the text inside the element. And all these checks will be applied depending on the tags we specified.

Tags in the spec file can be specified as follows:

Galen performs all the checks, and then generates a visual report in the form of an html file. The report indicates which specific checks failed for this test, and for each of the failed checks you can see a full screenshot of the tested page, where elements that did not pass the specific test will be highlighted.

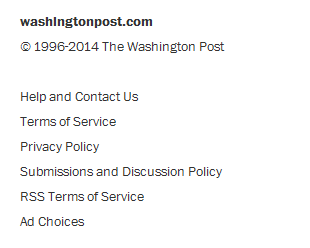

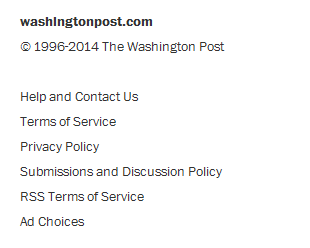

For example, a failed test for the distance between neighboring elements will look like this in a report:

When you click on a check highlighted in red, a screenshot of the entire page being checked with such highlighting of the elements is displayed:

Galen Framework accepts the following parameters as an input:

As you can see, by varying the parameters supplied to Galen, you can achieve almost complete test coverage of the framework of our site.

Once we decided on a site wireframe testing tool, the next task was to choose a scheme that allows us to provide maximum page coverage with Galen tests.

And which pages to choose for testing layout, if the test is designed to check many of the same type of pages?

We decided, without particularly bothering, to select a random page from a subset each time we run a test suite (i.e., to test a subset of the recipes pages, we selected one of the recipes and pass its url to all the layouts tests). Since it is not worth checking all the pages of the task, therefore, the option of choosing a random page seemed optimal. The url of the random page of a subset of the checked pages is transmitted to Galen by the method common to all tests inside our automated site testing system (except for typesetting tests, we also have functional and screenshot tests).

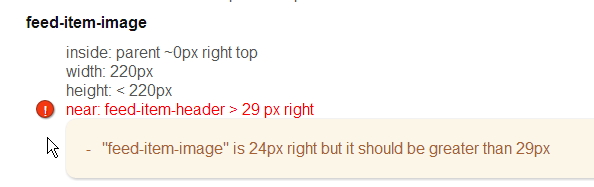

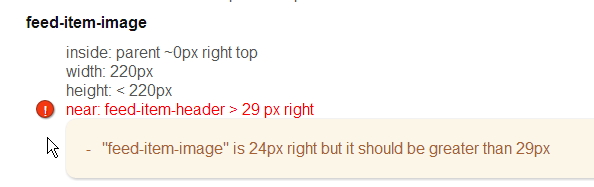

For example, there are 2 options for displaying the same type of pages - recipes pages, in one of which the layout contains an error:

As you can see, the “Most Read” block, which should be located in the right column of the page, is on the left page in the main parts, not right. To verify that there are no such problems, you need to check a large number of pages and take into account many factors.

First, the idea came up to choose the most common devices and use their resolution to run tests. However, the clearly traced tendency of accelerated mobilization of the planet does not allow us to single out (and, moreover, predict) some unconditional leaders in this field. There are a lot of devices that allow you to view web applications, and the unification of permissions for such devices is not a fashionable occupation nowadays. The sudden creeping thought that the adaptive design is adaptive in order to correctly display at any valid resolution saved our minds and prevented further research in this area. The decision was made: we are testing the layout at all valid resolutions.

Valid permissions were assigned all permissions from min Viewport width = 241 px (the browser does not decrease less) to max Viewport width = 1920px (the upper bound is a simple volitional effort). We have not yet had any pages where the height of the viewport for the purpose of automated testing was the determining parameter, so we do not pay attention to the height so far.

How to test layout at all resolutions?

To begin with, the entire range of valid permissions was divided into ranges of differing layouts. The layouts themselves are "rubber", but the different arrangement of the blocks allows a distinction to be made. It is not difficult to determine the boundaries of layouts - we drag by the corner of the browser and look at what boundary point the page blocks change: their relative position, number and / or behavior. For simplicity, we take into account only the width of the viewport. The following table was obtained:

DESKTOP: max 1920px, min 1018px;

LAPTOP: max 1017px, min 769px;

TABLET: max 768px, min 481px;

MOBILE: max 480px, min 361px;

SMALL_MOBILE: max 360px, min 280px.

By the way, we have decided not to test the SMALL_MOBILE layout yet, since the number of users viewing the Washington Post on devices with such a resolution is disastrously small (a speculative conclusion, and there is no problem to add when testing in the future). It remains to test 4 ranges with different typesetting.

Below is the code to run the Galen test for desktop permissions:

At the start of each test, Galen is given a random resolution from the range for the given layout (getRandomResolution(DESKTOP)):

The ranges of resolutions themselves are defined like this:

Testing with a randomly chosen resolution from the valid range and a randomly chosen page from a subset of same-type pages thus becomes a probabilistic process. The more often we run it, the more different bugs we find. After a single passing run, we can only say that this particular page is valid at this particular resolution. After 500 passing runs, however, we can argue that the layout is for the most part sound. We should add right away that “500 successful runs” is a speculative figure; the real number depends on the content and on the number of equivalent pages.
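To get a feel for why repeated random runs help, here is a rough sketch under two simplifying assumptions that are ours and not the article's: runs are independent, and a given bug shows up in a fixed fraction p of the (page, width) combinations. Under those assumptions, the chance of catching the bug at least once in n runs is 1 - (1 - p)^n. The class and method names are hypothetical.

```java
final class DetectionProbability {

    /**
     * Probability that at least one of n independent runs hits a failing case,
     * when a single run fails with probability p.
     */
    static double atLeastOneFailure(double p, int n) {
        return 1.0 - Math.pow(1.0 - p, n);
    }

    public static void main(String[] args) {
        double p = 0.01; // assumed: the bug appears in 1% of page/width combinations
        for (int n : new int[] {1, 10, 100, 500}) {
            System.out.printf("n=%d -> %.4f%n", n, atLeastOneFailure(p, n));
        }
    }
}
```

A bug that appears in about half of the runs is caught almost immediately, while a rare one needs hundreds of runs before a clean history says much about it.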

Running at a random resolution paid off very soon: it immediately revealed an interesting bug that we would most likely have missed with tests at a fixed resolution.

Let's see how this approach helps, using the recipe page as an example.

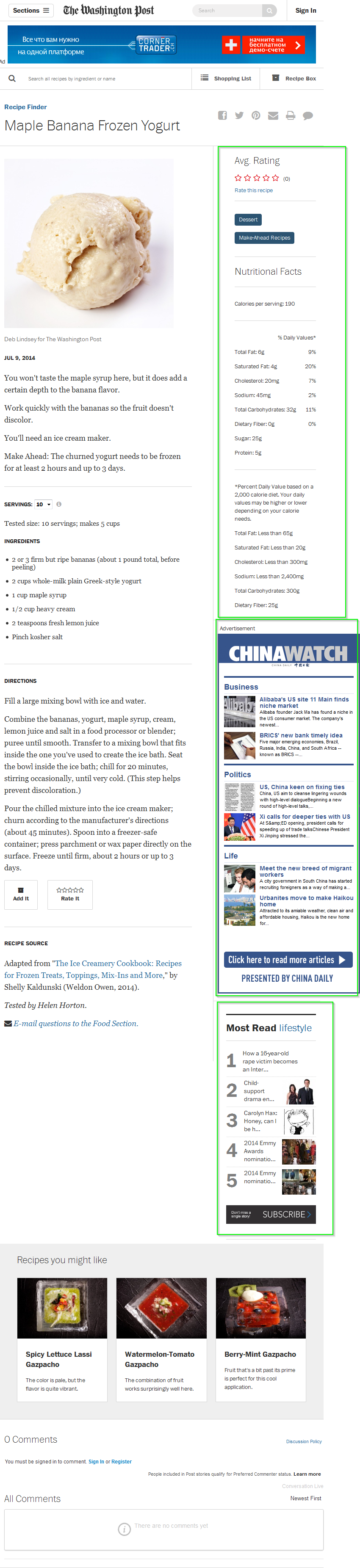

The wireframe test for the recipe page runs over the range of viewport widths from 768px to 1017px. Take this page as an example: www.washingtonpost.com/pb/recipes/maple-banana-frozen-yogurt/14143

Testing the Laptop layout at its boundary widths (1017px and 768px) produced no errors.

However, once we started running the test at random resolutions, it failed in about half of the runs, and the screenshots showed that the blocks from the right column slide down under the main content.

Correct view:

Layout Broken:

Inspired by the article, we decided to use screenshot-based testing. In fact, we initially relied on this method for layout testing as a whole. That is, the idea was to compare full-size screenshots of a page with a previously prepared model, replacing all potentially changing elements (pictures, Flash advertising, and text) with stubs; a pre-selected arbitrary image served as the stub. The idea failed mainly because the pages contained many dynamically loaded blocks, so the physical dimensions of the screenshots and the location of the blocks changed from run to run. In addition, Chrome had at some point lost the ability to take full-size screenshots, which also created a number of problems.

Screenshot-based tests now check individual elements and blocks on the page whose appearance matters and/or which are difficult or impossible to check with functional or Galen tests.

For example:

Here is the MostRead block on the main page of washingtonpost.com (on the left) and the model against which we compare the screenshot of this block (on the right):

The test code looks like this:

Screenshots are stored in the following directory structure: /models/HomePage/firefox/HomePage_thePostMost.png

As this shows, a separate model screenshot of the block is stored for each browser.

The shootAndVerify() method finds the path to the model from the class of the page passed to it and the name of the browser in which the test is running.
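A minimal sketch of how such a lookup might work, assuming the layout shown above: models root, page class name, browser name, then PageClass_blockName.png. The real shootAndVerify() is not shown in the article, so ModelPaths, modelPath, and the stand-in HomePage class are hypothetical names used only for illustration.

```java
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Locale;

/** Stand-in for a page object class; only its simple name is used here. */
final class HomePage {
}

final class ModelPaths {

    /** Builds modelsRoot/PageClass/browser/PageClass_blockName.png. */
    static Path modelPath(Path modelsRoot, Class<?> pageClass, String browserName, String blockName) {
        String page = pageClass.getSimpleName();
        return modelsRoot
                .resolve(page)
                .resolve(browserName.toLowerCase(Locale.ROOT))
                .resolve(page + "_" + blockName + ".png");
    }

    public static void main(String[] args) {
        System.out.println(modelPath(Paths.get("/models"), HomePage.class, "firefox", "thePostMost"));
    }
}
```

Deriving the path from the page class and the browser name keeps each test free of hard-coded file locations, at the cost of one model image per browser.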

Looking ahead, we can say that it works pretty well. Below we describe some details of the process, with the caveat that not everything is fully debugged yet.

As it turned out, the screenshot of a block can depend on many factors, such as:

The first problem was that the size of the screenshots differed depending on the OS and browser settings. To make the sizes of blocks, and therefore of screenshots, the same, the browser must be run at a constant size. You can resize the browser window with the corresponding WebDriver method: driver.manage().window().setSize(requiredSize). But this sets the size of the window, not the size of the visible area we need, the viewport. A vertical scrollbar also affects the viewport size, and its thickness depends on the Windows theme, so this has to be taken into account as well. The solution was a calibration method that adjusts the viewport to a given size. After the first test starts, the difference between the window width and the viewport width is saved in a special parameter and reused in subsequent runs.
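The calibration idea can be sketched as follows. This is not the article's implementation: the browser is abstracted behind two callbacks (one that sets the window width, one that reads the current viewport width), and the sketch assumes the difference between the two stays constant across widths. ViewportCalibrator and windowWidthFor are hypothetical names.

```java
import java.util.function.IntConsumer;
import java.util.function.IntSupplier;

final class ViewportCalibrator {

    // Window width minus viewport width; measured on the first call, then reused.
    private Integer widthDelta;

    /**
     * Resizes the window so that the viewport ends up targetViewportWidth wide
     * and returns the window width that was set.
     */
    int windowWidthFor(int targetViewportWidth, IntConsumer setWindowWidth, IntSupplier readViewportWidth) {
        if (widthDelta == null) {
            // First run: size the window to the target and see how much narrower the viewport is.
            setWindowWidth.accept(targetViewportWidth);
            widthDelta = targetViewportWidth - readViewportWidth.getAsInt();
        }
        int windowWidth = targetViewportWidth + widthDelta;
        setWindowWidth.accept(windowWidth);
        return windowWidth;
    }
}
```

In a real test the two callbacks would wrap WebDriver calls; they are kept abstract here so the arithmetic can be checked without a browser.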

The second problem we encountered was that fonts are rendered differently in browsers because of anti-aliasing options. We tried to solve it by changing various browser settings, such as:

- layers.acceleration.disabled

- gfx.font_rendering.cleartype_params.rendering_mode

- gfx.direct2d.disabled

Unfortunately, this did not help.

In addition, when screenshots are compared with ImageMagick, a parameter called fuzz is used. It sets how far apart two colors may be and still be treated as equal, and so controls how much difference between the screenshots is tolerated.

We tried to solve the font problem by experimenting with this parameter. A small fuzz value did not help, because with a lot of text the number of differing pixels was very large. A large value meant that the absence of some elements in a block no longer failed the test, so bugs could be missed.
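For reference, a comparison with a fuzz tolerance can be expressed with ImageMagick's compare tool, whose -metric AE option reports the number of pixels that still differ after the fuzz tolerance is applied. The sketch below only assembles such a command line. How the article's tests actually invoke ImageMagick is not shown, so the class name, the file names, and the assumption that compare is on the PATH are ours.

```java
import java.util.Arrays;
import java.util.List;

final class ImageCompareCommand {

    /**
     * Builds: compare -metric AE -fuzz N% model screenshot diff
     * The resulting list can be passed to java.lang.ProcessBuilder.
     */
    static List<String> build(String model, String screenshot, String diff, int fuzzPercent) {
        return Arrays.asList(
                "compare",
                "-metric", "AE",             // absolute error: count of differing pixels
                "-fuzz", fuzzPercent + "%",  // colors closer than this are treated as equal
                model, screenshot, diff);
    }

    public static void main(String[] args) {
        // File names are placeholders for illustration.
        System.out.println(String.join(" ", build("model.png", "shot.png", "diff.png", 5)));
    }
}
```

Keeping the fuzz value as an explicit argument makes the trade-off described above visible: each block can be given the smallest tolerance that still passes on identical content.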

The solution was to replicate all browser settings, and the settings of the operating systems themselves, across all the virtual machines on which the tests run.

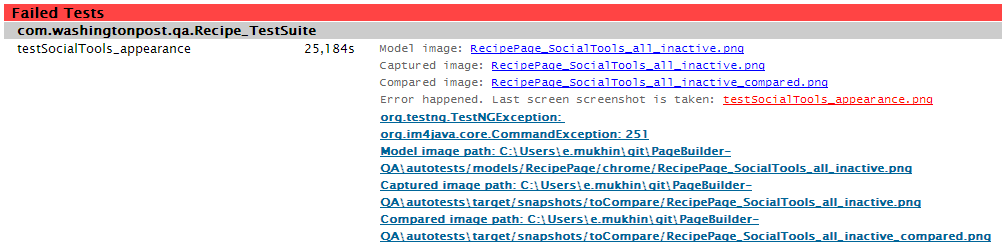

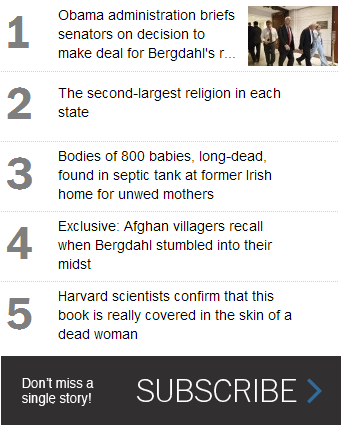

For example, here is a test that checks the block of social buttons, in which one of the images did not load.

The report contains links to:

- the model image

- the screenshot of the tested block

- the result of comparing these two images

CommandException tells us that the compared images differ by 251 pixels:

There are also situations where the screenshot sizes do not match. In this case, we get the following report:

Sometimes, for unknown reasons, the elements inside the tested block are slightly shifted. For such cases, we compare the screenshot not with one model but with a group of models matching a mask. For example, there can be several models of the thePostMost block named HomePage_thePostMost.png and HomePage_thePostMost(1).png, and all of them are considered valid. Fortunately, the number of such variants is finite, usually no more than two.
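Matching against a group of models might look like the sketch below. It assumes the naming scheme from the example above (a base name, optionally followed by a number in parentheses) and takes the actual image comparison as a callback, since the article's comparison code is not shown. ModelGroup, variants, and matchesAny are hypothetical names.

```java
import java.util.List;
import java.util.function.BiPredicate;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

final class ModelGroup {

    /** Keeps names such as "HomePage_thePostMost.png" and "HomePage_thePostMost(1).png". */
    static List<String> variants(List<String> fileNames, String baseName) {
        Pattern mask = Pattern.compile(Pattern.quote(baseName) + "(\\(\\d+\\))?\\.png");
        return fileNames.stream()
                .filter(name -> mask.matcher(name).matches())
                .collect(Collectors.toList());
    }

    /** The screenshot is accepted if it matches at least one model of the group. */
    static boolean matchesAny(List<String> models, String screenshot, BiPredicate<String, String> sameImage) {
        return models.stream().anyMatch(model -> sameImage.test(model, screenshot));
    }
}
```

The test fails only when the screenshot matches none of the accepted variants, so adding a newly observed valid rendering is a matter of saving one more model file.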

As mentioned above, the tests are written and run with the following technology stack: Java, Maven, TestNG, Selenium, and the Galen Framework. In addition, test results are sent to Graphite. The tests are launched from Jenkins CI. We will not go into why this set was chosen; we will just briefly describe how it all fits together.

Selenium Grid is currently deployed locally: the grid nodes run on four Windows 7 virtual machines, and the hub runs on a Linux machine. Three instances of the Firefox and Chrome browsers are available on each node. Jenkins and Graphite are also deployed on the Linux machine. Galen tests run as part of the general test run thanks to integration with TestNG. For this, we wrote a class that uses the Galen Java API. While implementing the interaction between TestNG and Galen, we ran into some problems that were quickly resolved with the help of the Galen developer, who cooperates willingly and regularly releases updates that extend the tool and make it even more convenient.

Functional, Galen, and screenshot-based tests are separated by the groups parameter of the @Test annotation, so each kind can be launched separately.

Both approaches, comparing screenshots and testing with the Galen Framework, are applicable to testing page layout, and they complement each other well. Comparing screenshots is better suited to testing the appearance of a single element or block, for example the social sharing panel or the main menu in the header. A block can contain many icons, which in turn can be nested inside other elements or positioned relative to them.

Describing all these small details in Galen is rather time-consuming, whereas one screenshot per browser solves the problem, and comparing screenshots rules out the possibility of missing something when writing the spec. Galen, in turn, handles the relative arrangement of blocks and checks of headings and fixed text in them. It works well for testing layout on template pages of the same type that carry little functional logic, for example on an information portal like ours, where almost any page is accessible without authorization or any other user action. Galen is also a good fit for cross-browser testing of responsive layouts.

The main reason for building an automated testing system was that the site planned to switch to a new CMS, PageBuilder, which would replace several other CMSs previously used to publish content in different sections of the site. With a migration like this, it is very important to make sure that content published through the new CMS looks right on every kind of page.

Our task is not to check every page against our tests. Our task is to find bugs in PageBuilder, to check the reliability of the layout of pages created with the brand-new PageBuilder, and to draw the attention of the Washington Post editors to details of filling a specific page with content that could lead to display problems.

The testing system is still under active development, but some points are, in our opinion, already interesting enough to share.

Before we do, one feature of the project is worth noting: all our testing happens from the outside. That is, like any other user, we test against the production version of the site.

Selection of layout testing tools

After exploring what was available, we settled on the following approaches and tools. To test the page frame, we adopted the Galen Framework, which we later integrated with TestNG.

Naturally, a passing Galen test for the page frame does not mean the layout is valid. Besides the location of the blocks, you also need to check how the elements inside each block are displayed. We decided to test the internal content of blocks by comparing screenshots.

Logos, buttons, and some blocks with a specific appearance are left to the screenshot tests: everything that Galen does not reach and that is difficult or impossible to verify with functional tests.

Areas tested by Galen are shown in azure; areas covered by screenshot tests are filled with green:

Galen and screenshot tests can successfully replace some functional tests, sometimes winning in clarity, sometimes in speed, and sometimes in both. We choose the testing method for each case by discussing the test case for each page type as a team, weighing performance, ease of maintenance, completeness of test coverage, and clarity.

For example, there are two blocks for which we originally wrote functional tests: Most Read and the Information Block. Now we check the first with screenshots and the second with a Galen test.

MostRead Block, verification by screenshot test:

Compared with the functional test, there is significantly less code, test coverage is more complete, and updating the test when the appearance of this block changes will not take much time.

Testing of this block is discussed in the section on the screenshot method.

WaPo Information Block:

Galen checks the text and links of this block without any problems: the links themselves are set in the locator, and the text is verified by Galen's built-in text check. Compared with the functional test, test coverage has not changed, but because the checks run as part of a single test, we save a significant amount of time.

Galen test code.

Our automated testing system uses Java, Maven, TestNG, Selenium WebDriver, Selenium Grid, and the Galen Framework.

For screenshot-based tests, the cross-platform ImageMagick utilities help us a great deal.

We write the test code in Java using the PageObject pattern and HTML Elements, a framework from Yandex. Maven and TestNG are used to run the tests.

To make it easier to launch tests, view the history of test runs, and view reports without involving highly qualified specialists, we are developing a separate application, Dashboard.

It is worth emphasizing that we are still researching how to organize the entire testing process properly, and not all approaches have been fully mastered and studied.

Testing with the Galen Framework

The Galen Framework has many obvious advantages: it is a flexible, easy-to-use tool with extensive capabilities for testing responsive design. It is also well documented and actively developed.

The Galen Framework has already been described in sufficient detail in one of the articles. Briefly, working with Galen looks like this: you write a page specification (a spec file) using a special, well-documented, and intuitive syntax. The spec file describes the relative position, size, margins, and nesting of page elements, along with other parameters and conditions that the page layout should satisfy; you can even check the text inside an element. Which of these checks are applied depends on the tags we specify.

Tags in the spec file can be specified as follows:

Galen performs all the checks and then generates a visual report as an HTML file. The report shows which checks failed in the test, and for each failed check you can view a full screenshot of the tested page with the elements that failed the check highlighted.

For example, a failed check for the distance between neighboring elements looks like this in the report:

When you click a check highlighted in red, a screenshot of the entire page is displayed with the elements highlighted:

The Galen Framework accepts the following parameters as input:

- the browser in which the check will run

- the resolution at which to run the test

- the URL of the tested page

- a JavaScript file to apply, if necessary, on the opened page before the checks from the .spec file start (for example, to check how the page is displayed to a logged-in user)

- the name of the .spec file to run

- the tags to apply to the checks in the .spec file (for example, desktop and all when testing the desktop layout).

As you can see, by varying the parameters passed to Galen, you can achieve almost complete test coverage of the frame of our site.

Once we had decided on a tool for testing the site's wireframe, the next task was to choose a scheme that gives maximum page coverage with Galen tests.

Selecting a test page from a subset of pages of the same type

Which pages should be chosen for layout testing if a test is designed to check many pages of the same type?

Without overthinking it, we decided to select a random page from the subset each time we run the test suite (for example, to test the recipe pages, we select one of the recipes and pass its URL to all the layout tests). Since checking every page is not our task, choosing a random page seemed optimal. The URL of the random page is passed to Galen by a method common to all tests in our automated testing system (besides layout tests, we also have functional and screenshot tests).
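Picking a random page from a subset can be as simple as the sketch below. The class RandomPagePicker and the example.com URLs are hypothetical; how the article's own helpers (such as ArticlePage.getRandomArticlePage()) obtain their list of candidate pages is not shown.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Random;

final class RandomPagePicker {

    private final Random random;

    RandomPagePicker(Random random) {
        this.random = random;
    }

    /** Returns one URL chosen uniformly from the given subset of same-type pages. */
    String pick(List<String> urls) {
        if (urls.isEmpty()) {
            throw new IllegalArgumentException("no pages to choose from");
        }
        return urls.get(random.nextInt(urls.size()));
    }

    public static void main(String[] args) {
        // Placeholder URLs; a real suite would supply the actual recipe pages.
        List<String> recipes = Arrays.asList(
                "https://example.com/recipes/1",
                "https://example.com/recipes/2",
                "https://example.com/recipes/3");
        System.out.println(new RandomPagePicker(new Random()).pick(recipes));
    }
}
```

Because the choice differs between runs, it helps to record the chosen URL in the test report so that a failure can be reproduced on the same page.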

For example, here are two renderings of pages of the same type, recipe pages, one of which contains a layout error:

As you can see, on the page on the left the “Most Read” block, which should be in the right column, has ended up in the main section. To verify that there are no such problems, you need to check a large number of pages and take many factors into account.

At which resolutions should tests be run?

At first, the idea was to choose the most common devices and use their resolutions to run the tests. However, with the number of mobile devices growing so quickly, it is impossible to single out (let alone predict) any undisputed leaders in this field. A great many devices can display web applications, and nobody is standardizing their resolutions these days. The thought that a responsive design is responsive precisely so that it displays correctly at any valid resolution saved our sanity and stopped further research in this direction. The decision was made: we test the layout at all valid resolutions.

Valid resolutions were defined as all resolutions from a minimum viewport width of 241px (the browser does not shrink further) to a maximum viewport width of 1920px (the upper bound is simply an arbitrary decision). So far we have not had any pages where the viewport height was the determining parameter for automated testing, so for now we ignore the height.

How to test layout at all resolutions?

To begin with, the entire range of valid resolutions was divided into ranges with different layouts. The layouts themselves are fluid, but the different arrangement of blocks makes it possible to tell them apart. Finding the layout boundaries is not difficult: we drag the corner of the browser window and watch for the width at which the page blocks change their relative position, number, and/or behavior. For simplicity, we take only the viewport width into account. The result is the following table:

| Layout | Max viewport width | Min viewport width |
| --- | --- | --- |
| DESKTOP | 1920px | 1018px |
| LAPTOP | 1017px | 769px |
| TABLET | 768px | 481px |
| MOBILE | 480px | 361px |
| SMALL_MOBILE | 360px | 280px |

By the way, we have decided not to test the SMALL_MOBILE layout yet, since the number of users viewing the Washington Post on devices with such a resolution is negligibly small (a speculative conclusion, and it will be easy to add this range later). That leaves four ranges with different layouts to test.
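The ranges in the table can be expressed in code roughly as follows. This is a sketch only: the article's own getRandomResolution implementation is not shown here, so the enum LayoutRange and the method randomWidth are hypothetical names, and only a width is drawn because the height is ignored in these tests.

```java
import java.util.Random;

/** Viewport width ranges for the four tested layouts; SMALL_MOBILE is left out, as in the text. */
enum LayoutRange {
    DESKTOP(1018, 1920),
    LAPTOP(769, 1017),
    TABLET(481, 768),
    MOBILE(361, 480);

    final int minWidth;
    final int maxWidth;

    LayoutRange(int minWidth, int maxWidth) {
        this.minWidth = minWidth;
        this.maxWidth = maxWidth;
    }

    /** Returns a width drawn uniformly from [minWidth, maxWidth], both ends included. */
    int randomWidth(Random random) {
        return minWidth + random.nextInt(maxWidth - minWidth + 1);
    }
}

final class RandomResolutionDemo {
    public static void main(String[] args) {
        Random random = new Random();
        for (LayoutRange range : LayoutRange.values()) {
            System.out.println(range + " -> " + range.randomWidth(random) + "px");
        }
    }
}
```

Including both boundary widths in the draw matters, since layout switches happen exactly at the edges of each range.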

Below is the code that runs the Galen test at desktop resolutions:

    @Test(groups = { "Galen" })
    @WebTest(value = "Verify that layout of Article page is not broken on desktop screen resolution.", bugs = {"#5599", "#5601", "#5600"})
    public void testArticlepageLayoutOnDesktop() throws Exception {
        GalenActionsBuilder builder = new GalenActionsBuilder()
                // advertisement frames become visible only if advertisement placeholder is visible
                .waitForVisible(5, ".pb-f-ad-leaderboard > div > div > div > iframe")
                .scrollToElement(".pb-f-ad-flex")
                .waitForVisible(5, ".pb-f-ad-flex > div > div > iframe")
                .scrollToElement(".pb-f-ad-flex-2")
                .waitForVisible(5, ".pb-f-ad-flex-2 > div > div > iframe")
                .scrollToElement(".pb-f-ad-flex-3")
                .waitForVisible(5, ".pb-f-ad-flex-3 > div > div > iframe")
                .injectJavascript("/js/scroll_to_top.js")
                .waitSeconds(2)
                .check("/article.spec", Arrays.asList("all", "desktop"));
        invokeGalenActions(ArticlePage.getRandomArticlePage(), builder.build(), getRandomResolution(DESKTOP));
    }

The invokeGalenActions method passes all the preconditions to Galen in the form the framework expects:

    protected void invokeGalenActions(String url, List<GalenPageAction> actions, Dimension... sizes) throws Exception {
        run(url, actions, recalculateSizes(sizes));
    }

GalenActionsBuilder makes it easy to add both native Galen preconditions (.waitForVisible(5, ".pb-f-ad-leaderboard > div > div > div > iframe")) and our own (.scrollToElement(".pb-f-ad-flex")):

public class GalenActionsBuilder {

private boolean built;

private final List actions = new ArrayList<>();

public GalenActionsBuilder waitFor(Integer seconds, GalenPageActionWait.UntilType type, Locator locator) {

checkUsed();

GalenPageActionWait.Until u = new GalenPageActionWait.Until(type, locator);

GalenPageActionWait a = new GalenPageActionWait();

a.setTimeout(seconds * 1000);

a.setUntilElements(Lists.newArrayList(u));

a.setOriginalCommand("wait " + seconds + "s until " + type.toString() + " " + locator.getLocatorValue());

actions.add(a);

return this;

}

public GalenActionsBuilder waitSeconds(Integer seconds) {

checkUsed();

GalenPageActionWait a = new GalenPageActionWait();

a.setTimeout(seconds * 1000);

a.setOriginalCommand("wait " + seconds + "s");

actions.add(a);

return this;

}

public GalenActionsBuilder waitForExist(Integer seconds, String cssSelector) {

return waitFor(seconds, GalenPageActionWait.UntilType.EXIST, Locator.css(cssSelector));

}

public GalenActionsBuilder waitForVisible(Integer seconds, String cssSelector) {

return waitFor(seconds, GalenPageActionWait.UntilType.VISIBLE, Locator.css(cssSelector));

}

public GalenActionsBuilder waitForHidden(Integer seconds, String cssSelector) {

return waitFor(seconds, GalenPageActionWait.UntilType.HIDDEN, Locator.css(cssSelector));

}

public GalenActionsBuilder waitForGone(Integer seconds, String cssSelector) {

return waitFor(seconds, GalenPageActionWait.UntilType.GONE, Locator.css(cssSelector));

}

public GalenActionsBuilder waitForExist(Integer seconds, Locator locator) {

return waitFor(seconds, GalenPageActionWait.UntilType.EXIST, locator);

}

public GalenActionsBuilder waitForVisible(Integer seconds, Locator locator) {

return waitFor(seconds, GalenPageActionWait.UntilType.VISIBLE, locator);

}

public GalenActionsBuilder waitForHidden(Integer seconds, Locator locator) {

return waitFor(seconds, GalenPageActionWait.UntilType.HIDDEN, locator);

}

public GalenActionsBuilder waitForGone(Integer seconds, Locator locator) {

return waitFor(seconds, GalenPageActionWait.UntilType.GONE, locator);

}

public GalenActionsBuilder withCookies(String... cookies) {

checkUsed();

GalenPageActionCookie a = new GalenPageActionCookie()

.withCookies(cookies);

a.setOriginalCommand("cookie " + Joiner.on("; ").join(cookies));

actions.add(a);

return this;

}

public GalenActionsBuilder injectJavascript(String javascriptFilePath) {

checkUsed();

GalenPageActionInjectJavascript a = new GalenPageActionInjectJavascript(javascriptFilePath);

a.setOriginalCommand("inject " + javascriptFilePath);

actions.add(a);

return this;

}

public GalenActionsBuilder runJavascript(String javascriptFilePath) {

checkUsed();

GalenPageActionRunJavascript a = new GalenPageActionRunJavascript(javascriptFilePath);

actions.add(a);

return this;

}

public GalenActionsBuilder runJavascript(String javascriptFilePath, String jsonArgs) {

checkUsed();

GalenPageActionRunJavascript a = new GalenPageActionRunJavascript(javascriptFilePath)

.withJsonArguments(jsonArgs);

actions.add(a);

return this;

}

public GalenActionsBuilder check(String specFile, List<String> tags) {

checkUsed();

GalenPageActionCheck a = new GalenPageActionCheck()

.withSpecs(Arrays.asList(specFile))

.withIncludedTags(tags);

actions.add(a);

return this;

}

public GalenActionsBuilder resize(int width, int height) {

checkUsed();

GalenPageActionResize a = new GalenPageActionResize(width, height);

a.setOriginalCommand("resize " + GalenUtils.formatScreenSize(new Dimension(width, height)));

actions.add(a);

return this;

}

public GalenActionsBuilder open(String url) {

checkUsed();

GalenPageActionOpen a = new GalenPageActionOpen(url);

a.setOriginalCommand("open " + url);

actions.add(a);

return this;

}

private void checkUsed() {

if (built)

throw new IllegalStateException("Incorrect builder usage error. build() method has been already called");

}

public List<GalenPageAction> build() {

built = true;

return actions;

}

public GalenActionsBuilder scrollToElement(String locator) {

String content = String.format("jQuery(\"%s\")[0].scrollIntoView(true);", locator);

Properties properties = new Properties();

try (InputStream is = getClass().getResourceAsStream("/test.properties")){

properties.load(is);

} catch (Exception e) {

throw new RuntimeException("I/O Exception during loading configuration", e);

}

String workDirPath = properties.getProperty("work_dir");

// Build paths with File so the separator is correct on any OS.

File tempDir = new File(workDirPath, "temp");

tempDir.mkdirs();

String auxJsFile = new File(tempDir, locator.hashCode() + ".js").getPath();

// try-with-resources closes the writer even if writing fails.

try (PrintWriter writer = new PrintWriter(auxJsFile, "UTF-8")) {

writer.println(content);

} catch (Exception e) {

throw new RuntimeException("Exception during creating file", e);

}

injectJavascript(auxJsFile);

return this;

}

}

At the start of each test, Galen is given a random resolution from the range defined for the given layout (getRandomResolution(DESKTOP)):

protected Dimension getRandomResolution(Dimension[] d) {

return getRandomDimensionBetween(d[0], d[1]);

}

private Dimension getRandomDimensionBetween(Dimension d1, Dimension d2) {

double k = Math.random();

int width = (int) (k * (Math.abs(d1.getWidth() - d2.getWidth()) + 1) + Math.min(d1.getWidth(), d2.getWidth()));

int height = (int) (k * (Math.abs(d1.getHeight() - d2.getHeight()) + 1) + Math.min(d1.getHeight(), d2.getHeight()));

return new Dimension(width, height);

}

The resolution range itself is defined like this:

public static final Dimension[] DESKTOP = {new Dimension(1920, 1080), new Dimension(1018, 1080)};
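A random resolution is only useful if a failing run can be replayed. The following is a minimal sketch of one way to do that, not the project's code: it draws width and height independently from a seeded generator and logs the seed. The RandomViewport and Size names are hypothetical, and Size is a plain stand-in so that the sketch does not depend on Selenium's Dimension.

```java
import java.util.Random;

// Hypothetical helper, not part of the project code shown above.
final class RandomViewport {

    /** Plain value holder used instead of Selenium's Dimension. */
    static final class Size {
        final int width;
        final int height;

        Size(int width, int height) {
            this.width = width;
            this.height = height;
        }

        @Override
        public String toString() {
            return width + "x" + height;
        }
    }

    /** Picks width and height independently; both bounds are inclusive. */
    static Size between(Size a, Size b, long seed) {
        Random rnd = new Random(seed);
        int width = pick(rnd, a.width, b.width);
        int height = pick(rnd, a.height, b.height);
        return new Size(width, height);
    }

    private static int pick(Random rnd, int x, int y) {
        int min = Math.min(x, y);
        int max = Math.max(x, y);
        return min + rnd.nextInt(max - min + 1);
    }

    public static void main(String[] args) {
        long seed = System.nanoTime();
        Size size = between(new Size(1920, 1080), new Size(1018, 1080), seed);
        // Log the seed next to the result so a failing run can be replayed.
        System.out.println("seed=" + seed + " resolution=" + size);
    }
}
```

Passing the logged seed back into between() reproduces exactly the resolution at which a test failed.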

Because both the resolution (from the valid range) and the page under test (from a set of pages of the same type) are chosen at random, testing becomes a probabilistic process. The more often we run it, the more distinct bugs we find. A single successful run only tells us that this particular page is valid at this particular resolution. After 500 successful runs, however, we can argue that the layout is for the most part sound. We should note right away that "500 successful runs" is a speculative figure; the right number depends on the content and on how many equivalent pages there are.
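To get a feel for the numbers, here is a rough estimate under a strong simplifying assumption: every run is independent and hits a given bug with the same probability p. Real runs are not that clean, so treat the sketch below (the MissProbability class is our own illustration) as an order-of-magnitude guide only.

```java
// Illustrative calculation; assumes independent runs with a fixed hit probability.
final class MissProbability {

    /** Probability that n independent runs all miss a bug that shows up in a fraction p of runs. */
    static double missAfter(double p, int n) {
        return Math.pow(1.0 - p, n);
    }

    /** Smallest number of runs for which the miss probability is at most maxMiss. */
    static int runsNeeded(double p, double maxMiss) {
        if (p <= 0.0 || p >= 1.0 || maxMiss <= 0.0 || maxMiss >= 1.0) {
            throw new IllegalArgumentException("p and maxMiss must be strictly between 0 and 1");
        }
        return (int) Math.ceil(Math.log(maxMiss) / Math.log(1.0 - p));
    }

    public static void main(String[] args) {
        // A bug visible in half of the runs is very unlikely to survive 10 runs.
        System.out.println(missAfter(0.5, 10));
        // A bug visible in 1% of the runs needs far more runs to be caught reliably.
        System.out.println(runsNeeded(0.01, 0.05));
    }
}
```

Under these assumptions, a bug that appears in only 1% of runs needs about 300 runs before the chance of missing it drops below 5%, which is in the same ballpark as the "500 runs" figure above.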

Running at a random resolution paid off very quickly: it revealed an interesting bug that we would most likely have missed when running tests at a fixed resolution.

Let's see how this approach helps us with the example of testing the recipe page.

The recipe page wireframe test runs for the resolution range (Viewport width) from 768px to 1017px. Take for example the page: www.washingtonpost.com/pb/recipes/maple-banana-frozen-yogurt/14143

Testing the Laptop layout at its boundary widths (1017px and 768px) produced no errors.

However, once we started running the test at random resolutions, it failed in about half of the cases, and the screenshots showed the blocks from the right column sliding down under the main content.

Correct view:

Layout Broken:

Screenshot-based testing method

Inspired by the article, we decided to use the screenshot-based testing method. In fact, this was the method we initially relied on for testing layout. The idea was to compare full-size screenshots of a page with a previously prepared model, replacing all potentially changing elements (pictures, Flash advertising and text) with stubs, for which a pre-selected arbitrary image was used. The idea failed mainly because the pages contained many dynamically loaded blocks, so the physical dimensions of the screenshots and the location of the blocks changed from one test run to the next. In addition, at some point Chrome lost the ability to take full-size screenshots, which also created a number of problems.

We now use screenshot-based tests for individual elements and blocks whose appearance matters and which are difficult or impossible to check with functional or Galen tests.

For example:

Here is the MostRead block on the main page of washingtonpost.com (on the left) and the model with which we will compare the screenshot of this block (on the right):

The test code looks like this:

@Test(groups = { "ScreenshotBased" })

@WebTest("Verifies that 'Post Most' block is displayed properly")

public void testMakeupForPostMost() {

HomePage page = new HomePage().open();

page.preparePostMostForScreenshot();

screenshotHelper.shootAndVerify(page, page.thePostMost, "_thePostMost");

}

The following directory structure is used for storing screenshots: /models/HomePage/firefox/HomePage_thePostMost.png

As this shows, a separate model of the block is stored for each browser.

The shootAndVerify() method locates the model using the class of the page passed in and the name of the browser in which the test is running.
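The lookup can be sketched as follows. This is our reading of the directory layout above, not the actual shootAndVerify() implementation; ModelPaths, modelFor and the nested HomePage stand-in are hypothetical names.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical sketch of how a model path could be derived from the page class and browser.
final class ModelPaths {

    /** Builds <root>/<PageClass>/<browser>/<PageClass><suffix>.png */
    static Path modelFor(Path root, Class<?> pageClass, String browser, String suffix) {
        String page = pageClass.getSimpleName();
        return root.resolve(page).resolve(browser).resolve(page + suffix + ".png");
    }

    /** Stand-in for the real page object class. */
    static final class HomePage { }

    public static void main(String[] args) {
        Path model = modelFor(Paths.get("models"), HomePage.class, "firefox", "_thePostMost");
        System.out.println(model);
    }
}
```

Deriving the path from the class name means a new page object gets its own model directory without any extra configuration.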

Looking ahead, we can say that this works quite well. Below we describe some details of the process, with the caveat that not everything is fully debugged yet.

As it turned out, the screenshot of a block can depend on many factors, such as:

- operating system version

- operating system theme

- browser and its version

- various font smoothing options and hardware acceleration.

The first problem was that the size of the screenshots varied with the OS and browser settings. To make the blocks, and therefore the screenshots, the same size, the browser has to run at a fixed size. You can resize the browser window with the corresponding WebDriver method: driver.manage().window().setSize(requiredSize). But this sets the size of the window, not the size of the visible area we actually need, the viewport. The vertical scrollbar also affects the viewport size, and its thickness depends on the Windows theme, so this has to be taken into account as well. The solution was a calibration method that adjusts the window until the viewport reaches the given size. After the first test starts, the difference between the window width and the viewport width is saved in a special parameter and reused in subsequent runs.
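The arithmetic behind such a calibration is simple. The sketch below shows only that part, with hypothetical names and example numbers; in a real test the window width would come from driver.manage().window().getSize() and the viewport width from a JavaScript call such as document.documentElement.clientWidth, which is our assumption about how it could be measured.

```java
// Hypothetical sketch of the calibration arithmetic; no browser is involved here.
final class ViewportCalibration {

    /** Window width minus viewport width, measured once and then reused. */
    private Integer widthDelta;

    /** Stores the difference measured during the first test. */
    void calibrate(int windowWidth, int viewportWidth) {
        if (viewportWidth > windowWidth) {
            throw new IllegalArgumentException("viewport cannot be wider than the window");
        }
        widthDelta = windowWidth - viewportWidth;
    }

    boolean isCalibrated() {
        return widthDelta != null;
    }

    /** Window width that should yield the requested viewport width. */
    int windowWidthFor(int targetViewportWidth) {
        if (widthDelta == null) {
            throw new IllegalStateException("calibrate() has not been called yet");
        }
        return targetViewportWidth + widthDelta;
    }

    public static void main(String[] args) {
        ViewportCalibration calibration = new ViewportCalibration();
        // Example numbers only: borders plus scrollbar take 23px here.
        calibration.calibrate(1040, 1017);
        System.out.println(calibration.windowWidthFor(768));
    }
}
```

The value returned by windowWidthFor() is what would then be passed to setSize(), so that the viewport rather than the window ends up at the requested width.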

The second problem we encountered was that fonts were rendered differently in browsers because of anti-aliasing options. We tried to solve it by changing various browser settings, such as:

layers.acceleration.disabled

gfx.font_rendering.cleartype_params.rendering_mode

gfx.direct2d.disabled

But, unfortunately, this did not help.

In addition, ImageMagick, which we use to compare screenshots, has a fuzz parameter that sets the maximum allowed difference between the screenshots.

We tried to solve the font problem by experimenting with this parameter. A small fuzz value did not help, because with so much text the number of differing pixels was very large. A large value meant that a missing element inside a block no longer failed the test, so real bugs could slip through.
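The trade-off is easy to reproduce with a simplified stand-in for the comparison. The sketch below is not ImageMagick's algorithm: it is a plain per-channel tolerance check written for illustration (PixelDiff is a hypothetical name), counting how many pixels differ by more than a given amount.

```java
import java.awt.image.BufferedImage;

// Simplified per-channel comparison; a stand-in for illustration, not ImageMagick's fuzz.
final class PixelDiff {

    /** Counts pixels whose red, green or blue channel differs by more than tolerance (0..255). */
    static int countDifferent(BufferedImage a, BufferedImage b, int tolerance) {
        if (a.getWidth() != b.getWidth() || a.getHeight() != b.getHeight()) {
            throw new IllegalArgumentException("image sizes differ");
        }
        int different = 0;
        for (int y = 0; y < a.getHeight(); y++) {
            for (int x = 0; x < a.getWidth(); x++) {
                if (channelsDiffer(a.getRGB(x, y), b.getRGB(x, y), tolerance)) {
                    different++;
                }
            }
        }
        return different;
    }

    /** Compares the blue, green and red bytes of two packed ARGB values. */
    private static boolean channelsDiffer(int p, int q, int tolerance) {
        for (int shift = 0; shift <= 16; shift += 8) {
            int delta = Math.abs(((p >> shift) & 0xFF) - ((q >> shift) & 0xFF));
            if (delta > tolerance) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        BufferedImage model = new BufferedImage(4, 4, BufferedImage.TYPE_INT_RGB);
        BufferedImage shot = new BufferedImage(4, 4, BufferedImage.TYPE_INT_RGB);
        shot.setRGB(1, 1, 0xFFFFFF); // a clearly different pixel, e.g. a missing element
        shot.setRGB(2, 2, 0x050505); // a barely different pixel, e.g. anti-aliasing noise
        System.out.println("strict:  " + countDifferent(model, shot, 0));
        System.out.println("lenient: " + countDifferent(model, shot, 10));
    }
}
```

With a tolerance of zero both pixels count as different; with a tolerance of 10 the anti-aliasing-like pixel is ignored. Raise the tolerance far enough and genuinely missing elements are ignored too, which is the problem described above.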

The solution was to use identical browser settings, and identical operating system settings, on every virtual machine on which the tests run.

For example, consider a test that checks the block of social buttons in a run where one of the images failed to load.

The report provides links to:

- the model image

- the screenshot of the tested block

- the result of comparing the two images

The CommandException tells us that the compared images differ by 251 pixels:

There are also situations where the screenshot sizes do not match. In this case, we get the following report:

Sometimes, for unknown reasons, the elements inside the tested block are slightly shifted. For such cases, we compare the screenshot not with a single model but with a group of models matching a name mask. For example, we can have several models of thePostMost block named HomePage_thePostMost.png and HomePage_thePostMost (1).png, and we consider all of them valid. Fortunately, the number of such variants is finite, usually no more than two.
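Selecting the group of models by mask could look roughly like this. The exact naming scheme is an assumption on our part: the sketch accepts the base name with an optional numbered suffix in parentheses, with or without a space before it, and ModelVariants is a hypothetical helper.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical helper: finds all model files that count as variants of one base model.
final class ModelVariants {

    /** Returns the names matching base.png, base(N).png or "base (N).png". */
    static List<String> variantsOf(String baseName, List<String> fileNames) {
        Pattern mask = Pattern.compile(Pattern.quote(baseName) + "( ?\\(\\d+\\))?\\.png");
        List<String> result = new ArrayList<>();
        for (String name : fileNames) {
            if (mask.matcher(name).matches()) {
                result.add(name);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> files = Arrays.asList(
                "HomePage_thePostMost.png",
                "HomePage_thePostMost (1).png",
                "HomePage_theHeader.png");
        System.out.println(variantsOf("HomePage_thePostMost", files));
    }
}
```

A screenshot would then pass if it matches any of the returned models.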

Technical aspects

As mentioned above, the following technology stack is used to write and run the tests: Java, Maven, TestNG, Selenium and the Galen Framework. In addition, test results are sent to Graphite. The tests are run from Jenkins CI. We will not go into why this particular set was chosen; instead, we briefly describe how it all fits together.

Selenium Grid is currently deployed locally on four Windows 7 virtual machines, which run the grid nodes, and on a Linux machine, which runs the hub. Three instances of the Firefox and Chrome browsers are available on each node. Jenkins and Graphite are also deployed on the Linux machine. Galen tests run as part of the general test run thanks to integration with TestNG. For this, we wrote a class that lets us use the Galen Java API. While implementing the TestNG integration we ran into some problems, which were quickly resolved with the help of the Galen developer. He cooperates willingly and regularly releases updates that extend the tool's capabilities and make it more convenient.

Functional, Galen and screenshot-based tests are separated by the groups parameter of the @Test annotation, so each kind can be run separately.

Our conclusions

Both approaches, screenshot comparison and testing with the Galen Framework, are applicable to testing page layout, and they complement each other well. Screenshot comparison is the better fit when you need to test the display of a single element or block, for example the social sharing panel or the main menu in the header. Such a block can contain many icons, which in turn can be nested inside other icons and elements or positioned relative to them.

Describing all these small details in Galen is rather time-consuming, whereas one screenshot per browser solves the problem, and comparing screenshots rules out the risk of missing something when writing the spec. Galen, in turn, copes well with the relative arrangement of blocks and with checking headers and fixed text inside them. It is a good fit when you need to test layout on template pages of the same type that are not loaded with functional logic, for example on an information portal like ours, where almost any page is accessible without authorization or any other user action. In addition, Galen handles cross-browser testing of responsive layouts well.