A brief overview of open source backup tools

Backup tools can be divided into several categories:

- For home / office use (backing up important documents, photos, etc. to a NAS or to the cloud);

- For medium and large (offline) enterprises (backing up important documents, reports, databases, etc., both on servers and on employees' workstations);

- For small web projects (backing up files and databases from a hosting account or VPS / VDS to a remote host, or vice versa);

- For large web projects with a distributed architecture (much the same as for offline enterprises, except that the work happens over the global network rather than a local one, usually with open source tools).

With software for home and office use, everything is fairly simple: there are plenty of solutions, both open source and proprietary, ranging from cmd / bash scripts to products from well-known vendors.

In the enterprise sector, things are rather boring: many software products have been working successfully for years at numerous enterprises, large banks, etc.; we will not advertise anyone. Many of these products have greatly simplified the lives of system administrators, for fairly "modest money" by the standards of some enterprises.

In this article, we'll take a closer look at open source solutions for backing up web projects of various sizes, and we'll also test how fast they back up files.

The article will be useful to webmasters and small web studios, and perhaps even an experienced admin will find something useful here.

What do you need to back up a small site or blog, or several sites, for example from a VPS whose disk is almost full?

Start by backing up to a remote host. That is, to save valuable space on your hosting or VPS, you can connect from, say, your home / office computer (or your NAS, if you have one) via FTP or SFTP. You can then fetch the files manually or on a schedule and keep them carefully in some safe place. Any FTP or SFTP client will do; rsync is a good option.

With rsync it looks something like this:

rsync -avzPch user@remote.host:/path/to/copy /path/to/local/storage

This seems nice, but what if you need to keep several versions of database backups? Or, for some reason, you need incremental copies, and encryption would be nice to have as well. You could spend a little time and write a good script yourself.
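Keeping several versions of a database dump is mostly a matter of naming and pruning. Below is a minimal bash sketch. It assumes that the dump has already been written to a local file (for example, after fetching it with rsync) and that each version's name starts with a fixed-width timestamp. The function names save_version and prune_versions are hypothetical and are not part of any tool.

```bash
#!/usr/bin/env bash
# Sketch: keep several dated versions of a dump and prune the oldest ones.
# save_version / prune_versions and the STAMP-NAME scheme are hypothetical.
set -euo pipefail

# prune_versions DIR KEEP: delete all but the KEEP newest files in DIR.
# Relies on names starting with a fixed-width timestamp, so the sorted
# glob expansion lists the oldest files first.
prune_versions() {
  local dir=$1 keep=$2 i
  local files=("$dir"/*)
  local excess=$(( ${#files[@]} - keep ))
  for (( i = 0; i < excess; i++ )); do
    rm -f -- "${files[i]}"
  done
}

# save_version SRC DIR STAMP KEEP: copy SRC to DIR/STAMP-<basename of SRC>,
# then keep only the KEEP newest versions.
save_version() {
  local src=$1 dir=$2 stamp=$3 keep=$4
  mkdir -p -- "$dir"
  cp -p -- "$src" "$dir/$stamp-$(basename -- "$src")"
  prune_versions "$dir" "$keep"
}

# Example (hypothetical paths), e.g. after dumping a database to db.sql:
#   save_version /home/backup/db.sql /home/backup/db-versions "$(date +%Y%m%d-%H%M)" 7
```

Putting the date first in the file name lets plain lexical sorting serve as the age ordering, so no extra metadata is needed.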

Let's look at several utilities suited to various use cases, in particular the one described above.

Duplicity

Duplicity is a console backup utility with extensive capabilities.

There are several graphical frontends for Duplicity: Deja-dup for GNOME and test-drive for KDE. There is also a console wrapper, duply.

Duplicity stores backups as encrypted tar-format volumes, either locally or on a remote host. The librsync library makes incremental backups possible, gzip is used for compression, and gpg handles encryption.
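The volume idea can be illustrated with standard tools. This is a rough sketch, not duplicity's actual format. It archives a directory with tar, compresses the stream with gzip, and cuts the result into fixed-size pieces with split. The gpg encryption step and the librsync-based incrementals are left out. The function names and the ".vol." suffix are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch of "tar volumes": tar -> gzip -> split into fixed-size pieces.
# Not duplicity's on-disk format; encryption (gpg) is deliberately omitted.
set -euo pipefail

# make_volumes SRC_DIR PREFIX SIZE_BYTES: writes PREFIX.vol.aa, PREFIX.vol.ab, ...
make_volumes() {
  local src=$1 prefix=$2 size=$3
  # -C makes archive paths relative to SRC_DIR, so a restore can go anywhere.
  tar -C "$src" -cf - . | gzip -c | split -b "$size" - "$prefix.vol."
}

# restore_volumes PREFIX DEST_DIR: the sorted glob lists volumes in split's order.
restore_volumes() {
  local prefix=$1 dest=$2
  mkdir -p -- "$dest"
  cat -- "$prefix".vol.* | gzip -dc | tar -C "$dest" -xf -
}
```

Fixed-size volumes are convenient for remote storage: each piece can be uploaded or retried on its own, instead of one huge archive.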

There is no configuration file; you have to automate the backup process yourself.
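In practice, "automate it yourself" usually means a cron job, and it is worth guarding against a slow run still going when the next one starts. Below is a minimal bash sketch. It uses mkdir as a lock, because creating a directory is atomic. The run_locked function, the lock path and the script path in the example are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: a cron-friendly wrapper that refuses to start while a previous run is active.
# run_locked and the paths in the example below are hypothetical.
set -euo pipefail

# run_locked LOCKDIR CMD [ARGS...]
# mkdir is atomic, so only one process can create LOCKDIR and proceed.
run_locked() {
  local lock=$1
  shift
  if ! mkdir -- "$lock" 2>/dev/null; then
    echo "run_locked: $lock exists, previous run still active; skipping" >&2
    return 1
  fi
  local rc=0
  "$@" || rc=$?
  # Release the lock however the command ended. A killed script leaves a
  # stale LOCKDIR behind, which then has to be removed by hand.
  rmdir -- "$lock"
  return "$rc"
}

# Example crontab entry (hypothetical script path), nightly at 02:30:
#   30 2 * * * /usr/local/bin/backup-usr.sh
# where backup-usr.sh defines this function and runs:
#   run_locked /var/lock/duplicity-usr duplicity /usr scp://host.net/target_dir
```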

Usage examples:

Backing up a local folder to a remote host

duplicity /usr scp://host.net/target_dir

Restoring from a remote host to a local folder (because the URL comes before the local directory, duplicity runs in restore mode)

duplicity sftp://user@remote.host/var/www /home/backup/var/www

Restoring, with the action stated explicitly

duplicity restore sftp://user@remote.host/var/www /home/backup/var/www

There have already been articles about Duplicity on Habr, so we will not dwell on it.

Rsnapshot

A lot has also been written about rsnapshot on Habr (here and here), and here is another good article. Overall, rsnapshot is a good tool for creating incremental backups (snapshots). It is written in Perl and uses rsync to copy files. It is fast enough (faster than rdiff-backup) and saves a lot of disk space thanks to hard links. It can run pre- and post-backup operations, but it cannot (without workarounds) encrypt backups or back up to a remote host. Files are stored in their original form, so they are easy to restore. The configuration is conveniently organized. It supports several retention levels (daily, weekly, monthly). The community is fairly active.
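The hard-link trick can be shown with GNU cp -al, which copies a directory tree as hard links instead of copying data. rsnapshot rotates its numbered snapshots in a similar way. This bash sketch is only a simplified illustration, not rsnapshot's code, and the names snapshot and snap.N are hypothetical. A file that does not change between runs ends up as one inode shared by several snapshots, so it occupies disk space only once.

```bash
#!/usr/bin/env bash
# Sketch of rsnapshot-style rotation with hard links. Assumes GNU cp (-l).
# The function name and the snap.0 .. snap.N-1 layout are hypothetical.
set -euo pipefail

# snapshot SRC ROOT KEEP: snap.0 is the newest, snap.(KEEP-1) the oldest.
snapshot() {
  local src=$1 root=$2 keep=$3 i f dst
  mkdir -p -- "$root"
  # Rotate: drop the oldest snapshot, shift the rest up by one.
  rm -rf -- "$root/snap.$((keep - 1))"
  for (( i = keep - 2; i >= 0; i-- )); do
    if [ -d "$root/snap.$i" ]; then
      mv -- "$root/snap.$i" "$root/snap.$((i + 1))"
    fi
  done
  # New snapshot = hard-linked copy of the previous one (no file data copied).
  if [ -d "$root/snap.1" ]; then
    cp -al -- "$root/snap.1" "$root/snap.0"
  else
    mkdir -- "$root/snap.0"
  fi
  # Replace new or changed files. Removing the link first means the older
  # snapshots keep their own version of the file.
  (cd -- "$src" && find . -type f) | while IFS= read -r f; do
    dst="$root/snap.0/$f"
    if ! cmp -s "$src/$f" "$dst" 2>/dev/null; then
      mkdir -p -- "$(dirname -- "$dst")"
      rm -f -- "$dst"
      cp -p -- "$src/$f" "$dst"
    fi
  done
  # Drop files that no longer exist in SRC (empty directories are left behind).
  (cd -- "$root/snap.0" && find . -type f) | while IFS= read -r f; do
    [ -e "$src/$f" ] || rm -f -- "$root/snap.0/$f"
  done
}
```

Every snapshot directory looks like a full copy and can be restored from with plain cp, which is why files stored "in their original form" are easy to recover.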

After you add the necessary lines to the config (what to back up and where), you can start a backup:

rsnapshot -v hourly

By default, several hourly and daily snapshots are kept. Rsnapshot differs from the other utilities in that it is automated out of the box (relevant for Debian / Ubuntu): the necessary lines are added to cron, and the config already includes backups of the "/home", "/etc" and "/usr/local" directories.

Rdiff-backup

Rdiff-backup is very similar to rsnapshot, but unlike it, it is written in Python and uses the librsync library for data transfer. It can copy files to a remote host, which, by the way, we have used quite successfully and in some places still use. It can also back up from a remote host, but rdiff-backup must be installed there first. It stores information about file changes (deltas) in compressed form, which is good for large files.

Metadata (permissions, dates, owner) is stored in separate files.
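Keeping metadata apart from file contents can be sketched in a few lines. This illustrates the idea only and is not rdiff-backup's metadata format. It assumes GNU stat and touch (Linux), and the function names are hypothetical. Ownership is recorded but not restored, because chown normally requires root.

```bash
#!/usr/bin/env bash
# Sketch: store mode, mtime and owner in a separate text file.
# Not rdiff-backup's format; assumes GNU stat/touch; names are hypothetical.
set -euo pipefail

# save_metadata DIR METAFILE: one line per file: MODE MTIME USER:GROUP ./PATH
save_metadata() {
  local dir=$1 meta=$2
  (cd -- "$dir" && find . -type f -exec stat -c '%a %Y %U:%G %n' {} +) > "$meta"
}

# restore_metadata DIR METAFILE: restores mode and mtime; the owner field
# is only recorded, since changing it (chown) normally requires root.
restore_metadata() {
  local dir=$1 meta=$2 mode mtime owner path
  (
    cd -- "$dir"
    while IFS=' ' read -r mode mtime owner path; do
      chmod "$mode" "$path"          # paths start with ./, never with -
      touch -d "@$mtime" "$path"     # set mtime from seconds since the epoch
    done
  ) < "$meta"
}
```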

The backup is started from the console:

rdiff-backup remote.host::/home/web/sites/ /home/backup/rdiff/

No configuration file is provided; you have to automate it yourself.

Obnam

Obnam is an open source client-server backup application written in Python; it transfers data over SSH. It can work in two modes:

- Push: backups go from the local host to a remote server running the Obnam daemon.

- Pull: the daemon itself fetches files from remote hosts over SSH. In this case, the Obnam client is not needed.

It can make snapshots, deduplicate data and encrypt it with GnuPG. Backed-up files are stored in volumes, and metadata is stored in separate files. Restoring is done from the console.
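Deduplication in a shared repository comes down to addressing data by its hash. The bash sketch below deduplicates whole files, whereas Obnam works with chunks, and its repository layout (objects/, manifests/) is hypothetical. It assumes sha256sum from GNU coreutils. Two clients with an identical file end up sharing one stored object.

```bash
#!/usr/bin/env bash
# Sketch of whole-file deduplication in a repository shared by several clients.
# Obnam deduplicates chunks, not whole files; this layout is hypothetical.
set -euo pipefail

# dedup_store SRC_DIR REPO CLIENT: content goes to objects/<sha256>,
# and manifests/CLIENT maps each digest to its path.
dedup_store() {
  local src=$1 repo=$2 client=$3 f digest
  mkdir -p -- "$repo/objects" "$repo/manifests"
  : > "$repo/manifests/$client"
  (cd -- "$src" && find . -type f) | while IFS= read -r f; do
    digest=$(sha256sum < "$src/$f" | cut -d' ' -f1)
    # Same content -> same digest -> stored only once, whichever client sent it.
    [ -e "$repo/objects/$digest" ] || cp -- "$src/$f" "$repo/objects/$digest"
    printf '%s %s\n' "$digest" "$f" >> "$repo/manifests/$client"
  done
}

# dedup_restore REPO CLIENT DEST_DIR: rebuild one client's tree from the manifest.
dedup_restore() {
  local repo=$1 client=$2 dest=$3 digest f
  while IFS=' ' read -r digest f; do
    mkdir -p -- "$dest/$(dirname -- "$f")"
    cp -- "$repo/objects/$digest" "$dest/$f"
  done < "$repo/manifests/$client"
}
```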

A small excerpt from the description on Opennet (http://www.opennet.ru/opennews/art.shtml?num=39323):

“The backup approach offered by Obnam aims to achieve three goals: high storage efficiency, ease of use, and security. Storage efficiency is achieved by placing backups in a special repository in which data is stored in the optimal representation using deduplication. One repository can store backups of different clients and servers. At the same time, duplicates are merged for all stored backups, regardless of their type, creation time, and backup source. To check the integrity of the repository and restore it after a crash, a special version of the fsck utility is provided.

If the same operating system is used on the server group, then only one copy of duplicate files will be saved in the repository, which can significantly save disk space when organizing backups of a large number of typical systems, for example, virtual environments. The repository for storing backup copies can be located both on the local disk and on external servers (to create a server for storing backup copies, additional programs are not required; access via SFTP is sufficient). It is possible to access backups by mounting a virtual partition using a specially prepared FUSE-module. ”

All this is good, BUT copying to a remote host is done with scp, with all the consequences that entails.

Bacula

Bacula is cross-platform client-server software that lets you manage backup, recovery, and verification of data over the network for computers and operating systems of various types. Currently, Bacula runs on almost any Unix-like system (Linux (including zSeries), NetBSD, FreeBSD, OpenBSD, Solaris, HP-UX, Tru64, IRIX, Mac OS X) and on Microsoft Windows.

Bacula can run entirely on a single computer or be distributed across several, and it can write backups to various types of media, including tapes, tape libraries (autochangers) and disks.

Bacula is a network client-server program for backup, archiving and recovery. It offers extensive data storage management capabilities and makes it easier to find and restore lost or damaged files. Thanks to its modular design, Bacula scales from small setups to large systems with hundreds of computers on a large network.

Bacula has GUIs and web interfaces (Almir, Webmin) of varying complexity.

Some time ago, I spent a lot of effort, to no avail, trying to get Almir running on Debian Wheezy.

Bacula is a reliable, time-tested backup system that has proven itself in many large enterprises. Its working scheme differs fundamentally from Obnam's: in the client-server setup, Bacula is a fully centralized system. You also need a client application on each host you want to back up. Three daemons run on the server at the same time: SD, FD and DIR, i.e. the Storage Daemon, File Daemon and Director, respectively. It is easy to guess which one is responsible for what.

Bacula stores backup files in volumes. Metadata is stored in a database (SQLite, MySQL, PostgreSQL). Restoring is done with the console utility or through a graphical shell. Frankly, restoring through the console is not the most convenient process.

Figures

I decided to test how fast each tool backs up a small folder (626M) containing several WordPress sites.

To do this, I took the trouble to deploy and configure all this software. :)

The test consists of two parts:

1. Participants: Duplicity, Rsync, Rsnapshot, Rdiff-backup. We copy from the remote server to the home computer. Since Rsnapshot cannot back up to a remote host, it and Rdiff-backup (for comparison) run on the home machine, i.e. they pull the files from the server, while the others push them to the home machine.

All utilities were launched with the minimum necessary options.

Rsync

rsync -az /home/web/sites/ home.host:/home/backup/rsync

Full backup

Runtime:

real 4m23.179s

user 0m31.963s

sys 0m2.165s

Incremental

Runtime

real 0m4.963s

user 0m0.437s

sys 0m0.562s

Occupied space:

626M /home/backup/duplicity/

Duplicity

duplicity full /home/web/sites/ rsync://home.host//home/backup/duplicity

Full backup

Runtime:

real 5m52.179s

user 0m46.963s

sys 0m4.165s

Incremental

Runtime

real 0m49.883s

user 0m5.637s

sys 0m0.562s

Occupied space:

450M /home/backup/duplicity/

Rsnapshot

rsnapshot -v hourly

Full backup

Runtime:

real 4m23.192s

user 0m32.892s

sys 0m2.185s

Incremental

Runtime

real 0m5.266s

user 0m0.423s

sys 0m0.656s

Occupied space:

626M /home/tmp/backup/rsnap/

Rdiff-backup

rdiff-backup remote.host::/home/web/sites/ /home/backup/rdiff/

Full backup

Runtime:

real 7m26.315s

user 0m14.341s

sys 0m3.661s

Incremental

Runtime

real 0m25.344s

user 0m5.441s

sys 0m0.060s

Occupied space:

638M /home/backup/rsnap/

The results are fairly predictable. Rsync turned out to be the fastest, with Rsnapshot practically the same. Duplicity is a bit slower but takes up less disk space. Rdiff-backup, as expected, did worse.

2. Now for the interesting part. Let's check how Obnam and Bacula perform. Both solutions are quite versatile and have some similarities. Let's see which one is faster.

Obnam

The first time I ran a backup from the remote host to my home machine, I had to wait a long time:

obnam backup --repository sftp://home.host/home/backup/obnam/ /home/web/sites/

Full backup

Backed up 23919 files, uploaded 489.7 MiB in 1h42m16s at 81.7 KiB/s average speed

Execution Time:

real 102m16.469s

user 1m23.161s

sys 0m10.428s

obnam backup --repository sftp://home.host/home/backup/obnam/ /home/web/sites/

Incremental

Backed up 23919 files, uploaded 0.0 B in 3m8s at 0.0 B/s average speed

Runtime:

real 3m8.230s

user 0m4.593s

sys 0m0.389s

Occupied space:

544M /home/tmp/backup/rsnap/

Not a very good result, in my opinion, although an understandable one.

Let's try a second time, this time to a neighboring server over a gigabit network, and with compression added.

obnam backup --compress-with=deflate --repository sftp://remote.host/home/backup/obnam/ /home/web/sites/

Full backup

Backed up 23919 files, uploaded 489.7 MiB in 2m15s at 3.6 MiB/s average speed

Runtime:

real 2m15.251s

user 0m55.235s

sys 0m6.299s

obnam backup --compress-with=deflate --repository sftp://remote.host/home/backup/obnam/ /home/web/sites/

Incremental

Backed up 23919 files, uploaded 0.0 B in 8s at 0.0 B/s average speed

Runtime:

real 0m7.823s

user 0m4.053s

sys 0m0.253s

Occupied space:

434M /home/tmp/backup/rsnap/

That was fast, and the backup is smaller. I did not try encryption; maybe later, if I have time.

Bacula

For Bacula, I set up a full client-server configuration. The client and server are on the same gigabit network.

I started the job in the background and went to have some tea. When I came back, everything was done, and the log contained the following:

...

Scheduled time: 23-Apr-2014 22:14:18

Start time: 23-Apr-2014 22:14:21

End time: 23-Apr-2014 22:14:34

Elapsed time: 13 secs

Priority: 10

FD Files Written: 23,919

SD Files Written: 23,919

FD Bytes Written: 591,680,895 (591.6 MB)

SD Bytes Written: 596,120,517 (596.1 MB)

Rate: 45513.9 KB/s

...

I was even a little surprised. Everything was done in 13 seconds, and the next run took one second.
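The figures in the logs above are internally consistent. Bacula's "Rate" is FD Bytes Written divided by the elapsed time (591,680,895 bytes / 13 s, in units of 1000 bytes), and Obnam's average speeds work out the same way. Below is a small bash helper for redoing that arithmetic; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Arithmetic check of the reported speeds: rate = amount / elapsed seconds.
set -euo pipefail

# to_seconds H M S (10# avoids octal parsing of values like 08)
to_seconds() {
  echo $(( 10#$1 * 3600 + 10#$2 * 60 + 10#$3 ))
}

# throughput AMOUNT SECONDS: AMOUNT per second, one decimal place
throughput() {
  awk -v a="$1" -v s="$2" 'BEGIN { printf "%.1f\n", a / s }'
}

# Bacula: 591,680,895 bytes in 13 s, expressed in KB (1000 bytes)
throughput 591680.895 13                      # prints 45513.9, the logged "Rate"
# Obnam over the Internet: 489.7 MiB = 501452.8 KiB in 1h42m16s
throughput 501452.8 "$(to_seconds 1 42 16)"   # prints 81.7 (KiB/s)
# Obnam over gigabit: 489.7 MiB in 2m15s
throughput 489.7 "$(to_seconds 0 2 15)"       # prints 3.6 (MiB/s)
```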

Summary

With rsnapshot, you can easily back up your VPS's files and databases (with an additional script) to your home computer / laptop / NAS. Rsnapshot can also handle a small fleet of 10-25 servers well (more is possible too, of course, depending on your needs). Rdiff-backup is a good fit for backing up large files (video content, databases, etc.).

Duplicity will not only keep your data intact but also protect it in case of theft (unfortunately, it cannot protect you from yourself: be attentive and careful, and keep the keys in a safe place inaccessible to anyone else).

Bacula is an open source industry standard that will help keep the data of a large fleet of computers and servers of any enterprise safe.

Obnam is an interesting tool with a number of useful advantages, but I would probably not recommend it to anyone.

If, for some reason, none of these solutions suits you, feel free to reinvent the wheel. This can be useful both for you personally and for many other people.

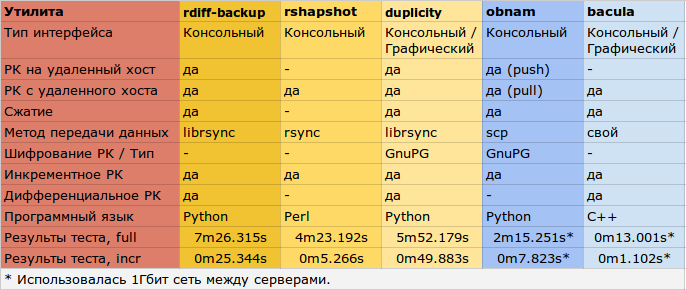

UPD: A small summary table:


What backup tools do you use?

- 36.8% Script (bash / cmd) 257

- 10% Duplicity 70

- 7.8% Rsnapshot 55

- 5.4% Rdiff-backup 38

- 1.1% Obnam 8

- 28% Bacula 196

- 28.2% Other 197