The logic of thinking. Part 5. Brain waves

We now come to one of the key principles of the model. This principle has not previously been used either in neural networks or in descriptions of how the brain functions. For that reason, I strongly recommend that you read the previous parts first. At a minimum, read the fourth part, without which what follows will be completely incomprehensible.

In the previous part, we talked about the fact that the activity of neurons is divided into induced and background. Echoes of background activity are observed by taking an electroencephalogram. The recorded signals have a complex shape and depend on the place of application of the electrodes to the head, but, nevertheless, individual harmonic components are quite clearly traced in them.

The main rhythms are called:

- alpha rhythm (from 8 to 13 Hz);

- beta rhythm (from 15 to 35 Hz);

- gamma rhythm (from 35 to 100 Hz);

- delta rhythm (from 0.5 to 4 Hz);

- theta rhythm (from 5 to 7 Hz);

- sigma rhythm, or “spindles” (from 13 to 14 Hz).

The nature of these rhythms has traditionally been linked to the oscillatory properties of neurons. The behavior of a single neuron is described by the Hodgkin-Huxley equations (Hodgkin, 1952).
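For reference, here are the equations in their standard textbook form. The notation is the conventional one and is not taken from this article: $V$ is the membrane potential, $C_m$ the membrane capacitance, $I$ the injected current, $\bar g$ and $E$ the maximal conductances and reversal potentials of the sodium, potassium and leak currents, $m$, $h$, $n$ the gating variables, and $\alpha_x$, $\beta_x$ voltage-dependent rate functions.

$$
\begin{aligned}
C_m \frac{dV}{dt} &= I - \bar g_{Na}\, m^3 h\,(V - E_{Na}) - \bar g_{K}\, n^4 (V - E_{K}) - \bar g_{L}\,(V - E_{L}), \\
\frac{dx}{dt} &= \alpha_x(V)\,(1 - x) - \beta_x(V)\,x, \qquad x \in \{m, h, n\}.
\end{aligned}
$$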

These equations define an autowave process, that is, they make the neuron generate impulses. The Hodgkin-Huxley model is complex to simulate, so there are many simplifications of it that preserve its main generating properties. The most popular are the FitzHugh-Nagumo (FitzHugh, 1961), Morris-Lecar (Morris C., Lecar H., 1981), and Hindmarsh-Rose (Hindmarsh J.L., Rose R.M., 1984) models. Many of them, Hindmarsh-Rose for example, can simulate both burst activity and isolated spikes (see figure below).

Burst activity and isolated spikes produced by a simulated Hindmarsh-Rose neuron
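As a rough illustration of how such a simplified model is integrated numerically, here is a minimal sketch of the Hindmarsh-Rose equations with forward Euler steps. The parameter values are commonly quoted textbook choices, and the function names `hindmarsh_rose` and `count_spikes` are made up for this example; none of them come from the article. Whether the spikes group into bursts depends on the injected current and on the slow rate `r`.

```python
def hindmarsh_rose(current=2.0, steps=100_000, dt=0.01,
                   a=1.0, b=3.0, c=1.0, d=5.0, r=0.001, s=4.0, x_rest=-1.6):
    """Integrate the Hindmarsh-Rose model; return the membrane variable x over time."""
    # Assumed initial state: x at its resting value, y on its nullcline, z at zero.
    x, y, z = x_rest, c - d * x_rest ** 2, 0.0
    trace = []
    for _ in range(steps):
        dx = y - a * x ** 3 + b * x ** 2 - z + current   # fast membrane variable
        dy = c - d * x ** 2 - y                           # fast recovery variable
        dz = r * (s * (x - x_rest) - z)                   # slow adaptation variable
        x, y, z = x + dt * dx, y + dt * dy, z + dt * dz
        trace.append(x)
    return trace


def count_spikes(trace, threshold=1.0):
    """Count upward crossings of `threshold` in the trace."""
    return sum(1 for prev, cur in zip(trace, trace[1:]) if prev < threshold <= cur)


trace = hindmarsh_rose()
print("spikes in the run:", count_spikes(trace))
```

Plotting `trace` against time shows the shape of the activity; changing `current` is the simplest way to explore different firing regimes.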

By combining neurons that generate their own impulses into structures resembling the real cortex, it is possible to reproduce various effects characteristic of the group activity of real neurons. For example, you can achieve global synchronization of neural activity or cause waves to appear. The best-known models are Wilson-Cowan (H.R. Wilson and J.D. Cowan, 1972) and Kuramoto (Kuramoto, 1984).
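The synchronization effect can be reproduced in a few lines. The sketch below integrates the Kuramoto model of coupled phase oscillators in its mean-field form and returns the order parameter, which is close to 1 when the phases are synchronized. The population size, coupling strengths, frequency spread, and the helper name `kuramoto_order` are illustrative assumptions.

```python
import cmath
import math
import random


def kuramoto_order(coupling, n=50, steps=2000, dt=0.01, seed=0):
    """Simulate n phase oscillators and return the final order parameter r."""
    rng = random.Random(seed)
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n)]
    freqs = [rng.gauss(0.0, 0.5) for _ in range(n)]   # natural frequencies
    for _ in range(steps):
        mean_field = sum(cmath.exp(1j * p) for p in phases) / n
        r, psi = abs(mean_field), cmath.phase(mean_field)
        # Mean-field form: d(theta_i)/dt = omega_i + K * r * sin(psi - theta_i)
        phases = [p + dt * (w + coupling * r * math.sin(psi - p))
                  for p, w in zip(phases, freqs)]
    return abs(sum(cmath.exp(1j * p) for p in phases) / n)


print("no coupling:    ", round(kuramoto_order(0.0), 2))
print("strong coupling:", round(kuramoto_order(5.0), 2))
```

With strong coupling the oscillators lock to a common phase; without coupling each one drifts at its own frequency and the order parameter stays low.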

The electroencephalogram captures echoes of the joint activity of neurons, and this activity clearly has a certain spatio-temporal organization. Optical methods of observing cortical activity make it possible to see it directly. In an experimental animal, a section of the cortex is exposed and a special dye sensitive to changes in electric potential is applied. Under the influence of the summed oscillations of the membrane potential of neurons, the dye changes its spectral properties. Although these changes are extremely small, they can be recorded, for example, with a diode array that acts as a high-speed video camera. Optical methods do not allow you to look deep into the cortex or to track the activity of individual neurons.

Scheme of optical fixation of cortical activity (Michael T. Lippert, Kentaroh Takagaki, Weifeng Xu, Xiaoying Huang, Jian-Young Wu, 2007)

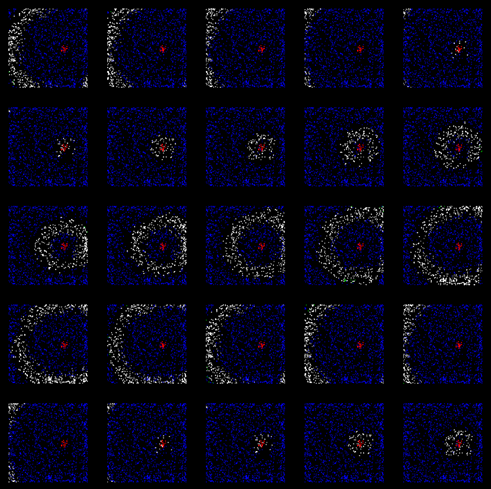

It turned out that the rhythms of the brain correspond to waves that arise in point sources and spread through the cortex, like circles in water. True, the front of the wave on the water diverges strictly in a circle, and the front of the wave of activity of brain neurons can propagate in a more complex way. The figure below shows the patterns of wave propagation in a 5 mm section of the rat cerebral cortex.

The pattern of the propagation of an activity wave on a section of the rat cerebral cortex. The potential is shown by a gradient from blue to red. 14 frames with an interval of 6 milliseconds cover one wave propagation cycle (84 milliseconds - 12 Hz) (Michael T. Lippert, Kentaroh Takagaki, Weifeng Xu, Xiaoying Huang, Jian-Young Wu, 2007)

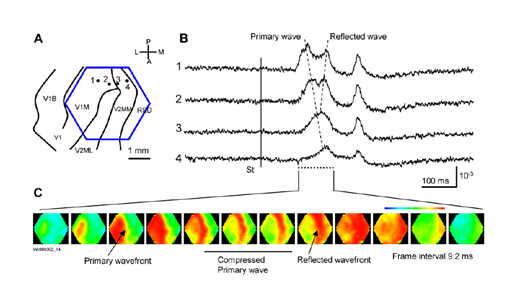

Very interesting and illustrative videos of wave activity are given in (W.-F. Xu, X.-Y. Huang, K. Takagaki, and J.-Y. Wu, 2007). They show that waves can compress on reaching the boundary of a cortical zone, can be reflected from another zone and create a counterpropagating wave, and can propagate as double spirals and create vortices.

Compression and wave reflection in the primary visual cortex (W.-F. Xu, X.-Y. Huang, K. Takagaki, and J.-Y. Wu, 2007)

Below is a video from the same work (W.-F. Xu, X.-Y. Huang, K. Takagaki, and J.-Y. Wu, 2007):

Using models of oscillating neurons, similar wave patterns can be obtained in computer experiments. But such pulsation by itself does not make much sense. It is reasonable to assume that activity waves are a mechanism for transferring and processing information. However, attempts to explain the nature of this mechanism within traditional models have not yielded tangible results. It seems logical to assume that information is encoded in the frequency and phase characteristics of neural signals, but this forces us to take interference into account and raises more questions than it answers.

There is a hypothesis that the waves “scan” the cortex, reading information from it for subsequent transmission (Pitts W., McCulloch W.S., 1947). It looks reasonable enough, at least with respect to alpha waves and the primary visual cortex. The authors of this hypothesis, McCulloch and Pitts, emphasized that scanning explains one important phenomenon. Information from the primary visual cortex is projected further along the white matter through axon bundles that are clearly too small to transmit the entire state of this cortical zone simultaneously. So, they concluded, scanning makes it possible to use not only a spatial code but also a temporal one, which provides sequential transmission of the required information.

This problem of narrow projection bundles exists not only for the primary visual cortex but for all other areas of the brain as well. The number of fibers in the projection pathways is much smaller than the number of neurons that form the spatial picture of activity. The connections between zones are clearly unable to transmit the entire spatially distributed signal simultaneously, so we need to understand how the information sent over them is compressed and decompressed. The scanning assumption does not answer the question of the coding mechanism itself, but it does allow us to pose the right questions.
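To make the argument concrete, the toy example below sends a spatial pattern through a bundle that has far fewer fibers than the pattern has neurons, by transmitting it slice by slice over several time steps. It only illustrates the idea of trading space for time. The sequential scan order and the function names are assumptions made for the example, not a claim about how the cortex actually encodes the signal.

```python
def scan_transmit(pattern, n_fibers):
    """Split a flat activity pattern into successive frames of at most n_fibers values."""
    return [pattern[i:i + n_fibers] for i in range(0, len(pattern), n_fibers)]


def reassemble(frames):
    """Rebuild the spatial pattern on the receiving side from the time-ordered frames."""
    return [value for frame in frames for value in frame]


pattern = [1, 0, 0, 1, 1, 0, 1, 0, 0, 0, 1, 1]   # activity of 12 neurons
frames = scan_transmit(pattern, n_fibers=4)       # only 4 fibers are available
print(len(frames), "time steps needed")           # 12 values over 4 fibers: 3 steps
print(reassemble(frames) == pattern)              # True: nothing is lost
```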

Our explanation of brain rhythms builds on the description of metabotropic activity given above. If we assume that not only the synapses of neurons but also metabotropic receptive clusters take part in creating rhythms, we obtain a result that is qualitatively different from all classical theories. Before describing it, I want to give a warning. I will deliberately describe simplified, idealized models and relate them to certain processes in the real brain, without claiming that the brain works exactly that way. Our task is to show the basic principles, understanding that evolution has gone far ahead and that their actual implementation is much trickier. An analogy with computer technology helps here. A modern computer is quite complicated, and if we describe the basic principles of classical computing devices, it turns out that they can hardly be found in pure form in modern systems. The basic picture is that the processor reads the program and data from memory, performs the actions prescribed by the program on the data, and writes the results back to memory. Now add caches at various levels, multithreading, hyperthreading, parallel computing with local, group and shared memory, and the like, and it becomes difficult to find a literal match to the simple rules in a real computer. All this should be kept in mind when comparing the following description with the work of the real brain.

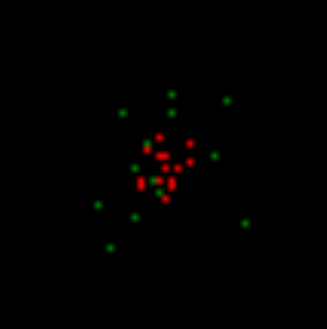

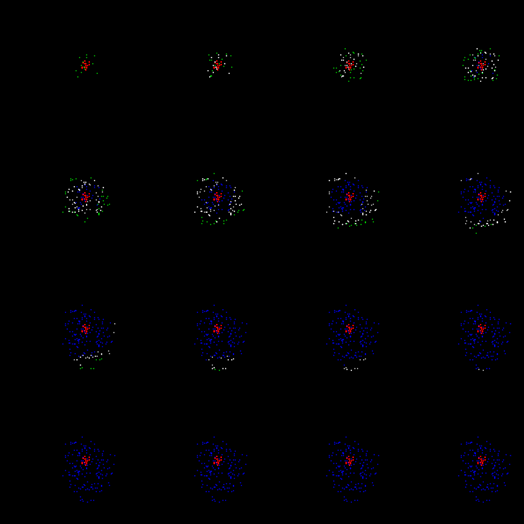

Let us take a model of a patch of cortex and create on it a compact pattern of induced activity. For now, we leave aside the question of how this pattern arose. We simply assume that there are elements carrying a constant pulse signal. In the figure below, the neurons that make up this pattern are marked in red. In the real cortex, this corresponds to axons that transmit burst activity, that is, produce a series of spikes at a high frequency. These axons can belong to neurons of the same cortical zone that are in a state of induced excitation, or they can be projection fibers coming from other parts of the brain.

Evoked Activity Pattern

Now make the free neurons generate rare random impulses. In this case, we impose the condition that for a random spike a certain level of surrounding activity is required. This means that random spikes can only occur in the vicinity of already active neurons. In our case, they will appear in the vicinity of the pattern of induced activity. In the figure below, random spikes are shown in green.

Typically, all neuronal activity that is not evoked is called spontaneous or background. The term is unfortunate, because it leads one to perceive all of this activity as random. Later we will show that most background activity is strictly predetermined and not random at all. Only a small part of it consists of truly random spikes. It is such random spikes that we have created around the active pattern.

The first step of modeling. Spontaneous activity amid evoked activity
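The rule for random spikes can be sketched as follows. This is a minimal illustration under stated assumptions: the grid size, the square neighborhood radius, the activity threshold `min_active`, the spike probability `p_spike`, and the function names are all hypothetical choices, since the article does not give the values used in the original simulation.

```python
import random


def neighbors(cell, radius, size):
    """Cells in the square neighborhood of `cell`, clipped to the grid."""
    r, c = cell
    return [(i, j)
            for i in range(max(0, r - radius), min(size, r + radius + 1))
            for j in range(max(0, c - radius), min(size, c + radius + 1))
            if (i, j) != cell]


def spontaneous_spikes(active, size, radius=2, min_active=3, p_spike=0.05, rng=random):
    """Return the free cells that emit a random spike on this step."""
    spikes = set()
    for r in range(size):
        for c in range(size):
            cell = (r, c)
            if cell in active:
                continue                      # only free neurons spike at random
            n_active = sum(1 for n in neighbors(cell, radius, size) if n in active)
            # A random spike requires enough activity in the surroundings.
            if n_active >= min_active and rng.random() < p_spike:
                spikes.add(cell)
    return spikes


evoked = {(r, c) for r in range(9, 12) for c in range(9, 12)}   # compact 3x3 pattern
spikes = spontaneous_spikes(evoked, size=21, rng=random.Random(0))
print(sorted(spikes))
```

Because of the activity threshold, every spike lands within two cells of the pattern, which is the behavior described in the text.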

We model metabotropic receptive clusters on our formal neurons. To do this, we give neurons the ability to remember, when necessary, a picture of the activity of their immediate surroundings. Moreover, we do not limit ourselves to one picture per neuron, as would be the case if the memory lived in the synapses, but let each neuron store many such pictures.

Let us arrange that a neuron's own random spike, combined with high surrounding activity, always causes it to remember the picture of the surrounding impulses (figure below). From then on, the neuron emits a single spike each time one of these remembered local patterns is repeated. To avoid confusing them with random spikes, we will call these wave spikes.

The area of activity fixation for one of the neurons that issued a spontaneous spike (it is in the center of the square). When simulating, square receptive fields were used for simplicity, which, although it does not correspond to the tracking fields of real neurons, does not qualitatively affect the result.
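A neuron that stores many local pictures can be sketched as a small class. The names `local_picture` and `WaveNeuron` are hypothetical, and so is the matching rule: here a remembered picture counts as repeated when all of its active cells are active again. The article does not say how strict the match was in the original simulation.

```python
def local_picture(cell, active, radius=2):
    """Offsets of active cells inside the square receptive field around `cell`."""
    r, c = cell
    return frozenset((dr, dc)
                     for dr in range(-radius, radius + 1)
                     for dc in range(-radius, radius + 1)
                     if (dr, dc) != (0, 0) and (r + dr, c + dc) in active)


class WaveNeuron:
    """A formal neuron that can store many local pictures, not just one."""

    def __init__(self, min_active=3):
        self.min_active = min_active
        self.pictures = set()

    def remember(self, picture):
        """Called on the neuron's own random spike; stores the picture if activity is high."""
        if len(picture) >= self.min_active:
            self.pictures.add(picture)

    def wave_spike(self, picture):
        """True when one of the remembered pictures is contained in the current activity."""
        return any(stored <= picture for stored in self.pictures)


active = {(4, 5), (5, 4), (6, 6)}
neuron = WaveNeuron()
neuron.remember(local_picture((5, 5), active))
print(neuron.wave_spike(local_picture((5, 5), active)))      # True: same picture again
print(neuron.wave_spike(local_picture((5, 5), {(4, 5)})))    # False: pattern incomplete
```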

As a result, at the next modeling step (figure below) we get a picture that contains the same neurons with evoked activity (red), neurons that responded to the local images stored at the previous step (white), and neurons that generated a spontaneous spike (green).

The second step of modeling. Red: induced activity, white: wave activity, green: spontaneous activity

Repeating the modeling steps, we get activity spreading along the cortex with a certain unique randomly created pattern.

Now we introduce the neuron fatigue condition. Let us make it so that after several metabotropic (wave) spikes, neurons will lose the ability to generate new impulses for the time necessary for relaxation. This will lead to the fact that the activity will not be distributed by a continuous region, but by pulsating waves scattering from its source.

To prevent clogging, we block spontaneous activity in areas where the number of relaxing neurons is high. As a result, we get a ring of neurons with wave activity scattering from the pattern of induced activity (figure below).

Wavefront propagation. Blue ones are neurons that are in a state of relaxation.
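The fatigue condition amounts to a small state machine per neuron. The sketch below is one possible reading: the class name `Fatigue`, the limit `max_spikes`, and the rest length `relax_steps` are assumed values, not parameters taken from the article.

```python
class Fatigue:
    """Tracks when a neuron must rest after too many wave spikes."""

    def __init__(self, max_spikes=3, relax_steps=10):
        self.max_spikes = max_spikes
        self.relax_steps = relax_steps
        self.spikes = 0        # wave spikes since the last rest
        self.rest_left = 0     # remaining steps of relaxation

    def can_fire(self):
        return self.rest_left == 0

    def step(self, fired):
        """Advance one modeling step; `fired` says whether the neuron spiked."""
        if self.rest_left > 0:
            self.rest_left -= 1
        elif fired:
            self.spikes += 1
            if self.spikes >= self.max_spikes:
                self.spikes = 0
                self.rest_left = self.relax_steps


state = Fatigue(max_spikes=2, relax_steps=3)
log = []
for _ in range(6):                 # the neuron is driven on every step
    log.append(state.can_fire())
    state.step(state.can_fire())
print(log)   # [True, True, False, False, False, True]
```

A neuron that is driven continuously therefore fires in short groups separated by pauses, which is what turns a continuously spreading region into pulsating waves.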

As the resulting wave moves away from its center, its front loses density and sooner or later decays. In the figure below, you can trace the first cycle of its life.

The first cycle of propagation of the identifier wave.

After the relaxation period has passed, a new wave will start. Now it will be picked up by neurons that have already been trained, and will spread a little further. With each new cycle, the wave will expand the boundaries of its propagation until it reaches the edges of the zone. To prevent an unlimited increase in the density of the wave front, we introduce one more restriction: we prohibit spontaneous activity for neurons when the total activity around them exceeds a certain threshold.

After some time, we will get a cortex trained to propagate a wave of activity unique in its pattern that corresponds to the initially set pattern of induced activity (figure below).

Wave propagation along the already trained cortex (after 200 training steps)
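The modeling steps described so far can be put together in one loop. The following is a minimal sketch, not the author's implementation: the grid size, all thresholds and probabilities, the rule that a remembered picture matches when it is contained in the current activity, and the names `simulate` and `local_picture` are assumptions chosen to keep the example short.

```python
import random

SIZE, RADIUS = 21, 2
EVOKED = {(r, c) for r in range(9, 12) for c in range(9, 12)}   # constant 3x3 pattern


def local_picture(cell, cells):
    """Offsets of the members of `cells` inside the square field around `cell`."""
    r, c = cell
    return frozenset((dr, dc)
                     for dr in range(-RADIUS, RADIUS + 1)
                     for dc in range(-RADIUS, RADIUS + 1)
                     if (dr, dc) != (0, 0) and (r + dr, c + dc) in cells)


def simulate(steps=100, p_spont=0.05, min_active=3, max_active=12,
             max_relaxing=4, max_wave_spikes=2, relax_steps=8, seed=0):
    rng = random.Random(seed)
    free = [(r, c) for r in range(SIZE) for c in range(SIZE) if (r, c) not in EVOKED]
    memory = {cell: set() for cell in free}   # remembered local pictures per neuron
    fired = {cell: 0 for cell in free}        # wave spikes since the last rest
    resting = {}                              # relaxing neuron -> steps left
    spikes = set()                            # all spikes of the previous step
    history = []                              # (wave spikes, spontaneous spikes) per step
    for _ in range(steps):
        active = EVOKED | spikes
        new_spikes, n_spont = set(), 0
        for cell in free:
            if cell in resting:
                continue
            picture = local_picture(cell, active)
            if any(stored <= picture for stored in memory[cell]):
                new_spikes.add(cell)          # wave spike: a remembered picture repeats
                fired[cell] += 1
            elif (min_active <= len(picture) <= max_active
                  and len(local_picture(cell, resting)) <= max_relaxing
                  and rng.random() < p_spont):
                new_spikes.add(cell)          # spontaneous spike: remember the picture
                memory[cell].add(picture)
                n_spont += 1
        resting = {cell: left - 1 for cell, left in resting.items() if left > 1}
        for cell in new_spikes:
            if fired[cell] >= max_wave_spikes:
                fired[cell] = 0
                resting[cell] = relax_steps   # fatigue: the neuron goes to rest
        spikes = new_spikes
        history.append((len(new_spikes) - n_spont, n_spont))
    return history, memory


history, memory = simulate()
print("wave spikes on the last steps:", [wave for wave, _ in history[-10:]])
```

The two limits `max_active` and `max_relaxing` play the role of the restrictions on spontaneous activity mentioned in the text. How closely the resulting fronts resemble the figures depends on the parameter choice.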

Below is a video of the wave learning process:

Note: once the cortex is trained to propagate waves from a specific pattern, spontaneous (random) spikes disappear. Such an “experienced” wave is formed by wave spikes, which are not random. Random spikes appear only during training, when the wave front reaches territories that are still untrained or poorly trained. At that moment, spontaneous impulses create a random continuation of the wave pattern, which is immediately remembered on the surface of the neurons taking part in the wave. But as soon as training ends, a wave from a pattern familiar to the cortex propagates along the wave pattern already created, repeating exactly the same, no longer random, picture along its path on every cycle.

Cortex training does not have to happen in stages. By choosing the model parameters, it is possible to make the emerging wave self-sufficient, so that it does not fade during the first cycles but immediately spreads over the entire space (figure below).

An example of a training wave that does not fade during the first cycle of propagation

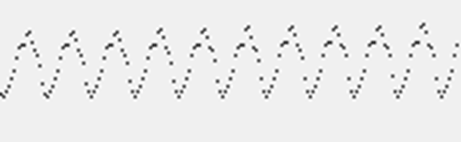

Since the width of the wave front is constant, its area increases with distance from the source. This means that more and more neurons become involved in the spread of activity. If we trace the total potential they create, we get graphs that resemble what we see in encephalograms (figure below).

Single-source model rhythmic activity graph
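The geometric part of this statement is easy to check for an idealized circular front (an assumption, since real fronts are irregular). For a ring with inner radius $r$ and constant width $w$, and with $\rho$ denoting the density of participating neurons (a symbol introduced here), the area of the front and the number of neurons in it are

$$
A(r) = \pi\left[(r + w)^2 - r^2\right] = \pi w\,(2r + w), \qquad N(r) \approx \rho\,A(r).
$$

If the density stays roughly constant, the number of active neurons grows approximately linearly with the distance the front has travelled, until the front decays or reaches the edge of the zone.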

Please note that the rhythms observed on such “encephalograms” are not a rhythm of “breathing” of the cortex. That is, they are not a synchronization of joint bursts of activity, as most existing models assume, but a change in the number of neurons involved in the propagation of diverging waves. If new waves are emitted before the fronts of the previous waves disappear, their sum gives a smoother picture. With a certain choice of parameters, the model reproduces situations in which the propagation of waves is accompanied by almost no total rhythmic activity. This can be related to the fact that about 10 percent of people show no wave activity on their electroencephalograms.

If we now take a new pattern of induced activity, the cortex will create waves propagating from it as well. Moreover, such a cortex learns to create waves for any stable pattern of induced activity. Because the same neuron can store many local images, it can be part of many different waves that correspond to different patterns. If we want the waves to be more distinct, it is enough to reduce the probability of a neuron's spontaneous spike as it accumulates remembered local images.

For remembered local images, it makes sense to introduce a consolidation mechanism. That is, an image is not fixed permanently at once. Instead, a time window is set during which the image must be repeated a certain number of times. Since training in wave propagation and the formation of stable patterns of evoked activity are parallel processes, consolidation can erase the traces of unsuccessful training.
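One way to express such a consolidation rule is sketched below. The class name `ConsolidatingMemory`, the window length, and the required number of repeats are hypothetical; strings stand in for local images to keep the example short.

```python
class ConsolidatingMemory:
    """Keeps a picture for good only if it repeats often enough within a time window."""

    def __init__(self, window=50, min_repeats=3):
        self.window = window
        self.min_repeats = min_repeats
        self.pending = {}        # picture -> (step first seen, times seen)
        self.permanent = set()

    def observe(self, picture, step):
        """Register one occurrence of `picture` at modeling step `step`."""
        self.forget_stale(step)
        if picture in self.permanent:
            return
        first_seen, count = self.pending.get(picture, (step, 0))
        count += 1
        if count >= self.min_repeats:
            self.permanent.add(picture)
            self.pending.pop(picture, None)
        else:
            self.pending[picture] = (first_seen, count)

    def forget_stale(self, step):
        """Erase pending pictures whose window expired before they were confirmed."""
        self.pending = {p: (t, n) for p, (t, n) in self.pending.items()
                        if step - t <= self.window}


memory = ConsolidatingMemory(window=10, min_repeats=3)
for step in (0, 4, 8):
    memory.observe("often", step)     # repeated three times inside the window
memory.observe("rare", 0)
memory.observe("rare", 30)            # the second sighting comes too late
print(sorted(memory.permanent))       # ['often']
```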

But the most important thing in all this is the uniqueness of each of the resulting wave patterns. In every place the wave passes through, it creates a unique picture characteristic only of that wave. This means that if there is a pattern of induced activity on the cortex that encodes an event, the wave it causes will spread information about it throughout the cortex. In each specific place, this wave creates its own pattern, unique to this event. Any other event creates a different pattern in the same place, associated with its own wave. Consequently, from any location we can tune in to the “reception” of the wave from a particular event and register when that event occurs by the arrival of a wave with the corresponding pattern.

In our model, it turns out that the fundamental properties of the cortex are:

- ability to generate waves diverging from patterns of induced activity;

- the ability to learn, to conduct unique and stable wave patterns for each pattern;

- when trained, the ability to propagate activity waves that arise around persistent patterns of induced activity.

Each unique pattern of induced activity corresponds to a wave unique in its pattern, which can be called the identifier of this pattern.

Now the learning process can be roughly represented as follows:

- statistically stable signals lead to the formation of neurons-detectors, which, due to synaptic plasticity, are trained to respond to certain patterns;

- detector neurons related to one pattern form a stable pattern;

- each stable pattern, as it is active, teaches the cortex to propagate a unique wave that corresponds only to this pattern, which can be interpreted as an identifier of this pattern;

- each place of the cortex, where the corresponding waves propagate, gets the opportunity to find out what is the general information picture at the current moment.

From the above it follows that for its full-fledged work, the brain must not only form neurons-detectors of various phenomena, but also train the cortex to propagate the corresponding information waves. This is in good agreement with the fact that the rhythmic activity in children is weakly expressed immediately after birth and increases with age.

When we talk about brain structures that respond to certain phenomena, their obvious dualism arises in our concept. These structures are not described exclusively by any neurons or groups of neurons, they are supplemented by identification waves, which allow the dissemination of relevant information. But, interestingly, in each specific place through which the wave passes, it behaves in the same way as the pattern that generated it. In the instantaneous time cut, it is itself a pattern that generates the continuation of the wave.

It is easy to see that such a propagation of identification waves repeats the Huygens-Fresnel principle, which describes the propagation of light waves. Its essence is that each point of the front of the light wave can be considered as the center of the secondary disturbance. Each such center generates secondary spherical waves. The resulting light field is the interference of these waves. The envelope of the secondary waves becomes the front of the wave at the next instant in time, and so on.

Huygens-Fresnel (Nordmann) refraction (left) and interference (right)

The difference between the pattern of induced activity and the pattern that arises during wave propagation can only be detected by analyzing the cause of the activity of the neurons involved. For evoked activity, it is the picture at the synapses. For the propagation pattern, it is the reaction of metabotropic receptors. That is, the reflection of any phenomenon by the brain is both a pattern and a wave, and at each particular moment when we try to capture the propagation of this wave, we will see a pattern that differs in its picture, but not in essence, from the one that gave rise to the wave.

It is easy to notice that the described dualism corresponds quite closely to the dualism of elementary particles. As you know, each particle is both a particle and a wave. Depending on the circumstances we consider, a particle can exhibit either wave or particle properties. Modern physics states this fact but does not give it an explanation. What we have described about the dualism of information images is, fortunately, somewhat easier to visualize than the dualism of elementary particles. Of course, the question arises whether these analogies can be extended and used to understand the physical picture of the world. This is a very serious question, and later we will talk about whether such a coincidence is accidental.

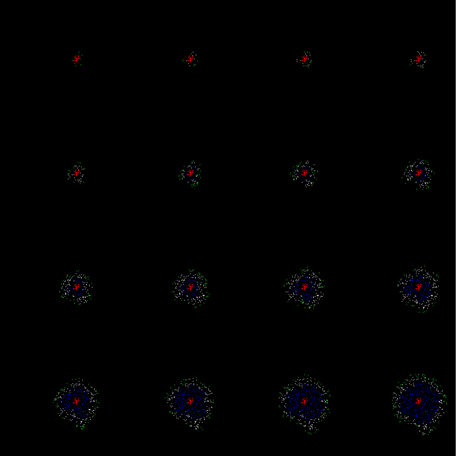

Important consequences follow from the dualism of informational images. Like particles with different spins, two patterns from different waves can occupy the same spatial region without interfering with each other. Moreover, each of them produces a continuation of its own wave without introducing mutual distortions. If we create several patterns and teach the cortex to propagate waves from each of them, then by activating these patterns together we can observe the waves passing through each other while preserving their own uniqueness (figure below).

Simulation of the passage of waves from two sources

This is very different from continuous wave processes. For example, when waves propagate in water or air, all molecules take part in the vibrations at once. The collision of two waves affects the entire contact volume, which leads to interference. The behavior of each molecule is determined by the action of both waves at once. As a result, we get an interference pattern, which is no longer as simple as each of the wave patterns individually. In our case, each of the waves propagates, affecting only a small part of all available neurons. At the intersection of two information waves, the common neurons capable of creating interference are too few to affect the propagation of each of them. As a result, the waves retain their informational picture, undistorted from a meeting with another wave.
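A quick estimate shows why the shared neurons are few. The sketch below treats the two fronts, where they cross, as independent random subsets of the same neurons. That independence, the numbers, and the function name `shared_neurons` are simplifying assumptions made for the example.

```python
import random


def shared_neurons(n_neurons=10_000, front_size=200, seed=0):
    """Number of neurons that two independent random sparse fronts have in common."""
    rng = random.Random(seed)
    wave_a = set(rng.sample(range(n_neurons), front_size))
    wave_b = set(rng.sample(range(n_neurons), front_size))
    return len(wave_a & wave_b)


# On average the overlap is front_size**2 / n_neurons = 4 neurons out of 200 per front.
print(shared_neurons())
```

When each wave uses only a small fraction of the available neurons, the expected overlap is the product of the two fractions, so it shrinks much faster than the fronts themselves.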

A certain elegance of the described system also lies in the fact that neurons can perform two functions simultaneously. Each neuron can be a detector, tuning by changing the synaptic balance to a specific image. At the same time, the same neuron, due to the information recorded on its metabotropic clusters, can take part in the propagation of various information waves.

It is worth appreciating the difference between the properties of a neuron as a detector and as a participant in the propagation of wave activity. It would seem that in both cases it reacts to a certain picture of the activity of its surroundings. In reality, these are two fundamentally different properties. The relatively smooth adjustment of synaptic weights allows the neuron to learn to identify hidden factors. Instant fixation of images by non-synaptic clusters provides memorization of what is happening right now. Synapses perceive a large-scale picture from the entire receptive field of the neuron. The metabotropic receptive clusters have access only to a reduced picture of activity, limited to the synapses that form their synaptic traps. An ordinary neuron has only one set of synaptic weights, but it can store tens or hundreds of thousands of identification images. Synaptic recognition generates evoked activity, that is, a long burst of impulses. Several detector neurons thereby create a pattern of evoked activity. Metabotropic recognition gives a single spike. The totality of such spikes creates the front of the identification wave.

Modeling shows that the propagation range of the identifier depends on how actively the wave-creating pattern manifests itself. By applying the consolidation mechanism, that is, by introducing a forgetting criterion that triggers when the activity is not repeated often enough, it is possible to obtain an effect in which frequently repeated patterns teach the entire cortex to propagate their waves, while rare combinations create areas of local distribution. Moreover, the sizes of these areas are not fixed but readily grow if the corresponding phenomenon starts to occur more often. There is a certain logic in this behavior. It is possible that the real cortex behaves in a similar way.

When I managed to formulate the wave principles described above, the modeling in the framework of our project moved to a new qualitative level. There was a feeling that instead of a stone ax we suddenly got a jackhammer. In the following parts, I will show how the use of the wave neural network model allows us to explain many previously mysterious properties of the brain.

Continuation

If anything is too brief, unclear, or hard to follow, please say so in the comments.

Previous parts:

Part 1. Neuron

Part 2. Factors

Part 3. Perceptron, convolutional networks

Part 4. Background activity