Squeezing out gigabits, or an undocumented feature of ViPNet Client / Coordinator

Hello. This post describes how a spontaneous experiment and a few hours in the server room produced some interesting results on the performance of ViPNet Custom 4.3 for Windows.

Are you curious already? Then look under the cat.

1. Introductory

Initially, this testing was simply an internal experiment of its own. But since, in our opinion, the results were quite interesting, we decided to publish the received data.

What is ViPNet Custom Software? In short, this is a complex of firewall and encryption developed by InfoTeKS in accordance with the requirements of the FSB and FSTEC of Russia.

At the time of testing the solution, there was no data on the performance measurements of the ViPNet Custom software version on various configurations. It was interesting to compare the results of the ViPNet Custom software implementation with the hardware implementation of the ViPNet Coordinator HW1000 / HW2000, the performance of which is known and documented by the vendor.

2. Description of the initial stand

According to the data from the site infotecs.ru of OJSC InfoTeKS , the most powerful configurations of HW platforms have the following characteristics:

Unfortunately, no additional information was provided about the testing conditions.

Initially, we got access to equipment with the following characteristics:

1) IBM system x3550 M4 2 x E5-2960v2 10 core 64 GB RAM;

2) Fujitsu TX140 S1 E3-1230v2 4 core 16GB RAM.

Using the Fujitsu server in the experiment was called into question ... The desire to immediately storm 10GbE was prevented by the lack of a network on the equipment, so we decided to leave it as the starting point.

At IBM, we organized 2 virtual servers with a virtual 10-gigabit switch based on the ESX 6.0u1b platform and after that we evaluated the overall performance of the two virtual machines.

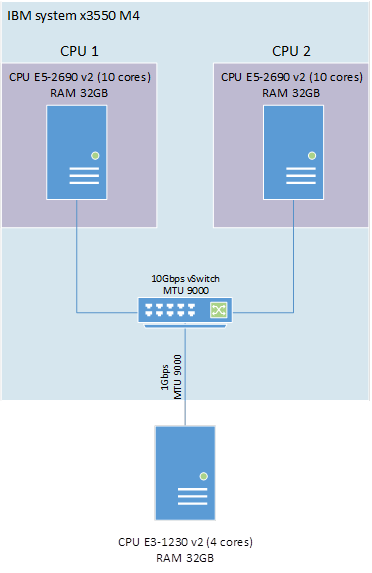

Stand Description:

1. IBM system server x3550 M4 2 x E5-2960v2 10 core 64 GB RAM, ESXi 6.0u1.

For each virtual machine (VM), one physical processor with 10 cores is allocated.

VM1: Windows 2012 R2 (VipNet Coordinator 4.3_ (1.33043)):

• 1 CPU 10 core;

• 8GB RAM.

VM2: Windows 8.1 (ViPNet Client 4.3_ (1.33043)):

• 1 CPU 10 core;

• 8GB RAM.

VMs are connected to a 10Gbps virtual switch, MTU 9000 is installed.

2. Fujitsu Server TX140 S1 E3-1230v2 4 core 16Gb RAM, Windows 2012 R2, ViPNet Client 4.3_ (1.33043).

The IBM and Fujitsu physical servers are connected by a gigabit network to the MTU 9000. Hyper Threading is disabled on both servers. As load software Iperf3 was used.

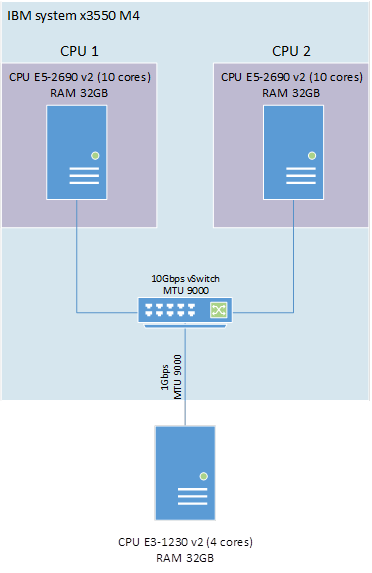

The layout of the stand is shown in Figure 1.

Figure 1 - Scheme of the organization of the test bench

3. The first stage of testing

Unfortunately, the preparation of this article began after all the tests, so for this section screenshots of the results, except the final one, were not saved.

3.1. Test No. 1

First, we will assess whether we can provide the network bandwidth of 1 Gb / s. To do this, we load the virtual coordinator VM1 Windows 2012 R2 (ViPNet Coordinator 4.3) and the physical server Fujitsu TX140 S1.

On the VM1 side, Iperf3 is running in server mode.

On the server side, Fujitsu launched Iperf3 with the parameters

Iperf.exe –c server IP –P4 –t 100,

where the –P4 parameter indicates the number of threads on the server equal to the number of cores.

The test was carried out three times. The results are shown in table 1.

Table 1. Test result No. 1

Based on the results, the following conclusions are made:

1) the E3-1230v2 processor in the encryption task can provide 1Gb / s network bandwidth;

2) the virtual coordinator is less than 25% loaded;

3) with a similar processor, the official performance of ViPNet Coordinator HW1000 is exceeded almost 4 times.

Based on the data obtained, it is clear that the Fujitsu TX140 S1 server has reached its maximum performance. Therefore, further testing will be carried out only with virtual machines.

3.2. Test No. 2

So the time has come for high speeds. We will test the virtual coordinator VM1 Windows 2012 R2 (ViPNet Coordinator 4.3) and VM2 Windows 8.1 (ViPNet Client 4.3).

On the VM1 side, Iperf3 is running in server mode.

On the server side, VM2 Iperf3 is started with the parameters

Iperf.exe –c IP server_P10 –t 100,

where the –P10 parameter indicates the number of threads on the server equal to the number of cores.

The test was carried out three times. The results are shown in table 2

Table 2. Test result No. 2

As you can see, the results are not very different from the previous ones. The test was performed several more times with the following changes:

• the server part of iperf was transferred to VM2;

• replaced guest OS on VM2 on Windows Server 2012 R2 with ViPNet Coordinator 4.3;

In all tested combinations, the results remained the same within the margin of error.

There was an understanding that, most likely, there is a built-in limitation in the ViPNet software itself.

After several testing options, it turned out that when Iperf3 was launched with the parameters

Iperf.exe –c IP_server –P4 –t 100, the

throughput became almost the same as achieved earlier on the Fujitsu server.

At the same time, 4 processor cores became the most loaded - exactly 25% of its power.

The results obtained finally convinced that the limitation is present. The results are sent to the manufacturer with a request for solutions to the problem.

4. Continuation of the experiment

Soon a response was received from the manufacturer:

“The number of processors can be controlled by the key value HKLM \ System \ CurrentControlSet \ Control \ Infotecs \ Iplir, in it the value ThreadCount. If the value is -1 or not set, then the number of threads is chosen equal to the number of processors, but no more than 4. If a value is set, then the number of threads equal to this value is selected. "

Well, the guess was right. Configure the stand for maximum performance by setting the ThreadCount parameter to 10 on both virtual machines.

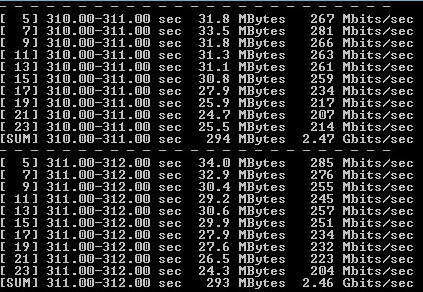

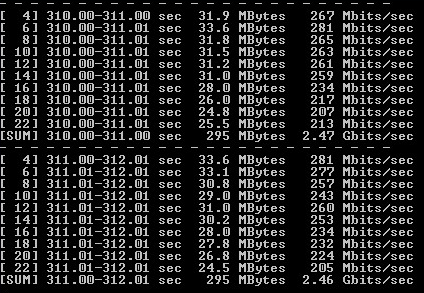

4.1. Test number 3

After making all the necessary changes, start Iperf again.

On the VM1 side, Iperf3 is running in server mode. On the server side, VM2 Iperf3 is started with the parameters

Iperf.exe –c IP server_P10 –t 100,

where the –P10 parameter indicates the number of threads on the server equal to the number of cores.

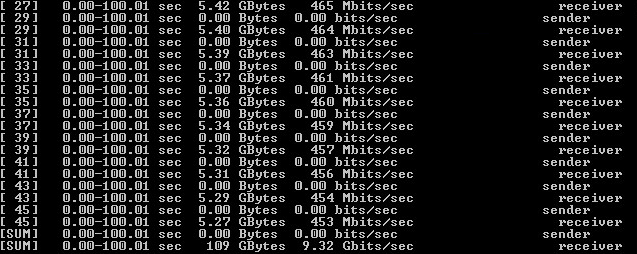

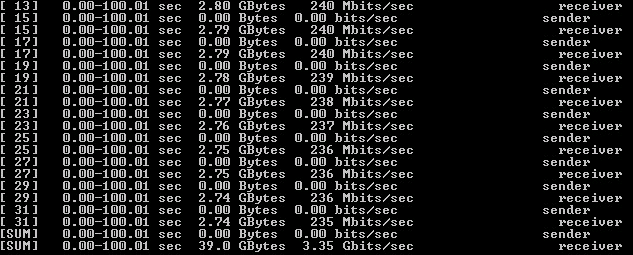

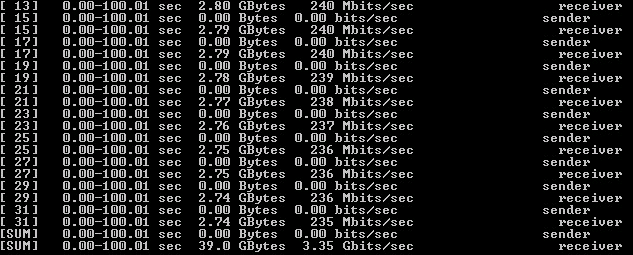

The test was carried out three times. The results are shown in table 3 and figures 2-3

table 3. The result of test No. 3

Figure 2 - Output of iPerf3 to VM1 Windows 2012 R2 (ViPNet Coordinator 4.3)

Figure 3 - Output of iPerf3 to VM2 Windows 8.1 (ViPNet Client 4.3)

Based on the results, the following conclusions were made:

1) the changes made it possible to achieve maximum encryption performance with full processor utilization;

2) the total performance when using two Xeon E5-2960v2 can be considered equal to 5 Gbps;

3) taking into account the overall performance of the two processors, the obtained encryption performance 2 times exceeds the official results of ViPNet Coordinator HW2000.

The results obtained only fueled the interest that you can get even more. Fortunately, it turned out to gain access to more powerful equipment.

It is also worth noting that during testing, there was no difference in throughput between ViPNet Client and ViPNet Coordinator.

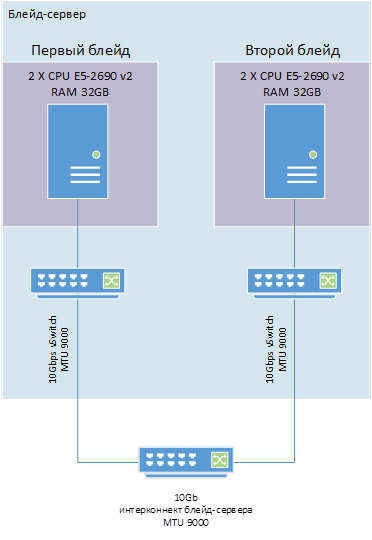

5. The second stage of testing.

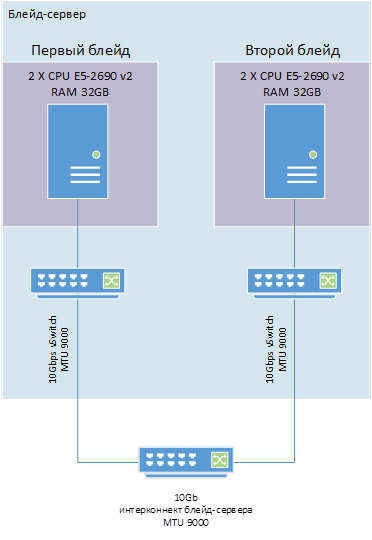

For further research on the performance of the software part of ViPNet software, we got access to two separate blade servers with the following characteristics:

• CPU 2 x E5-2690v2 10 core;

• ESXi 6.0u1.

Each virtual machine is located on its own separate “blade”.

VM1: Windows 2012 R2 (ViPNet Client 4.3_ (1.33043)):

• 2 CPU 20 core;

• 32 GB RAM.

VM2: Windows 2012 R2 (ViPNet Client 4.3_ (1.33043)):

• 2 CPU 20 core;

• 32 GB RAM.

The network connection between the virtual machines is via the 10 Gbps server blade connectivity with MTU 9000. Hyper Threading is disabled on both servers.

To simulate the load, iPerf3 software and, in addition, Ntttcp were used with the following main parameters:

1) on the receiving side:

Iperf.exe –s;

on the transmitting side:

Iperf.exe –cserver_ip –P20 –t100;

2) on the receiving side:

NTttcp.exe -r -wu 5 -cd 5 -m 20, *, self_ip -l 64k -t 60 -sb 128k -rb 128k;

on the transmitting side:

NTttcp.exe -s -wu 5 -cd 5 -m 20, *, server_ip -l 64k -t 60 -sb 128k -rb 128k.

The layout of the stand is shown in Figure 4.

Figure 4 - Scheme of organization of the test bench

5.1. Test No. 4

First, we will test the network bandwidth without encryption. ViPNet software is not installed.

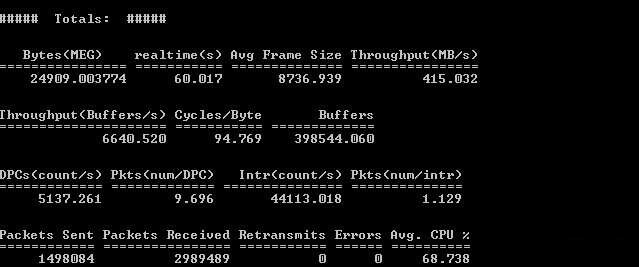

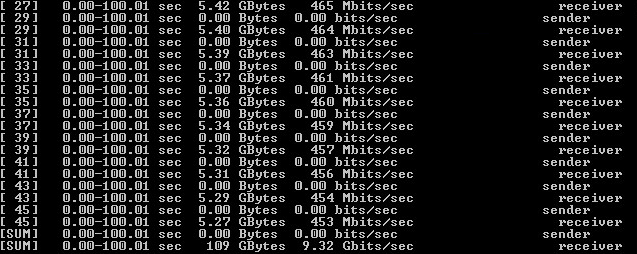

The test was carried out three times. The results are shown in table 4 and figures 5-6.

Table 4. The result of test No. 4

Figure 5 - Test result of Ntttcp without encryption

Figure 6 - Test result of Iperf without encryption

Based on the results, the following conclusions were made:

1) network bandwidth of 10 Gbps was achieved;

2) there is a difference in the results of the testing software. Further, for reliability, the results will be published for both Iperf and Ntttcp.

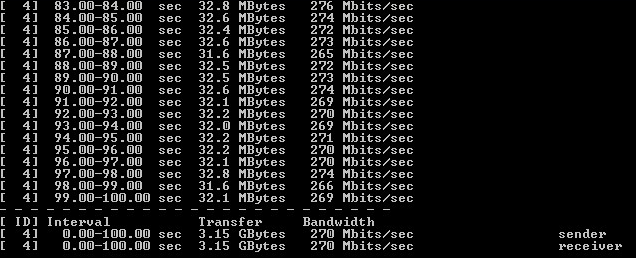

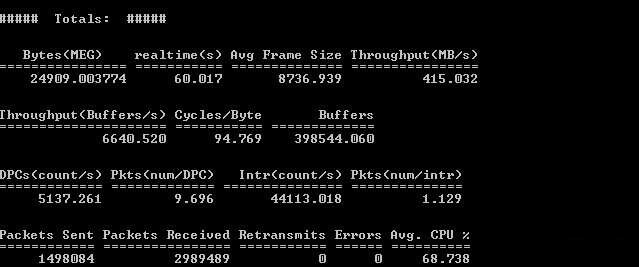

5.2. Test No. 5

Set the ThreadCount parameter to 20 on both virtual machines and measure the results.

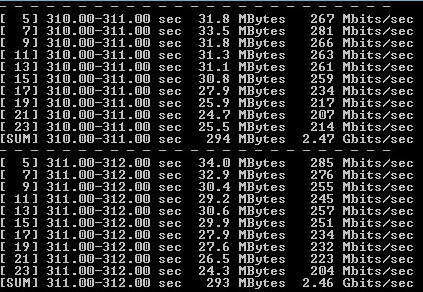

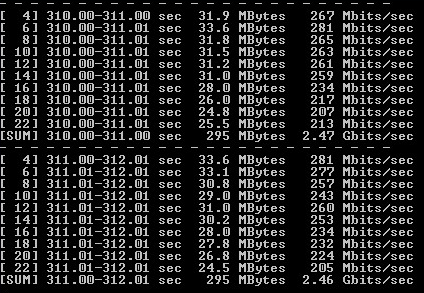

The test was carried out three times. The results are shown in table 5 and figures 7-8.

Table 5 - the result of test No. 5

Figure 7 - Test result of Ntttcp with encryption

Figure 8 - Test result of Iperf with encryption

Based on the results, the following conclusions were made:

1) on a single server, the theoretical performance of ViPNet Coordinator HW2000 was exceeded;

2) theoretical performance of 5 Gbps has not been achieved;

3) CPU load has not reached 100%;

4) the difference in the results of the software for testing remains, but, at the moment, it is minimal.

Based on the fact that the processors on the servers were not completely utilized, let us pay attention to the limitation on the part of the ViPNet driver to the simultaneous number of encryption streams.

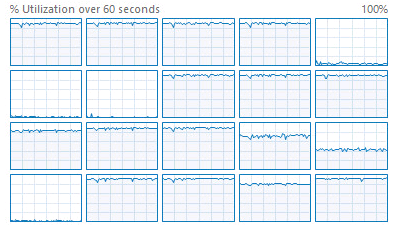

To check the limitation, we will look at loading one processor core during an encryption operation.

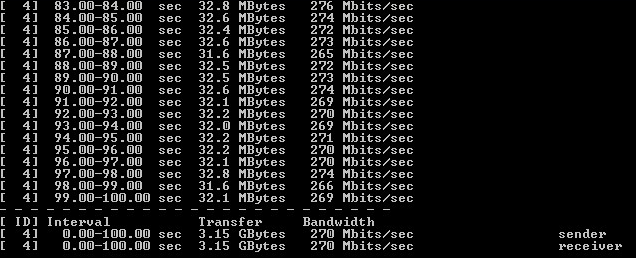

Test No. 6

In this test we will use only Iperf, since, according to the results of previous tests, it gives a large processor load, with the parameters

Iperf.exe –cIP_server –P1 –t100.

On each server through the registry, we restrict ViPNet to use one core during the encryption operation.

The test was carried out three times. The results are shown in Figures 9-10.

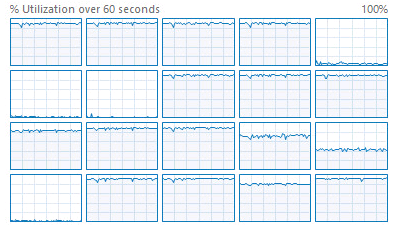

Figure 9 - Utilization of one core during the encryption operation

Figure 10 - The result of testing Iperf with encryption when loading one core

Based on the results, the following conclusions were made:

1) a single core was 100% loaded;

2) based on the load of one core, 20 cores should give a theoretical performance of 5.25 Gbps;

3) based on the loading of one core, ViPNet software has a limit of 14 cores.

To check the limitations on the cores, we will conduct another test with 14 cores involved on the processor.

Test No. 7

Testing with encryption with 14 cores.

In this test we will use only Iperf with the parameters

Iperf.exe –cIP_server –P12 –t100.

On each server through the registry, we restrict ViPNet to use no more than 14 cores during the encryption operation.

Figure 11 - Disposal of 14 cores during the encryption operation

Figure 12 - The result of testing Iperf with encryption when loading 14 cores

Based on the result, conclusions were made:

1) all 14 cores were loaded;

2) performance is similar to the option with 20 cores;

3) there is a limit of 14 cores for multi-threaded encryption operations.

The test results were sent to the manufacturer with a request for solutions to the problem.

6. Conclusion

After some time, the manufacturer’s response was received:

“The use of more than four cores on the Windows coordinator is an undocumented feature, and its correct operation is not verified and not guaranteed.”

I think you can finish testing on this.

Why is the software version pretty much ahead in the test results?

Most likely there are several reasons:

1) Old test results. On new firmware, according to unofficial data, the performance of HW has grown significantly;

2) Undocumented testing conditions.

3) Do not forget that to obtain the maximum result, an undocumented opportunity was used.

Still have questions? Ask them in the comments.

Andrey Kurtasanov, Softline

Curious? Then read on below the cut.

1. Introduction

This testing started out as a purely internal experiment. Since we found the results quite interesting, we decided to publish the data.

What is ViPNet Custom? In short, it is a firewall and encryption suite developed by InfoTeKS in accordance with the requirements of the FSB and FSTEC of Russia.

At the time of testing, no performance measurements were available for the software version of ViPNet Custom on different configurations. We wanted to compare the software implementation with the hardware implementation, ViPNet Coordinator HW1000 / HW2000, whose performance is known and documented by the vendor.

2. Description of the initial test bench

According to the website of OJSC InfoTeKS (infotecs.ru), the most powerful configurations of the HW platforms have the following characteristics:

| Type | Platform | CPU | Throughput |
|---|---|---|---|
| HW1000 Q2 | AquaServer T40 S44 | Intel Core i5-750 | Up to 280 Mb/s |
| HW2000 Q3 | AquaServer T50 D14 | Intel Xeon E5-2620 v2 | Up to 2.7 Gb/s |

Unfortunately, no additional information was provided about the testing conditions.

Initially, we had access to the following equipment:

1) IBM System x3550 M4, 2 x E5-2960v2 (10 cores each), 64 GB RAM;

2) Fujitsu TX140 S1, E3-1230v2 (4 cores), 16 GB RAM.

Whether to use the Fujitsu server in the experiment at all was in question ... The urge to go straight for 10GbE was held back by the lack of a suitable network on this equipment, so we decided to keep it as the starting point.

On the IBM server, we set up two virtual machines with a virtual 10-gigabit switch on the ESXi 6.0u1b platform and then measured the overall performance of the two virtual machines.

Test bench description:

1. IBM System x3550 M4 server, 2 x E5-2960v2 (10 cores each), 64 GB RAM, ESXi 6.0u1.

Each virtual machine (VM) is allocated one physical processor with 10 cores.

VM1: Windows 2012 R2 (ViPNet Coordinator 4.3_ (1.33043)):

• 1 CPU, 10 cores;

• 8 GB RAM.

VM2: Windows 8.1 (ViPNet Client 4.3_ (1.33043)):

• 1 CPU, 10 cores;

• 8 GB RAM.

The VMs are connected to a 10 Gbps virtual switch with MTU set to 9000.

2. Fujitsu TX140 S1 server, E3-1230v2 (4 cores), 16 GB RAM, Windows 2012 R2, ViPNet Client 4.3_ (1.33043).

The IBM and Fujitsu physical servers are connected by a gigabit network with MTU 9000. Hyper-Threading is disabled on both servers. Iperf3 was used to generate the load.

The test bench layout is shown in Figure 1.

Figure 1 - Test bench layout

3. The first stage of testing

Unfortunately, we started preparing this article only after all the tests were finished, so no screenshots of the results were saved for this section, apart from the final one.

3.1. Test No. 1

First, we check whether we can saturate a 1 Gb/s link. To do this, we load the virtual coordinator VM1 Windows 2012 R2 (ViPNet Coordinator 4.3) and the physical Fujitsu TX140 S1 server.

On the VM1 side, Iperf3 runs in server mode.

On the Fujitsu server, Iperf3 is started in client mode with the parameters

Iperf.exe -c server_ip -P 4 -t 100,

where -P 4 sets the number of parallel streams to match the number of cores on the Fujitsu server.
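
For repeated runs, the client invocation can be assembled by a small script. The sketch below only builds the argument list; the executable name `iperf3` and the placeholder address are assumptions, not values from the tests.

```python
from typing import List


def iperf3_client_args(server_ip: str, streams: int, seconds: int) -> List[str]:
    """Build an iperf3 client command line as an argument list."""
    if streams < 1 or seconds < 1:
        raise ValueError("streams and seconds must be positive")
    return [
        "iperf3",            # assumed executable name; the article calls it Iperf.exe
        "-c", server_ip,     # client mode: connect to this server
        "-P", str(streams),  # number of parallel client streams
        "-t", str(seconds),  # test duration in seconds
    ]


# 192.0.2.10 is a documentation-only placeholder address
args = iperf3_client_args("192.0.2.10", streams=4, seconds=100)
print(" ".join(args))
```

Keeping the arguments as a list makes it easy to pass them to `subprocess.run` later without worrying about quoting.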

The test was carried out three times. The results are shown in Table 1.

Table 1. Result of test No. 1

| Host | CPU load | Throughput achieved | Link |
|---|---|---|---|
| VM1 Windows 2012 R2 (ViPNet Coordinator 4.3) | <25% | 972 Mbps | 1 Gbps |
| Fujitsu TX140 S1 | 100% | 972 Mbps | 1 Gbps |

Based on the results, we drew the following conclusions:

1) the E3-1230v2 processor can sustain 1 Gb/s of network throughput in the encryption task;

2) the virtual coordinator is less than 25% loaded;

3) with a comparable processor, the official performance of ViPNet Coordinator HW1000 is exceeded roughly 3.5 times (972 Mbps against up to 280 Mb/s).
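
As a quick check of the comparison with HW1000, the ratio can be computed directly from the two published figures. This is only arithmetic on the numbers in the vendor table and in Table 1; the variable names are ours.

```python
VENDOR_HW1000_MBPS = 280.0   # "Up to 280 Mb/s" from the vendor table
MEASURED_TEST1_MBPS = 972.0  # throughput from Table 1


def speedup(measured: float, reference: float) -> float:
    """Return how many times the measured value exceeds the reference."""
    return measured / reference


ratio = speedup(MEASURED_TEST1_MBPS, VENDOR_HW1000_MBPS)
print(f"Software vs HW1000: {ratio:.2f}x")
```

Note that the measured value is capped by the 1 Gbps link, so the real gap for this processor may be larger.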

The data also show that the Fujitsu TX140 S1 server has reached its maximum performance, so further testing is carried out only with virtual machines.

3.2. Test No. 2

Now it is time for higher speeds. We test the virtual coordinator VM1 Windows 2012 R2 (ViPNet Coordinator 4.3) against VM2 Windows 8.1 (ViPNet Client 4.3).

On the VM1 side, Iperf3 runs in server mode.

On VM2, Iperf3 is started in client mode with the parameters

Iperf.exe -c server_ip -P 10 -t 100,

where -P 10 sets the number of parallel streams to match the number of cores on the VM.

The test was carried out three times. The results are shown in Table 2.

Table 2. Result of test No. 2

| Host | CPU load | Throughput achieved | Link |
|---|---|---|---|
| VM1 Windows 2012 R2 (ViPNet Coordinator 4.3) | 25-30% | 1.12 Gbps | 10 Gbps |
| VM2 Windows 8.1 (ViPNet Client 4.3) | 25-30% | 1.12 Gbps | 10 Gbps |

As you can see, the results differ little from the previous ones. The test was repeated several more times with the following changes:

• the Iperf server was moved to VM2;

• the guest OS on VM2 was replaced with Windows Server 2012 R2 running ViPNet Coordinator 4.3.

In all tested combinations, the results stayed the same within the margin of error.

This suggested that ViPNet itself most likely has a built-in limit.

After trying several variants, we found that when Iperf3 was launched with the parameters

Iperf.exe -c server_ip -P 4 -t 100,

the throughput was almost the same as that achieved earlier on the Fujitsu server.

At the same time, four processor cores carried most of the load, with the total at exactly 25% of the processor's capacity.

These results finally convinced us that a limit exists. We sent them to the vendor and asked for a way around the problem.

4. Continuing the experiment

A reply from the vendor soon arrived:

“The number of processors can be controlled through the registry key HKLM\System\CurrentControlSet\Control\Infotecs\Iplir, value ThreadCount. If the value is -1 or not set, the number of threads is chosen equal to the number of processors, but no more than 4. If a value is set, the number of threads equals that value.”
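
The rule in the vendor's reply can be written down as a small function. This is a model of the quoted description only, not ViPNet code; the function name and the use of `None` for "not set" are our own conventions.

```python
from typing import Optional

DEFAULT_THREAD_CAP = 4  # "but no more than 4" in the vendor's reply


def effective_thread_count(thread_count: Optional[int], processors: int) -> int:
    """Model how many encryption threads are used for a given ThreadCount.

    thread_count is None when the registry value is not set.
    """
    if thread_count is None or thread_count == -1:
        # Default: one thread per processor, capped at four
        return min(processors, DEFAULT_THREAD_CAP)
    # Explicit value: used as-is
    return thread_count


for cpus in (4, 10, 20):
    print(cpus, "processors ->", effective_thread_count(None, cpus), "threads by default")
```

With the default setting, a 10-core and a 20-core machine both end up with four threads, which matches the ceiling observed in Test No. 2.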

So the guess was right. We configure the test bench for maximum performance by setting ThreadCount to 10 on both virtual machines.
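
Setting the value can be scripted, for instance with the standard Windows `reg add` command. The sketch below only builds the command line. The `REG_DWORD` value type is an assumption (the vendor's reply does not name the type), and the article does not say whether a restart is needed for the change to take effect.

```python
from typing import List

IPLIR_KEY = r"HKLM\System\CurrentControlSet\Control\Infotecs\Iplir"  # key from the vendor's reply


def thread_count_reg_cmd(threads: int) -> List[str]:
    """Build a `reg add` command that sets ThreadCount to the given value."""
    if threads < 1:
        raise ValueError("threads must be a positive integer")
    return [
        "reg", "add", IPLIR_KEY,
        "/v", "ThreadCount",   # value name from the vendor's reply
        "/t", "REG_DWORD",     # assumed value type
        "/d", str(threads),    # number of encryption threads
        "/f",                  # overwrite without prompting
    ]


cmd = thread_count_reg_cmd(10)
print(" ".join(cmd))
```

Run the resulting command from an elevated prompt on each machine, and keep in mind the vendor's later remark that more than four threads is an undocumented configuration.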

4.1. Test No. 3

After making all the necessary changes, we run Iperf again.

On the VM1 side, Iperf3 runs in server mode. On VM2, Iperf3 is started in client mode with the parameters

Iperf.exe -c server_ip -P 10 -t 100,

where -P 10 sets the number of parallel streams to match the number of cores on the VM.

The test was carried out three times. The results are shown in Table 3 and Figures 2-3.

Table 3. Result of test No. 3

| Host | CPU load | Throughput achieved | Link |
|---|---|---|---|
| VM1 Windows 2012 R2 (ViPNet Coordinator 4.3) | 100% | 2.47 Gbps | 10 Gbps |
| VM2 Windows 8.1 (ViPNet Client 4.3) | 100% | 2.47 Gbps | 10 Gbps |

Figure 2 - iPerf3 output on VM1 Windows 2012 R2 (ViPNet Coordinator 4.3)

Figure 3 - iPerf3 output on VM2 Windows 8.1 (ViPNet Client 4.3)

Based on the results, we drew the following conclusions:

1) the changes made it possible to reach maximum encryption performance with full processor utilization;

2) the combined performance of two Xeon E5-2960v2 processors can be taken as 5 Gbps;

3) taking the combined performance of the two processors, the encryption performance obtained is nearly twice the official figure for ViPNet Coordinator HW2000.
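
The arithmetic behind conclusions 2 and 3 can be spelled out as follows. It assumes that throughput scales linearly from one processor to two, which Test No. 3 itself did not measure.

```python
PER_CPU_GBPS = 2.47        # Table 3: one 10-core processor per VM
CPU_COUNT = 2              # processors in the IBM server
VENDOR_HW2000_GBPS = 2.7   # "Up to 2.7 Gb/s" from the vendor table

# Assumption: linear scaling across both processors
combined_gbps = PER_CPU_GBPS * CPU_COUNT
ratio_vs_hw2000 = combined_gbps / VENDOR_HW2000_GBPS

print(f"Combined estimate: {combined_gbps:.2f} Gbps")
print(f"Ratio to HW2000:   {ratio_vs_hw2000:.2f}x")
```

The estimate of 4.94 Gbps is what the text rounds to 5 Gbps.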

These results only fueled our interest in squeezing out even more. Fortunately, we managed to get access to more powerful equipment.

It is also worth noting that during testing we saw no difference in throughput between ViPNet Client and ViPNet Coordinator.

5. The second stage of testing

To study the performance of the software version of ViPNet further, we got access to two separate blade servers with the following characteristics:

• CPU: 2 x E5-2690v2 (10 cores each);

• ESXi 6.0u1.

Each virtual machine runs on its own blade.

VM1: Windows 2012 R2 (ViPNet Client 4.3_ (1.33043)):

• 2 CPUs, 20 cores;

• 32 GB RAM.

VM2: Windows 2012 R2 (ViPNet Client 4.3_ (1.33043)):

• 2 CPUs, 20 cores;

• 32 GB RAM.

The virtual machines are connected over the blades' 10 Gbps network with MTU 9000. Hyper-Threading is disabled on both servers.

To generate the load, we used iPerf3 and, additionally, NTttcp with the following main parameters:

1) iPerf3, on the receiving side:

Iperf.exe -s;

on the transmitting side:

Iperf.exe -c server_ip -P 20 -t 100;

2) NTttcp, on the receiving side:

NTttcp.exe -r -wu 5 -cd 5 -m 20,*,self_ip -l 64k -t 60 -sb 128k -rb 128k;

on the transmitting side:

NTttcp.exe -s -wu 5 -cd 5 -m 20,*,server_ip -l 64k -t 60 -sb 128k -rb 128k.
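
The NTttcp mapping passed to -m is a single comma-separated value (threads, CPU, IP address), so it must not contain spaces. A minimal sketch of a helper that assembles both command lines with the parameters above; the helper name and the placeholder address are ours.

```python
from typing import List


def ntttcp_args(role: str, threads: int, ip: str, seconds: int = 60) -> List[str]:
    """Build an NTttcp command line for the receiver ("-r") or sender ("-s")."""
    if role not in ("-r", "-s"):
        raise ValueError("role must be '-r' (receiver) or '-s' (sender)")
    mapping = f"{threads},*,{ip}"  # threads, any CPU, receiver address; no spaces
    return [
        "NTttcp.exe", role,
        "-wu", "5",         # warm-up time, seconds
        "-cd", "5",         # cool-down time, seconds
        "-m", mapping,
        "-l", "64k",        # buffer length
        "-t", str(seconds),  # test duration, seconds
        "-sb", "128k",      # send buffer size
        "-rb", "128k",      # receive buffer size
    ]


# 192.0.2.20 is a documentation-only placeholder for the receiver's address
receiver = ntttcp_args("-r", 20, "192.0.2.20")
sender = ntttcp_args("-s", 20, "192.0.2.20")
print(" ".join(receiver))
print(" ".join(sender))
```

Both sides are given the same address, that of the receiving machine, and the same thread count.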

The test bench layout is shown in Figure 4.

Figure 4 - Test bench layout

5.1. Test No. 4

First, we measure the network throughput without encryption. ViPNet software is not installed.

The test was carried out three times. The results are shown in Table 4 and Figures 5-6.

Table 4. Result of test No. 4

| Tool | CPU load | Throughput achieved | Link |
|---|---|---|---|
| NTttcp | 2.5% | 8.5 Gbps | 10 Gbps |
| Iperf | 4% | 9.3 Gbps | 10 Gbps |

Figure 5 - NTttcp test result without encryption

Figure 6 - Iperf test result without encryption

Based on the results, we drew the following conclusions:

1) the 10 Gbps link was nearly saturated (8.5-9.3 Gbps);

2) the two test tools give different results. From here on, for reliability, results are given for both Iperf and NTttcp.

5.2. Test No. 5

We set the ThreadCount parameter to 20 on both virtual machines and measure the results.

The test was carried out three times. The results are shown in Table 5 and Figures 7-8.

Table 5. Result of test No. 5

| Tool | CPU load | Throughput achieved | Link |
|---|---|---|---|
| NTttcp | 74-76% | 3.24 Gbps | 10 Gbps |
| Iperf | 68-71% | 3.36 Gbps | 10 Gbps |

Figure 7 - NTttcp test result with encryption

Figure 8 - Iperf test result with encryption

Based on the results, we drew the following conclusions:

1) on a single server, the vendor-stated performance of ViPNet Coordinator HW2000 was exceeded;

2) the theoretical performance of 5 Gbps was not reached;

3) CPU load did not reach 100%;

4) the test tools still give different results, but the difference is now minimal.
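
To put numbers on conclusions 1 and 2, the Table 5 results can be compared with the vendor's HW2000 figure and with the 5 Gbps expected from the first stage. The sketch below is plain arithmetic on values already given in the article.

```python
VENDOR_HW2000_GBPS = 2.7   # "Up to 2.7 Gb/s" from the vendor table
EXPECTED_GBPS = 5.0        # estimate from the first stage of testing

results_gbps = {"NTttcp": 3.24, "Iperf": 3.36}  # Table 5

summary = {}
for tool, gbps in results_gbps.items():
    summary[tool] = {
        "vs_hw2000": gbps / VENDOR_HW2000_GBPS,  # how far above the vendor figure
        "shortfall": EXPECTED_GBPS - gbps,       # how far below the estimate
    }
    print(f"{tool}: {summary[tool]['vs_hw2000']:.2f}x HW2000, "
          f"{summary[tool]['shortfall']:.2f} Gbps short of the estimate")
```

Both tools land about 20-25% above the HW2000 figure but well below the 5 Gbps estimate.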

Since the processors on the servers were not fully utilized, we turn our attention to a possible limit in the ViPNet driver on the number of simultaneous encryption threads.

To check for this limit, we look at the load on a single processor core during encryption.

Test No. 6

In this test we use only Iperf, since, according to the previous tests, it loads the processor heavily, with the parameters

Iperf.exe -c server_ip -P 1 -t 100.

On each server, we use the registry to restrict ViPNet to a single core for encryption.

The test was carried out three times. The results are shown in Figures 9-10.

Figure 9 - Utilization of one core during the encryption operation

Figure 10 - Iperf test result with encryption when loading one core

Based on the results, we drew the following conclusions:

1) the single core was 100% loaded;

2) extrapolating from the load on one core, 20 cores should give a theoretical performance of 5.25 Gbps;

3) judging by the load on one core, ViPNet software has a limit of 14 cores.
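
The article does not show how the figure of 14 cores was derived, so the following is only one plausible reading. It takes the 5.25 Gbps extrapolation at face value, assumes linear scaling per core, and compares it with the Iperf row of Table 5.

```python
TOTAL_CORES = 20
EXTRAPOLATED_20_CORE_GBPS = 5.25   # conclusion 2 of Test No. 6
MEASURED_GBPS = 3.36               # Iperf row of Table 5
CPU_LOAD_RANGE = (0.68, 0.71)      # Iperf row of Table 5

# Implied single-core throughput under linear scaling
per_core_gbps = EXTRAPOLATED_20_CORE_GBPS / TOTAL_CORES

# Cores needed to explain the measured throughput
cores_by_throughput = MEASURED_GBPS / per_core_gbps

# Cores kept busy according to the overall CPU load
cores_by_load = tuple(load * TOTAL_CORES for load in CPU_LOAD_RANGE)

print(f"Per core:            {per_core_gbps * 1000:.1f} Mbps")
print(f"Cores by throughput: {cores_by_throughput:.1f}")
print(f"Cores by CPU load:   {cores_by_load[0]:.1f} to {cores_by_load[1]:.1f}")
```

Both estimates fall in the range of roughly 13 to 14 busy cores out of 20, which is consistent with the limit suggested above.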

To check the core limit, we run one more test with 14 processor cores in use.

Test No. 7

Encryption test with 14 cores.

In this test we use only Iperf with the parameters

Iperf.exe -c server_ip -P 12 -t 100.

On each server, we use the registry to restrict ViPNet to no more than 14 cores for encryption.

Figure 11 - Utilization of 14 cores during the encryption operation

Figure 12 - Iperf test result with encryption when loading 14 cores

Based on the result, we concluded:

1) all 14 cores were loaded;

2) performance is similar to the 20-core configuration;

3) there is a limit of 14 cores for multi-threaded encryption operations.

The test results were sent to the vendor with a request for a way around the problem.

6. Conclusion

After some time, the vendor replied:

“The use of more than four cores on the Windows coordinator is an undocumented feature, and its correct operation is neither verified nor guaranteed.”

I think we can end the testing here.

Why is the software version so far ahead in the test results?

Most likely there are several reasons:

1) Old vendor test results. According to unofficial data, HW performance has grown significantly on new firmware.

2) Undocumented test conditions on the vendor's side.

3) Finally, do not forget that the maximum result was obtained by using an undocumented feature.

Still have questions? Ask them in the comments.

Andrey Kurtasanov, Softline