React & BEM: an official collaboration. The historical part

This is the story of integrating the BEM methodology into the React universe. The material you are reading is built on the experience of Yandex developers working on Yandex.Search, the largest and most heavily loaded service in Russia. We have never before explained in such detail why we did things this way and not otherwise, what drove us, and what we really wanted. Outsiders got dry release notes and conference overviews; only on the sidelines could you hear anything like this. As a coauthor, I was frustrated by how little information reached the outside world every time I talked about new versions of the libraries. This time we will share all the details.

Everyone has heard of the BEM methodology: CSS selectors with underscores, and the component approach that people mention when they really mean a way of writing CSS selectors. But there will not be a word about CSS in this article. Only JS, only hardcore!

To understand why the methodology appeared and what problems Yandex faced then, I recommend that you familiarize yourself with the history of BEM.

Prologue

BEM really was born as a cure for tight coupling and deep nesting in CSS. But splitting the style.css sheet into files for each block, element, or modifier inevitably led to the same structuring of JavaScript code.

In 2011, the first commits of the i-bem.js framework appeared in open source; it worked together with the bem-xjst template engine. Both technologies grew out of XSLT and served the idea, popular at the time, of separating business logic from component presentation. In the outside world, these were the glory days of Handlebars and Underscore.

bem-xjst is a different kind of template engine. To round out your knowledge of architectural approaches to templating, I strongly recommend the talk by Sergey Berezhnoy. You can try the bem-xjst template engine in the online sandbox.

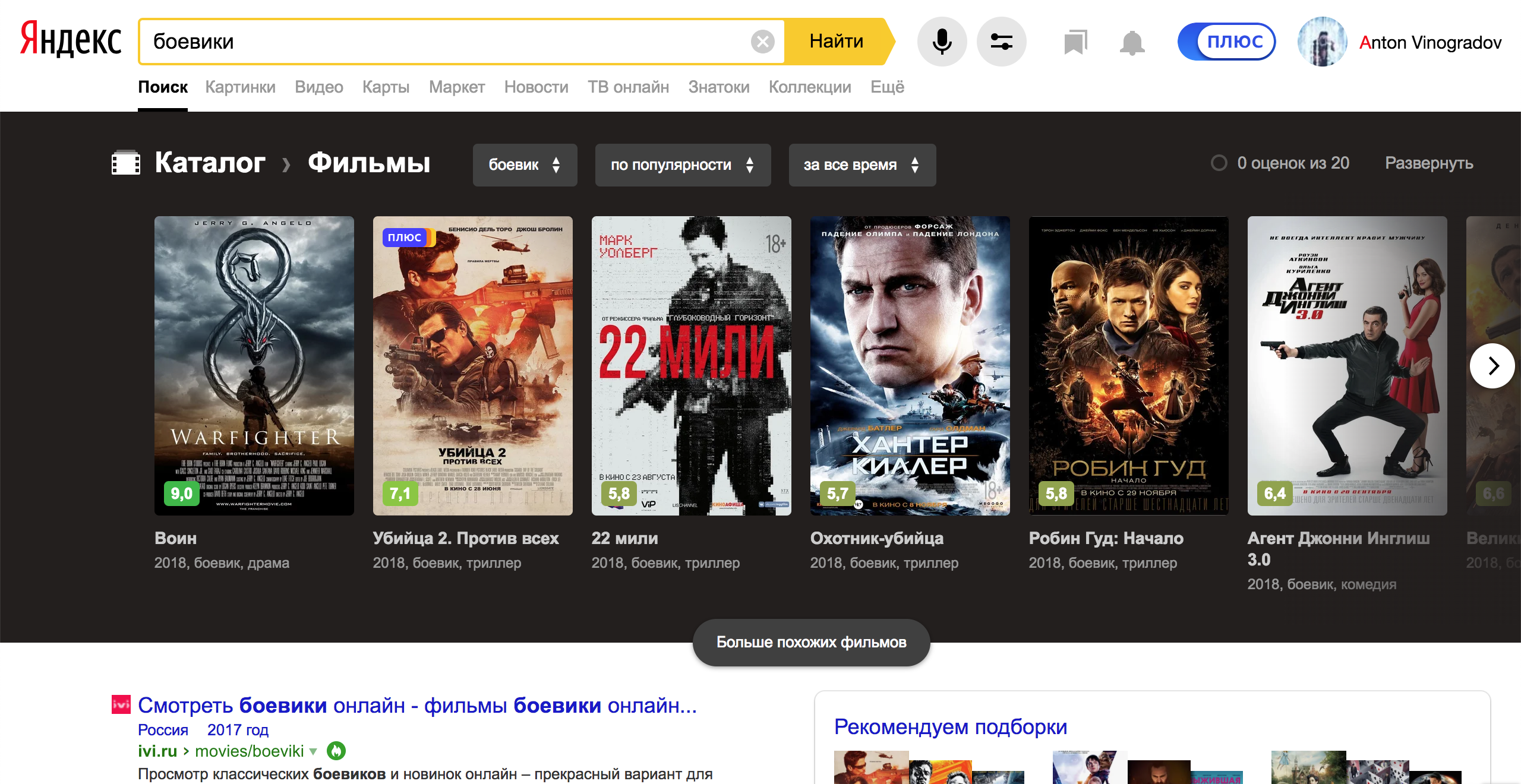

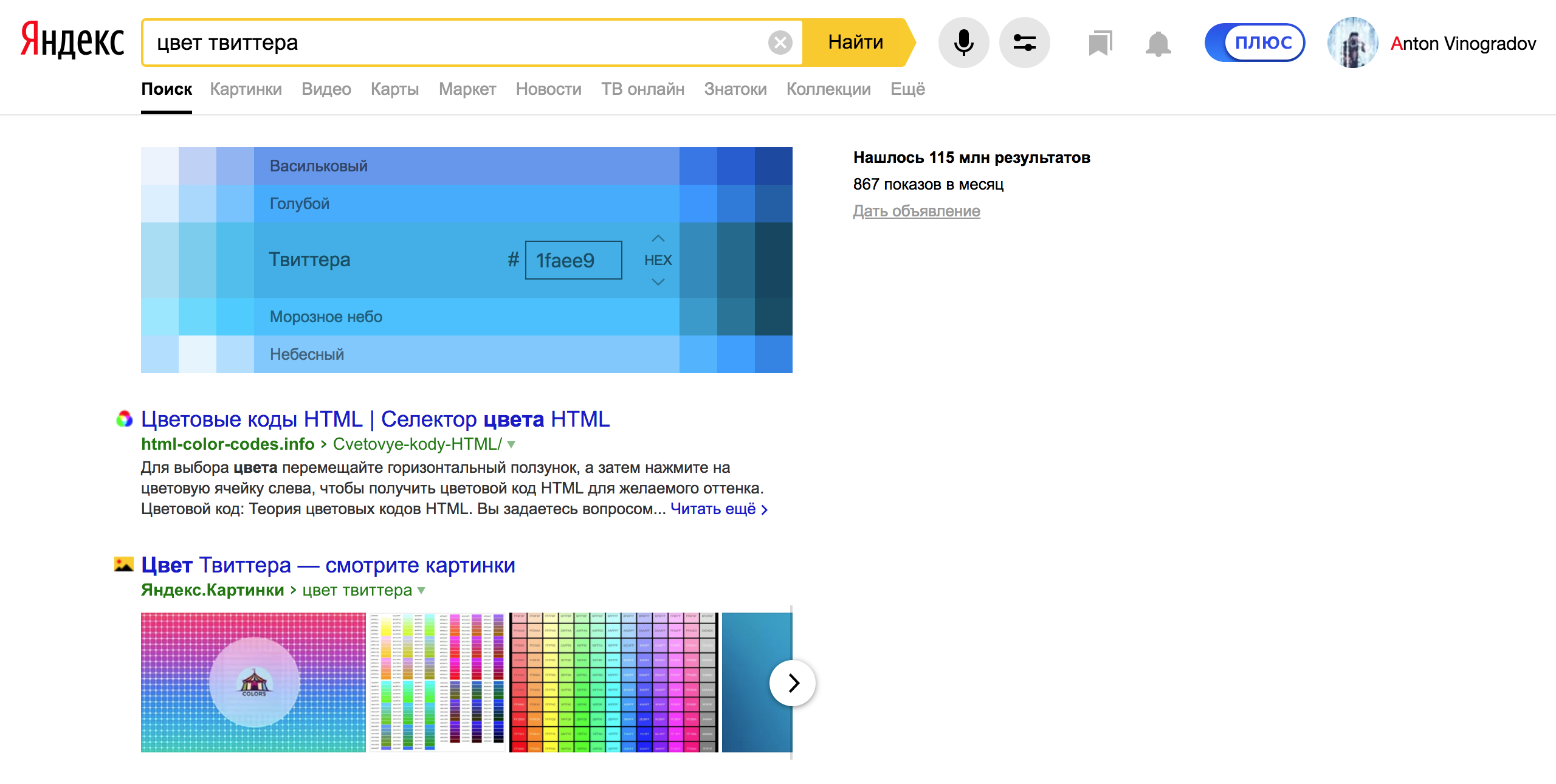

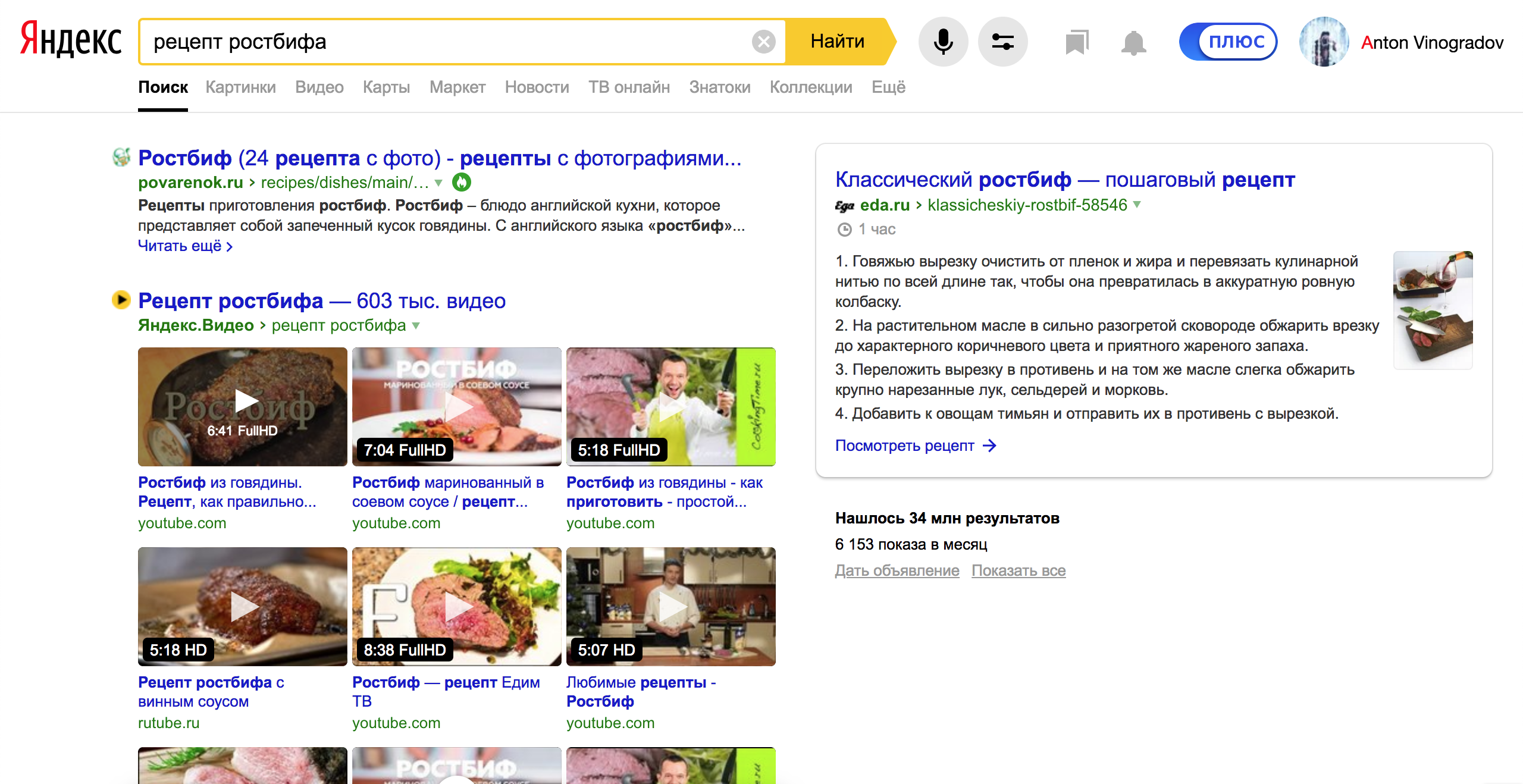

Due to the specifics of Yandex search services, user interfaces are built from data. The search results page is unique for each query.

Search query by reference


When the division into blocks, elements, and modifiers spread to the file system, it let us efficiently assemble only the code that is actually needed for each page and for each user request. But how?

    src/components
    ├── ComponentName
    │   ├── _modName
    │   │   ├── ComponentName_modName.tsx - simple modifier
    │   │   └── ComponentName_modName_modVal.tsx - modifier with a value
    │   ├── ElementName
    │   │   └── ComponentName-ElementName.tsx - element of the ComponentName block
    │   ├── ComponentName.i18n - translation files
    │   │   ├── ru.ts - dictionary for Russian
    │   │   ├── en.ts - dictionary for English
    │   │   └── index.ts - dictionary of the languages in use
    │   ├── ComponentName.test - test files
    │   │   ├── ComponentName.page-object.js - Page Object
    │   │   ├── ComponentName.hermione.js - functional test
    │   │   └── ComponentName.test.tsx - unit test
    │   ├── ComponentName.tsx - visual representation of the block
    │   ├── ComponentName.scss - visual styles
    │   ├── ComponentName.examples.tsx - component examples for Storybook
    │   └── README.md - component description

Modern component directory structure

As in some other companies, interface developers at Yandex are responsible for the whole frontend: the client side in the browser and the server side on Node.js. The server side processes the data of the “big” search and applies templates to it. Primary data processing converts JSON into BEMJSON, the data structure for the bem-xjst template engine. The template engine traverses each node of the tree and applies a template to it. Since the primary conversion happens on the server and, thanks to the division into small entities, nodes correspond to files, during templating we send the browser only the code that will be used on the current page.

Below is the correspondence of BEMJSON nodes to files on the file system.

    module.exports = {
        block: 'Select',
        elem: 'Item',
        elemMods: {
            type: 'navigation'
        }
    };

    src/components
    ├── Select
    │   ├── Item
    │   │   ├── _type
    │   │   │   ├── Select-Item_type_navigation.js
    │   │   │   └── Select-Item_type_navigation.css

The YModules module system was responsible for isolating components' JavaScript code in the browser. It allows both synchronous and asynchronous delivery of modules to the browser. An example of how components work with YModules and i-bem.js can be found here. Today, for most developers, the webpack module system and the not yet finalized standard of dynamic imports do the same job.
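The correspondence between a BEMJSON node and files on disk can be sketched roughly as follows. This is only an illustration of the naming scheme from the example above; the `nodeToFiles` helper, its parameters, and the `BemNode` shape are assumptions made for this sketch, not part of bem-xjst or ENB.

```typescript
// Hypothetical helper: derives file paths for a BEMJSON node using the
// naming scheme from the example above (Block-Elem_modName_modVal).
interface BemNode {
  block: string;
  elem?: string;
  elemMods?: Record<string, string>;
}

function nodeToFiles(node: BemNode, root: string, techs: string[]): string[] {
  // Entity name and directory depend on whether the node is an element.
  const entity = node.elem ? `${node.block}-${node.elem}` : node.block;
  const dir = node.elem
    ? `${root}/${node.block}/${node.elem}`
    : `${root}/${node.block}`;
  const mods = node.elemMods || {};
  const files: string[] = [];
  for (const modName of Object.keys(mods)) {
    const name = `${entity}_${modName}_${mods[modName]}`;
    for (const tech of techs) {
      // Modifier files live in a directory prefixed with an underscore.
      files.push(`${dir}/_${modName}/${name}.${tech}`);
    }
  }
  return files;
}

const files = nodeToFiles(
  { block: 'Select', elem: 'Item', elemMods: { type: 'navigation' } },
  'src/components',
  ['js', 'css']
);
```

For the node from the example, `files` contains the two paths shown in the tree: the `.js` and `.css` files of `Select-Item_type_navigation`.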

The combination of the BEM methodology, a declarative template engine, and a JS framework with a module system made it possible to solve any problem. But over time, user interfaces became dynamic.

New Hope

In 2013, React was released as open source. In fact, Facebook had been using it since 2011. James Long writes in his notes from the JSConf US conference:

The last two sessions were a surprise. The first one was given by two Facebook developers, and they announced Facebook React. I think it is a bad idea. Essentially, it lets you embed XML in JavaScript to create live reactive user interfaces. XML. In JavaScript.

React changed the approach to designing web applications. It has become so popular that today you cannot find a developer who has not heard of React. But something else matters more: applications themselves have changed, and SPAs have entered our lives.

It is believed that Yandex developers have a peculiar sense of beauty when it comes to technology. Sometimes strange, sometimes hard to argue with, but never groundless. When React was gaining stars on GitHub, many people familiar with Yandex web technologies insisted: Facebook has won, drop your homegrown tools and rush to rewrite everything in React before it is too late. It is important to understand two things.

First, there was no war. Companies do not compete to create the best framework on Earth. If a company starts spending less time (read: money) on infrastructure tasks at the same productivity, everyone benefits. It makes no sense to write frameworks for the sake of writing frameworks. The best developers create tools that solve their company's problems in the best way. Companies, services, goals: all of these differ. Hence the variety of tools.

Second, we were looking for a way to use React the way we wanted, with all the capabilities that our technologies gave us, as described above.

It is often claimed that code using React is fast by default. If you think so, you are deeply mistaken. The only thing React does is help you interact with the DOM optimally in most cases.

Up to version 16, React had a fatal flaw: on the server it was 10 times slower than bem-xjst. We could not afford such waste. Response time is one of the key metrics for Yandex. Imagine that you search for a mulled wine recipe and get the response 10 times slower than usual. You would not accept excuses, even if you understand a thing or two about web development. What can be said, then, of an explanation like “but developers now find it more convenient to work with the DOM”? Add the ratio of implementation cost to benefit, and you will arrive at the only right decision yourself.

For better or worse, developers are strange people. If something does not work, that is no reason to give up...

Inside out

We were confident that we could beat the slowness of React. We already had a fast template engine. All we needed to do was generate HTML on the server with bem-xjst and, on the client, “force” React to accept this markup as its own. The idea was so simple that nothing foretold failure.

In versions up to and including 15, React validated the markup with a checksum, an algorithm that turns any optimization into a pumpkin. To convince React that the markup was valid, we had to put an id on every node and calculate the checksum over all nodes. It also meant maintaining a double set of templates: React for the client and bem-xjst for the server. Simple speed tests with ids in place made it clear that there was no point in continuing.

The bem-xjst template engine is a very underrated tool. Watch the talk by its main maintainer, Slava Oliyanchuk, and see for yourself. bem-xjst is built on an architecture that lets one template syntax be used for different transformations of the source tree. Very similar to React, isn't it? This very property is what makes tools like react-sketchapp possible today.

Out of the box, bem-xjst includes two kinds of transformation: to HTML and to JSON. Any sufficiently motivated developer can write their own transformation engine for templates, targeting anything. We taught bem-xjst to transform a data tree into a sequence of HyperScript function calls. That meant full compatibility with React and with other implementations of the Virtual DOM algorithm, such as Preact.
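To make the idea of turning a data tree into HyperScript calls more concrete, here is a minimal sketch. It is a model of the principle only: `h` is a hypothetical HyperScript-style function, the `BemJson` shape is heavily simplified, and none of this is the actual bem-xjst engine.

```typescript
// Simplified virtual node produced by a HyperScript-style function.
interface VNode {
  tag: string;
  props: { className: string };
  children: Array<VNode | string>;
}

// Simplified BEMJSON node (assumption: only block, elem, tag and content).
interface BemJson {
  block: string;
  elem?: string;
  tag?: string;
  content?: Array<BemJson | string>;
}

// Hypothetical stand-in for React.createElement or Preact's h.
function h(
  tag: string,
  props: { className: string },
  ...children: Array<VNode | string>
): VNode {
  return { tag, props, children };
}

// Walks the tree and replaces every node with a call to h().
function toHyperScript(node: BemJson | string): VNode | string {
  if (typeof node === 'string') return node;
  const className = node.elem ? `${node.block}-${node.elem}` : node.block;
  const children = (node.content || []).map(toHyperScript);
  return h(node.tag || 'div', { className }, ...children);
}

const vtree = toHyperScript({
  block: 'Select',
  content: [{ block: 'Select', elem: 'Item', tag: 'span', content: ['One'] }],
});
```

Because the output is just a sequence of `h` calls, swapping the hypothetical `h` for a real Virtual DOM factory is, in principle, what makes such a transformation library-agnostic.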

A detailed account of the approach to generating calls to HyperScript functions.

Since React templates assume that layout and business logic live together, we had to move logic from i-bem.js into our templates, which were never intended for this. It was unnatural for them. They were also built differently, by the way!

Below is an example from the depths of gluing different worlds in one runtime.

    block('select').elem('menu')(
        def()(function() {
            const React = require('react');
            const Menu = require('../components/menu/menu');
            const MenuItem = require('../components/menu-item/menu-item');
            const _select = this.ctx._select;
            const selectComponent = _select._select;

            // Menu and its props come first, then one MenuItem child per option
            return React.createElement.apply(React, [
                Menu,
                {
                    mix: { block: this.block, elem: this.elem },
                    ref: menu => selectComponent._menu = menu,
                    size: _select.mods.size,
                    disabled: _select.mods.disabled,
                    mode: _select.mods.mode,
                    content: _select.options,
                    checkedItems: _select.bindings.checkedItems,
                    style: _select.bindings.popupMenuWidth,
                    onKeyDown: _select.bindings.onKeyDown,
                    theme: _select.mods.theme,
                }
            ].concat(_select.options.map(option => React.createElement(
                MenuItem,
                {
                    onClick: _select.bindings.onOptionCheck,
                    theme: _select.mods.theme,
                    val: option.value,
                },
                option.content
            ))));
        })
    );

Of course, we had our own build system. As you know, the fastest operation is string concatenation. The bem-xjst engine is built on it, and so was the build. Files of blocks, elements, and modifiers lay in folders, and the build only needed to glue the files together in the correct order. With this approach, you can glue JS, CSS, and templates in parallel, as well as the entities themselves. That is, if you have four components in a project, four cores in your laptop, and building one technology of a component takes one second, then the project build will take two seconds. This should make it clearer how we manage to send only the necessary code to the browser.

ENB did all this for us. We got the final tree for templating only at runtime, but dependencies between components had to be known a bit earlier in order to assemble bundles, so a little-known technology, deps.js, took over this job. It made it possible to build a dependency graph between components, after which the builder could glue the code in the required order by traversing the graph.

Work in this direction was stopped by the release of React 16. Template execution speeds on the server became equal. At production capacity, the difference became imperceptible.
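The "build a graph, then glue files in traversal order" idea can be sketched like this. The dependency table and file contents below are invented for illustration, and the format is only in the spirit of deps.js, not its real syntax; ENB's actual implementation is not shown here.

```typescript
// Hypothetical dependency declarations: each component lists the
// components whose code must be glued before its own.
const deps: Record<string, string[]> = {
  Select: ['Button', 'Popup'],
  Popup: ['Button'],
  Button: [],
};

// Stand-in for the contents of the component files on disk.
const sources: Record<string, string> = {
  Select: '/* Select */',
  Popup: '/* Popup */',
  Button: '/* Button */',
};

// Depth-first traversal: dependencies are emitted before their dependents.
function buildOrder(entry: string, graph: Record<string, string[]>): string[] {
  const order: string[] = [];
  const seen: Record<string, boolean> = {};
  const visit = (name: string): void => {
    if (seen[name]) return; // already scheduled, also guards against cycles
    seen[name] = true;
    for (const dep of graph[name] || []) visit(dep);
    order.push(name);
  };
  visit(entry);
  return order;
}

// The "build" itself is plain string concatenation in graph order.
const order = buildOrder('Select', deps);
const bundle = order.map((name) => sources[name]).join('\n');
```

Here `order` comes out as Button, Popup, Select, so the bundle contains only the components reachable from the entry point, each placed after everything it depends on.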

Node: v8.4.0

Children: 5K

| renderer | mean time | ops/sec |
|---|---|---|
| preact v8.2.6 | 66.235ms | 15 |
| bem-xjst v8.8.4 | 71.326ms | 14 |
| react v16.1.0 | 73.966ms | 14 |

The links below trace the history of the approach:

- https://ru.bem.info/forum/961/

- https://github.com/awinogradov/react-bl

- https://github.com/awinogradov/xjst-ddsl

- https://github.com/awinogradov/ddsl-react

Have we tried anything else?

- https://github.com/veged/bem-components-react - the first approach to the implementation of the methodology in React;

- https://github.com/Yeti-or/bem-hazard is a similar approach based on the BH template engine. With BH, by the way, you can play around online;

- https://github.com/dfilatov/bem-react - creating components via BEMJSON;

- https://github.com/rebem - utilities for working with BEM in the world of React.

Motivation

In the middle of the story it is worth talking about what drove us. This should have come at the beginning, but let bygones be bygones. Why do we need all this? What can BEM bring that React cannot do? Almost everyone asks these questions.

Decomposition

The functionality of components grows more complex every year, and the number of variations increases. This shows up as if or switch constructs; as a result, the code base inevitably grows, and with it the weight of the component and of every project that uses it. Most of a React component's logic lives in the render() method. To change a component's functionality, you have to rewrite most of that method, which inevitably leads to an exponential increase in the number of highly specialized components.

Everyone knows the material-ui, fabric-ui, and react-bootstrap libraries. In general, all well-known component libraries share the same drawback. Imagine that you have several projects, all using the same library. You take the same components but in different variations: selects with checkboxes here, without them there; blue buttons with an icon here, red ones without there. The weight of the CSS and JS that the library brings will be the same in all projects. But why? Component variations are embedded in the component itself and ship with it whether you like it or not. For us, this is unacceptable.

Yandex also has its own component library, Lego. It is used in ~200 services. Do we want using Lego in Search to cost the same as it does in Yandex.Health? You know the answer.

Cross Platform Development

To support multiple platforms, teams most often create either a separate version for each platform or a single adaptive one.

Developing separate versions requires additional resources: the more platforms, the more effort. Keeping product features in sync across versions causes new difficulties.

Developing an adaptive version complicates the code, increases its weight, and slows the product down when the platforms differ substantially.

Do we want our parents, friends, colleagues, and children to use desktop versions on mobile devices with slower internet and lower performance? You know the answer.

Experiments

If you are developing projects for a large audience, you must be confident in every change. A/B experiments are one way to get this confidence.

Ways to organize code for experiments:

- fork of the project and creation of production service instances;

- point conditions inside the code base.

If a project runs many long-lived experiments, branching the code base is costly. Every experiment branch has to be kept up to date: bug fixes and product functionality must be ported to it. Branching also makes overlapping experiments many times harder.

Point conditions are more flexible, but they complicate the code base: experiment conditions can touch different parts of a project. A large number of conditions hurts performance by increasing the amount of code sent to the browser. And the conditions have to be cleaned up eventually: either the experimental code becomes the main code, or the failed experiment is removed completely.

Search runs ~100 experiments online in various combinations for different audiences. You may have seen it yourself: perhaps you noticed some functionality, and a week later it magically disappeared. Do we want to test product theories at the cost of maintaining hundreds of branches of an active code base of 500,000 lines that ~60 developers change every day? You know the answer.

Global change

For example, you can create a CustomButton component that inherits from the library's Button. But the inherited CustomButton does not propagate to all library components that contain Button. The library may have a Search component built from Input and Button. In that case, the inherited CustomButton will not appear inside Search. Do we want to manually go through the entire code base wherever Button is used?

Long road to composition

We decided to change the strategy. In the previous approach, we took Yandex technology as the basis and tried to make React work on top of it. The new tactic was the opposite. This is how the bem-react-core project appeared.

Stop! Why React at all?

We saw in it the opportunity to get rid of the explicit initial rendering to HTML and of manually maintaining the state of the JS component later at runtime. In effect, it became possible to merge BEMHTML templates and JS components into one technology.

v1.0.0

Initially, we planned to carry all the best practices and properties of bem-xjst over to a library on top of React. The first thing that catches your eye is the signature or, if you prefer, the syntax of the component description.

What have you done? There is JSX for that!

The first version was built on inherit, a library that helps implement classes and inheritance. As some of you remember, in those days JavaScript had no classes, only prototypes, and no super. Strictly speaking, it still does not have them, or rather, these are not the classes that first come to mind. inherit did everything that ES2015 classes can do now, plus what is considered black magic: multiple inheritance and merging prototypes instead of rebuilding the chain, which has a positive effect on performance. You would not be wrong to think it resembles inherits in Node.js, but they work differently.

Below is an example of the template syntax of bem-react-core@v1.0.0.

App-Header.js

    import { decl } from 'bem-react-core';

    export default decl({
        block: 'App',
        elem: 'Header',
        attrs: {
            role: 'heading'
        },
        content() {
            return 'I am a header';
        }
    });

App-Header@desktop.js

    import { decl } from 'bem-react-core';

    export default decl({
        block: 'App',
        elem: 'Header',
        tag: 'h1',
        attrs() {
            // Extend the attributes declared on the common level
            return {
                ...this.__base(...arguments),
                'aria-level': 1
            };
        },
        content() {
            return `${this.__base(...arguments)}, and on desktops I become an h1`;
        }
    });

App-Header@touch.js

    import { decl } from 'bem-react-core';

    export default decl({
        block: 'App',
        elem: 'Header',
        tag: 'h2',
        content() {
            return `${this.__base(...arguments)} on touch devices`;
        }
    });

index.js

    import ReactDomServer from 'react-dom/server';
    import AppHeader from 'b:App e:Header';

    ReactDomServer.renderToStaticMarkup(<AppHeader />);

output@desktop.html

    <h1 class="App-Header" role="heading" aria-level="1">I am a header, and on desktops I become an h1</h1>

output@touch.html

    <h2 class="App-Header" role="heading">I am a header on touch devices</h2>

Templates of more complex components can be found here.

Since a class is an object, and objects are the most convenient thing to work with in JavaScript, the syntax follows suit. Later, this syntax migrated to its inspiration, bem-xjst.

The library was a global repository of object declarations: the results of calls to decl, each describing a part of an entity (a block, element, or modifier). BEM provides a unique naming scheme and is therefore well suited for creating keys in the repository. The final React component was glued together at its place of use. The trick is that decl runs when a module is imported. This made it possible to specify which parts of a component are needed in each particular place with a simple list of imports. But remember: components are complex, their parts are many, the list of imports is long, and developers are lazy.

Import magic

As you can see, the code examples contain lines like import AppHeader from 'b:App e:Header'.

You broke the standard! You can't do that! It just won't work!

First, the import standard does not say anything like “the import string must contain a path to an actual module”. Second, it is syntactic sugar that was transformed with Babel. Third, strange punctuation constructs in webpack imports, such as import txt from 'raw-loader!./file.txt';, for some reason never confused anyone.

So, our block is implemented for two platforms: desktop and touch.

    import Hello from 'b:Hello';

    // This will be transformed into the following:
    var Hello = [
        require('path/to/desktop/Hello/Hello.js'),
        require('path/to/touch/Hello/Hello.js')
    ][0].applyDecls();

Here all definitions of the Hello component are imported in sequence, and then applyDecls is called. It merges all declarations of the block from the global repository through inherit and creates a new React component, unique to this particular place in the project.
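The mechanics of a global declaration repository can be modelled in a few lines. This is a simplified sketch under loud assumptions: the `decl` and `applyDecls` functions below only mimic the behaviour described in the text, the `Declaration` shape is invented, and the real bem-react-core v1 API and its use of inherit differ in detail.

```typescript
// One registered part of an entity (assumption: only tag and content).
type Declaration = { tag?: string; content?: (base: string) => string };

// Global repository keyed by the unique BEM name of the entity.
const registry: Record<string, Declaration[]> = {};

// Runs at module import time: stores one part under the entity's BEM name.
function decl(name: string, part: Declaration): void {
  (registry[name] = registry[name] || []).push(part);
}

// Merges all registered parts in order; each later part receives the
// result of the earlier ones, similar in spirit to calling __base.
function applyDecls(name: string): { tag: string; content: string } {
  let tag = 'div';
  let content = '';
  for (const part of registry[name] || []) {
    if (part.tag) tag = part.tag;
    if (part.content) content = part.content(content);
  }
  return { tag, content };
}

// Importing the common level and then the desktop level would run these:
decl('App-Header', { content: () => 'header' });
decl('App-Header', { tag: 'h1', content: (base) => `${base} on desktop` });

const header = applyDecls('App-Header');
```

In this model `header` ends up with the tag `h1` and the content `header on desktop`: which parts take effect depends purely on which modules were imported before `applyDecls` ran.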

The Babel plugin that performs this conversion can be found here. The webpack loader that searched the file system for component definitions is here.

In the end, what was good:

- short, declarative syntax of templates, allowing to define different parts of a component anywhere in the project;

- no prototype chain in inheritance;

- unique React component for each place of use.

And what was bad:

- no TypeScript/Flow support;

- syntax unfamiliar to most React developers;

- because of the dynamic nature of imports, code cannot be shipped in transpiled form;

- special build configuration is required in the project.

v2.0.0

We took into account the experience of using bem-react-core@v1.0.0 in projects, the feedback, and common sense, and tried again.

    import { Elem } from 'bem-react-core';
    import { Button } from '../Button';

    export class AppHeader extends Elem {
        block = 'App';
        elem = 'Header';

        tag() {
            return 'h2';
        }

        content() {
            return (
                <Button>I am a button</Button>
            );
        }
    }

Classes were chosen as the syntax for describing blocks, elements, and modifiers. Classes are declarative, have built-in inheritance support, and work great with TypeScript/Flow. The attentive reader will have noticed that we abandoned both inherit and “our own” imports, which meant more convenient debugging but also a longer prototype chain, with all the ensuing consequences for performance.

The main goals were:

- to drop additional tooling in the form of webpack loaders and Babel plugins;

- to stay as close as possible to the plain language;

- to get native support from all tools for debugging, writing, and testing code.

We moved the declaration of modifiers into HOCs, which are familiar to everyone; inside them we created a new class based on the base one and added the necessary methods.

    import * as React from 'react';
    import * as ReactDOM from 'react-dom';
    import { Block, Elem, withMods } from 'bem-react-core';

    interface IButtonProps {
        children: string;
    }

    interface IModsProps extends IButtonProps {
        type: 'link' | 'button';
    }

    // Create the Text element
    class Text extends Elem {
        block = 'Button';
        elem = 'Text';

        tag() {
            return 'span';
        }
    }

    // Create the Button block
    class Button<T extends IModsProps> extends Block<T> {
        block = 'Button';

        tag() {
            return 'button';
        }

        mods() {
            return {
                type: this.props.type
            };
        }

        content() {
            return (
                <Text>{this.props.children}</Text>
            );
        }
    }

    // Extend the Button block when the type property has the value "link"
    class ButtonLink extends Button<IModsProps> {
        static mod = ({ type }: any) => type === 'link';

        tag() {
            return 'a';
        }

        mods() {
            return {
                type: this.props.type
            };
        }

        attrs() {
            return {
                href: 'www.yandex.ru'
            };
        }
    }

    // Combine the Button and ButtonLink classes
    const ButtonView = withMods(Button, ButtonLink);

    ReactDOM.render(
        <React.Fragment>
            <ButtonView type='button'>Click me</ButtonView>
            <ButtonView type='link'>Click me</ButtonView>
        </React.Fragment>,
        document.getElementById('root')
    );

After some time, we found architectural problems in how modifiers worked, along with a number of flaws that could not be fixed with patches.

withMods takes as arguments the base class of the block and the classes that extend it (modifiers); each modifier carries a predicate over the incoming props. During rendering, as soon as a modifier's predicate fires, withMods rebuilds the prototype chain over all active modifiers so that each one inherits from the previous one. This happens on every change of props. There are no problems on the first render, but as soon as modifiers start switching on, the base block (its prototype) acquires the modifier's functionality. As a result, all instances on the page end up with all of the modifiers' functionality, regardless of their incoming props. This can happen on the second re-render, the third, or the fourth, depending on when the modifier predicates fire.
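The leak described above can be reproduced in a deliberately simplified model. This is not the bem-react-core v2 source: `renderWithMods` and `linkModifier` are hypothetical names, and the "modifier" here is applied by patching the shared prototype directly, which is an assumption chosen to show the effect in the smallest possible form.

```typescript
// Base block: renders as <button> unless something overrides tag().
class Button {
  constructor(public type: 'button' | 'link') {}
  tag(): string {
    return 'button';
  }
}

// Modifier: a predicate on props plus the method it wants to override.
const linkModifier = {
  match: (type: string): boolean => type === 'link',
  tag: (): string => 'a',
};

function renderWithMods(type: 'button' | 'link'): string {
  if (linkModifier.match(type)) {
    // The flaw: this changes Button.prototype, which is shared by every
    // instance on the page, not only by the one being rendered.
    Button.prototype.tag = linkModifier.tag;
  }
  return new Button(type).tag();
}

const firstDraw = renderWithMods('button');  // modifier not active yet
const linkDraw = renderWithMods('link');     // modifier switches on
const secondDraw = renderWithMods('button'); // plain button is affected too
```

In this model `firstDraw` is `button`, `linkDraw` is `a`, and `secondDraw` is also `a`: once the modifier has fired for one instance, every later instance inherits its behaviour regardless of props.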

Solutions that did not help:

- Wrap modifiers in a function, so that each call of the modifier returns a new class. This partially solves the problem but is incompatible with TS, because the modifier function then returns a class that extends a base taken from the outer scope. When compiling to ES5, TS resolves super calls not through the prototype but through an external reference to the base class held in a constant. Yes, TS compiles code not according to the standard, but the way it likes.

- Compile in two passes: TS to ES6 and then Babel to ES5. This helps only at the level of the assembled project; code shipped in npm packages will not be processed this way. In addition, it would slow the build down considerably and force everyone to use Babel.

Additional difficulties:

- Base blocks cannot be extended at the level of the project that uses them, for example when library blocks are used in services. A typical case: counter attributes on DOM nodes. Extension is possible only through a HOC, while modifiers apply only to a class. Using withMods anywhere did not close off access to the methods of the base class.

- All entities (block, element, modifier) must be classes for class generation to work correctly, even though most entities have little functionality and could be expressed as SFCs (stateless functional components).

- Heavy CSS modifiers. Every CSS modifier must have a JS representation as an extension of the base class. That is not a problem in itself, but we suspected that this approach was not reducing the amount of code we send to the browser.

We had to interrupt the development of v2.

Manifesto

Naturally, this did not stop us. We wrote a manifesto. Following it made it possible to solve the problems we had run into in versions 1 and 2. Below, I retell part of that manifesto.

The main idea is to work entirely through composition. Work with CSS classes and modifiers is expressed through HOCs, and redefinition of code by platforms and experiments is expressed through dependency injection.

Necessary and sufficient from BEM in React:

- working with CSS classes;

- declarative code separation by modifiers and redefinition levels (platforms, experiments).

A modifier can no longer affect the internal structure of a component. Additional functionality must be expressed through controlling components above it. The components themselves can be written as any React.ComponentType you need, without the base BEM components. Connecting a modifier is no different from connecting any other HOC and works through any compose, in any order.

A modifier evaluates its predicate and, if it holds, passes an additional class to the base component through props.
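A modifier of this kind can be sketched without React at all. In the model below, components are plain functions from props to an HTML string so that the example stays self-contained; `withModifier`, `compose`, and the `Props` shape are hypothetical names for this sketch, not the library's API.

```typescript
interface Props {
  className?: string;
  type?: string;
  children: string;
}

// A "component" here is just a function from props to markup.
type Component = (props: Props) => string;

const Button: Component = (props) =>
  `<button class="${props.className || ''}">${props.children}</button>`;

// HOC: if the predicate holds, append the modifier class to className
// and delegate to the base component; otherwise pass props through.
function withModifier(predicate: (p: Props) => boolean, modClass: string) {
  return (Base: Component): Component => (props) =>
    predicate(props)
      ? Base({
          ...props,
          className: [props.className, modClass].filter(Boolean).join(' '),
        })
      : Base(props);
}

// Plain left-to-right composition of HOCs.
const compose = (...hocs: Array<(c: Component) => Component>) =>
  (base: Component): Component => hocs.reduce((acc, hoc) => hoc(acc), base);

const ButtonView = compose(
  withModifier((p) => p.type === 'link', 'Button_type_link'),
  withModifier((p) => p.type === 'button', 'Button_type_button')
)(Button);

const html = ButtonView({ className: 'Button', type: 'link', children: 'Click me' });
```

Since each modifier only adds a class and never touches the base component's internals, the order of the HOCs in `compose` does not change which classes are applied for a given set of props.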

Redefinition of components and their parts is expressed through dependency injection, implemented as multiple component registries on top of the React Context API. Each component is free to register its dependencies in a registry and allow them to be overridden from above; the standard context does not support this directly, so it is implemented through a different way of computing the new context value. By default, dependencies can be redefined down the tree, which is how the standard context mechanism works. As a result, DI is a HOC that puts registries into the context. Registries can resolve dependencies in both descending and ascending modes. This lets you override anything, anywhere, at any nesting level, simply by adding components to a registry.

What we built can already be seen in production on the Search results page. We fit everything we needed from BEM into a library of four packages with a total weight of 1.5 KB.
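The registry idea can be illustrated with a small model that leaves React context out entirely. Everything here is an assumption for the sake of the sketch: the `Registry` interface, the way overrides are merged, and the component names (borrowed from the Search, Input, and Button example earlier in the text) are not the library's actual API.

```typescript
// A "component" is modelled as a function from text to markup.
type Component = (text: string) => string;

interface Registry {
  Button: Component;
  Input: Component;
}

// Defaults that the library component registers for its own dependencies.
const libraryRegistry: Registry = {
  Button: (text) => `<button>${text}</button>`,
  Input: () => '<input>',
};

// Overrides supplied from above, e.g. by a platform level or an experiment.
const experimentRegistry: Partial<Registry> = {
  Button: (text) => `<button class="CustomButton">${text}</button>`,
};

// Search takes its parts from the registry instead of importing them,
// so overrides win without any change to Search itself.
function Search(overrides: Partial<Registry> = {}): string {
  const deps: Registry = { ...libraryRegistry, ...overrides };
  return deps.Input('') + deps.Button('Find');
}

const plain = Search();
const overridden = Search(experimentRegistry);
```

With no overrides, `Search` renders the library `Button`; with the experiment registry, the same `Search` renders the custom button, which is the "global change" that inheritance alone could not deliver.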

This is where the historical part ends. Thanks to those who read to the end. In the next article I will tell you how we work with React in Yandex.Search today.