20 years of tech support: how the world has changed around us

This is us in 1995.

A colleague of mine once had to rush power supplies to Murmansk: a plant there had ground to a halt on December 30, and not a single delivery service was working. Handing the parcel to a train conductor or a pilot was not an option either. On top of that, nobody had stocked that kind of hardware for about five years. We found the units by a miracle, through friends, loaded them into a car, and he drove them there himself. On the way the car broke down, and he repaired it at -25 in a strong wind. He arrived, they slid the units into the rack, and they burned out on the spot.

So grab some tea and come in for some warm, good stories. We support hundreds of customers, including the largest companies in the country, and we have seen all sorts of things. I will take my time telling you how we started and what we do now.

Introduction

I should say up front that I will only talk about servicing “heavy”, expensive equipment: RISC servers from IBM, HP and Sun (now Oracle); large data storage systems from EMC, IBM and Hitachi; core-level switches from Cisco, Nortel, HPN and so on. Systems like these determine, for example, whether bank call centers work, whether we can withdraw money from an ATM, check in for a flight, or reach the right person at the right time.

Cockroaches in a home PC are something everyone understands. That they also live in servers is a bit more interesting, and a systems engineer's responsibility for such systems is much higher. For obvious reasons I have no right to name customers, though anyone in the know will recognize many of them. In half the cases they will guess wrong, because the same stories tend to repeat themselves. And where truly critical bugs were involved, I changed some minor details at the request of the security team. So, let's go.

That's what we do.

My most memorable story is this. We were once setting up a cluster at a bank, with a database on top of it. Each piece worked fine on its own, but a real query would not go through, even though pings worked normally. We tried from another location, and everything was fine. We watched it for several days, then started dissecting packets. In the end we found a network printer with a small bug on the user subnet. Like a time bomb, it had been waiting its whole long working life for its finest hour, and it finally got it: it was eating precisely our packets. We unplugged it, and everything worked as it should.

A year later the Packet Killer story repeated itself, this time at another customer. They banged their heads against the wall for a couple of days, then called us in to figure out what was wrong. We looked: according to the documentation all the network nodes were identical, but in reality one of the boxes was a different color. We pulled it out, and traffic flowed immediately. It turned out to be a non-original module installed during a repair, with different firmware. We simply replaced it with a proper one.

How did everything evolve?

At first it went like this. In the 90s, to build a computer you still had to get components that each worked individually to work together on one motherboard, and any surprise could be waiting for you there. Compatibility had to be checked, and hardware tolerances were such that two identical parts could behave in completely different ways.

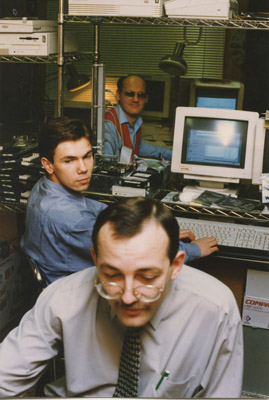

This is us testing computers in 2002-2003.

At first, technical support was work bordering on microelectronics. Then hardware compatibility problems began to appear, and they were solved by replacing entire modules. Now the main job is administration, because everything has become very complex and most incidents are software-related.

Or say an original piece of hardware arrives with its original power supply. For some reason the power supply will not fit into its bay, no matter what you do. That should not be possible, but you cannot argue with reality. The system has to go live in the morning, and you could file down one corner of the PSU so that it slides in as it should. Would you do it?

Yes? What if you have misunderstood something and are about to void a warranty worth hundreds of thousands of dollars? Or, on the other hand, what if the vendor itself made the mistake? You file off that corner today, and tomorrow the supplier's service engineers have you by the throat: “Who allowed you to do this?”

Or a server arrives and one driver is missing. A distribution can be found on the Runet, but it comes from an unofficial source. Do you take it or wait a week? If you take it, you will be running non-certified drivers, which can become a serious problem if anything goes wrong. If you don't, you miss the deadline. I had a case like this myself: the hardware manufacturer had released a new driver with a patch for one exotic situation, but the supplier had not included it in the package because their test program runs for a couple of months. I wrote an official letter asking whether it could be put into production in at least some form. Fortunately, they met us halfway and confirmed that it could, with the caveat that until the tests were finished they could not promise there would be no sudden reboots.

Sometimes people even scold us: “Even my neighbor could have fixed this, you just need to replace the fuse.” We know that too, but the case cannot be opened without a certified engineer because of the warranty. From the user's point of view, though, we are the bad guys who decided that the letter of the manual matters more than anything in the world.

By the way, until recently we had one specialist who still soldered. Although in most cases whole units have to be swapped, he soldered. Sometimes it was fans, when, for example, the part could not be found from the vendor. Or somewhere in a factory there is a device that is already 15 years old and badly needed. It breaks, and no spare parts can be found, paid or free, none at all. They bring it to him, he takes a look and tests the circuits. Well, why not solder it? So he did. That romance is gone now, and vendors are very strict about anything that is not a module replacement.

Today, hardware repair looks like this: an LED lights up on the rack next to the failed module, an SMS arrives from monitoring, you take a module from the warehouse (it is usually there, which is exactly our job), pull out the broken one and put in the new one. That's it, repair done.

But there is a memorable story from 10 years ago. An engineer calls me at 2 a.m. and says:

- Oh, a customer called, something is wrong with their local network.

- And what?

- I don't know. (He was a student, our intern, and did not yet know or understand everything.)

- Do we have a service contract with them?

- No, there is no contract.

I called my colleague and asked what to do: help or not? He says:

- Are you kidding? They will all be fired by morning, it is reporting season for them right now. If we can, let's help.

I call the engineer back and explain what to do and how. It was basically “phone sex”: we work out the problem, look for the right modules, check that the memory and software are there, and put it all together. The customer understood the situation, was very glad that at least someone was helping, and sent a car. “You're meeting a young man in glasses, an acid-colored tie-dye sweater, combat boots and Beatles-style trousers, hair combed straight up, standing next to our security guard ... see him?” They found him, drove him to the site, he swapped everything out, and it all started up.

In the morning my colleague comes in and says: “Well, they handed me the signed contracts this morning. I had been trying to get them signed for over a month, and this morning I came in and there they were.” It turned out we were not the first company they had called, but we were the only one that actually showed up. Back then that was a feat; now the SLA obliges us to rush somewhere and do something.

These days, for work at the micro level, we have a decompiler instead of a soldering iron. Here is an example. At the end of every month, one call center went down. During an outage, up to 3-4 thousand calls could be missed. We started digging and found a couple of bugs, but it was not clear whether they were the cause. The vendor checked all the hardware, which was clean too. But no, the next month the call center went down again. Suspicion fell on the virtualization server, so it was swapped for a physical one, and that turned out to be wrong as well. And if it went down again the following month, the losses would be huge. We had to decompile the adjacent systems. It turned out that a timeout was set incorrectly in one place; we changed it, and everything worked. That said, decompiling is not always allowed either: you need permission for everything.

Sometimes you have to reverse-engineer hardware. It happens that a new component has to be plugged into an old system but there is no control software for it, so you have to work out the protocol and add the functionality yourself. Or, conversely, old equipment needs a new part. There was a case at one factory where the punch-card programming unit was stolen from a laser machine (by mistake: the thieves thought they were taking the machine itself). Here it is fairly simple: you need to work out what to feed to the input in place of a punch card, and it will work again. Reverse engineering in all its glory.

Or new hardware comes out, speeds change, and timings drift. Something that used to be practically a constant may be computed differently on the new equipment. And it is unclear where the problem lies: maybe the hardware is immature, maybe the software has bugs, or maybe an error accumulates somewhere at the boundary between them in a nearly random situation.

What else has changed?

Well, there is probably more preventive work now. A breakdown usually means not only direct losses but also reputational risk. Imagine, for example, that one bank's ATMs go offline for a day because of an accident in the data center. The losses are huge. Accordingly, all critical systems run self-diagnostics and, where possible, are designed to be replaced before they fail. Flash drives in server storage are an example: they know quite precisely when they will fail.
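To make the "replace before failure" idea concrete, here is a minimal sketch of how a drive's remaining life might be estimated from its write counters. It assumes a simple linear wear model; the `DriveWear` fields, the 30-day lead time and the sample numbers are all hypothetical and do not describe any vendor's actual firmware or monitoring API.

```python
from dataclasses import dataclass


@dataclass
class DriveWear:
    """Hypothetical wear counters for one flash drive (not a vendor API)."""
    name: str
    rated_writes_tb: float   # assumed endurance rating, in TB written
    written_tb: float        # total TB written so far
    daily_writes_tb: float   # recent average write volume per day


def days_until_worn(drive: DriveWear) -> float:
    """Linear estimate of the days left before the endurance rating is used up."""
    remaining_tb = max(drive.rated_writes_tb - drive.written_tb, 0.0)
    if drive.daily_writes_tb <= 0:
        return float("inf")  # an idle drive never wears out under this model
    return remaining_tb / drive.daily_writes_tb


def needs_replacement(drive: DriveWear, lead_time_days: float = 30.0) -> bool:
    """Flag the drive if it is expected to wear out within the lead time."""
    return days_until_worn(drive) <= lead_time_days


# Made-up sample data for illustration only.
fleet = [
    DriveWear("ssd-a", rated_writes_tb=1000.0, written_tb=400.0, daily_writes_tb=2.0),
    DriveWear("ssd-b", rated_writes_tb=1000.0, written_tb=980.0, daily_writes_tb=2.0),
]
to_replace = [d.name for d in fleet if needs_replacement(d)]
print(to_replace)
```

In this sample only the second drive is flagged, because it has roughly ten days of writes left at its current rate. Real storage arrays use richer signals than a single counter, so treat the linear model as an illustration of the principle rather than a recipe.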

We also used to travel all over the country, whereas now a lot is administered remotely. Even so, we have made urgent flights to Yuzhno-Sakhalinsk, and in Yakutia we rode reindeer sleighs to reach the hardware.

From the old stories: once we came to look at a customer's hardware that ran critical mail, and mice had settled in the power circuitry. It is warm and tasty in there: they eat the insulation. We often saw cockroaches too. We have seen servers standing in basins (so that water on the floor would not flood them). By the way, in winter small animals flock to the server rooms of factories and large warehouses. They gnaw through something small, inexplicable glitches start, and you can only diagnose them on site. Or, even better, the small animals themselves act as conductors. Not very good ones, but conductors. Hence the hard-to-reproduce problems. Cockroaches sometimes play the role of spontaneous fuses.

Many new services have appeared. Previously, support companies simply “traded bodies”: they sent out visiting admins. Now we have round-the-clock consulting, a hotline, dedicated service engineers (guys who, like firefighters, are always on standby for an urgent call-out), spare-parts warehouses for specific sites, “detectives” (who investigate incidents), planned hardware replacements, a whole set of duties around software updates, sophisticated database management, detailed reporting, financial planning, documentation, monitoring, audits, inventories, test benches for new hardware, hardware rental for hot swaps, and so on. We help with relocations, and we have “raised” and trained dozens of technical teams at large companies.

What is important to the customer?

When we started: that at least someone would do something. Now: quality and speed. Customers are becoming demanding. In the past, when a bearded guy showed up speaking an incomprehensible language, people were understanding, because there was nobody else. Now there must always be someone who understands the process as a whole. For example, if a plant comes to a halt, that person has to be able to quickly lay out for the CFO the measures needed to fix the situation, or name what could speed up the repair. Afterwards you have to explain what happened, why, and whether it could happen again. And whose hands should be torn off so that it never does.

The situation is also changing for the vendors themselves, who strive to meet ever higher service standards. For example, Cisco has arranged replacement of failed equipment within 4 hours, not only in Moscow but in other regions too. Vendor specialists also work 24x7.

That is why, by the way, good support often begins with writing an emergency plan. There are specially trained paranoids who find the most likely or most dangerous points of failure, and we build in redundancy for them. If something goes wrong, we switch over to the backup data center, for example, and rush out to investigate. The plans are multi-level, by the way. Say a spare part arrives under the normal plan and gets dropped during unloading. What now? On the spot they open the envelope marked “things are very bad”, which says what to do if plan A falls through.

Where there is no plan, our colleagues have a hard time. We, as an external company, bear financial responsibility, but an in-house system administrator is often under very strong psychological pressure: “Nothing works, everything is broken, we will fire you” and so on. Not only is there a problem and everyone is shouting, but at that very moment he has to make sound, competent decisions. Later, in an hour, he can fetch the cognac from under the raised floor. Right now he has 30 seconds to flip a switch or press a button, and if he gets it wrong, the damage is a couple of million dollars.

Or here is a situation very typical of our times. A new piece of hardware arrives. Fresh, in hefty packaging from a Chinese factory. You run the tests, then carefully bring it into the production configuration at night. It works beautifully for 20 minutes, and then starts failing irrecoverably. You curse, stop the service for 10 minutes, but manage to pull the device out of the system without losses. What happened? Who knows. The manufacturer tested the finished solution under load for a couple of months, ran it through different scenarios, gave it to real companies; the only thing they did not do was let children play with the machine. The test program was very extensive. And then, bam, everything stops, and only for you. The Americans find a bug. The Chinese throw together a patch, and you roll it out. And then problems start pouring in from every direction, in places where everything used to work. Rolling back does not help. You are, as they say, well and truly stuck.

Why? Because software plus hardware is a very complicated thing. Take the new Airbus 370, for example. It is a hefty airplane with a bunch of subsystems. Everything in it is duplicated and reliable, and critical components work almost as simply as one piece of metal striking another. It is checked before every flight. Got the picture? This is a very complex design, with both software and hardware that have been developed over decades. Bugs there cost hundreds of lives, and every part of the aircraft really is well tested. Yet bugs still happen. And OS-level software packages can be far more complex than such a machine.

Now look at any new piece of hardware or hardware-software system being deployed somewhere. One way or another, it will all have to be fine-tuned, supported, verified and maintained. As a result, special people appear, shamans of a sort, who know where to hit. That is us.

It is important to diagnose very quickly. Downtime is often counted in seconds, so experience matters a great deal here. There is simply no time to run tests for hours. You need to know thousands of situations from hundreds of customers so that you can arrive on site and immediately look in the right place. This, by the way, is another reason we are considered shamans. Like Feynman: we come, we poke, everyone is amazed. Only he pointed at the schematic at random, whereas we know. At some especially critical sites, our SLA gives us, for example, 15 minutes to resolve the problem from the moment a specialist arrives, or 30 minutes from the moment the incident is logged. Where does this matter? Take your pick: an outage at a mobile operator, trouble at a bank, and so on. Of course everything has multiple layers of redundancy, but anything can happen.
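As a rough illustration of what those two SLA windows mean in practice, the sketch below computes a resolution deadline and checks whether it was missed. The 15- and 30-minute figures come from the text; the function names and the sample timestamps are made up for the example, and real contracts will define the start and stop events more carefully.

```python
from datetime import datetime, timedelta

# Windows quoted in the text; which one applies depends on the contract.
FROM_ARRIVAL = timedelta(minutes=15)   # counted from the specialist's arrival
FROM_LOGGING = timedelta(minutes=30)   # counted from the incident being logged


def sla_deadline(start: datetime, window: timedelta) -> datetime:
    """Latest moment by which the problem must be resolved."""
    return start + window


def is_breached(start: datetime, window: timedelta, resolved_at: datetime) -> bool:
    """True if the problem was resolved after the deadline."""
    return resolved_at > sla_deadline(start, window)


# Made-up timestamps for illustration only.
logged = datetime(2015, 3, 1, 2, 0)
arrived = datetime(2015, 3, 1, 2, 40)

# Resolved 25 minutes after logging: inside the 30-minute window.
print(is_breached(logged, FROM_LOGGING, datetime(2015, 3, 1, 2, 25)))
# Resolved 18 minutes after arrival: outside the 15-minute window.
print(is_breached(arrived, FROM_ARRIVAL, datetime(2015, 3, 1, 2, 58)))
```

The point of the sketch is how little slack there is: with a 15-minute window, a single wrong guess about where to look already puts the deadline at risk.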

References

- Single accident investigation

- If you are interested, here is a list of our services, with contact details if you need them.

- Our department's documents, free to download and use: they will come in handy for any technical support or outsourcing team.

That's all for now. I expect you have plenty of stories of your own from practice. Please share the most interesting ones in the comments.