How e-commerce survives major promotions. Getting ready for peak loads on the web [Part 1]

Hello everyone, this is Alexey Pristavko, director of web projects at DataLine.

Every year, on a Friday in late November, Black Friday takes place, the largest event in the world of e-commerce. It is a time of record discounts, stores opening almost at midnight, and participating sites going down, unable to withstand the sharp increase in traffic.

Using it as an example, we will look at how to prepare for a serious increase in the load on a site or web application.

Under the cut we will talk in detail about how IT managers, developers and administrators of online stores can survive large-scale promotions.

What Black Friday threatens

As I wrote above, Black Friday is a day that causes a lot of trouble for everyone involved in maintaining e-commerce sites.

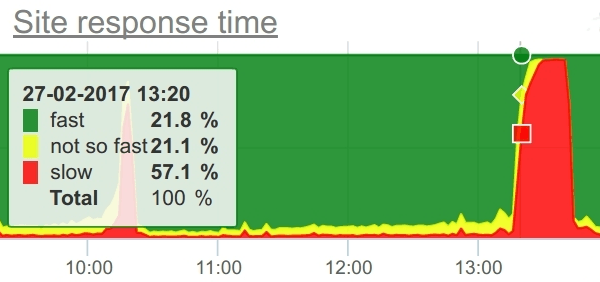

To get a feel for this, consider the chart. It shows the growth in the number of requests to a site during Black Friday.

Compared with normal daily peaks, when no promotions are running, traffic on Black Friday grows by 100-200%, and that is not the limit.

A large site already has a lot of traffic of its own, but a modest online store can easily get just as many visitors during the promotion and drown. To paraphrase an old joke slightly: “business made all stores different, and Black Friday made them equal”.

What is earned on Black Friday "feeds" the seller for the whole of the next year. The business is extremely interested in attracting as many new customers as possible, customers who will return to the site to buy again and again. But for that, their first experience with the site must go smoothly, and ensuring this is the job of the IT specialists.

The more customers are on the site at the same time, the higher the requirements for its stability and response speed. Surely you have noticed that the site of a store you reached from the blackfridaysales landing page worked extremely slowly or did not work at all. I doubt that you waited for it to load rather than leaving after a few seconds.

Below we will discuss how to protect customers from a negative experience and the site from going down under overload. That said, the advice applies to any expected peak load.

In general, preparing a site for increased traffic has two stages: technical and organizational. In this article we will discuss the technical stage, and I will cover the organizational side in detail in the second part, which will be published in a week.

Technical preparation of the site

A small disclaimer: do not expect detailed instructions here on what to tweak, where and how, so that there is “happiness for everyone, free, and let no one leave offended”. First, sites and projects differ from one to the next. Second, there is already more than enough specific technical advice for specific software, including on Habr. Above all, I want to convey the fundamental principles of working with web projects, show the starting points, and introduce the main techniques and platform-independent nuances.

Let's start with an axiom: users do not like to wait, so it is extremely important to work on timings and response speed. On Black Friday the site will receive far more requests than usual, and you may face not only falling conversion because of slow loading, but also exceeded HTTP timeouts (the site does not respond).

Most often, sites go down under load not because of physical breakdowns, but because an overloaded node makes the response time exceed the timeouts. This is similar to a traffic jam, and you will have to take control in time: widen the road (scale the equipment), regulate and cut off traffic (timeouts), optimize how the vehicles are loaded (packets and requests), and handle exceptions.

For a site, performance has two aspects: response speed and the number of requests processed simultaneously. These are the parameters we will improve.

Most often, the business thinks of response speed as the site speed reported by Google Analytics, and of the number of simultaneous requests as the number of users on the site at the same time.

These parameters are not very convenient for technical work.

Further on I will offer metrics that are better suited for calculations.

Optimize response speed

When optimizing response speed, we are interested in two indicators: server response speed and page load time.

Loading time (Page Load Time) is made up of the following components:

- The time the server takes to generate the page;

- The time it takes to transfer the page from the server to the client;

- The time the client browser takes to process the page.

When everything works normally, Page Load Time is determined not so much by the server generation time and the network delivery time as by the quality of the front end and how fast the site runs in the browser. Since the latter happens entirely on the user's side, you need not fear slowdowns there on Black Friday. However, problems may arise from an overloaded Internet channel or from slow responses from external components (partner counters, online chats, CRM plug-ins, etc.).

How to deal with it? Here are some practical tips:

- Check the load of the Internet channel. Calculate the estimated growth. If in doubt, expand the channel. Some providers, in addition to expensive expansion on an ongoing basis, may offer you a temporary expansion for the period of the peak (much cheaper) or even allow you to briefly exceed the maximum speed.

- Do you use a CDN? Contact its technical support and warn them about the planned traffic growth. They, too, are preparing for the general peak, and your forecast will come in handy. If the CDN promises that everything will be fine but goes down anyway, having the correspondence will help you claim compensation.

- Work out in advance a scenario for temporarily switching off external components in case of problems. Agree the scenario with the business. It would not hurt to talk to the technical support of the services you use; there is usually no other way to influence them anyway.

- On many sites, static content is served through the application server. Under high load, the number of requests for static content grows many times over, and they begin to compete for resources with the application itself. Be sure to configure static content to be served directly from Nginx. First, it copes with this much better, and second, your Apache, Tomcat or Jetty threads will be freed up for much more useful work.

Improving the speed of response

In itself, optimizing response speed is part of the overall improvement of site performance. In theory, it reduces the amount of work done by the application and thus improves scaling: if each request becomes “cheaper”, you can handle more requests with the same resources.

But in practice, optimizing response speed requires a large amount of separate work. It is impossible to optimize everything at once, but breaking something in the process is easy.

Tip: think systemically. Suppose the performance of the code has increased, and the application has begun to make more simultaneous queries to the database. But here is the bad luck: the database cannot process that many requests, the site as a whole has only got worse, and the valuable time before the start of the promotion has been spent.

So it is better to focus on scaling and scalability, and to do general optimization separately from the preparation for Black Friday, so as not to make a mess of things under tight deadlines. Remember, our task now is to ensure that at peak load the site works no worse than it does off-peak.

The speed of page generation will be of interest to us only in conjunction with another indicator - the volume of incoming load.

Please note: what matters for a site is only the number of simultaneous requests created by users, not the number of users online. And it is quite difficult to derive the number of requests per second from the number of visitors with acceptable accuracy (I wrote about this above). It is better to ask the business for other metrics: the number of page views per hour and the server response time.

As a result, we will get a clear goal: to ensure the generation of the page in X time with the number of requests per second Y. Having specific numerical metrics in hand, it is much easier to assess the level of readiness and the current result.
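As a rough illustration of turning business metrics into such a target, the sketch below converts page views per hour into requests per second and then, using Little's law, into the average number of requests in flight. The function names, the number of dynamic requests per page and the sample figures are assumptions made for the example, not measurements from a real site.

```python
def requests_per_second(page_views_per_hour, requests_per_page=1.0):
    """Average rate of dynamic requests produced by the given page views."""
    return page_views_per_hour * requests_per_page / 3600.0


def requests_in_flight(rps, response_time_s):
    """Little's law: average concurrency = arrival rate * time in system."""
    return rps * response_time_s


# Hypothetical figures: 180,000 page views in the peak hour,
# 2 dynamic requests per page, 0.5 s average server response time.
rps = requests_per_second(180_000, requests_per_page=2)
print(rps)                           # 100.0
print(requests_in_flight(rps, 0.5))  # 50.0
```

These are hourly averages; traffic within the hour is uneven, so the real target Y should probably include a margin on top of the computed value.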

Here is a general plan for technical preparation:

- Find out the current figures (run load testing on the current version of the site);

- Understand what exactly is lacking and how many resources need to be added;

- Add resources;

- Repeat load testing and see what helped.

Looks too easy? You are right, each item hides many surprises.

Very often, adding resources improves the situation only partially and does not fully solve the problem. Or the site runs like clockwork in the test environment, but in production it is slow again.

Next, I will explain how to identify potential problems and fix weak spots. Let's start with how to conduct load testing and get a realistic result.

We carry out load testing correctly

Where to conduct tests?

Load tests are often run on the production system. This may be good for keeping an eye on the overall situation, but it is not suitable for iteratively solving specific problems. Remember that once the newly discovered problems are fixed, new ones usually surface. Silver bullets rarely hit the mark.

A failed load test can inconvenience the site's users or even “break” the site for a while. It is best to use a dedicated test environment as the guinea pig.

It must meet the following requirements:

- The test environment must be completely independent of and isolated from production;

- Ideally, the test environment should be full-size, matching production. A scaled-down model will also do, but it lowers the quality and representativeness of the tests. If the load on some resource grows nonlinearly (as it usually does), the model will not show that under full load the resource is exhausted earlier than expected;

- It is best to test on hardware identical to production (but not on the very same machines!). Otherwise, even if the quantities of resources match, their quality may not. This can play a cruel joke at the crucial moment. If identical hardware is not available, benchmark the test hardware under your load and determine a correction coefficient.

Now let's talk about how to generate a test load. I will give a few basic techniques, each of which has its pros and cons.

How to generate load?

1. Testing with requests from logs

You can emulate the traffic flow using the logs of production servers. The obvious advantage of this approach is that you do not have to bother with analytics, statistical modeling or a synthetic traffic profile.

But you will still have to clean the logs of requests that cannot or should not be replayed.

For example, you should not “buy” goods against the production database; this will cause problems with the product data in it.

It will be difficult to reproduce realistic delays between requests.

It is also extremely difficult to emulate user sessions, so this method is very close to hit-based testing.
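Cleaning the logs could look roughly like the sketch below. It assumes access logs in the common/combined format and keeps only successful GET requests whose paths do not start with a "dangerous" prefix. The prefix list, the function name and the sample lines are hypothetical; the real list of requests to exclude depends on your site.

```python
import re

# Matches the request part of a common/combined access log line,
# e.g. ... "GET /catalog/phones?page=2 HTTP/1.1" 200 ...
REQUEST_RE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) HTTP/[\d.]+" (?P<status>\d{3})'
)

# Hypothetical path prefixes that must never be replayed.
UNSAFE_PREFIXES = ("/cart", "/checkout", "/order", "/admin")


def replayable_paths(log_lines):
    """Yield paths of successful GET requests that are safe to replay."""
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if not match:
            continue  # not a request line we understand
        if match["method"] != "GET" or not match["status"].startswith("2"):
            continue  # skip writes, redirects and errors
        path = match["path"]
        if path.startswith(UNSAFE_PREFIXES):
            continue  # skip requests that would change data
        yield path


sample = [
    '192.0.2.1 - - [01/Jan/2000:00:00:01 +0000] "GET /catalog/phones HTTP/1.1" 200 5120',
    '192.0.2.1 - - [01/Jan/2000:00:00:02 +0000] "POST /cart/add HTTP/1.1" 302 0',
    '192.0.2.1 - - [01/Jan/2000:00:00:03 +0000] "GET /checkout HTTP/1.1" 200 900',
]
print(list(replayable_paths(sample)))  # ['/catalog/phones']
```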

2. Using Yandex.Tank and Phantom

Yandex.Tank paired with Phantom is a very convenient and popular tool for hit-based tests. It has a well-thought-out interface and lets you control the load. To start shelling from the Tank, you must prepare “ammunition”: special files containing requests for the generator.

But for all its convenience, the Tank has a major drawback: it cannot handle user sessions.

You can forget about authorization, full-fledged work with cookies and variable delays. The Tank can only “peck” the target with requests from a single address.

It will suit you if:

- There is no difference in server response time for authorized and unauthorized users, or it is negligible;

- You are testing an API that has no explicit HTTP sessions;

- This approach generally corresponds to the logic of your site (usually not suitable for online stores).

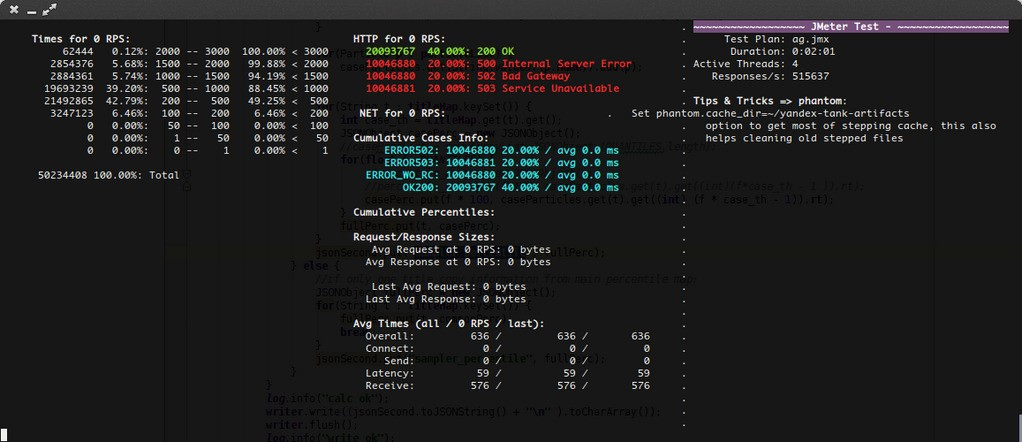

3. Using Apache JMeter

This is the most flexible tool, and it lets you emulate user sessions in detail. With it, you can finally answer the business's question, "How many users can the site sustain?" In addition, JMeter can work together with Yandex.Tank.

Its main drawback is how resource-hungry it is and how laborious test preparation is.

The main advice specific to JMeter: try not to make it parse page bodies; it is better to reproduce the logic of the sessions from previously prepared data sets. In general, it is best to minimize the work JMeter itself does. Like the Tank, it can be fed pre-generated “ammunition”. Here, too, you need to take into account the distribution of specific pages within one type, the variability of requests and so on.

What you do need to program in JMeter itself is the user behavior patterns. If the task is to test not only the server side but also the serving of static content, run that test separately with Phantom, simultaneously with the JMeter test if necessary. This will significantly reduce the resource consumption of the load generator and improve the reproducibility of the test.

Recommendations for load testing

The basis of good load testing is a competent analysis of traffic and high-quality preparation of a statistical model and profiles for emulation.

Identify the 5-7 main page types (do not forget landing pages for promotions) and work out what percentage of total traffic goes to each. Keep in mind, however, that a single page may involve several requests for dynamic content. For you, the page is the whole group of such requests. Analyze how much time users spend on each page type: the average, the typical minimum and the typical maximum.

If a page consists of several requests, take the delays between them into account. See how many pages a user usually visits per session and how that number is distributed. Identify the 5-10 most typical user paths by page type.

Using the data obtained, build the scenarios so that they accurately reproduce all the statistical parameters described. Do not forget about the variability of scenarios; they should differ in the number and composition of page transitions.

Within each page type, select individual addresses. The more, the better, but two or three thousand of the most popular addresses will be more than enough. From these, you can prepare lists of requests by adding each address to the list the required number of times.

If there are too many pages, divide them into several groups according to the percentage of traffic.

Profiles matter only for JMeter, but once you have built the request lists, you can also load them into the Tank as “ammunition”.
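Building such a request list could be sketched as follows. The page types, traffic shares, URLs and host name are made up for the example, and addresses within a type are drawn uniformly here, whereas a real list would weight them by popularity. The output is assumed to follow Yandex.Tank's URI-style ammo layout (bracketed headers followed by one URI per line); check the format against the Tank documentation before relying on it.

```python
import random


def build_request_list(urls_by_type, traffic_share, total, seed=0):
    """Pick `total` URLs so that page types appear in the given proportions."""
    rng = random.Random(seed)  # fixed seed keeps test runs reproducible
    requests = []
    for page_type, share in traffic_share.items():
        count = round(total * share)
        requests += rng.choices(urls_by_type[page_type], k=count)
    rng.shuffle(requests)  # mix page types instead of sending them in blocks
    return requests


def to_uri_ammo(requests, host):
    """Render the list as headers in brackets, then one URI per line."""
    lines = [f"[Host: {host}]", "[Connection: close]"]
    return "\n".join(lines + requests) + "\n"


# Hypothetical site structure and traffic distribution.
urls = {
    "home": ["/"],
    "catalog": ["/catalog/phones", "/catalog/tv"],
    "product": ["/p/1", "/p/2", "/p/3"],
}
share = {"home": 0.2, "catalog": 0.3, "product": 0.5}

reqs = build_request_list(urls, share, total=10)
print(len(reqs))  # 10
print(to_uri_ammo(reqs, "shop.example"))
```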

And once again: when using JMeter in user emulation mode, do not forget the delays between requests. If you do not add them, the generated load will exceed the planned one many times over! After a trial run, be sure to check against the web server logs that the emulated traffic matches production traffic.

The height of skill is to prepare in advance scripts that bring the site database to the required state. Usually, data prepared as described above works only with the database state for which the product information was collected. Reloading a SQL dump every time may take prohibitively long. In addition, it is highly desirable to learn to manage running promotions in the test environment with scripts. They often affect how the system behaves, and you need to understand which set of active promotions is being tested and how.
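The effect of missing delays is easy to estimate with simple arithmetic. In the sketch below, each virtual user sends a request, waits for the response and then pauses for a "think time"; the function name and all figures are hypothetical and serve only to show the order of magnitude.

```python
def generated_rps(virtual_users, response_time_s, think_time_s=0.0):
    """Closed-loop load: each user sends a request, waits for the reply, then pauses."""
    return virtual_users / (response_time_s + think_time_s)


# Hypothetical scenario: 500 virtual users, 0.25 s server response time.
print(generated_rps(500, 0.25, think_time_s=9.75))  # 50.0
print(generated_rps(500, 0.25))                     # 2000.0
```

With the same number of virtual users, dropping the pause raises the load from 50 to 2000 requests per second in this example, a forty-fold difference.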

All is ready? Great, full speed ahead!

We use monitoring in tests

So, we ran the tests properly and got the results. If all is well and your site copes with the high load, then no Black Friday can scare you. But this article would be incomplete if we did not consider the opposite case.

Imagine that, at an acceptable speed, the site can handle... well, say, one fifth of what the business wants. Should you call the hosting provider in a panic and order five times more capacity?

In principle, the hosting provider will like this approach. You may even get gold client status.

But before you act recklessly, let's try to figure out what exactly went wrong.

Your lifeline in the sea of possible causes is a monitoring system.

So let's take a step back and, before testing, set up as many monitoring probes as possible. Ideally, you need to monitor all exhaustible resources.

Below is a list that will help you get started:

- CPU load (CPU Usage, CPU Load);

- RAM usage;

- Disk load (IOPS, Latency);

- Number of network connections (Time wait, Fin wait, Close wait, Established);

- The number of open sockets;

- The number of user processes;

- The number of open files;

- Network traffic (in megabits and in packets, plus errors and drops).

And:

- The number of requests, responses and connections to the database and other components;

- Component response speed (database, search server, caches, etc.);

- All available logs for errors.

For all these parameters, you should know the available limits. This lets you see during load testing exactly where the bottleneck formed. Do not forget that most of these “resources” are configurable, and some are determined entirely by settings.

Perhaps a couple of minor tweaks will be enough for the figures to improve.

In some places you need qualitative rather than quantitative changes: for example, replacing HDDs with SSDs. A number of tips on optimizing and scaling web applications, as well as improving resiliency, can be found in my previous article.

Also, before ordering resources, I recommend comparing the graph of incoming load growth with the graph of resource utilization. The relationship between them will not necessarily be linear.

Another tip: remember, we said that users cannot wait long? If you and the business have agreed that a page generation time of more than, say, 2 seconds is absolutely unacceptable and such a user can be considered lost, set a timeout for connecting to the backend (for example, 0.5 seconds) and allow 1.5 seconds for the response. If loading takes longer, you can give the user an error page or a stub along the lines of "Oops, try again."

On the backend, you can set the maximum wait for the database to 1 second, and so on. This mechanism keeps you from piling load onto a component that cannot keep up. Accordingly, you will reduce the overall load on the system and protect it from prolonged overload and failure. Ultimately, the tactic of providing quality service (rather than trying to give an answer at any cost) significantly reduces the number of users who run into the problem.
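Once peak values and limits are known, finding the resource closest to exhaustion is mechanical. The sketch below is one possible way to rank them; the metric names, peak values and limits are invented for the example and are not tied to any particular monitoring system.

```python
def utilization_report(samples, limits):
    """Return (resource, utilization) pairs sorted from most to least exhausted."""
    report = [
        (name, samples[name] / limits[name])
        for name in samples
        if name in limits  # skip metrics with no known limit
    ]
    return sorted(report, key=lambda item: item[1], reverse=True)


# Hypothetical peak values from a load test and the configured limits.
peak = {
    "cpu_percent": 55,
    "open_files": 980,
    "db_connections": 95,
    "established_conns": 12000,
}
limits = {
    "cpu_percent": 100,
    "open_files": 1024,
    "db_connections": 100,
    "established_conns": 65000,
}

for name, used in utilization_report(peak, limits):
    print(f"{name}: {used:.0%}")
```

In this made-up data set the CPU is only about half loaded, while open files and database connections are almost at their limits, so adding processors would not help.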
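The idea of answering with a stub instead of waiting indefinitely can be sketched in a few lines. This is only an illustration under assumptions: the function names and the stub text are hypothetical, and in a real deployment the timeouts would more likely be set in the web server, proxy or database driver. Note also that a Python thread cannot be forcibly stopped, so a timed-out call keeps occupying a worker until it finishes.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout

# Shared pool; a timed-out call still occupies a worker until it completes.
_pool = ThreadPoolExecutor(max_workers=8)

STUB_PAGE = "Oops, try again."


def render_with_deadline(render, deadline_s=1.5, stub=STUB_PAGE):
    """Return render() if it finishes within deadline_s seconds, else the stub."""
    future = _pool.submit(render)
    try:
        return future.result(timeout=deadline_s)
    except FutureTimeout:
        future.cancel()  # only has an effect if the call has not started yet
        return stub


def slow_render():
    time.sleep(0.3)  # simulates an overloaded backend
    return "<html>catalog</html>"


print(render_with_deadline(lambda: "<html>ok</html>"))    # <html>ok</html>
print(render_with_deadline(slow_render, deadline_s=0.05))  # Oops, try again.
```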

We order equipment

After you have discovered the zones that need to be scaled, you can order equipment.

If you order a lot of physical hardware, keep the delivery time in mind. It will be about 2-3 months, plus another month for testing, installation and configuration.

When ordering a lot of resources in the cloud, remember: you are not the only one planning to get through Black Friday there. Warn the hosting provider a few months in advance, so that there is sure to be enough capacity for you. Clouds are elastic, of course, but when everyone shows up at once, things can get awkward.

It would be even more far-sighted to order processor capacity in the cloud with some margin. During the promotion period, many providers will see higher utilization of their physical resources. They are unlikely to go beyond the limits set out in the SLA, but the hardware may run somewhat slower than it did during testing in the “quiet” period.

Do not forget about information security tools. Few people run them in the test environment as well as in production, yet they, too, may run short of resources.

Do not forget that any intervention in the operation of the system, even the most useful one, can shake its stability. Do not put off adding resources until the last day; leave yourself at least a couple of weeks to check everything and catch the last minor bugs.

After upgrading, you need to repeat the tests and make sure that the site holds the load.

First, adding resources may be an iterative process (new needs may emerge), and second, the human factor has not gone away. Even an experienced technician can slip up during installation or get the calculations wrong.

Perhaps that's all. We looked at the most important aspects of preparing web applications for a large volume of traffic, popular load testing methods and ways to identify bottlenecks at this stage.

If you have any questions, I invite you to ask them not only in the comments, but also in person, at my seminar “Black Friday in e-commerce. Secrets of Survival”, which will be held on August 16 in Moscow. Sign up for the seminar here.