Almost everything you wanted to know about the floating point in ARM, but were afraid to ask

Hi, Habr! In this article I want to talk about working with floating point on processors with the ARM architecture. I think it will be useful primarily for those who are porting their OS to ARM and need hardware floating-point support (which is what we did for Embox, which previously used a software implementation of floating-point operations). So let's get started.

Compiler flags

To support floating point, you must pass the correct flags to the compiler. A quick search suggests that two options are especially important: -mfloat-abi and -mfpu. The -mfloat-abi option sets the floating-point ABI and can take one of three values: 'soft', 'softfp' and 'hard'. The 'soft' value, as the name implies, tells the compiler to call library routines that implement floating point in software (this is the variant we used before). The remaining two, 'softfp' and 'hard', will be covered a little later, after we look at the -mfpu option.

-mfpu flag and VFP version

The -mfpu option, as described in the online GCC documentation, specifies the type of floating-point hardware and can take the following values:

'auto', 'vfpv2', 'vfpv3', 'vfpv3-fp16', 'vfpv3-d16', 'vfpv3-d16-fp16', 'vfpv3xd', 'vfpv3xd-fp16', 'neon-vfpv3', 'neon-fp16', 'vfpv4', 'vfpv4-d16', 'fpv4-sp-d16', 'neon-vfpv4', 'fpv5-d16', 'fpv5-sp-d16', 'fp-armv8', 'neon-fp-armv8' and 'crypto-neon-fp-armv8'. In addition, 'neon' is an alias for 'neon-vfpv3', and 'vfp' is an alias for 'vfpv2'.

My compiler (arm-none-eabi-gcc (15:5.4.1+svn241155-1) 5.4.1 20160919) prints a slightly different list, but that does not change the essence of the matter. In any case, we need to understand how each flag affects the compiler and, of course, which flag should be used when.

I started with a platform based on the i.MX6 processor, but set it aside for a while, because its NEON coprocessor has peculiarities that I will discuss later. Let's begin with a simpler case: the Integrator/CP platform.

I do not have the board itself, so debugging was done in the QEMU emulator. In QEMU, the Integrator/CP platform is based on the ARM926EJ-S processor, which in turn supports the VFP9-S coprocessor. This coprocessor conforms to the Vector Floating-point Architecture version 2 (VFPv2). Accordingly, you need to pass -mfpu=vfpv2, but my compiler's list of options did not contain that value. On the Internet I came across a build that used the flags -mcpu=arm926ej-s -mfpu=vfpv3-d16; I set them and everything compiled. At startup I got an undefined instruction exception, which was predictable, because the coprocessor was turned off.

To enable the coprocessor, you need to set the EN bit [30] in the FPEXC register. This is done with the VMSR instruction.

/* Enable FPU extensions */
asm volatile ("VMSR FPEXC, %0" : : "r" (1 << 30));

In general, the VMSR instruction is handled by the coprocessor and raises an exception if the coprocessor is not enabled, but accessing this particular register does not raise one. On the other hand, unlike the other registers, this one can be accessed only in privileged mode.

After the coprocessor was enabled, our tests for mathematical functions began to pass. But when I turned on optimization (-O2), the undefined instruction exception mentioned earlier came back. This time it was raised by a vmov instruction, even though vmov had appeared earlier in the code and executed successfully (without an exception). Finally, at the bottom of the documentation page I found the phrase “The instructions for copying constants are available in VFPv3” (that is, operations with constants are supported starting with VFPv3). So I decided to check which version my emulator implements. The version is recorded in the FPSID register. According to the documentation, the register value should be 0x41011090. This corresponds to 1 in the architecture field [19..16], that is, VFPv2. Indeed, printing the register at startup gave the following:

unit: initializing embox.arch.arm.fpu.vfp9_s:
VPF info:
Hardware FP support
Implementer = 0x41 (ARM)
Subarch: VFPv2
Part number = 0x10
Variant = 0x09
Revision = 0x00

After reading carefully that 'vfp' is an alias for 'vfpv2', I set the correct flag and everything worked. Going back to the page where I had seen the combination -mcpu=arm926ej-s -mfpu=vfpv3-d16, I noticed that I had not been attentive enough: the list of flags there also included -mfloat-abi=soft. That is, there is no hardware support in that case. More precisely, -mfpu matters only if -mfloat-abi is set to something other than 'soft'.
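The FPSID check described above can be sketched in C as follows. This is only an illustration: the struct and function names are made up, and the field positions follow the layout quoted in the text (architecture in bits [19:16]) together with the values from the printout.

```c
#include <assert.h>
#include <stdint.h>

/* Decoded FPSID fields (hypothetical type, for illustration only). */
struct fpsid_info {
    uint8_t implementer;  /* bits [31:24], 0x41 means ARM */
    uint8_t subarch;      /* bits [19:16], 1 means VFPv2 */
    uint8_t part_number;  /* bits [15:8] */
    uint8_t variant;      /* bits [7:4] */
    uint8_t revision;     /* bits [3:0] */
};

/* Split a raw FPSID value into its fields. */
struct fpsid_info fpsid_decode(uint32_t fpsid)
{
    struct fpsid_info info;

    info.implementer = (uint8_t)((fpsid >> 24) & 0xff);
    info.subarch     = (uint8_t)((fpsid >> 16) & 0x0f);
    info.part_number = (uint8_t)((fpsid >> 8) & 0xff);
    info.variant     = (uint8_t)((fpsid >> 4) & 0x0f);
    info.revision    = (uint8_t)(fpsid & 0x0f);
    return info;
}

/* Usage example with the value expected for VFP9-S. */
void fpsid_example(void)
{
    struct fpsid_info info = fpsid_decode(UINT32_C(0x41011090));

    assert(info.implementer == 0x41);
    assert(info.subarch == 1); /* VFPv2, so -mfpu=vfp is the right choice */
}
```

On a real target the raw value would come from a `vmrs` read of FPSID; here it is passed in as a plain integer so the decoding can be checked anywhere.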

Assembler

It's time to talk about assembly language. After all, I also had to implement runtime support: the compiler, for example, knows nothing about context switching.

Registers

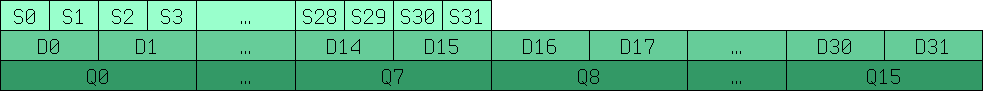

Let's start with a description of the registers. VFP lets you operate on 32-bit (s0..s31) and 64-bit (d0..d15) floating-point numbers. The correspondence between these registers is shown in the picture above.

Q0-Q15 are 128-bit registers from later versions, used for SIMD; more on them a bit later.
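To make the overlap between the s and d registers concrete, here is a small software model of the register bank (it does not touch real hardware). It assumes the usual mapping in which d<n> overlays s<2n> as its low half and s<2n+1> as its high half; the type and function names are invented for this sketch.

```c
#include <assert.h>
#include <stdint.h>

/* Software model of the VFP register bank as 32 single-precision slots. */
struct vfp_bank {
    uint32_t s[32];
};

/* Read d<n>: s<2n> is the low word, s<2n+1> is the high word. */
uint64_t vfp_read_d(const struct vfp_bank *bank, unsigned n)
{
    assert(n < 16);
    return ((uint64_t)bank->s[2 * n + 1] << 32) | bank->s[2 * n];
}

/* Write d<n>, which overwrites both overlapping s registers. */
void vfp_write_d(struct vfp_bank *bank, unsigned n, uint64_t value)
{
    assert(n < 16);
    bank->s[2 * n]     = (uint32_t)value;
    bank->s[2 * n + 1] = (uint32_t)(value >> 32);
}
```

In this model, writing 0x1122334455667788 to d1 changes s2 to 0x55667788 and s3 to 0x11223344, which is why saving d0-d15 in a context switch also covers s0-s31.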

Instruction set

Of course, most of the work with VFP registers should be left to the compiler, but at the very least you have to write the context switch by hand. If you already have a rough understanding of the assembler syntax for general-purpose registers, the new instructions should be easy to pick up. Most often, the prefix “v” is simply added.

vmov d0, r0, r1 /* r0 and r1 are both given because d0 holds 64 bits, while r0 and r1 hold only 32 each */
vmov r0, r1, d0
vadd d0, d1, d2
vldr d0, [r0]
vstm r0!, {d0-d15}
vldm r0!, {d0-d15}

And so on. A complete list of instructions can be found on the ARM website.

And of course you should not forget about the version of VFP, so that situations like the one described above do not arise.

-mfloat-abi flag: 'softfp' and 'hard'

Let's go back to -mfloat-abi. If we read the documentation, we will see:

'softfp' allows the generation of code using hardware floating-point instructions, but still uses the soft-float calling conventions. 'hard' allows generation of floating-point instructions and uses FPU-specific calling conventions.

That is, we are talking about how arguments are passed to a function. But to me, at least, the difference between “soft-float” and “FPU-specific” calling conventions was not very clear. I assumed that the hard case uses floating-point registers and the softfp case uses integer registers, and I found confirmation of this on the Debian wiki. Although that page is about NEON coprocessors, it makes no difference here. Another interesting point is that with softfp the compiler may, but does not have to, use hardware support:

“... emulated or real FPU instructions depending on chosen FPU type (-mfpu=)”

For clarity I decided to experiment, and I was very surprised: with optimization turned off (-O0) the difference was very small and did not touch the places where floating point was actually used. Guessing that the compiler simply puts everything on the stack and does not use registers, I turned on -O2 and was surprised again: with optimization the compiler started using the hardware floating-point registers for both hard and softfp, and the difference, as in the -O0 case, was very small. In the end, I explained it to myself this way: copying data between floating-point and integer registers costs a lot of performance, so when optimizing, the compiler avoids it and starts using all the resources at its disposal.

As for which flag to use, 'softfp' or 'hard', I settled on the following answer: wherever there are no parts already compiled with 'softfp', use 'hard'. If there are such parts, you have to use 'softfp'.
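To see the two conventions side by side, a tiny function like the one below can be compiled twice and the disassembly compared. This is only a sketch: the function names are made up, and the comments describe what the ARM procedure call standard specifies for each -mfloat-abi value. The macros `__ARM_PCS_VFP` and `__SOFTFP__` are, as far as I know, predefined by GCC for the hard ABI and for pure software floating point respectively.

```c
#include <assert.h>

/* With -mfloat-abi=softfp the arguments arrive in r0 and r1 and the
 * result goes back in r0, even if the body uses VFP instructions.
 * With -mfloat-abi=hard they arrive in s0 and s1 and the result is in s0. */
float scale(float x, float k)
{
    return x * k;
}

/* Report which floating-point ABI this file was compiled for. */
const char *float_abi_name(void)
{
#if defined(__ARM_PCS_VFP)
    return "hard";
#elif defined(__SOFTFP__)
    return "soft";
#elif defined(__arm__)
    return "softfp";
#else
    return "not 32-bit ARM";
#endif
}

/* Usage example. */
void scale_example(void)
{
    assert(scale(2.0f, 3.0f) == 6.0f);
    assert(float_abi_name() != 0);
}
```

Building this with something like `arm-none-eabi-gcc -mcpu=arm926ej-s -mfpu=vfp -O2 -S` once with `-mfloat-abi=softfp` and once with `-mfloat-abi=hard` should show `vmov` transfers between core and VFP registers around the multiplication only in the softfp variant.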

Context switch

Since Embox supports preemptive multitasking, a context switch had to be implemented for things to work correctly at runtime. To do this, the coprocessor registers must be saved. There are a couple of nuances. First, it turned out that the floating-point load/store multiple instructions (vstm/vldm) do not support all addressing modes. Second, these instructions cannot handle more than sixteen 64-bit registers at once. If you need to load or save more registers, you have to use two instructions.

I will also mention one small optimization. Saving and restoring 256 bytes of VFP registers on every switch is not always necessary (the general-purpose registers occupy only 64 bytes, so the difference is significant). The obvious optimization is to perform these operations only if the process actually uses these registers.

As I already mentioned, when the VFP coprocessor is turned off, an attempt to execute a VFP instruction results in an “Undefined Instruction” exception. In the handler for this exception, you need to check what caused it, and if the cause is the use of the VFP coprocessor, the process is marked as using VFP.
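The scheme just described can be modelled in plain C. What follows is a software simulation under stated assumptions, not Embox code: the structures, the function names and the idea of keeping the "uses VFP" mark in the saved FPEXC value (its EN bit) are all chosen for this sketch.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define FPEXC_EN (UINT32_C(1) << 30)

/* Simulated coprocessor state: FPEXC plus d0-d15. */
struct vfp_hw {
    uint32_t fpexc;
    uint64_t d[16];
};

/* Per-thread saved context. */
struct thread_ctx {
    uint32_t fpexc; /* EN is set once the thread has used VFP */
    uint64_t d[16]; /* meaningful only when EN is set in fpexc */
};

/* Undefined-instruction path: the faulting instruction was a VFP one,
 * so enable the coprocessor; the instruction is then retried. */
void vfp_undef_handler(struct vfp_hw *hw)
{
    hw->fpexc |= FPEXC_EN;
}

/* Save: always store FPEXC, store d0-d15 only if VFP is enabled. */
void vfp_context_save(const struct vfp_hw *hw, struct thread_ctx *t)
{
    t->fpexc = hw->fpexc;
    if (hw->fpexc & FPEXC_EN) {
        memcpy(t->d, hw->d, sizeof(t->d));
    }
}

/* Load: restore FPEXC first, then the registers if the thread used VFP. */
void vfp_context_load(struct vfp_hw *hw, const struct thread_ctx *t)
{
    hw->fpexc = t->fpexc;
    if (t->fpexc & FPEXC_EN) {
        memcpy(hw->d, t->d, sizeof(hw->d));
    }
}

/* Usage example: thread A uses VFP, thread B never does. */
void vfp_lazy_example(void)
{
    struct vfp_hw hw = {0};
    struct thread_ctx a = {0}, b = {0};

    vfp_undef_handler(&hw);     /* A traps on its first VFP instruction */
    hw.d[0] = 42;
    vfp_context_save(&hw, &a);
    vfp_context_load(&hw, &b);  /* B runs with the coprocessor disabled */
    assert((hw.fpexc & FPEXC_EN) == 0);
    vfp_context_save(&hw, &b);
    vfp_context_load(&hw, &a);  /* A gets its registers back */
    assert(hw.d[0] == 42);
}
```

The point of the design is that a thread which never touches VFP pays only for one saved word, while a thread that does use it gets the coprocessor re-enabled together with its registers.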

As a result, the existing context save/restore code was extended with the following macros:

#define ARM_FPU_CONTEXT_SAVE_INC(tmp, stack) \
    vmrs tmp, FPEXC ; \
    stmia stack!, {tmp}; \
    ands tmp, tmp, #1<<30; \
    beq fpu_out_save_inc; \
    vstmia stack!, {d0-d15}; \
fpu_out_save_inc:

#define ARM_FPU_CONTEXT_LOAD_INC(tmp, stack) \
    ldmia stack!, {tmp}; \
    vmsr FPEXC, tmp; \
    ands tmp, tmp, #1<<30; \
    beq fpu_out_load_inc; \
    vldmia stack!, {d0-d15}; \
fpu_out_load_inc:

To check that context switching works correctly with floating point, we wrote a test in which one thread repeatedly multiplies, another repeatedly divides, and the results are then compared.

EMBOX_TEST_SUITE("FPU context consistency test. Must be compiled with -02");

#define TICK_COUNT 10

static float res_out[2][TICK_COUNT];

/* Thread 1: repeatedly divides, so its results must decrease. */
static void *fpu_context_thr1_hnd(void *arg) {
    float res = 1.0f;
    int i;

    for (i = 0; i < TICK_COUNT; ) {
        res_out[0][i] = res;
        if (i == 0 || res_out[1][i - 1] > 0) {
            i++;
        }
        if (res > 0.000001f) {
            res /= 1.01f;
        }
        sleep(0);
    }
    return NULL;
}

/* Thread 2: repeatedly multiplies, so its results must increase. */
static void *fpu_context_thr2_hnd(void *arg) {
    float res = 1.0f;
    int i = 0;

    for (i = 0; i < TICK_COUNT; ) {
        res_out[1][i] = res;
        if (res_out[0][i] != 0) {
            i++;
        }
        if (res < 1000000.f) {
            res *= 1.01f;
        }
        sleep(0);
    }
    return NULL;
}

TEST_CASE("Test FPU context consistency") {
    pthread_t threads[2];
    pthread_t tid = 0;
    int status;

    status = pthread_create(&threads[0], NULL, fpu_context_thr1_hnd, &tid);
    if (status != 0) {
        test_assert(0);
    }
    status = pthread_create(&threads[1], NULL, fpu_context_thr2_hnd, &tid);
    if (status != 0) {
        test_assert(0);
    }

    pthread_join(threads[0], (void**)&status);
    pthread_join(threads[1], (void**)&status);

    test_assert(res_out[0][0] != 0 && res_out[1][0] != 0);
    for (int i = 1; i < TICK_COUNT; i++) {
        test_assert(res_out[0][i] < res_out[0][i - 1]);
        test_assert(res_out[1][i] > res_out[1][i - 1]);
    }
}

The test passed successfully with optimization turned off, presumably because without optimization the values live on the stack rather than in VFP registers. That is why we stated in the test description that it must be compiled with optimization, EMBOX_TEST_SUITE("FPU context consistency test. Must be compiled with -02"); although we know that tests should not rely on this.

NEON and SIMD coprocessor

It's time to explain why I postponed the story about i.MX6. The point is that it is based on the Cortex-A9 core and contains the more advanced NEON coprocessor (https://developer.arm.com/technologies/neon). NEON is not only VFPv3 but also a SIMD coprocessor. VFP and NEON share the same registers. VFP works with 32-bit and 64-bit registers, while NEON works with 64-bit and 128-bit registers; the latter are exactly the ones designated Q0-Q15. In addition to integers and floating-point numbers, NEON can also work with 8-bit and 16-bit polynomials with coefficients modulo 2.

The VFP mode of NEON is almost the same as that of the VFP9-S coprocessor discussed above. Of course, it is better to pass vfpv3 or vfpv3-d32 to -mfpu for better optimization, since this coprocessor has 32 64-bit registers. And to enable the coprocessor, you must grant access to coprocessors cp10 and cp11. This is done with the following commands:

/* Allow access to cp10 & cp11 coprocessors */
asm volatile ("mrc p15, 0, %0, c1, c0, 2" : "=r" (val) :);
val |= 0xf << 20;
asm volatile ("mcr p15, 0, %0, c1, c0, 2" : : "r" (val));

There are no other fundamental differences.

It is a different matter if you specify -mfpu=neon: in that case the compiler can use SIMD instructions.

Using SIMD in C

To pack values into registers by hand, you can include arm_neon.h and use the appropriate data types: float32x4_t for four 32-bit floats in one register, uint8x8_t for eight 8-bit integers, and so on. An individual element is accessed as in an array, while addition, multiplication, assignment and so on work as for ordinary variables, for example:

uint32x4_t a = {1, 2, 3, 4}, b = {5, 6, 7, 8};
uint32x4_t c = a * b;
printf("Result=[%d, %d, %d, %d]\n", c[0], c[1], c[2], c[3]);

Of course, automatic vectorization is easier to use. To enable it, add the -ftree-vectorize flag to GCC.

void simd_test() {
    int a[LEN], b[LEN], c[LEN];

    for (int i = 0; i < LEN; i++) {
        a[i] = i;
        b[i] = LEN - i;
    }
    for (int i = 0; i < LEN; i++) {
        c[i] = a[i] + b[i];
    }
    for (int i = 0; i < LEN; i++) {
        printf("c[i] = %d\n", c[i]);
    }
}

The addition loop generates the following code:

600059a0: f4610adf vld1.64 {d16-d17}, [r1 :64]
600059a4: e2833010 add r3, r3, #16
600059a8: e28d0a03 add r0, sp, #12288 ; 0x3000
600059ac: e2811010 add r1, r1, #16
600059b0: f4622adf vld1.64 {d18-d19}, [r2 :64]
600059b4: e2822010 add r2, r2, #16
600059b8: f26008e2 vadd.i32 q8, q8, q9
600059bc: ed430b04 vstr d16, [r3, #-16]
600059c0: ed431b02 vstr d17, [r3, #-8]
600059c4: e1530000 cmp r3, r0
600059c8: 1afffff4 bne 600059a0 <foo+0x58>
600059cc: e28d5dbf add r5, sp, #12224 ; 0x2fc0
600059d0: e2444004 sub r4, r4, #4
600059d4: e285503c add r5, r5, #60 ; 0x3c

Our tests of vectorized code showed that a simple addition in a loop, provided the variables are independent, runs as much as 7 times faster. We also wanted to see how vectorization affects real workloads, so we took Mesa3D with its software rendering and measured the frame rate with different flags. The gain turned out to be 2 frames per second (15 vs. 13), that is, a speedup of about 15-20%.
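For comparison, the vectorized loop from the listing above could also be written by hand with NEON intrinsics. This is a sketch rather than what the compiler generated: the function name `add_i32` is made up, and the NEON path is compiled only when the compiler defines `__ARM_NEON`; elsewhere the scalar loop does all the work.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#if defined(__ARM_NEON)
#include <arm_neon.h>
#endif

/* c[i] = a[i] + b[i] for n elements. */
void add_i32(int32_t *c, const int32_t *a, const int32_t *b, size_t n)
{
    size_t i = 0;

#if defined(__ARM_NEON)
    /* Four 32-bit lanes per iteration, like vadd.i32 q8, q8, q9. */
    for (; i + 4 <= n; i += 4) {
        int32x4_t va = vld1q_s32(&a[i]);
        int32x4_t vb = vld1q_s32(&b[i]);
        vst1q_s32(&c[i], vaddq_s32(va, vb));
    }
#endif
    /* Scalar loop: the tail, or everything when NEON is unavailable. */
    for (; i < n; i++) {
        c[i] = a[i] + b[i];
    }
}

/* Usage example: a length that is not a multiple of four. */
void add_i32_example(void)
{
    int32_t a[7], b[7], c[7];

    for (int i = 0; i < 7; i++) {
        a[i] = i;
        b[i] = 7 - i;
    }
    add_i32(c, a, b, 7);
    for (int i = 0; i < 7; i++) {
        assert(c[i] == 7);
    }
}
```

The explicit tail loop is the main thing the hand-written version has to get right; the auto-vectorizer takes care of it for you.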

Here is one more example of a speedup from NEON instructions, this time not ours but from ARM.

Memory copying is accelerated by almost 50 percent compared to the ordinary version. Admittedly, the examples there are in assembly.

Ordinary copy loop:

WordCopy
    LDR r3, [r1], #4
    STR r3, [r0], #4
    SUBS r2, r2, #4
    BGE WordCopy

Loop with NEON instructions and registers:

NEONCopyPLD
    PLD [r1, #0xC0]
    VLDM r1!,{d0-d7}
    VSTM r0!,{d0-d7}
    SUBS r2,r2,#0x40
    BGE NEONCopyPLD

It is clear that copying 64 bytes per iteration is faster than copying 4, and that alone gives a gain of about 10%; the remaining 40% seems to come from the coprocessor itself.

Cortex-M

Working with the FPU on Cortex-M is not much different from what is described above. For example, this is what the macro for saving the FPU context looks like there:

#define ARM_FPU_CONTEXT_SAVE_INC(tmp, stack) \
    ldr tmp, =CPACR; \
    ldr tmp, [tmp]; \
    tst tmp, #0xF00000; \
    beq fpu_out_save_inc; \
    vstmia stack!, {s0-s31}; \
fpu_out_save_inc:

As you can see, the vstmia instruction uses only the registers s0-s31, and the control registers are accessed differently. So I will not go into much detail and will explain only the differences. We added support for STM32F7Discovery with a Cortex-M7, for which you need to set the flag -mfpu=fpv5-sp-d16. Note that on microcontrollers you need to look at the coprocessor version even more closely, since the same Cortex-M core may come in different variants. If you have a single-precision variant rather than a double-precision one, the D0-D15 registers may be absent, as on our STM32F4Discovery, which is why the S0-S31 form is used. For that controller we use -mfpu=fpv4-sp-d16.

The main difference is in how the control registers are accessed: they are located directly in the address space of the main core, and they differ between core types, for example between Cortex-M4 and Cortex-M7.
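Because the control registers are memory-mapped, enabling the FPU on Cortex-M comes down to an ordinary read-modify-write through a pointer. Below is a minimal sketch of that idea; the function and macro names are made up, and the address 0xE000ED88 for CPACR is my assumption based on the ARMv7-M System Control Block layout, so check it against the reference manual for your core. The functions take the register address as a parameter, which also makes them easy to try out on a fake register.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Assumed CPACR address in the System Control Block (ARMv7-M). */
#define CORTEX_M_CPACR_ADDR  UINT32_C(0xE000ED88)
/* Full access to CP10 and CP11: bits [23:20]. */
#define CPACR_CP10_CP11_FULL (UINT32_C(0xF) << 20)

/* Grant access to the FPU coprocessors. */
void cortex_m_fpu_enable(volatile uint32_t *cpacr)
{
    *cpacr |= CPACR_CP10_CP11_FULL;
}

/* Same check as the "tst tmp, #0xF00000" in the macro above. */
bool cortex_m_fpu_enabled(const volatile uint32_t *cpacr)
{
    return (*cpacr & CPACR_CP10_CP11_FULL) != 0;
}

/* Usage example on a fake register instead of real hardware. */
void cortex_m_fpu_example(void)
{
    uint32_t fake_cpacr = 0;

    assert(!cortex_m_fpu_enabled(&fake_cpacr));
    cortex_m_fpu_enable(&fake_cpacr);
    assert(fake_cpacr == UINT32_C(0x00F00000));
}
```

On a real target the call would look like `cortex_m_fpu_enable((volatile uint32_t *)CORTEX_M_CPACR_ADDR);`, normally followed by barrier instructions before the first FPU instruction is executed.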

Conclusion

This is where I will finish my short story about floating point on ARM. I will note that modern microcontrollers are very powerful and are suitable not only for control tasks, but also for processing signals and various kinds of multimedia data. To use all this power effectively, you need to understand how it works. I hope this article has helped you understand it a little better.