MIT course "Computer Systems Security". Lecture 7: "Native Client Sandbox", part 1

Massachusetts Institute of Technology. Lecture course #6.858 "Computer Systems Security". Nickolai Zeldovich, James Mickens. 2014.

Computer Systems Security is a course on the development and implementation of secure computer systems. Lectures cover threat models, attacks that compromise security, and security methods based on the latest scientific work. Topics include operating system (OS) security, capabilities, information flow control, language security, network protocols, hardware protection and security in web applications.

Lecture 1: “Introduction: threat models” Part 1 / Part 2 / Part 3

Lecture 2: “Control Hijacking Attacks” Part 1 / Part 2 / Part 3

Lecture 3: “Buffer overflow: exploits and protection” Part 1 / Part 2 / Part 3

Lecture 4: “Privilege Separation” Part 1 / Part 2 / Part 3

Lecture 5: “Where Security System Errors Come From” Part 1 / Part 2

Lecture 6: “Capabilities” Part 1 / Part 2 / Part 3

Lecture 7: "Native Client Sandbox" Part 1 / Part 2 / Part 3

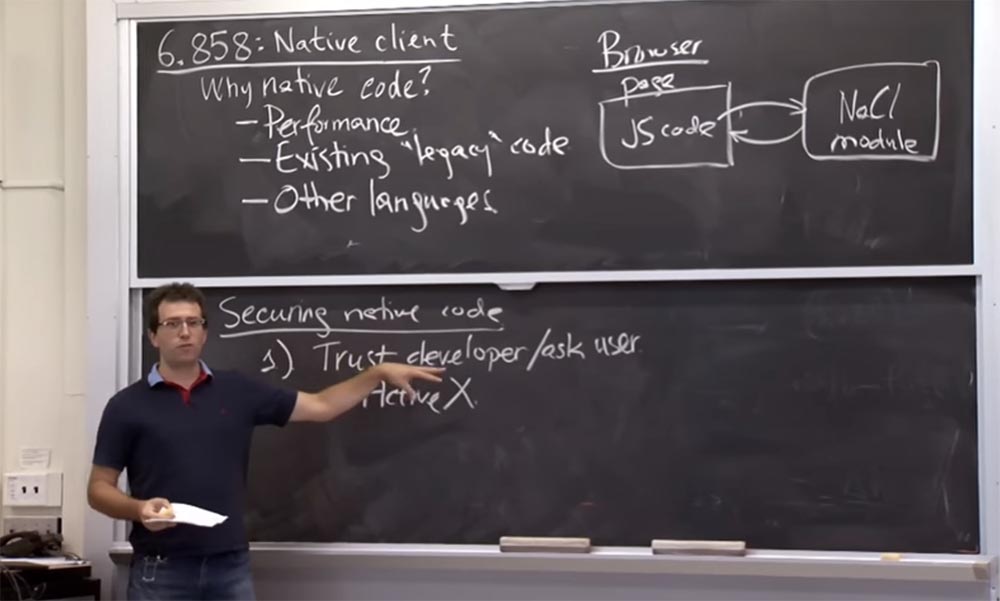

Today we will talk about a system called Native Client, which Google uses in the real world. It is a sandboxing technology for running native code on different platforms. It is used in the Chrome browser, allowing web applications to run arbitrary machine code. This is actually a pretty cool system. It also illustrates isolation capabilities and a peculiar sandboxing, or privilege separation, technique called “software fault isolation”, which creates a sandbox without relying on the operating system or a virtual machine.

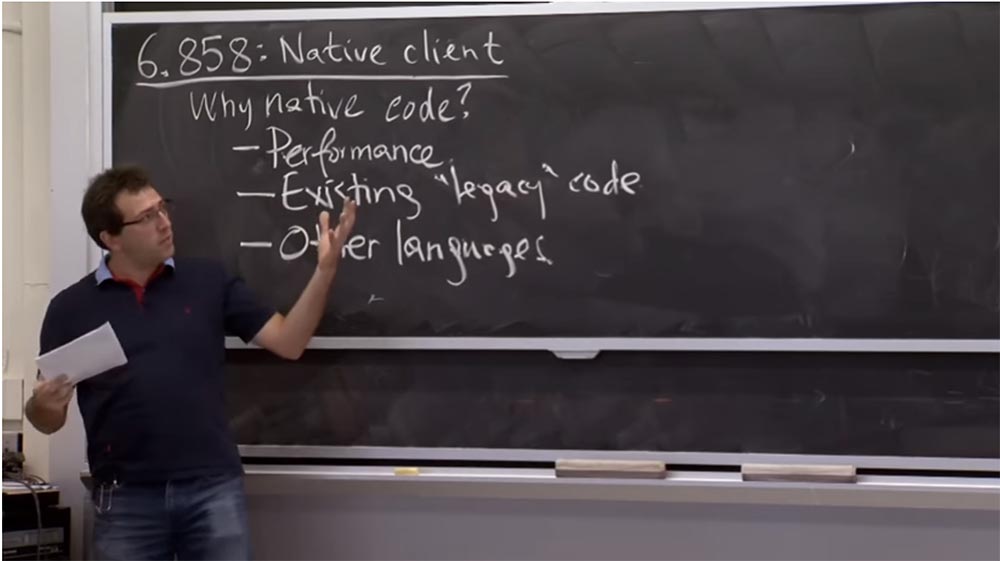

Instead, Native Client takes a completely different approach: it inspects the specific instructions in a binary to decide whether it is safe to run. So before we get into the technical details of the system, let's figure out why these guys want to run machine code at all. Their particular interest is in using it in a web browser, where you can already run JavaScript code, Flash Player, and some other things. Why are they so keen on being able to run x86 code? After all, it seems like a step backwards.

Audience: they want very fast calculations.

Professor: Yes, that is one huge advantage of machine code. Even though it may be unsafe, it does provide high performance. Whatever you would otherwise do in JavaScript, if you instead write the program in a compiled language and compile it, it will run much faster. Are there any other reasons?

Audience: running existing code?

Professor: Right. The important thing is that not everything is written in JavaScript. So if you have an existing application, or “legacy” code in industry terminology, that you want to run on the web, this seems like a great solution. You can simply take an existing library, for example, some complex graphics engine that is both performance-sensitive and full of other complicated things you do not want to reimplement.

If you are just writing a new web application and are not particularly concerned about legacy code or performance, is there still a reason to use Native Client?

Audience: then you don't have to use JavaScript.

Professor: Yes, that is a good reason. If you don't like JavaScript, you don't have to use it, right? You can use, for example, C, run Python code, or write it in Haskell, in whatever language you think is more appropriate.

So this is quite a convincing list of motivations for running native code in the browser, even though it is quite difficult to get right. In a second we will look at the technical details, but first I want to show you a simple tutorial demo that I got from the Native Client website.

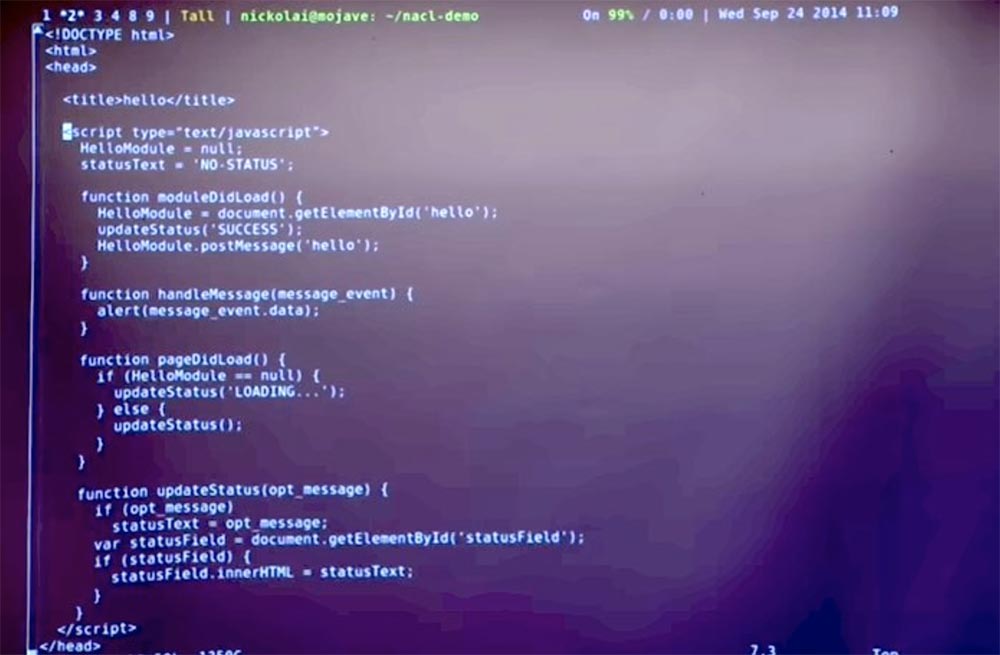

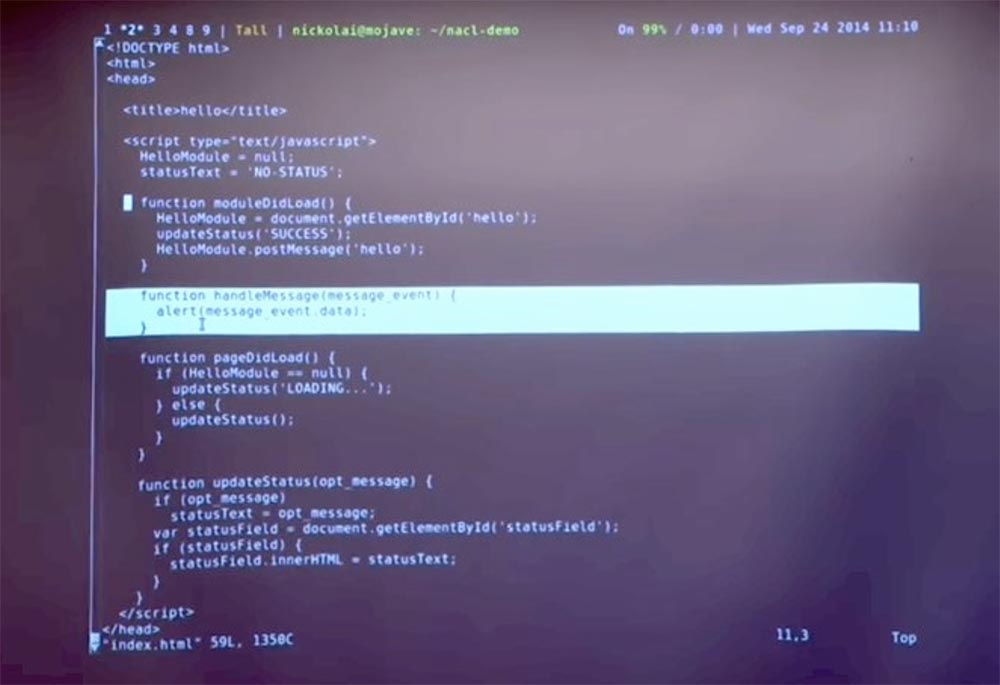

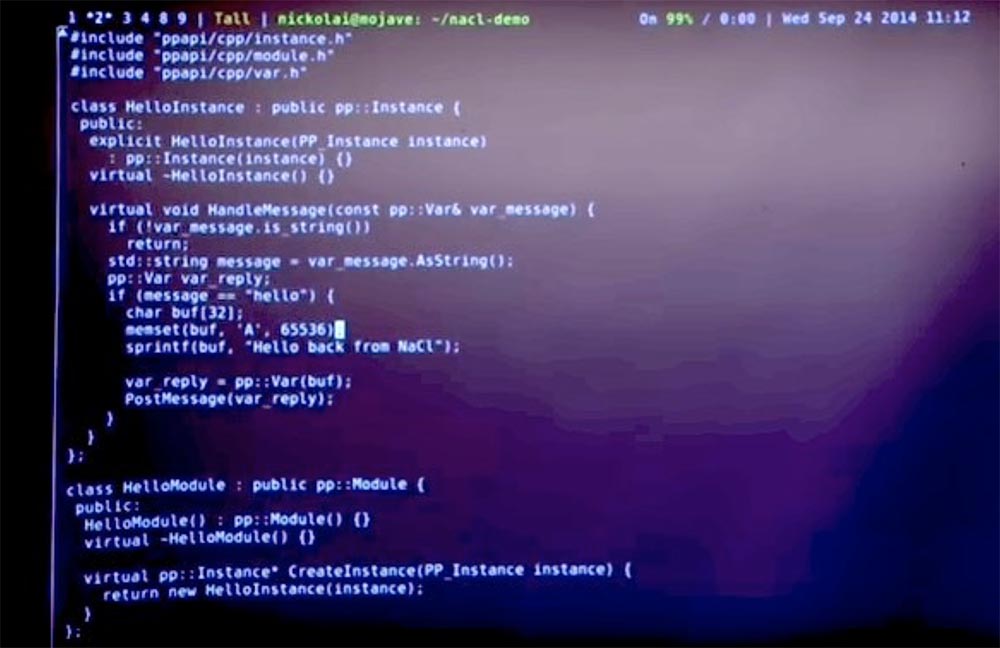

It's pretty simple: you can take a C++ or C program and run it in the browser. Here is the web page, an HTML file with a bunch of JavaScript code inside.

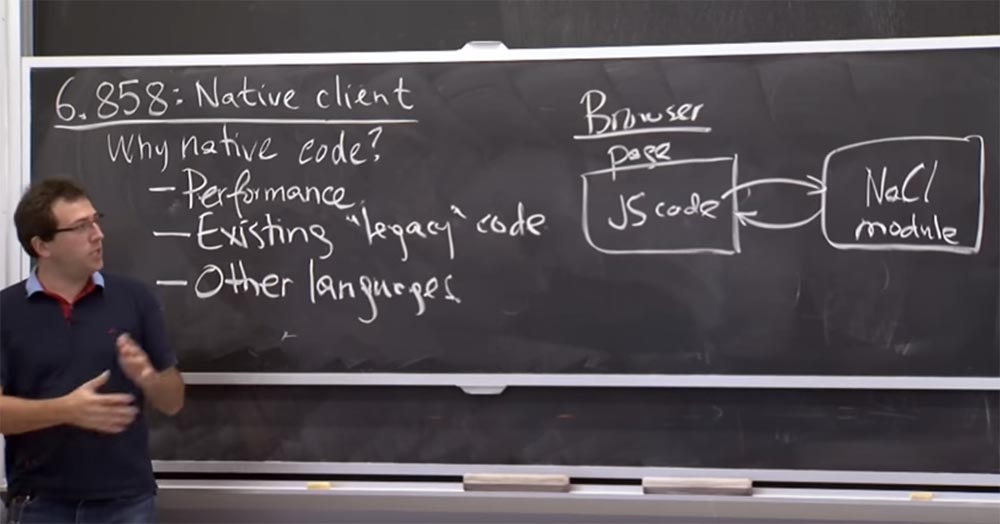

This JavaScript code is there so that the page can interact with the Native Client parts. In terms of how the browser works, the idea is that you have a web page containing JavaScript code. That code runs with the page's privileges and can do various things on the page itself, for example, communicate with the network in some circumstances.

Native Client lets you run a module inside the browser, so that the JavaScript code can interact with it and get responses back. This shows some of the JavaScript code needed to interact with the specific NaCl module we are about to launch.

You can send messages to this module. How is it done? You take the module's object in JavaScript and call its postMessage method, which delivers the message to the NaCl module. When the NaCl module responds, it invokes a message handler function in JavaScript, which in this particular case pops up a dialog box in the browser.

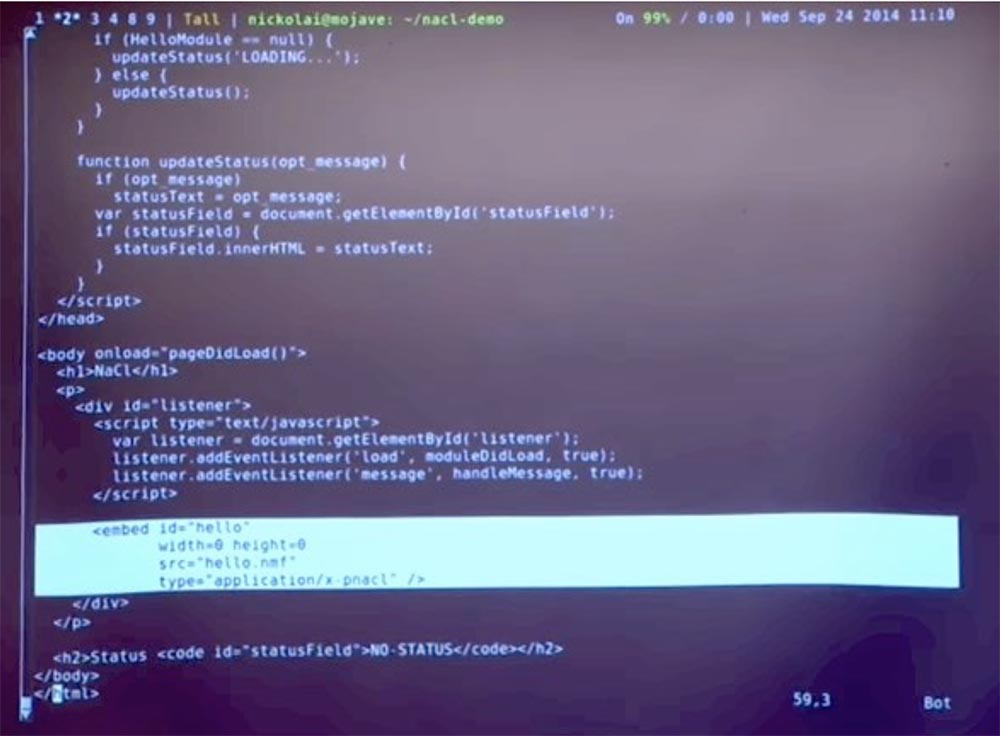

So on the JavaScript side, this is a fairly simple web page interface. The only additional thing you need to do is embed the NaCl module like this: you simply insert a module with a specific ID here. The most interesting part here is hello.nmf, the file with the nmf extension. It simply says that there is an executable file that needs to be downloaded and run in the NaCl environment.

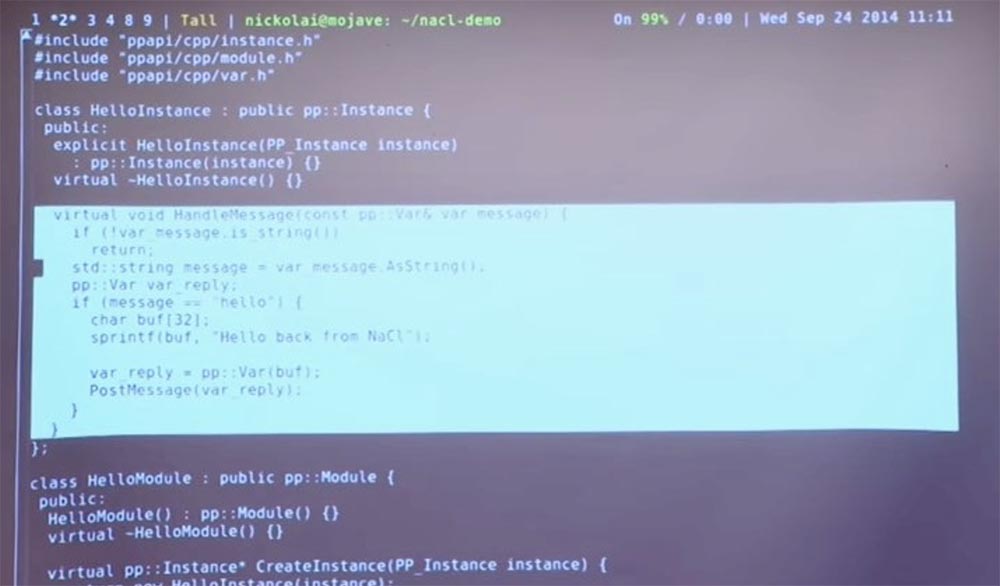

The native code itself is just like any other C++ code you could write. The interesting part is this HandleMessage message-handling function.

This is a C++ class, and whenever the JavaScript code sends a message to the native code, this function is called. It performs an if (message == "hello") check. If the check passes, it builds some kind of reply string and sends it back. This is pretty simple stuff. But to be concrete, let's run it and see what happens.
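Here is a minimal C++ sketch of the message-handling logic just described. It is not the Pepper API that the real demo uses: the ReplyFn callback is a hypothetical stand-in for the module's channel back to JavaScript, and the reply text is made up. It only shows the string comparison and the reply path.

```cpp
#include <functional>
#include <string>

// Hypothetical stand-in for the module's channel back to JavaScript.
// In the real demo this role is played by the Pepper instance's PostMessage.
using ReplyFn = std::function<void(const std::string&)>;

// Mirrors the demo's logic: reply only when the incoming message is "hello".
// Returns true if a reply was sent.
bool HandleMessage(const std::string& message, const ReplyFn& post_message) {
  if (message == "hello") {            // comparison (==), not assignment (=)
    post_message("hello from NaCl");   // made-up reply text
    return true;
  }
  return false;                        // any other message is ignored
}
```

Passing the reply channel in as a parameter keeps the sketch testable without a browser; in the real module the reply goes through the instance object instead.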

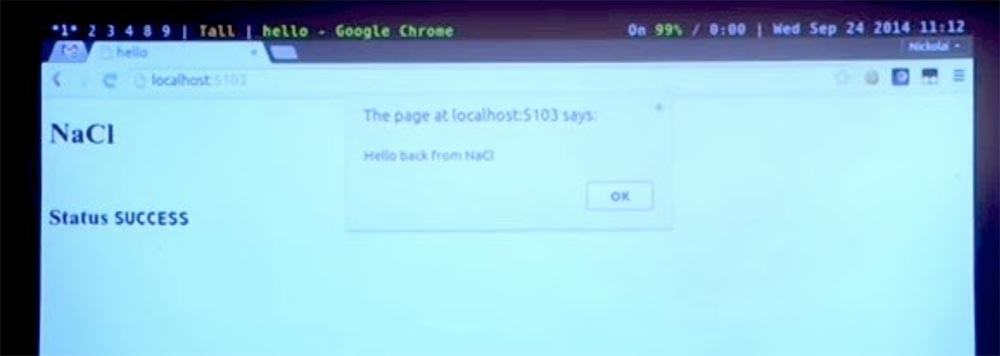

We can build and run a small web server that serves this page and the Native Client module. Now I can go to this URL, and here we see the NaCl web page. The module received our hello message from JavaScript, replied with a string to JavaScript, and the JavaScript code popped up a dialog containing this response.

So it really works.

Let's try to see whether we can make Native Client fail. Hopefully not, but we can take this code and this buffer, write a bunch of garbage into it, for example 65536 bytes of it, and see what happens.

Hopefully this will not crash my browser, because Native Client is supposed to provide isolation. But let's see what happens.

Let's restart the web server. We see that the module still loads successfully and the browser is not affected. However, the message exchange with the module did not happen, so there is no dialog box. If we look at the JavaScript console at the bottom of the page, we see that Native Client reports that the NaCl module crashed.

Perhaps the garbage I wrote caused a buffer overflow or a jump to some bad address, but in any case, Native Client really does contain accidental memory corruption so that it does not affect the browser.

That is a quick demo of the system as an end user or developer would see it. Now let's look in more detail at how Native Client works and why it is designed this way rather than some alternative way.

If your goal is to sandbox native code, there are several alternative ways to do it. In fact, people wanted to run legacy code and other languages before Native Client appeared. They solved this in various ways that may not have been as safe or convenient as Native Client, but gave them similar capabilities.

So what should you do if you really want to run machine code in a browser? One option is to trust the developer. A variant of this approach is to ask the user whether they want to run some piece of code in their browser.

Everyone roughly understands this plan, right? Instead of the whole Native Client compilation strategy, I could just build a C program and run it in the browser, and the browser would ask me whether I want to let this site run it. If I click "yes" and the program accidentally corrupts the browser's memory, the browser crashes. So this is possible, right? It certainly solves all of the problems above, but what is wrong with it?

The bad part, I think, is that this solution is not safe. Still, it is one way of sidestepping the problem, and many systems have taken it.

Microsoft has a system called ActiveX that basically implements this plan. You could ship binaries to IE, the browser on your computer, and unless they came with a certificate from a particular developer, signed by, say, Microsoft or someone else, the browser would not run the code. Do you think this is a useful plan?

Audience: This is a question of trust!

Professor: Yes, it is. You really have to trust that the developer will only sign binaries that do nothing bad. But it is often impossible to tell whether a binary is bad, so developers just write C code and sign it blindly instead of doing a huge amount of verification work. In that case, you may well run into problems later.

Likewise, asking the user whether they really want to run something does not guarantee security at all. Even if the user wants to be careful, it is not clear how they should decide. Suppose I really want to figure out whether I can let this program run. I am told that everything is fine, that it may have been created by reputable developers at Google.com or Microsoft.com. However, it is an executable, foo.exe, and I have absolutely no idea what is inside it. Even if I disassemble its code, it will be very hard to say whether it is going to do something bad. So it is really hard for a user to decide whether running the code is safe for the system.

In this sense, Native Client can serve as a mechanism that gives users some confidence when deciding whether to say yes or no to a program.

So in practice, I think, there should be an option like the one our guest lecturer Paul Yang suggested last week: the “click-to-play” setting for extensions in the Chrome browser. That is, before any extension, including Native Client, runs, you have to click on it. In some ways this is the same as asking the user. But in this case, even if the user says “yes”, the system will still be safe, because Native Client kicks in. In this sense we have a double security mechanism: first ask the user, and then, if the answer is yes, run the code in the Native Client sandbox, which will not let it crash the browser.

Another approach you could take is a sandbox implemented by the OS or the hardware, that is, process isolation. This is what we looked at in the last two lectures.

Perhaps you would use Unix isolation mechanisms. If you needed something more sophisticated, you would use Capsicum on FreeBSD. It is great for sandboxing a piece of code, because you can restrict its capabilities. Linux has a similar mechanism called Seccomp, which we briefly touched on in the last lecture, and it also lets you do this kind of thing.

So there are already mechanisms for running code in isolation on your machine. Why don't these guys use those existing solutions? It looks like they are “reinventing the wheel” for some reason. So what is going on?

Audience: maybe they want to minimize mistakes?

Professor: Yes, in a sense they do not trust the operating system. Perhaps they are actually worried about OS bugs. The FreeBSD or Linux kernel likely contains quite a lot of C code that they cannot verify for correctness, even if they wanted to. And with Capsicum or Seccomp, isolation is enforced by the kernel, so the kernel code that maintains and enforces the sandbox has to be correct.

Audience: since browsers are used in many more places, you have to deal with different operating systems, such as iOS and Android, and access ...

Professor: Yes, in fact that is another interesting consideration. Operating systems often have bugs, and different operating systems are also somewhat incompatible with each other. Each OS has its own mechanism, as shown here: FreeBSD has Capsicum, Linux has Seccomp, but these are just Unix variants. Mac OS has Seatbelt, Windows has something else, and the list goes on.

So in the end, every platform you work with has its own isolation mechanism. Having to write different code for Mac, Windows, and Linux is not what really worries them. The bigger issue is that it affects how you have to write the programs that run inside the sandbox. In Native Client, you write one piece of code, and it runs the same way on Apple, Windows, or Linux systems.

If you use the OS isolation mechanisms, each of them imposes its own restrictions on the sandboxed program. So you would have to write one program to run inside the Linux sandbox, another to run inside the Windows sandbox, and so on.

This is actually unacceptable for them. They do not want to deal with problems of this kind. What other thoughts do you have?

Audience: presumably performance. If you use Capsicum, you need enough resources to run the processes inside the sandbox. They may face the same problem here.

Professor: Yes, that's true. OS-level sandboxing can actually be quite resource-intensive, while software fault isolation could in principle be cheaper. It turns out, though, that Native Client actually uses both its own sandbox and the OS sandbox for extra security. So in practice they do not win on performance with their implementation, although they probably could.

Audience: perhaps they want to control everything. They can control what happens in the browser, but if they hand things off to the OS on the client's computer, they do not know what could happen there.

Professor: You could say that, yes: the OS may have bugs or may not handle the sandbox well. Or the interfaces differ slightly, so you don't know exactly what the operating system is going to expose.

Audience: OS sandboxing does not stop the code from doing some bad things. Code can do many such things; for example, you might want to use static analysis to catch code that loops forever and keeps the program from making progress.

Professor: In fact, it is very hard to determine whether a program loops forever, but in principle this approach does let us catch some problems in the code. One really interesting example, which I did not know about until I read their paper, is that these guys are worried about hardware bugs, not just OS vulnerabilities, that the running code could exploit. For example, the processor itself has some instructions that can make it hang or reboot the computer. In principle, your hardware should not have such bugs, because the operating system relies on the hardware to always transfer control to the kernel, so the kernel can clean up after whatever the user code did wrong.

But it turns out that processors are so complex that they do have bugs, and these guys say they have found evidence of this. If the processor receives some complex instruction it did not expect, it can freeze instead of trapping into the kernel. That is bad. It is not catastrophic if I am just running a few useful programs on my laptop, but it is much worse if the computer freezes when I visit some web page.

So they wanted a higher level of protection for Native Client modules than OS-level isolation provides, one that holds even in the presence of such hardware bugs. When it comes to security they are quite paranoid, hardware included.

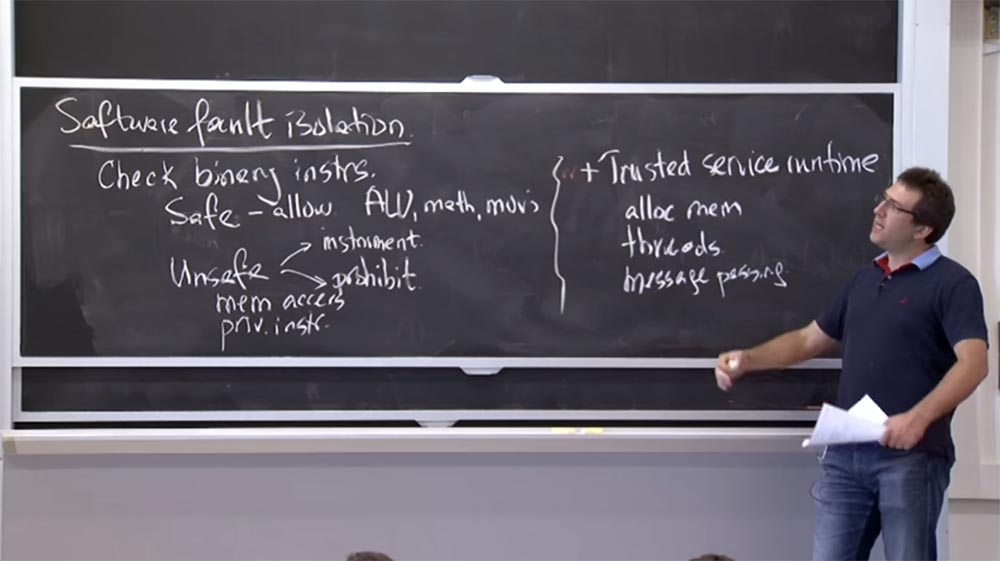

Let's see how Native Client actually sandboxes code. Native Client uses a different approach, which is called "software fault isolation".

The plan is not to rely on the operating system or hardware to check things while the program is running, but instead to examine the instructions ahead of time and decide that they are safe to execute. In effect, it is enough to look at the binary, check every possible instruction, and see whether it is safe or unsafe. Once you have decided that everything is safe, you can simply run the process, because you know it consists only of safe things and nothing will go wrong.

So what they do is look at all the instructions in the binary code sent to the browser and decide whether each particular instruction is safe or not.

What do they do with safe instructions? They simply allow them. What is an example of a safe instruction? Presumably, one that they believe needs no additional checks or protection.

Safe instructions are things like ALU (arithmetic logic unit) operations, math, moves between registers, and so on. They do not affect the security properties these guys care about so much, such as memory safety or which code gets executed. As long as you are just doing computation, they don't care, because that computation cannot affect anything else in the browser.

What about unsafe operations? These are the things they care about much more: anything that accesses memory, executes a privileged instruction, or makes a system call on this machine. Maybe such code will try to escape from the sandbox, who knows? For this kind of instruction, they do one of two things.

If the instruction is actually needed for the application to work, for example a memory access, or a jump somewhere in the program's address space, they try to make sure it can execute safely by instrumenting it. Instrumenting an unsafe instruction means making sure it does only the "right" things the application needs it to do. Since the instruction by itself could sometimes do bad things, instrumentation means adding some extra checks before it so that it runs only when doing so causes no harm.

Say you want to instrument a memory access (they actually decided against doing it this way, for performance reasons). You need to insert a few checks: for example, an if statement before the instruction that checks whether the address falls within the range of addresses allowed for this module. If it does, the instruction executes; if not, it does not.

That is, we instrument the instruction, turning it from unsafe into safe by putting a conditional check in front of it. This is their idea of enforcing security without relying on support from the operating system. I think they do not instrument every unsafe instruction, because some can simply be forbidden: if they decide an instruction is not needed for the application to work and the application tries to use it anyway, they just kill the application or refuse to run it.
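A minimal C++ sketch of the instrumented memory access described above, assuming a toy sandbox whose memory is a plain byte array and whose "addresses" are offsets into it. The Sandbox type and the checked_store name are hypothetical, and, as mentioned above, Native Client did not adopt this particular kind of check for performance reasons; the sketch only shows the "if before the access" idea.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Toy model of one sandboxed module's memory (hypothetical, for illustration).
struct Sandbox {
  std::vector<std::uint8_t> memory;
  explicit Sandbox(std::size_t size) : memory(size, 0) {}

  // Instrumented store: the inserted "if" runs before the access.
  // An out-of-range address is refused instead of touching memory.
  bool checked_store(std::size_t addr, std::uint8_t value) {
    if (addr >= memory.size()) {
      return false;  // the unsafe access is simply not executed
    }
    memory[addr] = value;
    return true;
  }
};
```

For instance, with Sandbox sb(16), checked_store(3, 42) writes the byte, while checked_store(16, 1) is rejected because offset 16 is one past the end of the region.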

That is the overall software fault isolation plan. Once you have checked the application's binary, it satisfies all the conditions, and everything is properly instrumented, you can simply run the program. By construction, it should not do anything dangerous, given that we did all these checks and applied the instrumentation.
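The verify-then-run plan can be sketched as a toy validator. This rests on strong assumptions: the instruction kinds below are a hypothetical, already-decoded list, whereas a real validator has to disassemble x86 machine code, and the safe / instrument / forbidden mapping just follows the lecture's examples rather than Native Client's actual rules.

```cpp
#include <vector>

// Hypothetical, pre-decoded instruction kinds (no real x86 decoding here).
enum class Insn { Add, Mov, Load, Store, Jump, Syscall, Int };

enum class Verdict { Safe, Instrument, Forbidden };

// The lecture's three cases.
Verdict classify(Insn insn) {
  switch (insn) {
    case Insn::Add:
    case Insn::Mov:
      return Verdict::Safe;        // ALU work and register moves
    case Insn::Load:
    case Insn::Store:
    case Insn::Jump:
      return Verdict::Instrument;  // allowed only with a check in front
    case Insn::Syscall:
    case Insn::Int:
      return Verdict::Forbidden;   // direct routes into the kernel
  }
  return Verdict::Forbidden;       // anything unrecognized is rejected
}

// The whole binary is checked before anything runs;
// a single forbidden instruction rejects the module.
bool validate(const std::vector<Insn>& program) {
  for (Insn insn : program) {
    if (classify(insn) == Verdict::Forbidden) {
      return false;
    }
  }
  return true;
}
```

Rejecting unknown kinds by default is a deliberate choice in the sketch: a validator that whitelists known-safe behavior fails closed when it meets something it does not understand.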

There is one more part of the software fault isolation story. Once you have made sure everything is safe, the process really cannot do anything more dangerous than compute inside its own little memory region. It cannot access the network, the disk, your browser, the display, or the keyboard. So almost every software fault isolation design also includes some trusted services, here called the Trusted Service Runtime, which are not subject to the instruction checks. A trusted service can therefore perform all of these potentially dangerous operations. In this case, the trusted runtime is written by Google developers, so they hope that its NaCl implementation is correct.

These services include things like allocating memory, perhaps creating a thread, maybe communicating with the browser (we saw how the module sends it a message), and so on. All of them are exposed to the sandboxed module, and the runtime is expected to carry them out safely and predictably.
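To make this division of labor concrete, here is a hypothetical C++ model of such a trusted runtime. The class and method names are invented for illustration, only two of the services mentioned (memory allocation and messaging) are modeled, and the never-freeing bump allocator is a simplifying assumption; this is not Native Client's real runtime interface. The point is that the untrusted module never touches these resources directly: it can only ask, and the trusted code enforces the limits.

```cpp
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical trusted runtime: trusted code, outside the validator's
// checks, that performs privileged work on the module's behalf.
class TrustedRuntime {
 public:
  explicit TrustedRuntime(std::uint32_t region_size)
      : region_size_(region_size) {}

  // Bump allocator (an assumption for brevity; nothing is ever freed).
  // Returns the offset of the new block inside the module's region,
  // or -1 if the request would not fit in the region.
  std::int64_t allocate(std::uint32_t bytes) {
    if (bytes > region_size_ - next_free_) {
      return -1;
    }
    std::int64_t offset = next_free_;
    next_free_ += bytes;
    return offset;
  }

  // Queues a message for the browser side (standing in for postMessage).
  void post_message(const std::string& msg) { outbox_.push_back(msg); }

  const std::vector<std::string>& outbox() const { return outbox_; }

 private:
  std::uint32_t region_size_;
  std::uint32_t next_free_ = 0;      // invariant: next_free_ <= region_size_
  std::vector<std::string> outbox_;  // messages waiting for the browser
};
```

With a 100-byte region, allocate(60) returns offset 0, allocate(30) returns 60, and a further allocate(20) is refused because only 10 bytes remain.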

Audience: So do we have to develop the application knowing that it will run as a NaCl module? Or does NaCl transparently change the things that could otherwise break the program?

Professor: I think that when you build an application, you do need to know that it will run inside NaCl. Some function calls, such as malloc or pthread_create, are just transparently replaced with calls into the Trusted Service Runtime. But if you do something like opening a file by its path name, as you might on a Unix machine, you will probably have to replace that with something else. You will probably want to structure your program so that it interacts at least a little with your JavaScript or your web page. So you will have to send some messages or RPCs to the JavaScript side, which means changing your code.

Perhaps, if you try hard, you could run an arbitrary Unix program in NaCl by putting it inside some kind of wrapper, but by default your code lives only inside this web page. That is the general software fault isolation plan. Now let's look at what security means for Native Client. We just talked about safe and unsafe instructions; what exactly do the Native Client developers worry about? As far as I can tell, for Native Client security means two things.

The first is that the module executes no forbidden instructions. These forbidden instructions include things like system calls, or triggering an interrupt, which on x86 is another mechanism for entering the kernel and making a system call. They presumably also forbid other privileged instructions that would let you escape the sandbox; we will look at those instructions later.

The second is that, besides executing no forbidden instructions, all code and data accesses stay within the module. In practice they reserve a particular part of the process's address space for the module, from 0 to 256 MB, and all the untrusted code can do is reference addresses inside this reserved region.
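The 256 MB figure works out to exactly 2^28 bytes (256 × 2^20). Because the region starts at address 0, asking "is this address inside the module?" reduces to checking that no bit above bit 27 is set, as this small C++ sketch shows. The constant names are illustrative, and this is not a claim about how Native Client's checks are actually implemented.

```cpp
#include <cstdint>

// 256 MB = 256 * 2^20 bytes = 2^28 bytes.
constexpr unsigned kRegionBits = 28;
constexpr std::uint64_t kRegionSize = std::uint64_t{1} << kRegionBits;
static_assert(kRegionSize == 256ULL * 1024 * 1024, "256 MB is 2^28 bytes");

// With the region starting at 0 and a power-of-two size,
// 0 <= addr < 2^28 holds exactly when addr has no bits set above bit 27.
constexpr bool in_region(std::uint64_t addr) {
  return (addr >> kRegionBits) == 0;
}

static_assert(in_region(kRegionSize - 1), "last byte is inside the region");
static_assert(!in_region(kRegionSize), "first byte past the end is outside");
```

This is one reason a region that starts at 0 is convenient: the lower bound needs no check at all, and the upper bound becomes a single shift and compare.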

So why do they want to ban these instructions? And what happens if they can't ban them?

Audience: probably because the module can manipulate the system.

Professor: True, that part is pretty simple. For example, the module could reboot the computer, or open your home directory, list all the files, and do anything like that. Why do they care about confining code and data so that they can only access this restricted address range? What goes wrong if they don't?

Audience: because the code could reach outside the module and disrupt what is running on the computer.

Professor: Right!

Audience: It's fine if they break their own program and it crashes. But they could disrupt the entire system.

Professor: Yes, in a way that's true. But in their case, they actually run the module in a separate process, so in theory only that extra process would crash. I think what they are really worried about is that there are things like the Trusted Service Runtime inside your process. So you really care that an untrusted module cannot jump into arbitrary places in the Trusted Service Runtime, do everything that trusted environment can do, and thereby damage the rest of your computer. Keeping the module's code and data accesses inside its own region is the mechanism that prevents this.

In principle, this could also give you more lightweight isolation, by running the NaCl module inside the browser process itself instead of launching an additional process. But for performance reasons, they really need to tie the module to this specific memory range, starting at 0. This means you can have only one untrusted NaCl module per process, so in any case you have to start a separate process.
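The separate-process point can be illustrated with plain POSIX process isolation. This C++ sketch is POSIX-only and is not Native Client's mechanism: it runs a hypothetical untrusted function in a forked child and reports whether that child was killed by a signal, while the parent keeps running either way, much like the browser in the earlier demo.

```cpp
#include <cstdlib>  // std::abort, used in the usage note below
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

// Runs `untrusted` in a forked child process.
// Returns 1 if the child was killed by a signal (it crashed),
// 0 if it exited normally, and -1 if fork() or waitpid() failed.
int run_isolated(void (*untrusted)()) {
  pid_t pid = fork();
  if (pid < 0) {
    return -1;    // could not create the child
  }
  if (pid == 0) {
    untrusted();  // a crash here kills only the child
    _exit(0);     // skip atexit handlers and stdio flushing in the child
  }
  int status = 0;
  if (waitpid(pid, &status, 0) < 0) {
    return -1;
  }
  return WIFSIGNALED(status) ? 1 : 0;
}
```

For example, run_isolated([] { std::abort(); }) returns 1, while run_isolated([] {}) returns 0; in both cases the calling process carries on.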

28:00 min.

Continuation:

MIT course "Computer Systems Security". Lecture 7: "Native Client Sandbox", part 2
