Browsers are muting your WebRTC application. Wait, what?

- Translation

WebRTC (voice and video calling) is great because it is built right into the web. However, the web sometimes brings plenty of trouble when WebRTC's needs run counter to the general requirements of browsers. The latest example is autoplay of audio and video, which suddenly left many users without sound. Former webrtcHacks author Dag-Inge Aas ran into this problem himself. Below are his thoughts on what to expect from browsers regarding autoplay, the latest changes in Chrome 66+, and a couple of tips on how to live with these restrictions.

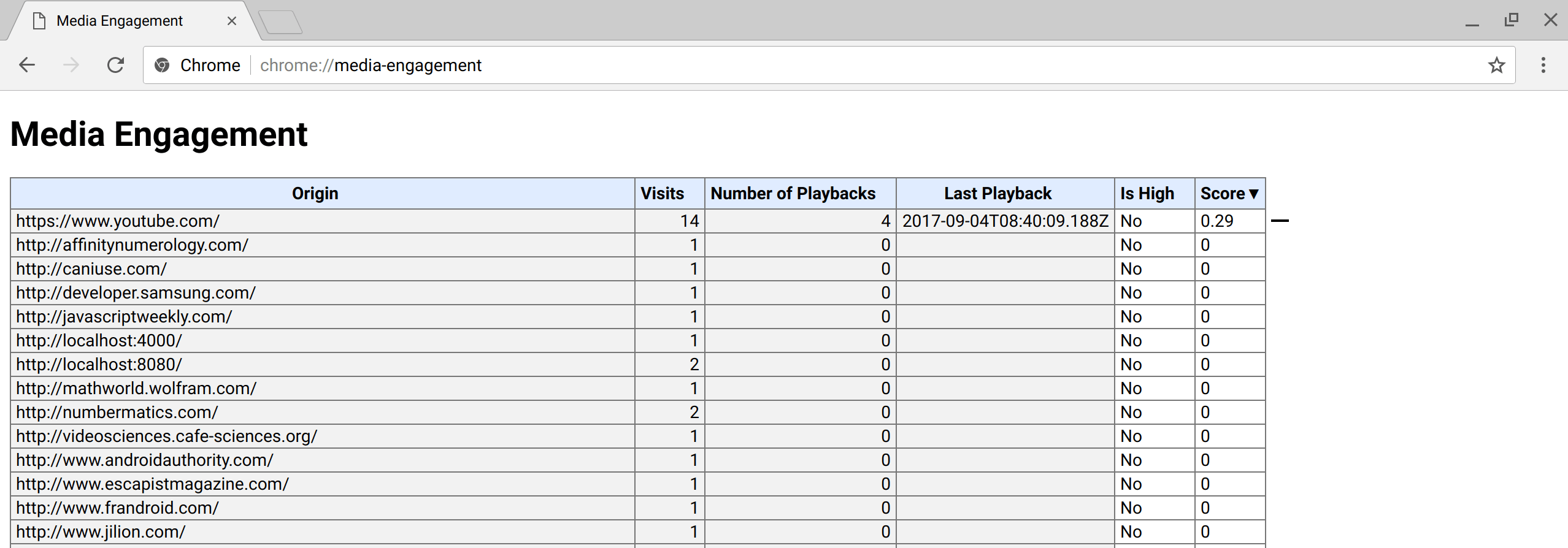

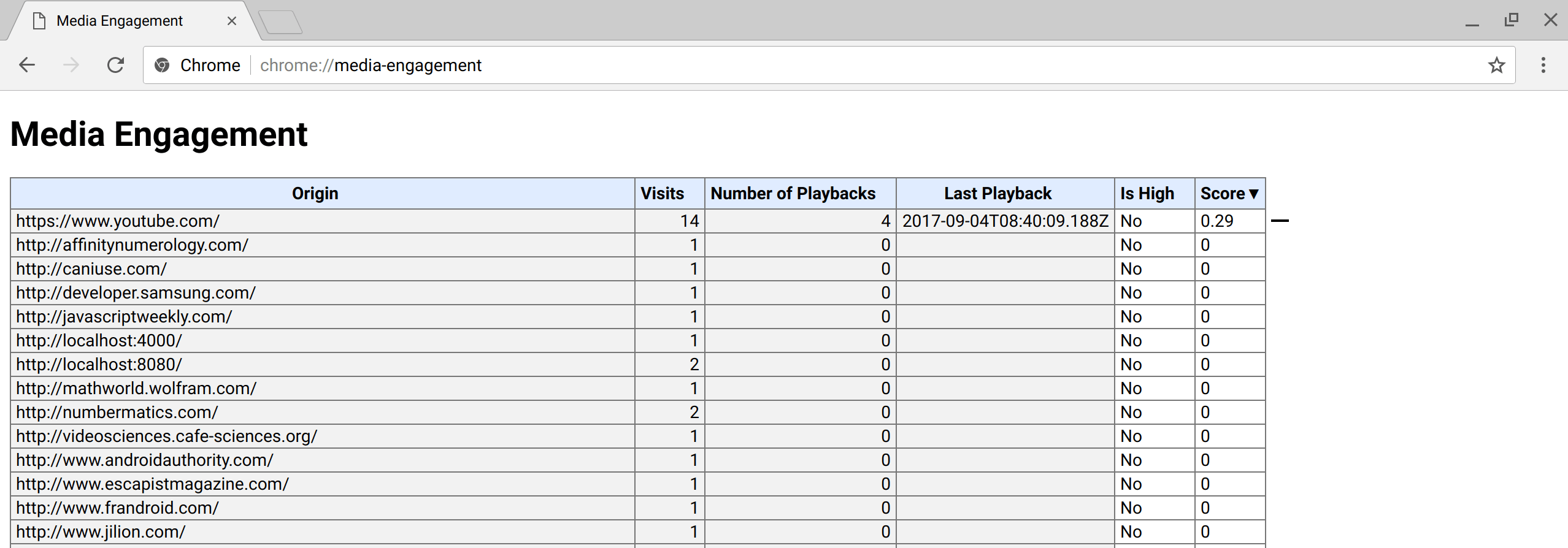


Browsers want to hear no evil, so autoplay policies silence media that tries to play on its own. This can be a problem for WebRTC applications.

If you are reading this, you have very likely run into a strange bug in Safari 11+ or Chrome 66+: interface sounds that suddenly went silent (for example, an incoming-call ring), an audio visualizer that stopped working, or remote participants you can no longer hear.

At the time of writing, the bug affects almost every popular WebRTC service. Funnily enough, even Google Meet and Chromebox for Meetings appear to be affected.

The root of all evil: changes to autoplay policies. In this post I will explain what changed, how it relates to WebRTC, and how to deal with it in your applications.

Error of the year: Uncaught Error: The AudioContext was not allowed to start. It must be resumed (or created) after a user gesture on the page. https://goo.gl/7K7WLu

Changes

It all started in 2007, when the iPhone and iOS appeared. If you have worked with Safari on iOS, you may have noticed that Safari requires user interaction to play <audio> and <video> elements with sound. This requirement was later relaxed somewhat when iOS 10 allowed video elements to autoplay, but only without sound. That became a problem for WebRTC, because a <video> element has to both show and play the media stream. In a WebRTC context, letting video autoplay muted is useless: during a video call you need to hear the other participants by default, not click “play” first. Be that as it may, few WebRTC developers targeted Safari on iOS, because the platform did not support WebRTC until recently, with the release of iOS 11.

I first ran into the bug while testing our latest (at the time) working video-calling implementation on iOS. To my surprise, it had stopped working, and I was not alone: GitHub user kylemcdonald reported a getUserMedia bug on iOS. The solution? Add the new playsinline attribute to video elements, which lets the video play inline, with sound. Unfortunately, the WebKit developers did not mention WebRTC in their original post about the autoplay changes in Safari, but they did write about WebRTC separately before the release. That article says the following about MediaStreams and audio playback:

- media backed by a MediaStream will autoplay if the web page is already capturing from the camera/microphone;

- media backed by a MediaStream will autoplay if the web page is already playing audio. A user gesture is still required to start audio playback in the first place.

So that document does not mention playsinline, but by combining the two announcements you can figure out how to make a WebRTC application work in Safari on iOS.
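To make the two quoted rules concrete, here is a small TypeScript model of them. It is only an illustration: `SafariPageState` and `safariMediaStreamMayAutoplay` are hypothetical names, Safari exposes no such API, and the real decision happens inside WebKit. The last case (starting playback from inside a user gesture) is the general rule this whole article revolves around, not part of the quoted list.

```typescript
// Hypothetical model of the Safari 11 / iOS 11 autoplay rules for
// MediaStream-backed media. Safari does not expose these flags; they only
// describe the page's situation for illustration.
interface SafariPageState {
  capturing: boolean;     // the page already holds an active camera/microphone capture
  playingAudio: boolean;  // the page is already playing audio
  inUserGesture: boolean; // play() is called inside a click/tap handler
}

function safariMediaStreamMayAutoplay(state: SafariPageState): boolean {
  // Rule 1: MediaStream-backed media autoplays if the page is already capturing.
  if (state.capturing) return true;
  // Rule 2: it also autoplays if the page is already playing audio
  // (getting that first audio started still required a user gesture).
  if (state.playingAudio) return true;
  // Otherwise playback has to be started from a user gesture.
  return state.inUserGesture;
}
```

In a typical call, the page calls getUserMedia before remote video arrives, so rule 1 applies. A receive-only viewer has neither capture nor audio and falls through to the user-gesture case.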

Why restrict autoplay at all?

Originally, the goal was to save users from unnecessary data costs. In 2007, mobile data was expensive (and still is in much of the world), and only a few sites were optimized for mobile. On top of that, autoplaying sound was, and still is, the most annoying thing on the entire web. So a user action was required to play (and download) video; this ensured the user knew what was about to happen.

Then came GIFs. A GIF is just an animation inside an <img>, so it did not need the user’s “permission”. However, GIFs can be heavy and therefore painful for mobile users. Video saves bandwidth but required consent to download, so web pages kept using GIFs. Everything changed in iOS 10, when Safari allowed muted autoplay. Since then, optimizing page weight has been a matter of switching to (muted) video and sending three-minute GIFs into oblivion.

Restricting autoplay in desktop browsers

If you search for how to stop autoplaying sound, you will find quite a few ways. News sites recently found out that when they play REALLY LOUD audio right after the page loads, users spend more time on the site and click on ads. Of course, they should not do that, but they do it anyway. So desktop browsers followed Safari’s lead and blocked autoplaying sound, most notably Chrome, which rolled out its new autoplay policy in version 66.

However, Chrome took an unusual approach: the Media Engagement Index (MEI).

The Media Engagement Index (MEI)

MEI is how Chrome measures a user’s propensity to allow autoplay on a site; the index is based on the user’s previous behavior on that site. You can see it at chrome://media-engagement/. MEI is tracked separately for each user profile and also applies in incognito mode (which makes it very hard for developers to test pages with zero MEI before rolling them out to production). Can you guess what happens next?

Screenshot of Chrome’s internal chrome://media-engagement/ page (source: developers.google.com/web/updates/2017/09/autoplay-policy-changes)
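The decision Chrome 66 makes for media with sound can be summarized roughly as follows. This is a simplified sketch, not Chrome’s code: the interface and function names are hypothetical, Chrome keeps MEI internal, and the published policy has further cases. What it illustrates is that the same page can be allowed to play for a developer and blocked for a first-time user.

```typescript
// Hypothetical, simplified model of Chrome 66's desktop autoplay decision.
// Chrome evaluates this internally; none of these fields is a real API.
interface ChromeAutoplayInputs {
  muted: boolean;                  // muted media is always allowed to autoplay
  userInteractedWithSite: boolean; // e.g. a click or tap on the site
  siteHasHighMei: boolean;         // this profile's MEI for the site is high enough
}

function chromeAllowsAutoplay(inputs: ChromeAutoplayInputs): boolean {
  if (inputs.muted) return true;
  // Sound is allowed after an interaction, or when the site has earned a
  // high enough Media Engagement Index in this profile.
  return inputs.userInteractedWithSite || inputs.siteHasHighMei;
}
```

A developer who opens the app every day has a high MEI, so the check passes without any click; a new user with the same code and no interaction is blocked.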

Not only <audio> and <video>

It turned out that the new policies affected more than the <audio> and <video> tags. A popular UX pattern in WebRTC is to show users their microphone input level. To do this, the audio is analyzed with an AudioContext, which takes the MediaStream and renders its signal as a histogram. No sound is actually played, yet the analysis is still blocked, because an AudioContext could, in principle, output sound.

An example of microphone testing

The problem was first reported in the WebKit bug tracker in December, and a fix landed in WebKit six days later. The fix? Do not block an AudioContext if the page is already capturing audio or video.
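The microphone-level meter described above usually reads the time-domain signal from an AnalyserNode and reduces it to one level per animation frame. Below is a minimal sketch, assuming a root-mean-square level is good enough for a UI bar. `AnalyserLike`, `rmsLevel` and `readMicLevel` are hypothetical names, and the small structural interface stands in for the real AnalyserNode so the code runs outside a browser. It also shows why the meter breaks: while the AudioContext is suspended by the autoplay policy, no audio is rendered and the analyser has nothing but silence to report.

```typescript
// Structural stand-in for the part of the Web Audio AnalyserNode used here,
// so the sketch compiles and runs without DOM typings. A real AnalyserNode
// satisfies it.
interface AnalyserLike {
  fftSize: number;
  getFloatTimeDomainData(array: Float32Array): void;
}

/** Root-mean-square level of samples in [-1, 1]; 0 means silence. */
function rmsLevel(samples: Float32Array): number {
  if (samples.length === 0) return 0;
  let sumOfSquares = 0;
  for (let i = 0; i < samples.length; i++) {
    sumOfSquares += samples[i] * samples[i];
  }
  return Math.sqrt(sumOfSquares / samples.length);
}

/** Copies the analyser's current waveform (fftSize samples) and returns its RMS level. */
function readMicLevel(analyser: AnalyserLike): number {
  const frame = new Float32Array(analyser.fftSize);
  analyser.getFloatTimeDomainData(frame);
  return rmsLevel(frame);
}

// Browser wiring (sketch, not executed here):
//   const ctx = new AudioContext();
//   const analyser = ctx.createAnalyser();
//   ctx.createMediaStreamSource(micStream).connect(analyser);
//   const tick = () => { drawMeter(readMicLevel(analyser)); requestAnimationFrame(tick); };
//   tick();
// `micStream` comes from getUserMedia; `drawMeter` is a hypothetical
// rendering helper. If ctx.state is "suspended", the meter stays at 0.
```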

So why are you still reading this article? It turns out Chrome made the same mistake as Safari. Although this has affected many WebRTC services, Google has kept quiet about it. There have been many attempts to get a public statement from them about how autoplay affects WebRTC, but none has come yet.

MEI values get in the way of testing

How did we get into this mess? Surely the developers should have tested AudioContext more thoroughly before the Chrome 66 changes, which affected every user. This is where MEI comes in: frequent interaction with a page raises its MEI, so developers are less likely to hit the bug, since their browsers have long been allowed to play and analyze audio there. And no, incognito mode does not help, because MEI is still taken into account there. Only launching Chrome with a new profile reveals the bug, something even experienced QA engineers at Google can easily forget.
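One way to reproduce the first-time-user experience is to start Chrome with a throwaway profile: MEI is stored per profile, and a brand-new profile has no engagement history. The sketch below only assembles the command-line arguments. `freshProfileChromeArgs` is a hypothetical helper, while `--user-data-dir`, `--no-first-run` and `--no-default-browser-check` are standard Chrome switches. Point `profileDir` at a new, empty directory for every test run.

```typescript
/**
 * Hypothetical helper: builds Chrome arguments that open `url` in a brand-new
 * profile. An empty profile has no Media Engagement Index history, so the
 * page is treated like a first-time visit.
 */
function freshProfileChromeArgs(profileDir: string, url: string): string[] {
  return [
    `--user-data-dir=${profileDir}`, // isolated profile, so MEI starts at zero
    "--no-first-run",                // skip the first-run welcome flow
    "--no-default-browser-check",    // skip the "make Chrome default" prompt
    url,
  ];
}
```

Pass the resulting array, together with the path to your Chrome binary, to whatever process launcher your test setup already uses.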

What should browser manufacturers do?

Changes to core functionality are hard to get right. Chrome has shipped many autoplay policy changes, but none of them addressed WebRTC or MediaStreams. Apparently, the Permissions API was forgotten and not updated either, so developers cannot check in advance whether a user action will be required. Alternatively, browsers could allow an AudioContext to output sound if the page is already using the camera/microphone (as Safari did), but that looks more like a hack than a solution. It also does not help with other audio analysis cases where getUserMedia is not used.

A more robust solution for browser vendors is to let media permissions affect MEI. If the user has granted persistent access to their camera and microphone, it is reasonable to assume the page is trusted enough to play sound without further user action, whether or not it is currently using the camera/microphone. After all, the user already trusts you not to broadcast their camera and microphone to millions of people without their knowledge. Compared to that, playing interface sounds is a minor concern.
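Since there is no way to ask in advance, a common workaround on the application side is to simply try: `HTMLMediaElement.play()` returns a promise that browsers reject with a `NotAllowedError` when the autoplay policy blocks playback. A minimal sketch, assuming a structural `PlayableLike` interface and an `AutoplayOutcome` type (both hypothetical names) in place of the real element; on `"blocked"`, the application can show a button that starts playback from a user gesture.

```typescript
// Structural stand-in for HTMLMediaElement.play(); a real <video> or <audio>
// element satisfies it.
interface PlayableLike {
  play(): Promise<void>;
}

type AutoplayOutcome = "allowed" | "blocked" | "failed";

/**
 * Attempts playback and classifies the result. Browsers reject play() with a
 * DOMException named "NotAllowedError" when autoplay is not permitted; any
 * other rejection (e.g. an unsupported source) is reported as "failed".
 */
async function tryAutoplay(media: PlayableLike): Promise<AutoplayOutcome> {
  try {
    await media.play();
    return "allowed";
  } catch (err: unknown) {
    const errorName = (err as { name?: unknown } | null)?.name;
    return errorName === "NotAllowedError" ? "blocked" : "failed";
  }
}
```

Note that this probe actually starts playback when it is allowed, so use it on the element you want to play rather than on a dummy.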

How to work around it in your application

Fortunately, there are a couple of tricks that can help. Here is what we added to Confrere when we ran into the problem in Safari on iOS.

Add playsinline

To get sound back in your video, add the playsinline attribute to the video element. This attribute is well documented, works in Safari and Chrome, and has no side effects in other browsers.
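In code, this comes down to setting the attribute before assigning the stream. Below is a minimal sketch, assuming a structural `VideoElementLike` interface in place of HTMLVideoElement (so it runs outside a browser) and a hypothetical `attachStream` helper. Muting the local self-view is a common extra so users do not hear their own microphone. It is not part of the playsinline fix.

```typescript
// Structural stand-in for the parts of HTMLVideoElement used below; a real
// <video> element satisfies it.
interface VideoElementLike {
  autoplay: boolean;
  muted: boolean;
  srcObject: unknown;
  setAttribute(qualifiedName: string, value: string): void;
  play(): Promise<void>;
}

/**
 * Hypothetical helper: plays a MediaStream inline in a <video> element.
 * Returns the play() promise so callers can handle a blocked autoplay.
 */
function attachStream(video: VideoElementLike, stream: unknown, isSelfView: boolean): Promise<void> {
  video.setAttribute("playsinline", ""); // play inline instead of going fullscreen on iPhone
  video.autoplay = true;
  video.muted = isSelfView;              // avoid echoing the local microphone back
  video.srcObject = stream;              // from getUserMedia or an RTCPeerConnection track event
  return video.play();
}
```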

User action

To fix an audio visualizer, simply add a user action. We were lucky, because we could add (and did add) many steps to our test screen that require explicit user participation. You may be less fortunate. Until Google fixes the problem, there is no way around involving the user.
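Concretely, the user action is where a suspended AudioContext gets resumed, which is exactly what the error message quoted at the top asks for. A minimal sketch, assuming a structural `ResumableContext` interface (a hypothetical name) in place of the real AudioContext: call `unlockAudio` (also hypothetical) from the click handler of a step that needs explicit user participation anyway, such as a “Test microphone” button.

```typescript
// Structural stand-in for the AudioContext members used here.
interface ResumableContext {
  readonly state: string; // "suspended" | "running" | "closed"
  resume(): Promise<void>;
}

/**
 * Resumes a context that the autoplay policy left suspended. Must run inside
 * a user-gesture handler (click or tap) to be allowed in Chrome 66+.
 * Resolves to true when the context is running afterwards.
 */
async function unlockAudio(ctx: ResumableContext): Promise<boolean> {
  if (ctx.state === "suspended") {
    await ctx.resume();
  }
  return ctx.state === "running";
}

// Browser usage (sketch):
//   testMicButton.addEventListener("click", () => { void unlockAudio(audioContext); });
// `testMicButton` and `audioContext` are hypothetical names for your own objects.
```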

No fix for interface sounds

This is currently not possible. Some people create a single AudioContext that is reused throughout the application and route all sounds through it, but I have not tested this approach. Safari is a little easier: if you are already using the camera/microphone, you can play sounds for incoming messages and calls. But I doubt you want to keep the camera/microphone running all the time just to be able to ring the user on an incoming call.
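The shared-context idea mentioned above could look roughly like the following. As noted, this approach is untested, so treat it strictly as a sketch. `UiSounds`, `SharedContextLike` and `OscillatorLike` are hypothetical names, and the structural interfaces stand in for AudioContext and OscillatorNode. The context still has to be unlocked once by a user gesture. After that, notification beeps reuse the same running context instead of each one creating a new, suspended context.

```typescript
// Structural stand-ins for the Web Audio members used below; a real
// AudioContext and OscillatorNode satisfy them.
interface OscillatorLike {
  frequency: { value: number };
  connect(destination: unknown): unknown;
  start(when?: number): void;
  stop(when?: number): void;
}
interface SharedContextLike {
  readonly state: string;
  readonly currentTime: number;
  readonly destination: unknown;
  resume(): Promise<void>;
  createOscillator(): OscillatorLike;
}

/** Hypothetical app-wide player that routes every UI sound through one context. */
class UiSounds {
  private ctx: SharedContextLike | null = null;
  private readonly createContext: () => SharedContextLike;

  constructor(createContext: () => SharedContextLike) {
    this.createContext = createContext;
  }

  /** Call from the first user gesture in the app (e.g. joining a room). */
  async unlock(): Promise<void> {
    if (this.ctx === null) this.ctx = this.createContext();
    if (this.ctx.state === "suspended") await this.ctx.resume();
  }

  /** Plays a short tone; returns false if audio is still locked. */
  beep(frequencyHz = 880, durationSec = 0.2): boolean {
    const ctx = this.ctx;
    if (ctx === null || ctx.state !== "running") return false; // caller can fall back to a visual cue
    const osc = ctx.createOscillator();
    osc.frequency.value = frequencyHz;
    osc.connect(ctx.destination);
    osc.start(ctx.currentTime);
    osc.stop(ctx.currentTime + durationSec);
    return true;
  }
}

// Browser usage (sketch): const sounds = new UiSounds(() => new AudioContext());
```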

As you can see, there are already ways to work around the problem until a long-term solution arrives. And do not forget to subscribe to the bug to get updates.