A new face recognition system installed by London police cannot recognize anyone

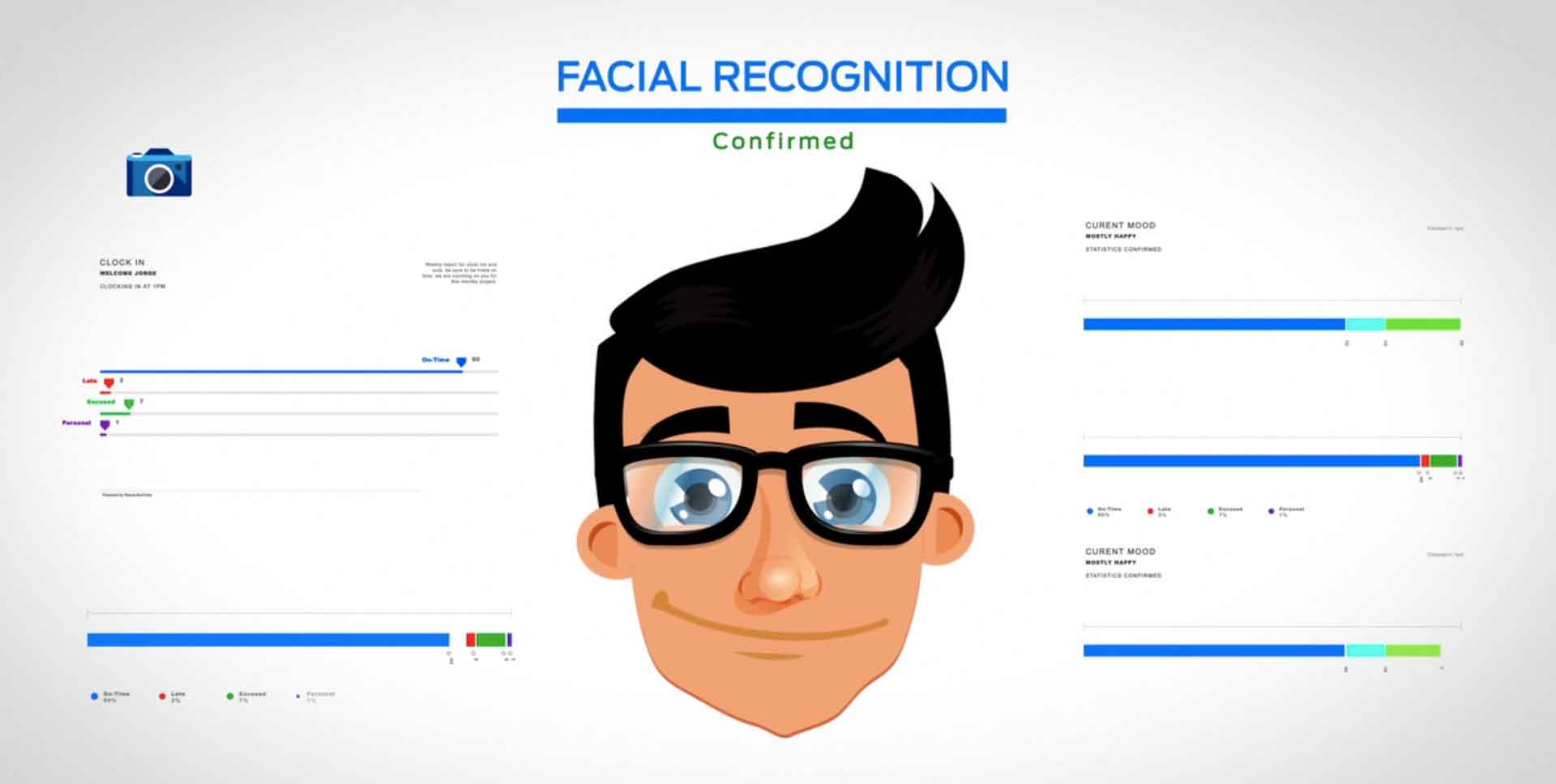

Police in some of the world's largest cities (New York, Paris, London, Beijing) are testing face recognition systems. One goal is to pick out the faces of criminals and suspects from a crowd, check the person and, if the suspicion is confirmed, make an arrest. The analysis usually runs in real time: if a face matches one in the image database, the police receive a notification that a wanted person has been detected.

In China, such a system helped catch a man suspected of economic crimes at a concert attended by 50,000 people in April of this year. He had traveled 90 km from the city where he was hiding. After his arrest, he admitted that he would never have gone to the concert had he known what the police were now capable of; he had hoped to disappear into the huge crowd. It didn't work: the system spotted him, and officers reacted in time and detained him. Face recognition systems used in other countries, however, seem to have problems.

For example, police in Wales (UK) had previously reported that one of the systems they tested produced a huge number of false positives: around 92% of its matches were wrong.

Now Scotland Yard has decided to test another system, this time in London, hoping it will help fight crime. But over several months of testing, the system raised an alert only once, when the computer "decided" that a crime suspect was passing through the crowd.

There may have been more false positives; the police did not go into much detail. The problem is that not a single criminal was detained in those few months. The cameras, connected to a computer that tries to recognize the faces of criminals, were installed on a busy pedestrian bridge. Scotland Yard hoped that criminals hiding among ordinary passers-by could be found and arrested there.

So far these are only preliminary results; the police have promised a full report once the testing phase is complete. According to a police spokesperson on the testing team, photos of people the system did not flag are deleted immediately. Images that the system judges to match a criminal's photo on a number of features are deleted after a month if the alert turns out to be false.

The only alert known so far was a false one. The system flagged a young black man walking past. According to the police, the difference between his image and the photo of the criminal in the police database it was compared against is visible to the naked eye: anyone can see at once that they are different people.

Still, the young man had a stressful few minutes. The police stopped him at once, took him aside and searched him. After checking his documents, they established that he had nothing to do with the criminal his photo had been compared with. According to the police, the young man "did not quite understand what had just happened and looked a little lost."

He was then given a booklet describing the system, so that he could learn more about what he had unwittingly become part of.

As far as one can tell, the system proved ineffective, not only because no criminals were detained (perhaps criminals simply don't use that bridge; who knows), but also because it barely raised any alerts at all, even false ones.

In the UK, activists oppose the installation of face recognition systems, considering them a violation of privacy and personal data protection. Others support such measures, believing they help fight terrorism and crime. The system's ineffectiveness does not change supporters' minds: they believe face recognition simply needs to be refined until it works as intended.

The developers, for their part, argue that the test results prove nothing: it was only a test, its results will be reviewed, and the system will be modified based on the findings of the “field tests”. “We will review the results and get the information we need for the project itself,” the developers said.