Disaster-tolerant IT systems: how to implement them in your company

Imagine that your data center (or production server) went down today. It simply up and failed. As practice shows, not everyone is ready for this:

In previous posts ( once and twice ) I wrote about organizational measures that will accelerate and facilitate the restoration of IT systems and related company processes in an emergency.

Now let's talk about technical solutions that will help in this. Their cost varies from several thousand to hundreds of thousands of dollars.

Very often, HA (High Availability) and DR (Disaster Recovery) solutions are confused. First of all, when we talk about business continuity, we mean a reserve site. Applied to IT - backup data center. Business continuity is not about backing up to a library in an adjacent rack (which is also very important). This is about the fact that the main building of the company will burn down, and in a few hours or days we will be able to resume work, turning around in a new place:

So, you need a backup data center. What are the options? Usually there are three: hot, warm and cold reserves.

Cold reserve implies that there is some kind of server room into which you can bring equipment and deploy it there. Upon restoration, the purchase of “iron” may be planned, or its storage in the warehouse. Keep in mind that most systems are supplied to order, and quickly find dozens of units of servers, storage, switches, and so on. will be a non-trivial task. As an alternative to storing equipment at home, you can consider storing the most important or rarest equipment at your suppliers ’warehouse. At the same time, telecommunication channels in the room must be present, but the conclusion of a contract with the provider usually occurs after a decision is made to launch a “cold” data center. Restoring work in such a data center in the event of a catastrophic failure of the main site may well take several weeks. Make sure that your company will be able to survive these few weeks without IT and not lose business (due to revocation of a license, or an irreplaceable cash gap, for example) - I wrote about this earlier. Honestly, I would not recommend this reservation option to anyone. Perhaps I am exaggerating the role of IT in the business of some companies.

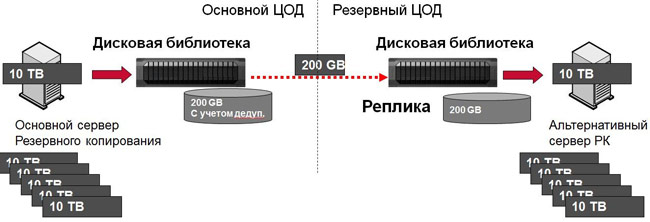

This means that we have an alternative platform operating in which there are active Internet and WAN channels, basic telecommunication and computing infrastructure. It is always “weaker” than the main one in terms of computing power; some equipment may be absent there. The most important thing is that the site always has an up-to-date data backup. Acting "the old fashioned way", you can organize regular movement of backup copies on tapes there. The modern method is to replicate backups over the network from the main data center. Using backup with deduplication will allow you to quickly transfer backups even on a “thin” channel between data centers.

Here it is, the choice of tough guys who support IT systems, the downtime of which even for several hours brings huge losses to the company. It has all the necessary equipment for the full operation of IT systems. Typically, the foundation of such a platform is a data storage system onto which data from the main data center is mirrored synchronously or asynchronously. In order for X to have a hot reserve at an o'clock could work out the money invested in it, regular test translations of the systems should be carried out, the settings and OS version of the servers on the main and backup sites should be constantly synchronized - manually or automatically.

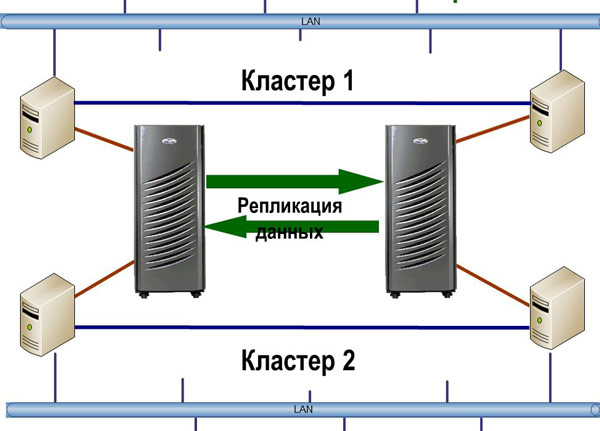

Minus hot and warm reserve - expensive equipment is idle in anticipation of a disaster. The way out of this situation is a distributed data center strategy.. With this option, two (or more) platforms are equal - most applications can run on either one or the other. This allows you to use the power of all equipment and provide load balancing. On the other hand, the requirements for automation of the transfer of IT services between data centers are seriously increasing. If both data centers are “fighting”, the business has the right to expect that, with the expected peak load on one of the applications, it can be quickly transferred to a more free data center. Most often, synchronous replication between storage systems is present in such data centers, but small asynchrony is possible (within a few minutes).

Before moving directly to the technology of disaster tolerance of IT services, I recall three “magic” words that determine the cost of any DR solution: RTO, RPO, RCO.

The first division we can draw between the full range of disaster tolerance IT solutions is whether they provide zero RPO or not. No data loss during a failure is provided by synchronous replication. Most often, this is done at the storage level, but it is possible to implement it at the DBMS or server level (using advanced LVM). In the first case, the server does not receive confirmation of the success of the recording from the storage system with which it works, until the storage system transferred this transaction to the second system and received confirmation from it that the recording was successful.

Synchronous replication can do 100% of storage related to the middle price segment and some entry-level systems from well-known vendors. The cost of licenses for synchronous replication on "simple" storage systems starts from several thousand dollars. Approximately the same cost of software for replication at the server level for 2-3 servers. If you do not have a functioning backup data center, do not forget to add the cost of purchasing backup equipment.

An RPO in a few minutes can provide asynchronous replication at the storage level, server volume management software (LVM - Logical volume manager), or DBMS. Until now, the standby copy of the database remains one of the most popular solutions for DR. Most often, the “log shipping” functionality, as it is called by DBMS administrators, is not licensed separately by the manufacturer. If you have a licensed database - replicate to health. The cost of asynchronous replication for servers and storage is no different from synchronous, see the previous paragraph.

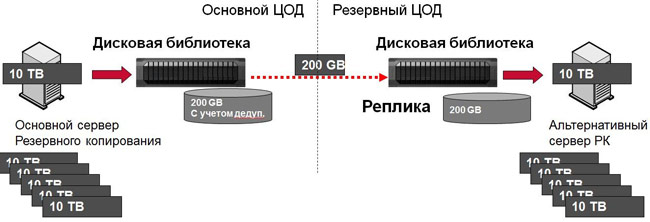

If we talk about RPO in a few hours, more often it is the replication of backups from one site to another. Most disk libraries can do this; some of the backup software also. As I said, with this option, deduplication will help a lot. You will not only load the channel less by transferring backups, but also do it much faster - each transferred backup will take tens or hundreds of times less time than in reality. On the other hand, we must remember that the first backup during deduplication should still transmit a lot of unique data to the system. You will see “real” deduplication after a weekly backup cycle. When synchronizing disk libraries - the same thing.

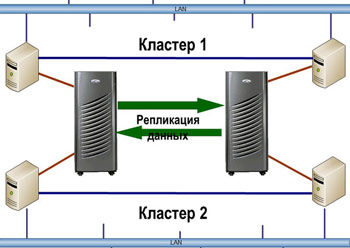

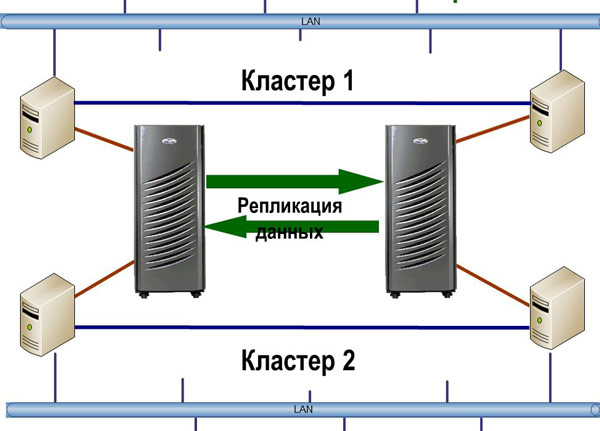

Backup synchronization between data centers

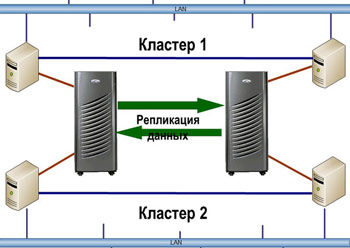

When the goal is to minimize recovery time ( RTO ), the process should be as documented and automated as possible. One of the best and most universal solutions is HA clusters with geographically spaced nodes. Most often, such solutions are built on the basis of storage replication, but other options are possible. Leading products in this area, for example, Symantec Veritas Cluster, include storage modules that switch replication direction when it is necessary to restart the service on the backup node. For less advanced clusters (for example, Microsoft Cluster Services, built-in on Windows), the major storage manufacturers (IBM, EMC, HP) offer add-ons, making disaster resistant from a regular HA-cluster.

Geographically distributed cluster

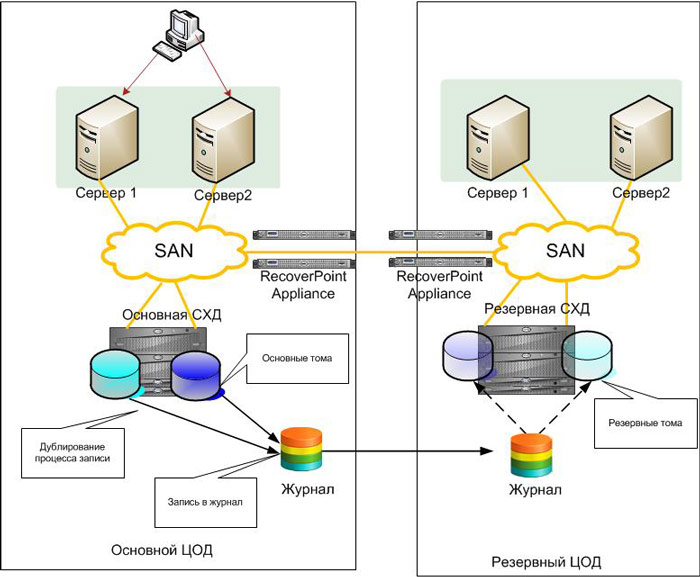

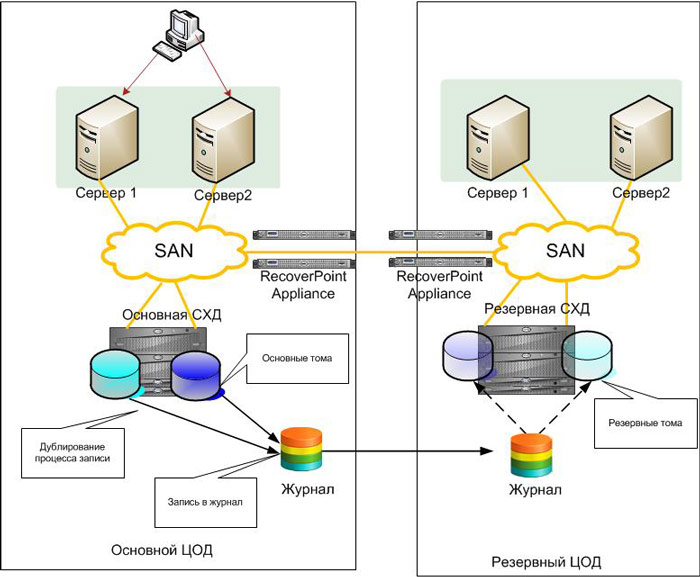

Few people think about an interesting feature of the overwhelming majority of data replication solutions - their "single-shot". You can get only one data state on the backup site. If the system with these data for some reason did not start, we proceed to plan “B”. Most often, this is a recovery from a backup with a lot of data loss. Of the technologies I have listed, the only exception is replication of the same backups. The answer here is to use the Continuous Data Protection class of solutions. Their essence is that all records coming from the server are marked and stored in a specific journal volume on the backup site. When restoring the system, you can select any point from this log and get the status not only at the time of the accident in which the data was corrupted, but also in a few seconds. Such solutions protect against an internal threat - data deletion by users. In the case of storage replication, it doesn’t matter what to transfer - an empty volume or your most critical database. When using CDP, you can choose the moment right before deleting the information and recover on it. CDP systems typically cost tens of thousands of dollars. One of the most successful examples, in my opinion, is EMC RecoverPoint.

Scheme of solutions based on RecoverPoint

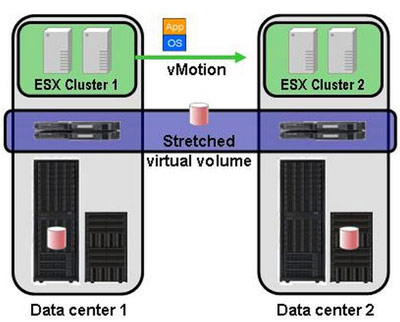

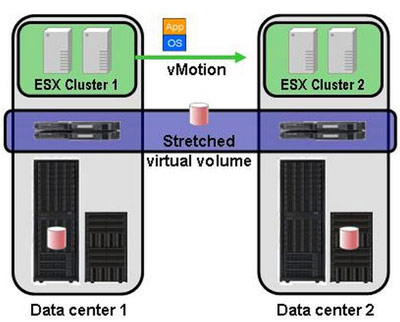

Recently, storage virtualization systems are gaining popularity. In addition to their main function - combining arrays of different vendors into a single resource pool - they can greatly help in organizing a distributed data center. The essence of storage virtualization is that between the servers and storage systems an intermediate layer of controllers appears, passing through all the traffic. Storage volumes are not presented directly to servers, but to these virtualizers. They, in turn, distribute to their hosts. In the virtualization layer, you can replicate data between different storage systems, and often there are more advanced features - snapshots, tiered storage, etc. Moreover, the most basic function of virtualizers is most necessary for DR purposes. If we have two storage systems in different data centers connected by an optical backbone, we take volumes from each of them and collect a “mirror” at the virtualizer level. As a result, we get one virtual volume for two data centers, which the servers see. If these servers are virtual - Live Migration of virtual machines starts working and you can transfer tasks between data centers on the go - users will not notice anything.

The complete loss of the data center will be worked out by a regular HA-cluster in an automatic mode in a few minutes. Perhaps the virtualization of distributed storage allows you to provide the minimum recovery time for most applications. For the DBMS there is an unsurpassed Oracle RAC and its analogues, but the cost makes you think. SAN virtualization is also not cheap, for small volumes of storage the cost of a solution can be less than $ 100K, but in most cases the price is higher. In my opinion, the most proven solution is the IBM SAN Volume Controller (SVC), the most technically advanced is EMC VPLEX.

By the way, if not all of your applications still live in a virtual environment, it is worth designing a backup data center for them in virtual machines. Firstly, it will be much cheaper, and secondly, by making it for a reserve, not far from the migration of the main systems under the control of some hypervisor ...

Competition in the outsourcing market of data centers makes renting a room in a provider’s data center more profitable than building and operating your own reserve center. If you host virtual infrastructure with him, there will be significant savings in rental payments. But outsourcing data centers are no longer at the top of progress. It’s better to build a backup infrastructure right in the cloud. In this case, data synchronization with the main systems can be ensured by server-level replication (there is an excellent DoubleTake family of solutions from Vision Solutions).

The last but very important point that should not be forgotten when designing a disaster-resistant IT infrastructure is user workstations. The fact that the database has risen does not mean the resumption of the business process. The user should be able to do their job. Even a full-fledged backup office, in which there are turned off computers for key employees, is not an ideal solution. A person at a lost workplace may have reference materials, macros, etc., full work without which it is impossible. For the most important users for the company, it seems reasonable to switch to virtual workstations (VDI). Then at the workplace (whether it is an ordinary PC or a fashionable “thin” client) no data is stored, it is used only as a terminal to reach Windows XP or Windows 7, running on a virtual machine in the data center. Access to such a workplace is easy to organize from home or from any computer in the branch network. For example, if you have several buildings and one of them is unavailable, key users can come to a neighboring office and take jobs “less key”. Then they calmly log in to the system, get into their virtual machine and the company comes to life!

In conclusion, here are the main questions to ask when evaluating a DR solution:

There are countless catastrophic solutions - both boxed and those that can be done practically with your own hands. Please share in the comments what you have and the stories of how these decisions helped you out. If you have any of the systems described above or their analogues working, leave feedback on how calmly you sleep when IT systems are protected by them.

- 93% of companies that lost their data center for 10 or more days due to a disaster went bankrupt within a year (National Archives & Records Administration in Washington)

- 140,000 hard drives fail every week in the US (Mozy Online Backup)

- 75% of companies do not have disaster recovery solutions (Forrester Research, Inc.)

- 34% of companies do not test backups.

- 77% of those who tested found unreadable drives in their libraries.

In previous posts (one and two) I wrote about organizational measures that speed up and simplify the restoration of IT systems and the related company processes in an emergency.

Now let's talk about the technical solutions that help with this. Their cost ranges from several thousand to hundreds of thousands of dollars.

High Availability and Disaster Recovery

HA (High Availability) and DR (Disaster Recovery) solutions are very often confused. First of all, when we talk about business continuity, we mean a reserve site; applied to IT, that is a backup data center. Business continuity is not about backing up to a library in an adjacent rack (although that is also very important). It is about the company's main building burning down and our being able to resume work within a few hours or days by deploying at a new location:

| High availability | Disaster recovery |
| --- | --- |
| Solution within a single data center | Includes several remote data centers |
| Recovery time under 30 minutes | Recovery can take hours or even days |
| Zero or near-zero data loss | Data loss can reach many hours |
| Requires quarterly testing | Requires annual testing |

Cold reserve

A cold reserve means that there is a server room into which you can bring equipment and deploy it. The recovery plan may call for purchasing the hardware when it is needed, or for keeping it in a warehouse. Keep in mind that most systems are supplied to order, and quickly finding dozens of servers, storage arrays, switches, and so on will be a non-trivial task. As an alternative to storing equipment yourself, you can consider keeping the most important or rarest equipment at your suppliers' warehouse. Telecommunication channels must be present at the site, but the contract with the provider is usually signed only after the decision to launch the "cold" data center has been made. Restoring operations in such a data center after a catastrophic failure of the main site may well take several weeks. Make sure that your company can survive those few weeks without IT and not lose the business (through revocation of a license or an unrecoverable cash gap, for example); I wrote about this earlier. Frankly, I would not recommend this option to anyone. Then again, perhaps I am exaggerating the role of IT in the business of some companies.

Warm reserve

A warm reserve means that we have an operating alternate site with active Internet and WAN links and basic telecommunication and computing infrastructure. It is always "weaker" than the main site in computing power, and some equipment may be missing there. The most important thing is that the site always holds an up-to-date backup of the data. The old-fashioned way is to ship backup tapes there regularly. The modern method is to replicate backups over the network from the main data center. Backup with deduplication lets you transfer backups quickly even over a "thin" link between data centers.

Hot reserve

Here it is, the choice of tough guys who support IT systems whose downtime of even a few hours brings the company huge losses. A hot reserve site has all the equipment needed for full operation of the IT systems. Typically, the foundation of such a site is a storage system onto which data from the main data center is mirrored synchronously or asynchronously. For the hot reserve to earn back the money invested in it when zero hour comes, regular test switchovers of the systems should be carried out, and the settings and OS versions of the servers at the main and backup sites must be kept constantly synchronized, manually or automatically.
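Keeping settings and OS versions in sync between the sites is easy to check mechanically. Below is a minimal Python sketch of such a drift check. The inventory format (a dictionary of settings per server) and all server names and values are hypothetical; in practice the data would come from whatever configuration management tool you use.

```python
def find_drift(main, backup):
    """Return {server: {setting: (main_value, backup_value)}} for every mismatch.

    Both arguments are hypothetical inventories: {server_name: {setting: value}}.
    Only servers listed for the main site are checked.
    """
    drift = {}
    for server, main_cfg in main.items():
        backup_cfg = backup.get(server, {})
        diffs = {
            key: (main_cfg.get(key), backup_cfg.get(key))
            for key in main_cfg.keys() | backup_cfg.keys()
            if main_cfg.get(key) != backup_cfg.get(key)
        }
        if diffs:
            drift[server] = diffs
    return drift


# Illustrative data only: the backup database server lags one OS release behind.
main_site = {
    "db01": {"os": "RHEL 6.2", "db_version": "11.2.0.3"},
    "app01": {"os": "RHEL 6.2"},
}
backup_site = {
    "db01": {"os": "RHEL 6.1", "db_version": "11.2.0.3"},
    "app01": {"os": "RHEL 6.2"},
}

drift = find_drift(main_site, backup_site)
print(drift)
```

Running a check like this on a schedule, and before every test switchover, turns "constantly synchronized" from a wish into something you can verify.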

The downside of hot and warm reserves is that expensive equipment sits idle waiting for a disaster. The way out of this situation is a distributed data center strategy. With this option, two (or more) sites are equal: most applications can run at either one. This lets you use the capacity of all the equipment and balance the load. On the other hand, the requirements for automating the transfer of IT services between data centers rise sharply. If both data centers are in production, the business has a right to expect that, ahead of an anticipated peak load on one of the applications, it can be moved quickly to the less loaded data center. Most often such data centers use synchronous replication between storage systems, but a small lag (within a few minutes) is acceptable.

Three magic words

Before moving on to the technologies that make IT services disaster-tolerant, let me recall three "magic" terms that determine the cost of any DR solution: RTO, RPO, and RCO.

- RTO (Recovery time objective) - the time within which an IT system must be restored

- RPO (Recovery point objective) - how much data may be lost during disaster recovery

- RCO (Recovery capacity objective) - what share of the load the backup system must carry. This indicator can be measured as a percentage, in IT system transactions, or in other units.
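To make the three terms concrete, here is a small Python sketch that compares the results of one test switchover against the objectives. The class and function names, the timestamps, and the transaction rates are all made up for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Objectives:
    rto: timedelta  # maximum acceptable time to restore the service
    rpo: timedelta  # maximum acceptable window of lost data
    rco: float      # minimum share of normal load the backup site must carry (0..1)


def evaluate_drill(obj, failure_at, last_replicated_at, service_restored_at,
                   normal_tps, backup_tps):
    """Compare what one drill actually achieved with the stated objectives."""
    achieved_rto = service_restored_at - failure_at
    achieved_rpo = failure_at - last_replicated_at
    achieved_rco = backup_tps / normal_tps
    return {
        "rto_met": achieved_rto <= obj.rto,
        "rpo_met": achieved_rpo <= obj.rpo,
        "rco_met": achieved_rco >= obj.rco,
    }


failure = datetime(2024, 1, 1, 12, 0)
report = evaluate_drill(
    Objectives(rto=timedelta(hours=4), rpo=timedelta(minutes=15), rco=0.5),
    failure_at=failure,
    last_replicated_at=failure - timedelta(minutes=10),   # 10 minutes of data lost
    service_restored_at=failure + timedelta(hours=5),     # service back after 5 hours
    normal_tps=1000,
    backup_tps=600,                                       # backup site carries 60%
)
print(report)
```

In this made-up drill the RPO and RCO objectives are met, but the five-hour recovery misses the four-hour RTO.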

RPO

The first dividing line we can draw across the full range of disaster-tolerant IT solutions is whether or not they provide zero RPO. Zero data loss during a failure is provided by synchronous replication. Most often this is done at the storage level, but it can also be implemented at the DBMS level or at the server level (using an advanced LVM). In the storage-level case, the server does not receive a write acknowledgement from its storage system until that system has passed the write to the second system and received confirmation that it succeeded there.
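The difference between the two acknowledgement schemes can be shown with a toy model. This Python sketch is only an illustration under simplifying assumptions (no network, no failures in the middle of a write); the class names are invented and do not correspond to any vendor's API.

```python
class Array:
    """A toy storage array: an ordered list of committed writes."""

    def __init__(self):
        self.blocks = []

    def write(self, data):
        self.blocks.append(data)
        return True  # acknowledgement


class ReplicatedVolume:
    def __init__(self, primary, secondary, synchronous):
        self.primary = primary
        self.secondary = secondary
        self.synchronous = synchronous
        self.pending = []  # writes not yet sent to the secondary (async mode only)

    def write(self, data):
        self.primary.write(data)
        if self.synchronous:
            # The server is acknowledged only after the remote array confirms.
            return self.secondary.write(data)
        # Asynchronous: acknowledge at once, ship the write later.
        self.pending.append(data)
        return True

    def flush(self):
        """Ship all queued writes to the secondary array."""
        while self.pending:
            self.secondary.write(self.pending.pop(0))


def lost_writes(volume):
    """Acknowledged writes that would be missing if the primary site died now."""
    return len(volume.primary.blocks) - len(volume.secondary.blocks)


sync_vol = ReplicatedVolume(Array(), Array(), synchronous=True)
for i in range(3):
    sync_vol.write(f"tx{i}")

async_vol = ReplicatedVolume(Array(), Array(), synchronous=False)
for i in range(3):
    async_vol.write(f"tx{i}")
async_vol.flush()            # a replication cycle completes
async_vol.write("tx3")
async_vol.write("tx4")       # the disaster strikes before the next cycle

print(lost_writes(sync_vol), lost_writes(async_vol))
```

The synchronous volume never acknowledges a write that the second site lacks, which is what zero RPO means; the price is that every write waits for the round trip between sites.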

Synchronous replication is supported by all midrange storage systems and by some entry-level systems from well-known vendors. Licenses for synchronous replication on "simple" storage systems start at several thousand dollars. Server-level replication software for 2-3 servers costs about the same. If you do not have a functioning backup data center, do not forget to add the cost of purchasing the backup equipment.

An RPO of a few minutes can be provided by asynchronous replication at the storage level, by server volume management software (LVM, Logical Volume Manager), or by the DBMS. A standby copy of the database is still one of the most popular DR solutions. Most often, the "log shipping" functionality, as DBMS administrators call it, is not licensed separately by the vendor. If you have a licensed database, replicate to your heart's content. The cost of asynchronous replication for servers and storage is no different from that of synchronous replication; see the previous paragraph.

If we are talking about an RPO of a few hours, it is most often replication of backups from one site to another. Most disk libraries can do this, as can some backup software. As I said, deduplication helps a lot with this option. You not only load the link less when transferring backups, but also do it much faster: each backup transfers in a tenth or a hundredth of the time the full data would take. On the other hand, remember that the first backup with deduplication still has to send a large amount of unique data. You will see "real" deduplication after a weekly backup cycle. The same applies when synchronizing disk libraries.
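Why the first backup is expensive and later ones are cheap is easy to see in a toy model of deduplicated replication. The Python sketch below assumes fixed-size chunks identified by SHA-256 hashes; real products use their own chunking and indexing schemes, so treat this purely as an illustration.

```python
import hashlib

CHUNK_SIZE = 4096  # assumed fixed chunk size, for illustration only


def replicate(backup: bytes, remote_store: dict) -> int:
    """Send only chunks the remote site does not already hold; return bytes sent."""
    sent = 0
    for offset in range(0, len(backup), CHUNK_SIZE):
        chunk = backup[offset:offset + CHUNK_SIZE]
        digest = hashlib.sha256(chunk).hexdigest()
        if digest not in remote_store:
            remote_store[digest] = chunk
            sent += len(chunk)
    return sent


remote = {}

# Monday's backup: 100 distinct chunks of synthetic data.
monday = b"".join(i.to_bytes(2, "big") * (CHUNK_SIZE // 2) for i in range(100))
# Tuesday's backup: the same data with only the last chunk changed.
tuesday = monday[:CHUNK_SIZE * 99] + b"\xff" * CHUNK_SIZE

first = replicate(monday, remote)    # everything is new to the remote site
second = replicate(tuesday, remote)  # only the changed chunk crosses the link
print(first, second)
```

Here the second transfer is one hundredth the size of the first, which is the effect described above: the link carries only the data the backup site has not seen yet.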

[Figure: Backup synchronization between data centers]

RTO

When the goal is to minimize recovery time (RTO), the process should be documented and automated as far as possible. One of the best and most universal solutions is an HA cluster with geographically dispersed nodes. Most often such solutions are built on storage replication, but other options are possible. Leading products in this area, for example Symantec Veritas Cluster, include storage modules that switch the replication direction when the service has to be restarted on the backup node. For less advanced clusters (for example, Microsoft Cluster Services, built into Windows), the major storage vendors (IBM, EMC, HP) offer add-ons that turn a regular HA cluster into a disaster-tolerant one.

[Figure: Geographically distributed cluster]

Few people think about an interesting feature of the overwhelming majority of data replication solutions: they are "single-shot". You get only one state of the data at the backup site. If the system fails to start from that data for some reason, we move to plan B, which most often is a restore from backup with a large loss of data. Of the technologies I have listed, the only exception is replication of the backups themselves. The answer here is the Continuous Data Protection (CDP) class of solutions. Their essence is that every write coming from the server is tagged and stored in a dedicated journal volume at the backup site. When restoring the system, you can pick any point in this journal and get the state of the data not only at the moment of the failure that corrupted it, but also a few seconds earlier. Such solutions also protect against an internal threat: deletion of data by users. Plain storage replication does not care what it transfers, an emptied volume or your most critical database. With CDP you can choose the moment just before the information was deleted and recover to it. CDP systems typically cost tens of thousands of dollars. One of the most successful examples, in my opinion, is EMC RecoverPoint.
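The journal idea can be sketched in a few lines of Python. This is a simplified model, not how RecoverPoint or any other product is implemented: it assumes every write carries a monotonically increasing timestamp, and it represents a deletion as a write of `None`. All names are hypothetical.

```python
import bisect


class CdpJournal:
    """A toy write journal kept at the backup site."""

    def __init__(self):
        self.timestamps = []  # assumed to arrive in non-decreasing order
        self.entries = []     # (block_id, data); data=None models a deletion

    def record(self, ts, block_id, data):
        self.timestamps.append(ts)
        self.entries.append((block_id, data))

    def restore(self, point_in_time):
        """Rebuild the volume as of point_in_time by replaying the journal."""
        upto = bisect.bisect_right(self.timestamps, point_in_time)
        volume = {}
        for block_id, data in self.entries[:upto]:
            if data is None:
                volume.pop(block_id, None)
            else:
                volume[block_id] = data
        return volume


journal = CdpJournal()
journal.record(1, "orders", "v1")
journal.record(2, "orders", "v2")
journal.record(3, "orders", None)   # a user deletes the data

latest = journal.restore(3)         # what plain replication would give you
before_delete = journal.restore(2)  # the moment just before the deletion
print(latest, before_delete)
```

Plain replication corresponds to always restoring the latest point, deletion included; the journal is what lets you step back to the state just before it.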

[Figure: Scheme of solutions based on RecoverPoint]

Storage virtualization systems have been gaining popularity recently. In addition to their main function, combining arrays from different vendors into a single resource pool, they can greatly help in organizing a distributed data center. The essence of storage virtualization is that an intermediate layer of controllers appears between the servers and the storage systems, and all traffic passes through it. Storage volumes are presented not directly to the servers but to these virtualizers, which in turn present them to the hosts. In the virtualization layer you can replicate data between different storage systems, and there are often more advanced features as well: snapshots, tiered storage, and so on. Yet it is the most basic function of virtualizers that matters most for DR. If we have two storage systems in different data centers connected by an optical backbone, we take a volume from each of them and assemble a "mirror" at the virtualizer level. As a result, we get one virtual volume spanning two data centers, and this is what the servers see. If these servers are virtual, Live Migration of virtual machines starts working and you can move workloads between data centers on the fly; users will not notice anything.
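The "mirror at the virtualizer level" can be pictured with a toy Python model. It deliberately ignores everything that makes real products hard (resynchronization after an array returns, split-brain protection, caching); the class names are invented for this sketch.

```python
class SiteArray:
    """A toy storage array located in one data center."""

    def __init__(self, name):
        self.name = name
        self.online = True
        self.blocks = {}


class MirroredVirtualVolume:
    """One virtual volume assembled from a volume on each site's array."""

    def __init__(self, *arrays):
        self.arrays = arrays

    def write(self, block_id, data):
        online = [a for a in self.arrays if a.online]
        if not online:
            raise OSError("no array available")
        for array in online:  # every surviving mirror leg receives the write
            array.blocks[block_id] = data

    def read(self, block_id):
        for array in self.arrays:
            if array.online and block_id in array.blocks:
                return array.blocks[block_id]
        raise OSError(f"block {block_id!r} is unavailable")


dc1, dc2 = SiteArray("dc1"), SiteArray("dc2")
volume = MirroredVirtualVolume(dc1, dc2)

volume.write("b1", "x")
dc1.online = False            # the first data center is lost
volume.write("b2", "y")       # servers keep writing to the same virtual volume
print(volume.read("b1"), volume.read("b2"))
```

The servers address a single volume throughout; which physical array answers is the virtualizer's concern, and that is what lets the loss of one site go unnoticed by the hosts.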

A complete loss of a data center is then handled automatically by a regular HA cluster within a few minutes. Virtualization of distributed storage probably gives the shortest recovery time for most applications. For DBMSs there is the unsurpassed Oracle RAC and its analogues, but the cost gives you pause. SAN virtualization is not cheap either: for small storage volumes a solution can cost less than $100K, but in most cases the price is higher. In my opinion, the most proven solution is the IBM SAN Volume Controller (SVC), and the most technically advanced is EMC VPLEX.

By the way, if not all of your applications live in a virtual environment yet, it is worth designing the backup data center for them on virtual machines. First, it will be much cheaper; second, once you have done it for the reserve, migrating the main systems to a hypervisor is not far off...

Competition in the data center outsourcing market makes renting space in a provider's data center more cost-effective than building and operating your own reserve site. If you host virtual infrastructure with the provider, you will save significantly on rental payments. But outsourced data centers are no longer the cutting edge. It is better to build the backup infrastructure right in the cloud. In this case, data synchronization with the main systems can be provided by server-level replication (there is an excellent Double-Take family of solutions from Vision Solutions).

The last but very important point that must not be forgotten when designing a disaster-tolerant IT infrastructure is user workstations. The fact that the database is back up does not mean that the business process has resumed. Users must be able to do their jobs. Even a full-fledged backup office with powered-off computers waiting for key employees is not an ideal solution. A lost workstation may have held reference materials, macros, and other things without which a person cannot work properly. For the users most important to the company, it seems reasonable to switch to virtual workstations (VDI). Then no data is stored at the workplace (whether it is an ordinary PC or a fashionable "thin" client); it is used only as a terminal to reach Windows XP or Windows 7 running on a virtual machine in the data center. Access to such a workstation is easy to arrange from home or from any computer in the branch network. For example, if you have several buildings and one of them is unavailable, key users can come to a neighboring office and take over the workplaces of "less key" colleagues. Then they calmly log in, get into their virtual machines, and the company comes back to life!

In conclusion, here are the main questions to ask when evaluating a DR solution:

- What failures does it protect against?

- What RPO / RTO / RCO does it provide?

- How much does it cost?

- How difficult is it to operate?

There are countless disaster-tolerance solutions, both boxed products and ones you can build practically with your own hands. Please share in the comments what you use and the stories of how these solutions have saved you. If you run any of the systems described above or their analogues, leave feedback on how soundly you sleep with your IT systems protected by them.