Naive Bayes, or how mathematics allows you to filter spam

Hello! In this article I will talk about the Bayes classifier, as one of the options for filtering spam emails. Let's go through the theory, then we fix it with practice, well, and at the end I will provide my sketch of the code in my adored R. language. I will try to present as easy as possible expressions and formulations. Let's get started!

Bayesian classifier belongs to the category of machine learning. The essence is this: the system, which is faced with the task of determining whether the next letter is spam, is pre-trained by some number of letters exactly known where "spam" and where "not spam". It has already become clear that this is training with a teacher, where we act as a teacher. The Bayesian classifier presents a document (in our case, a letter) in the form of a set of words that are supposedly independent of each other (this is the naivety from here).

It is necessary to calculate the grade for each class (spam / not spam) and choose the one that turned out to be the maximum. To do this, use the following formula:

- occurrence of the word

- occurrence of the word  in class document

in class document  (with smoothing) *

(with smoothing) *

- the number of words included in the document class

- the number of words included in the document class

M - the number of words from the training set

- the number of occurrences of the word

- the number of occurrences of the word  in class document

in class document

- parameter for smoothing

- parameter for smoothing

When the volume of text is very large, you have to work with very small numbers. In order to avoid this, you can convert the formula by the logarithm property **:

Substitute and get:

* During the calculation, you may encounter a word that was not at the stage of learning the system. This can lead to the fact that the assessment will be equal to zero and the document can not be attributed to any of the categories (spam / not spam). No matter how you want, you will not teach your system all the possible words. To do this, you need to apply anti-aliasing, or rather, to make small corrections in all the probabilities of words entering the document. The parameter 0 <α≤1 is selected (if α = 1, then this is Laplace smoothing)

** Logarithm is a monotonically increasing function. As can be seen from the first formula - we are looking for a maximum. The logarithm of the function will reach a maximum at the same point (on the x-axis) as the function itself. This simplifies the calculation, since only the numerical value changes.

Let our system learn from the following letters, which are known in advance where “spam” and where “not spam” (training set):

Spam:

Do not spam:

Task: determine which category the following letter belongs to:

Solution: We make the

table. We remove all the "stop words", we calculate the probabilities, the parameter for smoothing is taken as one.

Score for the category "Spam":

Rating for the category "Not spam":

Answer: “Not Spam” rating is more than “Spam” rating. So the verification letter is not spam!

The same is calculated using the function transformed by the logarithm property:

Evaluation for the category “Spam”:

Rating for the category "Not spam":

Answer: similar to the previous answer. Verification letter - not spam!

I commented on almost every action of my own, for I know how sometimes I don’t want to understand someone else’s code, so I hope reading mine will not cause you any difficulty. (oh, how I hope)

Thank you very much for taking the time to read my article. I hope you have learned something new for yourself, or just shed light on the moments that are incomprehensible to you. Good luck!

Sources:

Without formulas anywhere, well, a brief theory

Bayesian classifier belongs to the category of machine learning. The essence is this: the system, which is faced with the task of determining whether the next letter is spam, is pre-trained by some number of letters exactly known where "spam" and where "not spam". It has already become clear that this is training with a teacher, where we act as a teacher. The Bayesian classifier presents a document (in our case, a letter) in the form of a set of words that are supposedly independent of each other (this is the naivety from here).

It is necessary to calculate the grade for each class (spam / not spam) and choose the one that turned out to be the maximum. To do this, use the following formula:

M - the number of words from the training set

When the volume of text is very large, you have to work with very small numbers. In order to avoid this, you can convert the formula by the logarithm property **:

Substitute and get:

* During the calculation, you may encounter a word that was not at the stage of learning the system. This can lead to the fact that the assessment will be equal to zero and the document can not be attributed to any of the categories (spam / not spam). No matter how you want, you will not teach your system all the possible words. To do this, you need to apply anti-aliasing, or rather, to make small corrections in all the probabilities of words entering the document. The parameter 0 <α≤1 is selected (if α = 1, then this is Laplace smoothing)

** Logarithm is a monotonically increasing function. As can be seen from the first formula - we are looking for a maximum. The logarithm of the function will reach a maximum at the same point (on the x-axis) as the function itself. This simplifies the calculation, since only the numerical value changes.

From theory to practice

Let our system learn from the following letters, which are known in advance where “spam” and where “not spam” (training set):

Spam:

- “Vouchers at a low price”

- "Stock! Buy a chocolate bar and get a phone as a gift »

Do not spam:

- "Tomorrow will be a meeting"

- "Buy a kilo of apples and a chocolate bar"

Task: determine which category the following letter belongs to:

- “There is a mountain of apples in the store. Buy seven kilograms and chocolate "

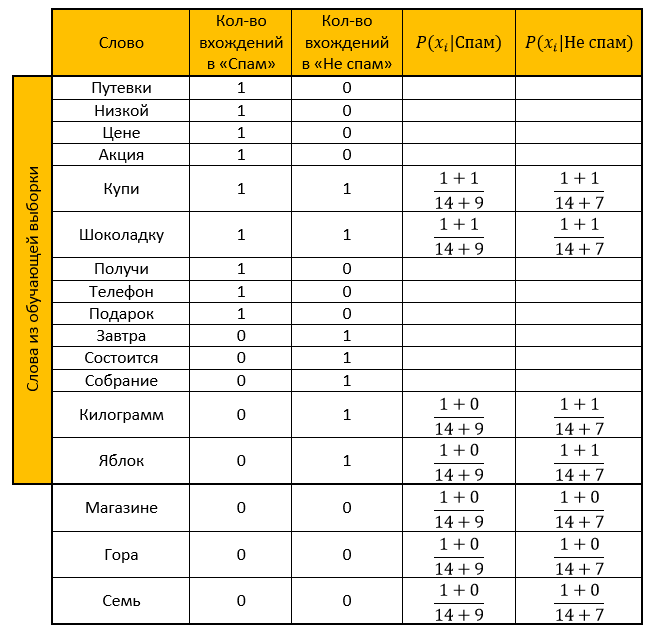

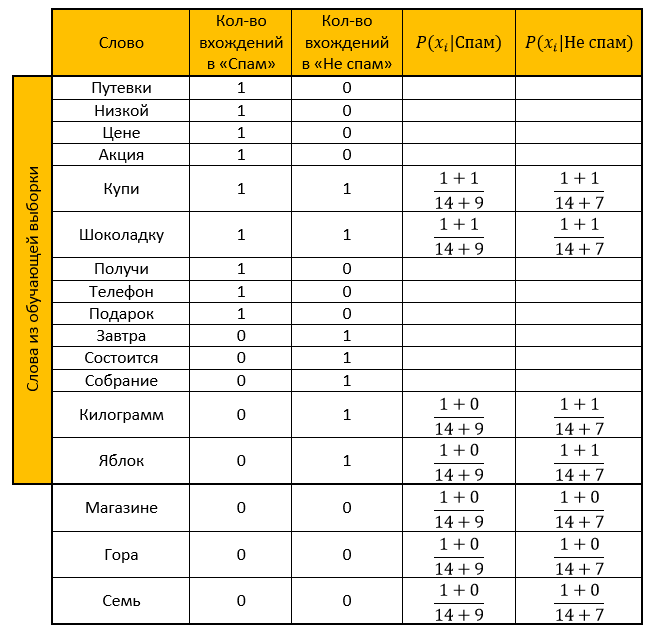

Solution: We make the

table. We remove all the "stop words", we calculate the probabilities, the parameter for smoothing is taken as one.

Score for the category "Spam":

Rating for the category "Not spam":

Answer: “Not Spam” rating is more than “Spam” rating. So the verification letter is not spam!

The same is calculated using the function transformed by the logarithm property:

Evaluation for the category “Spam”:

Rating for the category "Not spam":

Answer: similar to the previous answer. Verification letter - not spam!

Implementation in the R programming language

I commented on almost every action of my own, for I know how sometimes I don’t want to understand someone else’s code, so I hope reading mine will not cause you any difficulty. (oh, how I hope)

And here, in fact, the code itself

library("tm") #Библиотека для stopwords

library("stringr") #Библиотека для работы со строками

#Обучаюшая выборка со спам письмами:spam <- c(

'Путевки по низкой цене',

'Акция! Купи шоколадку и получи телефон в подарок'

)

#Обучающая выборка с не спам письмами:not_spam <- c(

'Завтра состоится собрание',

'Купи килограмм яблок и шоколадку'

)

#Письмо требующее проверкиtest_letter <- "В магазине гора яблок. Купи семь килограмм и шоколадку"#----------------Для спама--------------------#Убираем все знаки препинанияspam <- str_replace_all(spam, "[[:punct:]]", "")

#Делаем все маленьким регистромspam <- tolower(spam)

#Разбиваем слова по пробелуspam_words <- unlist(strsplit(spam, " "))

#Убираем слова, которые совпадают со словами из stopwordsspam_words <- spam_words[! spam_words %in% stopwords("ru")]

#Создаем таблицу с уникальными словами и их количествомunique_words <- table(spam_words)

#Создаем data framemain_table <- data.frame(u_words=unique_words)#Переименовываем столбцыnames(main_table) <- c("Слова","Спам")

#---------------Для не спама------------------not_spam <- str_replace_all(not_spam, "[[:punct:]]", "")

not_spam <- tolower(not_spam)

not_spam_words <- unlist(strsplit(not_spam, " "))

not_spam_words <- not_spam_words[! not_spam_words %in% stopwords("ru")]

#---------------Для проверки------------------test_letter <- str_replace_all(test_letter, "[[:punct:]]", "")

test_letter <- tolower(test_letter)

test_letter <- unlist(strsplit(test_letter, " "))

test_letter <- test_letter[! test_letter %in% stopwords("ru")]

#---------------------------------------------#Создаем новый столбик для подсчета не спам писемmain_table$Не_спам <- 0for(i in1:length(not_spam_words)){

#Создаем логическую переменную

need_word <- TRUE

for(j in1:(nrow(main_table))){

#Если "не спам" слово существует, то к счетчику уникальных слов +1if(not_spam_words[i]==main_table[j,1])

{

main_table$Не_спам[j] <- main_table$Не_спам[j]+1

need_word <- FALSE

}

}

#Если слово не встречалось еще, то добавляем его в конец data frame и создаем счетчикиif(need_word==TRUE)

{

main_table <- rbind(main_table,data.frame(Слова=not_spam_words[i],Спам=0,Не_спам=1))

}

}

#-------------#Создаем столбик содержащий вероятности того, что выбранное слово - спамmain_table$Вероятность_спам <- NA#Создаем столбик содержащий вероятности того, что выбранное слово - не спамmain_table$Вероятность_не_спам <- NA#-------------#Создаем функцию подсчета вероятности вхождения слова Xi в документ класса Qkformula_1 <- function(N_ik,M,N_k)

{

(1+N_ik)/(M+N_k)

}

#-------------#Считаем количество слов из обучающей выборкиquantity <- nrow(main_table)

for(i in1:length(test_letter))

{

#Используем ту же логическую переменную, чтобы не создавать новую

need_word <- TRUE

for(j in1:nrow(main_table))

{

#Если слово из проверочного письма уже существует в нашей выборке то считаем вероятность каждой категорииif(test_letter[i]==main_table$Слова[j])

{

main_table$Вероятность_спам[j] <- formula_1(main_table$Спам[j],quantity,sum(main_table$Спам))

main_table$Вероятность_не_спам[j] <- formula_1(main_table$Не_спам[j],quantity,sum(main_table$Не_спам))

need_word <- FALSE

}

}

#Если слова нет, то добавляем его в конец data frame, и считаем вероятность спама/не спамаif(need_word==TRUE)

{

main_table <- rbind(main_table,data.frame(Слова=test_letter[i],Спам=0,Не_спам=0,Вероятность_спам=NA,Вероятность_не_спам=NA))

main_table$Вероятность_спам[nrow(main_table)] <- formula_1(main_table$Спам[nrow(main_table)],quantity,sum(main_table$Спам))

main_table$Вероятность_не_спам[nrow(main_table)] <- formula_1(main_table$Не_спам[nrow(main_table)],quantity,sum(main_table$Не_спам))

}

}

#Переменная для подсчета оценки класса "Спам"probability_spam <- 1#Переменная для подсчета оценки класса "Не спам"probability_not_spam <- 1for(i in1:nrow(main_table))

{

if(!is.na(main_table$Вероятность_спам[i]))

{

#Шаг 1.1 Определяем оценку того, что письмо - спам

probability_spam <- probability_spam * main_table$Вероятность_спам[i]

}

if(!is.na(main_table$Вероятность_не_спам[i]))

{

#Шаг 1.2 Определяем оценку того, что письмо - не спам

probability_not_spam <- probability_not_spam * main_table$Вероятность_не_спам[i]

}

}

#Шаг 2.1 Определяем оценку того, что письмо - спамprobability_spam <- (length(spam)/(length(spam)+length(not_spam)))*probability_spam

#Шаг 2.2 Определяем оценку того, что письмо - не спамprobability_not_spam <- (length(not_spam)/(length(spam)+length(not_spam)))*probability_not_spam

#Чья оценка больше - тот и победилifelse(probability_spam>probability_not_spam,"Это сообщение - спам!","Это сообщение - не спам!")

Thank you very much for taking the time to read my article. I hope you have learned something new for yourself, or just shed light on the moments that are incomprehensible to you. Good luck!

Sources:

- Very good article on the naive Beyes classifier

- Drew knowledge from the Wiki: here , here , and here

- Lectures on Data Mining I. Chubukova