How we test at Autoteka: mind maps, static code analysis, and MockServer

Hello! I want to tell you how testing is organized at Autoteka, a service that checks a car's history by VIN. Below I cover the tools we use to test requirements and plan sprints, and how the testing process on our project is set up.

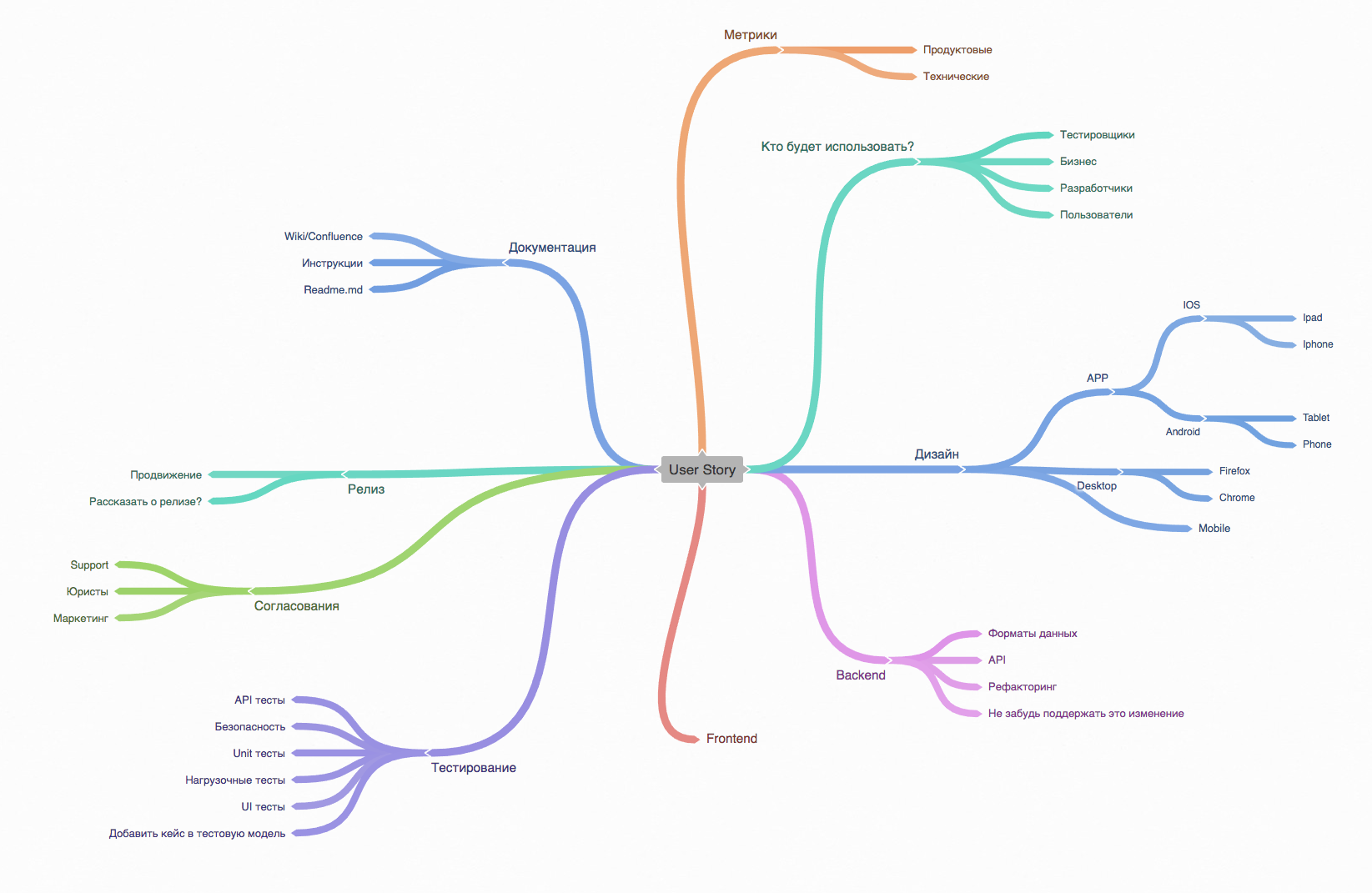

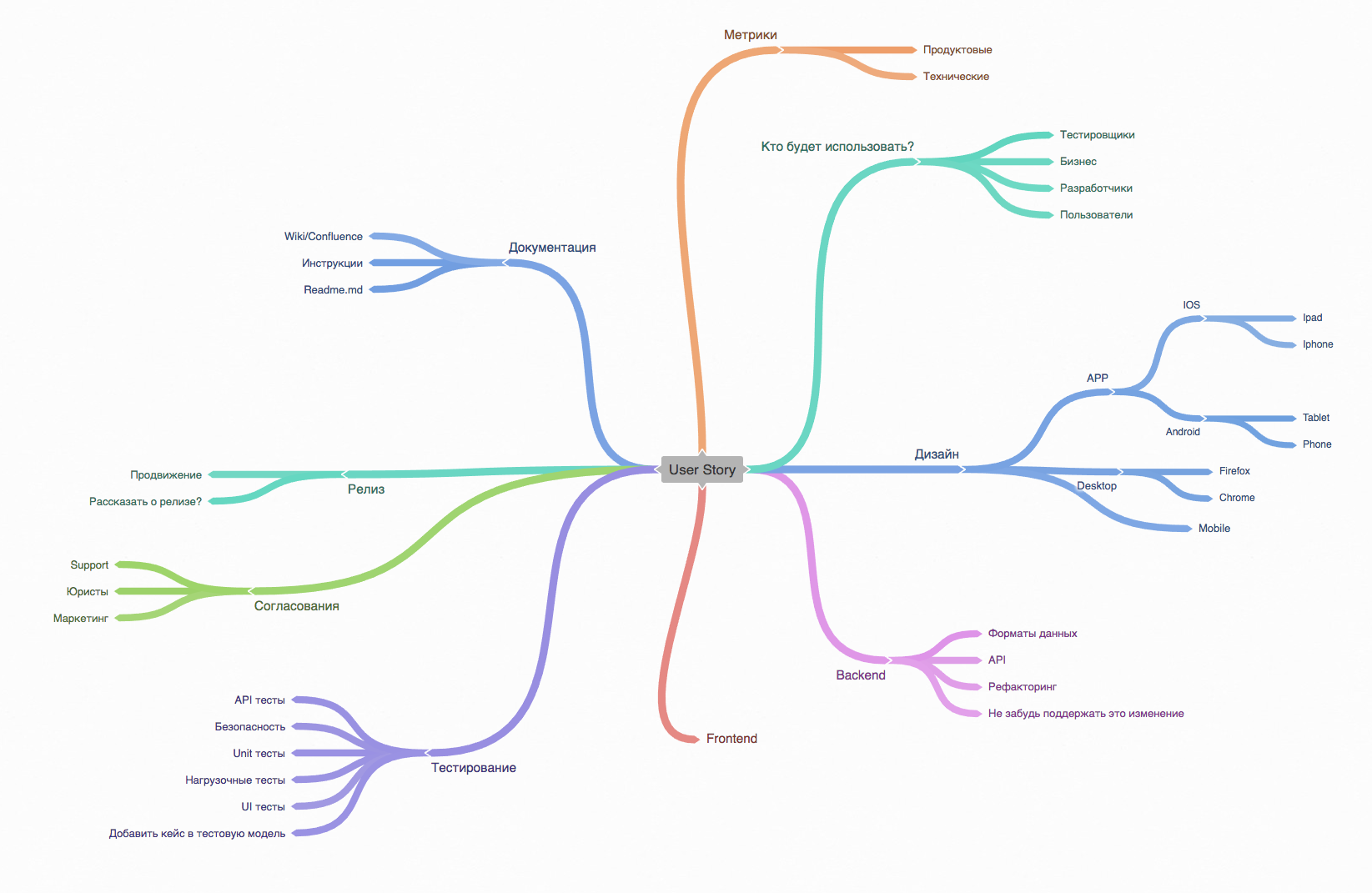

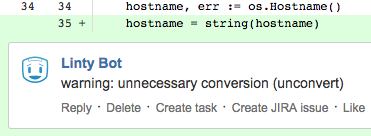

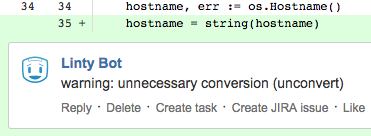

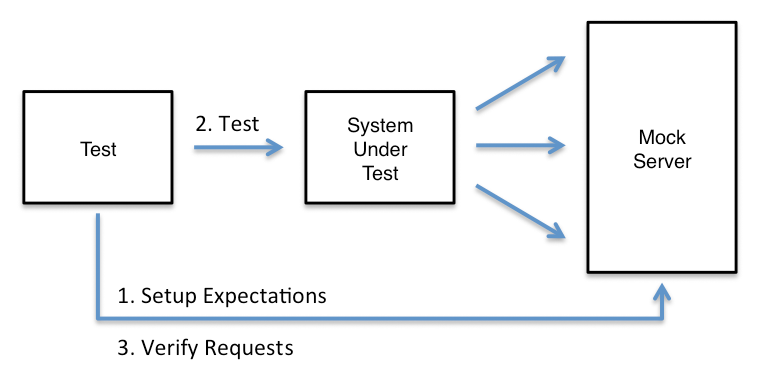

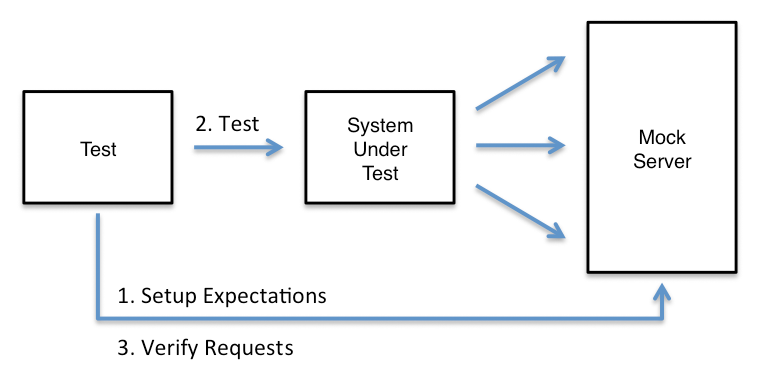

Mind maps for grooming tasks

At Autoteka we use Scrum, since it is the methodology that fits our tasks best. We hold weekly meetings where we prioritize backlog tasks, estimate their complexity, decompose them, and set a Definition of Ready and a Definition of Done for each one (you can read about them in this wonderful article). This process is called backlog grooming.

Effective grooming requires taking all dependencies into account: knowing how implementing a task could adversely affect the project, and understanding which functionality must be supported and which can be cut. For example, implementing a task may break the API for partners, or you may simply need to remember to add metrics that show business efficiency. As any project grows, such dependencies multiply, and it becomes harder and harder to keep track of them all. That is a problem: the support team needs to learn about every new feature in time, and sometimes changes have to be coordinated with the marketing department.

As a result, I proposed a solution based on a mind map that captures almost all the dependencies that can affect the DoD, the DoR, and task estimation.

The advantage of this approach is a visual, hierarchical representation of all possible dependencies, plus extras such as icons, text highlighting, and colored branches. The entire team has access to the mind map, which helps keep it up to date. I have posted a blank template of such a map here that you can use as a starting point. (A caveat up front: it is only a guideline, and using it for your own tasks without adapting it to your project is a questionable idea.)

Linty and static code analysis for Go

Our project contains a fairly large amount of Go code, and to keep the code style consistent with certain standards we decided to apply static code analysis. There is a great article on Habr about what that is.

We wanted to build the analyzer into the CI process so that it runs on every build and, depending on the results of the analysis, the build either continues or fails with errors. Running gometalinter as a separate build step in TeamCity would have been a reasonable solution, but reading errors in the build logs is not very convenient.

We kept looking and found Linty Bot, developed at an Avito hackathon by Artemy Flaker Ryabinkov.

The bot watches the project code in our version control system and runs the analyzer on the diff of each pull request. If the analysis finds errors, the bot posts a comment on the PR at the relevant line of code. Its advantages are how quickly it can be connected to a project, its speed, its pull request comments, and its use of the fairly popular gometalinter, which already includes all the necessary checks by default.
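The article does not describe how Linty is implemented, so the following is only a minimal sketch of the general idea of diff-based linting: keep the warnings that point at lines changed in the pull request and drop the rest. The class name, the `path:line` keys, and the assumed `path:line:column:severity: message` warning format are illustrative assumptions, not Linty's actual code.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper, not part of Linty or gometalinter.
class DiffLintSketch {

    // Assumed warning format: path:line:column:severity: message (the column may be empty).
    private static final Pattern WARNING =
            Pattern.compile("^([^:]+):(\\d+):(\\d*):(\\w+): (.*)$");

    // Returns only the warnings located on changed lines.
    // changedLines holds keys of the form "path:line" taken from the pull request diff.
    static List<String> warningsOnChangedLines(List<String> linterOutput, Set<String> changedLines) {
        List<String> result = new ArrayList<>();
        for (String line : linterOutput) {
            Matcher m = WARNING.matcher(line);
            if (m.matches() && changedLines.contains(m.group(1) + ":" + m.group(2))) {
                result.add(line);
            }
        }
        return result;
    }
}
```

Each warning that survives the filter already carries the file and line a review comment would be attached to, which is what makes per-line PR comments possible.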

MockServer, or how to make services return what you need

The next topic is test stability. Autoteka depends heavily on data sources (data comes from dealers, government services, service stations, insurance companies, and other partners), but an outage in one of them cannot be a reason to skip testing.

We have to check report assembly both when the sources work and when they are down. Until recently we used real data sources in the dev environment and therefore depended on their state. In effect, our UI tests were indirectly checking those sources. As a result, the tests were unstable: they failed whenever a source failed, and they had to wait while the data sources were polled, which did not help the speed of the automated tests.

My first idea was to write my own mock to stand in for Autoteka's sources. In the end we found a simpler solution: MockServer, a ready-made open-source tool written in Java.

How it works:

- create an expectation,
- match incoming requests,
- if a match is found, send the response.

An example of creating an expectation with the Java client:

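```java
// MockServerClient's package depends on the MockServer version, so its import is omitted here.
import static org.mockserver.model.HttpRequest.request;
import static org.mockserver.model.HttpResponse.response;

// Register an expectation on a MockServer instance listening on localhost:1080.
new MockServerClient("localhost", 1080)
    .when(
        // Match a POST to /login with this body...
        request()
            .withMethod("POST")
            .withPath("/login")
            .withBody("{username: 'foo', password: 'bar'}")
    )
    .respond(
        // ...and answer with a redirect that sets a session cookie.
        response()
            .withStatusCode(302)
            .withCookie(
                "sessionId", "2By8LOhBmaW5nZXJwcmludCIlMDAzMW"
            )
            .withHeader(
                "Location", "https://www.mock-server.com"
            )
    );
```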

As the example shows, we describe the request we will send and the response we want to receive. MockServer receives a request, compares it with the expectations that were created, and returns the configured response if one matches. If nothing matches, we get a 404.
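To make the matching flow concrete, here is a minimal in-memory sketch of the same three steps: store expectations, compare an incoming request with them, and fall back to 404. It is a simplified model written for illustration only; the class and method names are hypothetical and it matches only on method and exact path, which is far less than MockServer itself does.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative model of expectation matching; not MockServer's real code.
class ExpectationSketch {

    // The response we want to receive.
    static final class Response {
        final int status;
        final String body;

        Response(int status, String body) {
            this.status = status;
            this.body = body;
        }
    }

    // One expectation: which request to match and what to answer.
    static final class Expectation {
        final String method;
        final String path;
        final Response response;

        Expectation(String method, String path, Response response) {
            this.method = method;
            this.path = path;
            this.response = response;
        }
    }

    private final List<Expectation> expectations = new ArrayList<>();

    // Step 1: create an expectation.
    void when(String method, String path, Response response) {
        expectations.add(new Expectation(method, path, response));
    }

    // Steps 2 and 3: return the response of the first matching expectation, or 404.
    Response handle(String method, String path) {
        for (Expectation e : expectations) {
            if (e.method.equals(method) && e.path.equals(path)) {
                return e.response;
            }
        }
        return new Response(404, "");
    }

    public static void main(String[] args) {
        ExpectationSketch mock = new ExpectationSketch();
        mock.when("POST", "/login", new Response(302, ""));
        System.out.println(mock.handle("POST", "/login").status);  // prints 302
        System.out.println(mock.handle("GET", "/missing").status); // prints 404
    }
}
```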

MockServer has clients for Java and JavaScript, excellent documentation, and usage examples. It can match requests with regular expressions, offers detailed server-side logging, and has many other features. For our needs it was the perfect candidate. The launch process is described in detail on its site, so I see no point in retelling it here. One caveat: the latest version at the time of writing leaks memory noticeably, so we use version 5.2.3. Be careful. Another drawback is that MockServer has no SOAP support out of the box.
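As a rough illustration of what regular-expression matching buys you, the sketch below checks a request path against a pattern instead of an exact string, so one rule can cover a whole family of URLs. The `/report/` endpoint and the class name are hypothetical and chosen only for this example; in practice MockServer performs the matching itself.

```java
import java.util.regex.Pattern;

// Hypothetical example of pattern-based path matching using only the JDK.
class RegexPathSketch {

    // Assumed endpoint: /report/ followed by a 17-character VIN (VINs never contain I, O, or Q).
    static final Pattern REPORT_PATH = Pattern.compile("/report/[A-HJ-NPR-Z0-9]{17}");

    // True when the whole path fits the pattern.
    static boolean matches(String path) {
        return REPORT_PATH.matcher(path).matches();
    }

    public static void main(String[] args) {
        System.out.println(matches("/report/1HGCM82633A004352")); // prints true
        System.out.println(matches("/report/123"));               // prints false
    }
}
```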

MockServer has now been running in our project for about three months. As a result, our tests have become more stable and faster, and we can return any data we need in the dev environment, which in turn opens up more possibilities for testing.

Epilogue

These are the main technologies I wanted to cover in this article. Beyond them, we use the usual testing tools: API tests built on Kotlin + JUnit + RestAssured, and Postman for convenient access to the API. This overview did not cover our approach to UI tests, where we use MBT and GraphWalker. My colleagues and I plan to write a separate post about that.

If you have any questions, ask in the comments and I will try to answer. I hope this article will be useful for development teams. (By the way, while it was being prepared for publication, a QA developer vacancy opened on our team; please show it to anyone who might be interested.)