Development of a new static analyzer: PVS-Studio Java

The PVS-Studio static analyzer is known in the world of C, C ++ and C # as a tool for detecting errors and potential vulnerabilities. However, we have few clients from the financial sector, as it turned out that Java and IBM RPG (!) Are now in demand there. We always wanted to be closer to the Enterprise world, so after some thought, we decided to start creating a Java analyzer.

Introduction

Of course, there were fears. Easy to occupy the market for analyzers in IBM RPG. I'm not at all sure that there are decent tools for static analysis of this language. In the Java world, things are very different. Already have a line of tools for static analysis, and to get ahead, you need to create a really powerful and cool analyzer.

However, our company has had the experience of using several tools for static Java analysis, and we are sure that we can do many things better.

In addition, we had an idea how to use all the power of our C ++ analyzer in a Java analyzer. But first things first.

Tree

First of all, it was necessary to determine how we would get the syntax tree and the semantic model.

The syntax tree is the base element around which the analyzer is built. When performing checks, the analyzer moves along the syntax tree and examines its individual nodes. Without such a tree to produce a serious static analysis is almost impossible. For example, finding errors using regular expressions is hopeless .

It should be noted that the merely syntactic tree is not enough. The analyzer also needs semantic information. For example, we need to know the types of all elements of the tree, to be able to go to a variable declaration, etc.

We considered several options for obtaining the syntactic tree and semantic model:

- ANTLR (with grammar for Java)

- JavaParser and JavaSymbolSolver

- Eclipse's ASTParser from Eclipse JDT

- Spoon

We abandoned the idea of using ANTLR almost immediately, since this would unnecessarily complicate the development of the analyzer (semantic analysis would have to be implemented on our own). Finally decided to stop at the Spoon library:

- It is not just a parser, but a whole ecosystem - it provides not only a parse tree, but also opportunities for semantic analysis, for example, it allows you to get information about the types of variables, go to the variable declaration, get information about the parent class, and so on.

- Based on Eclipse JDT and can compile code.

- It supports the latest version of Java and is constantly updated.

- Good documentation and clear API.

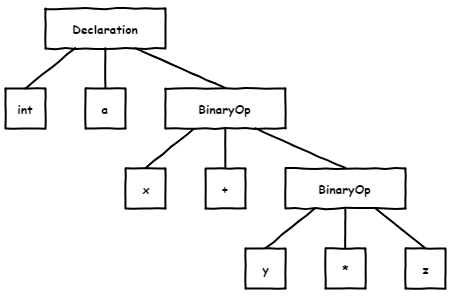

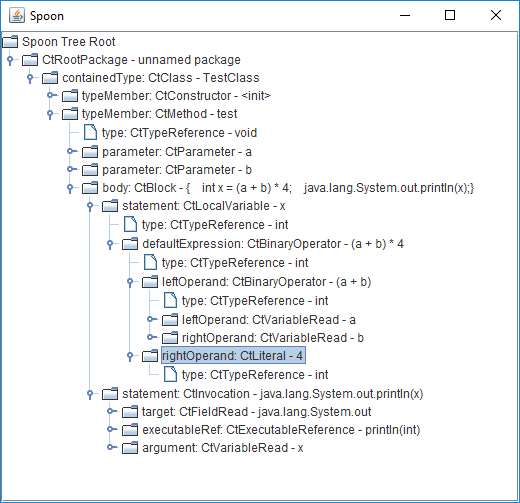

Here is an example of the metamodel that Spoon provides, and with which we work when creating diagnostic rules:

This metamodel corresponds to the following code:

classTestClass{

voidtest(int a, int b){

int x = (a + b) * 4;

System.out.println(x);

}

}One of the nice features of Spoon is that it simplifies the syntax tree (by removing and adding nodes) in order to make it easier to work with. At the same time, the semantic equivalence of the simplified original metamodel is guaranteed.

For us, this means, for example, that we no longer need to worry about skipping extra brackets when traversing a tree. In addition, each expression is placed in a block, imports are revealed, some more similar simplifications are made.

For example, the following code:

for (int i = ((0)); (i < 10); i++)

if (cond)

return (((42)));will be presented as follows:

for (int i = 0; i < 10; i++)

{

if (cond)

{

return42;

}

}On the basis of the syntax tree, a so-called pattern-based analysis is performed. This is a search for errors in the source code of a program using known code patterns with an error. In the simplest case, the analyzer searches in the tree for places that are similar to an error, according to the rules described in the corresponding diagnostics. The number of such patterns is large and their complexity can vary greatly.

The simplest example of an error detected using pattern-based analysis is the following code from the jMonkeyEngine project:

if (p.isConnected()) {

log.log(Level.FINE, "Connection closed:{0}.", p);

}

else {

log.log(Level.FINE, "Connection closed:{0}.", p);

}Blocks then and else statement if coincide, most likely, there is a logical error.

Here is another similar example from the Hive project:

if (obj instanceof Number) {

// widening conversionreturn ((Number) obj).doubleValue();

} elseif (obj instanceof HiveDecimal) { // <=return ((HiveDecimal) obj).doubleValue();

} elseif (obj instanceof String) {

return Double.valueOf(obj.toString());

} elseif (obj instanceof Timestamp) {

returnnew TimestampWritable((Timestamp)obj).getDouble();

} elseif (obj instanceof HiveDecimal) { // <=return ((HiveDecimal) obj).doubleValue();

} elseif (obj instanceof BigDecimal) {

return ((BigDecimal) obj).doubleValue();

}In this code there are two identical conditions in the sequence of the form if (....) else if (....) else if (....) . It is worth checking this section of the code for a logical error, or remove the duplicate code.

Data-flow analysis

In addition to the syntax tree and the semantic model, the analyzer needs a data flow analysis mechanism .

Analysis of the data flow allows you to calculate the allowable values of variables and expressions at each point of the program and, thanks to this, find errors. We call these valid values virtual values.

Virtual values are created for variables, class fields, method parameters, and others at the first mention. If this is an assignment, the Data Flow mechanism calculates the virtual value by analyzing the expression on the right; otherwise, the entire allowable range of values for this type of variable is taken as the virtual value. For example:

voidfunc(byte x)// x: [-128..127]{

int y = 5; // y: [5]

...

}Each time the value of a variable changes, the Data Flow mechanism recalculates the virtual value. For example:

voidfunc(){

int x = 5; // x: [5]

x += 7; // x: [12]

...

}The Data Flow mechanism also handles the control statements:

voidfunc(int x)// x: [-2147483648..2147483647]{

if (x > 3)

{

// x: [4..2147483647]if (x < 10)

{

// x: [4..9]

}

}

else

{

// x: [-2147483648..3]

}

...

}In this example, when entering the function, there is no information about the value of the variable x , so it is set according to the type of the variable (from -2147483648 to 2147483647). Then the first conditional block imposes the restriction x > 3, and the ranges are combined. As a result, in the then block, the range of values for x is from 4 to 2147483647, and in the else block from -2147483648 to 3. The second condition x <10 is processed in the same way .

In addition, you must be able to perform purely character calculations. The simplest example is:

voidf1(int a, int b, int c){

a = c;

b = c;

if (a == b) // <= always true

....

}Here, the variable a is assigned the value c , the variable b is also assigned the value c , after which a and b are compared. In this case, to find the error, simply remember the piece of wood corresponding to the right side.

Here is a slightly more complex example with symbolic calculations:

voidf2(int a, int b, int c){

if (a < b)

{

if (b < c)

{

if (c < a) // <= always false

....

}

}

}In such cases, it is already necessary to solve a system of inequalities in a symbolic form.

The Data Flow mechanism helps the analyzer to find errors that are very difficult to find using pattern-based analysis.

These errors include:

- Overflow;

- Overrun array;

- Access via zero or potentially zero reference;

- Meaningless conditions (always true / false);

- Memory and resource leaks;

- Division by 0;

- And some others.

Data flow analysis is especially important when searching for vulnerabilities. For example, in the event that a program receives input from the user, it is likely that the input will be used to cause a denial of service, or to gain control over the system. Examples include errors leading to buffer overflows with some input data, or, for example, SQL injections. In both cases, in order for the static analyzer to detect such errors and vulnerabilities, it is necessary to monitor the data flow and the possible values of the variables.

I must say that the mechanism for analyzing the flow of data is a complex and extensive mechanism, and in this article I touched on only the very basics.

Let's look at a few examples of errors that can be detected using the Data Flow mechanism.

Project Hive:

publicstaticbooleanequal(byte[] arg1, finalint start1,

finalint len1, byte[] arg2,

finalint start2, finalint len2){

if (len1 != len2) { // <=returnfalse;

}

if (len1 == 0) {

returntrue;

}

....

if (len1 == len2) { // <=

....

}

}The condition len1 == len2 is always fulfilled, since the opposite check has already been performed above.

Another example from the same project:

if (instances != null) { // <=

Set<String> oldKeys = new HashSet<>(instances.keySet());

if (oldKeys.removeAll(latestKeys)) {

....

}

this.instances.keySet().removeAll(oldKeys);

this.instances.putAll(freshInstances);

} else {

this.instances.putAll(freshInstances); // <=

}Here in the else block , the null pointer is guaranteed to be dereferenced. Note: here instances is the same as this.instances .

An example from the JMonkeyEngine project:

publicstaticintconvertNewtKey(short key){

....

if (key >= 0x10000) {

return key - 0x10000;

}

return0;

}Here, the key variable is compared with the number 65536, however, it has a short type , and the maximum possible value for short is 32767. Accordingly, the condition is never satisfied.

An example from the Jenkins project:

publicfinal R getSomeBuildWithWorkspace(){

int cnt = 0;

for (R b = getLastBuild();

cnt < 5 && b ! = null;

b = b.getPreviousBuild())

{

FilePath ws = b.getWorkspace();

if (ws != null) return b;

}

returnnull;

}In this code, we entered the variable cnt to limit the number of passes to five, but forgot to increment it, making the check useless.

Annotation mechanism

In addition, the analyzer needs an annotation mechanism. Annotations are a markup system that provides the analyzer with additional information about the methods used and the classes, in addition to what can be obtained by analyzing their signatures. The markup is done manually, it is a long and laborious process, as for achieving the best results it is necessary to annotate a large number of standard classes and methods of the Java language. It also makes sense to annotate popular libraries. In general, annotations can be viewed as a knowledge base of the analyzer on contracts of standard methods and classes.

Here is a small example of an error that can be detected using annotations:

inttest(int a, int b){

...

return Math.max(a, a);

}In this example, because of a typo, the same argument was passed to the second argument of the Math.max method as the first argument. Such an expression is meaningless and suspicious.

Knowing that the arguments of the Math.max method should always be different, the static analyzer will be able to issue a warning on a similar code.

Looking ahead, here are some examples of our markup of built-in classes and methods (C ++ code):

Class("java.lang.Math")

- Function("abs", Type::Int32)

.Pure()

.Set(FunctionClassification::NoDiscard)

.Returns(Arg1, [](const Int &v)

{ return v.Abs(); })

- Function("max", Type::Int32, Type::Int32)

.Pure()

.Set(FunctionClassification::NoDiscard)

.Requires(NotEquals(Arg1, Arg2)

.Returns(Arg1, Arg2, [](const Int &v1, const Int &v2)

{ return v1.Max(v2); })

Class("java.lang.String", TypeClassification::String)

- Function("split", Type::Pointer)

.Pure()

.Set(FunctionClassification::NoDiscard)

.Requires(NotNull(Arg1))

.Returns(Ptr(NotNullPointer))

Class("java.lang.Object")

- Function("equals", Type::Pointer)

.Pure()

.Set(FunctionClassification::NoDiscard)

.Requires(NotEquals(This, Arg1))

Class("java.lang.System")

- Function("exit", Type::Int32)

.Set(FunctionClassification::NoReturn)Explanations:

- Class - annotated class;

- Function - class annotated method;

- Pure is an abstract showing that the method is pure, i.e. deterministic and non-adverse;

- Set - sets an arbitrary flag for the method.

- FunctionClassification :: NoDiscard is a flag indicating that the return value of the method must be used;

- FunctionClassification :: NoReturn is a flag indicating that the method does not return;

- Arg1 , Arg2 , ... , ArgN - method arguments;

- Returns - the return value of the method;

- Requires - a contract for a method.

It is worth noting that in addition to manual markup, there is another approach to annotation - the automatic withdrawal of contracts based on bytecode. It is clear that such an approach allows only certain types of contracts to be derived, but it does provide an opportunity to receive additional information in general from all dependencies, and not just from those that were manually annotated.

By the way, there is already a tool that can display contracts like @Nullable , NotNull based on bytecode - FABA . As far as I understand, the derivative of FABA is used in IntelliJ IDEA.

Now we are also considering the possibility of adding bytecode analysis to obtain contracts for all methods, since these contracts could well complement our manual annotations.

Diagnostic rules when working often refer to annotations. In addition to diagnostics, annotations use the Data Flow mechanism. For example, using the annotation of the java.lang.Math.abs method , it can accurately calculate the value for the number modulus. At the same time, it is not necessary to write any additional code - just mark the method correctly.

Consider an example of an error from the Hibernate project that can be detected through annotations:

publicbooleanequals(Object other){

if (other instanceof Id) {

Id that = (Id) other;

return purchaseSequence.equals(this.purchaseSequence) &&

that.purchaseNumber == this.purchaseNumber;

}

else {

returnfalse;

}

}In this code, the equals () method compares the purchaseSequence object with itself. Surely this is a typo and the right should be that.purchaseSequence , not the purchaseSequence .

How Dr. Frankenstein assembled from parts analyzer

Since the Data Flow and annotation mechanisms themselves are not strongly tied to a specific language, it was decided to reuse these mechanisms from our C ++ analyzer. This allowed us in a short time to get all the power of the C ++ analyzer core in our Java analyzer. In addition, this decision was also influenced by the fact that these mechanisms were written in modern C ++ with a lot of metaprogramming and template magic, and, accordingly, are not very well suited for porting to another language.

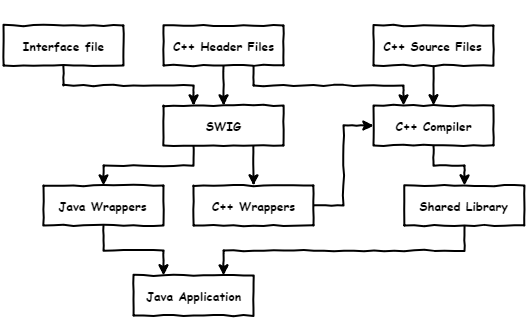

In order to connect the Java part with the C ++ kernel, we decided to use SWIG (Simplified Wrapper and Interface Generator) - a tool for automatically generating wrappers and interfaces for linking C and C ++ programs with programs written in other languages. For Java, SWIG generates code onJNI (Java Native Interface) .

SWIG is great for cases where there is already a large amount of C ++ code that needs to be integrated into a Java project.

Let me give you a minimal example of working with SWIG. Suppose we have a C ++ class that we want to use in a Java project:

CoolClass.h

classCoolClass

{public:

int val;

CoolClass(int val);

voidprintMe();

};Coolclass.cpp

#include<iostream>#include"CoolClass.h"

CoolClass::CoolClass(int v) : val(v) {}

void CoolClass::printMe()

{

std::cout << "val: " << val << '\n';

}First you need to create a SWIG interface file with a description of all exported functions and classes. Also in this file, if necessary, additional settings are made.

Example.i

%module MyModule

%{

#include"CoolClass.h"

%}

%include "CoolClass.h"After that, you can run SWIG:

$ swig -c++ -java Example.iIt will generate the following files:

- CoolClass.java - a class with which we will work directly in a Java project;

- MyModule.java - the class of the module in which all free functions and variables are placed;

- MyModuleJNI.java - Java wrappers;

- Example_wrap.cxx - C ++ wrappers.

Now you just need to add the resulting .java files to the Java project and the .cxx file to the C ++ project.

Finally, you need to compile a C ++ project as a dynamic library and load it into a Java project using System.loadLibary () :

App.java

classApp{

static {

System.loadLibary("example");

}

publicstaticvoidmain(String[] args){

CoolClass obj = new CoolClass(42);

obj.printMe();

}

}Schematically it can be represented as follows:

Of course, in a real project, everything is not so simple and you have to put a little more effort:

- In order to use template classes and methods from C ++, they must be instantiated for all accepted template parameters using the % template directive ;

- In some cases, it may be necessary to catch the exceptions thrown from the C ++ parts in the Java part. By default, SWIG does not catch exceptions from C ++ (segfault occurs), however, it is possible to do this using the % exception directive ;

- SWIG allows you to extend the plus code on the Java side using the % extend directive . In our project, for example, we add the toString () method to virtual values so that we can view them in the Java debugger;

- In order to emulate RAII behavior from C ++, the AutoClosable interface is implemented in all classes of interest ;

- The directors mechanism allows the use of cross-language polymorphism;

- For types that are allocated only inside C ++ (on their own memory pool), constructors and finalizers are removed to improve performance. The garbage collector will ignore these types.

You can read more about all these mechanisms in the SWIG documentation .

Our analyzer is built with the help of gradle, which calls CMake, which, in turn, calls SWIG and collects the C ++ part. For programmers, this happens almost imperceptibly, so we don’t experience any special inconveniences during development.

The core of our C ++ analyzer is built under Windows, Linux, macOS, so the Java analyzer also works in these operating systems.

What is the diagnostic rule?

We ourselves write diagnostics and code for analysis in Java. This is due to the close interaction with Spoon. Each diagnostic rule is a visitor, in which methods are overloaded, in which the elements of interest are bypassed:

For example, the V6004 diagnostics framework looks like this:

classV6004extendsPvsStudioRule{

....

@OverridepublicvoidvisitCtIf(CtIf ifElement){

// if ifElement.thenStatement statement is equivalent to// ifElement.elseStatement statement => add warning V6004

}

}Plugins

For the most simple integration of the static analyzer into the project, we have developed plug-ins for the Maven and Gradle assembly systems. The user needs only to add our plugin to the project.

For gradle:

....

apply plugin: com.pvsstudio.PvsStudioGradlePlugin

pvsstudio {

outputFile = 'path/to/output.json'

....

}For Maven:

....

<plugin>

<groupId>com.pvsstudio</groupId>

<artifactId>pvsstudio-maven-plugin</artifactId>

<version>0.1</version>

<configuration>

<analyzer>

<outputFile>path/to/output.json</outputFile>

....

</analyzer>

</configuration>

</plugin>After that, the plugin will independently receive the project structure and launch the analysis.

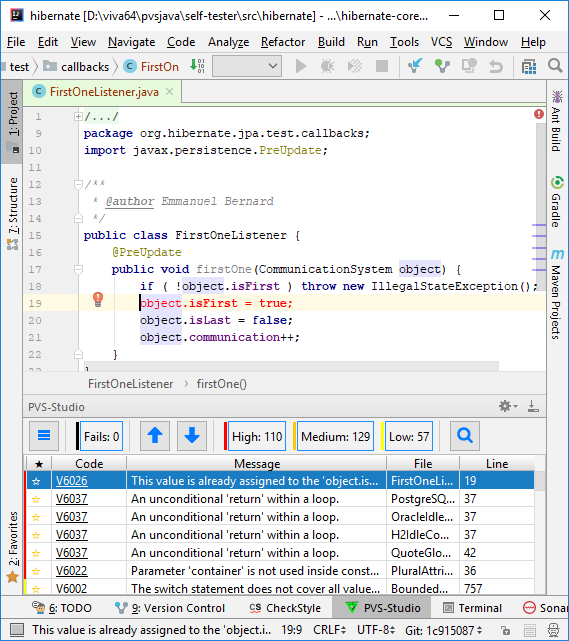

In addition, we developed a plug-in prototype for IntelliJ IDEA.

Also, this plugin works in Android Studio.

The plugin for Eclipse is currently under development.

Incremental analysis

We have provided an incremental analysis mode, which allows you to check only modified files and thereby significantly reduces the time required for code analysis. Due to this, developers will be able to run the analysis as often as necessary.

Incremental analysis includes several steps:

- Caching metamodel Spoon;

- Rebuilding the changed part of the metamodel;

- Analysis of changed files.

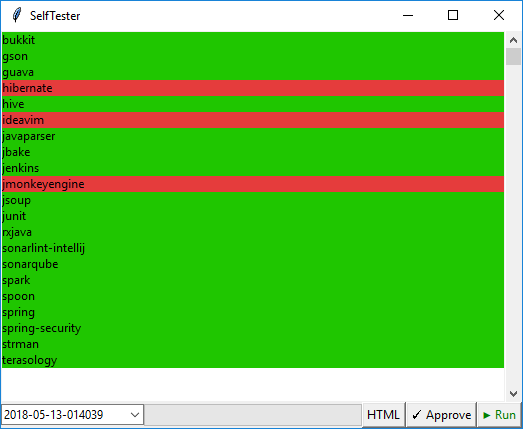

Our testing system

To test Java analyzer on real projects, we wrote a special toolkit that allows you to work with the database of open source projects. It was written on the ^ W Python + Tkinter knee and is cross-platform.

It works as follows:

- The tested project of a specific version is downloaded from the repository to GitHub;

- The project is being built;

- The pom.xml or build.gradle added to our plugin (using git apply);

- The static analyzer is launched using a plugin;

- The resulting report is compared with the benchmark for this project.

This approach ensures that good operations will not disappear as a result of changing the code of the analyzer. Below is the interface of our utility for testing.

Red marks those projects whose reports have any differences with the standard. The Approve button allows you to save the current version of the report as a reference.

Error examples

By tradition, I will cite several errors from various open projects that our Java analyzer found. In the future, it is planned to write articles with a more detailed report on each project.

Hibernate project

PVS-Studio warning : V6009 Function 'equals' receives odd arguments. Inspect arguments: this, 1. PurchaseRecord.java 57

publicbooleanequals(Object other){

if (other instanceof Id) {

Id that = (Id) other;

return purchaseSequence.equals(this.purchaseSequence) &&

that.purchaseNumber == this.purchaseNumber;

}

else {

returnfalse;

}

}In this code, the equals () method compares the purchaseSequence object with itself. Most likely, this is a typo and the right should be that.purchaseSequence , not the purchaseSequence .

PVS-Studio warning : V6009 Function 'equals' receives odd arguments. Inspect arguments: this, 1. ListHashcodeChangeTest.java 232

publicvoidremoveBook(String title){

for( Iterator<Book> it = books.iterator(); it.hasNext(); ) {

Book book = it.next();

if ( title.equals( title ) ) {

it.remove();

}

}

}Triggering, similar to the previous one - on the right should be book.title , not title .

Hive Project

PVS-Studio warning : V6007 Expression 'colOrScalar1.equals ("Column")' is always false. GenVectorCode.java 2768

PVS-Studio Warning: V6007 Expression 'colOrScalar1.equals ("Scalar")' is always false. GenVectorCode.java 2774

PVS-Studio Warning: V6007 Expression 'colOrScalar1.equals ("Column")' is always false. GenVectorCode.java 2785

String colOrScalar1 = tdesc[4];

....

if (colOrScalar1.equals("Col") &&

colOrScalar1.equals("Column")) {

....

} elseif (colOrScalar1.equals("Col") &&

colOrScalar1.equals("Scalar")) {

....

} elseif (colOrScalar1.equals("Scalar") &&

colOrScalar1.equals("Column")) {

....

}Here the operators are clearly confused and instead of ||| used ' &&' .

JavaParser project

PVS-Studio warning : V6001 There are identical sub-expressions 'tokenRange.getBegin (). GetRange (). IsPresent ()' to the left. Node.java 213

public Node setTokenRange(TokenRange tokenRange){

this.tokenRange = tokenRange;

if (tokenRange == null ||

!(tokenRange.getBegin().getRange().isPresent() &&

tokenRange.getBegin().getRange().isPresent()))

{

range = null;

}

else

{

range = new Range(

tokenRange.getBegin().getRange().get().begin,

tokenRange.getEnd().getRange().get().end);

}

returnthis;

}The analyzer found that the left and right of the && operator are identical expressions (all methods in the call chain are pure). Most likely, in the second case, you need to use tokenRange.getEnd () , and not tokenRange.getBegin () .

PVS-Studio warning : V6016 Suspicious access to element of 'typeDeclaration.getTypeParameters ()' object by a constant index inside a loop. ResolvedReferenceType.java 265

if (!isRawType()) {

for (int i=0; i<typeDeclaration.getTypeParams().size(); i++) {

typeParametersMap.add(

new Pair<>(typeDeclaration.getTypeParams().get(0),

typeParametersValues().get(i)));

}

}The analyzer detected suspicious access to a collection item at a constant index inside a loop. Perhaps there is an error in this code.

Jenkins Project

PVS-Studio warning : V6007 Expression 'cnt <5' is always true. AbstractProject.java 557

publicfinal R getSomeBuildWithWorkspace(){

int cnt = 0;

for (R b = getLastBuild();

cnt < 5 && b ! = null;

b = b.getPreviousBuild())

{

FilePath ws = b.getWorkspace();

if (ws != null) return b;

}

returnnull;

}In this code, we entered the variable cnt to limit the number of passes to five, but forgot to increment it, making the check useless.

Spark project

PVS-Studio warning : V6007 Expression 'sparkApplications! = Null' is always true. SparkFilter.java 127

if (StringUtils.isNotBlank(applications))

{

final String[] sparkApplications = applications.split(",");

if (sparkApplications != null && sparkApplications.length > 0)

{

...

}

}Checking for the null result returned by the split method is meaningless, since this method always returns the collection and never returns null .

Spoon project

PVS-Studio warning : V6001 There are identical sub-expressions "! M.getSimpleName (). Starts.With (" set ")" the operator. SpoonTestHelpers.java 108

if (!m.getSimpleName().startsWith("set") &&

!m.getSimpleName().startsWith("set")) {

continue;

}In this code, the left and right of the && operator are identical expressions (all the methods in the call chain are pure). Most likely, there is a logical error in the code.

PVS-Studio warning : V6007 Expression 'idxOfScopeBoundTypeParam> = 0' is always true. MethodTypingContext.java 243

privatebooleanisSameMethodFormalTypeParameter(....){

....

int idxOfScopeBoundTypeParam = getIndexOfTypeParam(....);

if (idxOfScopeBoundTypeParam >= 0) { // <=int idxOfSuperBoundTypeParam = getIndexOfTypeParam(....);

if (idxOfScopeBoundTypeParam >= 0) { // <=return idxOfScopeBoundTypeParam == idxOfSuperBoundTypeParam;

}

}

....

}Here they were sealed in the second condition and instead of idxOfSuperBoundTypeParam they wrote idxOfScopeBoundTypeParam .

Spring Security Project

PVS-Studio warning : V6001 There | || ' operator. Check lines: 38, 39. AnyRequestMatcher.java 38

@Override@SuppressWarnings("deprecation")

publicbooleanequals(Object obj){

return obj instanceof AnyRequestMatcher ||

obj instanceof security.web.util.matcher.AnyRequestMatcher;

}Triggering is similar to the previous one - here the name of the same class is written in different ways.

PVS-Studio warning : V6006 The object was not used. The 'throw' keyword could be missing. DigestAuthenticationFilter.java 434

if (!expectedNonceSignature.equals(nonceTokens[1])) {

new BadCredentialsException(

DigestAuthenticationFilter.this.messages

.getMessage("DigestAuthenticationFilter.nonceCompromised",

new Object[] { nonceAsPlainText },

"Nonce token compromised {0}"));

}In this code, you forgot to add a throw before the exception. As a result, the BadCredentialsException exception object is thrown but not used, i.e. exception is not thrown.

PVS-Studio Warning: V6030 The method is located on the right of the '|' Operator of the left operand. Perhaps it is better to use '|| RedirectUrlBuilder.java 38

publicvoidsetScheme(String scheme){

if (!("http".equals(scheme) | "https".equals(scheme))) {

thrownew IllegalArgumentException("...");

}

this.scheme = scheme;

}In this code, the use of the operator | unnecessarily, as with its use the right side will be calculated even if the left side is already true. In this case, this has no practical meaning, therefore the operator | worth replacing with || .

Project IntelliJ IDEA

PVS-Studio warning : V6008 Potential null dereference of 'editor'. IntroduceVariableBase.java:609

final PsiElement nameSuggestionContext =

editor == null ? null : file.findElementAt(...); // <=final RefactoringSupportProvider supportProvider =

LanguageRefactoringSupport.INSTANCE.forLanguage(...);

finalboolean isInplaceAvailableOnDataContext =

supportProvider != null &&

editor.getSettings().isVariableInplaceRenameEnabled() && // <=

...The analyzer has detected that in this code a dereferencing of the null editor pointer may occur . It is worth adding an additional check.

PVS-Studio warning : V6007 Expression is always false. RefResolveServiceImpl.java:814

@Overridepublicbooleancontains(@NotNull VirtualFile file){

....

returnfalse & !myProjectFileIndex.isUnderSourceRootOfType(....);

}It's hard for me to say what the author meant, but this code looks very suspicious. Even if suddenly there is no error here, I think it is worth rewriting this place so as not to embarrass the analyzer and other programmers.

PVS-Studio warning : V6007 Expression 'result [0]' is always false. CopyClassesHandler.java:298

finalboolean[] result = newboolean[] {false}; // <=

Runnable command = () -> {

PsiDirectory target;

if (targetDirectory instanceof PsiDirectory) {

target = (PsiDirectory)targetDirectory;

} else {

target = WriteAction.compute(() ->

((MoveDestination)targetDirectory).getTargetDirectory(

defaultTargetDirectory));

}

try {

Collection<PsiFile> files =

doCopyClasses(classes, map, copyClassName, target, project);

if (files != null) {

if (openInEditor) {

for (PsiFile file : files) {

CopyHandler.updateSelectionInActiveProjectView(

file,

project,

selectInActivePanel);

}

EditorHelper.openFilesInEditor(

files.toArray(PsiFile.EMPTY_ARRAY));

}

}

}

catch (IncorrectOperationException ex) {

Messages.showMessageDialog(project,

ex.getMessage(),

RefactoringBundle.message("error.title"),

Messages.getErrorIcon());

}

};

CommandProcessor processor = CommandProcessor.getInstance();

processor.executeCommand(project, command, commandName, null);

if (result[0]) { // <=

ToolWindowManager.getInstance(project).invokeLater(() ->

ToolWindowManager.getInstance(project)

.activateEditorComponent());

}I suspect that here they forgot to somehow change the value of the result . Because of this, the analyzer reports that checking if (result [0]) is meaningless.

Conclusion

Java direction is very versatile - it is the desktop, and android, and the web, and much more, so we have a lot of room for action. In the first place, of course, we will develop those areas that will be most in demand.

Here are our plans for the near future:

- Output bytecode based annotations;

- Integration into Ant projects (someone else uses it in 2018?);

- Plugin for Eclipse (under development);

- More diagnostics and annotations;

- Improving the mechanism of Data Flow.

I also suggest that those who wish to participate in testing the alpha version of our Java analyzer when it becomes available. To do this, write to us in support . We will add your contact list and write to you when we prepare the first alpha version.

If you want to share this article with an English-speaking audience, then please use the link to the translation: Egor Bredikhin. Development of a new static analyzer:

Read the article and have a question?

Often our articles are asked the same questions. We collected answers to them here: Answers to questions from readers of articles about PVS-Studio, version 2015 . Please review the list.