Secure communication in distributed systems

Hi Habr!

My name is Alex Solodky, I am a PHP developer at Badoo. And today I will share the text version of my report for the first Badoo PHP Meetup. A video of this and other reports from the mitap can be found here .

Any system consisting of at least two components (and if you have both PHP and a database, then these are already two components), encounters entire classes of risks in the interaction between these components.

The platform department in which I work integrates new internal services with our application. And solving these problems, we have gained experience, which I want to share.

Our backend is a PHP monolith that interacts with a variety of services (there are about fifty self-written ones). Services rarely interact with each other. But the problems that I talk about in the article are also relevant for microservice architecture. Indeed, in this case, the services are very actively interacting with each other, and the more interaction you have, the more problems you have.

Consider what to do when the service crashes or fails, how to organize the collection of metrics and what to do when all of the above does not save you.

Service crash

Sooner or later the server on which your service is installed will fall. This will surely happen, and you will not be able to defend against it - only reduce the likelihood. You can fail the hardware, the network, the code, the bad deployment - anything. And the more servers you have, the more often this will happen.

How to make your services survive in a world in which servers are constantly falling? The general approach to solving this class of problems is redundancy.

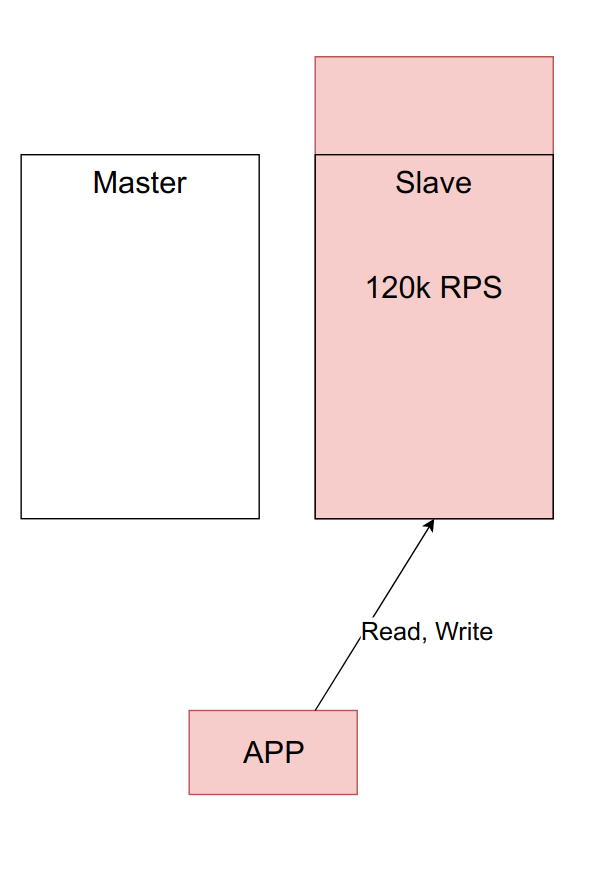

Reservation is used everywhere at different levels: from hardware to entire data centers. For example, RAID1 to protect against the failure of the hard drive or a backup power supply from your server, in case of failure of the first. Also this scheme is widely applied to databases. For example, for this you can use the master-slave.

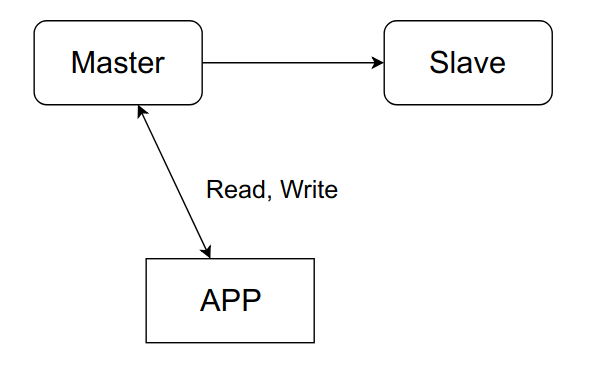

Consider typical problems with redundancy on the example of the simplest scheme:

The application communicates exclusively with the master, while in the background, asynchronously, data is transferred to the slave. When the master falls, we will switch to the slave and continue to work.

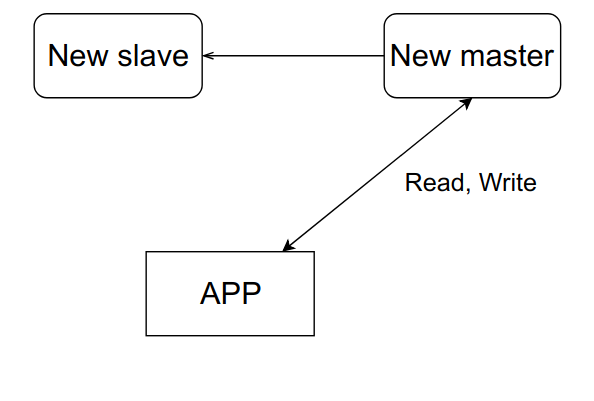

After the master is restored, we will simply make a new slave out of it, and the old one will turn into a master.

The scheme is simple, but even it has many nuances typical of any redundant schemes.

Load

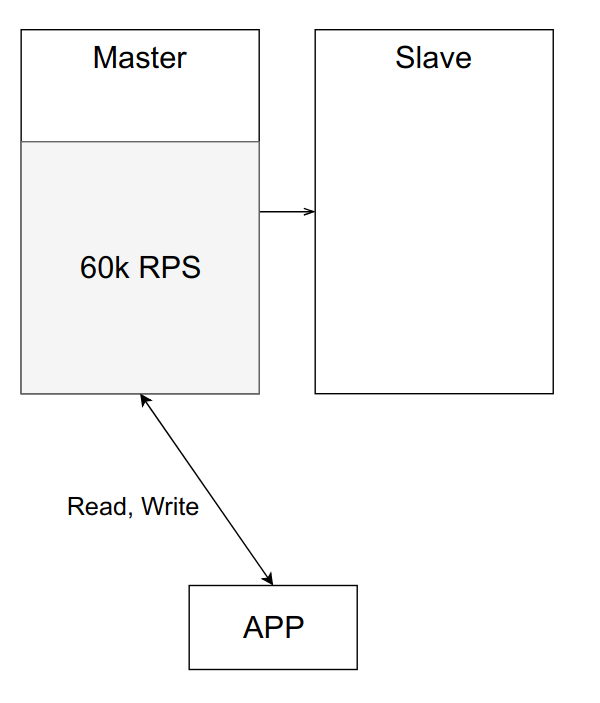

Suppose that one server from the example above can handle approximately 100k RPS. Now the load is 60k RPS, and everything works like a clock.

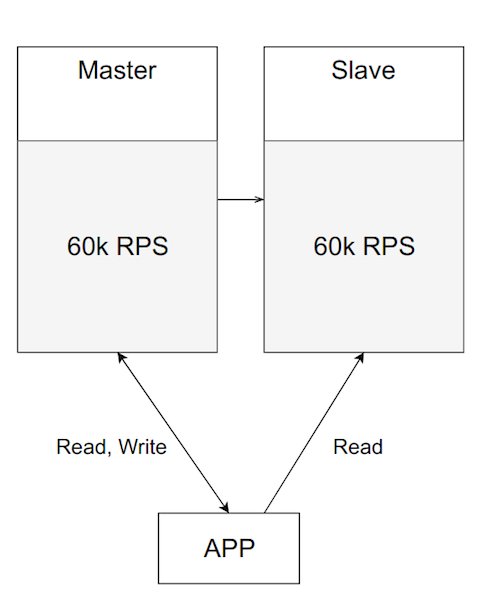

But over time, the load on the application, and hence the load on the master, increases. You may want to balance it by transferring part of the reading to the slave.

Looks pretty good. Holds the load, the server is no longer idle. But this is a bad idea. It is important to remember why you initially raised the slave - to switch to it in case of problems with the main one. If you started to load both servers, then when your master falls - and he sooner or later falls - you have to switch the main traffic from the master to the backup server, and he is already loaded. Such an overload will either make your system terribly slow or completely disable it.

Data

The main problem when adding fault tolerance to a service is a local state. If your stateless service, i.e., does not store any changeable data, then its scaling is not a problem. Just raise as many instances as we need, and balance the requests between them.

In the case when the stateful service, we can no longer do that. We need to think about how to store the same data on all instances of our service so that they remain consistent.

To solve this problem, one of two approaches is used: either synchronous or asynchronous replication. In general, I advise you to use the asynchronous version, since it is generally easier and faster to write, and, depending on the circumstances, see whether you need to switch to the synchronous one.

An important nuance that should be considered when working with asynchronous replication is eventual consistency . This means that at a specific point in time on different slaves, the data may lag behind the master at unpredictable and different time intervals.

Accordingly, you cannot read data every time from a random server, because then different answers may come to the same user requests. To work around this problem, sticky sessions are used , which ensures that all requests from one user go to one instance.

The advantages of the synchronous approach are that the data are always in a consistent state, and the risk of losing the data is lower (since they are considered recorded only after all the servers have done this). However, this has to be paid for by the write speed and complexity of the system itself (for example, various quorum algorithms for protection against split-brain ).

findings

- Reserve. If the data itself and the availability of a particular service are important, then make sure that your service survives a specific machine crash.

- When calculating the load, consider the drop in part of the servers. If there are four servers in your cluster, make sure that when one drops, the three remaining ones will pull up.

- Choose replication type depending on the tasks.

- Do not put all your eggs in one basket. Make sure that you distribute your backup servers far enough. Depending on the criticality of service availability, your servers can be in different racks in the same data center, or in different data centers in different countries. It all depends on how much you want a global catastrophe and are ready to survive.

Stupid service

At some point, your service may start to work very slowly. This problem can occur for a variety of reasons: excessive load, network lags, problems with hardware, or errors in the code. It looks like a not too terrible problem, but in fact it is more insidious than it seems.

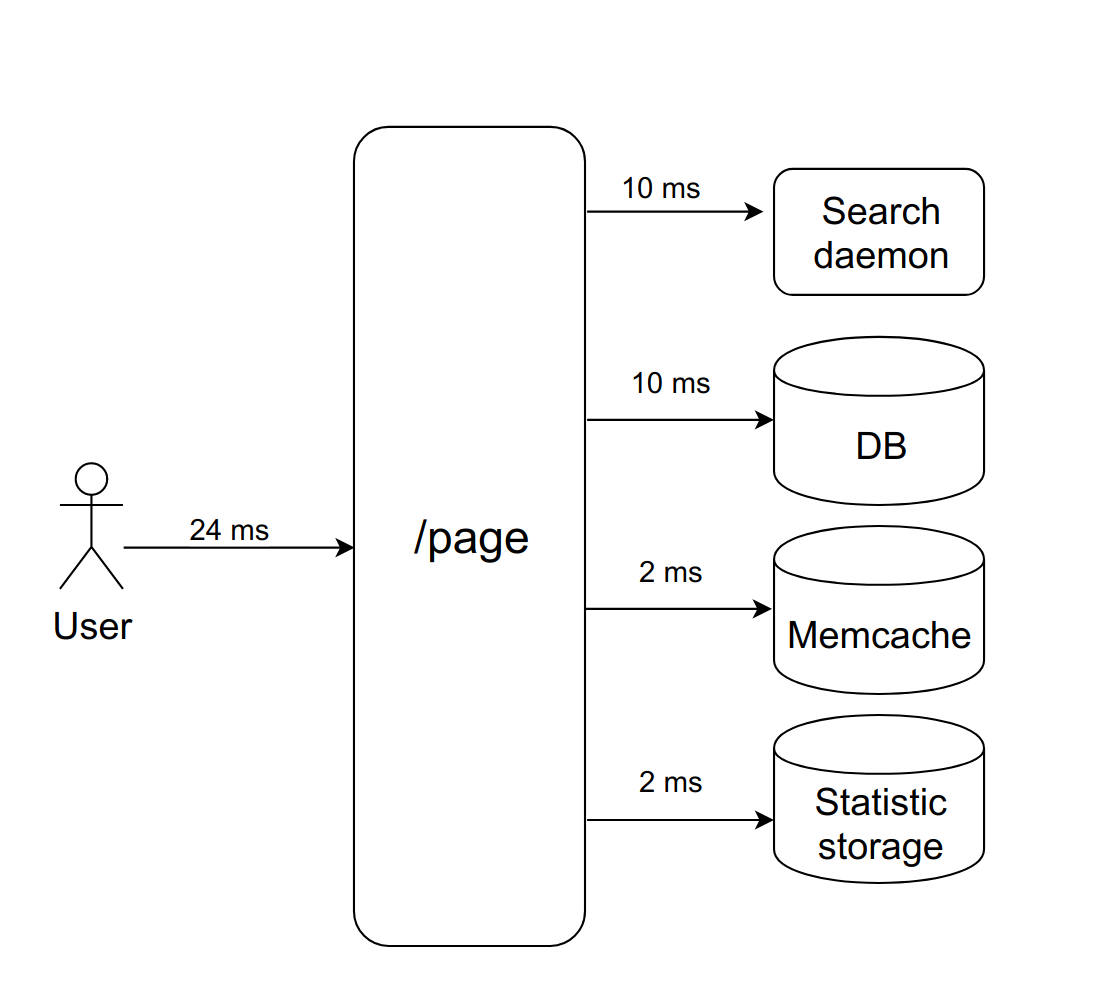

Imagine: the user requests a certain page. We synchronously and consistently appeal to the four demons to draw it. They quickly respond, everything works well.

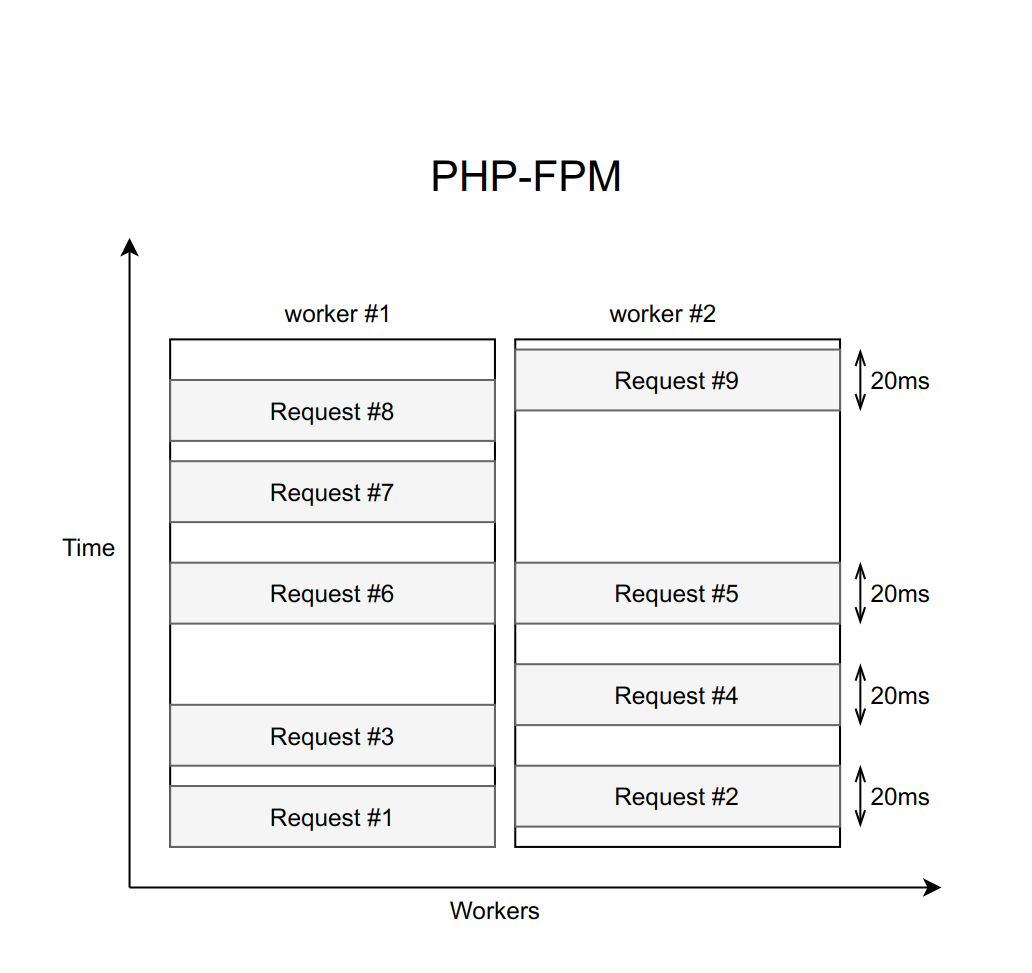

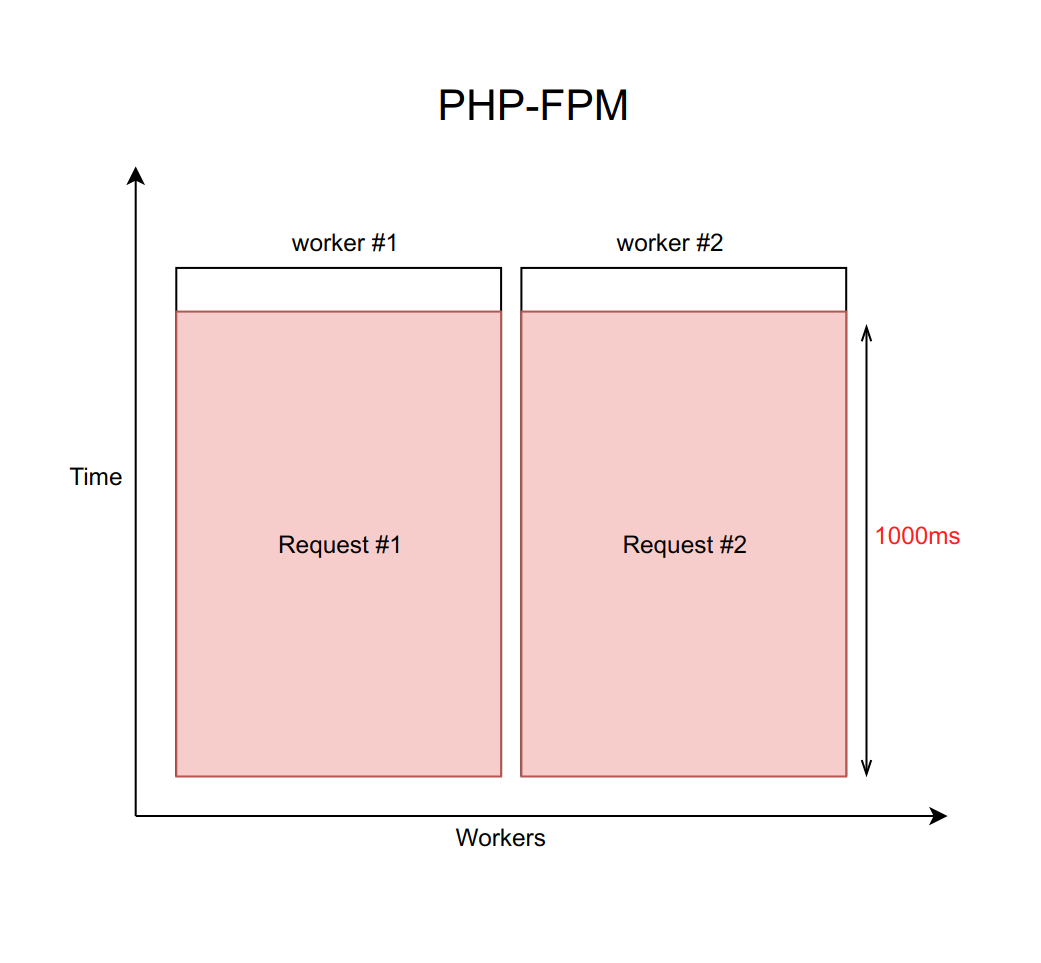

Suppose this case is handled by nginx with a fixed number of PHP FPM workers (with ten, for example). If each request is processed approximately 20 ms, then with the help of simple calculations it can be understood that our system is able to process about five hundred requests per second.

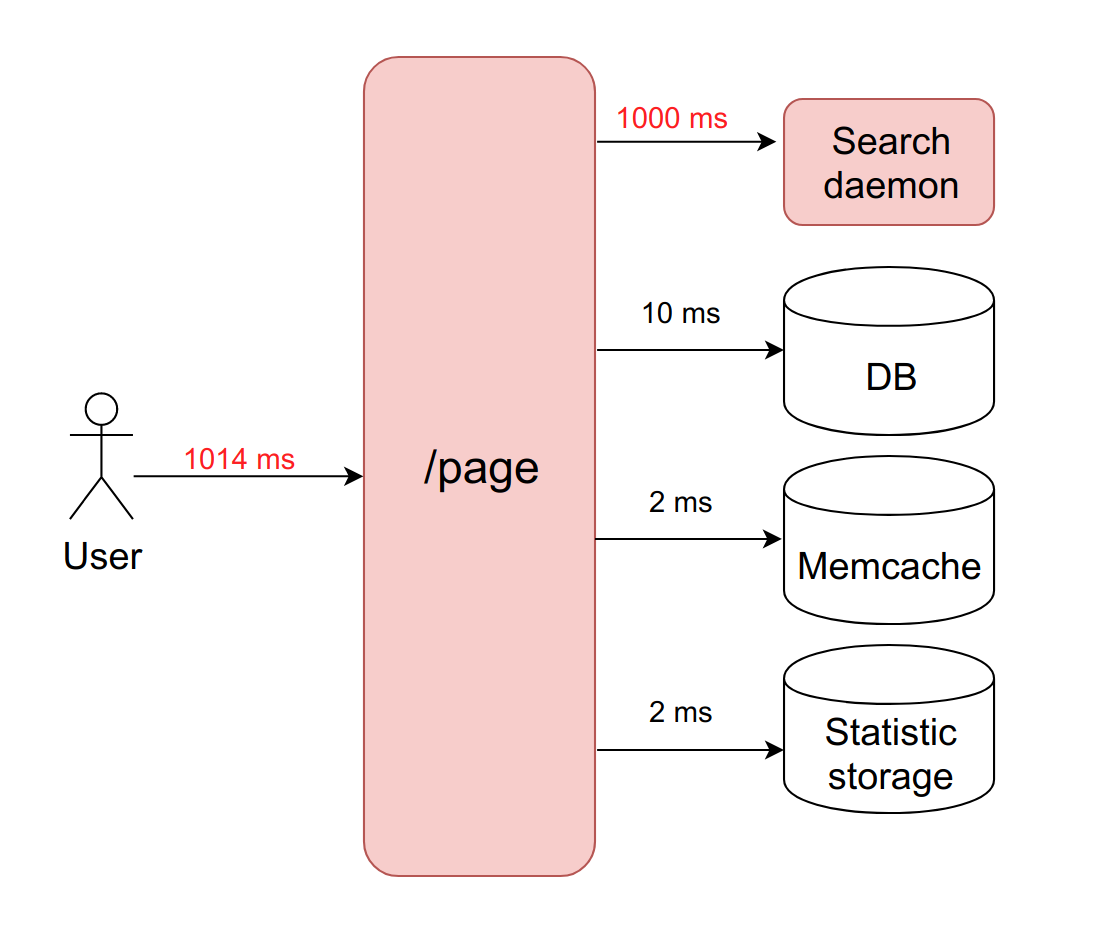

What happens when one of these four services starts to blunt, and the processing of requests to it will increase from 20 ms to a timeout of 1000 ms? Here it is important to remember that when we work with a network, the delay can be infinitely long. Therefore, you must always set a timeout (in this case it is equal to a second).

It turns out that the backend is forced to wait for the timeout to expire and get the error from the daemon. This means that the user receives the page in one second instead of ten milliseconds. Slowly, but not fatally.

But what is really the problem here? The fact is that when we have each request processed a second, the bandwidth tragically drops to ten requests per second. And the eleventh user will not be able to get an answer, even if he requested a page that is not related to the blunt service. Just because all ten workers are busy waiting for a timeout and cannot process new requests.

It is important to understand that this problem is not solved by increasing the number of workers. After all, every worker needs a certain amount of RAM for his work, even if he doesn’t do the actual work, but just hangs waiting for a timeout. Therefore, if you do not limit the number of workers in accordance with the capabilities of your server, then raising more and more new workers will put the server entirely. This case is an example of a cascade failure, when the fall of a single, even if not critical for the service user, causes the entire system to fail.

Decision

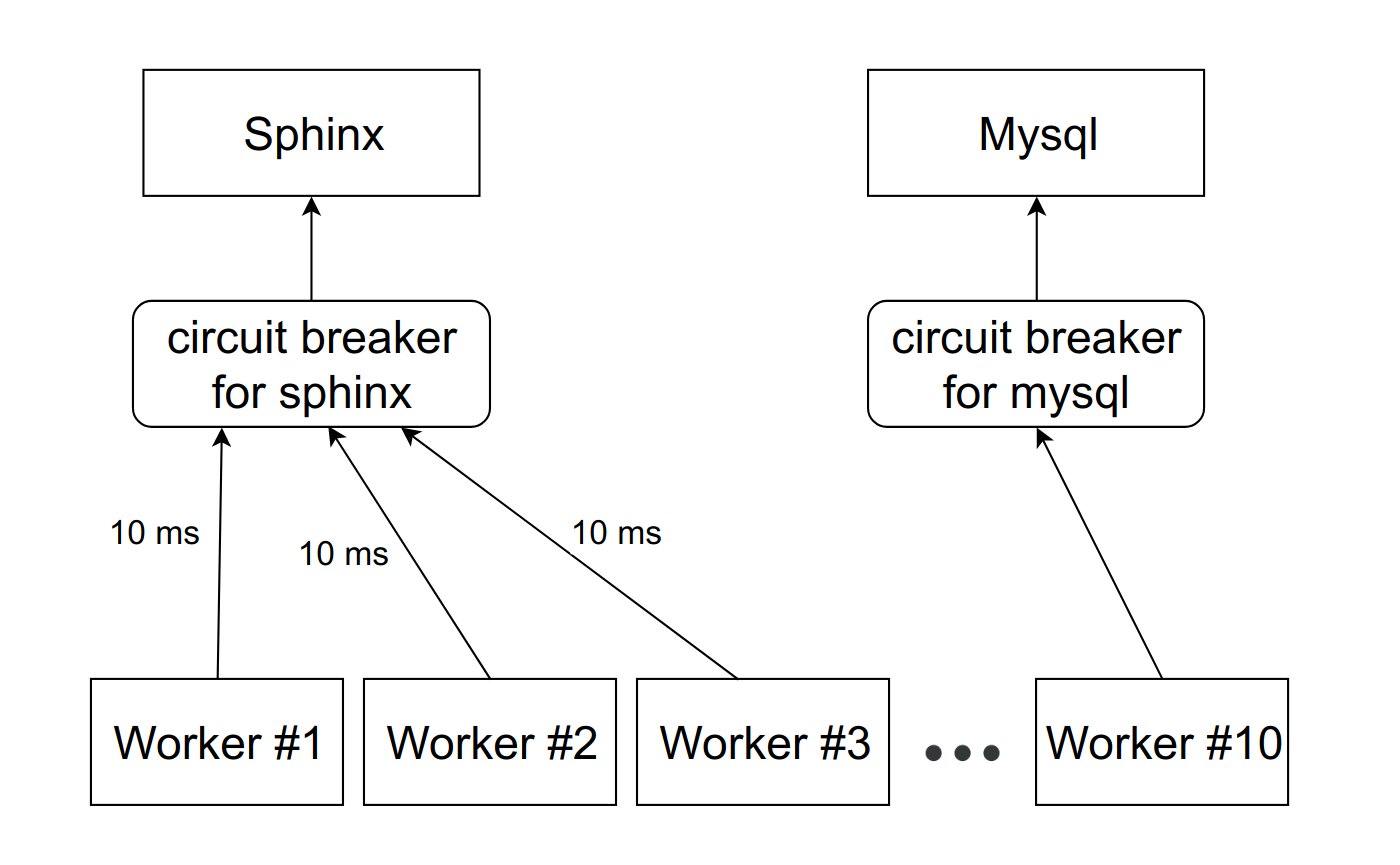

There is a pattern called circuit breaker . His task is quite simple: he must at some point cut down the stupid service. To do this, between the service and the workers put some proksya. This can be either a PHP code with a repository or a daemon on the local host. It is important to note that if you have several instances (your service is replicated), then this proxy should track each one separately.

We wrote our implementation of this pattern. But not because we love to write code, but because when we solved this problem many years ago, there were no ready-made solutions.

Now I will talk in general terms about our implementation and how it helps to avoid this problem. And more about it and its differences from other solutions can be heard.in Mikhail Kurmaev's report on Highload Siberia at the end of June. Decoding his report will also be in this blog.

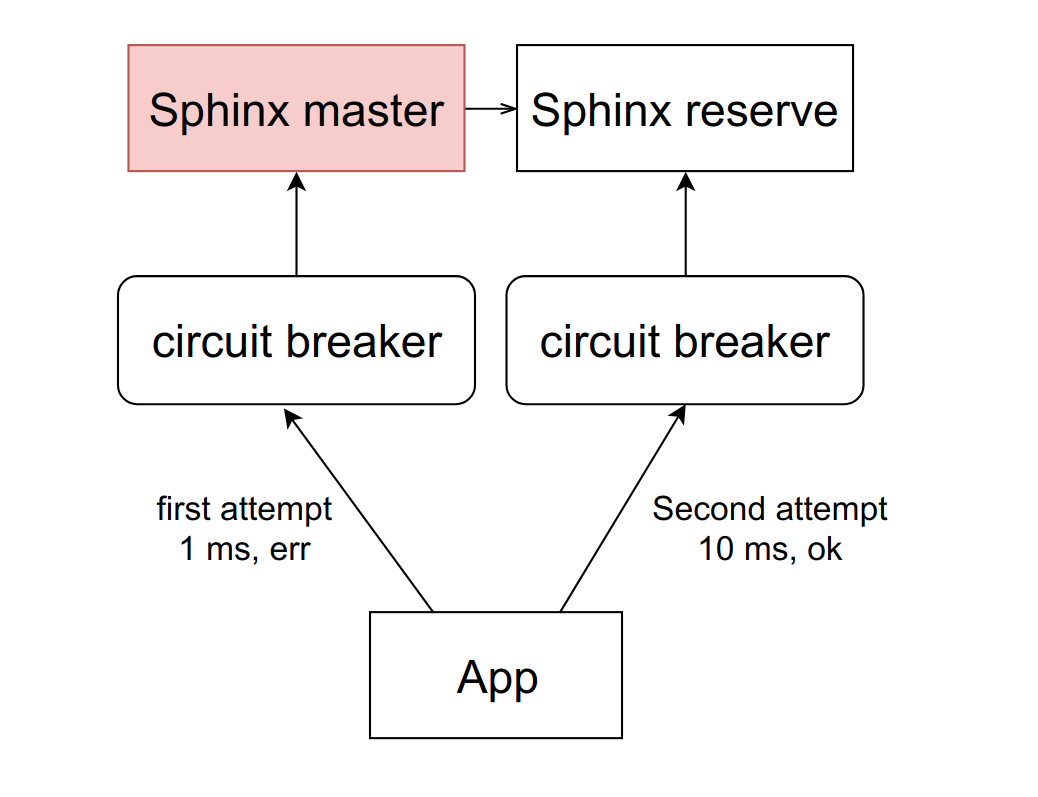

It looks like this:

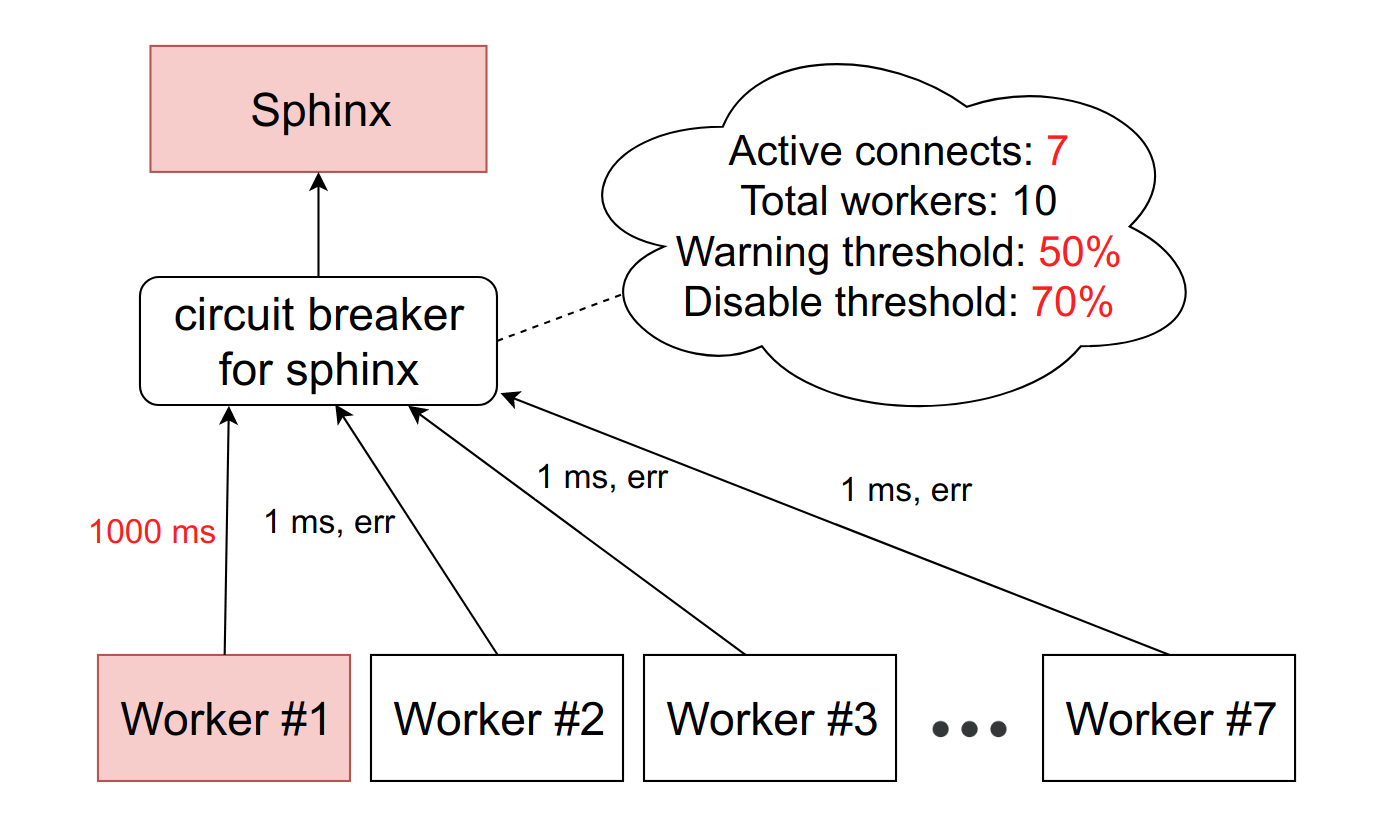

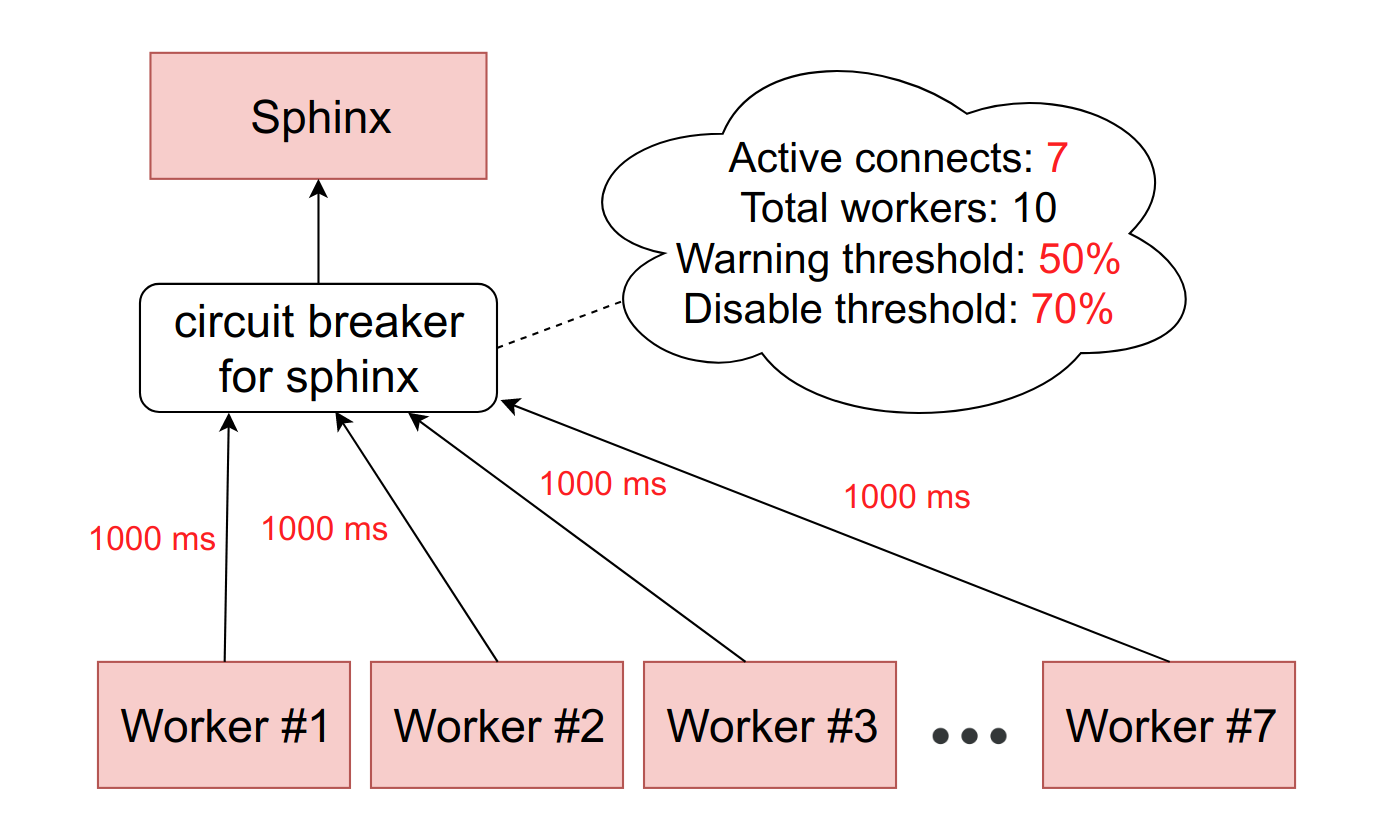

There is an abstract service Sphinx, which stands before the circuit breaker. Circuit breaker stores the number of active connections to a particular daemon. As soon as this value reaches the threshold, which we set as a percentage of the available FPM workers on the machine, we consider that the service has started to slow down. When you reach the first threshold, we send a notification to the person responsible for the service. Such a situation is either a sign that the limits need to be revised, or a precursor of problems with dullness.

If the situation worsens, and the number of slowing down workers reaches the second threshold value - we have about 10% in production - we cut down this host completely. More precisely, the service actually continues to work, but we stop sending requests to it. Circuit bäaker discards them and immediately gives the error to the workers, as if the service is lying.

From time to time, we automatically skip the request from some worker to see if the service has come to life anyway. If he answers adequately, then we again include him in the work.

All this is done in order to reduce the situation to the previous scheme with replication. Instead of waiting a second, before we realize that the host is unavailable, we immediately get an error and go to the backup host.

Implementations

Fortunately, Open Source does not stand still, and today you can take a turnkey solution on Github.

There are two basic approaches to implementing circuit breaker: a code-level library and a standalone daemon that proxies requests through itself.

The library option is more suitable if you have one main monolith in PHP that interacts with several services, and the services almost do not communicate with each other. Here are a few implementations available:

If you have a lot of services in different languages, and they all interact with each other, then the variant at the code level will have to be duplicated in all these languages. This is inconvenient in support, and ultimately leads to discrepancies in implementations.

Putting one demon in this case is much easier. In this case, you do not have to specifically edit the code. The demon tries to make the interaction transparent. However, this option is much more complex architectural .

Here are a few options (there is richer functionality, but there is also a circuit designer):

findings

- Do not rely on the network.

- All network requests must have a timeout, because the network can render indefinitely.

- Use circuit breaker if you want to avoid cascading application failure due to slowing down one small service.

Monitoring and telemetry

What does it give

- Predictability. It is important to predict what the load is now, and what will be in a month, in order to timely increase the number of service instances. This is especially true if you are dealing with an iron infrastructure, since ordering new servers takes time.

- Investigation of incidents. Sooner or later all the same something goes wrong, and you have to investigate it. And it is important to have enough data to understand the problem and be able to prevent such situations in the future.

- Accident prevention. Ideally, you should understand which patterns lead to accidents. It is important to track these patterns and promptly notify the team.

What to measure

Integration metrics

Since we are talking about the interaction between services, we monitor everything that is possible in relation to the communication of the service with the application. For example:

- number of requests;

- request processing time (including percentiles);

- number of logic errors;

- number of system errors.

It is important to distinguish between logic errors and system errors. If the service crashes, this is a regular situation: just switch to the second. But it is not so scary. If you start some kind of logic error, for example, strange data comes into the service or leave it, then this should be investigated. Most likely, the error is related to the bug in the code. She herself will not pass.

Internal metrics

By default, the service is a black box that does its job as it is not clear how. It is advisable to still understand and collect the maximum data that the service can provide. If the service is a specialized database that stores some data of your business logic, keep track of exactly how much data, what type they are, and other content metrics. If you have asynchronous interaction, it is also important to keep track of the queues through which your service communicates: their arrival and departure speed, time at different stages (if you have several intermediate points), the number of events in the queue.

Let's see what metrics can be collected using memcached as an example:

- hit / miss ratio;

- response time for different operations;

- RPS different operations;

- breakdown of the same data by different keys;

- top loaded keys;

- all internal metrics that the stats command gives.

How to do it

If you have a small company, a small project and few servers, then a good solution would be to connect some kind of SaaS for collecting and viewing - it is easier and cheaper. In this case, usually SaaS have extensive functionality, and do not have to take care of many things. Examples of such services:

Alternatively, you can always install on your own Zabbix, Grafana or any other self-hosted solution.

findings

- Collect all the metrics you can. Data is not superfluous. When you have to investigate something, you will thank yourself for your foresight.

- Do not forget about asynchronous interaction. If you have any queues that arrive gradually, it is important to understand how quickly they arrive, what happens to your events at the interface between services.

- If you write your service, teach him to give statistics on the work. Some of the data can be measured at the integration layer when we communicate with this service. The rest of the service should be able to give the stats conditional command. For example, in all of our services on Go, this functionality is standard.

- Customize triggers. Charts are good, but only while you look at them. It is important that you have a customized system that will tell you if something goes wrong.

Memento mori

And now a little about sad things. It may feel that the above is a panacea, and now nothing will ever fall. But even if you apply everything described above, something will ever fall. It is important to take this into account.

The reasons for the fall are many. For example, you could choose an insufficiently paranoid replication scheme. A meteorite fell into your data center, and then into the second. Or you just unrolled the code with a cunning error that unexpectedly surfaced.

For example, in Badoo there is a page "People nearby". There, users are looking for other people nearby to chat with them.

Now to render the page, the backend makes synchronous calls to about seven services. For clarity, reduce this number to two. One service is responsible for rendering the central unit with photos. The second - for the block of advertising on the left below. There may be those who want to become more visible. If we have a service that displays this advertisement, the block simply disappears.

Most users do not even know about this fact: our team responds quickly, and soon the unit simply appears again.

But not every functionality we can quietly remove. If we drop the service responsible for the central part of the page, it can’t be hidden. Therefore, it is important to tell the user in his language what is happening.

It is also desirable that the failure of one service does not lead to a cascade failure. For each service, a code must be written that handles its crash, otherwise the application may fall entirely.

But that's not all. Sometimes something falls without which you cannot live at all. For example, a central database or session service. It is important to correctly work out and show the user something adequate, somehow entertain him, say that everything is under control. At the same time, it is important that everything is really under control, and monitor workers are notified of the problem.

Die right

- Get ready for the fall. There is no silver bullet, so always lay a straw in case of a complete drop in service, even if your reservation is used.

- Do not allow cascade failures when problems with one of the services kill the entire application.

- Disable noncritical user functionality. This is normal. Many services are used only for internal needs and do not affect the functionality provided. For example, statistics service. It doesn’t matter to the user whether statistics are collected by you or not. It is important to him that the site worked.

Results

In order to securely integrate a new service into the system, we write a special API wrapper around Badoo, which takes on the following tasks:

- load balancing;

- timeouts;

- logic failover;

- circuit breaker;

- monitoring and telemetry;

- authorization logic;

- data serialization and deserialization.

It is better to make sure that all these items are also covered in your integration layer. Especially, if you use a ready Open-Source API client. It is important to remember that the integration layer is the source of the increased risk of cascading failure of your application.

Thanks for attention!

Literature