Motion Capture from iPi Soft

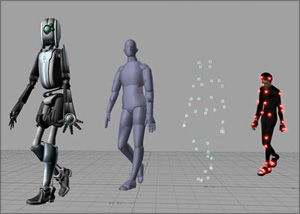


Motion capture (MoCap) is a technology for recording actors' movements for later use in computer graphics. Because the human body (like an animal's) is rather complex, it is much simpler, more convincing, and often cheaper to record an actor's movements and transfer them to three-dimensional models than to animate those models by hand. Most motion capture systems rely on markers or sensors attached to the actor's body, usually by means of a suit. But there is also a way to capture movement with the help of chromakey. We talked with Mikhail Nikonov, a member of the iPi Soft team (a winner of the Dubna school of innovation), about the details of the project and its prospects.

Tell us about the project.

Our company develops technology for creating three-dimensional animation of people. We believe this is currently one of the key problems in 3D graphics.

Mikhail Nikonov

Why?

Because rendering and other aspects of three-dimensional animation, even physical simulation, are already well developed, and the viewer no longer notices further progress in rendering. At the same time, as someone who works in animation, I can say that in many films, and especially in many computer games, the quality of animation still leaves room for improvement.

Body animation or facial animation?

Both facial and full body animation.

And what problems do you solve better than traditional motion capture, which relies on special video cameras, chromakey, lighting, and suits with sensors or markers?

We designed our system around maximum convenience for animators. All the users we talk to say that mobility matters to them, and so does a flexible shooting schedule: they do not want to book a studio in advance, and if something needs to be reshot, they do not want to wait a month for that studio. This is the problem we solve first and foremost: we give animators and 3D graphics studios a portable motion capture system with which every animator can be equipped, if desired.

What does it look like?

The system consists of a video camera and software that uses image processing and computer vision algorithms to extract skeletal animation from recorded video. In practice, the system is software that you install on your computer; the user buys the camera in a store. We recommend the Sony PlayStation Eye camera, which costs $30 and is sold at any electronics store.

What about the markers?

The whole point is that we do not use markers. That is the basis of our solution, and it is why we started developing our system in the first place. We want to retire suits with markers and suits with sensors to the storeroom, because they restrict creative freedom.

What about difficult shooting conditions?

Our system was tested by a well-known Moscow studio. They came with a laptop and cameras, and we did a capture in the first room we came across. There were no markers and the background was complex, yet our technology coped.

What about a moving camera?

We cannot work with a moving camera yet; our technology is not that mature. But we do have a number of advantages: even with low-resolution cameras, at 320x240, we get fairly high-quality animation. That said, we recommend 640x480.

Presumably the frame rate matters a great deal?

Yes. We recommend the Sony camera precisely because it supports up to 60 frames per second. In addition, we recommend using multiple cameras. Right now we recommend three or four cameras, and up to four are supported. Modern computers can record from six cameras at the same time, so we are now working on six-camera capture.

We will also work on a single-camera version. Microsoft is going to release a three-dimensional camera, and then motion capture will become even simpler.
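As a rough illustration of why the number of cameras depends on the computer, here is a back-of-the-envelope estimate of the raw video data rate for the resolutions and frame rate mentioned above. This is only a sketch: the function name is made up for this example, and the 2 bytes per pixel is an assumption (an uncompressed YUV 4:2:2 style format), not a figure given by iPi Soft.

```python
def raw_data_rate_mb_per_s(width, height, fps, cameras, bytes_per_pixel=2):
    """Uncompressed video data rate in megabytes (10**6 bytes) per second.

    bytes_per_pixel=2 is an assumption for illustration; the real value
    depends on the pixel format the camera driver delivers.
    """
    return width * height * bytes_per_pixel * fps * cameras / 1_000_000


if __name__ == "__main__":
    # Resolutions and frame rate taken from the interview; camera counts
    # cover the recommended, supported, and planned configurations.
    for width, height in ((320, 240), (640, 480)):
        for cameras in (1, 3, 4, 6):
            rate = raw_data_rate_mb_per_s(width, height, 60, cameras)
            print(f"{width}x{height} @ 60 fps, {cameras} camera(s): {rate:.1f} MB/s")
```

Under this assumption the data rate grows linearly with the number of cameras, so going from four to six cameras means the recording computer has to take in 1.5 times as much data.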

Does capture happen in real time?

Not yet. Our algorithm requires a lot of computing power: we use all the processor cores in the system, and we also use the video card for calculations, which is known as GPGPU. Even so, on the most powerful modern computers we have so far reached a speed of only 2 frames per second. But, of course, this is just a matter of time. Over the past year we made our algorithm four times faster, and computers became about twice as fast, so next year we will speed up video processing again, and real-time processing is not far off.
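To put these numbers in perspective, here is a small sketch of the arithmetic. It assumes, purely for illustration, that last year's gains (4x from the algorithm, 2x from hardware) repeat every year and multiply together, and it treats 30 or 60 frames per second as "real time". These assumptions and the function names are ours, not iPi Soft's.

```python
import math


def processing_time_s(clip_seconds, capture_fps, processing_fps):
    """Seconds needed to process a clip recorded at capture_fps."""
    frames = clip_seconds * capture_fps
    return frames / processing_fps


def years_to_real_time(current_fps, target_fps, yearly_speedup):
    """Years of compounding speedup needed to reach target_fps."""
    if current_fps >= target_fps:
        return 0.0
    return math.log(target_fps / current_fps) / math.log(yearly_speedup)


if __name__ == "__main__":
    CURRENT_FPS = 2.0            # processing speed quoted in the interview
    YEARLY_SPEEDUP = 4.0 * 2.0   # assumption: algorithm x hardware gains repeat

    # A 10-second clip shot at 60 fps is 600 frames.
    print("10 s clip:", processing_time_s(10, 60, CURRENT_FPS), "s to process")

    for target in (30.0, 60.0):
        years = years_to_real_time(CURRENT_FPS, target, YEARLY_SPEEDUP)
        print(f"{target:.0f} fps real time: about {years:.1f} years")
```

At 2 frames per second, a 10-second clip shot at 60 frames per second takes 300 seconds to process. If an eightfold yearly speedup really held, real time would be between one and two years away, which fits the "not far off" estimate; any slowdown in either factor would push that date out.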

What are your future plans?

We are only at the beginning of the journey. Of course, we will keep improving this technology; it needs several more years of development to reach maturity. It is no secret that we are also working on related technologies. What you are seeing now is motion capture for human actors. A human is a complex creature, acting matters, and it is almost impossible to model acting on a computer. With animals the opposite is true: we believe they should be simulated on a computer. This is what is called motion synthesis. We are now building technology prototypes that would make it possible to realistically simulate the animation of animals and monsters using genetic algorithms: you will have a virtual laboratory in which you can breed creatures and at the same time record the animation of the resulting creatures in different situations - for example,

Not without a nod to Spore?

Maybe, although I must say that Spore disappointed many people: the authors greatly simplified the game and abandoned the original approach, in which they wanted to model creatures more faithfully. They made the creatures cuter, but without taking biology or even the laws of physics into account. In the game a creature can be both very massive and very fast, which does not happen in real life. If you have a large mass, you are a lumbering elephant; for speed you need to be compact and lean, like a cheetah.

By the way, you can already try our motion capture technology yourself. Any modern laptop is suitable for recording video, but for processing we, of course, recommend a powerful desktop computer running Windows, most likely a gaming computer, on which you can process the animation quickly.

* * *

We asked Anatoly Belikov, whom you already know from our story about panoramic views of Paris, to comment on iPi Soft's development. Here is the specialist's take on the iPi Soft capture system: "There is one 'but'. The program's main advantage, working without markers, is also its main drawback. The fact is that conventional motion capture devices simply track the movement of markers in space. The software does not know what it is tracking, and further use of that data is not automated in any way: the resulting animation has to be manually 'attached' to the virtual object. For all the difficulties, this lets you track the movement of anything. You can stick markers on a face, on objects in the actors' hands, on a cat, a dog, and so on, and programs for optical marker tracking with consumer cameras have been around for many years. So you need to understand that iPi Soft's technology is so far limited to capturing the movement of human actors. But, of course, I will definitely use this technology."

* * *



Feel free to join the ranks of Intel's readers on Habr. Good luck!