Hyper-V Architecture: A Deep Dive

Everyone to your stations! Batten down the hatches! Prepare to dive!

In this article, I will try to talk more about the Hyper-V architecture than I did before .

Hyper-V is one of the server virtualization technologies that allows you to run many virtual operating systems on the same physical server. These OSs are referred to as “guest”, and the OS installed on the physical server is called “host”. Each guest operating system runs in its own isolated environment, and “thinks” that it works on a separate computer. They “do not know” about the existence of other guest OS and host OS.

These isolated environments are called "virtual machines" (or VM for short). Virtual machines are implemented in software, and provide guest OS and applications access to server hardware resources through a hypervisor and virtual devices. As already mentioned, the guest OS behaves as if it completely controls the physical server, and has no idea about the existence of other virtual machines. Also, these virtual environments can be called "partitions" (not to be confused with partitions on hard drives).

Having appeared for the first time as part of Windows Server 2008, Hyper-V now exists as a standalone Hyper-V Server product (which is de facto heavily stripped down Windows Server 2008), and in the new version - R2 - which has entered the enterprise-class virtualization systems market. Version R2 supports some new features, and this article will focus on this version.

The term “hypervisor” dates back to 1972 when IBM implemented virtualization in its System / 370 mainframes. This was a breakthrough in IT, as it circumvented architectural limitations and the high cost of using mainframes.

A hypervisor is a virtualization platform that allows you to run several operating systems on the same physical computer. It is the hypervisor that provides the isolated environment for each virtual machine, and it is it that provides the guest OS with access to the computer hardware.

Hypervisors can be divided into two types according to the launch method (on bare metal or inside the OS) and into two types according to architecture (monolithic and microkernel).

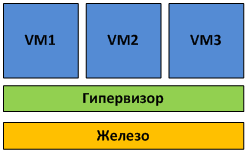

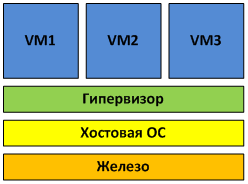

Type 1 hypervisor runs directly on the physical hardware and manages it independently. Guest OSs running inside virtual machines are located a higher level, as shown in Figure 1.

Fig. 1 Hypervisor of the first kind is launched on the "bare metal".

The work of hypervisors of the first kind directly with the equipment allows you to achieve greater performance, reliability and security.

Type 1 hypervisors are used in many Enterprise-class solutions:

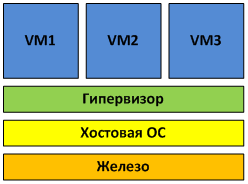

Unlike the 1st kind, the 2nd kind hypervisor runs inside the host OS (see Fig. 2).

Fig. 2 Hypervisor of the 2nd kind is launched inside the guest OS.

Virtual machines are launched in the user space of the host OS, which does not have the best effect on performance.

Examples of type 2 hypervisors are MS Virtual Server and VMware Server, as well as desktop virtualization products - MS VirtualPC and VMware Workstation.

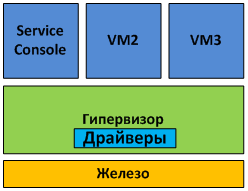

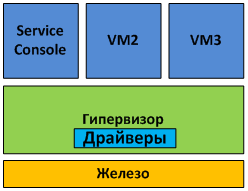

Monolithic architecture hypervisors include hardware device drivers in their code (see Figure 3).

Fig. 3. Monolithic architecture

Monolithic architecture has its advantages and disadvantages. Among the advantages include:

The disadvantages of monolithic architecture are as follows:

The most common example of monolithic architecture is VMware ESX.

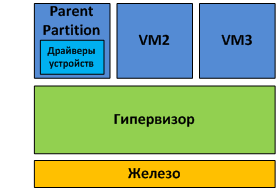

With microkernel architecture, device drivers work inside the host OS.

The host OS in this case runs in the same virtual environment as all VMs, and is called the "parent partition". All other environments, respectively, are "children". The only difference between the parent and child partitions is that only the parent partition has direct access to the server hardware. The hypervisor itself is dedicated to allocating memory and scheduling processor time.

Fig. 4. Microkernel architecture The

advantages of such an architecture are as follows:

The most striking example of microkernel architecture is, in fact, Hyper-V itself.

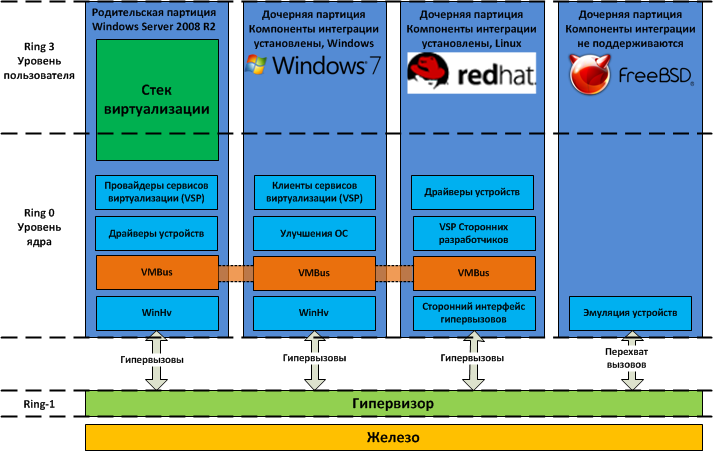

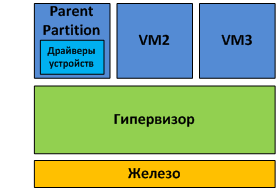

Figure 5 shows the basic elements of the Hyper-V architecture.

Figure 5 Hyper-V Architecture

As you can see from the figure, the hypervisor works at the next level after iron - which is typical for hypervisors of the first kind. Parent and child partitions work at a level higher than the hypervisor. Partitions in this case are isolation areas within which operating systems operate. Do not confuse them, for example, with partitions on the hard drive. In the parent partition, the host OS (Windows Server 2008 R2) and the virtualization stack are launched. It is also from the parent partition that external devices are managed, as well as child partitions. It’s easy to guess that the child partitions are created from the parent partition and are designed to run guest OSs. All partitions are connected to the hypervisor via the hyper-call interface, which provides the operating systems with a special API.The MSDN .

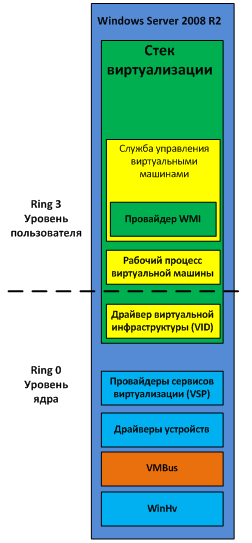

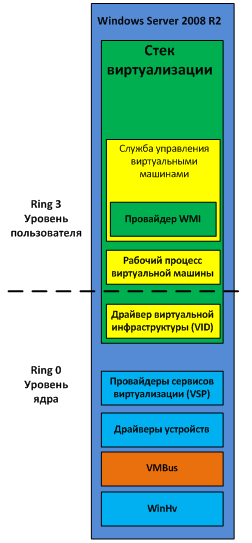

The parent partition is created immediately upon installation of the Hyper-V system role. The components of the parent partition are shown in Fig. 6. The

purpose of the parent partition is as follows:

Figure 6 Hyper-V Parent Partition Components

The following components that work in the parent partition are collectively called the virtualization stack:

In addition, two more components work in the parent partition. These are virtualization service providers (VSPs) and the virtual machine bus (VMBus).

Virtual

Machine Management Service The tasks of the Virtual Machine Management Service (VMMS) include:

When the virtual machine starts, VMMS creates a new virtual machine workflow. Learn more about workflows below.

Also, VMMS determines what operations are allowed to be performed with the virtual machine at the moment: for example, if a snapshot is deleted, then it will not be allowed to apply a snapshot during the delete operation. You can read more about working with snapshots (snapshots) of virtual machines in my corresponding article .

In more detail, VMMS manages the following states of virtual machines:

Other management tasks — Pause, Save, and Power Off — are not performed by the VMMS service, but directly by the workflow of the corresponding virtual machine.

The VMMS service operates both at the user level and at the kernel level as a system service (VMMS.exe) and depends on the Remote Procedure Call (RPC) and Windows Management Instrumentation (WMI) services. VMMS includes many components, including a WMI provider that provides an interface for managing virtual machines. Thanks to this, you can manage virtual machines from the command line and using VBScript and PowerShell scripts. System Center Virtual Machine Manager also uses this interface to manage virtual machines.

To manage the virtual machine from the parent partition, a special process is launched - the virtual machine workflow (VMWP). This process works at the user level. For each running virtual machine, VMMS starts a separate workflow. This allows you to isolate virtual machines from each other. To increase security, workflows are launched under the built-in user account Network Service.

The VMWP process is used to manage the corresponding virtual machine. Its tasks include:

Creating, configuring, and starting a virtual machine

Pause and continue working (Pause / Resume)

Saving and restoring state (Save / Restore State)

Creating snapshots (snapshots)

In addition, it is the workflow that emulates the virtual motherboard (VMB), which is used to provide guest OS memory, manage interrupts, and virtual devices.

Virtual Devices (VDevs) are software modules that implement configuration and device management for virtual machines. VMB includes a basic set of virtual devices that includes a PCI bus and system devices identical to the Intel 440BX chipset. There are two types of virtual devices:

The virtual infrastructure driver (vid.sys) runs at the kernel level and manages partitions, virtual processors, and memory. This driver is also an intermediate link between the hypervisor and the components of the user-level virtualization stack.

The hypervisor interface library (WinHv.sys) is a kernel-level DLL that loads in both the host and guest OS, provided that the integration component is installed. This library provides a hyper-call interface used for interaction between the OS and the hypervisor.

Virtualization service providers work in the parent partition and provide guest OSs with access to hardware devices through the Virtualization Services (VSC) client. Communication between the VSP and VSC is via the VMBus virtual bus.

The purpose of VMBus is to provide high-speed access between the parent and child partitions, while other access methods are much slower due to the high overhead when emulating devices.

If the guest OS does not support integration components, you have to use device emulation. This means that the hypervisor has to intercept the calls of the guest OS and redirect them to emulated devices, which, I recall, are emulated by the workflow of the virtual machine. Since the workflow runs in user space, the use of emulated devices leads to a significant decrease in performance compared to using VMBus. That is why it is recommended that you install the integration components immediately after installing the guest OS.

As already mentioned, when using VMBus, the interaction between the host and guest OS occurs according to the client-server model. In the parent partition, virtualization service providers (VSPs), which are the server part, are launched, and in the child partitions, the client part - VSC. VSC redirects guest OS requests via VMBus to VSP in the parent partition, and VSP itself redirects the request to the device driver. This interaction process is completely transparent to the guest OS.

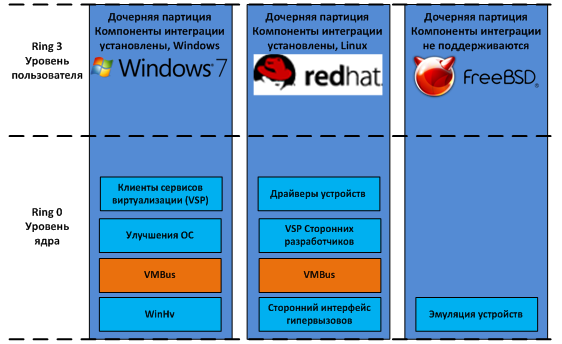

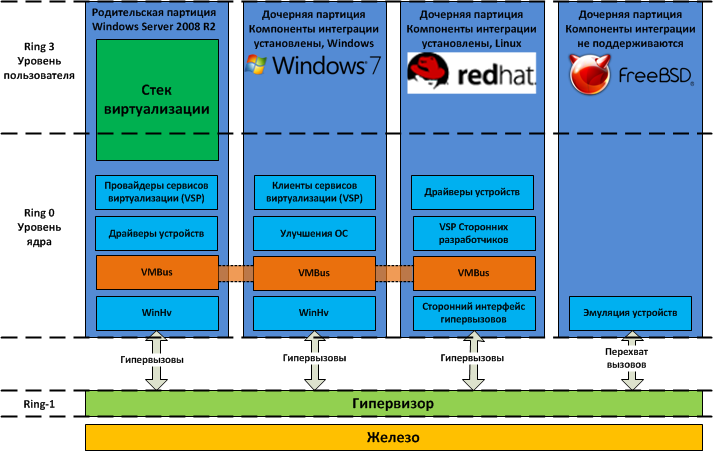

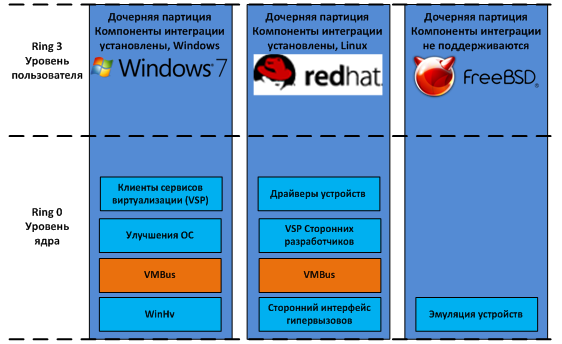

Let us return to our drawing with Hyper-V architecture, we will only reduce it a little, since we are only interested in child partitions.

Fig. 7 Child partitions

So, in child partitions can be installed:

In all three cases, the set of components in the child partitions will vary slightly.

Microsoft Windows operating systems starting with Windows 2000 support the installation of an integration component. After installing Hyper-V Integration Services in the guest OS, the following components are launched:

Also, integration components provide the following functionality:

There are also OSes that are not part of the Windows family, but that support integration components. At the moment, these are only SUSE Linux Enterprise Server and Red Hat Enterprise Linux. When installing the integration component, such OSs use third-party VSCs to interact with VSCs via VMBus and access equipment. The integration components for Linux were developed by Microsoft in conjunction with Citrix and are available for download at the Microsoft Download Center. Since integration components for Linux were released under the GPL v2 license, work is underway to integrate them into the Linux kernel through the Linux Driver Project , which will significantly expand the list of supported guest OSs.

This, perhaps, will end my second article on the architecture of Hyper-V. The previous article raised some questions from some readers, and I hope that now I answered them.

Hope the reading was not too boring. I often used the "academic language", but this was necessary, since the subject of the article involves a very large volume of theory and almost zero point zero practice.

Many thanks to Mitch Tulloch and the Microsoft Virtualization Team. Based on their book Understanding Microsoft Virtualization Solutions , an article was prepared.

In this article, I will try to cover the Hyper-V architecture in more depth than I did before.

What is Hyper-V?

Hyper-V is a server virtualization technology that lets you run multiple virtual operating systems on a single physical server. These operating systems are called "guests", and the OS installed on the physical server is called the "host". Each guest OS runs in its own isolated environment and "thinks" it is running on a separate computer. It "knows" nothing about the other guest OSs or the host OS.

These isolated environments are called "virtual machines" (VMs). Virtual machines are implemented in software. They give the guest OS and its applications access to the server's hardware resources through the hypervisor and virtual devices. As mentioned above, the guest OS behaves as if it had full control of the physical server and has no idea that other virtual machines exist. These virtual environments are also called "partitions" (not to be confused with hard-disk partitions).

Hyper-V first appeared as part of Windows Server 2008. It is now also available as a standalone product, Hyper-V Server (de facto a heavily stripped-down Windows Server 2008). With the new R2 release, Hyper-V has entered the market for enterprise-class virtualization systems. R2 adds a number of new features, and this article focuses on that version.

Hypervisor

The term "hypervisor" dates back to 1972, when IBM implemented virtualization on its System/370 mainframes. This was a breakthrough in IT, because it made it possible to work around architectural limitations and the high cost of using mainframes.

A hypervisor is a virtualization platform that lets you run several operating systems on the same physical computer. It is the hypervisor that provides each virtual machine with an isolated environment and gives guest OSs access to the computer's hardware.

Hypervisors can be classified in two ways. By how they are launched (on bare metal or inside an OS), they are divided into type 1 and type 2. By architecture, they are divided into monolithic and microkernel designs.

Type 1 hypervisor

A type 1 hypervisor runs directly on the physical hardware and manages it on its own. Guest OSs running inside virtual machines sit one level higher, as shown in Figure 1.

Fig. 1. A type 1 hypervisor runs on "bare metal".

Because a type 1 hypervisor works with the hardware directly, it can deliver better performance, reliability, and security.

Type 1 hypervisors are used in many enterprise-class solutions:

- Microsoft Hyper-V

- VMware ESX Server

- Citrix XenServer

Type 2 hypervisor

Unlike a type 1 hypervisor, a type 2 hypervisor runs inside a host OS (see Fig. 2).

Fig. 2. A type 2 hypervisor runs inside the host OS.

Virtual machines run in the user space of the host OS, which hurts performance.

Examples of type 2 hypervisors are MS Virtual Server and VMware Server, as well as the desktop virtualization products MS VirtualPC and VMware Workstation.
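The following minimal Python sketch recaps the two launch types by recording the products named above under their hypervisor type. The `HypervisorType` enum, the `PRODUCTS` table and `products_of` are illustrative names of my own. They are not part of any vendor API.

```python
from enum import Enum


class HypervisorType(Enum):
    TYPE_1 = "runs on bare metal"
    TYPE_2 = "runs inside a host OS"


# Products mentioned in this article, grouped by how the hypervisor is launched.
PRODUCTS = {
    "Microsoft Hyper-V": HypervisorType.TYPE_1,
    "VMware ESX Server": HypervisorType.TYPE_1,
    "Citrix XenServer": HypervisorType.TYPE_1,
    "MS Virtual Server": HypervisorType.TYPE_2,
    "VMware Server": HypervisorType.TYPE_2,
    "MS VirtualPC": HypervisorType.TYPE_2,
    "VMware Workstation": HypervisorType.TYPE_2,
}


def products_of(kind: HypervisorType) -> list:
    """Return the names of all products of the given type, sorted alphabetically."""
    return sorted(name for name, k in PRODUCTS.items() if k is kind)


if __name__ == "__main__":
    for kind in HypervisorType:
        print(f"{kind.name} ({kind.value}): {', '.join(products_of(kind))}")
```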

Monolithic hypervisor

Hypervisors with a monolithic architecture include hardware device drivers in their own code (see Fig. 3).

Fig. 3. Monolithic architecture

The monolithic architecture has both advantages and disadvantages. The advantages include:

- Higher (in theory) performance, because the drivers run in the hypervisor's space.

- Higher reliability, since a failure of the management OS (in VMware terms, the "Service Console") does not bring down all running virtual machines.

The disadvantages of the monolithic architecture are as follows:

- Only hardware for which the hypervisor includes drivers is supported. The hypervisor vendor therefore has to work closely with hardware vendors, so that drivers for new hardware are written in time and added to the hypervisor code. For the same reason, moving to a new hardware platform may require a different hypervisor version. Conversely, upgrading the hypervisor may require new hardware, because older devices are no longer supported.

- Potentially lower security, because third-party code (device drivers) is built into the hypervisor. Since driver code executes in the hypervisor's space, a vulnerability in it could in theory be exploited to gain control of both the host OS and all guest systems.

The most common example of monolithic architecture is VMware ESX.

Microkernel architecture

In a microkernel architecture, device drivers run inside the host OS.

In this case, the host OS runs in a virtual environment just like the VMs and is called the "parent partition". All other environments are "child" partitions. The only difference between the parent and child partitions is that only the parent partition has direct access to the server hardware. The hypervisor itself is responsible only for allocating memory and scheduling processor time.

Fig. 4. Microkernel architecture

The advantages of this architecture are as follows:

- No drivers written specifically for the hypervisor are needed. A microkernel hypervisor works with any hardware that has drivers for the parent partition's OS.

- Since the drivers run inside the parent partition, the hypervisor can devote more time to its core tasks: memory management and scheduling.

- Higher security. The hypervisor contains no extraneous code, so there are fewer ways to attack it.

The most prominent example of the microkernel architecture is Hyper-V itself.
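The practical difference between the two architectures comes down to where driver code lives. The following Python sketch models that difference under simplifying assumptions:

- A monolithic hypervisor can use a device only if it ships the driver itself.
- A microkernel hypervisor can use any device that the parent partition's OS has a driver for.

`Hypervisor`, `supports`, `driver_code_runs_in` and the device names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypervisor:
    """Toy model of where device drivers live in each architecture."""
    name: str
    monolithic: bool
    # Drivers compiled into the hypervisor; only meaningful for monolithic designs.
    builtin_drivers: frozenset = frozenset()


def supports(hv: Hypervisor, device: str, parent_os_drivers: set) -> bool:
    """Return True if the hypervisor can use the given physical device.

    Monolithic: the device needs a driver shipped inside the hypervisor.
    Microkernel: any driver available to the parent partition's OS will do.
    """
    if hv.monolithic:
        return device in hv.builtin_drivers
    return device in parent_os_drivers


def driver_code_runs_in(hv: Hypervisor) -> str:
    """Where third-party driver code executes, i.e. where a driver bug lands."""
    return "hypervisor" if hv.monolithic else "parent partition"


if __name__ == "__main__":
    # Hypothetical device names, for illustration only.
    parent_drivers = {"old-nic", "new-raid"}
    mono = Hypervisor("monolithic-example", True, frozenset({"old-nic"}))
    micro = Hypervisor("microkernel-example", False)
    for hv in (mono, micro):
        print(hv.name, supports(hv, "new-raid", parent_drivers), driver_code_runs_in(hv))
```

In this model, adding support for new hardware means changing the hypervisor in the monolithic case but only the parent OS's driver set in the microkernel case. This mirrors the trade-offs listed above.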

Hyper-V Architecture

Figure 5 shows the basic elements of the Hyper-V architecture.

Fig. 5. Hyper-V architecture

As the figure shows, the hypervisor runs on the layer directly above the hardware, which is typical of type 1 hypervisors. The parent and child partitions run one level above the hypervisor. Partitions here are isolation units within which operating systems run; do not confuse them with hard-disk partitions. The parent partition runs the host OS (Windows Server 2008 R2) and the virtualization stack. External devices and the child partitions are also managed from the parent partition. As you might guess, child partitions are created from the parent partition and are used to run guest OSs. All partitions communicate with the hypervisor through the hypercall interface, which provides operating systems with a special API (see MSDN for details).

Parent Partition

The parent partition is created as soon as the Hyper-V role is installed. Its components are shown in Fig. 6.

The parent partition is responsible for:

- Creating, deleting, and managing child partitions, including remote management, through the WMI provider.

- Controlling access to hardware devices, except for processor time and memory, which are managed by the hypervisor.

- Power management and handling of hardware errors, if they occur.

Fig. 6. Hyper-V parent partition components
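The division of labour in the list above can be expressed as a one-line rule: the hypervisor arbitrates CPU time and memory, and the parent partition controls access to all other hardware. This is only an illustrative sketch. The resource labels are made up and do not correspond to Hyper-V identifiers.

```python
# Per the list above: the hypervisor itself arbitrates CPU time and memory,
# while access to every other hardware device goes through the parent partition.
HYPERVISOR_MANAGED = frozenset({"cpu_time", "memory"})


def access_controller(resource: str) -> str:
    """Return the component that controls access to a hardware resource."""
    return "hypervisor" if resource in HYPERVISOR_MANAGED else "parent partition"


if __name__ == "__main__":
    # Resource names are illustrative labels, not Hyper-V identifiers.
    for r in ("cpu_time", "memory", "disk", "network", "power"):
        print(f"{r:>8} -> {access_controller(r)}")
```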

Virtualization stack

The following components, which run in the parent partition, are collectively called the virtualization stack:

- Virtual Machine Management Service (VMMS)

- Virtual Machine Worker Processes (VMWP)

- Virtual devices

- Virtual Infrastructure Driver (VID)

- Hypervisor Interface Library

Two more components also run in the parent partition: the virtualization service providers (VSPs) and the virtual machine bus (VMBus).

Virtual Machine Management Service (VMMS)

The tasks of the Virtual Machine Management Service (VMMS) include:

- Managing virtual machine state (on/off)

- Adding and removing virtual devices

- Managing snapshots

When a virtual machine starts, VMMS creates a new virtual machine worker process. Worker processes are described in more detail below.

VMMS also determines which operations are currently allowed on a virtual machine. For example, while a snapshot is being deleted, applying a snapshot is not allowed. You can read more about working with virtual machine snapshots in my article on that topic.

More specifically, VMMS manages the following virtual machine states:

- Starting

- Active

- Not active

- Taking snapshot

- Applying snapshot

- Deleting snapshot

- Merging disk

Other management tasks (Pause, Save, and Power Off) are not performed by VMMS. They are handled directly by the worker process of the corresponding virtual machine.
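Here is a simplified Python sketch of how VMMS might gate and route operations. Only two behaviours come from the text:

- Applying a snapshot is refused while one is being deleted.
- Pause, Save, and Power Off go to the VM worker process (VMWP) rather than to VMMS.

The other blocking rules are assumptions for illustration. All names (`VmState`, `route`, the operation strings) are hypothetical and are not Hyper-V internals.

```python
from enum import Enum, auto


class VmState(Enum):
    """The VM states listed above as managed by VMMS."""
    STARTING = auto()
    ACTIVE = auto()
    NOT_ACTIVE = auto()
    TAKING_SNAPSHOT = auto()
    APPLYING_SNAPSHOT = auto()
    DELETING_SNAPSHOT = auto()
    MERGING_DISK = auto()


SNAPSHOT_OPS = {"take_snapshot", "apply_snapshot", "delete_snapshot"}

# Operations refused while a VM is in a given state. The article only states the
# DELETING_SNAPSHOT -> apply_snapshot rule; the rest is an illustrative assumption.
BLOCKED = {
    VmState.TAKING_SNAPSHOT: SNAPSHOT_OPS,
    VmState.APPLYING_SNAPSHOT: SNAPSHOT_OPS,
    VmState.DELETING_SNAPSHOT: SNAPSHOT_OPS,
    VmState.MERGING_DISK: SNAPSHOT_OPS,
}

# Handled directly by the VM's worker process rather than by VMMS.
WORKER_OPS = {"pause", "save", "power_off"}


def route(state: VmState, operation: str) -> str:
    """Reject operations that conflict with the current state, else pick a handler."""
    if operation in BLOCKED.get(state, set()):
        raise PermissionError(f"{operation} not allowed while {state.name}")
    return "VMWP" if operation in WORKER_OPS else "VMMS"


if __name__ == "__main__":
    print(route(VmState.ACTIVE, "pause"))          # VMWP
    print(route(VmState.ACTIVE, "take_snapshot"))  # VMMS
    try:
        route(VmState.DELETING_SNAPSHOT, "apply_snapshot")
    except PermissionError as err:
        print("refused:", err)
```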

VMMS runs in user mode as a system service (VMMS.exe) and depends on the Remote Procedure Call (RPC) and Windows Management Instrumentation (WMI) services. VMMS consists of many components, including a WMI provider that exposes an interface for managing virtual machines. This makes it possible to manage virtual machines from the command line and with VBScript and PowerShell scripts. System Center Virtual Machine Manager also uses this interface to manage virtual machines.
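The sketch below illustrates the WMI interface. It holds the query a script might send to the Hyper-V WMI provider on Windows Server 2008 R2 and decodes a few `EnabledState` values. It assumes the "v1" provider's `root\virtualization` namespace and its `Msvm_ComputerSystem` class. Only a subset of the state codes is listed, so check them against the MSDN reference before relying on them.

Running the query itself requires a WMI client on the Hyper-V host (VBScript, PowerShell, or similar), which is left out here. The rows in the usage example are made up.

```python
# Values below reflect the Hyper-V "v1" WMI provider used by Windows Server 2008 R2;
# check them against the Msvm_ComputerSystem reference on MSDN.
NAMESPACE = r"root\virtualization"

# Msvm_ComputerSystem also has an instance for the host itself,
# so filter on Caption to get only virtual machines.
VM_QUERY = (
    "SELECT ElementName, EnabledState FROM Msvm_ComputerSystem "
    "WHERE Caption = 'Virtual Machine'"
)

# A few common EnabledState codes (documented names: Enabled, Disabled,
# Paused, Suspended), shown here with friendlier labels. Not the full list.
ENABLED_STATE = {
    2: "Running",
    3: "Off",
    32768: "Paused",
    32769: "Saved",
}


def describe(element_name: str, enabled_state: int) -> str:
    """Turn one (ElementName, EnabledState) row into a readable line."""
    label = ENABLED_STATE.get(enabled_state, f"other ({enabled_state})")
    return f"{element_name}: {label}"


if __name__ == "__main__":
    # Hypothetical rows, standing in for what a WMI client would return.
    for name, state in [("web01", 2), ("db01", 32769), ("test01", 3)]:
        print(describe(name, state))
```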

Virtual Machine Worker Process (VMWP)

To manage a virtual machine from the parent partition, a dedicated process is started: the virtual machine worker process (VMWP). This process runs in user mode. VMMS starts a separate worker process for each running virtual machine, which isolates the virtual machines from one another. For added security, worker processes run under the built-in Network Service account.

The VMWP process manages its virtual machine. Its tasks include:

- Creating, configuring, and starting the virtual machine

- Pausing and resuming (Pause / Resume)

- Saving and restoring state (Save / Restore State)

- Creating snapshots

In addition, the worker process emulates the virtual motherboard (VMB), which provides memory to the guest OS and manages interrupts and virtual devices.
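The one-worker-per-VM design can be sketched as follows. This is a toy Python model, not how vmwp.exe is actually implemented. The hypothetical `Vmms` class keeps a separate `WorkerProcess` object for each running VM, so pausing or stopping one VM cannot affect another VM's state.

```python
from dataclasses import dataclass, field


@dataclass
class WorkerProcess:
    """Stand-in for one VM worker process (VMWP); each serves exactly one VM."""
    vm_name: str
    state: str = "running"

    def pause(self) -> None:
        self.state = "paused"

    def resume(self) -> None:
        self.state = "running"


@dataclass
class Vmms:
    """Stand-in for VMMS, which starts a separate worker for every running VM."""
    workers: dict = field(default_factory=dict)

    def start_vm(self, vm_name: str) -> WorkerProcess:
        if vm_name in self.workers:
            raise RuntimeError(f"{vm_name} is already running")
        worker = WorkerProcess(vm_name)
        self.workers[vm_name] = worker
        return worker

    def stop_vm(self, vm_name: str) -> None:
        # Removing one worker has no effect on the others.
        del self.workers[vm_name]


if __name__ == "__main__":
    vmms = Vmms()
    a, b = vmms.start_vm("vm-a"), vmms.start_vm("vm-b")
    a.pause()
    print(a.state, b.state)  # paused running
```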

Virtual devices

Virtual devices (VDevs) are software modules that implement device configuration and management for virtual machines. The VMB includes a basic set of virtual devices, including a PCI bus and system devices equivalent to those of the Intel 440BX chipset. There are two types of virtual devices:

- Emulated devices mimic specific real hardware, such as a VESA video adapter. There are many of them: BIOS, DMA, APIC, ISA and PCI buses, interrupt controllers, timers, power management, serial port controllers, the system speaker, the PS/2 keyboard and mouse controller, the emulated (legacy) Ethernet adapter (DEC/Intel 21140), the floppy drive, the IDE controller, and the VESA/VGA video adapter. This is why the guest OS can boot only from the virtual IDE controller and not from SCSI. The SCSI controller is a synthetic device and becomes usable only once the guest OS has loaded its integration components.

- Synthetic devices do not correspond to any real hardware. Examples include the synthetic video adapter, human interface devices (HIDs), the network adapter, the SCSI controller, the synthetic interrupt controller, and the memory controller. Synthetic devices can be used only if the integration components are installed in the guest OS. They access the server's hardware devices through the virtualization service providers running in the parent partition. This access goes over the VMBus virtual bus, which is much faster than emulating physical devices.
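The consequences of the two device types can be summarised in a small Python sketch. The device sets are abbreviated from the lists above. `can_boot_from` assumes that booting happens before the guest has loaded the VSCs that synthetic devices need. All names are illustrative.

```python
# Device categories as described above (abbreviated).
EMULATED = {"IDE controller", "legacy network adapter", "VESA/VGA video", "PS/2 controller"}
SYNTHETIC = {"SCSI controller", "network adapter", "synthetic video", "HID"}
STORAGE_CONTROLLERS = {"IDE controller", "SCSI controller"}


def usable_devices(integration_components: bool) -> set:
    """Synthetic devices need the guest-side VSCs shipped with the integration components."""
    return (EMULATED | SYNTHETIC) if integration_components else set(EMULATED)


def can_boot_from(controller: str) -> bool:
    """Only emulated storage is reachable before the guest OS (and its VSCs) has loaded."""
    return controller in STORAGE_CONTROLLERS and controller in EMULATED


if __name__ == "__main__":
    print(sorted(usable_devices(False)))
    print(can_boot_from("IDE controller"), can_boot_from("SCSI controller"))  # True False
```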

Virtual Infrastructure Driver (VID)

The virtual infrastructure driver (vid.sys) runs in kernel mode and manages partitions, virtual processors, and memory. It also serves as the intermediary between the hypervisor and the user-mode components of the virtualization stack.

Hypervisor Interface Library

The hypervisor interface library (WinHv.sys) is a kernel-mode DLL that is loaded in both the host OS and the guest OS. In the guest OS, it is loaded only if the integration components are installed. This library provides the hypercall interface that the OS uses to communicate with the hypervisor.
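Before a guest uses hypercalls, it has to detect the hypervisor. According to Microsoft's Hypervisor Top-Level Functional Specification, CPUID leaf 1 sets ECX bit 31 when a hypervisor is present. Leaf 0x40000000 then returns a vendor signature, which is "Microsoft Hv" under Hyper-V.

Executing CPUID requires native code (for example, the MSVC `__cpuid` intrinsic). This Python sketch therefore only decodes register values that have already been read. The helper names are my own.

```python
import struct

HYPERV_SIGNATURE = "Microsoft Hv"


def hypervisor_present(leaf1_ecx: int) -> bool:
    """CPUID leaf 1: ECX bit 31 is set when running under a hypervisor."""
    return bool((leaf1_ecx >> 31) & 1)


def vendor_signature(ebx: int, ecx: int, edx: int) -> str:
    """Decode the 12-byte vendor ID returned by CPUID leaf 0x40000000.

    Each 32-bit register holds four ASCII characters in little-endian order.
    """
    return struct.pack("<III", ebx, ecx, edx).decode("ascii")


if __name__ == "__main__":
    # Register values (EBX, ECX, EDX) that encode the Hyper-V signature.
    sig = vendor_signature(0x7263694D, 0x666F736F, 0x76482074)
    print(sig, sig == HYPERV_SIGNATURE)  # Microsoft Hv True
```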

Virtualization Service Providers (VSPs)

Virtualization service providers run in the parent partition and give guest OSs access to hardware devices through virtualization service clients (VSCs). VSPs and VSCs communicate over the VMBus virtual bus.

Virtual Machine Bus (VMBus)

VMBus provides high-speed communication between the parent and child partitions. Other access methods are much slower because of the high overhead of device emulation.

If the guest OS does not support integration components, device emulation has to be used instead. The hypervisor must then intercept the guest OS's calls and redirect them to emulated devices. As noted above, these devices are emulated by the virtual machine's worker process. Because the worker process runs in user mode, emulated devices perform significantly worse than VMBus. This is why it is recommended to install the integration components immediately after installing the guest OS.

As already mentioned, communication between the host and guest OS over VMBus follows a client-server model. The server side, the virtualization service providers (VSPs), runs in the parent partition. The client side, the VSCs, runs in the child partitions. A VSC forwards guest OS requests over VMBus to the VSP in the parent partition, and the VSP passes each request on to the device driver. This process is completely transparent to the guest OS.
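The client-server exchange described above can be modelled with two queues. In this Python sketch, `Vsc` puts a request on a toy `VMBusChannel`. `Vsp` then hands the request to a driver and posts the reply back. This is a single-threaded simplification: real VMBus uses shared-memory ring buffers, and the two sides run concurrently in separate partitions. All class names and the driver function are hypothetical.

```python
from collections import deque
from typing import Callable


class VMBusChannel:
    """Toy VMBus channel: one queue in each direction between child and parent."""

    def __init__(self) -> None:
        self.to_parent: deque = deque()
        self.to_child: deque = deque()


class Vsp:
    """Server side, in the parent partition: passes requests to the real driver."""

    def __init__(self, channel: VMBusChannel, driver: Callable[[dict], bytes]) -> None:
        self.channel = channel
        self.driver = driver

    def serve_pending(self) -> None:
        while self.channel.to_parent:
            request = self.channel.to_parent.popleft()
            self.channel.to_child.append(self.driver(request))


class Vsc:
    """Client side, in the child partition: the guest sees an ordinary device."""

    def __init__(self, channel: VMBusChannel) -> None:
        self.channel = channel

    def submit_read(self, block: int) -> None:
        self.channel.to_parent.append({"op": "read", "block": block})

    def collect(self) -> bytes:
        return self.channel.to_child.popleft()


def fake_disk_driver(request: dict) -> bytes:
    # Hypothetical stand-in for the physical disk driver in the parent partition.
    return f"data of block {request['block']}".encode()


if __name__ == "__main__":
    channel = VMBusChannel()
    vsp, vsc = Vsp(channel, fake_disk_driver), Vsc(channel)
    vsc.submit_read(7)
    vsp.serve_pending()  # in Hyper-V the VSP runs concurrently in the parent
    print(vsc.collect())  # b'data of block 7'
```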

Child partitions

Let us return to the Hyper-V architecture diagram, trimmed down a little since we are now interested only in the child partitions.

Fig. 7. Child partitions

A child partition can run:

- A Windows OS with integration components installed (Windows 7 in our example)

- A non-Windows OS that supports integration components (Red Hat Enterprise Linux in our example)

- An OS that does not support integration components (for example, FreeBSD)

The set of components in the child partition differs slightly in each of these three cases.

Windows OS with integration components installed

Microsoft Windows operating systems from Windows 2000 onward support installing the integration components. Once Hyper-V Integration Services are installed in the guest OS, the following components run:

- Virtualization service clients. VSCs are synthetic devices that provide access to physical devices over VMBus through the VSPs. VSCs appear in the system only after the integration components are installed, and they make it possible to use synthetic devices. Without the integration components, the guest OS can use only emulated devices. Windows 7 and Windows Server 2008 R2 include the integration components, so they do not need to be installed separately.

- Enlightenments. These are modifications to the OS code that let the OS cooperate with the hypervisor and thus run more efficiently in a virtual environment. They affect the disk, network, graphics, and I/O subsystems. Windows Server 2008 R2 and Windows 7 already contain these modifications. On other supported operating systems, the integration components must be installed to get them.

The integration components also provide the following functionality:

- Heartbeat: helps determine whether the child partition responds to requests from the parent.

- Registry key exchange: allows registry keys to be exchanged between the child and parent partitions.

- Time synchronization between host and guest OS

- Guest OS shutdown

- Volume Shadow Copy Service (VSS) support, which enables consistent backups.
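At its core, the heartbeat service means the parent checks how recently the guest answered. Here is a minimal Python sketch of that idea. The timeout value and the `HeartbeatMonitor` class are assumptions for illustration; they are not Hyper-V settings or APIs.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HeartbeatMonitor:
    """Parent-side tracking of a child partition's heartbeat.

    timeout_s is an arbitrary illustrative value, not a Hyper-V setting.
    """
    timeout_s: float = 10.0
    last_reply: Optional[float] = None

    def record_reply(self, now: float) -> None:
        # The guest answered a heartbeat request at time `now`.
        self.last_reply = now

    def status(self, now: float) -> str:
        if self.last_reply is None:
            return "no contact"
        return "ok" if now - self.last_reply <= self.timeout_s else "not responding"


if __name__ == "__main__":
    mon = HeartbeatMonitor(timeout_s=5.0)
    print(mon.status(0.0))    # no contact
    mon.record_reply(100.0)
    print(mon.status(103.0))  # ok
    print(mon.status(106.0))  # not responding
```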

Non-Windows OS that supports integration components

Some operating systems outside the Windows family also support integration components. At the time of writing, these are only SUSE Linux Enterprise Server and Red Hat Enterprise Linux. With the integration components installed, these OSs use third-party VSCs to communicate with the VSPs over VMBus and to access hardware. The Linux integration components were developed by Microsoft together with Citrix and are available from the Microsoft Download Center. Because they were released under the GPL v2 license, work is under way to merge them into the Linux kernel through the Linux Driver Project. This should significantly expand the list of supported guest OSs.

Instead of a conclusion

This concludes my second article on the Hyper-V architecture. The previous article raised some questions among readers, and I hope this one has answered them.

I hope the reading was not too dry. I often resorted to "academic language", but it was unavoidable: the topic involves a great deal of theory and next to zero practice.

Many thanks to Mitch Tulloch and the Microsoft Virtualization Team. This article is based on their book Understanding Microsoft Virtualization Solutions.