Your robot needs to ignore you

- Translation

In 2014, a Google project made a bang loud enough to be heard in Detroit [once “the car capital of the United States” - translator's note]. Its newest robocar prototype had neither a steering wheel nor a brake pedal. The hint was clear: future cars will be completely autonomous and will not need a driver. Even more galling, instead of retrofitting a Prius or a Lexus, as Google had done with its two previous generations of robocars, the company built a custom body for the new car with the help of auto parts suppliers. Most surprising of all, the new car was born an experienced driver: it already had 1.1 million km of experience, inherited from the software of previous prototypes. Today, the robocars have a few more years of practice, and the collective mileage of the entire fleet exceeds 2.1 million km - this is the equivalent of a person

In response, car companies are pouring billions into software development, and the epicenter of automotive innovation has moved from Detroit to Silicon Valley. If automakers could control how the transition to robocars unfolds, they would make it gradual. In the first stage, driver assistance technologies would be refined. In the second, a few luxury models would appear with limited autonomy in specific situations, most likely on the highway. In the third, these limited capabilities would trickle down to cheaper cars.

Consulting firm Deloitte describes such a step-by-step approach as incremental, one “in which automakers invest in new technologies (for example, anti-lock braking, electronic stability control, safety cameras, telematics) for expensive car lines and then work from the top down as economies of scale kick in.” Such a cautious approach, while attractive, may be wrong. Approaching autonomy slowly, by gradually adding computer-based safety technologies that help people steer, brake and accelerate, may prove an unsafe strategy in the long run, both for human lives and for the auto industry.

One reason car companies prefer a gradual approach is that it helps them keep control of the industry. Robocars need a smart operating system that perceives the car's surroundings, interprets the incoming stream of data and reacts accordingly. Creating software that works as an AI, and artificial perception in particular, requires experienced staff and considerable intellectual depth. Automakers are well versed in building complex mechanical systems, but they lack the people, culture and experience needed to push into the dense thicket of AI research. And Google is already there.

Robocars bring uncertainty to the automotive industry. For the past century, selling cars directly to customers has been profitable. But if robocars let consumers pay per trip instead of buying a car, then selling generic vehicles to transport companies that rent out robotaxis may not be so lucrative. And if automakers have to partner with software developers to build robocars, the automakers may end up with the smaller slice of the pie.

The amount of money at stake is growing like the pot in a poker game that lasts all night. Larry Burns, a former GM executive and University of Michigan professor, explains that the 4.8 trillion kilometers (about 3 trillion miles) that people travel every year in the US alone is a hidden goldmine. He says: “If the one who succeeds first captures 10% of those 4.8 trillion kilometers every year and makes 10 cents per mile, then his annual profit will be $30 billion - this is comparable to the fat years of Apple and ExxonMobil.”
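The arithmetic behind Burns's estimate can be checked in a few lines. The sketch below is only an illustration: the function name and its parameters are hypothetical, and it assumes that the 4.8 trillion km figure is converted to miles before the 10% share and the 10 cents per mile are applied.

```python
KM_PER_MILE = 1.609344  # length of the international mile in kilometers


def annual_revenue_usd(total_km: float, market_share: float, usd_per_mile: float) -> float:
    """Return yearly revenue for a given share of all distance traveled.

    total_km      - total distance traveled per year, in kilometers
    market_share  - fraction of that distance captured (0.10 means 10%)
    usd_per_mile  - earnings per mile, in US dollars
    """
    total_miles = total_km / KM_PER_MILE
    return total_miles * market_share * usd_per_mile


# Figures quoted in the text: 4.8 trillion km, 10% share, 10 cents per mile.
estimate = annual_revenue_usd(4.8e12, 0.10, 0.10)
print(f"${estimate / 1e9:.1f} billion per year")  # prints "$29.8 billion per year"
```

Under these assumptions the result rounds to the $30 billion quoted above; the small gap comes from treating 4.8 trillion km as roughly 3 trillion miles.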

Automakers and Google are like giant tankers that, at risk of colliding, are slowly steaming toward the same destination: squeezing the most profit out of the next generation of cars. Automakers prefer an evolutionary approach in which driver assistance modules are developed until they can take over driving for long stretches. Google, by contrast, is planning a sharp jump to full autonomy.

Automakers are not the only ones who prefer a gradual approach. The US Department of Transportation and the Society of Automotive Engineers (SAE) have each developed their own roadmaps to autonomy. Their stages differ slightly, but they share the assumption that the best path forward is a sequence of gradual, linear steps in which a “driving assistance” system temporarily takes control of the car but quickly hands it back to the person as soon as a difficult situation arises.

We disagree with the idea that a gradual transition is the best way to proceed. For many reasons, humans and robots should not take turns behind the wheel. Many experts, however, believe that the optimal model is to split control of the car between the person and the software, with the driver remaining the master and the software the servant. Software built on a paradigm in which people and machines work in tandem is known to engineers as human-in-the-loop (HITL). In many situations, the joint work of human and machine really does lead to excellent results. Experienced surgeons use robotic manipulators to achieve superhuman precision in operations. HITL software is used on modern commercial aircraft, as well as in industrial and military applications.

The arguments for keeping a human in the loop are persuasive. The dream of combining the best abilities of a person with the best abilities of a machine is very attractive; it resembles the optimization puzzle of hand-picking professional American football players for a dream team. Machines are highly accurate, do not get tired and are good at analysis. They excel at detecting patterns and carrying out calculations and measurements. People are good at drawing conclusions, connecting seemingly random objects or events, and learning from experience.

In theory, if you combine a person with an AI, you can end up with an attentive, sensitive, and extremely experienced driver. After all, the advantage of the HITL approach to automation is that you can combine the strengths of people and machines.

In reality, HITL software can work in robocars only if each party, human and software, has clear and consistent responsibilities. Unfortunately, the automotive industry and Department of Transportation officials have not proposed a model that defines those responsibilities precisely. Instead, they propose keeping the person in the loop while giving him a vague and shifting set of duties.

At the heart of the gradual transition strategy is the assumption that, when something unexpected happens, a beep or vibration will alert the person that he must quickly get back in the driver's seat and deal with the problem. A gradual path to full automation may seem reasonable and safe. In practice, a gradual transition from partial to full automation of driving will be dangerous.

In some situations machines can work successfully alongside people, but driving is not one of them. The main reason the HITL scheme does not suit driving is that driving is boring and tedious. People gladly hand tedious tasks over to machines, and just as willingly give up responsibility for them.

When I took part in naval training, I learned one of the key doctrines of good management: never divide a critical task between two people. This is a classic management error known as split responsibility. The problem with split responsibility is that each person involved in the task believes he can safely leave it to the other. But if neither of them sees it through, the mission fails. If people and machines share responsibility for driving, the results can be disastrous.

A heartbreaking example of split responsibility between human and machine is Air France flight 447, which crashed into the Atlantic Ocean in 2009. All 228 people aboard died. Analysis of the aircraft's black box showed that the cause of the catastrophe was neither terrorism nor mechanical failure. What failed was the handover of control from the autopilot to the flight crew.

In flight, the instruments the autopilot depended on iced over, and the autopilot suddenly switched off. The pilots, confused and out of practice, unexpectedly had to take manual control of what was supposed to be a routine flight. Thrust into responsibility without warning, they made several catastrophic mistakes that sent the aircraft into the sea.

In the fall of 2012, several Google employees were allowed to take self-driving Lexus cars on their highway commute to work. The idea was that the person would drive the Lexus to the highway, merge, and, once settled in a lane, switch on the autopilot. Each employee was warned that the technology was at an early stage and that they had to stay attentive at all times. Each car was equipped with a video camera that continuously filmed the person in the cabin.

Employees had only positive things to say about the robocars. They all described the advantages of not having to deal with rush-hour traffic and of arriving home fresh and rested, with more time for their families. But problems came to light when engineers watched the videos from the cabins. One employee turned completely away from the steering wheel to look for a phone charger in the back seat. Others stopped paying attention to the road and relaxed, enjoying the free time.

Google's report described this lapse of attention and called it “automation bias.” “We have seen how human nature works: people very quickly begin to trust technology once they have seen it work. As a result, it is very difficult for them to stay engaged in driving when they are encouraged to switch off and relax.”

Google's refusal to compromise, its belief that people should not share control with machines, sounds risky, but it is actually the most prudent path from a safety point of view. Automation can harm a driver in two ways: first, by inviting him to do things that distract him from the road, such as reading or watching a video; second, by eroding his situational awareness, that is, the ability to perceive critical factors in the environment and respond to them quickly and appropriately. Picture a distracted driver who does not know what is happening outside the car, and it becomes clear why splitting responsibility for driving is such a terrible idea.

A study at Virginia Tech quantified the temptation people feel when technology offers to relieve them of a tedious task. Researchers tested 12 drivers on a test track. Each car had two types of driver assistance software: one kept the car centered in its lane, the other controlled braking and speed, which is known as adaptive cruise control. The purpose of the study was to measure how people react to technology that takes over lane keeping, speed maintenance and braking. To do this, each car was fitted with a set of devices that collected data and recorded what was happening.

Researchers recruited 12 people aged 25 to 34 from the general population of Detroit, offering $80 for participation in the project. Drivers were asked to pretend they were on a long trip. They were not only encouraged to bring their mobile phones but were also given a choice of printed materials, food, drinks and entertainment devices. The drivers were told that a member of the research team would ride with each of them, and that this passenger would have “homework” to do during the trip and so would spend most of the time watching a DVD.

The 12 test subjects drove on the test track while their reactions and actions were measured and recorded. The researchers set out to measure both the temptation to turn to other activities such as eating, reading or watching videos, and the degree to which drivers stopped watching the road once the software took over most of the work. In other words, the researchers checked whether automated driving technology pushed drivers into unsafe behavior: becoming distracted from driving, engaging in activities unsuited to driving, and losing awareness of what was happening around them along with the ability to recognize critical factors in their environment.

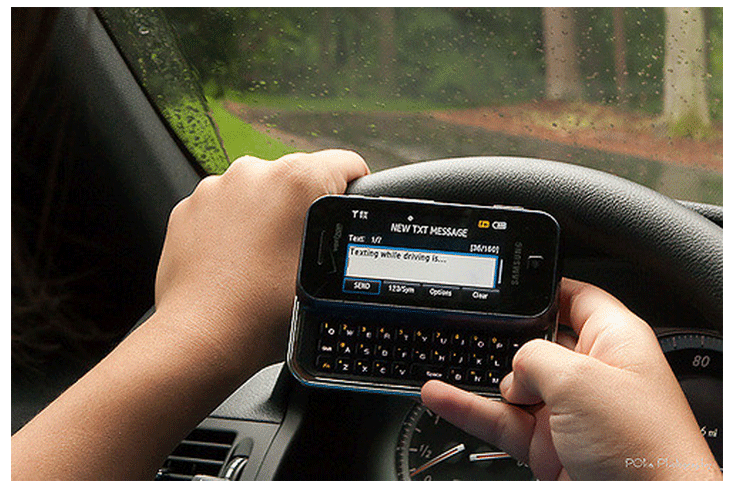

It turned out that most drivers, given automated driving technology, happily committed all three types of unsafe behavior. The “homework” that the passenger-researcher was supposedly doing, together with adaptive cruise control and the lane-keeping system, lulled the drivers' attention, and they felt they could look away from the road without putting themselves at risk. Over three hours of testing with various automated driving systems, most drivers occupied themselves with unrelated activities: typically they ate, reached for something in the back seat, talked on a mobile phone and sent messages.

The lane-keeping system relaxed drivers the most. When it was switched on, as many as 58% of the subjects watched a DVD while driving. 25% of drivers read, an activity that increases the risk of an accident 3.4 times.

The drivers' visual attention was no better. Their attention wandered, and they were not looking at the road for almost 33% of the time they were driving. Even more dangerous, their gaze left the road for long stretches, that is, for more than 2 seconds, an average of 3325 times during a three-hour trip. Admittedly, such long glances away accounted for only 8% of the test time.

This study, of course, can only be considered a first step. Twelve people is a small sample, and more research is needed. One interesting finding was that while most drivers happily ate, watched movies, read or sent messages while driving, some were able to resist the temptation. As the researchers concluded, “the work showed large individual differences in engagement in secondary tasks, which may mean that autonomous driving systems affect different drivers differently.”

In another study, conducted by the University of Pennsylvania, researchers interviewed 30 teenagers about using mobile phones while driving. It turned out that, although they knew texting while driving was dangerous, teenagers did it anyway. Even those who flatly denied using a phone behind the wheel admitted to sending messages while stopped at a traffic light waiting for green. The teenagers also had their own definitions of what “texting behind the wheel” means. For example, they said that reading Twitter while driving does not count as texting. They said the same about taking photos behind the wheel.

Distraction is one risk. Another risk of sharing responsibility for driving between people and software is that people's driving skills will degrade from lack of use. Like the pilots of flight AF447, drivers will take every chance to relax behind the wheel. And if a person has not driven for weeks, months or years and then suddenly has to take control in an emergency, he will not only have no idea what is happening outside but will also find that his driving skills are rusty.

The temptation to do other things or take your hands off the wheel when responsibility is split is such a serious danger that Google decided to skip the gradual transition to autonomous driving altogether. The company's October 2015 report ended with an unexpected conclusion: based on early experiments with partially autonomous systems, Google adopted a strategy focused exclusively on full automation. The report says: “As a result, our tests led us to develop vehicles that can take themselves from point A to point B without human intervention. Everyone thinks it's hard to make a car that drives itself. And it is. But it seems to us that it's just as hard to get people to stay attentive when they are tired or bored and the technology is telling them: “Do not worry,

At the time of this writing, Google's robocars have been in 17 minor accidents and one low-speed collision with a bus. In all 17 minor incidents, the drivers of the other cars were at fault. On February 14, 2016, a Google car had its first serious accident when it “brushed” the side of a city bus. Unlike the previous cases, this one was caused by the robocar's software: it wrongly predicted that the bus would stop if the car kept moving.

Apart from the bus collision, all the other incidents happened, ironically, because the robocar drives too well. A well-programmed car follows every rule to the letter, which confuses less law-abiding human drivers who are used to taking the rules less literally. Typical examples are cases where the robocar was merging onto a highway or turning right at a busy intersection, and human drivers, not expecting it to observe lane markings and speed limits so exactly, ran into it.

So far, none of the accidents has caused injuries. In the near future, the best way to avoid collisions will be to teach robocars to drive a little more like humans: a little more carelessly and a little less legally. In the long term, the simplest solution to the problem of human drivers is to replace them with a computer that never takes its attention off the road.

Automakers and IT giants are gathering around the table for a high-stakes game of automotive poker. It is not yet clear how the cards will fall. If officials push through a law requiring human participation in driving, HITL will prevail and automakers will win, keeping control of the automotive industry. If the law allows or even demands full autonomy from robocars, IT companies will win.

Google has several major advantages: it is the undisputed leader in digital maps and deep learning. From a business strategy point of view, Google's lack of a foothold in the automotive industry may be its key advantage. Analyst Kevin Ruth writes: “Unlike OEMs, Google does not risk losing profits by skipping the intermediate steps of development. They set about creating fully autonomous cars right away, and they seem to have a serious head start.” Add to this Google's strong desire to create a new revenue stream that does not depend on selling online advertising, currently the company's main source of income.

One thing is clear: however the transition to robocars happens, automakers will have to acquire new abilities. To stay in the game in the new industry of selling robocars, they will need to master the difficult art of creating AI, a task that has eluded the best robotics specialists for decades.