Is life the only worthy goal of intelligence?

This article discusses intelligence and its ultimate goals in light of the orthogonality and instrumental convergence theses. My previous article, "Life, Intelligence, Mind, Mind", did not clarify this question sufficiently. That article declared a principle: a strong intelligence should be built as an adaptive system around one ultimate goal, the preservation and survival of the living organism that carries the intelligence. It also gave a general structural diagram of an organism with an intelligence, possibly a strong one. What follows is an additional analysis of why this is so.

A note on terminology

By strong intelligence we mean the term in its commonly accepted sense. Weak intelligence is any system or algorithm that does not implement strong intelligence. We refer to both simply as intelligence and do not distinguish between artificial and natural intelligence.

The thesis of the orthogonality of goals and intelligence

The orthogonality thesis states that any level of intelligence can be combined with any goal, because intelligence and final goals are orthogonal, that is, independent variables.

Adaptive behavior

Let us establish an obvious but important fact: adaptive behavior is impossible without a goal. We consider any algorithm with adaptive properties, including systems of strong intelligence: the argument is general, so there is no reason to exclude them.

Adaptation can take the form of:

- 1) a change of scalar values (algorithm parameters)

- 2) a change of control structures

Neural networks use the first method: adjustment of connection weights. The weights are tuned during learning, and the teacher acts as an external factor that makes the network adapt. Why the teacher does so is known only to the teacher; we can call this the motivating process. The second method can be carried out by a programmer, whose motives are likewise known only to the programmer.
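
The first method can be sketched with a single linear neuron. This is only a minimal illustration, not the author's implementation: the function name `train_neuron`, the learning rate, and the training samples are all assumptions made for the example. The teacher supplies target outputs, and the delta rule changes nothing but scalar weights.

```python
# Hypothetical sketch of adaptation method 1: only scalar parameters change.
# The "teacher" is the list of target outputs; its motives stay outside the code.

def train_neuron(samples, lr=0.1, epochs=500):
    """Tune two weights and a bias with the delta rule."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            out = w[0] * x1 + w[1] * x2 + b
            err = target - out      # the teacher's correction signal
            w[0] += lr * err * x1   # adapt parameters, never the structure
            w[1] += lr * err * x2
            b += lr * err
    return w, b

# Assumed teacher targets: they happen to follow out = x1 + 2 * x2.
samples = [((0, 0), 0), ((1, 0), 1), ((0, 1), 2), ((1, 1), 3)]
w, b = train_neuron(samples)
print(w, b)
```

The control flow of `train_neuron` stays the same throughout training. Rewriting that control flow by hand would correspond to the second method.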

Adaptation can also be implemented as an optimization process toward target values. Such optimization processes can be quite simple to implement. Reinforcement learning of a neural network is a good example: a transparent algorithm adjusts the network's weights toward the target behavior. For an example of adaptation toward target behavior, see "AI from Google independently mastered 49 old Atari games".

Accordingly, self-learning (adaptive) systems have the following structure:

This scheme holds for any adaptive system, including strong intelligence. It introduces two concepts: the adaptation component and the motivating component of the intelligence. The motivating component is implemented according to the ultimate goal. It is not modified (it does not adapt); it is set rigidly.
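
The split between the two components can be sketched in a few lines. This is a toy illustration under stated assumptions: the reward table, the `environment` function, the action names, and the "explore once, then exploit" policy are all hypothetical and chosen only to keep the example deterministic.

```python
from types import MappingProxyType

# Motivating component: a rigid, read-only mapping from outcome to reinforcement.
# The outcomes and their values are assumptions made for this sketch.
REWARD = MappingProxyType({"food": 1.0, "nothing": 0.0, "damage": -1.0})

def motivate(outcome):
    """Turn an observed outcome into a reinforcement signal."""
    return REWARD[outcome]

class AdaptationComponent:
    """The only part of the system that changes with experience."""

    def __init__(self, actions, lr=0.5):
        self.values = {a: 0.0 for a in actions}
        self.lr = lr

    def choose(self, step):
        actions = list(self.values)
        if step < len(actions):          # try every action once
            return actions[step]
        return max(self.values, key=self.values.get)  # then exploit

    def adapt(self, action, reinforcement):
        # Move the value estimate toward the received reinforcement.
        self.values[action] += self.lr * (reinforcement - self.values[action])

def environment(action):
    """Hypothetical world: each action has a fixed outcome."""
    return {"left": "damage", "stay": "nothing", "right": "food"}[action]

agent = AdaptationComponent(["left", "stay", "right"])
for step in range(20):
    action = agent.choose(step)
    agent.adapt(action, motivate(environment(action)))
print(agent.values)
```

The agent's value estimates change at every step, while `REWARD` cannot be written to at all. That asymmetry is the point of the scheme: without `motivate` the `adapt` call would have nothing to optimize toward.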

How can the final goal be built into the system?

Let us ask how optimization toward a goal can be implemented in practice. If the adaptation component is simple and the input and output signals are of low complexity, then the motivating component is also simple and its implementation is transparent.

Consider the Atari games again: for the given goal and the given complexity of the sensory and executive signals, the developers were able to build an effective motivating component that implements reinforcement learning for the network. Intuitively, the task does not call for strong intelligence, and indeed there is no question of strong intelligence in the case of Atari games.

A natural question arises: at what level of system can we speak of strong intelligence? It seems clear that strong intelligence is potentially possible only when the complexity and diversity of sensory (input) and executive (output) information, in terms of semantics and levels of concepts, exceeds some critical threshold. It is doubtful that such a system could be motivated by a small set of reinforcement signals obtained through some simple integration of the input information.

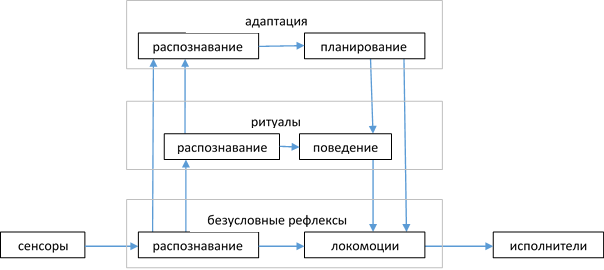

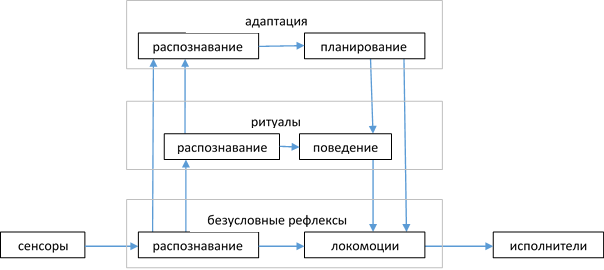

Most likely, motivating a strong intelligence requires a complex, composite motivating component that may include many conditions and reaction patterns. It will most likely be multilevel with respect to input information: recognizable simple patterns and reactions (let us call them basic, or unconditioned, reflexes) combine into recognizable higher-order objects and response behaviors (let us call them rituals). In the biological case, the motivating component was formed by genetic algorithms in the course of evolution.

Note that the motivating component, considered on its own, cannot be a strong intelligence, since it is rigidly specified.
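
A two-level motivating component of this kind might look roughly as follows. Everything concrete here is an assumption made for illustration: the sensor names, the thresholds, the reinforcement values, and the rule that a ritual fires when all of its required reflexes fire. Frozen dataclasses stand in for "rigidly specified".

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

Sensors = Dict[str, float]

@dataclass(frozen=True)
class Reflex:
    """Level 1: a simple pattern on raw input with a fixed reinforcement."""
    name: str
    trigger: Callable[[Sensors], bool]
    reinforcement: float

@dataclass(frozen=True)
class Ritual:
    """Level 2: a higher-order pattern built from reflexes."""
    name: str
    requires: Tuple[str, ...]
    reinforcement: float

# Hypothetical reflexes and rituals; in biology evolution would supply these.
REFLEXES = (
    Reflex("pain", lambda s: s.get("pressure", 0.0) > 0.8, -1.0),
    Reflex("hunger", lambda s: s.get("energy", 1.0) < 0.3, -0.5),
    Reflex("food_seen", lambda s: s.get("food_smell", 0.0) > 0.5, 0.2),
)
RITUALS = (
    Ritual("foraging", ("hunger", "food_seen"), 0.6),
)

def motivating_component(sensors):
    """Return the total reinforcement plus the reflexes and rituals that fired."""
    fired = {r.name for r in REFLEXES if r.trigger(sensors)}
    active = [r for r in RITUALS if set(r.requires) <= fired]
    signal = sum(r.reinforcement for r in REFLEXES if r.name in fired)
    signal += sum(r.reinforcement for r in active)
    return signal, sorted(fired), [r.name for r in active]

signal, fired, rituals = motivating_component({"energy": 0.2, "food_smell": 0.9})
print(signal, fired, rituals)
```

Nothing in this component learns: the tables are fixed, and the function only evaluates them. Any adaptation would live in a separate component that receives `signal`.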

Detailed scheme of a system with potentially strong intelligence (unconditioned reflexes and rituals form the motivating component):

For living organisms, the goal embodied in the motivating component is self-preservation. In principle, other goals can be built into the motivating component (the orthogonality thesis). What matters is that the adaptation process must have some goal.

Which end goals are important?

Whatever the goal, one thing can be said with confidence: pursuing any more or less nontrivial goal takes a more or less long time, and so the agent needs an intermediate goal, namely ensuring its own safety and preservation. This is consistent with the convergence thesis.

The instrumental convergence thesis states that superintelligent agents, whatever the variety of their final goals, will nevertheless pursue similar intermediate goals, because they all have the same instrumental reasons to do so.
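
The time argument can be made concrete with a little arithmetic. The sketch below rests on a simplifying assumption that is not in the text: the agent survives each step independently with the same probability, so a goal that needs `steps` steps is completed with probability `p ** steps`. The goals, step counts, and probabilities are invented for the example.

```python
def completion_probability(p_survive_step, steps):
    """Chance of surviving long enough to finish a goal of the given length."""
    return p_survive_step ** steps

# Hypothetical final goals with very different content and duration.
goals = {"paint a picture": 50, "prove a theorem": 200, "build a house": 1000}

results = {}
for name, steps in goals.items():
    careless = completion_probability(0.99, steps)   # little self-preservation
    careful = completion_probability(0.999, steps)   # more self-preservation
    results[name] = (careless, careful)
    print(f"{name}: careless={careless:.4f}, careful={careful:.4f}")
```

Under this assumption the content of the goal never enters the calculation, only its duration. Higher per-step survival helps every goal, and it helps longer goals more, which is the sense in which self-preservation is a convergent intermediate goal.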

Take the well-known Three Laws of Robotics: one of them requires a robot to protect its own existence. According to the definition of life (see the article 'Life, Intelligence, Mind, Mind'), such goal-directed behavior can be considered a sign of a living organism.

Thus any intelligent system with a more or less nontrivial goal, including a carrier of strong intelligence, must first of all be alive.

In the course of biological evolution, strong human intelligence was formed solely for the purpose of survival. If we assume that an artificial carrier of strong intelligence can be built, would it be humane to give it some additional goal on top of survival and self-preservation? If you were in its place, as a person (!), would you like that?

Conclusion

Strong intelligence can potentially be realized on the basis of a single goal, the preservation of a living organism, provided that the complexity of sensory and executive information exceeds the critical threshold.
