Working with a Proxmox cluster: installation, network setup, ZFS, and solving common problems


Over the past few years I have worked closely with Proxmox clusters: many clients need their own infrastructure on which to develop their projects. That experience lets me describe the most common mistakes and problems you may run into. Along the way, we will also configure a three-node cluster from scratch.

A Proxmox cluster can consist of two or more servers; the maximum is 32 nodes. Our cluster will consist of three nodes communicating over multicast (I will also describe how to build a cluster on unicast, which matters if you host your cluster infrastructure at Hetzner or OVH, for example). In short, multicast delivers data to several nodes simultaneously, so with multicast we do not have to think about the number of nodes in the cluster (within the limit above).

The cluster itself is built on an internal network (it is important that the IP addresses are in the same subnet). Hetzner and OVH can connect nodes in different data centers using Virtual Switch (Hetzner) and vRack (OVH); Virtual Switch is also covered in this article. If your hosting provider offers nothing similar, you can use OVS (Open vSwitch), which Proxmox supports natively, or a VPN. In that case, however, I recommend unicast with a small number of nodes: on such network infrastructure the cluster often simply “falls apart” and has to be restored. That is why I try to use OVH and Hetzner in my work, where I have seen fewer such incidents. In any case, study your hosting provider first.

Proxmox can be installed in two ways: ISO-installer and installation via shell. We choose the second method, so install Debian on the server.

We proceed directly to the installation of Proxmox on each server. Installation is extremely simple and is described in the official documentation here.

Add the Proxmox repository and the key of this repository:

Updating repositories and the system itself:

After a successful update, install the necessary Proxmox packages:

Note : Postfix and grub will be configured during installation - one of them may fail. Perhaps this will be caused by the fact that the hostname does not resolve by name. Edit the hosts entries and perform apt-get update

From now on, we can log into the Proxmox web interface at https: // <external-ip-address>: 8006 (you will encounter an untrusted certificate during connection).

Image 1. Proxmox node web interface

I don’t really like the situation with the certificate and IP address, so I suggest installing Nginx and setting up Let's Encrypt certificate. I will not describe the installation of Nginx, I will leave only the important files for the Let's encrypt certificate to work:

Command for issuing SSL certificate:

After installing the SSL certificate, do not forget to set it to auto-renew via cron:

Excellent! Now we can access our domain via HTTPS.

Note : to disable the subscription information window, run this command:

Network settings

Before connecting to the cluster, configure the network interfaces on the hypervisor. It is worth noting that the configuration of the remaining nodes is no different, except for IP addresses and server names, so I will not duplicate their settings.

Let's create a network bridge for the internal network so that our virtual machines (in my version there will be an LXC container for convenience), firstly, they are connected to the internal network of the hypervisor and can interact with each other. Secondly, a little later we will add a bridge for the external network so that the virtual machines have their own external IP address. Accordingly, the containers will be at the moment behind NAT'om with us.

There are two ways to work with the Proxmox network configuration: through the web interface or through the configuration file / etc / network / interfaces. In the first option, you will need to restart the server (or you can simply rename the interfaces.new file to interfaces and restart the networking service through systemd). If you are just starting to configure and there are no virtual machines or LXC containers yet, then it is advisable to restart the hypervisor after the changes.

Now create a network bridge called vmbr1 in the network tab in the Proxmox web panel.

Figure 2. Network interfaces of the proxmox1 node

Figure 3. Creating a network bridge

Image 4. Configuring the vmbr1 network configuration

The setup is extremely simple - we need vmbr1 so that the instances get access to the Internet.

Now restart our hypervisor and check if the interface has been created:

Image 5. Network interface vmbr1 in ip command output a

Note: I already have ens19 interface - this is an interface with an internal network, based on which a cluster will be created.

Repeat these steps on the other two hypervisors, and then proceed to the next step - preparing the cluster.

Also, an important stage now is to enable packet forwarding - without it, the instances will not gain access to the external network. Open the sysctl.conf file and change the value of the net.ipv4.ip_forward parameter to 1, after which we enter the following command:

In the output, you should see the net.ipv4.ip_forward directive (if you have not changed it before)

Configuring the Proxmox cluster

Now we will go directly to the cluster. Each node must resolve itself and other nodes on the internal network, for this it is necessary to change the values in the hosts records as follows (each node must have a record about the others):

It is also required to add the public keys of each node to the others - this is required to create a cluster.

Let's create a cluster through the web panel:

Image 6. Creating a cluster through the web interface

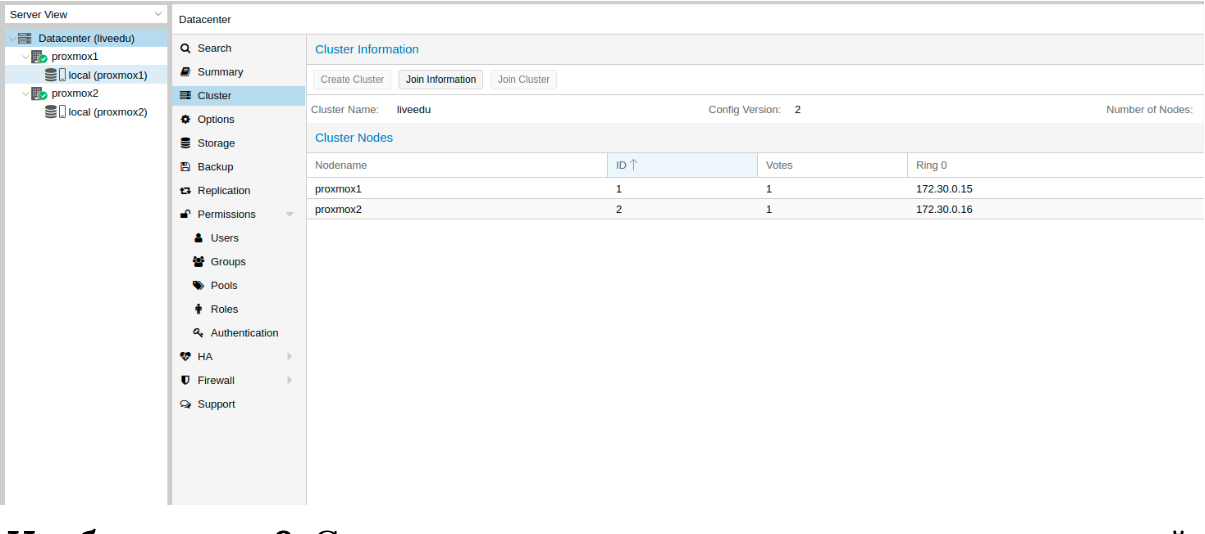

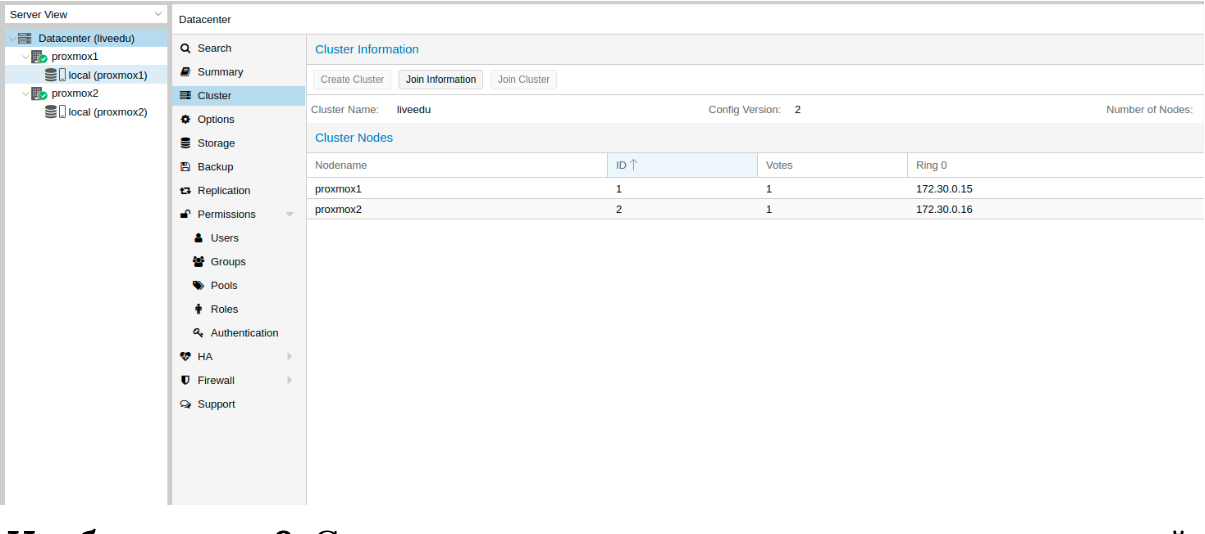

After creating the cluster, we need to get information about it. Go to the same tab of the cluster and click the “Join Information” button:

Image 7. Information about the created cluster

This information is useful to us when joining the second and third nodes in the cluster. We are connected to the second node and in the Cluster tab, click the “Join Cluster” button:

Image 8. Connecting to the node cluster

Let us examine in more detail the parameters for connection:

Image 9. Cluster state after connecting the second node.

The second node is successfully connected! However, this does not always happen. If you follow the steps incorrectly or network problems arise, then joining the cluster will fail, and the cluster itself will be “broken up”. The best solution is to disconnect the node from the cluster, delete all the information about the cluster on it, then restart the server and check the previous steps. How to safely disconnect a node from a cluster? First, delete it from the cluster on the first server:

After which the node will be disconnected from the cluster. Now go to the broken node and disable the following services on it:

The Proxmox cluster stores information about itself in the sqlite database, it also needs to be cleared:

Data about the bark are successfully deleted. Delete the remaining files, for this you need to start the cluster file system in standalone mode:

We restart the server (this is not necessary, but we are safe: all the services should be up and running correctly in the end. In order not to miss anything, we will restart). After switching on, we will get an empty node without any information about the previous cluster and we can start the connection again.

ZFS is a file system that can be used with Proxmox. With it, you can allow yourself to replicate data to another hypervisor, migrate the virtual machine / LXC container, access the LXC container from the host system, and so on. Installing it is quite simple, let's proceed to the analysis. Three SSDs are available on my servers, which we will combine into a RAID array.

Add repositories:

Updating the list of packages:

Set the required dependencies:

Install ZFS itself:

If in the future you get an error fusermount: fuse device not found, try 'modprobe fuse' first, then run the following command:

Now let's proceed directly to the setup. First we need to format the SSDs and configure them through parted:

Similar actions must be performed for other drives. After all the disks are ready, proceed to the next step:

zpool create -f -o ashift = 12 rpool / dev / sda4 / dev / sdb4 / dev / sdc4

We choose ashift = 12 for performance reasons - this is the recommendation of zfsonlinux itself, more details you can read about it on their wiki: github.com/zfsonlinux/zfs/wiki/faq#performance-considerations

Let's apply some settings for ZFS:

Now we need to calculate some variables to calculate zfs_arc_max, I do this as follows:

At the moment, the pool has been successfully created, we also created a data subpool. You can check the status of your pool with the zpool status command. This action must be performed on all hypervisors, and then proceed to the next step.

Now add ZFS to Proxmox. We go to the settings of the data center (it is it, and not a separate node) in the "Storage" section, click on the "Add" button and select the "ZFS" option, after which we will see the following parameters:

ID: Name of the store. I gave it the name local-zfs

ZFS Pool: We created rpool / data, and we add it here.

Nodes: specify all available nodes

This command creates a new pool with the drives we selected. On each hypervisor, a new storage should appear called local-zfs, after which you can migrate your virtual machines from local storage to ZFS.

The Proxmox cluster has the ability to replicate data from one hypervisor to another: this option allows you to switch the instance from one server to another. The data will be relevant at the time of the last synchronization - its time can be set when creating replication (15 minutes is set as standard). There are two ways to migrate an instance to another Proxmox node: manual and automatic. Let's look at the manual option first, and in the end I will give you a Python script that will allow you to create a virtual machine on an accessible hypervisor when one of the hypervisors is unavailable.

To create replication, go to the Proxmox web panel and create a virtual machine or LXC container. In the previous paragraphs, we configured vmbr1 bridge with NAT, which will allow us to go to the external network. I will create an LXC container with MySQL, Nginx and PHP-FPM with a test site to test replication. Below is a step-by-step instruction.

We load the appropriate template (go to storage -> Content -> Templates), an example in the screenshot:

Image 10. Local storage with templates and VM images

Press the “Templates” button and load the LXC container template we need:

Image 11. Select and download the template

Now we can use it when creating new LXC containers. Select the first hypervisor and click the “Create CT” button in the upper right corner: we will see the panel for creating a new instance. The installation steps are quite simple and I will give only the configuration file for this LXC container:

The container was created successfully. You can connect to LXC containers via the pct enter command, I also added the hypervisor SSH key before installation to connect directly via SSH (there are some problems with terminal display in PCT). I prepared the server and installed all the necessary server applications there, now you can proceed to creating replication.

We click on the LXC container and go to the “Replication” tab, where we create the replication parameter using the “Add” button:

Image 12. Creating replication in the Proxmox interface

Image 13. Replication job creation window

I created the task of replicating the container to the second node, as you can see in the next screenshot, the replication was successful - pay attention to the “Status” field, it notifies about the replication status, it is also worth paying attention to the “Duration” field to know how long the data replication takes.

Image 14. VM synchronization list

Now let's try to migrate the machine to the second node using the “Migrate” button.

The migration of the container will start , the log can be viewed in the task list - there will be our migration. After that, the container will be moved to the second node.

“Host Key Verification Failed” Error

Sometimes when configuring a cluster, a similar problem may arise - it prevents the machines from migrating and creating replication, which eliminates the advantages of cluster solutions. To fix this error, delete the known_hosts file and connect via SSH to the conflicting node:

Accept Hostkey and try entering this command, it should connect you to the server:

To set up a Virtual Switch at Hetzner, go to the Robot panel and click the “Virtual Switches” button. The next page shows a panel for creating and managing Virtual Switch interfaces: first create a Virtual Switch, then “connect” the dedicated servers to it. Use the search field to add the servers you need. They do not have to be rebooted; you only have to wait 10-15 minutes until the connection to the Virtual Switch becomes active.

After adding the servers to the Virtual Switch through the web panel, connect to the servers and open the network interface configuration file, where we create a new network interface:

Let's take a closer look at what this is. At its core, it is a VLAN attached to a single physical interface, here called enp4s0 (the name may differ on your server). The VLAN number is the number of the Virtual Switch that you created in the Hetzner Robot web panel. You can specify any address, as long as it is a local (private) one.
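As a minimal sketch of such an interface definition, the helper below prints an ifupdown stanza for a VLAN sub-interface. The helper name `vlan_stanza`, the VLAN ID 4000 and the address 10.1.0.11 are illustrative assumptions, not values from the original configuration. The MTU of 1400 is, as far as I know, what Hetzner recommends for Virtual Switch traffic, so check it against their current documentation. Review the output before adding it to /etc/network/interfaces.

```shell
#!/bin/sh
# Print an ifupdown stanza for a VLAN sub-interface on top of a physical NIC.
# All argument values used below are hypothetical examples.
vlan_stanza() {
    parent="$1"    # physical interface, e.g. enp4s0
    vlan_id="$2"   # Virtual Switch (VLAN) number from the Robot panel
    address="$3"   # private address for this node
    netmask="$4"
    cat <<EOF
auto ${parent}.${vlan_id}
iface ${parent}.${vlan_id} inet static
    address ${address}
    netmask ${netmask}
    vlan-raw-device ${parent}
    mtu 1400
EOF
}

vlan_stanza enp4s0 4000 10.1.0.11 255.255.255.0
```

The helper only prints text, so it can be run safely on any machine to preview the stanza for each node.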

Note that enp4s0 itself should be configured as usual: it should hold the external IP address that was issued to your physical server. Repeat these steps on the other hypervisors, restart the networking service on them, and ping a neighboring node using its Virtual Switch IP address. If the ping succeeds, the connection between the servers over the Virtual Switch is established.

I will also attach the sysctl.conf configuration file; you will need it if you have problems with packet forwarding and other network parameters:

Adding IPv4 subnets to Hetzner

Before starting, you need to order a subnet from Hetzner; you can do this through the Robot panel.

Create a network bridge with an address from this subnet. Configuration example:

Now go to the settings of the virtual machine in Proxmox and create a new network interface attached to the vmbr2 bridge. I use an LXC container, whose configuration can be changed directly in Proxmox. Final configuration for Debian:

Please note: I specified a /26 mask, not /29. This is required for the network to work on the virtual machine.
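The difference between the two masks is simple arithmetic. The sketch below only shows how many addresses each prefix length covers; the function name `prefix_size` is my own, and why the wider mask is needed depends on how the subnet is routed, which is not detailed here.

```shell
#!/bin/sh
# Number of IPv4 addresses covered by a prefix length.
prefix_size() {
    echo $(( 1 << (32 - $1) ))
}

echo "/29 covers $(prefix_size 29) addresses"
echo "/26 covers $(prefix_size 26) addresses"
```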

Adding IPv4 Addresses to Hetzner

The situation with a single IP address is different: usually Hetzner gives us an additional address from the server's subnet. This means that instead of vmbr2 we need to use vmbr0, which we do not have yet. The point is that vmbr0 must hold the IP address of the physical server (that is, the address previously used by the physical network interface enp2s0). The address must be moved to vmbr0, and the following configuration is suitable for this (I advise ordering a KVM console beforehand so that you can restore network operation if something goes wrong):

Restart the server if possible (if not, restart the networking service), and then check the network interfaces with ip a:

As you can see, enp2s0 is attached to vmbr0 and has no IP address, since the address was reassigned to vmbr0.

Now, in the settings of the virtual machine, add a network interface connected to vmbr0. For the gateway, specify the address assigned to vmbr0.

I hope this article comes in handy when you set up a Proxmox cluster at Hetzner. If time permits, I will expand the article and add instructions for OVH, where not everything is as obvious as it seems at first glance either. The material turned out to be quite voluminous; if you find errors, please write in the comments and I will correct them. Thank you all for your attention.

Posted by Ilya Andreev, edited by Alexei Zhadan and Live Linux Team


Install Proxmox

Proxmox can be installed in two ways: with the ISO installer or via the shell on top of an existing system. We choose the second method, so install Debian on the server first (the repository lines below target Debian 9 “stretch” and Proxmox VE 5.x).

Now we proceed to installing Proxmox on each server. The installation is extremely simple and is described in the official documentation.

Add the Proxmox repository and the key of this repository:

echo "deb http://download.proxmox.com/debian/pve stretch pve-no-subscription" > /etc/apt/sources.list.d/pve-install-repo.list

wget http://download.proxmox.com/debian/proxmox-ve-release-5.x.gpg -O /etc/apt/trusted.gpg.d/proxmox-ve-release-5.x.gpg

chmod +r /etc/apt/trusted.gpg.d/proxmox-ve-release-5.x.gpg # optional, if you have a changed default umask

Update the repositories and the system itself:

apt update && apt dist-upgrade

After a successful update, install the necessary Proxmox packages:

apt install proxmox-ve postfix open-iscsi

Note: Postfix and grub will be configured during installation, and one of them may fail. This is usually because the hostname does not resolve. Edit the hosts entries and run apt-get update.

From now on, we can log into the Proxmox web interface at https://<external-ip-address>:8006 (you will get an untrusted certificate warning when connecting).

Image 1. Proxmox node web interface

Install Nginx and Let's Encrypt Certificate

I don’t really like the situation with the certificate and the IP address, so I suggest installing Nginx and setting up a Let's Encrypt certificate. I will not describe the installation of Nginx; I will only give the files that matter for the Let's Encrypt certificate to work:

/etc/nginx/snippets/letsencrypt.conf

location ^~ /.well-known/acme-challenge/ {

allow all;

root /var/lib/letsencrypt/;

default_type "text/plain";

try_files $uri =404;

}

Command for issuing SSL certificate:

certbot certonly --agree-tos --email sos@livelinux.info --webroot -w /var/lib/letsencrypt/ -d proxmox1.domain.name

Site Configuration in NGINX

upstream proxmox1.domain.name {

server 127.0.0.1:8006;

}

server {

listen 80;

server_name proxmox1.domain.name;

include snippets/letsencrypt.conf;

return 301 https://$host$request_uri;

}

server {

listen 443 ssl;

server_name proxmox1.domain.name;

access_log /var/log/nginx/proxmox1.domain.name.access.log;

error_log /var/log/nginx/proxmox1.domain.name.error.log;

include snippets/letsencrypt.conf;

ssl_certificate /etc/letsencrypt/live/proxmox1.domain.name/fullchain.pem;

ssl_certificate_key /etc/letsencrypt/live/proxmox1.domain.name/privkey.pem;

location / {

proxy_pass https://proxmox1.domain.name;

proxy_next_upstream error timeout invalid_header http_500 http_502 http_503 http_504;

proxy_redirect off;

proxy_buffering off;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

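}

}

After installing the SSL certificate, do not forget to set up automatic renewal via cron:

0 */12 * * * test -x /usr/bin/certbot -a \! -d /run/systemd/system && perl -e 'sleep int(rand(3600))' && certbot -q renew --renew-hook "systemctl reload nginx"

Note that the \! -d /run/systemd/system condition skips this job on hosts running systemd, where the certbot package's systemd timer is expected to perform the renewal instead.

Excellent! Now we can access our domain via HTTPS.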

Note: to disable the subscription information window, run this command:

sed -i.bak "s/data.status !== 'Active'/false/g" /usr/share/javascript/proxmox-widget-toolkit/proxmoxlib.js && systemctl restart pveproxy.service

Network settings

Before joining the cluster, configure the network interfaces on the hypervisor. The configuration of the remaining nodes differs only in IP addresses and server names, so I will not repeat their settings.

Let's create a network bridge for the internal network so that our virtual machines (in my case an LXC container, for convenience) are connected to the hypervisor's internal network and can interact with each other. A little later we will add a bridge for the external network so that the virtual machines have their own external IP addresses. Until then, the containers will sit behind NAT.

There are two ways to work with the Proxmox network configuration: through the web interface or through the configuration file /etc/network/interfaces. With the first option you will need to restart the server (or rename the interfaces.new file to interfaces and restart the networking service through systemd). If you are just starting the setup and there are no virtual machines or LXC containers yet, it is advisable to restart the hypervisor after the changes.

Now create a network bridge called vmbr1 in the network tab in the Proxmox web panel.

Image 2. Network interfaces of the proxmox1 node

Image 3. Creating a network bridge

Image 4. Configuring the vmbr1 network bridge

The setup is extremely simple - we need vmbr1 so that the instances get access to the Internet.
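For readers who prefer the configuration file to the web panel, here is a hedged sketch of what a NAT bridge stanza could look like. The helper name `nat_bridge_stanza` and the uplink name ens18 are assumptions of mine. The address 172.16.0.1 matches the gateway used in the container configuration later in this article, and the masquerading rules follow the usual iptables pattern rather than the exact settings from the screenshots.

```shell
#!/bin/sh
# Print a NAT bridge stanza in the style of /etc/network/interfaces.
nat_bridge_stanza() {
    bridge="$1"    # bridge name, e.g. vmbr1
    address="$2"   # bridge address, used as the gateway by the containers
    subnet="$3"    # container subnet in CIDR form
    uplink="$4"    # interface holding the external IP (hypothetical name)
    cat <<EOF
auto ${bridge}
iface ${bridge} inet static
    address ${address}
    netmask 255.255.255.0
    bridge_ports none
    bridge_stp off
    bridge_fd 0
    post-up   iptables -t nat -A POSTROUTING -s '${subnet}' -o ${uplink} -j MASQUERADE
    post-down iptables -t nat -D POSTROUTING -s '${subnet}' -o ${uplink} -j MASQUERADE
EOF
}

nat_bridge_stanza vmbr1 172.16.0.1 172.16.0.0/24 ens18
```

The bridge has no physical port (bridge_ports none), so traffic from the containers reaches the outside world only through the masquerading rule on the uplink interface.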

Now restart our hypervisor and check if the interface has been created:

Image 5. Network interface vmbr1 in the output of the ip a command

Note: I already have an ens19 interface. It is attached to the internal network on which the cluster will be built.

Repeat these steps on the other two hypervisors, and then proceed to the next step: preparing the cluster.

Another important step now is to enable packet forwarding; without it, the instances will not get access to the external network. Open the sysctl.conf file, set the net.ipv4.ip_forward parameter to 1, and then run the following command:

sysctl -p

In the output, you should see the net.ipv4.ip_forward directive (if you have not changed it before).
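The manual edit of sysctl.conf can also be scripted. The sketch below is one possible approach, not part of the original setup: a hypothetical helper `set_sysctl_key` that rewrites an existing (possibly commented-out) line or appends a new one. It assumes GNU sed and is demonstrated on a scratch file instead of the real /etc/sysctl.conf.

```shell
#!/bin/sh
# Set "key = value" in a sysctl-style file: update the line if the key is
# already present (even commented out), append it otherwise.
set_sysctl_key() {
    file="$1"; key="$2"; value="$3"
    if grep -Eq "^[#[:space:]]*${key}[[:space:]]*=" "$file"; then
        sed -i -E "s|^[#[:space:]]*${key}[[:space:]]*=.*|${key} = ${value}|" "$file"
    else
        printf '%s = %s\n' "$key" "$value" >> "$file"
    fi
}

# Demonstration on a scratch file rather than the real /etc/sysctl.conf.
demo="$(mktemp)"
printf '#net.ipv4.ip_forward=1\n' > "$demo"
set_sysctl_key "$demo" net.ipv4.ip_forward 1
cat "$demo"
rm -f "$demo"
```

On a real node you would point the helper at /etc/sysctl.conf and then run sysctl -p as described above.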

Configuring the Proxmox cluster

Now we move on to the cluster itself. Each node must be able to resolve itself and the other nodes on the internal network. To achieve this, edit the hosts file as follows (each node must have entries for all the others):

172.30.0.15 proxmox1.livelinux.info proxmox1

172.30.0.16 proxmox2.livelinux.info proxmox2

172.30.0.17 proxmox3.livelinux.info proxmox3
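Before creating the cluster it is worth confirming that every node name really is present in the hosts file. A minimal sketch, assuming the three node names above; the function name `missing_hosts` is my own and it prints only the names that are absent:

```shell
#!/bin/sh
# Report which of the given node names are missing from a hosts file.
missing_hosts() {
    hosts_file="$1"; shift
    for name in "$@"; do
        # Skip comment lines, then look for the name in any column after the address.
        if ! awk -v n="$name" '
            /^[[:space:]]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$hosts_file"; then
            echo "$name"
        fi
    done
}

missing_hosts /etc/hosts proxmox1 proxmox2 proxmox3
```

Empty output means all names are present; run the check on each node, since every node keeps its own hosts file.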

You also need to add the public SSH key of each node to the others; this is required to create the cluster.

Let's create a cluster through the web panel:

Image 6. Creating a cluster through the web interface

After creating the cluster, we need to get its join information. Go to the same Cluster tab and click the “Join Information” button:

Image 7. Information about the created cluster

We will need this information when joining the second and third nodes to the cluster. Connect to the second node and, in the Cluster tab, click the “Join Cluster” button:

Image 8. Joining a node to the cluster

Let us look at the connection parameters in more detail:

- Peer Address: IP address of the first server (the one we are connecting to)

- Password: password of the first server

- Fingerprint: we get this value from cluster information
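The same steps can be performed from the command line with pvecm. The sketch below wraps the calls in a dry-run helper so that it only prints the commands unless DRY_RUN=0 is set; the wrapper, the function names and the cluster name livelinux are assumptions for illustration, while pvecm create, pvecm add and pvecm status are the standard subcommands.

```shell
#!/bin/sh
# Dry-run wrapper: print the command unless DRY_RUN=0 is set explicitly.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

create_cluster() { run pvecm create "$1"; }   # run on the first node
join_cluster()   { run pvecm add "$1"; }      # run on each additional node
cluster_status() { run pvecm status; }

create_cluster livelinux      # cluster name is a hypothetical example
join_cluster 172.30.0.15      # internal address of the first node
cluster_status
```

When joining from the shell, pvecm add asks for the root password of the first node, which corresponds to the Password field in the web form.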

Image 9. Cluster state after connecting the second node.

The second node is connected successfully! However, this does not always happen. If you perform the steps incorrectly or network problems arise, joining the cluster will fail and the cluster itself will “fall apart”. The best solution is to remove the node from the cluster, delete all cluster information on it, then restart the server and re-check the previous steps. How do you safely disconnect a node from a cluster? First, delete it from the cluster on the first server:

pvecm delnode proxmox2

After that, the node will be disconnected from the cluster. Now go to the broken node and stop the following services on it:

systemctl stop pvestatd.service

systemctl stop pvedaemon.service

systemctl stop pve-cluster.service

systemctl stop corosync

systemctl stop pve-cluster

The Proxmox cluster stores information about itself in an SQLite database, which also needs to be cleared:

sqlite3 /var/lib/pve-cluster/config.db

delete from tree where name = 'corosync.conf';

.quit

The cluster data has now been deleted. To delete the remaining files, start the cluster file system in standalone (local) mode:

pmxcfs -l

rm /etc/pve/corosync.conf

rm /etc/corosync/*

rm /var/lib/corosync/*

rm -rf /etc/pve/nodes/*

We restart the server. This is not strictly necessary, but it is the safe option: all services should end up running correctly, and a restart makes sure we have not missed anything. After it boots, we have an empty node without any information about the previous cluster and can start the join again.

Install and configure ZFS

ZFS is a file system that can be used with Proxmox. With it you can replicate data to another hypervisor, migrate virtual machines and LXC containers, access an LXC container's files from the host system, and so on. Installing it is quite simple, so let's walk through it. My servers each have three SSDs, which we will combine into a RAID array.

Add repositories:

nano /etc/apt/sources.list.d/stretch-backports.list

deb http://deb.debian.org/debian stretch-backports main contrib

deb-src http://deb.debian.org/debian stretch-backports main contrib

nano /etc/apt/preferences.d/90_zfs

Package: libnvpair1linux libuutil1linux libzfs2linux libzpool2linux spl-dkms zfs-dkms zfs-test zfsutils-linux zfsutils-linux-dev zfs-zed

Pin: release n=stretch-backports

Pin-Priority: 990

Update the list of packages:

apt update

Install the required dependencies:

apt install --yes dpkg-dev linux-headers-$(uname -r) linux-image-amd64

Install ZFS itself:

apt-get install zfs-dkms zfsutils-linux

If you later get the error “fusermount: fuse device not found, try 'modprobe fuse' first”, run the following command:

modprobe fuse

Now let's proceed directly to the setup. First we need to partition the SSDs with parted:

Configure /dev/sda

parted /dev/sda

(parted) print

Model: ATA SAMSUNG MZ7LM480 (scsi)

Disk /dev/sda: 480GB

Sector size (logical/physical): 512B/512B

Partition Table: msdos

Disk Flags:

Number Start End Size Type File system Flags

1 1049kB 4296MB 4295MB primary raid

2 4296MB 4833MB 537MB primary raid

3 4833MB 37,0GB 32,2GB primary raid

(parted) mkpart

Partition type? primary/extended? primary

File system type? [ext2]? zfs

Start? 33GB

End? 480GB

Warning: You requested a partition from 33,0GB to 480GB (sectors 64453125..937500000).

The closest location we can manage is 37,0GB to 480GB (sectors 72353792..937703087).

Is this still acceptable to you?

Yes/No? yes

Perform the same steps for the other drives. After all the disks are ready, proceed to the next step:

zpool create -f -o ashift=12 rpool /dev/sda4 /dev/sdb4 /dev/sdc4

We choose ashift=12 for performance reasons; this is the recommendation of zfsonlinux itself, and you can read more about it on their wiki: github.com/zfsonlinux/zfs/wiki/faq#performance-considerations
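To make the ashift value less abstract: it is the base-2 logarithm of the sector size that ZFS assumes for the pool. The small sketch below (the function name `sector_size_for_ashift` is my own) shows the sizes that the two common values correspond to.

```shell
#!/bin/sh
# ashift is the base-2 logarithm of the sector size ZFS assumes for the pool.
sector_size_for_ashift() {
    echo $(( 1 << $1 ))
}

echo "ashift=9  -> $(sector_size_for_ashift 9) bytes"
echo "ashift=12 -> $(sector_size_for_ashift 12) bytes"
```

Separately from ashift, keep in mind that listing the three partitions without a mirror or raidz keyword produces a striped pool with no redundancy, so the loss of one disk means the loss of the pool.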

Let's apply some settings for ZFS:

zfs set atime=off rpool

zfs set compression=lz4 rpool

zfs set dedup=off rpool

zfs set snapdir=visible rpool

zfs set primarycache=all rpool

zfs set aclinherit=passthrough rpool

zfs inherit acltype rpool

zfs get -r acltype rpool

zfs get all rpool | grep compressratio

Now we need to calculate a few variables to determine zfs_arc_max. I do this as follows:

# total RAM in gigabytes

mem=`free --giga | grep Mem | awk '{print $2}'`

# give the ARC one tenth of the RAM

partofmem=$(($mem/10))

# convert gigabytes to bytes, the unit zfs_arc_max expects (assumed conversion step)

setzfscache=$(($partofmem*1024*1024*1024))

echo $setzfscache > /sys/module/zfs/parameters/zfs_arc_max

grep c_max /proc/spl/kstat/zfs/arcstats

zfs create rpool/data

# make the setting persistent across reboots

cat > /etc/modprobe.d/zfs.conf << EOL

options zfs zfs_arc_max=$setzfscache

EOL

The pool has now been created, and we have also created the rpool/data dataset. You can check the state of your pool with the zpool status command. Perform these steps on all hypervisors, and then proceed to the next step.
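The arithmetic behind zfs_arc_max can be checked in isolation. A minimal sketch, assuming the intent is to give the ARC one tenth of the RAM and that the parameter is expressed in bytes; the function name `arc_max_bytes` is hypothetical.

```shell
#!/bin/sh
# One tenth of the RAM (given in whole gigabytes), expressed in bytes.
arc_max_bytes() {
    mem_gb="$1"
    echo $(( mem_gb / 10 * 1024 * 1024 * 1024 ))
}

arc_max_bytes 64    # 64 GB of RAM -> 6 GiB for the ARC
```

Because of the integer division, the result is 0 on hosts with less than 10 GB of RAM; ZFS interprets zfs_arc_max=0 as "use the built-in default", so on small machines you may prefer to set the value by hand.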

Now add ZFS to Proxmox. Go to the settings of the data center (the data center itself, not a separate node), open the “Storage” section, click the “Add” button and select the “ZFS” option. You will see the following parameters:

ID: name of the storage. I named it local-zfs

ZFS Pool: we created rpool/data, so we add it here.

Nodes: specify all available nodes

This registers the dataset we created as a new storage. On each hypervisor, a new storage called local-zfs should appear, after which you can migrate your virtual machines from local storage to ZFS.
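The storage can also be registered from the shell with pvesm. This is a hedged sketch using the same names as in the web form; the dry-run wrapper, the function name and the chosen content types (images,rootdir) are my assumptions, and the command is only printed unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Dry-run wrapper: print the command unless DRY_RUN=0 is set explicitly.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Register a ZFS dataset as Proxmox storage for VM disks and container volumes.
add_zfs_storage() {
    storage_id="$1"; pool="$2"
    run pvesm add zfspool "$storage_id" --pool "$pool" --content images,rootdir
}

add_zfs_storage local-zfs rpool/data
run pvesm status
```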

Replicating instances to a neighboring hypervisor

A Proxmox cluster can replicate data from one hypervisor to another, which makes it possible to switch an instance from one server to another. The data will be current as of the last synchronization; the interval is set when creating the replication job (15 minutes by default). There are two ways to migrate an instance to another Proxmox node: manual and automatic. Let's look at the manual option first; at the end I will give you a Python script that creates a virtual machine on an available hypervisor when one of the hypervisors is unavailable.

To set up replication, go to the Proxmox web panel and create a virtual machine or LXC container. Earlier we configured the vmbr1 bridge with NAT, which gives us access to the external network. I will create an LXC container with MySQL, Nginx and PHP-FPM and a test site to test replication. Step-by-step instructions follow.

Download the appropriate template (go to storage -> Content -> Templates); an example is in the screenshot:

Image 10. Local storage with templates and VM images

Press the “Templates” button and download the LXC container template we need:

Image 11. Select and download the template

Now we can use it when creating new LXC containers. Select the first hypervisor and click the “Create CT” button in the upper right corner to open the panel for creating a new instance. The installation steps are quite simple, so I will give only the configuration file for this LXC container:

arch: amd64

cores: 3

memory: 2048

nameserver: 8.8.8.8

net0: name=eth0,bridge=vmbr1,firewall=1,gw=172.16.0.1,hwaddr=D6:60:C5:39:98:A0,ip=172.16.0.2/24,type=veth

ostype: centos

rootfs: local:100/vm-100-disk-1.raw,size=10G

swap: 512

unprivileged:
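A container configuration like the one above is a list of "key: value" lines, where some values (net0, rootfs) are themselves comma-separated options. A minimal sketch of reading such a file in Python, for example to check which bridge and address a container uses, could look like this. The function names are hypothetical helpers, not part of any Proxmox API.

```python
def parse_ct_config(text):
    """Parse 'key: value' lines of a container config into a dict."""
    config = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first colon only: values such as hwaddr contain colons too.
        key, _, value = line.partition(":")
        config[key.strip()] = value.strip()
    return config

def parse_options(value):
    """Split a comma-separated 'k=v' option string (e.g. net0) into a dict."""
    return dict(item.split("=", 1) for item in value.split(",") if "=" in item)

sample = """\
arch: amd64
cores: 3
memory: 2048
net0: name=eth0,bridge=vmbr1,firewall=1,gw=172.16.0.1,hwaddr=D6:60:C5:39:98:A0,ip=172.16.0.2/24,type=veth
rootfs: local:100/vm-100-disk-1.raw,size=10G
"""

cfg = parse_ct_config(sample)
net0 = parse_options(cfg["net0"])
print(net0["bridge"], net0["ip"], net0["gw"])
```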

The container was created successfully. You can connect to LXC containers with the pct enter command. I also added the hypervisor's SSH key before installation so that I can connect directly over SSH (pct has some problems with terminal display). I prepared the server and installed all the necessary applications, so we can now move on to creating the replication job.

We click on the LXC container and go to the “Replication” tab, where we create a replication job using the “Add” button:

Image 12. Creating replication in the Proxmox interface

Image 13. Replication job creation window

I created a job that replicates the container to the second node. As the next screenshot shows, the replication succeeded. Pay attention to the “Status” field, which reports the replication state, and to the “Duration” field, which shows how long the data replication takes.

Image 14. VM synchronization list
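The replication job created in the web panel can probably also be created from the shell with the pvesr tool. The sketch below builds that command for container 100 and a target node named proxmox2. The job number 0 and the */15 schedule are assumptions that mirror the defaults mentioned earlier.

```python
def pvesr_create_job(vmid, target_node, job_number=0, schedule="*/15"):
    """Build the argv that creates a replication job for one guest."""
    job_id = f"{vmid}-{job_number}"  # job IDs have the form <vmid>-<number>
    return ["pvesr", "create-local-job", job_id, target_node,
            "--schedule", schedule]

job = pvesr_create_job(100, "proxmox2")
# Note the quoting needed in a shell: the schedule contains an asterisk.
print(" ".join(job[:-1]), f"'{job[-1]}'")
```

After the job exists, pvesr status on the source node should show the same state and duration that the “Replication” tab displays.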

Now let's try to migrate the machine to the second node using the “Migrate” button.

The container migration will start; its log can be viewed in the task list. After that, the container will be moved to the second node.

“Host Key Verification Failed” Error

This error sometimes appears when configuring a cluster. It prevents machines from migrating and replication jobs from being created, which negates the advantages of a cluster. To fix it, delete the known_hosts file and connect via SSH to the conflicting node:

/usr/bin/ssh -o 'HostKeyAlias=proxmox2' root@172.30.0.16

Accept the host key, then run the following command; it should connect you to the server:

/usr/bin/ssh -o 'BatchMode=yes' -o 'HostKeyAlias=proxmox2' root@172.30.0.16

Features of network settings on Hetzner

Go to the Robot panel and click on the “Virtual Switches” button. On the next page you will see a panel for creating and managing Virtual Switch interfaces: first you need to create a Virtual Switch, and then “connect” dedicated servers to it. Use the search to add the servers you want to connect. They do not need to be rebooted; you only have to wait 10-15 minutes until the connection to the Virtual Switch becomes active.

After adding the servers to Virtual Switch through the web panel, we connect to the servers and open the configuration files of the network interfaces, where we create a new network interface:

auto enp4s0.4000

iface enp4s0.4000 inet static

address 10.1.0.11/24

mtu 1400

vlan-raw-device enp4s0

Let's take a closer look at what this is. At its core, it is a VLAN attached to a single physical interface called enp4s0 (the name may differ on your server). The VLAN number (4000 here) is the Virtual Switch number that you created in the Hetzner Robot web panel. You can specify any address, as long as it is a local (private) one.

Note that enp4s0 itself should be configured as usual: it should hold the external IP address that was issued to your physical server. Repeat these steps on the other hypervisors, restart the networking service on them, and ping a neighboring node using its Virtual Switch IP address. If the ping succeeds, the servers are connected through the Virtual Switch.
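Since the VLAN stanza must be repeated on every hypervisor with only the address changing, it can be convenient to generate it. Here is a minimal sketch that renders the stanza shown above; the addresses 10.1.0.12 and 10.1.0.13 for the other nodes are hypothetical, and the indentation is cosmetic.

```python
def vlan_stanza(parent, vlan_id, address, mtu=1400):
    """Render an /etc/network/interfaces stanza for a VLAN on a physical NIC."""
    name = f"{parent}.{vlan_id}"
    return "\n".join([
        f"auto {name}",
        f"iface {name} inet static",
        f"    address {address}",
        f"    mtu {mtu}",
        f"    vlan-raw-device {parent}",
    ])

# One stanza per hypervisor; only the last octet differs (hypothetical for .12 and .13).
for host_number in (11, 12, 13):
    print(vlan_stanza("enp4s0", 4000, f"10.1.0.{host_number}/24"))
    print()
```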

I will also include the sysctl.conf configuration file; it will be useful if you have problems with packet forwarding or other network parameters:

net.ipv6.conf.all.disable_ipv6=0

net.ipv6.conf.default.disable_ipv6=0

net.ipv6.conf.all.forwarding=1

net.ipv4.conf.all.rp_filter=1

net.ipv4.tcp_syncookies=1

net.ipv4.ip_forward=1

net.ipv4.conf.all.send_redirects=0
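When debugging forwarding problems it helps to confirm that the file really contains the values you expect. The sketch below parses sysctl.conf style text (tolerating spaces around the equals sign) and checks the IPv4 forwarding flag. It reads only the text you give it; the values the kernel is actually using may differ until the file is applied.

```python
def parse_sysctl(text):
    """Parse 'key = value' lines, ignoring blanks and # comments."""
    settings = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip()
    return settings

def forwarding_enabled(settings):
    """True if IPv4 packet forwarding is switched on in the parsed settings."""
    return settings.get("net.ipv4.ip_forward") == "1"

sample = """\
net.ipv6.conf.all.disable_ipv6=0
net.ipv6.conf.default.disable_ipv6 = 0
net.ipv4.conf.all.rp_filter=1
net.ipv4.ip_forward=1
"""

settings = parse_sysctl(sample)
print(forwarding_enabled(settings))
```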

Adding IPv4 subnets to Hetzner

Before starting, you need to order a subnet from Hetzner; you can do this through the Robot panel.

Create a network bridge with an address from this subnet. Configuration example:

auto vmbr2

iface vmbr2 inet static

address ip-address

netmask 29

bridge-ports none

bridge-stp off

bridge-fd 0

Now go to the settings of the virtual machine in Proxmox and create a new network interface attached to the vmbr2 bridge. I use an LXC container, whose configuration can be changed directly in Proxmox. The final configuration for Debian:

auto eth0

iface eth0 inet static

address ip-address

netmask 26

gateway bridge-address

Please note: I specified a /26 mask, not /29. This is required for the network to work on the virtual machine.
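To see what the /29 and /26 masks mean in numbers, here is a short sketch using Python's ipaddress module. The 203.0.113.x addresses come from a range reserved for documentation and stand in for the real ip-address and bridge-address values, which this article does not give. The sketch only does the arithmetic; it does not explain why the wider mask is needed.

```python
import ipaddress

def describe(cidr):
    """Summarize the network that an interface address belongs to."""
    net = ipaddress.ip_interface(cidr).network
    return {
        "network": str(net),
        "netmask": str(net.netmask),
        "addresses": net.num_addresses,
    }

def gateway_on_link(interface_cidr, gateway):
    """True if the gateway lies inside the interface's own network."""
    network = ipaddress.ip_interface(interface_cidr).network
    return ipaddress.ip_address(gateway) in network

bridge = "203.0.113.9/29"      # placeholder for the vmbr2 address
container = "203.0.113.10/26"  # placeholder for the container address

print(describe(bridge))
print(describe(container))
print(gateway_on_link(container, "203.0.113.9"))
```

A gateway that is outside the interface's own network is a common reason for a guest having no connectivity, so the last check is worth running against your real addresses.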

Adding IPv4 Addresses to Hetzner

The situation with a single IP address is different: usually Hetzner gives us an additional address from the server's own subnet. This means that instead of vmbr2 we need to use vmbr0, which we do not have yet. The point is that vmbr0 must hold the IP address of the physical server (that is, the address previously used by the physical network interface enp2s0). The address must be moved to vmbr0; the following configuration is suitable for this (I advise ordering a KVM console so that you can restore networking if something goes wrong):

auto enp2s0

iface enp2s0 inet manual

auto vmbr0

iface vmbr0 inet static

address ip-address

netmask 255.255.255.192

gateway ip-gateway

bridge-ports enp2s0

bridge-stp off

bridge-fd 0

Restart the server if possible (if not, restart the networking service), and then check the network interfaces with ip a:

2: enp2s0: mtu 1500 qdisc pfifo_fast master vmbr0 state UP group default qlen 1000

link/ether 44:8a:5b:2c:30:c2 brd ff:ff:ff:ff:ff:ff

As you can see, enp2s0 is attached to vmbr0 and has no IP address, since the address was reassigned to vmbr0.
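The same check can be scripted: the word after "master" in the interface line names the bridge that the interface is attached to. A minimal sketch, using the output line shown above as input:

```python
import re

def bridge_master(ip_link_line):
    """Return the bridge an interface is enslaved to, or None if there is none."""
    match = re.search(r"\bmaster (\S+)", ip_link_line)
    return match.group(1) if match else None

line = "2: enp2s0: mtu 1500 qdisc pfifo_fast master vmbr0 state UP group default qlen 1000"
print(bridge_master(line))
```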

Now in the settings of the virtual machine, add the network interface that will be connected to vmbr0. For the gateway, specify the address attached to vmbr0.

Conclusion

I hope this article comes in handy when you set up a Proxmox cluster on Hetzner. If time permits, I will expand the article and add instructions for OVH, where not everything is as obvious as it seems at first glance either. The material turned out to be quite long; if you find errors, please write in the comments and I will correct them. Thank you all for your attention.

Posted by Ilya Andreev, edited by Alexei Zhadan and Live Linux Team