Little joys #4: Radon, or code quality in numbers

Engineers love measurements and numbers, so it is no surprise that they try to express something as non-trivial as code quality in numerical form.

Plenty of metrics for evaluating source code have been invented, from the trivial count of lines of code in a project to the less obvious "Maintainability Index". An overview of the existing ways of measuring code with all sorts of metrics can be found in this article.

The Python world, of course, has its own tool for evaluating code quality. It is called radon. It is itself written in Python and works only with Python files.

Installing it

`pip install radon`

Then go to the folder with your code and start measuring.

Raw statistics

A simple count of the lines in the source, plus the number of lines that actually contain code and the number of comment lines. Not a very informative metric on its own, but it is needed for the calculations that follow.

`radon raw ./`

In response, you get a list of the files in the project with statistics for each file.
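
To make these numbers concrete, here is a minimal sketch of how such counts can be gathered with Python's standard `tokenize` module. It is a simplification, not radon's implementation: radon distinguishes more categories (logical lines, docstring lines and so on), and the function name `raw_counts` and its result keys are hypothetical.

```python
import io
import tokenize

# Token types that do not make a line count as "code".
NON_CODE = {tokenize.COMMENT, tokenize.NL, tokenize.NEWLINE, tokenize.INDENT,
            tokenize.DEDENT, tokenize.ENDMARKER}

def raw_counts(source: str) -> dict:
    """Simplified raw statistics for a string of Python source."""
    code_lines = set()     # line numbers holding at least one code token
    comment_lines = set()  # line numbers holding a '#' comment
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.COMMENT:
            comment_lines.add(tok.start[0])
        elif tok.type not in NON_CODE:
            # a multi-line token (e.g. a triple-quoted string) covers every line it spans
            code_lines.update(range(tok.start[0], tok.end[0] + 1))
    lines = source.splitlines()
    return {
        "loc": len(lines),               # all physical lines
        "sloc": len(code_lines),         # lines containing code
        "comments": len(comment_lines),  # lines containing a comment
        "blank": sum(1 for line in lines if not line.strip()),
    }
```

A line such as `x = 1  # note` counts both as code and as a comment here, which is one of the details real tools handle more carefully.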

Cyclomatic complexity

The more branches (if-else), loops, generators, exception handlers and boolean operators the code contains, the more execution paths the program has and the harder it is to keep all of its possible states in mind. The metric that measures code complexity based on the number of these constructs is called the cyclomatic complexity of a program.

It is calculated with this command:

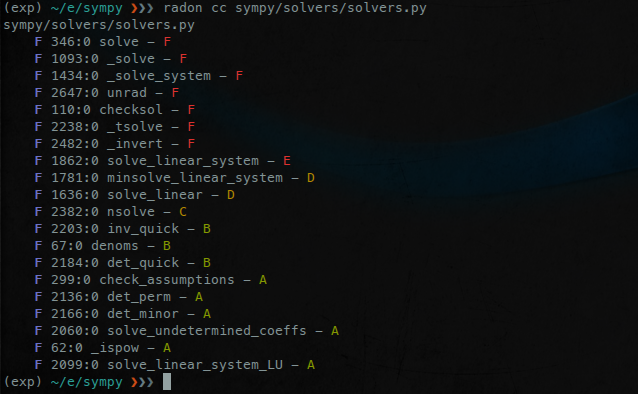

`radon cc ./`

In response, you get a list of the files, classes, methods and functions in your project with their complexity rank, from very simple to very complex. The rank points to places overloaded with logic that could be broken into smaller pieces, simplified or rewritten (where possible: the algorithm itself may be inherently complex, and trying to split it up can only hurt readability).

Halstead Metrics

Here we count the number of distinct operators and operands in the code, as well as their total number of occurrences. These values are plugged into formulas that yield a set of numbers describing the complexity of the program and the effort supposedly required to write and understand the code.
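
The formulas themselves are short. Below they are written out in Python, taking the four raw counts as input: the numbers of distinct operators and operands (`h1`, `h2`) and their total occurrences (`n1`, `n2`). Which tokens count as operators or operands varies between tools, so this sketch deliberately leaves the counting out; the function and parameter names are hypothetical, while the formulas are Halstead's standard ones.

```python
import math

def halstead(h1: int, h2: int, n1: int, n2: int) -> dict:
    """Standard Halstead measures from raw counts.

    h1, h2 -- number of distinct operators / distinct operands
    n1, n2 -- total occurrences of operators / operands
    """
    if h1 <= 0 or h2 <= 0:
        raise ValueError("need at least one operator and one operand")
    vocabulary = h1 + h2
    length = n1 + n2
    volume = length * math.log2(vocabulary)  # program size in bits
    difficulty = (h1 / 2) * (n2 / h2)        # how hard the code is to write or read
    effort = difficulty * volume
    return {
        "vocabulary": vocabulary,
        "length": length,
        "calculated_length": h1 * math.log2(h1) + h2 * math.log2(h2),
        "volume": volume,
        "difficulty": difficulty,
        "effort": effort,
        "time": effort / 18,    # estimated seconds, using Halstead's constant 18
        "bugs": volume / 3000,  # estimated number of delivered bugs
    }
```

For example, `halstead(4, 4, 8, 8)` gives a vocabulary of 8, a volume of 16 · log2 8 = 48, a difficulty of 2 · 2 = 4 and an effort of 192.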

`radon hal ./`

Maintainability Index

This index tells us how hard it will be to maintain or modify a piece of the program. It is calculated from the values produced by the metrics described above.

`radon mi ./`

In response, we get a list of the files in the project with their maintainability index, from easy to very hard to maintain.
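
For reference, a widely used normalized form of the index combines Halstead volume V, total cyclomatic complexity G and source lines L into a 0-100 score. The sketch below implements that variant; radon's formula additionally adds a bonus for the share of comment lines, so its numbers will not match exactly. The letter bands in `mi_rank` (A: 20 and above, B: 10-19, C: below 10) follow radon's documented scale; both function names, and the choice to return 100 when there is nothing to measure, are assumptions of this sketch.

```python
import math

def maintainability_index(volume: float, complexity: int, sloc: int) -> float:
    """Normalized MI = max(0, (171 - 5.2 ln V - 0.23 G - 16.2 ln L) * 100 / 171)."""
    if volume <= 0 or sloc <= 0:
        return 100.0  # assumption: nothing measurable counts as fully maintainable
    raw = 171 - 5.2 * math.log(volume) - 0.23 * complexity - 16.2 * math.log(sloc)
    return min(100.0, max(0.0, raw * 100 / 171))

def mi_rank(mi: float) -> str:
    """Letter bands as in radon's docs: A (20-100), B (10-19), C (0-9)."""
    if mi >= 20:
        return "A"
    if mi >= 10:
        return "B"
    return "C"
```

With a volume of 48, a total complexity of 4 and 10 source lines, the score comes out at about 65.9, comfortably inside band A; a large, tangled module quickly hits the floor of 0.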

Detailed descriptions of how each metric is calculated, along with the documentation, can be found here.

Where can all this be used?

Of course, you cannot draw conclusions about code quality from numerical metrics alone. But in some cases, a quick assessment with radon can be helpful.

- You have to review a large amount of code and have no time to give each file much attention. Running the metrics will quickly reveal the function in which a new junior developer crammed 40 nested conditions.

- You oversee the development of many microservices split into small projects. A quick (possibly even automated) assessment lets you find potentially problematic spots and then review them manually.

- And, of course, run the metrics on open-source libraries (especially less popular solutions with a small community).

Informative? Yes. Useful and necessary? Sometimes, in certain cases.

Install it, play around, run a couple of your projects through the metrics, write a small script and hook it onto commits? Probably a good project for an evening.
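
As a starting point for such an evening project, here is one possible shape for a commit-time check, written as a module (say, a hypothetical `radon_gate.py`). It is a sketch under several assumptions: that radon is installed, that `radon cc -j` prints JSON mapping each file to a list of blocks carrying `name`, `lineno` and `rank` keys, and that anything ranked C or worse should block the commit. The `WORST_ALLOWED` threshold and all function names are hypothetical.

```python
import json
import subprocess
import sys

WORST_ALLOWED = "B"  # hypothetical threshold: blocks ranked C to F fail the commit

def staged_python_files() -> list:
    """Paths of added, copied or modified .py files in the git index."""
    out = subprocess.run(
        ["git", "diff", "--cached", "--name-only", "--diff-filter=ACM"],
        capture_output=True, text=True, check=True,
    ).stdout
    return [path for path in out.splitlines() if path.endswith(".py")]

def find_offenders(report: dict, worst_allowed: str = WORST_ALLOWED) -> list:
    """Describe every block whose rank is worse than worst_allowed."""
    offenders = []
    for path, blocks in report.items():
        if not isinstance(blocks, list):  # skip non-list entries, e.g. an error report
            continue
        for block in blocks:
            if block["rank"] > worst_allowed:  # single letters compare A < B < ... < F
                offenders.append(f"{path}:{block['lineno']} {block['name']} ({block['rank']})")
    return offenders

def main() -> int:
    """Exit status for the hook: 0 lets the commit through, 1 blocks it."""
    files = staged_python_files()
    if not files:
        return 0
    # assumed output shape: {"file.py": [{"name": ..., "lineno": ..., "rank": ...}, ...]}
    result = subprocess.run(["radon", "cc", "-j", *files],
                            capture_output=True, text=True, check=True)
    offenders = find_offenders(json.loads(result.stdout))
    for line in offenders:
        print("too complex:", line, file=sys.stderr)
    return 1 if offenders else 0
```

A `.git/hooks/pre-commit` script could then import this module and finish with `sys.exit(main())`. Keep in mind that radon reads files from the working tree, so unstaged edits to a staged file are measured too; a stricter hook would check the staged versions instead.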