Upgrading the disk subsystem of an old server with a PCIe 1.0 or 2.0 bus


Why this article focuses on upgrading the disk subsystem

As a rule, the first steps are obvious:

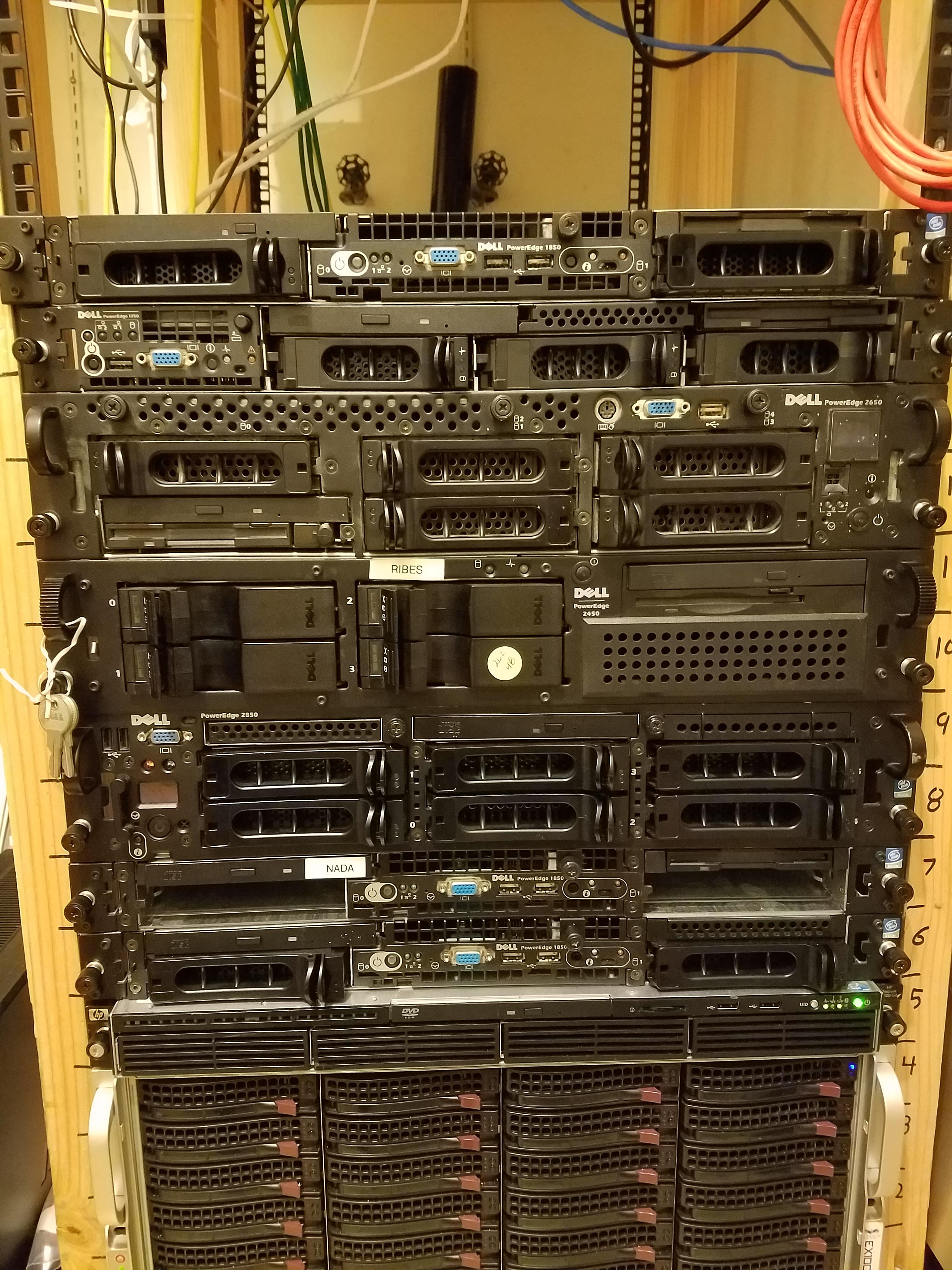


- Increase RAM. This is such an obvious move that I did not consider it worth covering in the main article.

- Install additional processor(s) or replace both processors with the most powerful versions the server's sockets support.

For older servers, both memory and processors can usually be found at bargain prices.

At some point, every server owner faces the question: upgrade or buy a new server?

Since the price of a new server can now run into millions of rubles, many choose to upgrade.

A successful upgrade depends on well-chosen compromises, so that a small outlay (relative to the price of a new server) yields a significant performance gain.

This article lists server PCI-E 2.0 x8 SSDs, which are now much cheaper than they used to be, points out RAID controllers that support SSD caching, and tests a SATA III SSD on a SATA II interface.

The most obvious way to upgrade the disk subsystem is to switch from HDDs to SSDs. This is true for laptops as well as for servers. On servers, perhaps the only difference is that SSDs can easily be combined into a RAID array.

There is a subtlety, though: the old server may have no SATA III ports, in which case you will have to replace the controller or install a suitable one.

There are, of course, intermediate options.

Caching on SSD

In general, this method works well for databases, 1C, and any other random-access workload, where it gives a real speedup. For huge video surveillance files, it is useless.
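A rough model shows why caching helps random-access workloads but not large sequential streams: the average access time is a mix of SSD and HDD latency, weighted by the cache hit ratio. The sketch below is only an illustration. The latency figures (0.1 ms for the SSD, 8 ms for the HDD) and the hit ratios are assumed round numbers, not measurements from this article, and the function name is my own.

```python
def effective_latency_ms(hit_ratio, ssd_ms=0.1, hdd_ms=8.0):
    """Average access latency when `hit_ratio` of requests are served from the SSD cache.

    ssd_ms and hdd_ms are assumed, illustrative latencies.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * ssd_ms + (1.0 - hit_ratio) * hdd_ms


if __name__ == "__main__":
    # Hypothetical workloads: a database with a hot working set that mostly
    # fits in the cache, and a video archive that is written once and read rarely.
    for label, hit_ratio in [("database, 90% hits", 0.9), ("video archive, 0% hits", 0.0)]:
        print(f"{label}: {effective_latency_ms(hit_ratio):.2f} ms")
```

With a high hit ratio the average latency is dominated by the SSD; with data that is never re-read, the cache contributes nothing and the array behaves like bare HDDs.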

LSI RAID controllers (IBM, Dell, Cisco, Fujitsu)

Starting with the 92xx series, LSI controllers offer CacheCade 2.0 technology, which lets you use almost any SATA SSD as a cache for a RAID array, for both reads and writes. You can even mirror the caching SSDs.

With branded controllers, things get more complicated, especially with IBM. You would have to buy CacheCade keys and SSDs from IBM for a lot of money, so it is easier to swap the controller for an LSI one and buy a hardware key at a low price. Software keys cost significantly more than hardware keys.

Adaptec RAID controllers

Adaptec controllers have MaxCache technology, which also lets you use an SSD as a cache. The controller versions of interest are those whose model name ends with the letter Q.

Q controllers can use almost any SSD, not just the SSDs supplied by Adaptec.

- Starting with the 5xxx series, all controllers support Hybrid RAID. The idea is that when one of the drives in a mirror is an SSD, reads are always served from the SSD.

- 5xxxQ, e.g. 5805ZQ. These controllers support MaxCache 1.0. Read caching only.

- 6xxxQ, e.g. 6805Q. MaxCache 2.0. Read and write caching.

- 7xxxQ, e.g. 7805Q. MaxCache 3.0. Read and write caching.

- 8xxxQ controllers make almost no sense for an upgrade because of their high prices.

Article about SSD caching on Habr (controllers and OS).

Software SSD caching

I will not cover these technologies here; almost every OS now supports them. As far as I remember, btrfs automatically directs read requests to the device with the shortest queue, which is the SSD.

SATA III SSD on SATA II

A new controller is not always possible or affordable, so the question is how well SATA III SSDs work on the outdated SATA II interface.

Let's run a small test. The test subject is a 400GB SATA III SSD, the Intel S3710.

| Interface | Random read, IOPS | Avg read latency, ms | Random write, IOPS | Avg write latency, ms | Linear read, MB/s | Linear write, MB/s |
|---|---|---|---|---|---|---|
| SATA II | 21241 | 2 | 13580 | 4 | 282 | 235 |
| SATA III | 68073 | 0.468 | 61392 | 0.52 | 514 | 462 |

Commands used for speed testing

fio --name LinRead --eta-newline=5s --filename=/dev/sda --rw=read --size=500m --io_size=10g --blocksize=1024k --ioengine=libaio --iodepth=32 --direct=1 --numjobs=1 --runtime=60 --group_reporting

fio --name LinWrite --eta-newline=5s --filename=/dev/sda --rw=write --size=500m --io_size=10g --blocksize=1024k --ioengine=libaio --fsync=10000 --iodepth=32 --direct=1 --numjobs=1 --runtime=60 --group_reporting

fio --name RandRead --eta-newline=5s --filename=/dev/sda --rw=randread --size=500m --io_size=10g --blocksize=4k --ioengine=libaio --iodepth=32 --direct=1 --numjobs=4 --runtime=60 --group_reporting

fio --name RandWrite --eta-newline=5s --filename=/dev/sda --rw=randwrite --size=500m --io_size=10g --blocksize=4k --ioengine=libaio --iodepth=32 --direct=1 --numjobs=4 --runtime=60 --group_reporting
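To collect results like those in the table without copying numbers by hand, fio can write a machine-readable report with `--output-format=json`. The sketch below pulls IOPS, bandwidth and mean latency out of such a report. It assumes the JSON layout of fio 3.x (`jobs[].read` and `jobs[].write` with `iops`, `bw` in KiB/s, and `lat_ns.mean`); older versions name the latency fields differently, so treat the field names as something to verify against your fio version. The function name `summarize_fio` is my own.

```python
import json


def summarize_fio(report):
    """Return one summary row per job and direction that actually did I/O.

    `report` is the parsed JSON document produced by fio (3.x layout assumed).
    """
    rows = []
    for job in report["jobs"]:
        for direction in ("read", "write"):
            stats = job[direction]
            if stats["iops"] == 0:
                continue  # this job did no I/O in this direction
            rows.append({
                "job": job["jobname"],
                "direction": direction,
                "iops": round(stats["iops"]),
                # fio reports "bw" in KiB/s; convert to decimal MB/s
                "bw_mb_s": round(stats["bw"] * 1024 / 1e6, 1),
                # mean total latency, nanoseconds -> milliseconds
                "avg_lat_ms": round(stats["lat_ns"]["mean"] / 1e6, 3),
            })
    return rows


def load_report(path):
    """Read a report written with --output-format=json --output=<path>."""
    with open(path) as handle:
        return json.load(handle)
```

For example, after adding `--output-format=json --output=randread.json` to the RandRead command, `summarize_fio(load_report("randread.json"))` returns a single row, because `--group_reporting` merges the four jobs into one entry.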

As you can see, the difference in linear speed, IOPS, and latency is substantial, so it makes sense to use only a SATA III interface and, if the server does not have one, to install a controller.

In fairness, in other experiments the difference in random read and write speed turned out to be insignificant. The large IOPS gap between SATA II and SATA III here may have appeared because I had a particularly poor SATA II controller or a buggy driver.

Still, the point is that you need to check your SATA II speed, in case you have the same kind of slow controller. If so, moving to a SATA III controller is a must.
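One way to put the measured numbers in perspective is to compare them with what the interface itself allows. The sketch below computes the speedups from the values in the SATA II vs SATA III table and the payload ceiling of each SATA generation (nominal line rate with 8b/10b encoding). The dictionary keys and function names are mine, chosen for illustration.

```python
# Measured values copied from the SATA II vs SATA III table in this article.
MEASURED = {
    "SATA II": {"rand_read_iops": 21241, "rand_write_iops": 13580,
                "lin_read_mb_s": 282, "lin_write_mb_s": 235},
    "SATA III": {"rand_read_iops": 68073, "rand_write_iops": 61392,
                 "lin_read_mb_s": 514, "lin_write_mb_s": 462},
}

# Nominal line rates in Mbit/s; SATA uses 8b/10b encoding.
LINE_RATE_MBIT = {"SATA II": 3000, "SATA III": 6000}


def payload_ceiling_mb_s(interface):
    """Maximum payload rate in MB/s: 8 data bits per 10 line bits, 8 bits per byte."""
    return LINE_RATE_MBIT[interface] * 8 / 10 / 8


def speedups(slow, fast):
    """Ratio fast/slow for every metric, rounded to two decimals."""
    return {metric: round(fast[metric] / slow[metric], 2) for metric in slow}


def random_io_mb_s(iops, block_bytes=4096):
    """Bandwidth consumed by random I/O at the given block size (4k in the fio runs)."""
    return iops * block_bytes / 1e6


if __name__ == "__main__":
    print(speedups(MEASURED["SATA II"], MEASURED["SATA III"]))
    for name, values in MEASURED.items():
        ceiling = payload_ceiling_mb_s(name)
        share = round(100 * values["lin_read_mb_s"] / ceiling)
        print(f"{name}: linear read uses about {share}% of the {ceiling:.0f} MB/s ceiling")
        print(f"{name}: 4k random read moves about "
              f"{random_io_mb_s(values['rand_read_iops']):.0f} MB/s")
```

On SATA II the linear read (282 MB/s) is already close to the 300 MB/s payload ceiling, whereas 21241 random 4k reads per second amount to only about 87 MB/s, well below that ceiling. So the low SATA II IOPS figure is not explained by interface bandwidth alone, which fits the suspicion about the controller or driver.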

PCIe SSD on PCI-e 2.0 or 1.0

As you know, the fastest SSDs are PCI-e NVMe drives, which are not constrained by the SAS or SATA protocols.

However, when installing a modern PCI-e SSD, keep in mind that most of them use only 4 PCI-e lanes, usually PCI-e 3.0 or 3.1.

Now let's look at the PCI-e bus bandwidth table.

PCI Express bandwidth, GB/s

| Year of release | PCI Express version | Encoding | Transfer rate | ×4 lanes | ×8 lanes | ×16 lanes |
|---|---|---|---|---|---|---|
| 2002 | 1.0 | 8b/10b | 0.50 GB/s | 1.0 GB/s | 2.0 GB/s | 4.0 GB/s |
| 2007 | 2.0 | 8b/10b | 1.0 GB/s | 2.0 GB/s | 4.0 GB/s | 8.0 GB/s |
| 2010 | 3.0 | 128b/130b | 1.97 GB/s | 3.94 GB/s | 7.88 GB/s | 15.8 GB/s |
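The ×4, ×8 and ×16 figures in the table follow from the signalling rate and the line encoding of each generation. Here is a minimal sketch of that arithmetic, assuming the standard per-lane rates of 2.5, 5 and 8 GT/s for PCI-e 1.0, 2.0 and 3.0; the function name is my own. It returns the payload rate in one direction. Note that the per-lane result is half of the value in the table's "Transfer rate" column, which appears to count either two lanes or both directions.

```python
# version -> (signalling rate in GT/s, payload bits, line bits)
PCIE_GENERATIONS = {
    "1.0": (2.5, 8, 10),     # 8b/10b encoding
    "2.0": (5.0, 8, 10),     # 8b/10b encoding
    "3.0": (8.0, 128, 130),  # 128b/130b encoding
}


def pcie_bandwidth_gb_s(version, lanes):
    """Payload bandwidth in GB/s, one direction, for a link of `lanes` lanes."""
    gt_per_s, payload_bits, line_bits = PCIE_GENERATIONS[version]
    per_lane_gb_s = gt_per_s * payload_bits / line_bits / 8  # bits -> bytes
    return per_lane_gb_s * lanes


if __name__ == "__main__":
    for version in PCIE_GENERATIONS:
        row = ", ".join(
            f"x{lanes}: {pcie_bandwidth_gb_s(version, lanes):.2f} GB/s"
            for lanes in (1, 4, 8, 16)
        )
        print(f"PCI-e {version}: {row}")
```

These are theoretical ceilings; protocol overhead means real devices deliver somewhat less.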

M.2 SSD and PCI-e adapter

A cheap upgrade option is to buy a $10 adapter and put an M.2 SSD in the server. Again, though, good SSDs will be throttled by the bus (especially on PCI-e 1.0), and M.2 SSDs do not always have what server workloads need: high endurance, power-loss protection, and stable sustained speed (cheap models slow down once their SLC cache fills up). So this method suits only a server with a PCI-e 2.0 bus that does non-critical work.

PCI-E 2.0 x8 SSD

The most economical and logical upgrade for servers with a PCI-e 1.0 bus (up to 2 GB/s on 8 lanes) or a PCI-e 2.0 bus (up to 4 GB/s) is a PCI-E 2.0 x8 SSD.

Such server SSDs can now be bought quite cheaply on various marketplaces and online auctions, including in Russia.

I have compiled a table of such obsolete SSDs that will give your old server a real boost. At the end of the table, I added several SSDs with a PCI-E 3.0 x8 interface, in case you are lucky enough to come across one at a reasonable price.

| Model | Capacity, TB | Endurance, PBW | 4K read, K IOPS | 4K write, K IOPS | Read, MB/s | Write, MB/s |
|---|---|---|---|---|---|---|
| Fusion-io ioDrive II Duo MLC | 2.4 | 32.5 | 480 | 490 | 3000 | 2500 |
| SanDisk Fusion ioMemory SX350-1300 | 1.3 | 4 | 225 | 345 | 2800 | 1300 |
| SanDisk Fusion ioMemory PX600-1300 | 1.3 | 16 | 235 | 375 | 2700 | 1700 |
| SanDisk Fusion ioMemory SX350-1600 | 1.6 | 5.5 | 270 | 375 | 2800 | 1700 |
| SanDisk Fusion ioMemory SX300-3200 | 3.2 | 11 | 345 | 385 | 2700 | 2200 |
| SanDisk Fusion ioMemory SX350-3200 | 3.2 | 11 | 345 | 385 | 2800 | 2200 |
| SanDisk Fusion ioMemory PX600 | 2.6 | 32 | 350 | 385 | 2700 | 2200 |
| Huawei ES3000 V2 | 1.6 | 8.76 | 395 | 270 | 1550 | 1100 |
| Huawei ES3000 V2 | 3.2 | 17.52 | 770 | 230 | 3100 | 2200 |
| EMC XtremSF | 2.2 | | 340 | 110 | 2700 | 1000 |
| HGST Virident FlashMAX II | 2.2 | 33 | 350 | 103 | 2700 | 1000 |
| HGST Virident SSD FlashMAX II | 4.8 | 10.1 | 269 | 51 | 2600 | 900 |
| HGST Virident FlashMAX III | 2.2 | 7.1 | 531 | 59 | 2700 | 1400 |
| Dell Micron P420M | 1.4 | 9.2 | 750 | 95 | 3300 | 630 |
| Micron P420M | 1.4 | 9.2 | 750 | 95 | 3300 | 630 |
| HGST SN260 | 1.6 | 10/25 | 1200 | 200 | 6170 | 2200 |
| HGST SN260 | 3.2 | 17.52 | 1200 | 200 | 6170 | 2200 |
| Intel P3608 | 3.2 | 17.5 | 850 | 80 | 4500 | 2600 |
| Kingston DCP1000 | 3.2 | 2.78 | 1000 | 180 | 6800 | 6000 |
| Oracle F320 | 3.2 | 29 | 750 | 120 | 5500 | 1800 |
| Samsung PM1725 | 3.2 | 29 | 1000 | 120 | 6000 | 2000 |
| Samsung PM1725a | 3.2 | 29 | 1000 | 180 | 6200 | 2600 |
| Samsung PM1725b | 3.2 | 18 | 980 | 180 | 6200 | 2600 |
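Endurance in the table is given in petabytes written (PBW). To compare drives of different capacity, it can help to convert this to drive writes per day (DWPD). The sketch below assumes a five-year service period, which is a common warranty term but is my assumption, not a figure from the table; the function name is also mine.

```python
def dwpd(pbw, capacity_tb, years=5.0):
    """Drive writes per day implied by an endurance rating.

    pbw         -- rated endurance in petabytes written
    capacity_tb -- drive capacity in terabytes
    years       -- assumed service period (5 years is an assumption)
    """
    total_tb_written = pbw * 1000.0
    days = years * 365.0
    return total_tb_written / (capacity_tb * days)


if __name__ == "__main__":
    # (model, capacity in TB, PBW) taken from the table above
    for model, capacity_tb, pbw in [
        ("Huawei ES3000 V2", 1.6, 8.76),
        ("SanDisk Fusion ioMemory SX350-1300", 1.3, 4.0),
        ("Kingston DCP1000", 3.2, 2.78),
    ]:
        print(f"{model}: {dwpd(pbw, capacity_tb):.2f} DWPD over 5 years")
```

A drive rated near 3 DWPD can take heavy write loads such as database logs, while one below 1 DWPD is better kept for read-mostly data.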


Among these SSDs, Fusion ioMemory stands apart. Fusion-io's chief scientist was Steve Wozniak, and the company was later bought by SanDisk for $1.2 billion. At one time, these drives cost from $50,000 apiece. Now a new drive with a capacity of 1TB or more can be bought for several hundred dollars. If you look closely at the table, you can see that their write IOPS are rather high, almost equal to their read IOPS. Given their current price, in my opinion, these SSDs deserve attention.

That said, they have several peculiarities:

- They cannot be used as boot devices.

- They need a driver. Drivers exist for almost every OS, but for the latest Linux versions they have to be compiled from source.

- The optimal sector size is 4096 bytes (512 is also supported).

- In the worst case (with a 512-byte sector size), the driver can consume quite a lot of RAM.

- Performance depends on CPU speed, so it is better to turn off power-saving features. This is both a plus and a minus: with a powerful processor, the device can run even faster than its specifications state.

- They need good cooling. In a server, this should not be a problem.

- They are not recommended for ESXi: ESXi prefers disks with 512n sectors, and this can lead to high memory consumption by the driver.

- For branded versions of these SSDs, vendors generally do not support drivers up to the latest SanDisk release (March 2019).
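On Linux, one of the power-saving mechanisms that affects CPU speed is the frequency-scaling governor. As a rough check, the sketch below lists CPUs whose governor is not `performance` by reading the standard cpufreq files in sysfs. Treating the governor as the relevant setting is my assumption: BIOS-level power-saving options are not visible this way, and on systems without cpufreq support the function simply returns nothing. The function name is illustrative.

```python
from pathlib import Path


def non_performance_cpus(sysfs_root="/sys/devices/system/cpu"):
    """Map CPU name -> governor for every CPU not set to 'performance'."""
    result = {}
    pattern = "cpu[0-9]*/cpufreq/scaling_governor"
    for governor_file in sorted(Path(sysfs_root).glob(pattern)):
        governor = governor_file.read_text().strip()
        if governor != "performance":
            cpu_name = governor_file.parts[-3]  # e.g. "cpu0"
            result[cpu_name] = governor
    return result


if __name__ == "__main__":
    offenders = non_performance_cpus()
    if offenders:
        for cpu_name, governor in offenders.items():
            print(f"{cpu_name}: governor is '{governor}'")
    else:
        print("No CPUs with a non-performance governor were found.")
```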

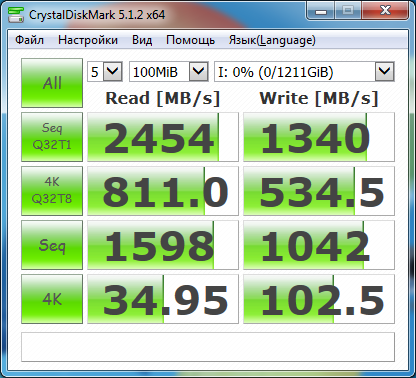

I benchmarked the Fusion ioMemory against a fairly modern server SSD, the Intel P3700 PCI-E 3.0 x4 (which costs four times as much as a Fusion of similar capacity). The comparison also shows how much speed is lost because of the x4 link.

[Benchmark screenshots: Fusion PX600 1.3TB PCI-E 2.0 x8 and Intel P3700 1.6TB PCI-E 3.0 x4]

Yes, the Intel P3700's linear read speed is clearly cut: the spec sheet says 2800 MB/s, but we measured 1469 MB/s. Still, on the whole, PCI-E 3.0 x4 server SSDs can be used on a PCI-e 2.0 bus if you can get them at a reasonable price.
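The reason for the cut can be sketched in a few lines: a card negotiates the lower of the two PCI-e generations and the smaller of the two lane counts, and that link sets the ceiling. The per-lane payload rates below are rounded (250, 500 and 985 MB/s), and the function and parameter names are illustrative. Real throughput is lower still because of protocol overhead, which this sketch ignores.

```python
# Approximate payload rate per lane, one direction, in MB/s.
PER_LANE_MB_S = {"1.0": 250, "2.0": 500, "3.0": 985}


def link_ceiling_mb_s(slot_gen, slot_lanes, card_gen, card_lanes):
    """Theoretical ceiling of the link a card negotiates in a given slot."""
    generation = min(slot_gen, card_gen, key=float)  # older generation wins
    lanes = min(slot_lanes, card_lanes)              # narrower side wins
    return PER_LANE_MB_S[generation] * lanes


def is_bus_limited(rated_mb_s, ceiling_mb_s):
    """True when the drive's rated speed exceeds what the link can carry."""
    return rated_mb_s > ceiling_mb_s


if __name__ == "__main__":
    # Rated linear read speeds are taken from this article.
    cases = [
        ("Intel P3700 (3.0 x4) in a 2.0 x8 slot", ("2.0", 8, "3.0", 4), 2800),
        ("Fusion PX600 (2.0 x8) in a 2.0 x8 slot", ("2.0", 8, "2.0", 8), 2700),
        ("Fusion PX600 (2.0 x8) in a 1.0 x8 slot", ("1.0", 8, "2.0", 8), 2700),
    ]
    for label, link, rated in cases:
        ceiling = link_ceiling_mb_s(*link)
        verdict = "bus-limited" if is_bus_limited(rated, ceiling) else "not bus-limited"
        print(f"{label}: ceiling {ceiling} MB/s, rated {rated} MB/s, {verdict}")
```

An x4 card in a PCI-e 2.0 slot tops out at about 2000 MB/s in theory, so the measured 1469 MB/s for the P3700 is plausible, while an x8 PCI-e 2.0 card in the same slot has twice the headroom.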

Conclusions

The disk subsystem of an old server with a PCI-E 1.0 or 2.0 bus can be given a new lease of life with SSDs that use 8 PCI-E lanes, which provide throughput of up to 4 GB/s (PCI-E 2.0) or 2 GB/s (PCI-E 1.0). The most cost-effective way to do this is with obsolete PCI-E 2.0 SSDs.

Compromise options, such as buying a CacheCade key for an LSI controller or replacing an Adaptec controller with a Q version, are also easy to implement.

Finally, the most straightforward option is to buy a SATA III (RAID) controller so that SSDs run at full speed, and move everything that needs speed onto them.