Recommender systems: ideas, approaches, tasks

Many people are accustomed to rating a movie at KinoPoisk or imdb after viewing it, and the sections “Also bought with this product” and “Popular products” are in any online store. But there are less familiar types of recommendations. In this article I will talk about what tasks are recommended by recommendation systems, where to run and what to google.

What do we recommend?

What if we want to recommend a route that is comfortable for the user ? Different aspects of the trip are important for different users: the availability of seats, travel time, traffic, air conditioning, a beautiful view from the window. An unusual task, but it’s pretty clear how to build such a system.

What if we recommend the news ? News quickly becomes obsolete - you need to show users the latest articles while they are still relevant. It is necessary to understand the content of the article. Already more difficult.

And if we recommend restaurants based on reviews? But we recommend not only the restaurant, but also specific dishes that are worth a try. You can also give recommendations to restaurants about what is worth improving.

What if we dolet’s expand the task and try to answer the question: “What product will be of interest to the largest group of people?”. It is becoming very unusual and it is not immediately clear how to solve this.

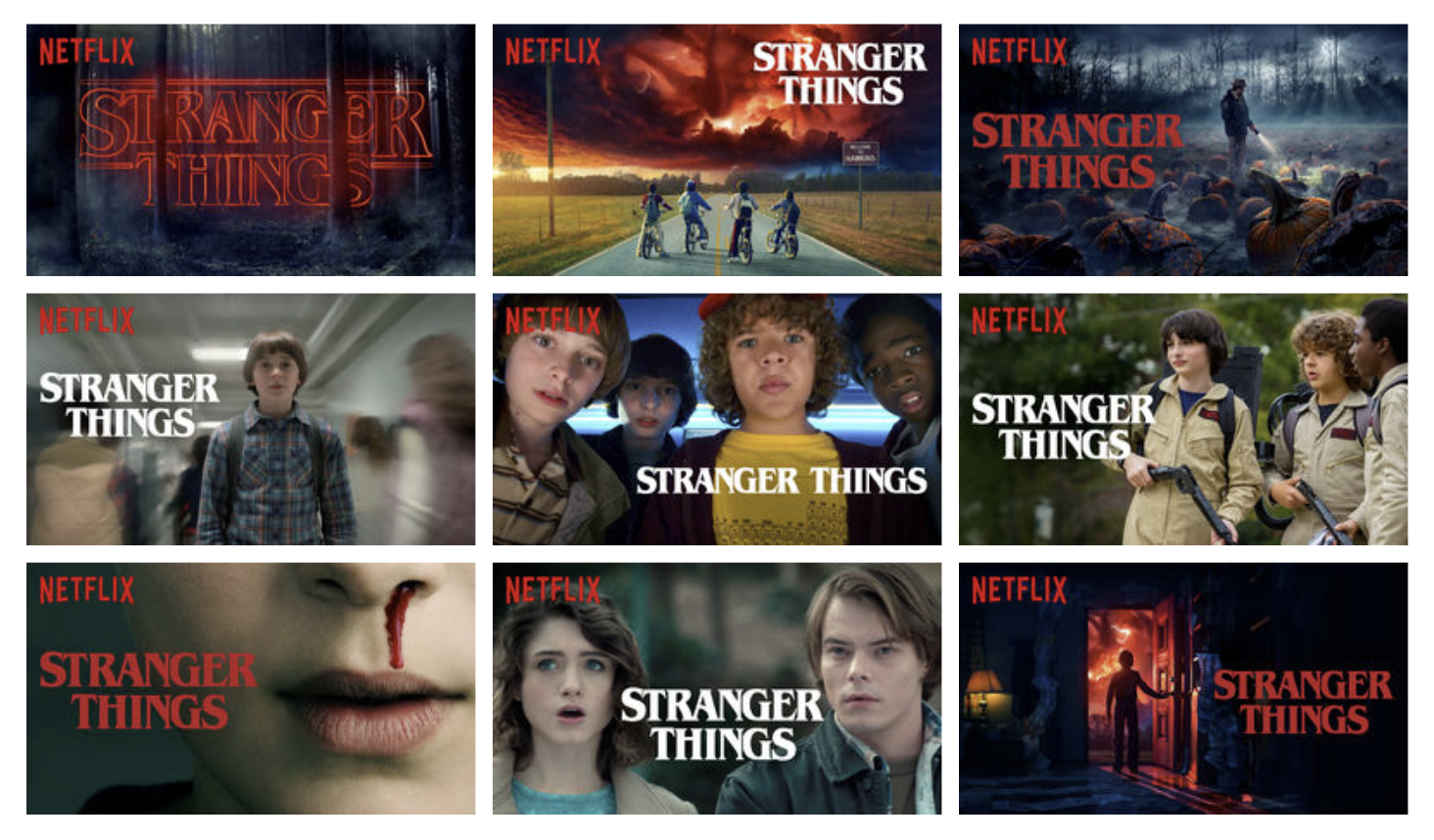

In fact, there are many variations of the recommendations task and each has its own nuances. You can recommend completely unexpected things. My favorite example is recommending previews on Netflix .

Narrower task

Take the familiar and familiar task of recommending music. What exactly do we want to recommend? On this collage you can find examples of various recommendations from Spotify, Google and Yandex.

- Highlighting homogeneous interests in the Daily Mix

- Personalized News Release Radar, Recommended New Releases, Premiere

- Personal selection of what you like - Playlist of the Day

- Personal selection of tracks that the user has not yet heard - Discover Weekly, Dejavu

- A combination of the previous two points with a bias in new tracks - I'm Feeling Lucky

- Located in the library, but not yet heard - Cache

- Your Top Songs 2018

- The tracks that you listened at age 14 and which shaped your tastes - Your Time Capsule

- Tracks that you might like, but differ from what the user usually listens to - Taste breakers

- Tracks of artists who perform in your city

- Style Collections

- Activity and mood selections

And for sure you can come up with something else. Even if we are absolutely able to predict which tracks the user likes, the question still remains in what form and in what layout they should be issued.

Classic staging

In the classical statement of the problem, all that we have is a user-item rating matrix. It is very sparse and our task is to fill in the missing values. Usually, RMSE of the predicted rating is used as a metric, however, there is an opinion that this is not entirely correct and the characteristics of the recommendation as a whole should be taken into account, and not the accuracy of predicting a specific number.

How to evaluate the quality?

Online evaluation

The most preferable way to evaluate the quality of the system is direct verification on users in the context of business metrics. This may be the CTR, the time spent in the system, or the number of purchases. But experiments on users are expensive, and I don’t want to roll out a bad algorithm even to a small group of users, so they use offline quality metrics before online testing.

Offline evaluation

Ranking metrics, such as MAP @ k and nDCG @ k, are usually used as quality metrics.

Relevancein context MAP@k, a binary value, and in context nDCG@k, there may be a rating scale. But, in addition to the accuracy of the prediction, we may be interested in other things:

- сoverage - the share of goods that is issued by the recommender,

- personalization - how different are recommendations between users,

- diversity - how diverse the products are within the recommendation.

In general, there is a good review of metrics. How good your recommender system is? A survey on evaluations in recommendation . An example of formalizing novelty metrics can be found in Rank and Relevance in Novelty and Diversity Metrics for Recommender Systems .

Data

Explicit feedback

The grading matrix is an example of explicit data. Like, dislike, rating - the user himself has clearly expressed the degree of his interest in the item. Such data is usually scarce. For example, in the Rekko Challenge in the test data, only 34% of users have at least one mark.

Implicit feedback

There is much more information about implicit preferences - views, clicks, bookmarking, setting up notifications. But if the user watched the film - it only means that before watching the film seemed interesting enough to him. We cannot draw direct conclusions about whether the film was liked or not.

Loss Functions for Learning

To use implicit feedback, we came up with appropriate teaching methods.

Bayesian personalized ranking

Original article .

It is known what items the user interacted with. We assume that these are positive examples that he liked. There are still many items that the user has not interacted with. We do not know which of them will be of interest to the user and which are not, but we certainly know that not all of these examples will turn out to be positive. We make a rough generalization and consider the absence of interaction as a negative example.

We will sample triples {user, positive item, negative item} and fine the model if a negative example is rated higher than a positive one.

Weighted Approximate-Rank Pairwise

Add to the previous idea adaptive learning rate. We will evaluate the training of the system based on the number of samples that we had to look through to find a negative example for the given pair {user, positive example}, which the system rated higher than positive.

If we found such an example the first time, then the fine should be large. If you had to look for a long time, then the system is already working well and you do not need to fine so much.

What else is worth thinking about?

Cold start

As soon as we have learned to make predictions for existing users and products, two questions arise: - “How to recommend a product that no one has yet seen?” and “What should I recommend to a user who does not yet have a single rating?”. To solve this problem, they try to extract information from other sources. This may be data about a user from other services, a questionnaire during registration, information about an item from its contents.

In this case, there are tasks for which the state of a cold start is constant. In Session Based Recommenders, you need to have time to understand something about the user during the time that he is on the site. New items are constantly appearing in recommenders of news or fashion products, while old items quickly become obsolete.

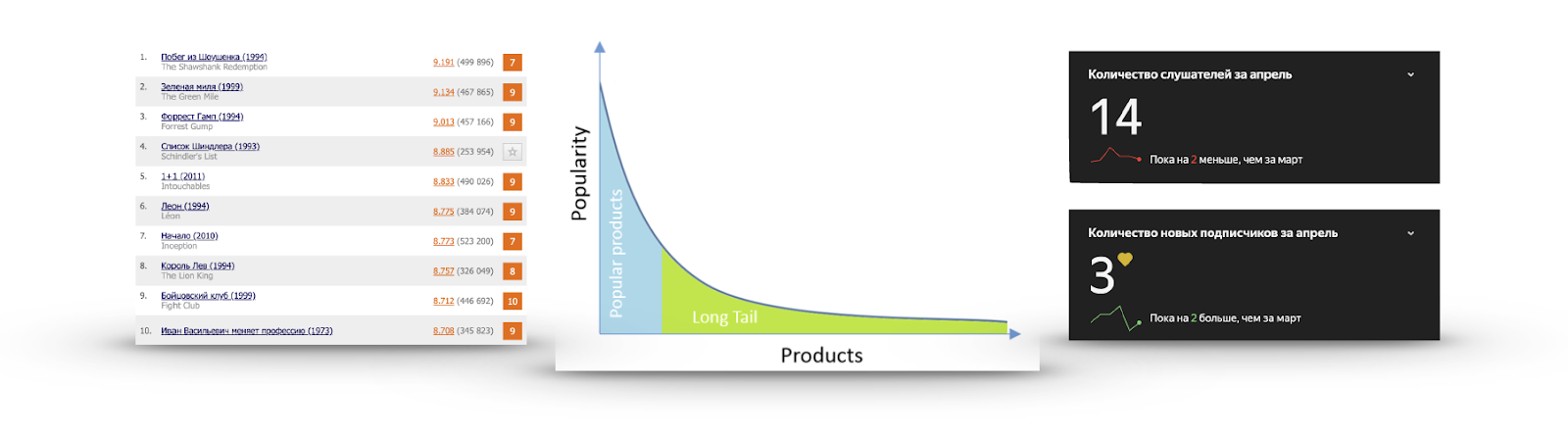

Long tail

If for each item its popularity is calculated in the form of the number of users who interacted with it or gave a positive rating, then very often we will get a graph like in the image: There is a very small number of items that everyone knows about. There is no point in recommending them, because the user most likely either has already seen them and simply did not give a rating, either knows about them and is going to see them, or has firmly decided not to watch at all. I watched the trailer of Schindler’s List more than once, but I wasn’t going to see it.

On the other hand, popularity is dropping very quickly, and almost no one has seen the vast majority of items. Making recommendations from this part is more useful: there is interesting content that the user is unlikely to find himself. For example, on the right is the listening statistics of one of my favorite groups on Yandex.Music.

Exploration vs Exploitation

Let's say we know exactly what the user likes. Does this mean that we should recommend the same thing? There is a feeling that such recommendations will quickly become boring and sometimes it is worth showing something new. When we recommend what exactly should like is exploitation. If we try to add something less popular to the recommendations or somehow diversify them - this is exploration. I want to balance these things.

Non-personalized recommendations

The easiest option is to recommend the same thing to everyone.

Sort by popularity

Score = (Positive ratings) - (Negative ratings)

You can subtract dislikes from likes and sort them. But in this case, we do not take into account their percentage ratio. There is a feeling that 200 likes of 50 dislikes are not the same as 1200 likes and 1050 dislikes.

Score = (Positive ratings) / (Total ratings)

You can divide the number of likes by the number of dislikes, but in this case we do not take into account the number of ratings and a product with one rating of 5 points will be ranked higher than a very popular product with an average rating of 4.8.

How not to sort by the average rating and consider the number of ratings? Calculate the confidence interval: "Based on the available estimates, is the probability of a 95% probability of the true share of positive ratings at least what?" The answer to this question was given by Edwin Wilson in 1927.

Compatibility

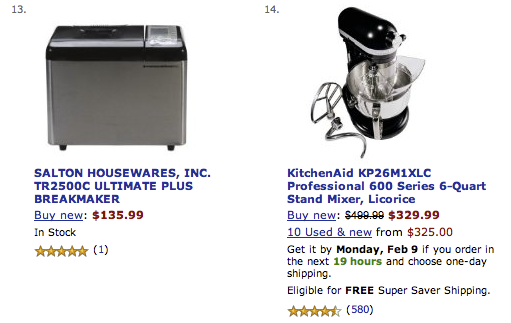

The selection of frequently encountered product sets includes a whole group of pattern mining tasks: periodic pattern mining , sequential rule mining , sequential pattern mining , high-utility itemset mining , frequent itemset mining (basket analysis) . Each specific task will have its own methods , but if roughly generalized, algorithms for finding frequent sets perform an abbreviated breadth-first search, trying not to sort out obviously bad options.

Rare sets are cut off at the given boundary support - the number or frequency of occurrence of the set in the data.

After highlighting the frequent itemset, the quality of their dependence is evaluated using the Lift or Confindence (a, b) / Confidence (! A, b) metrics. They are aimed at removing false dependencies.

For example, bananas can often be found in the grocery basket along with canned goods. But the point is not in some special connection, but in the fact that bananas are popular by themselves, and this should be taken into account when searching for matches.

Personalized recommendations

Content based

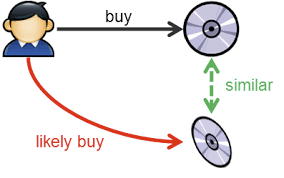

The idea of a content-based approach is to create for him a vector of his preferences in the space of objects based on the history of user actions and recommend products close to this vector.

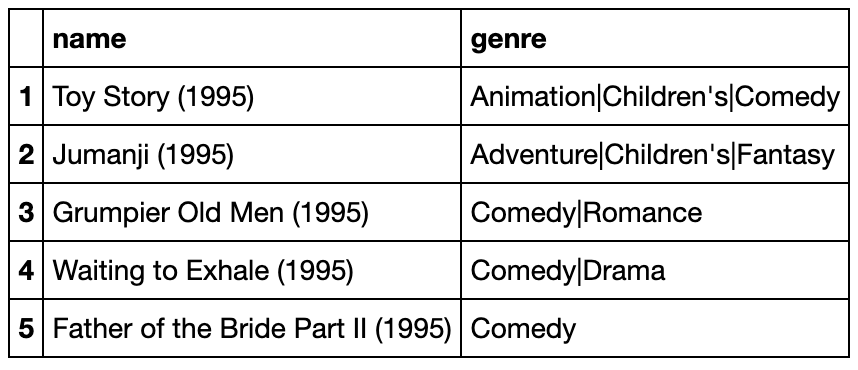

That is, the item should have some characteristic description. For example, these may be genres of films. The history of likes and dislikes of films forms a vector of preference,

highlighting some genres and avoiding others. By comparing the user vector and the item vector, you can make a ranking and get recommendations.

Collaborative filtering

Collaborative filtering assumes a user-item assessment matrix. The idea is to find the most similar “neighbors” for each user and fill in the gaps of a specific user by weighting averaging the ratings of “neighbors”.

Similarly, you can look at the similarity of items, believing that similar items are liked by similar people. Technically, this will simply be a consideration of the transposed matrix of estimates.

Users use the rating scale differently - someone never puts it above eight, and someone uses the entire scale. This is useful to take into account, and therefore it is possible to predict not the rating itself, but a deviation from the average rating.

Or you can normalize the estimates in advance.

Matrix Factorization

From mathematics, we know that any matrix can be decomposed into the product of three matrices. But the matrix of ratings is very sparse, 99% is commonplace. And SVD does not know what gaps are. Filling them with an average value is not very desirable. And in general, we are not very interested in the matrix of singular values - we just want to get a hidden view of users and objects, which, when multiplied, will approximate the true rating. You can immediately decompose into two matrices.

What to do with passes? Hammer on them. It turned out that you can train to approximate ratings by RMSE metric using SGD or ALS, ignoring omissions altogether. The first such algorithm is Funk SVD , which was invented in 2006 in the course of solving the competition from Netflix.

Netflix prize

Netflix Prize - a significant event that gave a strong impetus to the development of recommendation systems. The goal of the competition is to overtake the existing Cinematch RMSE recommendation system by 10%. To this end, a large dataset containing 100 million ratings was provided at that time. The task may not seem so difficult, but in order to achieve the required quality it was required to rediscover the competition two times - a solution was received only for 3 years of the competition. If it were necessary to obtain an improvement of 15%, perhaps this would not have been possible to achieve on the data provided.

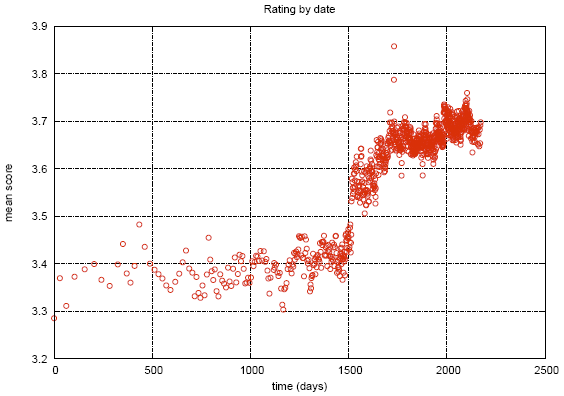

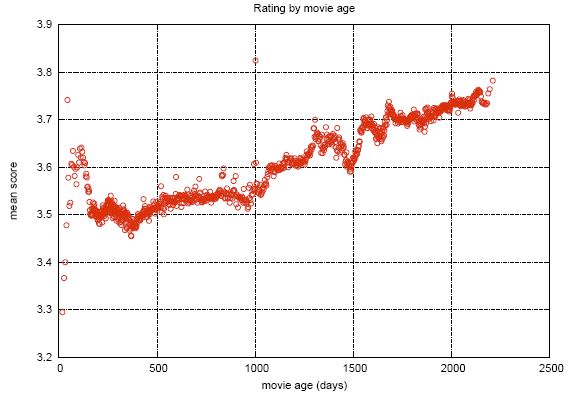

During the competition, some interesting features were found in the data . The graph shows the average rating of films depending on the date they appeared in the Netflix catalog. The apparent gap is associated with the fact that at this time Netflix switched from an objective scale (a bad movie, a good movie) to a subjective one (I didn’t like it, I really liked it). People are less critical when expressing their assessment, rather than characterizing an object.

This graph shows how the average movie rating changes after release. It can be seen that over 2000 days the score rises by 0.2. That is, after the film has ceased to be new, those who are pretty confident that he will like the film, which increases the rating, begin to watch it.

The first intermediate prize was taken by a team of specialists from AT&T - Korbell. After 2000 hours of work and compiling an ensemble of 107 algorithms, they managed to get an improvement of 8.43%.

Among the models was a variation of SVD and RBM, which in themselves provided most of the input. The remaining 105 algorithms improved only one hundredth of the metric. Netflix adapted these two algorithms for its data volumes and still uses them as part of the system.

In the second year of the competition, the two teams merged and now the prize was taken by Bellkor in BigChaos. They attacked a total of 207 algorithms and improved accuracy by another one-hundredth, reaching a value of 0.8616. The required quality is still not achieved, but it is already clear that next year everything should work out.

Third year. Combining with yet another team, renaming Bellkor's Pragmatic Chaos and achieving the required quality, slightly inferior to The Ensemble. But this is only the public part of the dataset.

On the hidden side, it turned out that the accuracy of these teams coincides to the fourth decimal place, so the winner was determined by a difference of commits of 20 minutes.

Netflix paid the promised million to the winners, but never used the resulting solution . Implementing the ensemble turned out to be too expensive, and there is not much benefit from it - after all, only two algorithms already provide most of the increase in accuracy. And most importantly - at the time of the end of the competition in 2009, Netflix had already started to engage in its streaming service in addition to renting a DVD for two years. They had many other tasks and data that they could use in their system. However, their DVD mail rental service still serves 2.7 million happy subscribers .

Neural networks

In modern recommendation systems, a frequent question is how to take into account various explicit and implicit sources of information. Often there is additional data about the user or item and you want to use them. Neural networks are a good way of accounting for such information.

On the issue of using networks for recommendations, you should pay attention to the review of Deep Learning based Recommender System: A Survey and New Perspectives . It describes examples of using a large number of architectures for various tasks.

There are a lot of architectures and approaches. One of the repeated names is DSSM . I would also like to mention Attentive Collaborative Filtering .

ACF proposes to introduce two levels of attenuation:

- Даже при одинаковых рейтингах одни айтемы вносят больший вклад в ваши предпочтения, чем другие.

- Айтемы не атомарны, а состоят из компонентов. Некоторые оказывают большее влияние на оценку, чем другие. Фильм может быть интересен только за счет наличия любимого актера.

Multi-armed bandits are one of the most popular topics of recent times. What is multi-armed bandits can be read in an article on Habré or on the Medium .

When applied to recommendations, the Contextual-Bandit task will sound something like this: “We feed the user and item context vectors to the system input, we want to maximize the likelihood of interaction (clicks, purchases) for all users over time, making frequent updates to the recommendation policy.” This formulation naturally solves the problem of exploration vs exploitation and allows you to quickly roll out optimal strategies for all users.

In the wake of the popularity of transformer architecture, there are also attempts to use them in recommendations. Next Item Recommendation with Self-Attention attempts to combine long-term and recent user preferences to improve recommendations.

Instruments

Recommendations are not such a popular topic as CV or NLP, therefore, to use the latest grid architectures, you will either have to implement them yourself, or hope that the author's implementation is quite convenient and understandable. However, some basic tools are still there:

Conclusion

The recommender systems have gone far from the standard statement about filling in the matrix of assessments, and each specific area will have its own nuances. This introduces difficulties, but also adds interest. In addition, it can be difficult to separate the recommendation system from the product as a whole. Indeed, not only the list of items is important, but also the method and context of the submission. What, how, to whom and when to recommend. All this determines the impression of interaction with the service.