Selenium WebDriver - Real-time test metric using Grafana and InfluxDB

- Translation

Hello! Classes for the Java QA Engineer group start next week, and this publication is timed to coincide with them.

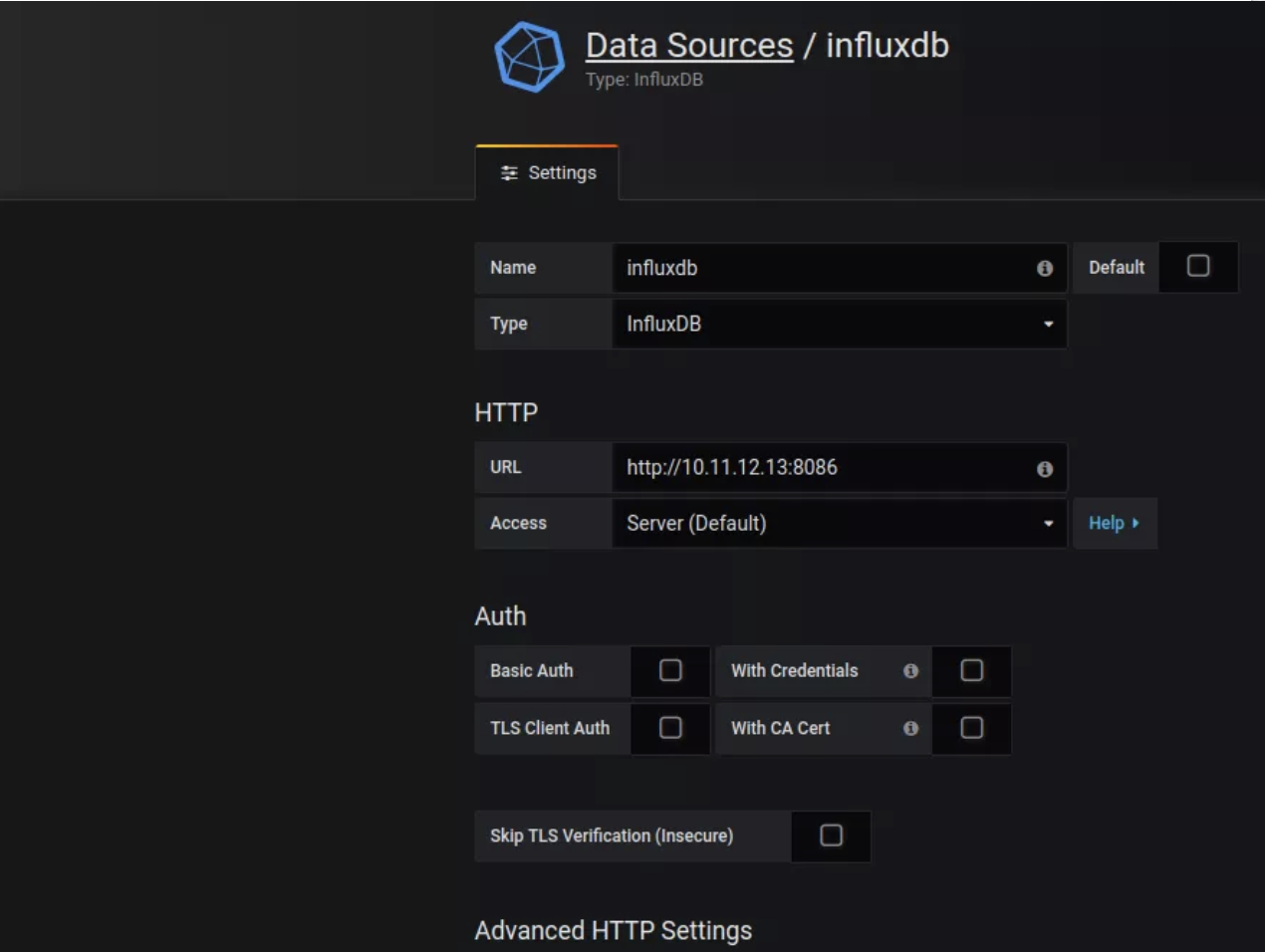

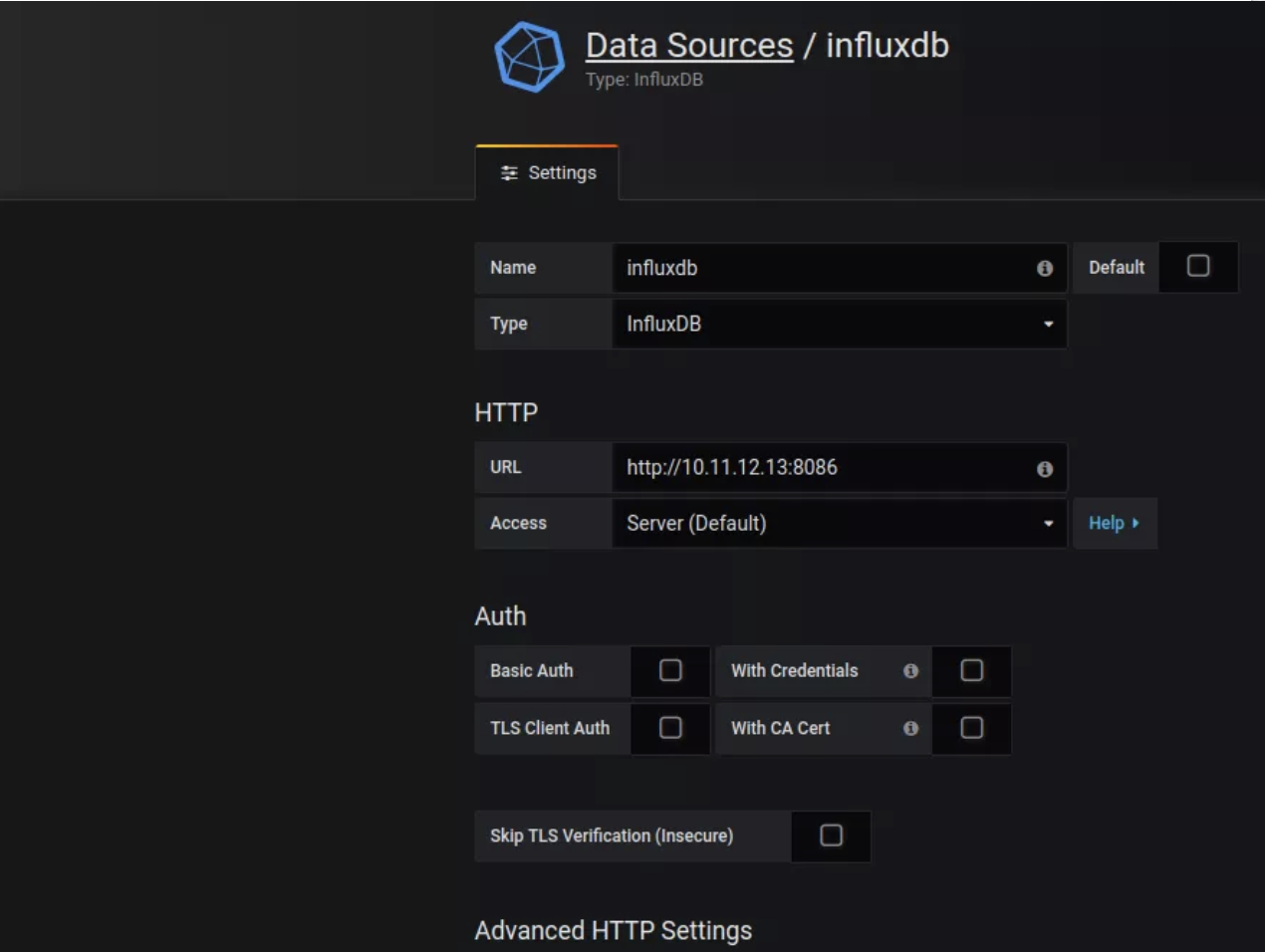

Overview:

Running automated regression tests every day as part of the daily build is unavoidable these days. It is good to find and report bugs soon after they are introduced. At the same time, running hundreds of automated tests remotely and in parallel brings its own problems. Once you have a regression suite, you can split it into several suites by business module and run them in parallel as part of the daily regression run. Most of these suites run on a remote virtual machine, and the results become available only after the run has finished. Until then, the only option is to look at the Jenkins console and read the execution logs. This is sometimes annoying. It would be great to have an application that shows the results while the tests are still running.

Let's see how you can create a simple page with test results and a dashboard using InfluxDB and Grafana.

Goal:

We will collect all available test metrics, such as:

- Test Method Status

- Test Method Duration

- Test class status

- Test class duration

- Test suite status

- Test Run Duration

From these we can derive some indicators in real time, for example:

- The number of test methods grouped by status (for example: Passed: 30, Failed: 2, Skipped: 2) on a particular day.

- The trend in test suite duration over a week, month, year, etc.
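To make the first indicator concrete, here is a minimal Java sketch of the aggregation involved: counting test methods per status. The `StatusSummary` class and the sample data are hypothetical and exist only for illustration; in the setup described in this article the counting is done by a query against InfluxDB, not by Java code.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;
import java.util.stream.Collectors;

// Hypothetical helper, for illustration only: counts test methods per status.
final class StatusSummary {

    // Groups statuses such as "PASS", "FAIL", "SKIPPED" and counts each group.
    // A TreeMap keeps the statuses in alphabetical order.
    static Map<String, Long> countByStatus(List<String> statuses) {
        return statuses.stream()
                .collect(Collectors.groupingBy(Function.identity(), TreeMap::new, Collectors.counting()));
    }

    public static void main(String[] args) {
        List<String> statuses = List.of("PASS", "PASS", "FAIL", "SKIPPED", "PASS");
        // prints {FAIL=1, PASS=3, SKIPPED=1}
        System.out.println(countByStatus(statuses));
    }
}
```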

InfluxDB:

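InfluxDB is a time series database; we use it to store all test metrics. It has a REST API for writing data and running queries; see the InfluxDB documentation for details. Below I use a docker command to start an instance of InfluxDB. The commands in this article use the InfluxDB 1.x API, so if the unpinned influxdb image resolves to a newer major version on your machine, a 1.x tag such as influxdb:1.8 is the safer choice.

    sudo docker run -p 8086:8086 -v $PWD:/var/lib/influxdb influxdb

Database creation: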

InfluxDB is now up and running. Let's create a separate database for the Selenium test results. The command below, run in a terminal, creates a database named "selenium". (Check the URL: replace localhost with the hostname or IP address if InfluxDB is not running on the current machine.)

    curl -i -XPOST http://localhost:8086/query --data-urlencode "q=CREATE DATABASE selenium"

TestNG:

Let's create a simple TestNG test:

    import org.testng.annotations.Test;

    // Each step depends on the previous one, so the methods run in order.
    public class SampleTest {

        @Test(description = "login")
        public void login() {
        }

        @Test(description = "search for flights", dependsOnMethods = "login")
        public void search() {
        }

        @Test(description = "select flight", dependsOnMethods = "search")
        public void select() {
        }

        @Test(description = "book flight", dependsOnMethods = "select")
        public void book() {
        }

        @Test(description = "logout", dependsOnMethods = "book")
        public void logout() {
        }
    }

Our goal is to collect test results in InfluxDB at runtime, so we need a Java client library for InfluxDB.

Maven Dependencies:

Add the Maven dependency shown below:

    <dependency>
        <groupId>org.influxdb</groupId>
        <artifactId>influxdb-java</artifactId>
        <version>2.12</version>
    </dependency>

Listeners:

TestNG listeners are a good way to listen for test events and react to them. First, let's create a simple class that is responsible for sending the results to InfluxDB.

    import org.influxdb.InfluxDB;
    import org.influxdb.InfluxDBFactory;
    import org.influxdb.dto.Point;

    public class ResultSender {

        // Adjust the URL and credentials to match your InfluxDB instance.
        private static final InfluxDB INFLUXDB = InfluxDBFactory.connect("http://localhost:8086", "root", "root");

        private static final String DATABASE = "selenium";

        static {
            // Every point written through this client goes to the "selenium" database.
            INFLUXDB.setDatabase(DATABASE);
        }

        public static void send(final Point point) {
            INFLUXDB.write(point);
        }
    }

Now create another class that implements the ITestListener interface.

    import org.influxdb.dto.Point;
    import org.testng.ITestContext;
    import org.testng.ITestListener;
    import org.testng.ITestResult;

    import java.util.concurrent.TimeUnit;

    public class ExecutionListener implements ITestListener {

        @Override
        public void onTestStart(ITestResult iTestResult) {
            // nothing to report when a test starts
        }

        @Override
        public void onTestSuccess(ITestResult iTestResult) {
            this.sendTestMethodStatus(iTestResult, "PASS");
        }

        @Override
        public void onTestFailure(ITestResult iTestResult) {
            this.sendTestMethodStatus(iTestResult, "FAIL");
        }

        @Override
        public void onTestSkipped(ITestResult iTestResult) {
            this.sendTestMethodStatus(iTestResult, "SKIPPED");
        }

        @Override
        public void onTestFailedButWithinSuccessPercentage(ITestResult iTestResult) {
            // not reported in this example
        }

        @Override
        public void onStart(ITestContext iTestContext) {
            // nothing to report when the run starts
        }

        @Override
        public void onFinish(ITestContext iTestContext) {
            this.sendTestClassStatus(iTestContext);
        }

        // Sends one point per finished test method: the tags identify the test,
        // the field holds its duration in milliseconds.
        private void sendTestMethodStatus(ITestResult iTestResult, String status) {
            // Fall back to an empty string so a missing description never reaches the builder as null.
            String description = iTestResult.getMethod().getDescription();
            Point point = Point.measurement("testmethod")
                    .time(System.currentTimeMillis(), TimeUnit.MILLISECONDS)
                    .tag("testclass", iTestResult.getTestClass().getName())
                    .tag("name", iTestResult.getName())
                    .tag("description", description == null ? "" : description)
                    .tag("result", status)
                    .addField("duration", (iTestResult.getEndMillis() - iTestResult.getStartMillis()))
                    .build();
            ResultSender.send(point);
        }

        // Sends one point when the run finishes; the class name is taken from the
        // first test method, so the context must contain at least one method.
        private void sendTestClassStatus(ITestContext iTestContext) {
            Point point = Point.measurement("testclass")
                    .time(System.currentTimeMillis(), TimeUnit.MILLISECONDS)
                    .tag("name", iTestContext.getAllTestMethods()[0].getTestClass().getName())
                    .addField("duration", (iTestContext.getEndDate().getTime() - iTestContext.getStartDate().getTime()))
                    .build();
            ResultSender.send(point);
        }
    }

Note: In the example above you can add any extra tags that help you classify the results, for example tag("scenario", "login flow").

The listener in the example above monitors test execution. As soon as a test method or test class finishes, it sends the name, the duration and a few additional details to InfluxDB. My goal here is only to give you the idea; change the code to suit your needs.
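To make concrete what such a point looks like when it reaches InfluxDB, here is a sketch of the InfluxDB 1.x line protocol for a "testmethod" point. It is an illustration, not the client library's own code: the `LineProtocolSketch` class, the sample values and the timestamp are hypothetical, the measurement name is assumed to need no escaping, and the millisecond timestamp assumes the write request uses millisecond precision.

```java
import java.util.Map;
import java.util.TreeMap;

// Illustrative sketch of the InfluxDB 1.x line protocol; not the client library's own code.
final class LineProtocolSketch {

    // Escapes commas, equals signs and spaces, as required in tag keys and tag values.
    static String escapeTag(String raw) {
        return raw.replace(",", "\\,").replace("=", "\\=").replace(" ", "\\ ");
    }

    // Builds one line: measurement,tag=value,... duration=<n>i <timestamp>
    static String toLine(String measurement, Map<String, String> tags,
                         long durationMillis, long timestampMillis) {
        StringBuilder line = new StringBuilder(measurement);
        // Tags are written sorted by key.
        for (Map.Entry<String, String> tag : new TreeMap<>(tags).entrySet()) {
            line.append(',').append(escapeTag(tag.getKey()))
                .append('=').append(escapeTag(tag.getValue()));
        }
        // The trailing "i" marks the field value as an integer.
        line.append(" duration=").append(durationMillis).append('i');
        line.append(' ').append(timestampMillis);
        return line.toString();
    }

    public static void main(String[] args) {
        Map<String, String> tags = Map.of(
                "name", "search",
                "description", "search for flights",
                "result", "PASS");
        System.out.println(toLine("testmethod", tags, 1200L, 1546300800000L));
    }
}
```

Tags are indexed and always stored as strings, which is why the status and names go into tags while the numeric duration goes into a field.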

Now register the listener, either in the TestNG suite XML file or on the TestNG base class.
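In a TestNG suite file, listeners are registered through a `<listeners>` element. A minimal sketch, in which the suite name, the test name and the `com.example` package are placeholders rather than values from this article:

```xml
<!DOCTYPE suite SYSTEM "https://testng.org/testng-1.0.dtd">
<suite name="regression">
    <!-- Registers the listener for every test in this suite -->
    <listeners>
        <listener class-name="com.example.ExecutionListener"/>
    </listeners>
    <test name="sample">
        <classes>
            <class name="com.example.SampleTest"/>
        </classes>
    </test>
</suite>
```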

Or:

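    import org.testng.annotations.Listeners;
    import org.testng.annotations.Test;

    // Attach the listener through the annotation instead of the suite file.
    @Listeners(ExecutionListener.class)
    public class SampleTest {

        @Test
        public void test() {
        }
    }

Grafana: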

We have managed to send the results to InfluxDB. But how do we query them and visualize the data? For this we will use another free tool, Grafana.

Grafana is an excellent visualization tool for time series data, and it works well with InfluxDB. The docker command below creates a Grafana instance. (The piechart plugin in the command is optional; remove that line if you do not need it.)

    docker run -d -p 3000:3000 \
      --name=grafana \
      -e "GF_INSTALL_PLUGINS=grafana-piechart-panel" \
      -v $PWD:/var/lib/grafana \
      grafana/grafana

Data Source for Grafana:

Go to Settings -> Data sources -> Add new data source, as shown in the screenshot. Click the 'Save & Test' button to make sure Grafana can communicate with InfluxDB.

Note: If Grafana runs in Docker and the data source uses the 'Server (default)' access mode, DO NOT use localhost in the InfluxDB URL. Inside the container, localhost refers to the Grafana container itself, not to the host machine, so Grafana will not be able to find InfluxDB. Use the host's name or IP address instead.

Creating a dashboard:

Please watch the video for this step, because it is hard to explain all the nuances in text. That is why I recorded a separate video.
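As a text-only starting point, the two indicators from the Goal section map to InfluxQL queries along the following lines. This is a sketch that assumes the "testmethod" and "testclass" measurements and the "result" tag written by the listener above. The `DashboardQueries` class is hypothetical and only builds query strings; in a Grafana panel, the `$timeFilter` placeholder would normally replace the fixed time range used here.

```java
// Hypothetical helper that only builds InfluxQL strings; it does not talk to InfluxDB.
final class DashboardQueries {

    // Number of test methods per status within the lookback window, e.g. "1d".
    static String countByStatus(String lookback) {
        return "SELECT count(\"duration\") FROM \"testmethod\""
                + " WHERE time > now() - " + lookback
                + " GROUP BY \"result\"";
    }

    // Average run duration per time bucket, e.g. daily buckets over the last 30 days.
    static String meanDurationTrend(String lookback, String bucket) {
        return "SELECT mean(\"duration\") FROM \"testclass\""
                + " WHERE time > now() - " + lookback
                + " GROUP BY time(" + bucket + ")";
    }

    public static void main(String[] args) {
        System.out.println(countByStatus("1d"));
        System.out.println(meanDurationTrend("30d", "1d"));
    }
}
```

The first query suits a pie chart or a stat panel, the second a time series graph.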

Summary:

I hope you found getting real-time results with InfluxDB and Grafana interesting and useful. It requires minimal changes to an existing framework, because everything is done through a TestNG listener. To disable the feature, it is enough to remove the listener from the suite file or the base class. This approach spares the team the frustration of following test results only through console output on a remote machine. This article only presents the basic idea. You can improve on it by recording more information, for example the test environment, and by adding filters that narrow the charts to a specific environment or set of tests.
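As one way to record the test environment mentioned above, the environment name can be read from a JVM system property and attached to every point as an extra tag. A minimal sketch; the property name `test.env`, the fallback value `local` and the `TestEnvironment` class are assumptions made for illustration:

```java
// Hypothetical helper: resolves the environment name used as an extra tag.
final class TestEnvironment {

    static final String PROPERTY = "test.env"; // assumed property name
    static final String DEFAULT = "local";     // assumed fallback value

    // Returns the value passed as -Dtest.env=..., or the fallback when it is unset or blank.
    static String name() {
        String value = System.getProperty(PROPERTY);
        return (value == null || value.trim().isEmpty()) ? DEFAULT : value.trim();
    }

    public static void main(String[] args) {
        System.out.println(name());
    }
}
```

In the listener this would become one more `.tag("environment", TestEnvironment.name())` call on the point builder, and a dashboard could then filter on that tag.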

That is all for this short but useful article. As always, we look forward to your comments. We would also like to remind you that today is the open day for the course, and anyone can sign up for it.