Build tools in machine learning projects, an overview

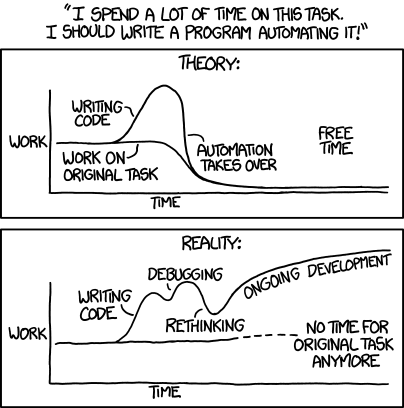

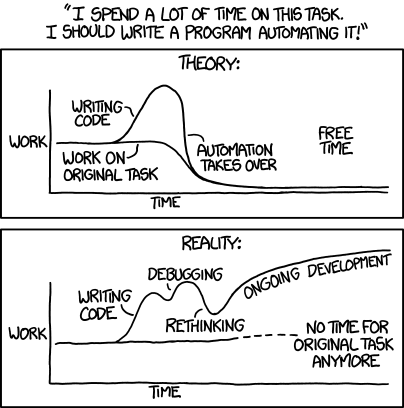

I was wondering about machine learning/data science project structure and workflow, and was reading different opinions on the subject. When people start to talk about workflow, they want their workflows to be reproducible. There are a lot of posts out there that suggest using `make` to keep the workflow reproducible. Although `make` is very stable and widely used, I personally like cross-platform solutions. It is 2019 after all, not 1977. One can argue that `make` itself is cross-platform, but in reality you will run into trouble and spend time fixing your tool rather than doing the actual work. So I decided to have a look around and check out what other tools are available. Yes, I decided to spend some time on tools.

This post is more an invitation to a dialogue than a tutorial. Perhaps your solution is perfect. If it is, I would be interested to hear about it.

In this post I will use a small Python project and do the same automation tasks with different systems: CMake, PyBuilder, pynt, Paver, doit and Luigi.

There is a comparison table at the end of the post.

Most of the tools I will look at are known as build automation software or build systems. There are myriads of them, in all flavours, sizes and complexities. The idea is the same: the developer defines rules for producing some results in an automated and consistent way. For example, a result might be an image with a graph. To make this image, one needs to download the data, clean the data and do some data manipulations (a classic example, really). You may start with a couple of shell scripts that do the job. When you return to the project a year later, it will be difficult to remember all the steps, and their order, needed to make that image. The obvious solution is to document all the steps. Good news! Build systems let you document the steps in the form of a computer program. Some build systems are like your shell scripts, but with additional bells and whistles.

The foundation of this post is a series of posts by Mateusz Bednarski on automated workflow for a machine learning project. Mateusz explains his views and provides recipes for using `make`. I encourage you to go and check out his posts first. I will mostly use his code, but with different build systems.

If you would like to know more about `make`, here are references to a couple of posts. Brooke Kennedy gives a high-level overview in 5 Easy Steps to Make Your Data Science Project Reproducible. Zachary Jones gives more details about the syntax and capabilities, along with links to other posts. David Stevens writes a very enthusiastic post on why you absolutely have to start using `make` right away; he provides nice examples comparing the old way and the new way. Samuel Lampa, on the other hand, writes about why using `make` is a bad idea.

My selection of build systems is neither comprehensive nor unbiased. If you want to make your own list, Wikipedia might be a good starting point. As stated above, I will cover CMake, PyBuilder, pynt, Paver, doit and Luigi. Most of the tools in this list are Python-based, which makes sense since the project is in Python. This post will not cover how to install the tools; I assume that you are fairly proficient in Python.

I am mostly interested in testing this functionality:

- Specifying a couple of targets with dependencies. I want to see how to do it and how easy it is.

- Checking whether incremental builds are possible. This means that the build system won't rebuild what has not changed since the last run, i.e. you do not need to redownload your raw data. Another thing I will look for is incremental builds when a dependency changes. Imagine we have a graph of dependencies `A -> B -> C`. Will target `C` be rebuilt if `B` changes? If `A` changes?

- Checking whether a rebuild will be triggered if the source code is changed, i.e. if we change a parameter of the generated graph, the image must be rebuilt the next time we build.

- Checking out the ways to clean build artifacts, i.e. removing files that have been created during the build and rolling back to the clean source code.
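To make these criteria concrete, here is a minimal sketch of the timestamp rule that Make-style tools apply. It is a toy, not any of the tools reviewed here: `Rule` and `MiniBuild` are hypothetical names. Each rule lists its target file, its dependencies (other targets or plain source files such as scripts) and a Python callable that produces the target. A target is rebuilt when it is missing or older than a dependency, and, as an assumption of this sketch, also whenever one of its dependencies was rebuilt in the same run, so a change to `A` propagates through `B` to `C` regardless of file-system timestamp resolution. `clean()` removes exactly the declared targets instead of matching wildcards. There is no cycle detection.

```python
import os
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Rule:
    """One hypothetical build rule: a target file, its inputs and how to make it."""
    target: str
    deps: List[str]  # other targets and/or plain source files (data, scripts)
    action: Callable[[], None]


class MiniBuild:
    """Toy Make-style builder: timestamp checks, dependency propagation, clean."""

    def __init__(self) -> None:
        self.rules: Dict[str, Rule] = {}
        self.executed: List[str] = []  # targets whose action ran, in order

    def add(self, target: str, deps: List[str], action: Callable[[], None]) -> None:
        self.rules[target] = Rule(target, deps, action)

    @staticmethod
    def out_of_date(rule: Rule) -> bool:
        # Stale if the target is missing or any dependency is newer than it.
        if not os.path.exists(rule.target):
            return True
        target_mtime = os.path.getmtime(rule.target)
        return any(os.path.getmtime(dep) > target_mtime for dep in rule.deps)

    def build(self, target: str) -> bool:
        """Bring `target` up to date; return True if its action ran."""
        rule = self.rules.get(target)
        if rule is None:
            # Not produced by any rule: a source file that must already exist.
            if not os.path.exists(target):
                raise FileNotFoundError(target)
            return False
        # A list (not a generator) so that every dependency gets built.
        rebuilt = [self.build(dep) for dep in rule.deps]
        if any(rebuilt) or self.out_of_date(rule):
            rule.action()
            self.executed.append(target)
            return True
        return False

    def clean(self) -> None:
        # Remove exactly the declared targets; source files are never touched.
        for target in self.rules:
            if os.path.exists(target):
                os.remove(target)
```

For the pipeline in this post, the rules would be the raw CSV, the pickle (depending on the CSV and on `src/data/preprocess.py`) and the figure, so editing the preprocessing script would rebuild the pickle and then the figure.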

I will not use all build targets from Mateusz's post, just three of them to illustrate the principles.

All the code is available on GitHub.

CMake

CMake is a build script generator, which generates input files for various build systems. Its name stands for cross-platform make. CMake is a software engineering tool whose primary concern is building executables and libraries, so CMake knows how to build targets from source code in supported languages. CMake is executed in two steps: configuration and generation. During configuration, it is possible to configure the future build according to one's needs; for example, user-provided variables are given during this step. Generation is normally straightforward and produces the file(s) that build systems can work with. With CMake you can still use `make`, but instead of writing a makefile directly, you write a CMake file, which generates the makefile for you.

Another important concept is that CMake encourages out-of-source builds. Out-of-source builds keep the source code away from any artifacts it produces. This makes a lot of sense for executables, where a single source codebase may be compiled for different CPU architectures and operating systems. This approach, however, may contradict the way a lot of data scientists work. It seems to me that the data science community tends to have high coupling of data, code and results.

Let's see what we need to achieve our goals with CMake. There are two ways to define custom steps in CMake: custom targets and custom commands. Unfortunately, we will need to use both, which results in more typing compared to a vanilla makefile. A custom target is always considered out of date, i.e. if there is a target for downloading raw data, CMake will always redownload it. Combining a custom command with a custom target allows us to keep targets up to date.

For our project we will create a file named CMakeLists.txt and put it in the project’s root. Let’s check out the content:

```cmake
cmake_minimum_required(VERSION 3.14.0 FATAL_ERROR)
project(Cmake_in_ml VERSION 0.1.0 LANGUAGES NONE)
```

This part is basic. The second line defines the name of your project and its version, and specifies that we won't use any built-in language support (since we will call Python scripts).

Our first target will download the IRIS dataset:

```cmake
SET(IRIS_URL "https://archive.ics.uci.edu/ml/machine-learning-databases/iris/iris.data" CACHE STRING "URL to the IRIS data")
set(IRIS_DIR ${CMAKE_CURRENT_SOURCE_DIR}/data/raw)
set(IRIS_FILE ${IRIS_DIR}/iris.csv)

ADD_CUSTOM_COMMAND(OUTPUT ${IRIS_FILE}
    COMMAND ${CMAKE_COMMAND} -E echo "Downloading IRIS."
    COMMAND python src/data/download.py ${IRIS_URL} ${IRIS_FILE}
    COMMAND ${CMAKE_COMMAND} -E echo "Done. Checkout ${IRIS_FILE}."
    WORKING_DIRECTORY ${CMAKE_CURRENT_SOURCE_DIR}
)

ADD_CUSTOM_TARGET(rawdata ALL DEPENDS ${IRIS_FILE})
```

The first line defines the parameter `IRIS_URL`, which is exposed to the user during the configuration step. If you use the CMake GUI, you can set this variable through the GUI.

Next, we define variables with the download location of the IRIS dataset. Then we add a custom command, which produces `IRIS_FILE` as its output. Finally, we define a custom target `rawdata` that depends on `IRIS_FILE`, meaning that in order to build `rawdata`, `IRIS_FILE` must be built. The `ALL` option of the custom target says that `rawdata` will be one of the default targets to build. Note that I use `CMAKE_CURRENT_SOURCE_DIR` in order to keep the downloaded data in the source folder and not in the build folder. This is just to keep things the same as in Mateusz's setup.

Alright, let's see how we can use it. I am currently running it on Windows with the MinGW compiler installed. You may need to adjust the generator setting for your needs (run `cmake --help` to see the list of available generators). Fire up the terminal, go to the parent folder of the source code, then:

```
mkdir overcome-the-chaos-build
cd overcome-the-chaos-build
cmake -G "MinGW Makefiles" ../overcome-the-chaos
```

Outcome:

```
-- Configuring done
-- Generating done
-- Build files have been written to: C:/home/workspace/overcome-the-chaos-build
```

With modern CMake we can build the project directly from CMake. This command will invoke the build all command:

```
cmake --build .
```

Outcome:

```
Scanning dependencies of target rawdata
[100%] Built target rawdata
```

We can also view the list of available targets:

```
cmake --build . --target help
```

And we can remove downloaded file by:

```
cmake --build . --target clean
```

Note that we didn't need to create the `clean` target manually.

Now let's move to the next target: preprocessed IRIS data. Mateusz creates two files from a single function: `processed.pickle` and `processed.xlsx`. You can see how he gets away with cleaning up this Excel file by using `rm` with a wildcard. I think this is not a very good approach. In CMake, we have two options for dealing with it. The first option is to use the `ADDITIONAL_MAKE_CLEAN_FILES` directory property. The code will be:

```cmake
SET(PROCESSED_FILE ${CMAKE_CURRENT_SOURCE_DIR}/data/processed/processed.pickle)

ADD_CUSTOM_COMMAND(OUTPUT ${PROCESSED_FILE}
    COMMAND python src/data/preprocess.py ${IRIS_FILE} ${PROCESSED_FILE} --excel data/processed/processed.xlsx
    WORKING_DIRECTORY ${CMAKE_CURRENT_SOURCE_DIR}
    DEPENDS rawdata ${IRIS_FILE}
)

ADD_CUSTOM_TARGET(preprocess DEPENDS ${PROCESSED_FILE})

# Additional files to clean
set_property(DIRECTORY PROPERTY ADDITIONAL_MAKE_CLEAN_FILES
    ${CMAKE_CURRENT_SOURCE_DIR}/data/processed/processed.xlsx
)
```

The second option is to specify a list of files as a custom command output:

```cmake
LIST(APPEND PROCESSED_FILE "${CMAKE_CURRENT_SOURCE_DIR}/data/processed/processed.pickle"
    "${CMAKE_CURRENT_SOURCE_DIR}/data/processed/processed.xlsx"
)

ADD_CUSTOM_COMMAND(OUTPUT ${PROCESSED_FILE}
    COMMAND python src/data/preprocess.py ${IRIS_FILE} data/processed/processed.pickle --excel data/processed/processed.xlsx
    WORKING_DIRECTORY ${CMAKE_CURRENT_SOURCE_DIR}
    DEPENDS rawdata ${IRIS_FILE} src/data/preprocess.py
)

ADD_CUSTOM_TARGET(preprocess DEPENDS ${PROCESSED_FILE})
```

Note that in this case I created the list but didn't use it inside the custom command. I do not know of a way to reference the output arguments of a custom command inside the command itself.

Another interesting thing to note is the usage of `DEPENDS` in this custom command. We set a dependency not only on the custom target, but also on its output and on the Python script. If we do not add the dependency on `IRIS_FILE`, then modifying `iris.csv` manually will not trigger a rebuild of the `preprocess` target. Well, you should not modify files in your build directory manually in the first place; just letting you know. More details are in Sam Thursfield's post. The dependency on the Python script is needed to rebuild the target when the script changes.

And finally, the third target:

```cmake
SET(EXPLORATORY_IMG ${CMAKE_CURRENT_SOURCE_DIR}/reports/figures/exploratory.png)

ADD_CUSTOM_COMMAND(OUTPUT ${EXPLORATORY_IMG}
    COMMAND python src/visualization/exploratory.py ${PROCESSED_FILE} ${EXPLORATORY_IMG}
    WORKING_DIRECTORY ${CMAKE_CURRENT_SOURCE_DIR}
    DEPENDS ${PROCESSED_FILE} src/visualization/exploratory.py
)

ADD_CUSTOM_TARGET(exploratory DEPENDS ${EXPLORATORY_IMG})
```

This target is basically the same as the second one.

To wrap up: CMake looks messier and more difficult than Make. Indeed, a lot of people criticize CMake for its syntax. In my experience, understanding comes with time, and it is absolutely possible to make sense of even very complicated CMake files.

You will still do a lot of gluing yourself, as you need to pass the correct variables around. I do not see an easy way of referencing the output of one custom command in another one. It seems possible to do it via custom targets.

PyBuilder

The PyBuilder part is very short. I used Python 3.7 in my project, and the current PyBuilder version, 0.11.17, does not support it. The proposed solution is to use the development version. However, that version is bound to pip v9, while pip is at v19.3 as of the time of writing. Bummer. After fiddling around with it a bit, I could not get it to work at all. The PyBuilder evaluation was a short-lived one.

pynt

Pynt is Python-based, which means we can use Python functions directly. There is no need to wrap them with click and provide a command line interface. However, pynt is also capable of executing shell commands. I will use Python functions.

Build commands are given in a file named `build.py`. Targets/tasks are created with function decorators, and task dependencies are provided through the same decorator.

Since I would like to use Python functions, I need to import them in the build script. Pynt does not add the project directory to the Python module search path, so writing something like this:

```python
from src.data.download import pydownload_file
```

will not work. We have to do:

```python
import os
import sys

sys.path.append(os.path.join(os.path.dirname(__file__), '.'))

from src.data.download import pydownload_file
```
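The `sys.path.append` line works when pynt is started from the project folder. If `__file__` is a bare relative name such as `build.py`, the joined path is just `.`, which Python resolves against the current working directory. A slightly more robust variant, sketched below, resolves the folder to an absolute path and puts it first on the search path. The helper name `add_project_root` is hypothetical, and the sketch assumes `build.py` lives in the project root.

```python
import sys
from pathlib import Path


def add_project_root(build_file: str) -> Path:
    """Hypothetical helper: make the folder that contains build_file importable."""
    root = Path(build_file).resolve().parent  # absolute, so the CWD no longer matters
    if str(root) not in sys.path:
        sys.path.insert(0, str(root))  # searched before any other entry
    return root


# Usage at the top of build.py, before the project imports:
#   add_project_root(__file__)
#   from src.data.download import pydownload_file
```

Inserting at position 0 rather than appending also means a same-named module installed elsewhere cannot shadow the project's `src` package.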

My initial `build.py` file was like this:

```python
#!/usr/bin/python
import os
import sys
sys.path.append(os.path.join(os.path.dirname(__file__), '.'))

from pynt import task
from path import Path
import glob

from src.data.download import pydownload_file
from src.data.preprocess import pypreprocess

iris_file = 'data/raw/iris.csv'
processed_file = 'data/processed/processed.pickle'


@task()
def rawdata():
    '''Download IRIS dataset'''
    pydownload_file('https://archive.ics.uci.edu/ml/machine-learning-databases/iris/iris.data', iris_file)


@task()
def clean():
    '''Clean all build artifacts'''
    patterns = ['data/raw/*.csv', 'data/processed/*.pickle',
                'data/processed/*.xlsx', 'reports/figures/*.png']
    for pat in patterns:
        for fl in glob.glob(pat):
            Path(fl).remove()


@task(rawdata)
def preprocess():
    '''Preprocess IRIS dataset'''
    pypreprocess(iris_file, processed_file, 'data/processed/processed.xlsx')
```

And the `preprocess` target didn't work. It constantly complained about the input arguments of the `pypreprocess` function. It seems that Pynt does not handle optional function arguments very well. I had to remove the argument for making the Excel file. Keep this in mind if your project has functions with optional arguments.

We can run pynt from the project's folder and list all the available targets:

```
pynt -l
```

Outcome:

```
Tasks in build file build.py:
clean Clean all build artifacts
exploratory Make an image with pairwise distribution
preprocess Preprocess IRIS dataset
rawdata Download IRIS dataset

Powered by pynt 0.8.2 - A Lightweight Python Build Tool.
```

Let's make the pairwise distribution:

```
pynt exploratory
```

Outcome:

```
[ build.py - Starting task "rawdata" ]
Downloading from https://archive.ics.uci.edu/ml/machine-learning-databases/iris/iris.data to data/raw/iris.csv
[ build.py - Completed task "rawdata" ]
[ build.py - Starting task "preprocess" ]
Preprocessing data
[ build.py - Completed task "preprocess" ]
[ build.py - Starting task "exploratory" ]
Plotting pairwise distribution...
[ build.py - Completed task "exploratory" ]
```

If we now run the same command again (i.e. `pynt exploratory`), there will be a full rebuild. Pynt does not track that nothing has changed.

Paver

Paver looks almost exactly like Pynt. It differs slightly in the way one defines dependencies between targets (another decorator, `@needs`). Paver makes a full rebuild each time and doesn't play nicely with functions that have optional arguments. Build instructions are found in the file `pavement.py`.

doit

Doit seems like an attempt to create a true build automation tool in Python. It can execute Python code and shell commands, and it looks quite promising. What it seems to miss (in the context of our specific goals) is the ability to handle dependencies between targets. Let's say we want to make a small pipeline where the output of target A is used as the input of target B, and let's say we are using files as outputs, so target A creates a file named `outA`.

In order to make such a pipeline, we need to specify the file `outA` twice in target A (as a result of the target, but also by returning its name as part of the target's execution). Then we need to specify it as an input to target B. So there are three places in total where we need to provide information about the file `outA`. And even after we do so, modifying `outA` won't lead to an automatic rebuild of target B: if we ask doit to build target B, doit only checks whether target B is up to date, without checking any of its dependencies. To overcome this, we need to specify `outA` a fourth time, as a file dependency of target B. I see this as a drawback. Both Make and CMake handle such situations correctly.

Dependencies in doit are file-based and expressed as strings. This means that the dependencies `./myfile.txt` and `myfile.txt` are viewed as different. As I wrote above, I find the way of passing information from target to target (when using Python targets) a bit strange. A target has a list of artifacts it is going to produce, but another target can't use it. Instead, the Python function that constitutes the target must return a dictionary, which can be accessed in another target. Let's see it in an example:

```python
def task_preprocess():
    """Preprocess IRIS dataset"""
    pickle_file = 'data/processed/processed.pickle'
    excel_file = 'data/processed/processed.xlsx'
    return {
        'file_dep': ['src/data/preprocess.py'],
        'targets': [pickle_file, excel_file],
        'actions': [doit_pypreprocess],
        'getargs': {'input_file': ('rawdata', 'filename')},
        'clean': True,
    }
```

Here the target `preprocess` depends on `rawdata`. The dependency is provided via the `getargs` property. It says that the argument `input_file` of the function `doit_pypreprocess` is the output `filename` of the target `rawdata`. Have a look at the complete example in the file `dodo.py`.

It may be worth reading the success stories of using doit. It definitely has nice features, like the ability to provide a custom up-to-date target check.
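To see what `getargs` consumes, here is a sketch of the `rawdata` side of that contract. It assumes a `task_rawdata` whose Python action returns a dictionary with a `filename` key, which is what `('rawdata', 'filename')` in `task_preprocess` points to. The bodies of `download_iris` and `doit_pypreprocess` are hypothetical stand-ins that only print; the real project calls its own download and preprocessing code. In doit, a Python action that returns a dictionary counts as successful, and the dictionary is stored as the task's values, which other tasks read through `getargs`; the values are then passed to the consuming action as keyword arguments.

```python
# Hypothetical excerpt of a dodo.py; doit itself is not imported here.
IRIS_URL = 'https://archive.ics.uci.edu/ml/machine-learning-databases/iris/iris.data'
IRIS_FILE = 'data/raw/iris.csv'


def download_iris(url, filename):
    """Hypothetical stand-in for the project's download function."""
    print(f'Downloading {url} to {filename}')
    # Returning a dict marks the action as successful; doit saves it as this
    # task's values, which other tasks can read through getargs.
    return {'filename': filename}


def doit_pypreprocess(input_file):
    """Hypothetical stand-in: each getargs entry arrives as a keyword argument."""
    print(f'Preprocessing {input_file}')


def task_rawdata():
    """Download IRIS dataset"""
    return {
        'targets': [IRIS_FILE],
        # A (callable, positional-args) tuple is a Python action in doit.
        'actions': [(download_iris, [IRIS_URL, IRIS_FILE])],
        'clean': True,
    }


if __name__ == '__main__':
    # Illustration only: roughly the hand-off that
    # getargs={'input_file': ('rawdata', 'filename')} arranges.
    values = download_iris(IRIS_URL, IRIS_FILE)
    doit_pypreprocess(input_file=values['filename'])
```

The file name now lives in `IRIS_FILE` and in the returned dictionary, which is exactly the duplication the paragraph above complains about.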

Luigi

Luigi stands apart from the other tools, as it is a system for building complex pipelines. It appeared on my radar after a colleague told me that he had tried Make, was never able to use it across Windows and Linux, and moved to Luigi.

Luigi aims at production-ready systems. It comes with a server, which can be used to visualize your tasks or to get a history of task executions. The server is called the central scheduler. A local scheduler is available for debugging purposes.

Luigi also differs from the other systems in how tasks are created. Luigi doesn't act on some predefined file (like `dodo.py`, `pavement.py` or a makefile). Rather, one has to pass a Python module name. So, if we try to use it in the same way as the other tools (placing a file with tasks in the project's root), it won't work. We have to either install our project or modify the environment variable `PYTHONPATH` by adding the path to the project.

What is great about Luigi is the way of specifying dependencies between tasks. Each task is a class. The method `output` tells Luigi where the results of the task will end up; results can be a single element or a list. The method `requires` specifies task dependencies (other tasks, although it is also possible to make a task depend on itself). And that's it. Whatever is specified as output in task A will be passed as input to task B if task B relies on task A.

Luigi doesn't care about file modifications, only about file existence. So it is not possible to trigger rebuilds when the source code changes. Luigi also doesn't have built-in clean functionality.
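Both properties described above, outputs of required tasks arriving as inputs and "complete" meaning "all outputs exist", can be illustrated with a toy scheduler in plain Python. This is not Luigi's implementation: `ToyTask`, `run_pipeline`, `RawData` and `Preprocess` are hypothetical names, outputs are plain paths instead of Luigi targets, and the "preprocessing" is a stand-in transformation. Luigi's default `complete()` similarly checks that every output exists, which is why editing an input or a script does not trigger a rerun.

```python
import os
from typing import List


class ToyTask:
    """Imitates Luigi's requires/output/input/run contract (not Luigi itself)."""

    def requires(self) -> List["ToyTask"]:
        return []

    def output(self) -> str:
        raise NotImplementedError

    def input(self) -> List[str]:
        # Outputs of the required tasks become this task's inputs.
        return [task.output() for task in self.requires()]

    def complete(self) -> bool:
        # Existence only: modification times are never compared.
        return os.path.exists(self.output())

    def run(self) -> None:
        raise NotImplementedError


def run_pipeline(task: ToyTask, log: List[str]) -> None:
    """Run requirements first, then the task, skipping anything already complete."""
    if task.complete():
        return
    for dep in task.requires():
        run_pipeline(dep, log)
    task.run()
    log.append(type(task).__name__)


class RawData(ToyTask):
    def output(self) -> str:
        return "iris.csv"

    def run(self) -> None:
        with open(self.output(), "w") as fh:
            fh.write("5.1,3.5,1.4,0.2,Iris-setosa\n")


class Preprocess(ToyTask):
    def requires(self) -> List[ToyTask]:
        return [RawData()]

    def output(self) -> str:
        return "processed.csv"

    def run(self) -> None:
        (raw_path,) = self.input()
        # Stand-in transformation: upper-case the raw file.
        with open(raw_path) as src, open(self.output(), "w") as dst:
            dst.write(src.read().upper())
```

Once `processed.csv` exists, `run_pipeline(Preprocess(), log)` does nothing, however recently `iris.csv` was touched; deleting the output by hand is the only way to force a rerun, mirroring the missing clean step.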

Luigi tasks for this project are available in the file `luigitasks.py`. I run them from the terminal:

```
luigi --local-scheduler --module luigitasks Exploratory
```

Comparison

The table below summarizes how the different systems perform with respect to our specific goals.

| Tool | Define target with dependency | Incremental builds | Incremental builds if source code is changed | Ability to figure out which artifacts to remove during clean command |
|---|---|---|---|---|
| CMake | yes | yes | yes | yes |
| Pynt | yes | no | no | no |
| Paver | yes | no | no | no |
| doit | Somewhat yes | yes | yes | yes |
| Luigi | yes | no | no | no |