How a cloud gaming platform for B2B and B2C clients works: solutions for a great picture and the fight against the last mile

Cloud gaming is called one of the key technologies to watch right now. Over six years this market is expected to grow tenfold, from $45 million in 2018 to $450 million in 2024. Technology giants have already rushed to claim the niche: Google and Nvidia have launched beta versions of their cloud gaming services, and Microsoft, EA, Ubisoft, Amazon and Verizon are about to enter the scene.

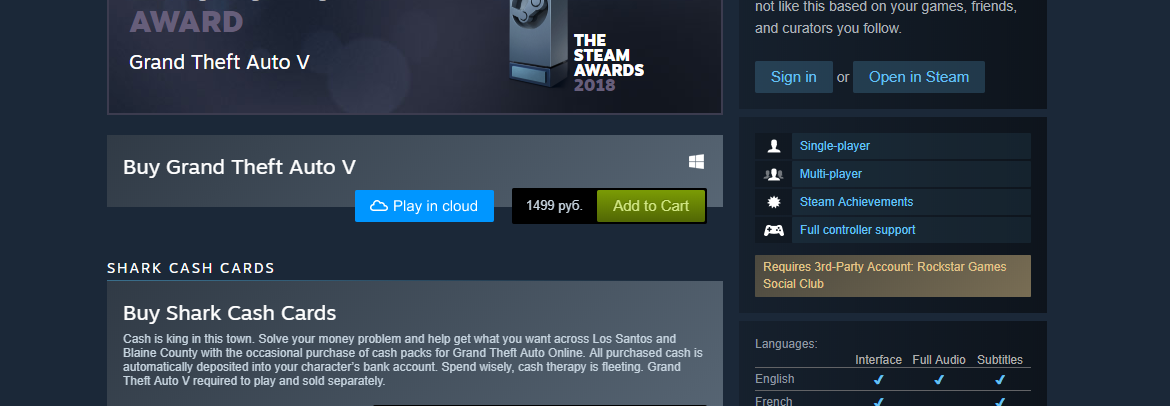

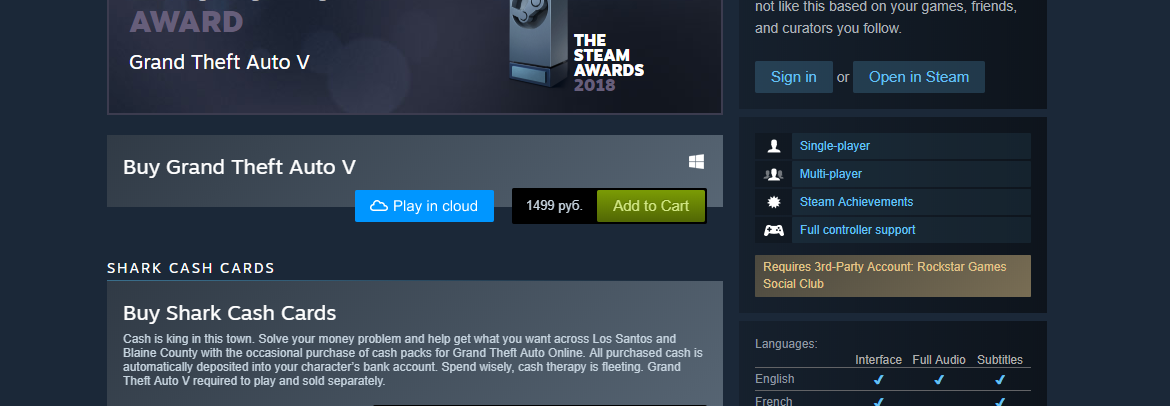

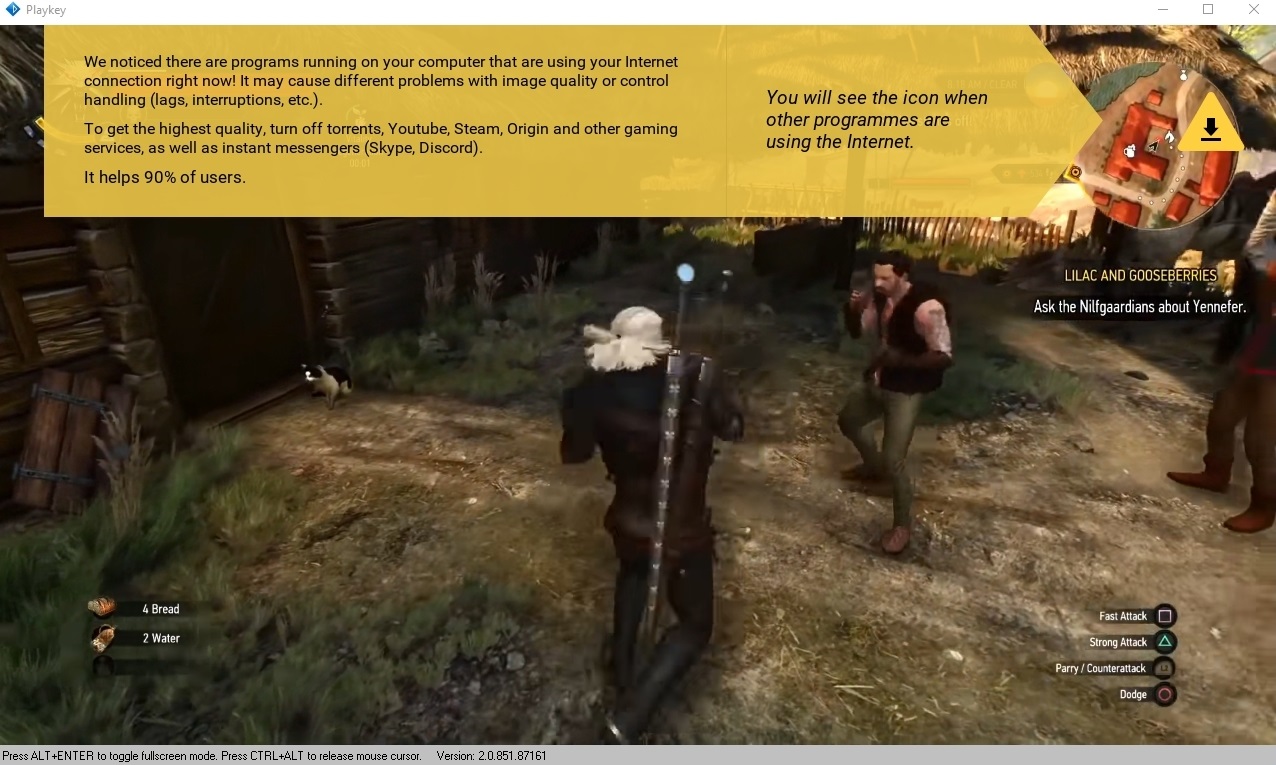

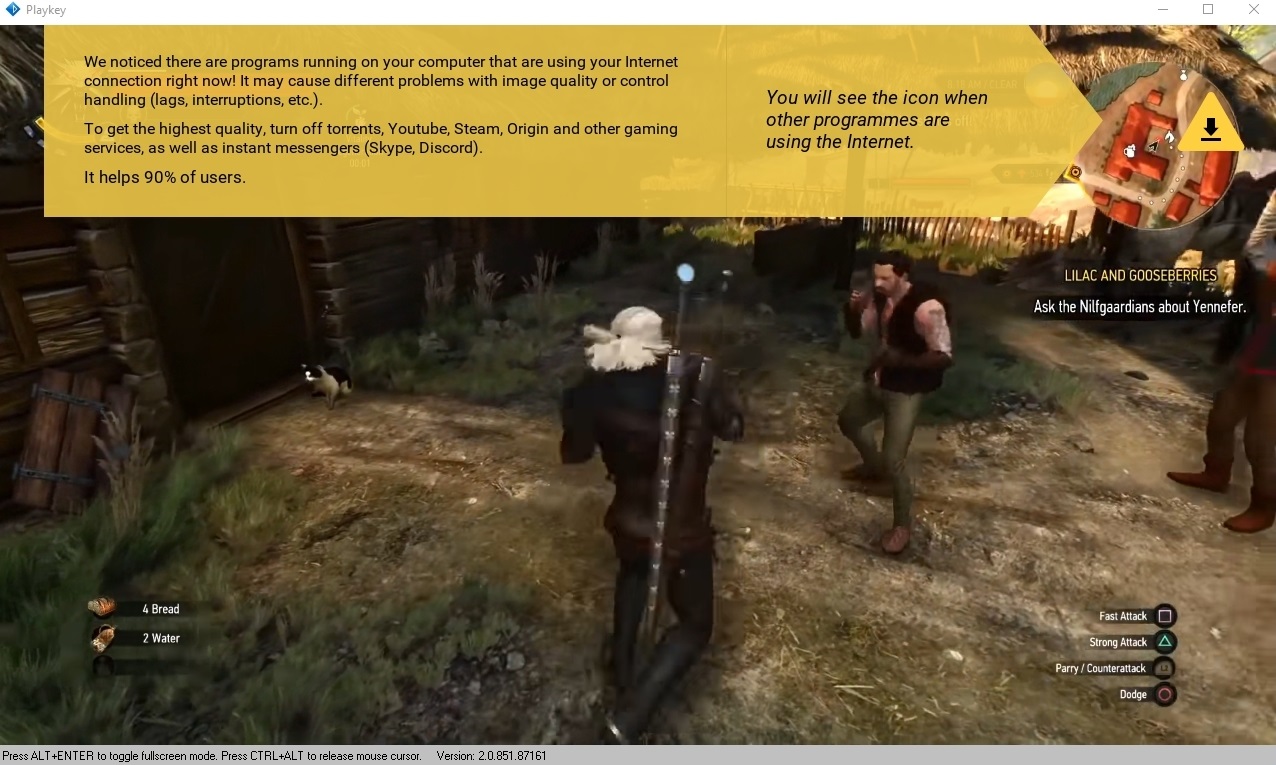

For gamers, this means that very soon they will be able to stop spending money on hardware upgrades altogether and run powerful games on weak computers. Does it benefit the rest of the ecosystem? Below we explain why cloud gaming will increase their earnings and how we built a technology that makes it easy to enter this promising market.

Publishers, developers, TV manufacturers and telecom operators: why they all need cloud gaming

Game publishers and developers want to deliver their product to as many players as possible, as quickly as possible. According to our data, 70% of potential buyers never reach the game: they give up waiting for the client and an installation file of tens of gigabytes to download. At the same time, judging by their video cards, 60% of users simply cannot run powerful (AAA-level) games on their computers at acceptable quality. Cloud gaming can solve this problem: rather than reducing publishers' and developers' earnings, it will help them build a paying audience.

TV and set-top box manufacturers are now looking toward cloud gaming. In the era of smart homes and voice assistants, they have to compete ever harder for user attention, and gaming features are the main way to win it. With built-in cloud gaming, their customers will be able to run modern games directly on the TV and pay the manufacturer for the service.

Telecom operators are another potentially active participant in the ecosystem. They grow revenue by offering additional services, and gaming is one that operators are already actively adopting: Rostelecom launched its “Gaming” tariff, and Akado sells access to our Playkey service. This is not limited to broadband Internet providers. With the spread of 5G, mobile operators will also be able to make cloud gaming an additional source of income.

Despite the bright prospects, entering the market is not that simple. None of the existing services, including the tech giants' products, has yet fully overcome the "last mile" problem: because of imperfect networking inside the house or apartment, the user's Internet speed is not sufficient for cloud gaming to work properly.

Take a look at how the WiFi signal fades as it spreads from the router through an apartment

Companies that have long been on the market and have large resources are gradually moving toward solving this problem. But launching your own cloud gaming service from scratch in 2019 means spending a lot of money and time, with no guarantee of an effective result. To help all ecosystem participants grow in this fast-growing market, we have developed a technology that lets you launch your own cloud gaming service quickly and at low cost.

How we built a technology that makes it easy to launch your own cloud gaming service

Playkey began developing its cloud gaming technology back in 2012. The commercial launch took place in 2014, and by 2016, 2.5 million players had used the service at least once. Throughout development we saw interest not only from gamers but also from set-top box manufacturers and telecom operators; with NetByNet and Er-Telecom we even ran several pilot projects. In 2018 we decided that our product could have a B2B future.

Developing a custom cloud gaming integration for each company, as we did in the pilot projects, is impractical: each such implementation took three to six months. Why? Everyone has different hardware and operating systems: one client needs cloud gaming on an Android set-top box, another needs an iframe in the web interface of the customer's personal account for streaming to computers. On top of that, everyone has their own design, billing (a wonderful world of its own!) and other specifics. It became clear that we had either to grow the development team tenfold or to build a maximally versatile boxed B2B solution.

In March 2019, we launched RemoteClick: software that companies install on their servers to get a working cloud gaming service. What does it look like for the user? They see a button on a familiar site that launches the game in the cloud. When they press it, the game starts on the company's server, and the user receives the stream and plays remotely. Here's what it might look like in popular digital game distribution services.

An active fight for quality. And a passive one too.

Now let's look at how RemoteClick deals with numerous technical barriers. First-wave cloud gaming (OnLive, for example) was ruined by users' poor Internet connections: in 2010 the average Internet connection speed in the USA was only 4.7 Mbit/s. By 2017 it had grown to 18.7 Mbit/s, and soon 5G will be everywhere and a new era will begin. However, although the infrastructure as a whole is now ready for cloud gaming, the “last mile” problem mentioned above remains.

One side of it we call objective: the user really does have network problems. For example, the operator does not deliver the advertised maximum speed. Or the user is on 2.4 GHz WiFi next to a noisy microwave and a wireless mouse.

The other side we call subjective: the user does not even suspect they have network problems (they don't know what they don't know)! In the best case, they are sure that since the operator sells them a 100 Mbps plan, they have 100 Mbps Internet. In the worst case, they have no idea what a router is and believe the Internet comes in two kinds: blue and colorful. A real case from our customer development interviews.

Blue and colorful Internet.

But both parts of the “last mile” problem are solvable. In RemoteClick we use active and passive mechanisms for this. Below we describe in detail how they deal with obstacles.

Active mechanisms

1. Efficient error-correcting coding of transmitted data, aka redundancy (FEC, Forward Error Correction)

Video data sent from the server to the client is protected with error-correcting coding, which lets us restore the original data when part of it is lost due to network problems. What makes our solution effective?

- Speed. Encoding and decoding are very fast: even on “weak” computers, processing 0.5 MB of data takes no more than 1 ms. So encoding and decoding add almost no delay when playing through the cloud. The importance of this is hard to overstate.

- Maximum recovery potential, that is, the ratio between the amount of lost data that can be recovered and the amount of redundant data added. In our case this ratio is 1. Suppose you want to transfer 1 MB of video. If we add 300 KB of extra data during encoding (this is called redundancy), then to recover the original megabyte the decoder needs only any 1 MB out of the total 1.3 MB the server sent. In other words, we can lose 300 KB and still restore the original data: 300/300 = 1, which is the maximum possible efficiency.

- Flexible redundancy settings. We can set a separate redundancy level for each video frame sent over the network; for example, when we notice network problems, we can raise or lower it.
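The "any 1 MB out of 1.3 MB" property is what coding theory calls a maximum distance separable (MDS) erasure code, and Reed-Solomon codes are the classic example. Which code RemoteClick actually uses is not stated, so the following is only a minimal sketch of the property, assuming byte-sized symbols and polynomial interpolation over the prime field GF(257). `encode` and `decode` are hypothetical names, and the `parity` argument stands in for the per-frame redundancy level.

```python
P = 257  # prime modulus: every byte value 0..255 is a valid field element


def _interpolate(points: list[tuple[int, int]], x: int) -> int:
    """Evaluate at x the unique polynomial of degree < len(points) through points, mod P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # Division in GF(P) is multiplication by the inverse den^(P-2) mod P (Fermat).
        total = (total + yi * num * pow(den, P - 2, P)) % P
    return total


def encode(data: bytes, parity: int) -> dict[int, int]:
    """Systematic encoding: symbols 0..k-1 are the data bytes, k..k+parity-1 are redundancy."""
    k = len(data)
    if k + parity > P:
        raise ValueError("too many symbols for GF(257)")
    points = list(enumerate(data))
    symbols = dict(points)
    for x in range(k, k + parity):
        symbols[x] = _interpolate(points, x)
    return symbols


def decode(received: dict[int, int], k: int) -> bytes:
    """Recover the k data bytes from ANY k surviving symbols (the MDS property)."""
    if len(received) < k:
        raise ValueError("more symbols lost than the redundancy can cover")
    points = list(received.items())[:k]
    return bytes(_interpolate(points, x) for x in range(k))


# Scaled-down version of the 1 MB + 300 KB example: 10 data symbols + 3 redundant ones.
frame = b"0123456789"
sent = encode(frame, parity=3)                                        # 13 symbols on the wire
survivors = {x: v for x, v in sent.items() if x not in (2, 7, 11)}  # any 3 are lost
assert decode(survivors, k=len(frame)) == frame                      # 3 lost / 3 added = 1
```

This version is quadratic in the number of symbols and is meant only to show the recovery ratio; production FEC relies on optimized Reed-Solomon or similar codes.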

Playing Doom through Playkey on a Core i3 with 4 GB RAM and an MSI GeForce GTX 750.

2. Data retransmission

An alternative way to deal with losses is to request the data again. For example, if both the server and the user are in Moscow, the transmission delay will not exceed 5 ms. With that delay, the client application has time to request the lost part of the data and receive it from the server without the user noticing. Our system decides on its own when to use redundancy and when to use retransmission.
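The exact decision rule is not described here. The following is a minimal sketch of one plausible policy, assuming the client measures round-trip time and packet loss: retransmission pays off only if a lost packet can be re-requested and received within one frame interval, and only while losses are light. `LinkStats`, `choose_recovery` and the thresholds `rtt_share` and `max_loss` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LinkStats:
    rtt_ms: float     # measured round-trip time between client and server
    loss_rate: float  # fraction of packets lost, 0.0..1.0


def choose_recovery(stats: LinkStats, fps: int = 60,
                    rtt_share: float = 0.5, max_loss: float = 0.05) -> str:
    """Pick "retransmit" or "fec" for lost packets (hypothetical policy).

    Retransmission only helps if the client can notice the gap, ask for the
    packet again and get it before the frame is due, so the round trip must
    fit into a share of one frame interval. Heavy loss argues for FEC, because
    retransmitted packets can be lost too.
    """
    frame_interval_ms = 1000 / fps
    fits_in_frame = stats.rtt_ms <= rtt_share * frame_interval_ms
    if fits_in_frame and stats.loss_rate <= max_loss:
        return "retransmit"
    return "fec"
```

With the Moscow example (a round trip of about 5 ms) and a 60 FPS stream, `choose_recovery(LinkStats(5, 0.01))` picks retransmission, while a 40 ms link falls back to FEC.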

3. Individual settings for data transfer

To choose the best way to deal with losses, our algorithm analyzes the user's network connection and configures data transfer individually for each case.

It looks at:

- type of connection (Ethernet, WiFi, 3G, etc.);

- the WiFi frequency band in use, 2.4 GHz or 5 GHz;

- WiFi signal strength.

If you rank connections by loss and delay, a wired connection is, of course, the most reliable: losses over Ethernet are rare, and “last mile” delays are extremely unlikely. Next comes 5 GHz WiFi, and only then 2.4 GHz WiFi. Mobile connections are generally poor; we are waiting for 5G.
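One way this ranking could seed per-user transfer settings is sketched below: pick a starting FEC redundancy level from the connection type and raise it when the WiFi signal is weak, before any loss statistics exist. The percentages, the -70 dBm threshold and all names are illustrative assumptions, not Playkey's values.

```python
from enum import IntEnum
from typing import Optional


class Link(IntEnum):
    """Connection types ordered from most to least reliable, as ranked above."""
    ETHERNET = 0
    WIFI_5GHZ = 1
    WIFI_2_4GHZ = 2
    MOBILE = 3


# Hypothetical starting redundancy, in percent of frame size, per connection type.
BASE_REDUNDANCY_PCT = {
    Link.ETHERNET: 5,
    Link.WIFI_5GHZ: 10,
    Link.WIFI_2_4GHZ: 20,
    Link.MOBILE: 30,
}
WEAK_SIGNAL_DBM = -70       # hypothetical: RSSI below this counts as a weak WiFi signal
WEAK_SIGNAL_EXTRA_PCT = 10  # hypothetical extra redundancy for a weak signal
MAX_REDUNDANCY_PCT = 50


def initial_redundancy_pct(link: Link, rssi_dbm: Optional[int] = None) -> int:
    """Starting FEC level before any loss statistics have been collected."""
    pct = BASE_REDUNDANCY_PCT[link]
    is_wifi = link in (Link.WIFI_5GHZ, Link.WIFI_2_4GHZ)
    if is_wifi and rssi_dbm is not None and rssi_dbm < WEAK_SIGNAL_DBM:
        pct += WEAK_SIGNAL_EXTRA_PCT
    return min(pct, MAX_REDUNDANCY_PCT)
```

Integer percentages keep the arithmetic exact. Once the stream is running, measured losses would take over from this initial guess.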

When using WiFi, the system automatically configures the user’s adapter, putting it in the most suitable mode for use in the cloud (for example, turning off power saving).

4. Custom encoding settings

Streaming video is possible thanks to codecs, programs that compress and restore video data. Uncompressed, one second of video easily exceeds a hundred megabytes, and a codec reduces this by orders of magnitude. We use the H264 and H265 codecs.
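To put the "hundred megabytes" figure in numbers, here is the arithmetic for 1080p at 60 FPS. The 20 Mbit/s stream bitrate is an illustrative assumption used only for comparison.

```python
def raw_bytes_per_second(width: int, height: int, fps: int, bits_per_pixel: int) -> int:
    """Size of one second of uncompressed video, in bytes."""
    return width * height * bits_per_pixel * fps // 8


rgb = raw_bytes_per_second(1920, 1080, 60, bits_per_pixel=24)     # 8-bit RGB: 373,248,000 bytes
yuv420 = raw_bytes_per_second(1920, 1080, 60, bits_per_pixel=12)  # 8-bit YUV 4:2:0: 186,624,000 bytes

stream_bytes_per_second = 20_000_000 // 8  # assumed 20 Mbit/s stream: 2,500,000 bytes

print(f"raw RGB:       {rgb / 1e6:.0f} MB/s")
print(f"raw YUV 4:2:0: {yuv420 / 1e6:.0f} MB/s")
print(f"compression vs YUV 4:2:0: about {yuv420 // stream_bytes_per_second}x")
```

Even the 4:2:0 format that encoders usually take as input exceeds 180 MB per second. At the assumed bitrate, the stream is roughly 74 times smaller.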

H264 is the most popular. All major video card manufacturers have supported it in hardware for over a decade. H265 is its bold young successor; hardware support for it appeared about five years ago. Encoding and decoding H265 requires more resources, but the quality of a compressed frame is much higher than with H264, and without increasing its size!

Which codec should we choose, and which encoder parameters should we set for a particular user given their hardware? This non-trivial task is solved automatically in our system: it analyzes the hardware capabilities, sets the optimal encoder parameters and selects the decoder on the client side.
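The codec part of that choice could look like the sketch below: prefer H265 when the server can encode it and the client can decode it in hardware, otherwise fall back to H264. It assumes each side reports its hardware codec support as a set of names. `Caps`, `pick_codec` and the fallback rule are hypothetical, and encoder parameter tuning is left out.

```python
from dataclasses import dataclass, field


@dataclass
class Caps:
    """Hardware codec support reported by one side of the session."""
    hw_encode: set[str] = field(default_factory=set)
    hw_decode: set[str] = field(default_factory=set)


# Preferred first: H265 gives better quality at the same size when both sides support it.
PREFERENCE = ("h265", "h264")


def pick_codec(server: Caps, client: Caps) -> str:
    """Choose the best codec the server encodes and the client decodes in hardware."""
    for codec in PREFERENCE:
        if codec in server.hw_encode and codec in client.hw_decode:
            return codec
    # Hypothetical fallback: H264 has the broadest support, including software decoders.
    return "h264"
```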

5. Loss compensation

We hate to admit it, but even we are not perfect. Some data lost in the depths of the network can be neither restored nor resent. But even then there is a way out.

One example is bitrate adjustment. Our algorithm constantly monitors the data sent from server to client, registers every shortfall and even predicts likely future losses. Its job is to notice in time, or ideally to predict, when losses will reach a critical level and start causing on-screen artifacts the user will notice, and at that moment to adjust the amount of data being transmitted (the bitrate).
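The predictor itself is not described. A common way to build this kind of loop is additive-increase/multiplicative-decrease (AIMD) driven by a smoothed loss estimate, and the sketch below uses that swapped-in technique. An exponentially weighted moving average (EWMA) of loss stands in for prediction: it reacts to a loss trend rather than a single bad report. All names and thresholds are hypothetical.

```python
class BitrateController:
    """AIMD bitrate control driven by an EWMA of the packet loss rate (hypothetical parameters)."""

    def __init__(self, start_kbps: int = 10_000, min_kbps: int = 1_000, max_kbps: int = 30_000,
                 loss_threshold: float = 0.02, alpha: float = 0.3,
                 step_kbps: int = 500, backoff_pct: int = 70) -> None:
        self.kbps = start_kbps
        self.min_kbps, self.max_kbps = min_kbps, max_kbps
        self.loss_threshold = loss_threshold  # smoothed loss above which we back off
        self.alpha = alpha                    # weight of the newest loss sample in the EWMA
        self.step_kbps = step_kbps            # additive increase per healthy report
        self.backoff_pct = backoff_pct        # multiplicative decrease, in percent
        self.loss_ewma = 0.0

    def on_report(self, sent: int, lost: int) -> int:
        """Feed one feedback report (packets sent / lost); return the new target bitrate."""
        loss = lost / sent if sent else 0.0
        self.loss_ewma = self.alpha * loss + (1 - self.alpha) * self.loss_ewma
        if self.loss_ewma > self.loss_threshold:
            # Losses are heading toward a visible level: cut the bitrate quickly.
            self.kbps = max(self.min_kbps, self.kbps * self.backoff_pct // 100)
        else:
            # The network looks healthy: probe for more quality slowly.
            self.kbps = min(self.max_kbps, self.kbps + self.step_kbps)
        return self.kbps
```

Usage: starting at 10,000 kbit/s, a clean report raises the target to 10,500. A report with 10% loss then cuts it to 7,350. Because of the smoothing, the next clean report still backs off once more before the bitrate starts climbing again.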

We also invalidate frames that could not be fully assembled and use the reference frame mechanism of the video stream. Both tools reduce noticeable artifacts, so even with serious transmission problems the image on screen stays acceptable and the game remains playable.
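Here is a minimal sketch of how the two mechanisms can work together on the client. A frame is shown only if all of its packets arrived and the frame it was predicted from was itself decoded. Otherwise it is dropped and reported, so the encoder can predict later frames from the last good one. `Frame`, `FrameAssembler` and the `invalidated` feedback list are hypothetical names, not the RemoteClick protocol.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Frame:
    frame_id: int
    ref_id: Optional[int]  # frame this one is predicted from; None for a keyframe
    packets_expected: int
    packets_received: set[int] = field(default_factory=set)


class FrameAssembler:
    """Client-side check: show a frame only if it is complete and its reference was decoded."""

    def __init__(self) -> None:
        self.decoded: set[int] = set()    # frames shown successfully, usable as references
        self.invalidated: list[int] = []  # frames reported back to the server (hypothetical feedback)

    def accept(self, frame: Frame) -> bool:
        complete = len(frame.packets_received) == frame.packets_expected
        ref_ok = frame.ref_id is None or frame.ref_id in self.decoded
        if complete and ref_ok:
            self.decoded.add(frame.frame_id)
            return True
        # Decoding an incomplete frame, or one built on a broken reference, would smear
        # artifacts across the screen. Drop it and tell the server, so the encoder can
        # predict the next frame from the last frame the client decoded correctly.
        self.invalidated.append(frame.frame_id)
        return False
```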

6. Distributed sending

Spreading data transmission over time also improves streaming quality. How to spread it depends on specific network indicators, such as losses, ping and other factors; our algorithm analyzes them and selects the best option. Sometimes spreading the data over an interval of just a few milliseconds reduces losses several times over.
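The method for choosing the spread is not described. This sketch shows only the basic pacing idea: space one frame's packets evenly over a window instead of sending them as a single burst, which can overflow buffers on a router or WiFi link. The function names and the `send` callback are hypothetical. A real sender would choose `window_ms` from the loss and ping measurements mentioned above.

```python
import time
from collections.abc import Callable, Sequence


def pacing_offsets_ms(num_packets: int, window_ms: float) -> list[float]:
    """Evenly spaced send times for num_packets across window_ms, starting at 0."""
    return [i * window_ms / num_packets for i in range(num_packets)]


def send_paced(packets: Sequence[bytes], send: Callable[[bytes], None], window_ms: float) -> None:
    """Send one frame's packets spread over window_ms instead of as a single burst."""
    start = time.monotonic()
    for offset_ms, packet in zip(pacing_offsets_ms(len(packets), window_ms), packets):
        delay_s = start + offset_ms / 1000 - time.monotonic()
        if delay_s > 0:
            time.sleep(delay_s)  # wait until this packet's slot in the window
        send(packet)
```

For example, four packets over a 4 ms window go out at 0, 1, 2 and 3 ms. `time.sleep` is coarse on some platforms, so a production sender would more likely pace at the socket or kernel level.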

7. Latency reduction

One of the key characteristics of playing through the cloud is latency: the lower it is, the more comfortable the game. Latency can be roughly divided into two parts:

- network or data transfer delay;

- system delay (reading controls on the client side, capturing the image on the server, encoding it, the data adaptation mechanisms described above, reassembling the data on the client, decoding the image and rendering it).
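The split above is additive, so a small helper is enough to reason about it. The stage names mirror the list. No per-stage figures are given, so none are filled in here, and the class and function names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class LatencyBreakdown:
    """End-to-end delay split as in the list above; all values in milliseconds."""
    network_ms: float
    # System stages, e.g. "input", "capture", "encode", "send_adaptation",
    # "reassembly", "decode", "render". Values should come from measurements.
    system_stages_ms: dict[str, float] = field(default_factory=dict)

    def system_ms(self) -> float:
        return sum(self.system_stages_ms.values())

    def total_ms(self) -> float:
        return self.network_ms + self.system_ms()


def frame_intervals(delay_ms: float, fps: int) -> float:
    """Express a delay as a number of frame intervals at the given frame rate."""
    return delay_ms * fps / 1000


# The aggregate figure quoted in this section: at most 35 ms of system delay at 60 FPS.
print(f"{frame_intervals(35, 60):.1f} frame intervals")
```

At 60 FPS, one frame interval is about 16.7 ms, so 35 ms of system delay is about 2.1 frame intervals.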

Network delay depends on the infrastructure and is hard to fight: if mice have chewed through the cable, no amount of ritual tambourine dancing will help. But system delay can be cut several times over, and that dramatically changes the quality of cloud gaming for the player. In addition to the error-correcting coding and personalized settings already mentioned, we use two more mechanisms:

- Fast reading of input devices (keyboard, mouse) on the client side. Even on weak computers, this takes 1-2 ms.

- Rendering the system cursor on the client. The mouse pointer is drawn not on the remote server but by the Playkey client on the user's computer, that is, without the slightest delay. True, this does not change the actual control in the game, but what matters here is human perception.
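The cursor trick can be sketched as follows: mouse movement updates a locally tracked pointer immediately and is still forwarded to the server, and each decoded frame is composed with the local cursor drawn on top. In this toy version, a character grid stands in for the decoded video frame, and `CursorOverlay` and its methods are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class CursorOverlay:
    """Client-side cursor: moved on every local mouse event, drawn over each decoded frame."""
    width: int
    height: int
    x: int = 0
    y: int = 0
    outbox: list[tuple[int, int]] = field(default_factory=list)  # input forwarded to the server

    def on_mouse_move(self, dx: int, dy: int) -> None:
        # Update the local pointer at once, with no network round trip...
        self.x = min(max(self.x + dx, 0), self.width - 1)
        self.y = min(max(self.y + dy, 0), self.height - 1)
        # ...and still forward the movement so the game reacts as usual.
        self.outbox.append((dx, dy))

    def compose(self, decoded_frame: list[list[str]]) -> list[list[str]]:
        """Return a copy of the streamed frame with the local cursor drawn on top."""
        out = [row[:] for row in decoded_frame]
        out[self.y][self.x] = "+"
        return out
```

Because the pointer is drawn from local state, it moves with the mouse even while the frame underneath is still a few milliseconds old.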

Zero-delay cursor rendering in Playkey, using Apex Legends as an example

With our technology, at 0 ms network delay and a 60 FPS video stream, the total system delay does not exceed 35 ms.

Passive mechanisms

In our experience, many users have little idea how their devices connect to the Internet. Interviews with players showed that some do not know what a router is. And that is fine! You don't have to understand how an internal combustion engine works to drive a car. Users shouldn't need a system administrator's knowledge to play.

Still, it is important to get some technical points across so that players can remove the barriers on their side. We help them with that.

1. 5 GHz WiFi support indicator

As mentioned above, we can see which WiFi band is in use, 5 GHz or 2.4 GHz. We also know whether the network adapter of the user's device can work at 5 GHz, and if it can, we recommend using that band. We cannot switch the band ourselves yet, since we cannot see the router's characteristics.
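Detecting the band and the adapter's capabilities is platform-specific and is not covered here, so this sketch assumes both are already known. It only shows the decision: suggest 5 GHz when the user is on 2.4 GHz with an adapter that supports 5 GHz. `WifiState` and `band_hint` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class WifiState:
    band: Optional[str]          # "2.4", "5", or None when the device is not on WiFi
    adapter_supports_5ghz: bool


def band_hint(state: WifiState) -> Optional[str]:
    """Return a user-facing hint, or None when there is nothing to suggest."""
    if state.band == "2.4" and state.adapter_supports_5ghz:
        # We cannot switch bands ourselves: whether the router offers 5 GHz is unknown.
        return ("Your device supports 5 GHz WiFi. If your router has a 5 GHz network, "
                "connect to it for fewer losses in cloud games.")
    return None
```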

2. WiFi signal strength indicator

For some users the WiFi signal may be weak even though the Internet works well and the speed seems acceptable. The problem only shows up with cloud gaming, which puts the network to a real test.

Signal strength is affected by obstacles, such as walls, and by interference from other devices; microwave ovens, for instance, emit a lot. The result is losses that go unnoticed during ordinary web use but are critical for playing through the cloud. In such cases we warn the user about interference and suggest moving closer to the router and turning off "noisy" devices.
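One way to turn these checks into warnings is sketched below, assuming the client knows the WiFi RSSI and the measured stream loss. The thresholds are illustrative, and so is the heuristic that losses despite a good signal point to interference. `signal_advice` is a hypothetical name.

```python
# Hypothetical thresholds, for illustration only.
WEAK_SIGNAL_DBM = -70  # RSSI below this (further from 0) counts as weak
LOSS_WARN = 0.02       # share of lost packets that starts to hurt the stream


def signal_advice(rssi_dbm: int, loss_rate: float) -> list[str]:
    """Return user-facing tips for the current WiFi signal and measured stream loss."""
    tips = []
    if rssi_dbm < WEAK_SIGNAL_DBM:
        tips.append("Weak WiFi signal: move closer to the router.")
    elif loss_rate > LOSS_WARN:
        # Signal strength is fine, yet packets are lost: interference is a likely cause.
        tips.append("WiFi interference detected: turn off noisy devices such as a running microwave.")
    return tips
```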

3. Traffic consumer indicator

Even if the network itself is fine, other applications may consume too much bandwidth: for example, a YouTube video playing alongside the cloud game, or torrents downloading. Our application identifies these bandwidth thieves and warns the player about them.
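How per-application traffic is measured depends on the operating system and is not described here. This sketch assumes cumulative per-process byte counters are already available as two snapshots. It flags other processes whose download rate exceeds a threshold. The 2 Mbit/s threshold and the names are hypothetical.

```python
def bandwidth_thieves(before: dict[str, int], after: dict[str, int], interval_s: float,
                      own_process: str, threshold_bps: float = 2_000_000) -> list[tuple[str, float]]:
    """Return (process, bits per second) for other apps above threshold_bps, heaviest first.

    before/after: cumulative bytes received per process name, sampled interval_s apart.
    """
    rates = []
    for name, bytes_after in after.items():
        if name == own_process:
            continue  # the game stream itself is not a thief
        delta = bytes_after - before.get(name, 0)  # processes that just started count from 0
        bps = delta * 8 / interval_s
        if bps > threshold_bps:
            rates.append((name, bps))
    return sorted(rates, key=lambda item: item[1], reverse=True)
```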

Fears from the past - debunking the myths of cloud gaming

Cloud gaming, a fundamentally new way of consuming game content, has been trying to break into the market for almost ten years now. As with any innovation, its history is a series of small victories and high-profile defeats, so it is not surprising that over the years it has acquired myths and prejudices. In the technology's early days they were justified, but today they are completely groundless.

Myth 1. The image in the cloud is worse than the original, as if you were watching the game on YouTube

Today, in a technically advanced cloud solution, the original picture and the cloud picture are almost identical: no differences are visible to the naked eye. Per-player encoder settings and a set of loss-fighting mechanisms settle this question. On a high-quality network there is neither frame blur nor graphic artifacts. We even take resolution into account: it makes no sense to stream at 1080p if the player uses 720p.
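Here is a minimal sketch of that resolution rule, assuming the client reports its display size. Never stream more pixels than the screen can show, and when downscaling, keep the game's aspect ratio. The function name and the even-size rounding (many encoders expect even dimensions for 4:2:0 video) are our own illustration.

```python
def stream_resolution(game: tuple[int, int], display: tuple[int, int]) -> tuple[int, int]:
    """Largest resolution that fits the player's display and keeps the game's aspect ratio."""
    gw, gh = game
    dw, dh = display
    if gw <= dw and gh <= dh:
        return game  # the display can show every pixel the game renders
    # Integer arithmetic avoids float rounding: compare aspect ratios by cross-multiplying.
    if gw * dh >= gh * dw:  # game is relatively wider: width is the limit
        w, h = dw, gh * dw // gw
    else:                   # height is the limit
        w, h = gw * dh // gh, dh
    return (w - w % 2, h - h % 2)  # round down to even sizes for 4:2:0 encoders
```

For a game rendering at 1920x1080 and a 720p player, `stream_resolution((1920, 1080), (1280, 720))` returns `(1280, 720)`.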

Below are two Apex Legends videos from our channel: one is a recording of gameplay on a PC, the other of gameplay through Playkey.

Apex Legends on PC

Apex Legends on Playkey

Myth 2. Unstable quality

Network conditions really are unstable, but this problem can be solved. We dynamically adapt the encoder settings to the quality of the user's network, and special image capture methods keep FPS at a consistently acceptable level.

How does it work? The game uses a 3D engine to build a 3D world, but the user is shown a flat image. To display it, a picture is rendered in memory for each frame, a kind of photograph of the 3D world seen from a certain point. This picture is stored in a video memory buffer. We capture it from video memory and pass it to the encoder, which encodes it. And so on, frame after frame.

Our technology captures and encodes the picture in a single thread, which increases FPS. If these processes run in parallel threads (a fairly popular approach on the cloud gaming market), the encoder keeps polling the capture stage, picks up new frames late and, accordingly, sends them late.
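Screen capture and hardware encoding are GPU- and driver-specific and no code for them is given here. This sketch therefore only models the timing argument, with hypothetical callables. In the single-thread version each frame goes straight from capture to the encoder. A separate encoder thread that polls on a fixed schedule picks up each frame only at its next poll, which adds up to one polling interval of delay.

```python
from collections.abc import Callable, Iterable


def run_inline(frames: Iterable[object], encode: Callable[[object], bytes],
               send: Callable[[bytes], None]) -> None:
    """Single-thread pipeline: each frame is encoded the moment it is captured."""
    for frame in frames:      # stands in for "wait until the game presents a new frame"
        send(encode(frame))   # no hand-off to another thread, so no extra waiting


def polling_delays(ready_ms: list[int], poll_interval_ms: int, first_poll_ms: int) -> list[int]:
    """How long each frame waits when a separate encoder thread polls the capture buffer
    at first_poll_ms, first_poll_ms + poll_interval_ms, and so on."""
    delays = []
    for t in ready_ms:
        # Index of the first poll at or after the moment the frame became available.
        polls_elapsed = max(0, -(-(t - first_poll_ms) // poll_interval_ms))
        delays.append(first_poll_ms + polls_elapsed * poll_interval_ms - t)
    return delays
```

Take frames ready at 0, 16 and 32 ms, and a thread polling every 10 ms starting at 3 ms. `polling_delays([0, 16, 32], 10, 3)` shows waits of 3, 7 and 1 ms, delays the inline loop never incurs.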

The video above was recorded using single-thread capture and encoding.

Myth 3. Because of control lag, I'll be a “noob” in multiplayer

Control delay is normally a few milliseconds and usually invisible to the end user. But sometimes there is a tiny mismatch between mouse movement and cursor movement. It does not affect anything, but it creates a negative impression. Drawing the cursor directly on the user's device, as described above, eliminates this drawback. Beyond that, the total system delay of 30-35 ms is so small that neither the player nor their opponents notice anything during a match. The outcome of a fight is decided by skill alone. Proof below.

A streamer dominates through Playkey

What's next

Cloud gaming is already a reality. Playkey, PlayStation Now and Shadow are working services with their own audiences and market share. Like many young markets, cloud gaming will grow rapidly in the coming years.

The scenario that seems most likely to us is that game publishers and telecom operators will launch their own services. Some will develop their own technology, others will use ready-made boxed solutions such as RemoteClick. The more players on the market, the faster the cloud-based way of consuming game content will become mainstream.