How HPE SimpliVity 380 Performs for VDI: Tough Load Tests

The customer wanted VDI. They were looking very closely at the combination of SimpliVity and Citrix Virtual Desktops, for all operators, office workers in the cities and so on. The first wave of migration alone covers five thousand users, so they insisted on load testing. VDI can start to slow down, or it can quietly go down altogether, and the cause is not always the network link. We bought a very powerful testing package built specifically for VDI and loaded the infrastructure until it hit the limits of the disks and the processor.

So, we will need a plastic bottle and LoginVSI, software for sophisticated VDI tests. We have it with licenses for 300 users. Then we took HPE SimpliVity 380 hardware in a configuration suited to maximum user density per server, carved out virtual machines with generous oversubscription, installed office software on them under Windows 10 and started testing.

Go!

System

Two HPE SimpliVity 380 Gen10 nodes (servers). Each has:

- 2 x Intel Xeon Platinum 8170, 26 cores, 2.1 GHz.

- RAM: 768 GB, 12 x 64 GB LRDIMM DDR4 2666 MHz.

- Primary disk controller: HPE Smart Array P816i-a SR Gen10.

- Drives: 9 x 1.92 TB SATA 6 Gb/s SSD (RAID 6, 7+2, i.e. the Medium model in HPE SimpliVity terms).

- Network cards: 4 x 1 Gb Ethernet (user data), 2 x 10 Gb Ethernet (SimpliVity backend and vMotion).

- Dedicated integrated FPGA cards in each node for deduplication and compression.

The nodes are connected to each other directly by a 10 Gb Ethernet interconnect, without an external switch. This link serves as the SimpliVity backend and carries virtual machine data over NFS. Virtual machine data in the cluster is always mirrored between the two nodes.

The nodes form a VMware vSphere cluster managed by vCenter.

For testing, a domain controller and a Citrix connection broker were deployed. The domain controller, broker and vCenter are hosted on a separate cluster.

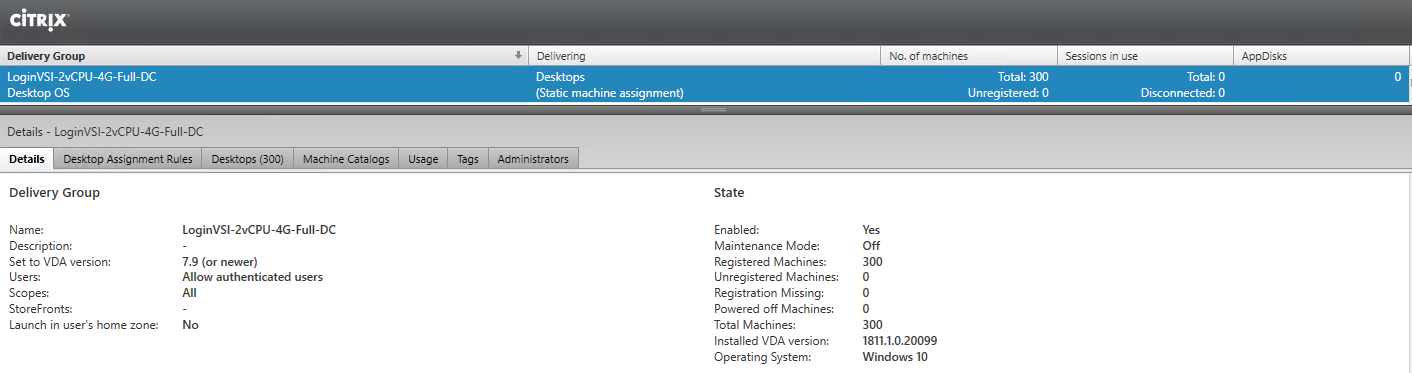

The test infrastructure consists of 300 virtual desktops deployed in the Dedicated - Full Copy configuration: each desktop is a complete copy of the original virtual machine image and keeps all changes made by users.

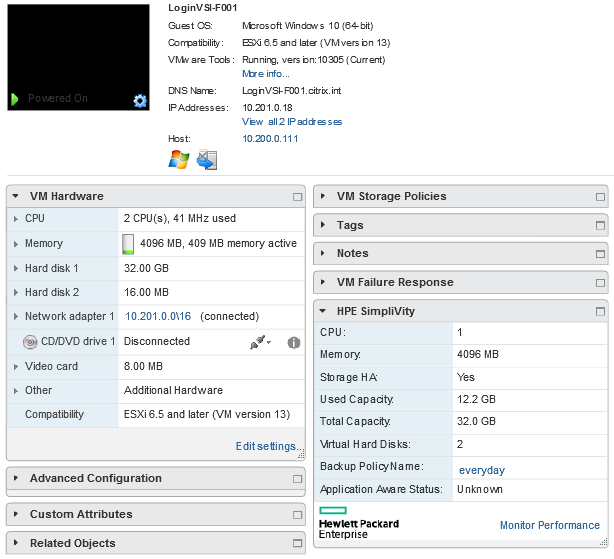

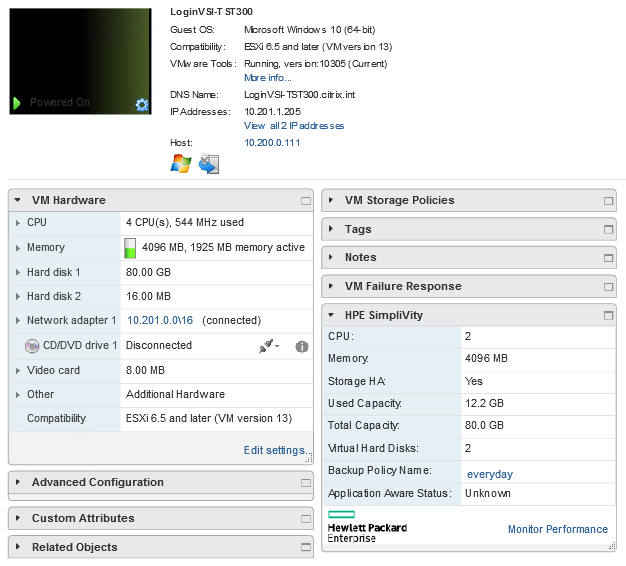

Each virtual machine has 2 vCPUs and 4 GB of RAM.

The following software required for testing was installed on the virtual machines:

- Windows 10 (64-bit), version 1809.

- Adobe Reader XI.

- Citrix Virtual Delivery Agent 1811.1.

- Doro PDF 1.82.

- Java 7 Update 13.

- Microsoft Office Professional Plus 2016.

Replication between the nodes is synchronous. Each data block in the cluster has two copies, so in the current setup each node holds a complete set of the data. In a cluster of three or more nodes, the copies of each block sit in two different places. When a new VM is created, an additional copy is created on another cluster node. If a node fails, all VMs that were running on it automatically restart on other nodes that hold their replicas. If the node stays down for a long time, redundancy is gradually rebuilt and the cluster returns to N+1 redundancy.

Data balancing and placement are handled by SimpliVity's own software-defined storage layer.

The virtual machines are run by the virtualization cluster, which also places them on the software-defined storage. The desktops themselves were built from the customer's standard templates: the desktops of finance staff and operations staff were brought in for the test (these are two different templates).

Testing

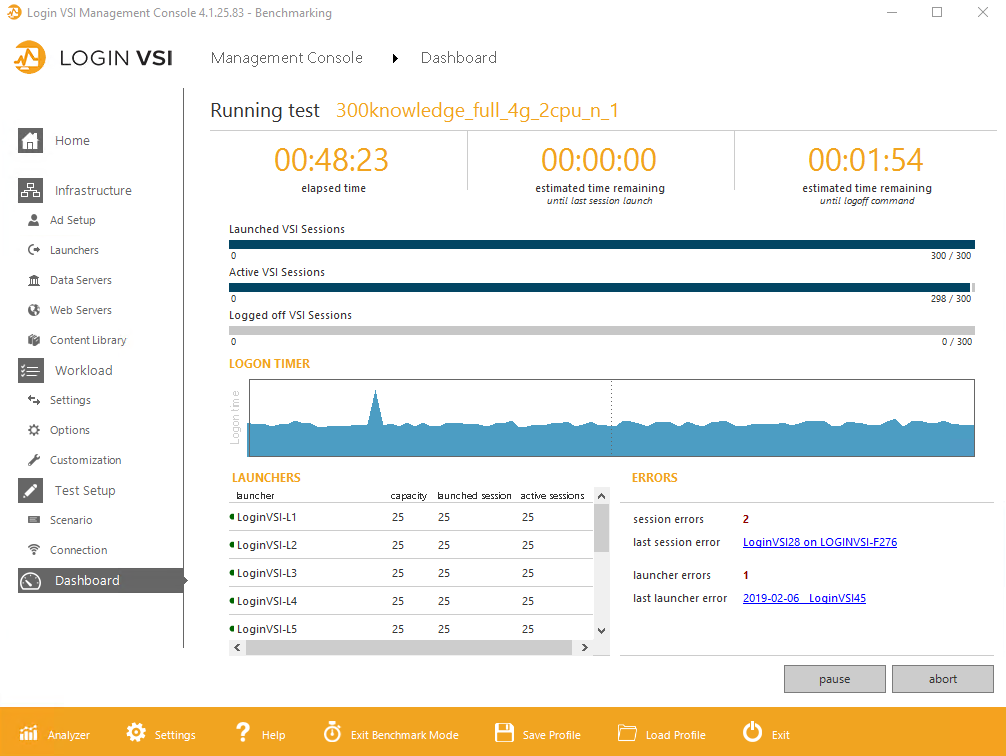

We used the LoginVSI 4.1 test suite. The LoginVSI environment, consisting of a management server and 12 machines for test connections, was deployed on a separate physical host.

Testing was carried out with three workloads in two modes:

- Benchmark mode: the 300 Knowledge workers and 300 Storage workers load options.

- Standard mode: the 300 Power workers load option.

To make the Power workers workload run and to increase load diversity, the additional Power Library files were added to the LoginVSI environment. To keep the results repeatable, all test bench settings were left at their defaults.

The Knowledge and Power workers tests simulate the real load of users working on virtual workstations.

The Storage workers test was created specifically for testing storage systems. It is far from real workloads and consists mostly of the user working with a large number of files of different sizes.

During each test, users log on to the workstations over 48 minutes, about one user every 10 seconds.
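The logon rate follows directly from the two numbers given above. A minimal sketch of the arithmetic (the function name is illustrative, not part of LoginVSI):

```python
def logon_interval_seconds(users: int, ramp_up_minutes: float) -> float:
    """Average time between two consecutive logons during the ramp-up."""
    if users <= 0:
        raise ValueError("users must be positive")
    return ramp_up_minutes * 60 / users


# 300 sessions started over a 48-minute ramp-up, as in the tests described here.
interval = logon_interval_seconds(users=300, ramp_up_minutes=48)
print(f"One logon every {interval:.1f} s")  # 2880 s / 300 users = 9.6 s
```

So "about one user every 10 seconds" is 9.6 seconds on average.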

Results

The main result of LoginVSI testing is the VSImax metric, which is built from the execution times of various tasks launched by the simulated user, for example the time to open a file in Notepad or to compress a file in 7-Zip. A detailed description of how the metrics are calculated is available in the official LoginVSI documentation.

In other words, LoginVSI repeats a typical load pattern, simulating user actions in an office suite, reading PDFs and so on, and measures various delays. There is a critical level of delay ("everything slows down, it is impossible to work"), and until it is reached the maximum number of users is considered not to have been reached. This level sits 1,000 ms above the response time of the unloaded system. As long as the response time stays below it, the system is considered to be working fine and more users can be added.

Here are the basic metrics:

| Metric | Action taken | Detailed description | Loaded components |
|---|---|---|---|
| NSLD | Opening time for a text file | Notepad starts and loads a text file | CPU and I/O |
| NFO | | Opening a VSI-Notepad file [Ctrl+O] | CPU, RAM and I/O |
| ZHC* | High-compression zip file creation time | Compressing a local file | CPU and I/O |
| ZLC* | Low-compression zip file creation time | Compressing a local file | I/O |
| CPU | Computing a large array | Creating a large array of data | CPU |

At the start of a test, the baseline VSIbase metric is calculated. It shows how fast the tasks run when there is no load on the system. The VSImax threshold is derived from it and equals VSIbase + 1000 ms.

Conclusions about system performance are based on two metrics: VSIbase, which characterizes the speed of the system, and the VSImax threshold, which determines the maximum number of users the system can handle without significant degradation.
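The threshold rule can be sketched in a few lines. This is a simplification under stated assumptions: the real LoginVSI calculation works on weighted and averaged samples, and the function names and sample data here are hypothetical. Only the VSIbase + 1000 ms rule and the baseline values come from this article.

```python
THRESHOLD_OFFSET_MS = 1000  # from the article: threshold = VSIbase + 1000 ms


def vsimax_threshold(vsibase_ms: float) -> float:
    """Response time above which the system counts as saturated."""
    return vsibase_ms + THRESHOLD_OFFSET_MS


def sessions_at_threshold(samples, vsibase_ms):
    """Return the first session count whose response time exceeds the
    threshold, or None if the threshold is never reached.

    samples: iterable of (active_sessions, response_ms) pairs.
    """
    limit = vsimax_threshold(vsibase_ms)
    for sessions, response_ms in sorted(samples):
        if response_ms > limit:
            return sessions
    return None


# Baselines reported in this article for the first three runs (ms).
baselines = {"Knowledge": 986, "Storage": 1673, "Power": 970}
thresholds = {name: vsimax_threshold(ms) for name, ms in baselines.items()}
print(thresholds)

# Hypothetical samples, invented purely to exercise the function.
hypothetical_samples = [(100, 1200), (200, 1700), (300, 1950)]
print(sessions_at_threshold(hypothetical_samples, vsibase_ms=986))
```

With these invented samples the threshold of 1986 ms is never crossed, which corresponds to the "VSI Threshold was not reached" outcome.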

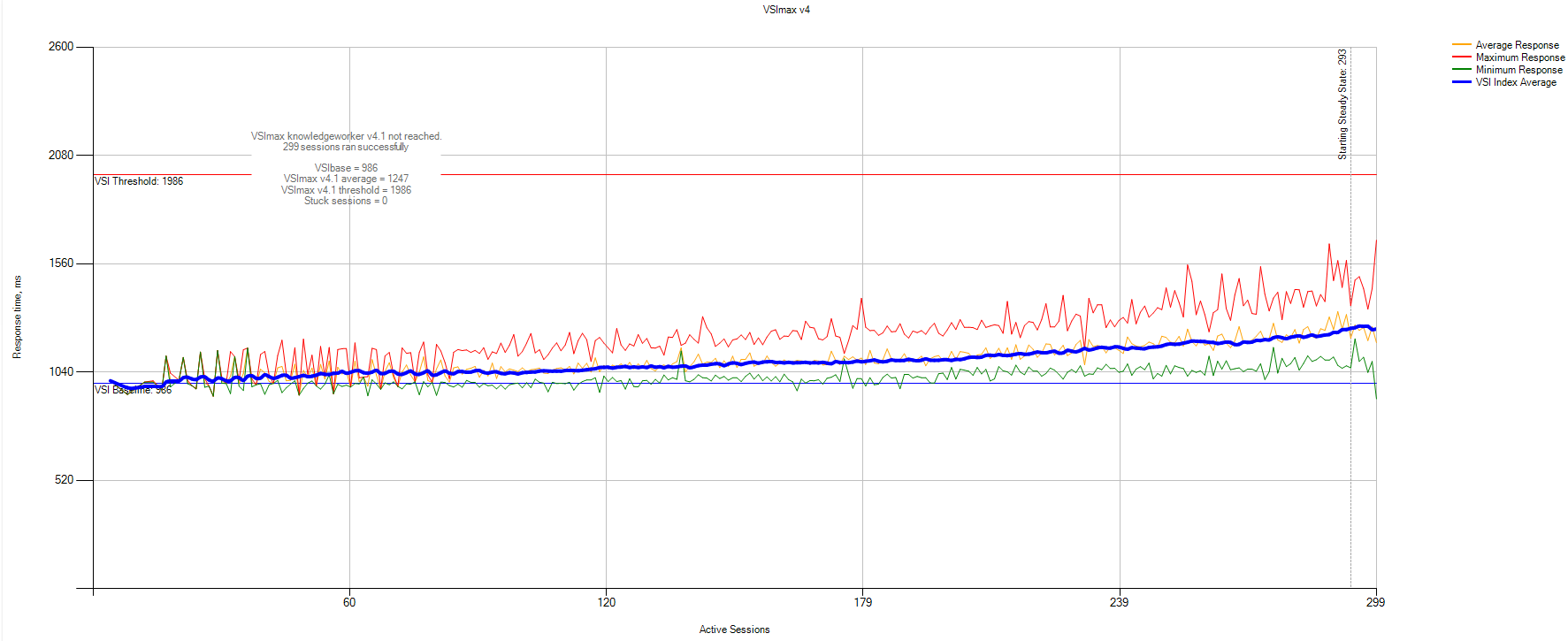

300 Knowledge workers benchmark

Knowledge workers are users who regularly load memory, processor and IO with various small peaks. The software emulates the load from demanding office users, as if they were constantly clicking on something (PDF, Java, office suite, viewing photos, 7-Zip). As users are added from zero to 300, the delay for each of them gradually increases.

VSImax statistics data:

VSIbase = 986 ms, VSI Threshold was not reached.

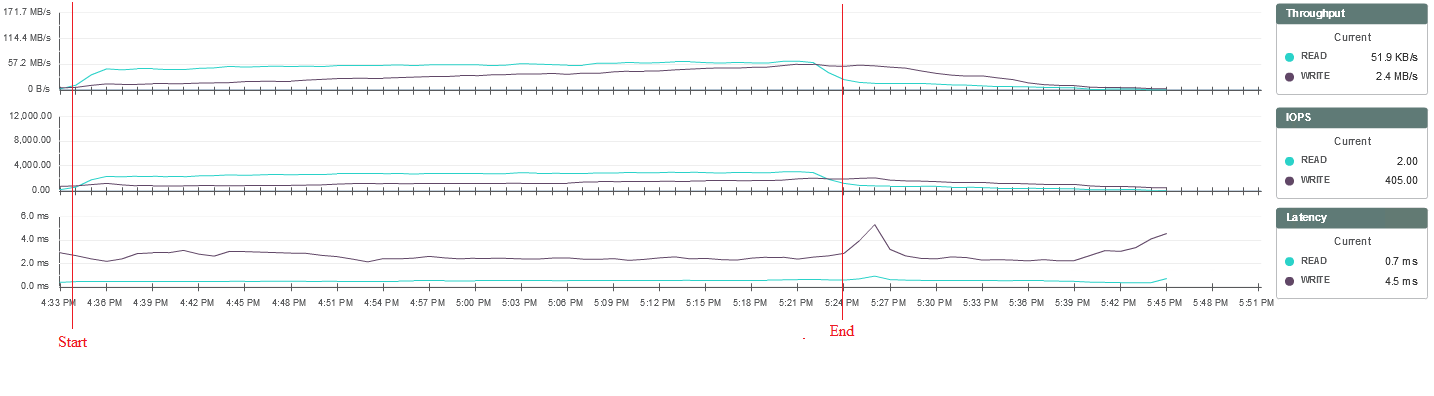

Statistics of the load on the storage system from SimpliVity monitoring:

With this type of load, the system handles the growing number of users with little or no performance degradation. The execution time of user tasks grows smoothly, and the storage response time does not change during the test: up to 3 ms for writes and up to 1 ms for reads.

Conclusion: 300 Knowledge workers run on the current cluster without any problems and do not interfere with each other, at a vCPU to pCPU oversubscription of about 6 to 1. Overall delays grow evenly, but the threshold was not reached.
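The oversubscription figure can be checked against the hardware listed in the System section. A minimal sketch, assuming the ratio is counted against physical cores (not hyper-threads) and that all 300 desktops are spread over both nodes:

```python
NODES = 2
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 26  # Intel Xeon Platinum 8170


def oversubscription(desktops: int, vcpus_per_desktop: int) -> float:
    """vCPU to physical core ratio for the whole two-node cluster."""
    physical_cores = NODES * SOCKETS_PER_NODE * CORES_PER_SOCKET  # 104
    return desktops * vcpus_per_desktop / physical_cores


ratio_2vcpu = oversubscription(desktops=300, vcpus_per_desktop=2)
ratio_4vcpu = oversubscription(desktops=300, vcpus_per_desktop=4)
print(f"2 vCPU desktops: {ratio_2vcpu:.1f}:1")  # 600 / 104
print(f"4 vCPU desktops: {ratio_4vcpu:.1f}:1")  # 1200 / 104
```

The first value rounds to the 6 to 1 quoted here. The second corresponds to the 4 vCPU desktops used in the additional tests later in the article, where the oversubscription is quoted as 12.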

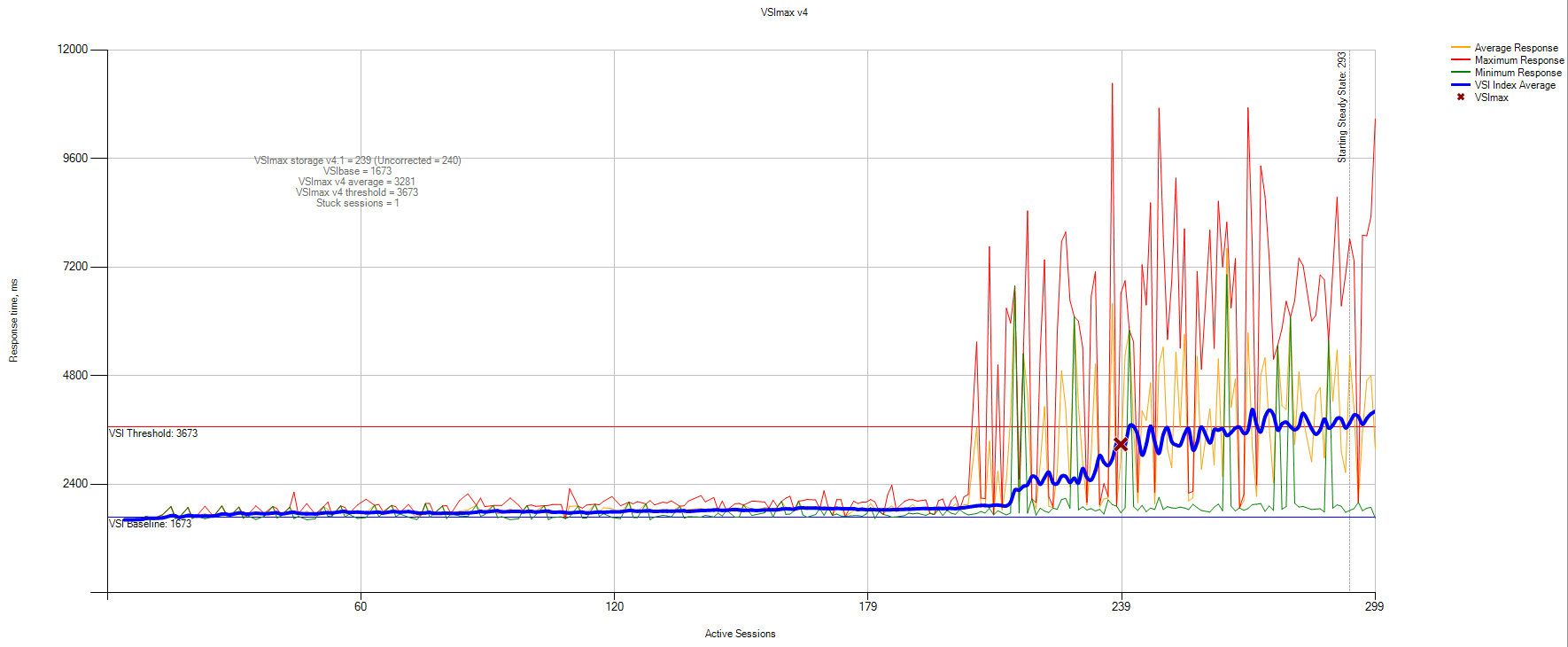

300 Storage workers benchmark

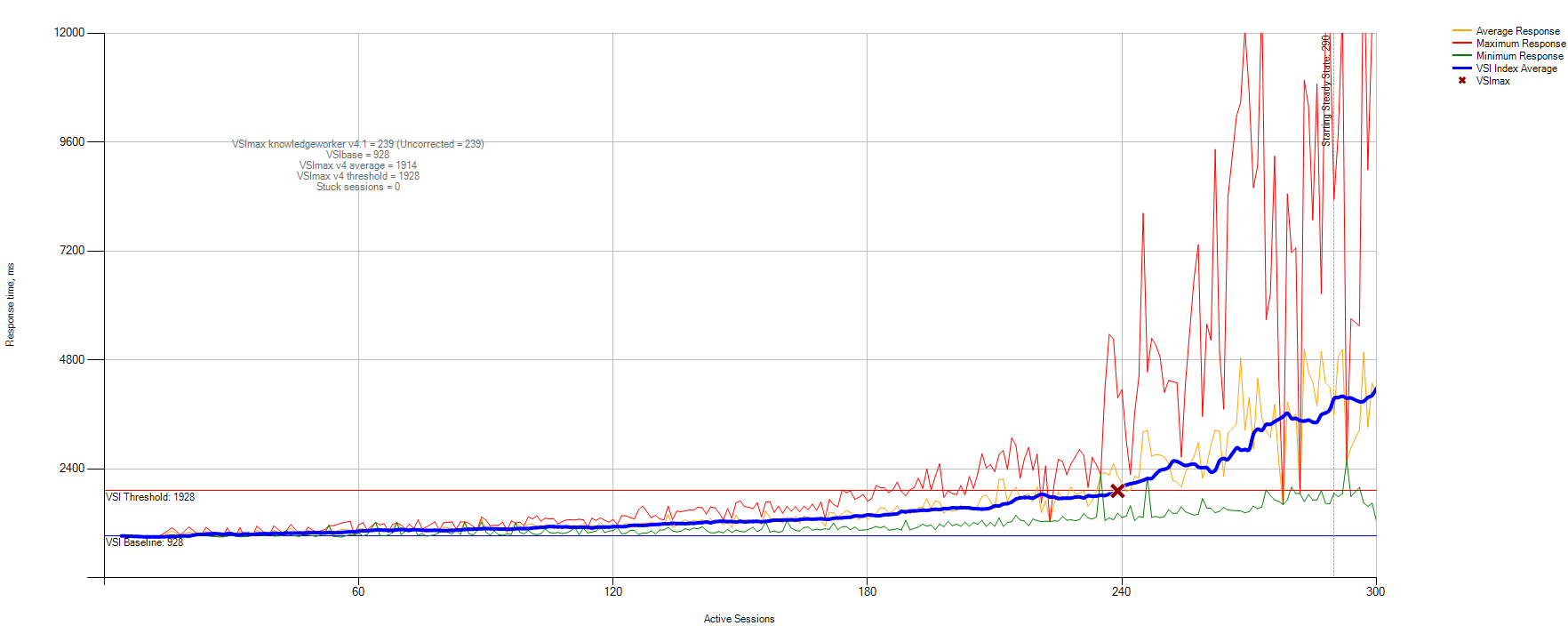

These are users who constantly write and read in a ratio of 30 to 70, respectively. This test was conducted more for the sake of experiment. VSImax statistics data:

VSIbase = 1673 ms, VSI Threshold reached at 240 users.

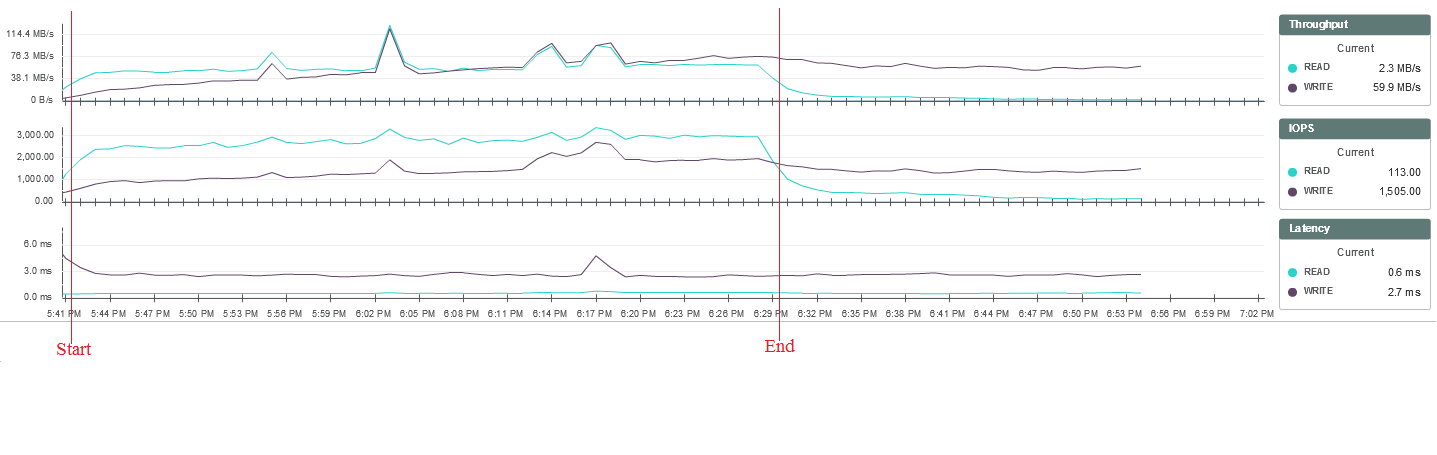

Statistics of the load on the storage system from SimpliVity monitoring:

This type of load is essentially a stress test of the storage system. During the test, each user writes many random files of different sizes to disk. Once a certain load level is exceeded, the time needed to complete file write tasks grows for some users. At the same time, the load on the storage system, processor and host memory does not change significantly, so at this point it is impossible to say exactly what causes the delays.

Conclusions about system performance from this test can only be drawn by comparing it with results from other systems, since such loads are synthetic and unrealistic. In general, though, the test went well. Up to 210 sessions everything was fine, and then unexplained response times appeared that were not visible anywhere except in Login VSI.

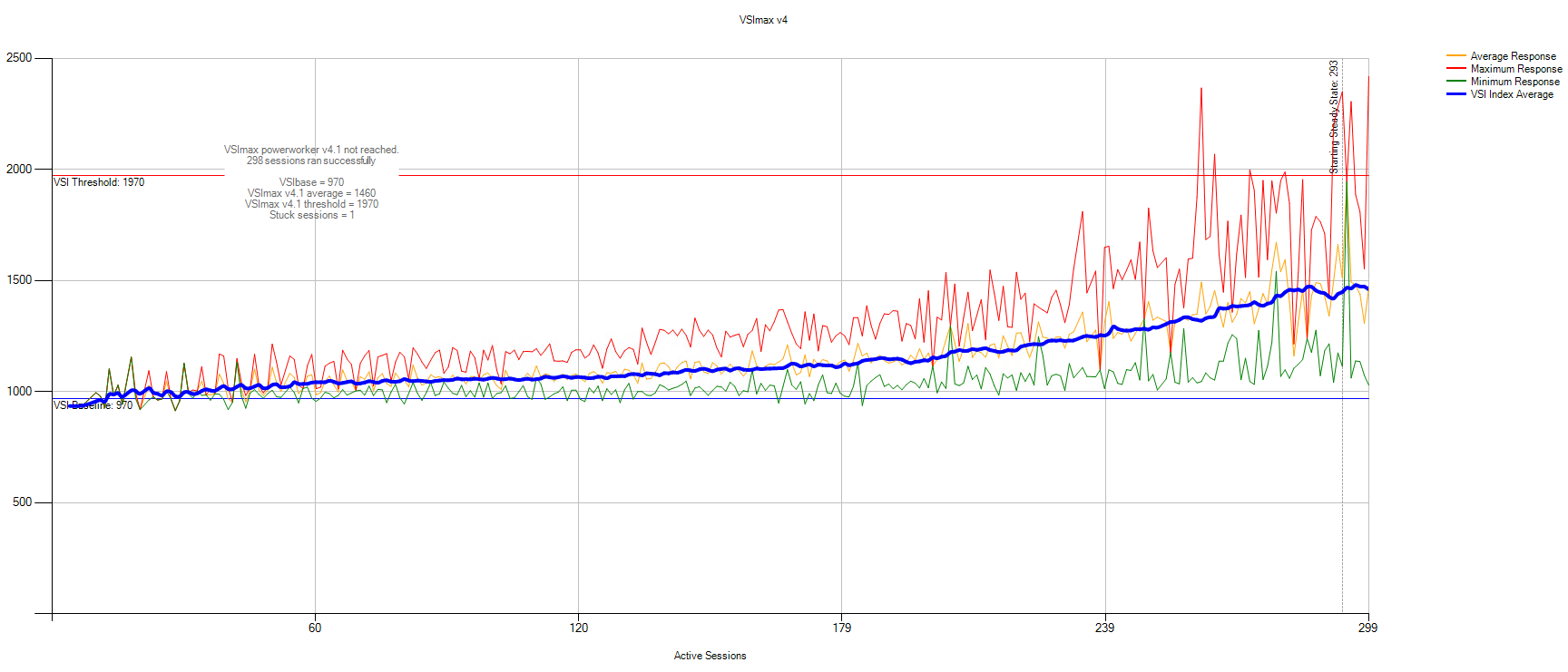

300 Power workers

These are users who load the processor and memory heavily and generate high IO. These "advanced users" regularly run complex tasks with long peaks, such as installing new software and unpacking large archives. VSImax statistics data:

VSIbase = 970 ms, VSI Threshold was not reached.

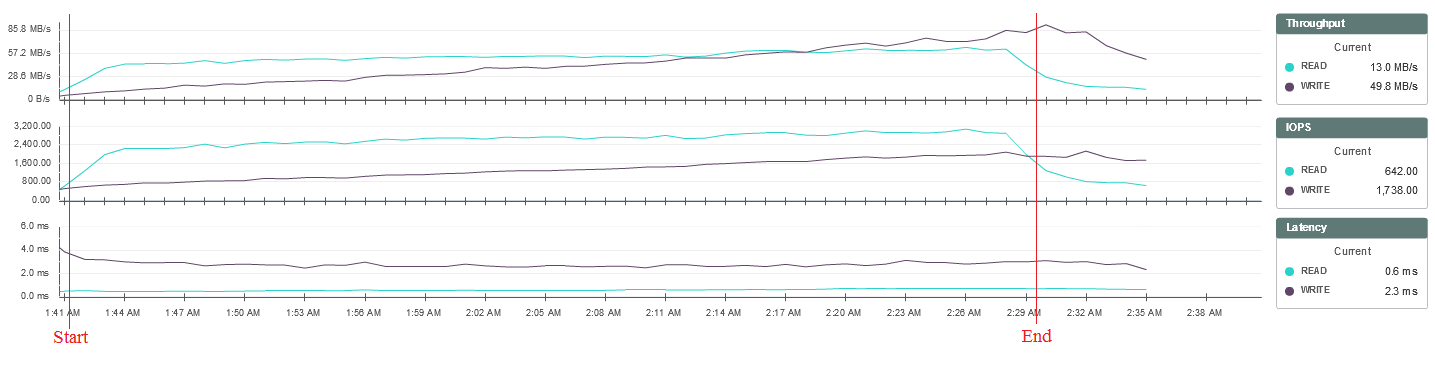

Statistics of the load on the storage system from SimpliVity monitoring:

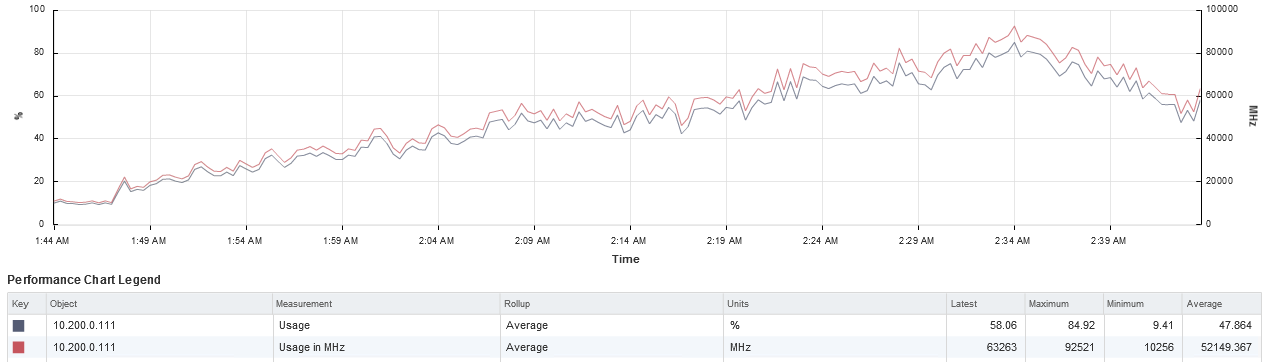

During testing, the processor load threshold was reached on one of the system nodes, but this did not have a significant impact on its operation:

In this case, the system can withstand increased load without significant performance degradation. The execution time of user tasks grows smoothly, the response time of the system does not change during testing and is up to 3 ms for writing and up to 1 ms for reading.

The usual tests were not enough for the customer, so we went further: we increased the VM specifications (the number of vCPUs, to evaluate higher oversubscription, and the disk size) and added extra load.

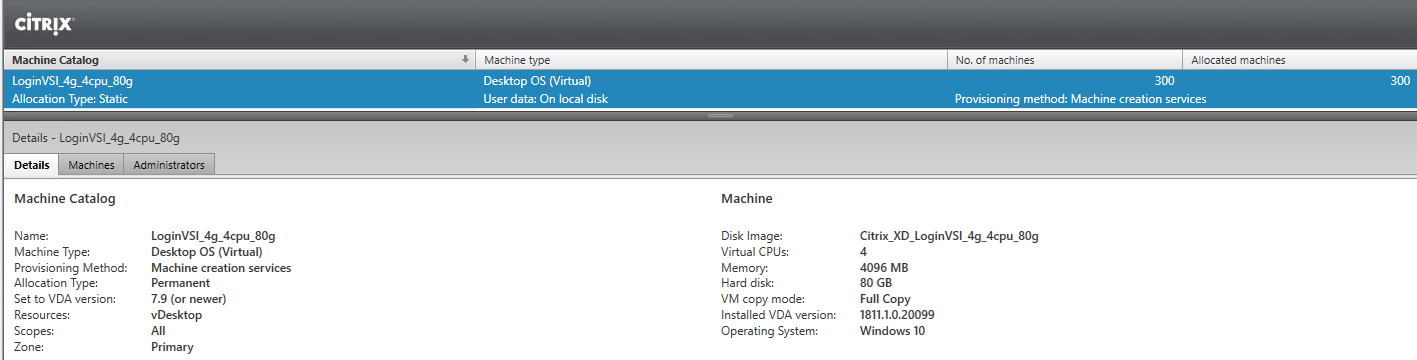

The following test bench configuration was used for the additional tests:

300 virtual desktops were deployed with 4 vCPUs, 4 GB of RAM and an 80 GB disk each.

Configuration of one of the test machines:

The machines are deployed in the Dedicated - Full Copy option:

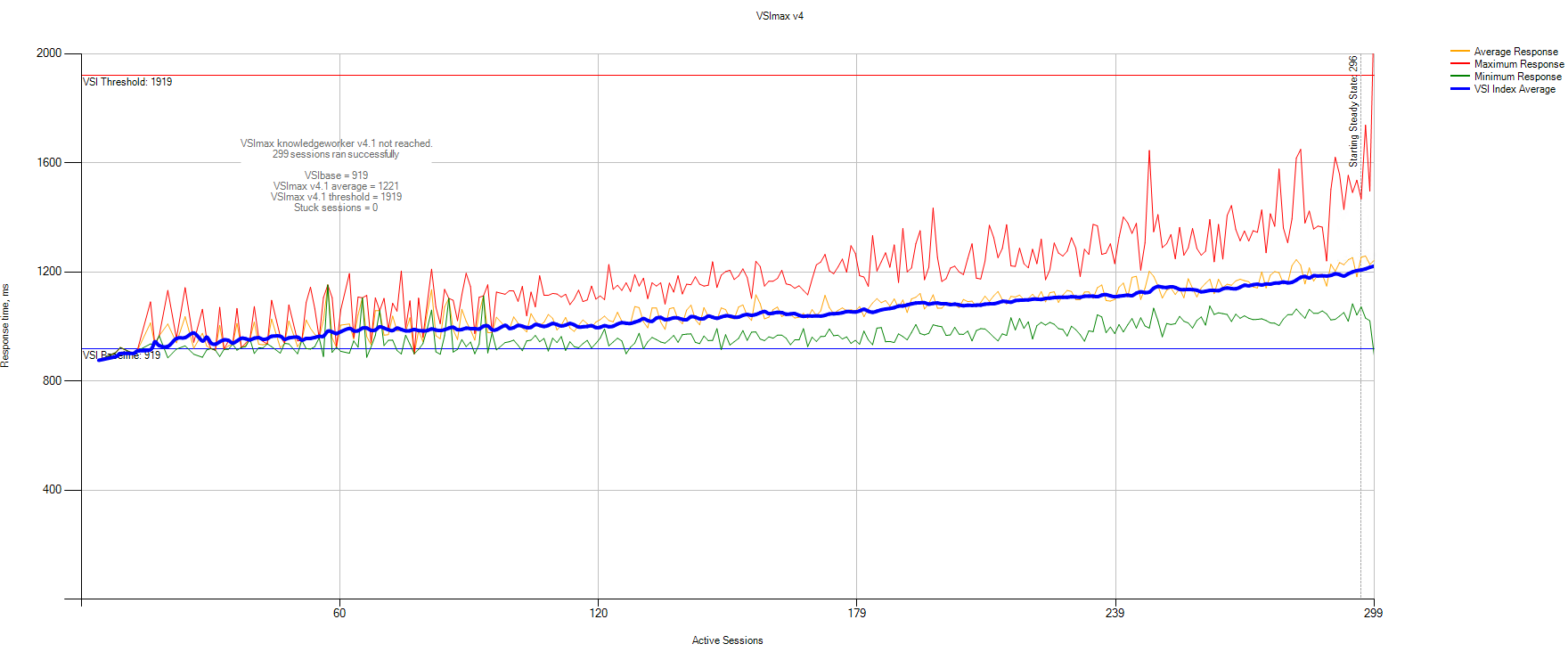

300 Knowledge workers benchmark, 12x oversubscription

VSImax statistics data:

VSIbase = 921 ms, VSI Threshold was not reached.

Statistics of the load on the storage system from SimpliVity monitoring:

The results are similar to testing the previous VM configuration.

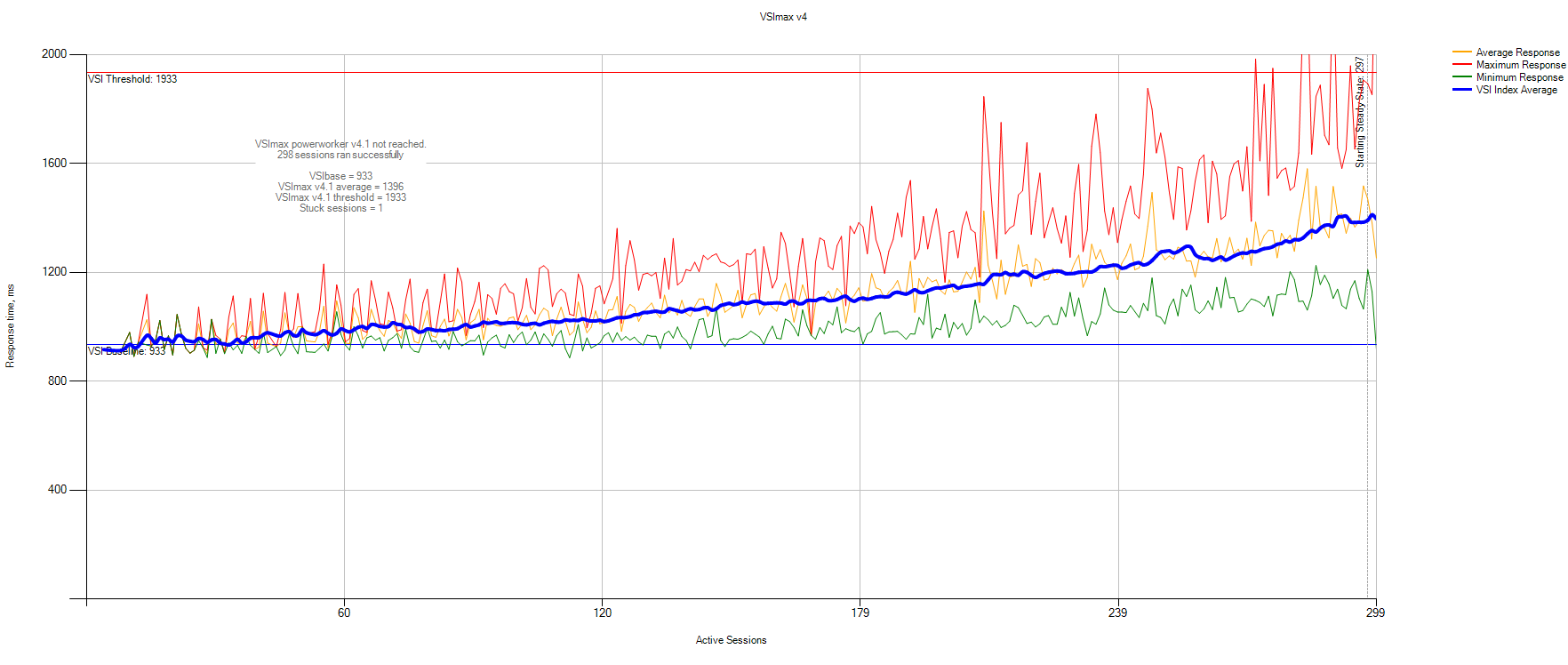

300 Power workers, 12x oversubscription

VSImax statistics data:

VSIbase = 933 ms, VSI Threshold was not reached.

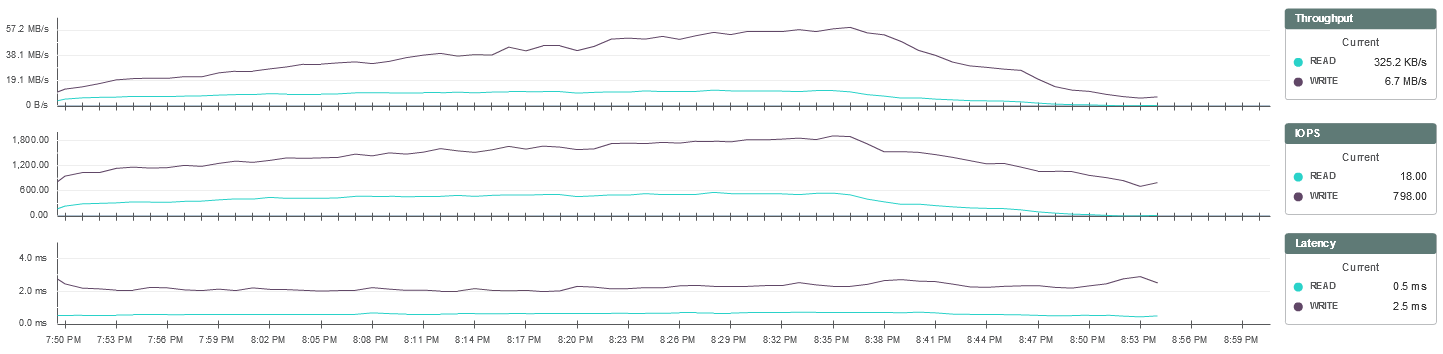

Statistics of the load on the storage system from the SimpliVity monitoring:

During this test, the processor load threshold was also reached, but this did not have a significant impact on performance:

The results obtained are similar to testing the previous configuration.

What happens if you run the load for 10 hours?

Now let's see whether there is an "accumulation effect" by running the tests for 10 hours in a row.

The point of the long tests was to check whether the farm would develop any problems under sustained load.

300 Knowledge workers benchmark + 10 hours

We additionally ran the 300 Knowledge workers load test, followed by 10 hours of continued user activity.

VSImax statistics data:

VSIbase = 919 ms, VSI Threshold was not reached.

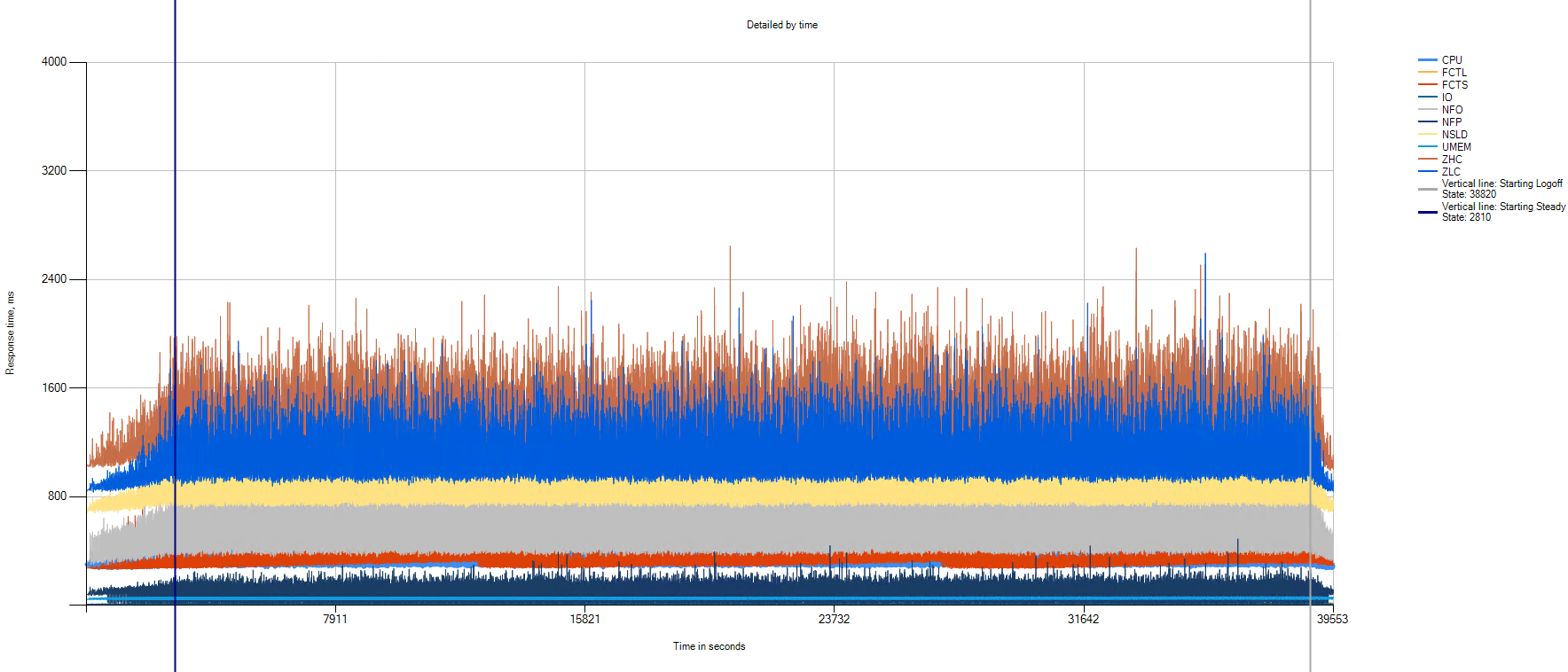

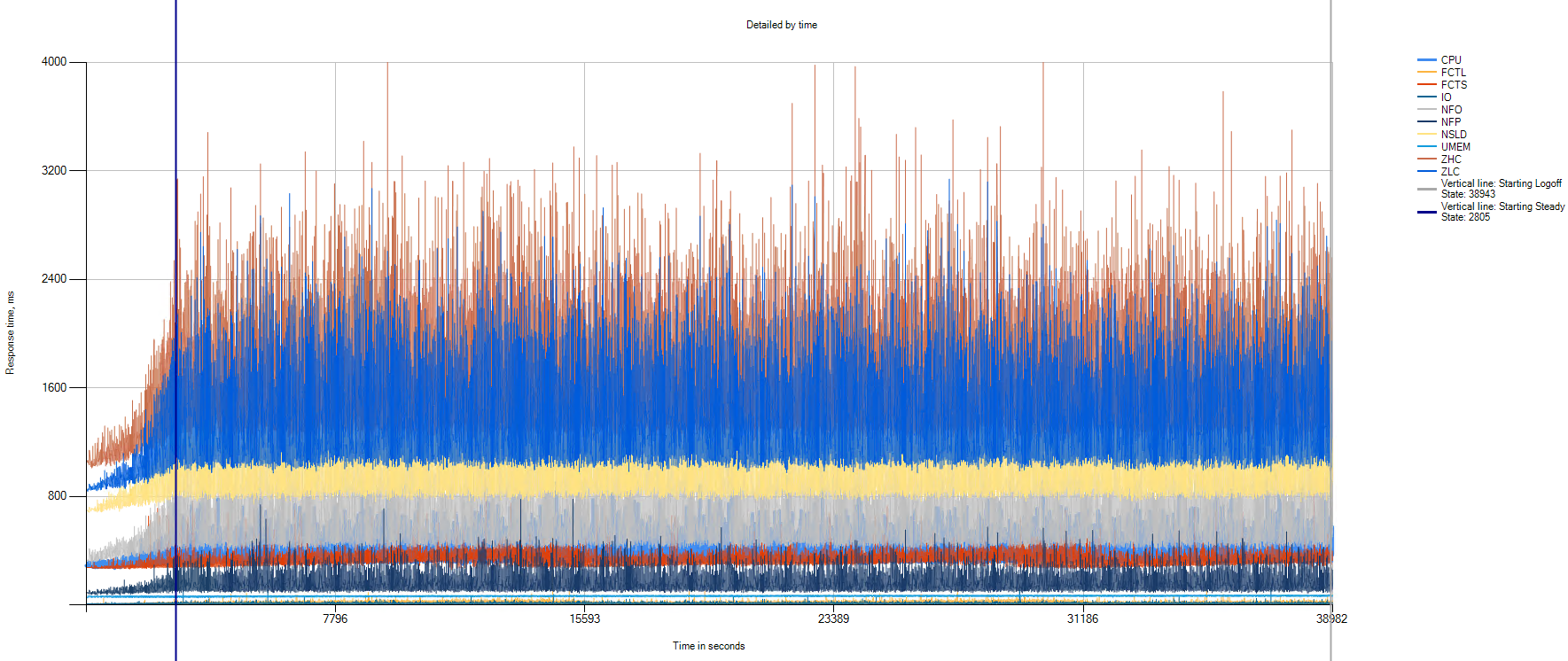

VSImax Detailed statistics:

The graph shows that throughout the test there is no performance degradation.

Statistics of the load on the storage system from SimpliVity monitoring:

Storage system performance remains at the same level throughout the test.

Additional testing with added synthetic load

The customer asked us to add a brutal load on the disks. To do this, a task was added in each user virtual machine that starts a synthetic disk load when the user logs on. The load was generated by the fio utility, which can cap the disk load at a given number of IOPS. Each machine ran a task generating an additional 22 IOPS of random IO, 70% reads and 30% writes.
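The article does not give the exact fio job, so the following is only a sketch of how such a capped load could be described. The option names (`--rw`, `--rwmixread`, `--rate_iops`, `--ioengine=windowsaio`, `--time_based`, `--runtime`) are real fio options; the job name, block size, file size, file name and runtime are assumptions chosen for illustration. The script only builds and prints the command line, it does not run fio.

```python
import shlex


def split_iops(total_iops: int, read_percent: int) -> tuple:
    """Split a total IOPS budget into (reads, writes) by read percentage."""
    reads = round(total_iops * read_percent / 100)
    return reads, total_iops - reads


def build_fio_command(total_iops: int = 22, read_percent: int = 70,
                      runtime_s: int = 36000) -> list:
    """Build a fio command line for a rate-limited random read/write load."""
    reads, writes = split_iops(total_iops, read_percent)
    return [
        "fio",
        "--name=vdi-extra-load",          # hypothetical job name
        "--ioengine=windowsaio",          # native async IO engine on Windows
        "--rw=randrw",                    # mixed random reads and writes
        f"--rwmixread={read_percent}",    # 70% reads, 30% writes
        f"--rate_iops={reads},{writes}",  # separate caps for reads and writes
        "--bs=4k",                        # assumed block size
        "--size=1g",                      # assumed test file size
        "--direct=1",                     # bypass the OS cache
        "--time_based",
        f"--runtime={runtime_s}",         # assumed: 10 hours
        "--filename=fio-test.dat",        # hypothetical file name
    ]


command = build_fio_command()
print(shlex.join(command))
```

The read and write caps are set separately because a single `rate_iops` value would apply to each direction, not to the total. 22 IOPS at 70/30 becomes 15 reads and 7 writes per second after rounding.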

300 Knowledge workers benchmark + 22 IOPS per user

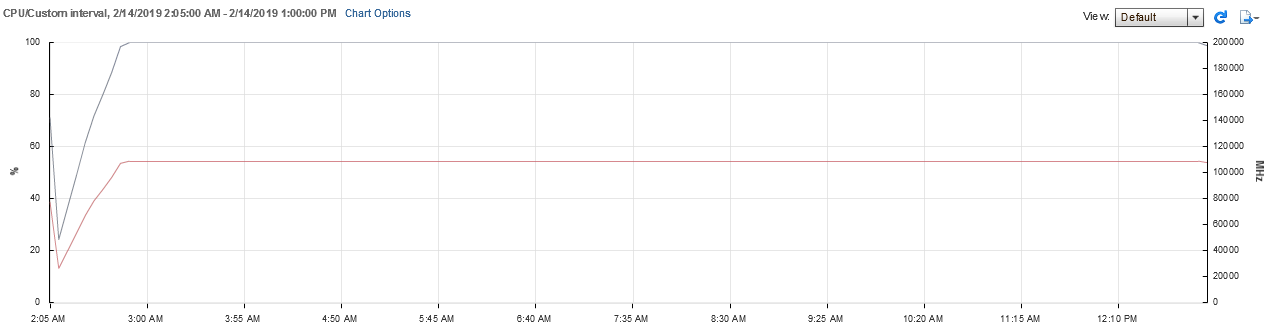

During initial testing, it turned out that fio creates a significant additional load on the virtual machines' processors. This quickly overloaded the host CPUs and strongly affected the operation of the system as a whole.

CPU load on hosts:

Storage system latencies also naturally increased:

The lack of computing power became critical at approximately 240 users:

Based on these results, we decided to run a test that puts less load on the CPU.

230 Office workers benchmark + 22 IOPS per user

To reduce the load on the CPU, the Office workers load type was selected, and 22 IOPS of synthetic load was added to each session.

The test was limited to 230 sessions so as not to exceed the maximum CPU load.

After the ramp-up, the users kept working for 10 hours to check the stability of the system during prolonged operation at close to maximum load.

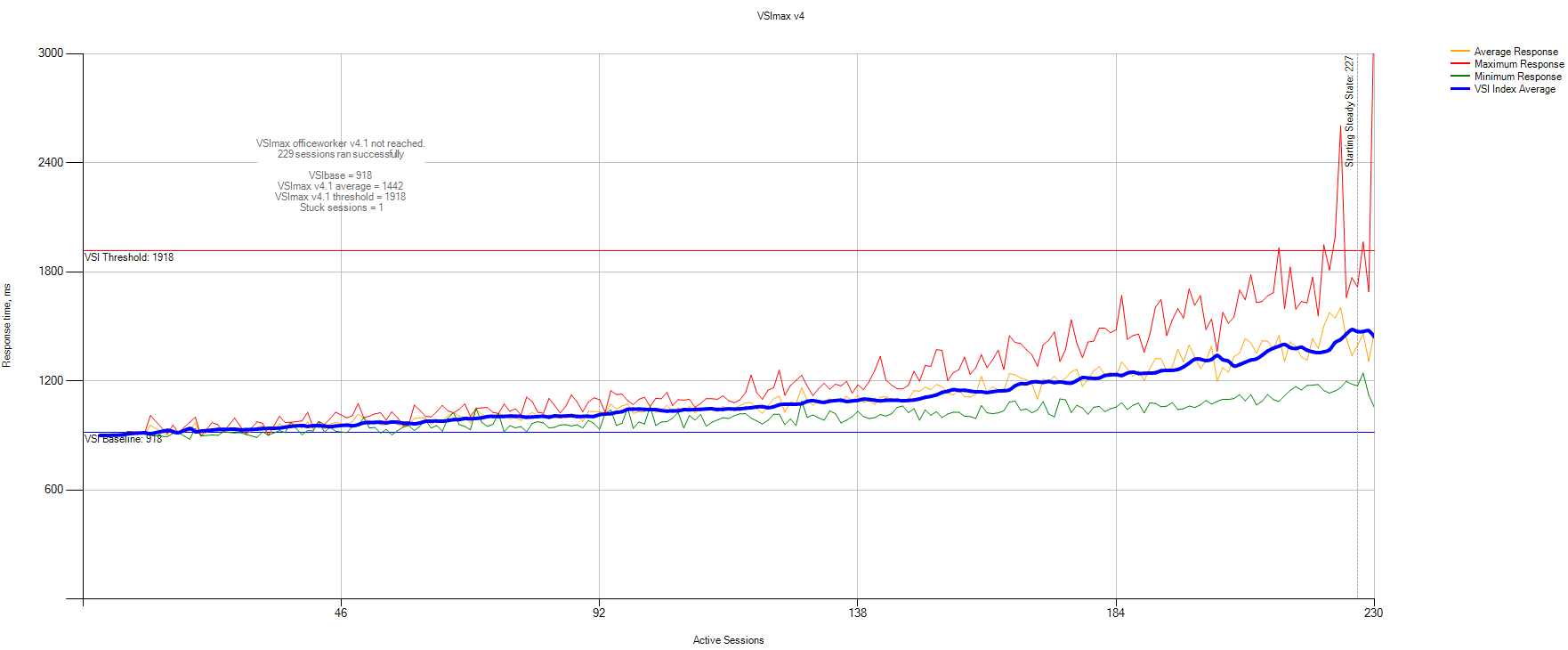

VSImax statistics data:

VSIbase = 918 ms, VSI Threshold was not reached.

VSImax Detailed statistics:

The graph shows that throughout the test there is no performance degradation.

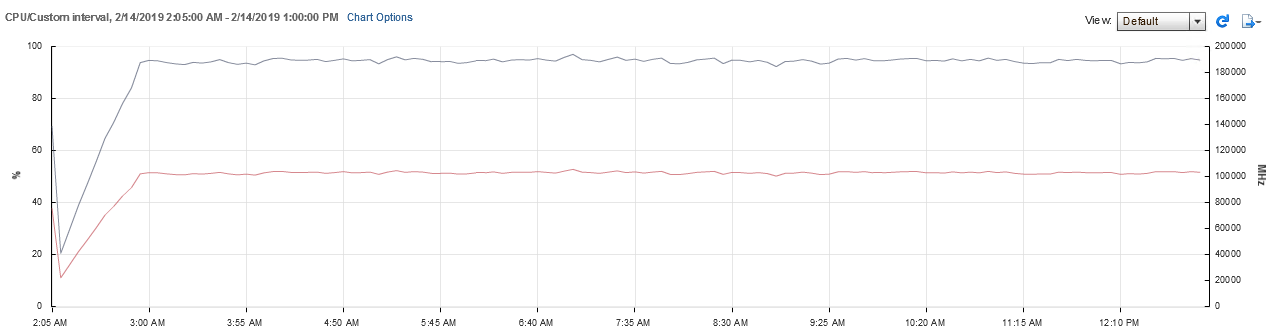

Statistics on CPU load:

When performing this test, the load on the CPU of the hosts was almost maximum.

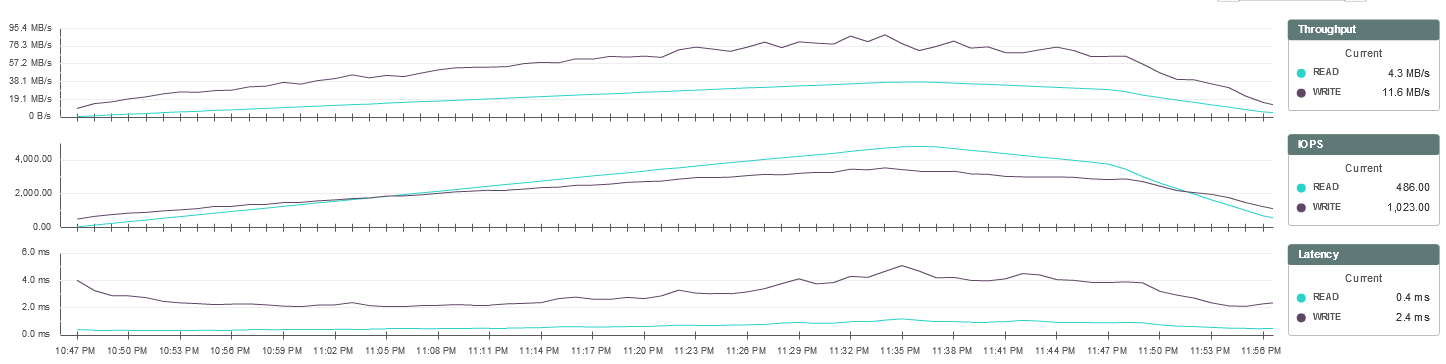

Statistics of the load on the storage system from SimpliVity monitoring:

Storage system performance remains at the same level throughout the test.

The load on the storage system during the test was approximately 6,500 IOPS in a 60/40 ratio (3,900 IOPS for reading, 2,600 IOPS for writing), which is about 28 IOPS per workstation.
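These figures are easy to cross-check. The sketch below reproduces the read/write split and the per-workstation value. The last two lines are an inference rather than something stated in the article: they assume all 230 sessions were active and that everything beyond the 22 synthetic IOPS per session comes from the Office workers workload itself.

```python
TOTAL_IOPS = 6500
SESSIONS = 230
SYNTHETIC_IOPS_PER_SESSION = 22

read_iops = TOTAL_IOPS * 60 // 100    # 60% reads
write_iops = TOTAL_IOPS - read_iops   # 40% writes
per_session = TOTAL_IOPS / SESSIONS   # total IOPS per workstation

# Inference: share of the load not explained by the fio task.
synthetic_total = SESSIONS * SYNTHETIC_IOPS_PER_SESSION
workload_per_session = (TOTAL_IOPS - synthetic_total) / SESSIONS

print(read_iops, write_iops)             # 3900 2600
print(f"{per_session:.1f}")              # 28.3
print(synthetic_total)                   # 5060
print(f"{workload_per_session:.1f}")     # 6.3
```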

The response time averaged 3 ms for writing and up to 1 ms for reading.

Summary

The tests simulating real workloads on the HPE SimpliVity infrastructure confirmed that the system can serve at least 300 Full Clone virtual desktops on a pair of SimpliVity nodes. The storage response time stayed at an optimal level throughout the entire test.

We were very impressed by the customer's approach of running lengthy tests and comparing solutions before implementation. We can test performance for your workloads too, if you like, including on other hyperconverged solutions. This customer is now finishing tests on another solution in parallel. Their current infrastructure is simply a fleet of PCs, a domain and software installed at every workplace. Moving to VDI without tests is, of course, quite difficult. It is especially hard to understand the real capabilities of a VDI farm without migrating real users to it. These tests let you quickly assess what a particular system can really do without involving ordinary users. That is how this study came about.

The second important point: the customer planned for proper scaling from the very start. Here you can buy a server and extend the farm by, say, 100 users, and the price per user is predictable. For example, when they need to add another 300 users, they will know that they need two servers in an already defined configuration, rather than having to reconsider how to upgrade the infrastructure as a whole.
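The sizing logic behind this can be written down as a rough sketch. It assumes linear scaling from the tested result (300 desktops on two nodes) and ignores failover headroom and workload differences, so treat the output as a first estimate, not a design.

```python
import math

TESTED_USERS = 300  # desktops confirmed in the tests
TESTED_NODES = 2    # nodes they ran on


def nodes_needed(users: int) -> int:
    """Nodes required for a given user count, assuming linear scaling."""
    users_per_node = TESTED_USERS / TESTED_NODES  # 150 per node
    return math.ceil(users / users_per_node)


print(nodes_needed(300))   # 2
print(nodes_needed(5000))  # 34
```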

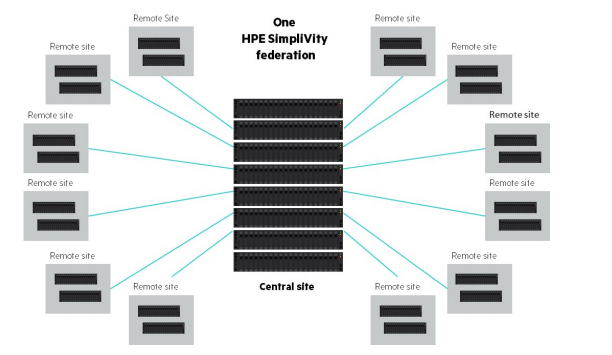

An interesting feature is the HPE SimpliVity federation. The business is geographically distributed, so it makes sense to put a separate VDI appliance in each remote office. In a SimpliVity federation, each virtual machine is replicated on a schedule, and replication between geographically remote clusters is very fast and puts almost no load on the link, which makes for a very good built-in backup. Because replicating VMs between sites uses the link as little as possible, it becomes possible to build very interesting DR architectures with a single control center and a set of decentralized storage sites.

Federation

All of this together makes it possible to evaluate the financial side in great detail, to map VDI costs onto the company's growth plans, and to understand how quickly the solution will pay off and how it will work. Any VDI is a solution that ultimately saves a lot of resources, but most likely without a cost-effective way to change it during 5-7 years of use.

If you have any questions that are not suited for the comments, please write to me at mk@croc.ru.