Emulation Exercises: The Xbox 360 FMA Instruction


Many years ago, I worked on the Microsoft Xbox 360 team. We were thinking about releasing a new console, and we decided it would be great if that console could run games from the previous-generation console.

Emulation is always hard, but it is even harder when your corporate bosses keep switching CPU architectures. The first Xbox (not to be confused with the Xbox One) used an x86 CPU. The second Xbox, sorry, I mean the Xbox 360, used a PowerPC processor. The third Xbox, that is, the Xbox One, used an x86/x64 CPU. Leaping between ISAs like this did not make our lives any easier.

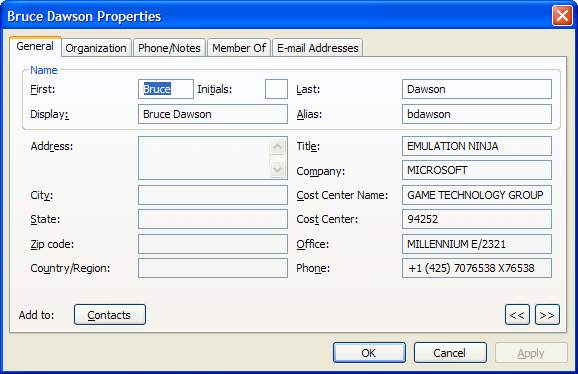

I was on the team that taught the Xbox 360 to emulate many games from the first Xbox, that is, to emulate x86 on PowerPC, and for that work I was given the title “emulation ninja”. Then I was asked to investigate emulating the Xbox 360's PowerPC CPU on an x64 CPU. I'll say up front that I did not find a satisfactory solution.

FMA != MMA

One of the things that worried me was the fused multiply-add, or FMA, instructions. These instructions take three parameters, multiply the first two, and then add the third. “Fused” means that no rounding is done until the end of the operation: the multiplication is performed at full precision, then the addition is performed, and only then is the result rounded to the final answer.
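To make “fused” concrete, here is a minimal Python sketch using ordinary Python floats, which are IEEE doubles. The helper `fma_exact` is hypothetical: it is a reference model rather than anything the hardware does. It forms the exact value of a*b + c with `fractions.Fraction` and rounds only once, at the end. The inputs are chosen so that rounding the product first throws away a low bit that the fused version keeps.

```python
from fractions import Fraction

def fma_exact(a: float, b: float, c: float) -> float:
    """Hypothetical reference FMA for doubles: exact a*b + c, rounded once."""
    # Fraction(x) holds the exact value of a double, so the product and the
    # sum below are exact; float() performs the single final rounding.
    return float(Fraction(a) * Fraction(b) + Fraction(c))

a = 1 + 2**-52             # the smallest double above 1
c = -(1 + 2**-51)
# The exact value of a*a + c is 2**-104.
separate = a * a + c       # a*a is rounded to 1 + 2**-51 first, so this is 0.0
fused = fma_exact(a, a, c) # 2**-104: nothing is lost before the final rounding
print(separate, fused == 2**-104)  # 0.0 True
```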

To show this with a concrete example, imagine that we are using decimal floating-point numbers with two digits of precision. Consider this calculation, written as a function:

FMA(8.1e1, 2.9e1, 4.1e1), or 8.1e1 * 2.9e1 + 4.1e1, or 81 * 29 + 41

81 * 29 equals 2349, and adding 41 gives 2390. Rounding to two digits gives 2400, or 2.4e3. Without FMA, we would first have to perform the multiplication and get 2349, which is rounded to two digits of precision, giving 2300 (2.3e3). Then we add 41 and get 2341, which is rounded again, giving a final result of 2300 (2.3e3). That is less accurate than the FMA answer.

Note 1: FMA(a, b, -a*b) calculates the error in a*b, which is actually pretty cool.

Note 2: One side effect of Note 1 is that x = a * b - a * b may not return zero if the compiler automatically generates FMA instructions.
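The two-digit decimal example and both notes can be reproduced with Python's standard `decimal` module. The sketch assumes that `Context(prec=2, rounding=ROUND_HALF_EVEN)` is a fair stand-in for the toy format and relies on `Decimal.fma`, which rounds only once. The variable names are illustrative, and this is not Xbox 360 code.

```python
from decimal import Decimal, Context, ROUND_HALF_EVEN

# Toy format from the text: decimal floating point with two significant digits.
ctx = Context(prec=2, rounding=ROUND_HALF_EVEN)
a, b, c = Decimal("81"), Decimal("29"), Decimal("41")

# FMA: 81 * 29 + 41 = 2390 exactly, rounded once -> 2.4E+3
fused = a.fma(b, c, context=ctx)

# Separate multiply and add: 2349 -> 2.3E+3, then 2300 + 41 = 2341 -> 2.3E+3
separate = ctx.add(ctx.multiply(a, b), c)

# Note 1: FMA(a, b, -a*b) recovers the rounding error of the product a*b.
p = ctx.multiply(a, b)                        # 2.3E+3 (the exact product is 2349)
err = a.fma(b, p.copy_negate(), context=ctx)  # 2349 - 2300 = 49, fits in two digits

# Note 2: a*b - a*b is 0 when both products are rounded the same way,
# but 49 when one product is fused into the subtraction (as in err above).
plain = ctx.subtract(ctx.multiply(a, b), ctx.multiply(a, b))

print(fused, separate, err)  # 2.4E+3 2.3E+3 49
```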

So FMA clearly gives more accurate results than separate multiply and add instructions. We won't go into the details, but we can agree that if we need to multiply two numbers and then add a third, FMA will be more accurate than the alternatives. In addition, an FMA instruction often has lower latency than a multiply instruction followed by an add instruction. On the Xbox 360 CPU, the latency and throughput of FMA were the same as those of fmul or fadd, so using an FMA instead of an fmul followed by a dependent fadd cut the latency in half.

FMA emulation

The Xbox 360 compiler generated FMA instructions all the time, both vector and scalar. We were not sure that the x64 processors we would choose would support these instructions, so it was critical to emulate them quickly and accurately. Our emulation of these instructions had to be perfect, because my earlier experience emulating floating-point math had taught me that “close enough” results led to characters falling through the floor, cars flying out of the world, and so on.

So what does it take to emulate FMA instructions perfectly if the x64 CPU does not support them?

Fortunately, the vast majority of floating-point calculations in games are done in float precision (32 bits), so I could happily use double-precision (64-bit) instructions in the FMA emulation.

It seems that emulating float-precision FMA instructions with double-precision math should be simple (narrator voice: but it isn't; floating-point is never simple). Float has 24 bits of precision, and double has 53. This means that if you convert the incoming floats to double (a lossless conversion), you can perform the multiplication without any error: storing the exact product needs only 48 bits of precision, and we have more than that, so all is well.
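The 24 + 24 = 48 bit argument can be checked empirically. This Python sketch assumes that packing through `struct`'s `'f'` format is an acceptable way to round a double to float32 (it rounds to nearest on the usual IEEE platforms), and uses `Fraction` to compare each double product with the mathematically exact one. The helper names and the random sampling range are illustrative choices.

```python
import random
import struct
from fractions import Fraction

def to_f32(x: float) -> float:
    """Round a double to the nearest float32 and widen it back to a double."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def product_is_exact(a: float, b: float) -> bool:
    """True if the double product a*b equals the mathematically exact product."""
    return Fraction(a * b) == Fraction(a) * Fraction(b)

rng = random.Random(360)  # fixed seed so the run is repeatable
samples = [(to_f32(rng.uniform(-1e6, 1e6)), to_f32(rng.uniform(-1e6, 1e6)))
           for _ in range(10_000)]

# float32 * float32: at most 24 + 24 = 48 significant bits, which fit in 53.
f32_products_exact = all(product_is_exact(a, b) for a, b in samples)

# double * double needs up to 106 bits, so it is generally rounded.
x = 0.1
f64_product_exact = product_is_exact(x, x)

print(f32_products_exact, f64_product_exact)  # True False
```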

Then we need to do the addition. It is enough to take the addend in float format, convert it to double, and add it to the result of the multiplication. Since no rounding happens during the multiplication, and rounding is performed only after the addition, this is completely sufficient to emulate FMA. Our logic is perfect. We can declare victory and go home.
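Here is that plan as a minimal Python sketch. Python floats are IEEE doubles, and the final narrowing to float32 is done by packing through `struct`'s `'f'` format, assumed here to round to nearest, ties to even, as it does on the usual IEEE platforms. `fma_f32_via_double` is a hypothetical name, and the inputs are assumed to already be float32 values. As the rest of the article explains, this version is not quite correct.

```python
import struct

def to_f32(x: float) -> float:
    """Round a double to the nearest float32 (ties to even) and widen it back."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def fma_f32_via_double(a: float, b: float, c: float) -> float:
    """Naive float32 FMA emulation (hypothetical name); a, b, c must be float32 values."""
    product = a * b       # exact: at most 48 significant bits fit in a double
    total = product + c   # rounding #1: the sum is rounded to double precision
    return to_f32(total)  # rounding #2: the double is rounded to float32

print(fma_f32_via_double(81.0, 29.0, 41.0))  # 2390.0 (every step is exact here)
```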

Victory was so close...

But it doesn't work. Or at least, it fails for some inputs. Take a moment to think about why that might be.

Hold music plays...

The failure happens because, by the definition of FMA, the multiplication and addition are performed at full precision, and only then is the result rounded to float precision. We almost managed to achieve that.

The multiplication happens without rounding, and rounding is performed after the addition. That is close to what we are trying to do. But the rounding after the addition is done at double precision. After that, we need to store the result at float precision, which means it gets rounded a second time.
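The second rounding can be observed with real binary floats, although the numbers are not pretty. In this Python sketch, `fma_f32_exact` is a hypothetical reference that computes a*b + c exactly with `Fraction` and rounds once to float32 (it assumes results in float32's normal range), while `fma_f32_via_double` is the naive emulation. The inputs were picked so that the exact result sits a hair (2**-70) below the midpoint between two adjacent floats: the double rounding removes the hair and lands exactly on the midpoint, and the float rounding then breaks that tie to even, in the wrong direction.

```python
import math
import struct
from fractions import Fraction

def to_f32(x: float) -> float:
    """Round a double to the nearest float32 (ties to even) and widen it back."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def fraction_to_f32(q: Fraction) -> float:
    """Round an exact rational to the nearest float32, ties to even, in one step.

    Sketch only: assumes q is zero or within float32's normal range.
    """
    if q == 0:
        return 0.0
    sign = -1.0 if q < 0 else 1.0
    q = abs(q)
    # Find the exponent e with 2**e <= q < 2**(e + 1).
    e = q.numerator.bit_length() - q.denominator.bit_length()
    if Fraction(2) ** e > q:
        e -= 1
    # Scale so the 24-bit significand is the integer part, then round once.
    # round() on a Fraction with no ndigits rounds half to even.
    n = round(q / Fraction(2) ** (e - 23))
    return sign * math.ldexp(n, e - 23)

def fma_f32_exact(a: float, b: float, c: float) -> float:
    """Hypothetical reference: exact a*b + c, rounded once to float32."""
    return fraction_to_f32(Fraction(a) * Fraction(b) + Fraction(c))

def fma_f32_via_double(a: float, b: float, c: float) -> float:
    """Naive emulation: exact product, sum rounded to double, then to float32."""
    return to_f32(a * b + c)

# All three inputs are exactly representable as float32.
a = 1 + 2**-23
b = (1 - 2**-23) * 2**-24
c = 1 + 2**-23
# Exact a*b + c = 1 + 2**-23 + 2**-24 - 2**-70, just below the midpoint
# between the adjacent floats 1 + 2**-23 and 1 + 2**-22.
exact = fma_f32_exact(a, b, c)          # 1 + 2**-23
emulated = fma_f32_via_double(a, b, c)  # 1 + 2**-22
print(exact == emulated)                # False
```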

Ugh. Double rounding.

This is hard to demonstrate clearly with binary numbers, so let's go back to our decimal floating-point formats, where single precision is two decimal digits and double precision is four digits. Imagine that we are calculating FMA(8.1e1, 2.9e1, 9.9e-1), or 81 * 29 + .99. The exact answer to this expression is 2349.99, or 2.34999e3. Rounded to single precision (two digits), that gives 2.3e3.

Now let's see what goes wrong when we try to emulate this calculation. When we multiply 81 and 29 at double precision, we get 2349. So far so good. Then we add .99 and get 2349.99. Everything is still fine. That result is rounded to double precision, and we get 2350 (2.350e3). Oops. We then round this to single precision and, following the IEEE rule of rounding ties to the nearest even value, we get 2400 (2.4e3).

This is the wrong answer. Its error is slightly larger than that of the correctly rounded result returned by the FMA instruction. You could argue that the problem lies in the IEEE round-half-to-even rule. However, whatever rounding rule you choose, there will always be some case where double rounding gives a result different from a true FMA.
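The decimal walkthrough can be replayed step by step with Python's `decimal` module. This sketch assumes two `Context` objects are a fair model of the toy formats: `single` with two significant digits and `double` with four, both rounding half to even. The names are illustrative.

```python
from decimal import Decimal, Context, ROUND_HALF_EVEN

# Toy formats from the text: "single" has two significant digits, "double" has four.
single = Context(prec=2, rounding=ROUND_HALF_EVEN)
double = Context(prec=4, rounding=ROUND_HALF_EVEN)
a, b, c = Decimal("81"), Decimal("29"), Decimal("0.99")

# True FMA: exact result 2349.99, rounded once to two digits.
true_fma = a.fma(b, c, context=single)   # 2.3E+3

# Emulation: exact product in "double", add in "double", then narrow to "single".
product = double.multiply(a, b)          # 2349 (exact in four digits)
total = double.add(product, c)           # 2349.99 -> 2350 (first rounding)
emulated = single.plus(total)            # 2350 is a tie; half-to-even gives 2.4E+3

print(true_fma, emulated)                # 2.3E+3 2.4E+3
```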

How did it all end?

I was not able to find a completely satisfying solution to this problem.

I left the Xbox team long before the Xbox One was released, and I haven't paid much attention to the console since then, so I don't know how they solved it. Modern x64 CPUs have FMA instructions that can emulate these operations perfectly. It may also be possible to use the x87 floating-point coprocessor to emulate FMA somehow; I don't remember what conclusion I reached when I looked into that. Or perhaps the developers simply decided that the results were close enough.