An introduction to Kubernetes for VMware users. Part 2. Practice

- Translation

- Tutorial

This is the second part of the introduction to Kubernetes for VMware users. Before moving on to the practical part, read the previous article (Part 1).

The easiest and fastest way to get to know Kubernetes without any special prerequisites is GCP (Google Cloud Platform). Just sign up for the free trial and start learning Kubernetes. To confirm registration, you need to provide bank card details, but GCP will not charge you anything unless you upgrade to a paid account. Create an account, and we will continue exploring the fascinating world of Kubernetes.

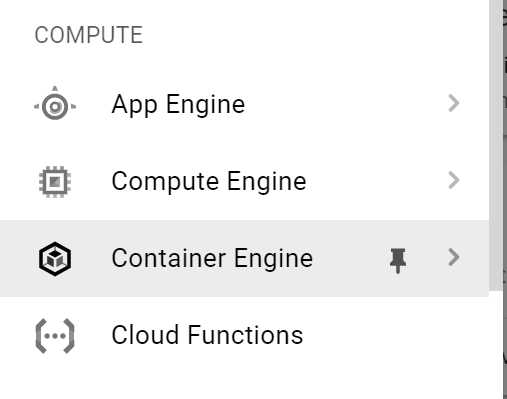

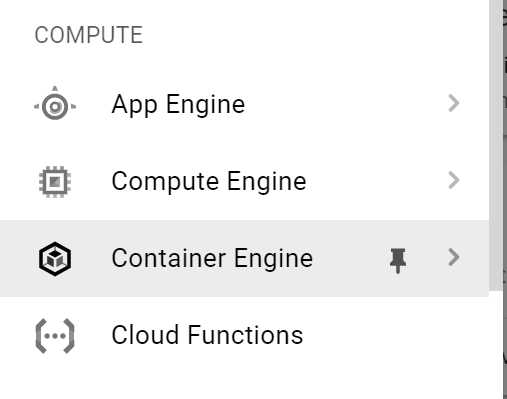


The first step is to open GKE (Google Kubernetes Engine). In the left-hand menu, select Container Engine (in newer versions of the console this item is called Kubernetes Engine).

Click Create cluster.

Fill in the fields:

- the cluster name;

- the zone;

- the Kubernetes version;

- the node image, etc.

The cluster will be created in a few minutes. When it is ready, click Connect. A connection command appears; copy it, because we will use it in the next step.
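For reference, the command in the Connect dialog generally has the form gcloud container clusters get-credentials CLUSTER --zone ZONE --project PROJECT. The minimal Python sketch below assembles it; the cluster, zone and project values are hypothetical placeholders, so use the ones from your own Connect dialog.

```python
import shlex


def get_credentials_command(cluster: str, zone: str, project: str) -> list[str]:
    """Build the gcloud command that fetches kubeconfig credentials for a GKE cluster."""
    return [
        "gcloud", "container", "clusters", "get-credentials", cluster,
        "--zone", zone,
        "--project", project,
    ]


if __name__ == "__main__":
    # Hypothetical values: replace them with those shown in your Connect dialog.
    cmd = get_credentials_command("demo-cluster", "us-central1-a", "my-project")
    print(shlex.join(cmd))
```

Keeping the command as an argument list means it could also be passed to subprocess.run(cmd, check=True) without any shell quoting concerns.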

The easiest way to connect to the cluster is through Google Cloud Shell.

In the upper-right corner, click the Cloud Shell icon.

After a few seconds, the shell will start on the same page. Paste the command you copied in the previous step.

Now let's look at the information about our cluster using the kubectl cluster-info command.

To get information about the nodes, enter kubectl get no -o wide (no is the short name for nodes). You will see a list of nodes with additional details for each of them.
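If you prefer to process the node list in a script, kubectl get no -o json returns the same data as JSON. A minimal Python sketch that extracts each node's addresses from the status.addresses field is below; the sample document is a trimmed, hypothetical example, not real output from this cluster.

```python
import json

# Hypothetical, trimmed `kubectl get no -o json` output; real output has many more fields.
SAMPLE_NODES_JSON = """
{"kind": "List", "items": [
  {"metadata": {"name": "gke-demo-default-pool-node-1"},
   "status": {"addresses": [
     {"type": "InternalIP", "address": "10.128.0.2"},
     {"type": "ExternalIP", "address": "203.0.113.11"}]}}
]}
"""


def node_addresses(nodes_json: str) -> dict[str, dict[str, str]]:
    """Map each node name to its addresses, keyed by address type (InternalIP, ExternalIP, ...)."""
    result = {}
    for node in json.loads(nodes_json).get("items", []):
        addresses = node.get("status", {}).get("addresses", [])
        result[node["metadata"]["name"]] = {a["type"]: a["address"] for a in addresses}
    return result


if __name__ == "__main__":
    for name, addrs in node_addresses(SAMPLE_NODES_JSON).items():
        print(name, addrs.get("InternalIP"), addrs.get("ExternalIP"))
```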

Next, let's list all pods running in our K8s cluster across every namespace. Enter kubectl get po -o wide --all-namespaces.
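The NODE and IP columns of that wide output can also be rebuilt from kubectl get po --all-namespaces -o json. A minimal sketch, assuming the standard pod fields metadata.namespace, spec.nodeName and status.podIP; the pod names, nodes and IPs in the sample are hypothetical.

```python
import json
from collections import defaultdict

# Hypothetical, trimmed `kubectl get po --all-namespaces -o json` output.
SAMPLE_PODS_JSON = """
{"kind": "List", "items": [
  {"metadata": {"name": "kube-dns-abc", "namespace": "kube-system"},
   "spec": {"nodeName": "node-1"},
   "status": {"phase": "Running", "podIP": "10.40.1.3"}},
  {"metadata": {"name": "web-v1-xyz", "namespace": "default"},
   "spec": {"nodeName": "node-2"},
   "status": {"phase": "Running", "podIP": "10.40.2.4"}}
]}
"""


def pods_by_namespace(pods_json: str) -> dict[str, list[tuple[str, str, str]]]:
    """Group (pod name, node name, pod IP) tuples by namespace."""
    grouped = defaultdict(list)
    for pod in json.loads(pods_json).get("items", []):
        meta = pod["metadata"]
        grouped[meta["namespace"]].append((
            meta["name"],
            pod.get("spec", {}).get("nodeName", ""),
            pod.get("status", {}).get("podIP", ""),
        ))
    return dict(grouped)


if __name__ == "__main__":
    for namespace, pods in pods_by_namespace(SAMPLE_PODS_JSON).items():
        for name, node, ip in pods:
            print(namespace, name, node, ip)
```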

Now let's connect to the cluster nodes to find out their network settings. To do this, open the main menu on the left again and select Compute Engine from the drop-down list. As you can see in the screenshot below, a K8s cluster is ultimately just a set of virtual machines.

Click SSH to start a session with the selected node. The session opens in a new browser window, so make sure pop-ups are not blocked in your browser.

Check which kernel and Linux distribution the node runs. To do this, type uname -a, and then cat /etc/lsb-release.
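/etc/lsb-release is a simple KEY=VALUE file, so it is easy to read from a script. The sketch below parses it in Python and uses platform.uname() for roughly the information uname -a prints; the sample contents are a hypothetical Ubuntu-style file, and the distribution on your nodes may differ.

```python
import os
import platform


def parse_lsb_release(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, as found in /etc/lsb-release, into a dict."""
    result = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        # Skip blank lines, comments and anything that is not KEY=VALUE.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        result[key.strip()] = value.strip().strip('"')
    return result


# Hypothetical example contents; the real file on a GKE node may differ.
SAMPLE_LSB_RELEASE = """\
DISTRIB_ID=Ubuntu
DISTRIB_RELEASE=16.04
DISTRIB_CODENAME=xenial
DISTRIB_DESCRIPTION="Ubuntu 16.04.3 LTS"
"""

if __name__ == "__main__":
    # System, node name, kernel release, version and machine, similar to `uname -a`.
    print(platform.uname())
    if os.path.exists("/etc/lsb-release"):
        with open("/etc/lsb-release") as f:
            print(parse_lsb_release(f.read()))
```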

Now let's check the network settings with ifconfig -a | more. See the cbr0 bridge interface? This is the bridge we showed in the network diagram above.

Here we also see the /24 network allocated to this node: 10.40.1.0/24. Our pods get their IP addresses from this range. Now you can match the network diagram above against the actual configuration on the nodes of the K8s cluster. Cool, right?
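The relationship between the node's /24 and its pods can be checked with Python's standard ipaddress module. The sketch uses the 10.40.1.0/24 range from this node; the pod IP in the usage line is hypothetical. The same range should also be recorded in the node object's spec.podCIDR field.

```python
import ipaddress

# Pod range shown on this node's cbr0 bridge in the article.
NODE_POD_CIDR = ipaddress.ip_network("10.40.1.0/24")


def in_node_pod_range(pod_ip: str, cidr: ipaddress.IPv4Network = NODE_POD_CIDR) -> bool:
    """Return True if pod_ip falls inside the node's pod CIDR."""
    return ipaddress.ip_address(pod_ip) in cidr


if __name__ == "__main__":
    print("total addresses:", NODE_POD_CIDR.num_addresses)              # 256
    print("usable host addresses:", len(list(NODE_POD_CIDR.hosts())))  # 254
    print(in_node_pod_range("10.40.1.17"))  # hypothetical pod IP; prints True
```

A /24 holds 256 addresses, 254 of which are usable host addresses; the cbr0 bridge itself typically takes one of them, so slightly fewer are left for pods.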

Now let's go back to the Google Cloud Shell window and deploy a test application. First, type git clone https://github.com/hanymichael/NSX-CNA-Lab.git.

This command clones one of my test applications from GitHub. Now change into the application's folder and type kubectl create -f . to create the objects defined in all the YAML files in that folder.
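When -f points at a directory, kubectl processes the files in it that have a .json, .yaml or .yml extension (subdirectories only with -R/--recursive). The Python sketch below lists the files that rule would pick up, which can be handy before running kubectl create -f . in an unfamiliar repository; it only mirrors the extension filter and does not validate the manifests.

```python
from pathlib import Path

# File extensions kubectl reads when -f is given a directory.
MANIFEST_SUFFIXES = {".json", ".yaml", ".yml"}


def manifest_files(directory: str, recursive: bool = False) -> list[Path]:
    """List files that `kubectl create -f <directory>` would consider, sorted by path."""
    root = Path(directory)
    candidates = root.rglob("*") if recursive else root.glob("*")
    return sorted(p for p in candidates if p.is_file() and p.suffix in MANIFEST_SUFFIXES)


if __name__ == "__main__":
    for path in manifest_files("."):
        print(path)
```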

Now let's list the pods and check their status. Initially they all show ContainerCreating; after a few seconds, the status changes to Running.
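Note that ContainerCreating is not a pod phase: while its containers are being created, a pod's status.phase is Pending, and kubectl displays the container's waiting reason instead. The sketch below approximates that display logic and checks whether every pod has reached the Running phase; it is a simplification of what kubectl actually does, and the sample pods are hypothetical.

```python
import json

# Hypothetical, trimmed `kubectl get po -o json` output: one pod starting, one running.
SAMPLE_PODS_JSON = """
{"kind": "List", "items": [
  {"metadata": {"name": "web-v1-aaa"},
   "status": {"phase": "Pending",
              "containerStatuses": [{"name": "web",
                                     "state": {"waiting": {"reason": "ContainerCreating"}}}]}},
  {"metadata": {"name": "web-v1-bbb"},
   "status": {"phase": "Running",
              "containerStatuses": [{"name": "web", "state": {"running": {}}}]}}
]}
"""


def display_status(pod: dict) -> str:
    """Roughly mimic kubectl's STATUS column: a container waiting reason, else the pod phase."""
    status = pod.get("status", {})
    for container in status.get("containerStatuses", []):
        waiting = container.get("state", {}).get("waiting")
        if waiting and waiting.get("reason"):
            return waiting["reason"]
    return status.get("phase", "Unknown")


def all_running(pods_json: str) -> bool:
    """True if there is at least one pod and every pod is in the Running phase."""
    pods = json.loads(pods_json).get("items", [])
    return bool(pods) and all(p.get("status", {}).get("phase") == "Running" for p in pods)


if __name__ == "__main__":
    for pod in json.loads(SAMPLE_PODS_JSON)["items"]:
        print(pod["metadata"]["name"], display_status(pod))
    print("all running:", all_running(SAMPLE_PODS_JSON))
```

Interactively, kubectl get po -w (--watch) shows the same transition as it happens.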

There are two Deployments of my web application in K8s, each with 4 replicas. Now let's check the Services associated with these Deployments using kubectl get svc.
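One way to confirm the replica counts is to read them from kubectl get deploy -o json: spec.replicas is the desired count and status.readyReplicas the number of ready pods (the field is omitted while it is zero). In the sketch below the deployment names sphinx-v1 and sphinx-v2 are assumptions based on the host names used in this article, not names taken from the repository.

```python
import json

# Hypothetical, trimmed `kubectl get deploy -o json` output for two deployments.
SAMPLE_DEPLOYMENTS_JSON = """
{"kind": "List", "items": [
  {"metadata": {"name": "sphinx-v1"}, "spec": {"replicas": 4}, "status": {"readyReplicas": 4}},
  {"metadata": {"name": "sphinx-v2"}, "spec": {"replicas": 4}, "status": {"readyReplicas": 4}}
]}
"""


def replica_summary(deployments_json: str) -> dict[str, tuple[int, int]]:
    """Map each deployment name to (desired replicas, ready replicas)."""
    summary = {}
    for deploy in json.loads(deployments_json).get("items", []):
        desired = deploy.get("spec", {}).get("replicas", 1)       # Deployment default is 1
        ready = deploy.get("status", {}).get("readyReplicas", 0)  # omitted when zero
        summary[deploy["metadata"]["name"]] = (desired, ready)
    return summary


if __name__ == "__main__":
    summary = replica_summary(SAMPLE_DEPLOYMENTS_JSON)
    for name, (desired, ready) in summary.items():
        print(f"{name}: {ready}/{desired} ready")
    print("total desired pods:", sum(d for d, _ in summary.values()))  # 8
```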

The last thing to check is the Ingress associated with this application. The screenshot above shows that we reach the application through an external IP address. If we open the load balancing section of the Google Cloud console, we will see the load balancer created for this Ingress.
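The external address of the Ingress and the host names it routes can also be read from kubectl get ingress -o json: the address sits under status.loadBalancer.ingress and the host-based rules under spec.rules. The ingress name, host rules and IP (from the 203.0.113.0/24 documentation range) in the sample below are hypothetical.

```python
import json

# Hypothetical, trimmed `kubectl get ingress -o json` output.
SAMPLE_INGRESS_JSON = """
{"kind": "List", "items": [
  {"metadata": {"name": "sphinx-ingress"},
   "spec": {"rules": [{"host": "sphinx-v1.esxcloud.net"},
                      {"host": "sphinx-v2.esxcloud.net"}]},
   "status": {"loadBalancer": {"ingress": [{"ip": "203.0.113.10"}]}}}
]}
"""


def ingress_endpoints(ingress_json: str) -> dict[str, tuple[list[str], list[str]]]:
    """Map each Ingress name to (host names from its rules, load balancer addresses)."""
    result = {}
    for ing in json.loads(ingress_json).get("items", []):
        hosts = [r["host"] for r in ing.get("spec", {}).get("rules", []) if "host" in r]
        lb_entries = ing.get("status", {}).get("loadBalancer", {}).get("ingress", [])
        # A load balancer entry carries either an IP or a hostname.
        addresses = [e.get("ip") or e.get("hostname", "") for e in lb_entries]
        result[ing["metadata"]["name"]] = (hosts, addresses)
    return result


if __name__ == "__main__":
    for name, (hosts, addresses) in ingress_endpoints(SAMPLE_INGRESS_JSON).items():
        print(name, hosts, addresses)
```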

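If you want to access the application itself, map its host names to the Ingress external IP in your local hosts file. Hosts files do not support wildcards such as *.esxcloud.net, so add a separate entry for each name: sphinx-v1.esxcloud.net and sphinx-v2.esxcloud.net.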
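Because hosts files match exact names only, each host name needs its own line pointing at the Ingress external IP. A small Python helper that formats those lines is sketched below; 203.0.113.10 is a hypothetical placeholder for the external IP of your own Ingress. On Linux and macOS the file is /etc/hosts; on Windows it is C:\Windows\System32\drivers\etc\hosts.

```python
import ipaddress


def hosts_file_lines(ip: str, hostnames: list[str]) -> str:
    """Format hosts-file entries that map every host name to the same IP address."""
    ipaddress.ip_address(ip)  # raises ValueError if ip is not a valid address
    for name in hostnames:
        if "*" in name:
            raise ValueError(f"hosts files do not support wildcards: {name!r}")
    return "".join(f"{ip}\t{name}\n" for name in hostnames)


if __name__ == "__main__":
    # 203.0.113.10 is a placeholder; use the external IP of your Ingress instead.
    print(hosts_file_lines("203.0.113.10",
                           ["sphinx-v1.esxcloud.net", "sphinx-v2.esxcloud.net"]), end="")
```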

Now open a new browser tab and go to sphinx-v1.esxcloud.net and sphinx-v2.esxcloud.net. You will notice two things:

- each link shows its own version of the application;

- when you refresh the page, the container's IP address changes, which shows that the load balancer is working.

Now you can map the application's configuration onto the diagram below and see how it all fits together in Kubernetes.

Conclusion

We have seen that Kubernetes, like any distributed system, has many similarities to (and some differences from) vSphere, which we know well as VMware users. I tried to explain the basic concepts by comparing K8s and vSphere. I hope this helps you get up to speed quickly with this incredibly powerful cloud platform, which is already conquering the world.

In the next posts, I will dive deeper into our enterprise solution for running Kubernetes in the data center. Stay tuned!

P.S. You can try Kubernetes in our KuberLine service by taking a test drive.