How to withstand peak system loads: our large-scale preparations for Black Friday

Hello, Habr!

During Black Friday 2017, the load grew almost one and a half times, and our servers were running at the limit of their capacity. Over the following year our customer base grew significantly, and it became clear that without thorough advance preparation the platform might simply not withstand the loads of 2018.

We set ourselves the most ambitious goal possible: to be fully prepared for any burst of activity, however powerful. To get there, we began bringing new capacity online well in advance, over the course of the year.

Our CTO Andrei Chizh (chizh_andrey) explains how we prepared for Black Friday 2018, what measures we took to avoid outages, and, of course, what all this careful preparation achieved.

Today I want to talk about our preparations for Black Friday 2018. Why now, when most of the major sales are already behind us? Because we started preparing about a year ahead of these large-scale events and found the optimal approach by trial and error. We recommend that you get ready for peak seasons in advance and head off the failures that tend to surface at the most inopportune moment.

This material will be useful to anyone who wants to get the maximum return from such promotions, since the technical side matters just as much as the marketing side.

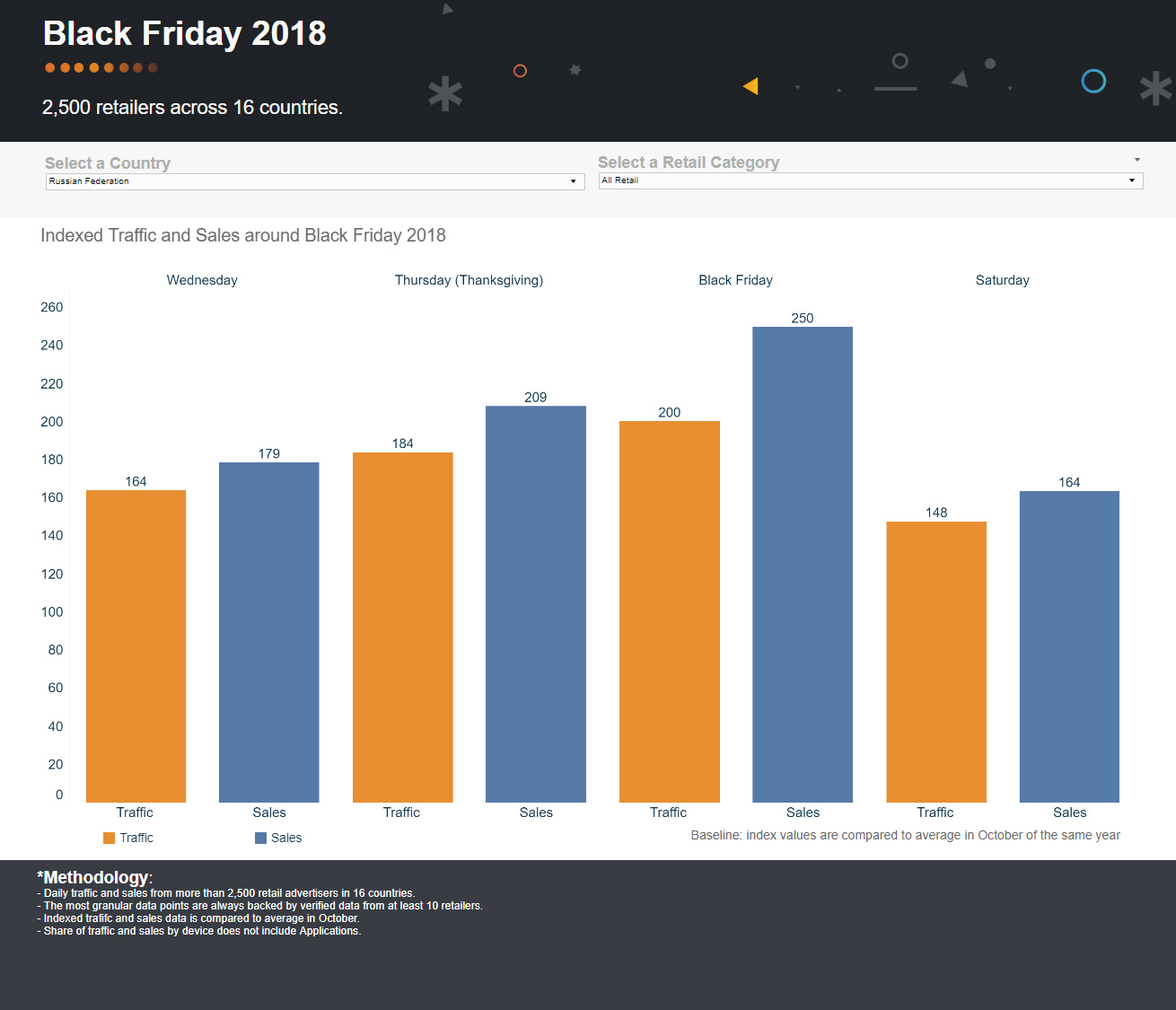

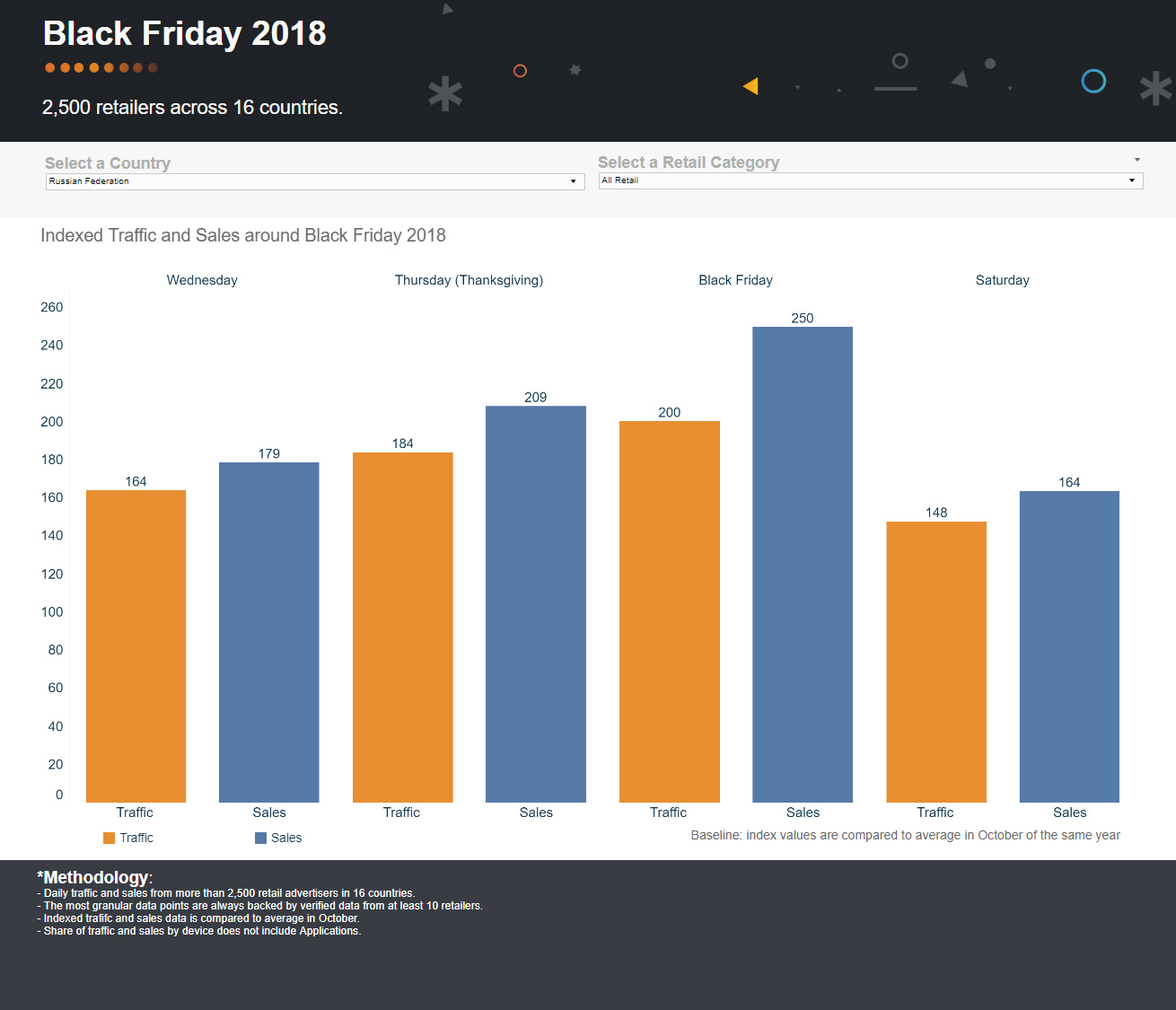

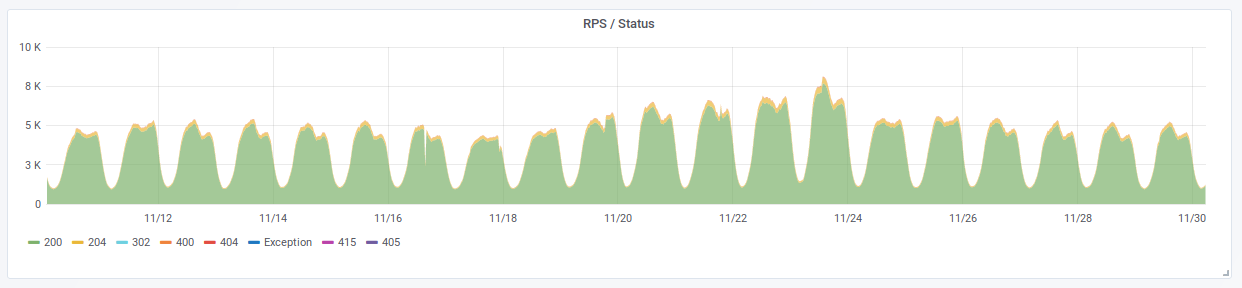

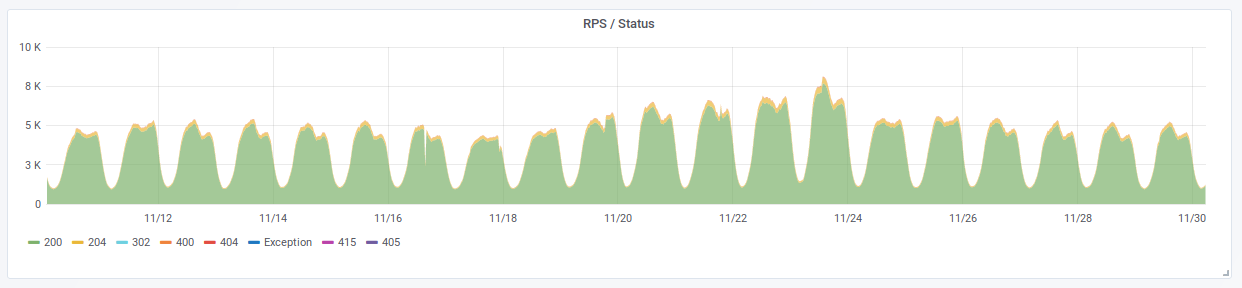

Traffic patterns during major sales

Contrary to popular belief, Black Friday is not just one day a year but almost a whole week: the first discount offers arrive 7-8 days before the sale. Website traffic grows steadily all week, peaks on Friday, and drops fairly sharply on Saturday back to the stores' usual levels.

This matters because during this period online stores become especially sensitive to any slowdowns in the system. Our email newsletters also saw a significant increase in the number of messages sent.

It is strategically important for us to get through Black Friday without outages, because key functionality of our clients' websites and mailing lists depends on the platform, namely:

- tracking visitors and serving product recommendations;

- serving related assets (for example, the design elements of recommendation blocks, such as arrows, logos, icons and other visual elements);

- serving product images of the right size (for this we have ImageResizer, a subsystem that downloads an image from the store's server, scales it down to the required size and, through caching servers, delivers an image of the right size for each product in each recommendation block).
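The resizing step in the last item can be illustrated with a minimal sketch. It assumes the resizer fits each image inside a requested bounding box while keeping the aspect ratio and never upscales; `fit_within` and `ResizeCache` are hypothetical names, and the in-memory dictionary only stands in for the real caching servers, which the article does not describe.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple


def fit_within(width: int, height: int, max_w: int, max_h: int) -> Tuple[int, int]:
    """Scale (width, height) to fit inside max_w x max_h, keeping the aspect ratio.

    Images that already fit are returned unchanged (never upscaled).
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    scale = min(max_w / width, max_h / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))


@dataclass
class ResizeCache:
    """Hypothetical stand-in for the caching layer in front of ImageResizer."""

    # Downloads the original from the store and resizes it (assumed callback).
    fetch_and_resize: Callable[[str, int, int], bytes]
    _store: Dict[Tuple[str, int, int], bytes] = field(default_factory=dict)
    misses: int = 0

    def get(self, url: str, max_w: int, max_h: int) -> bytes:
        key = (url, max_w, max_h)
        if key not in self._store:
            # Only a cache miss costs a download and a resize.
            self.misses += 1
            self._store[key] = self.fetch_and_resize(url, max_w, max_h)
        return self._store[key]
```

Keying the cache on (URL, width, height) means each size of each product image is resized only once, however many recommendation blocks display it.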

In the end, during Black Friday 2018 the load on the service grew by 40%, and the number of events that the Retail Rocket system tracks and processes on online store websites rose from 5 to 8 thousand requests per second. Because we had prepared for much heavier loads, we handled this surge easily.

General preparation

Black Friday is a hot time for all of retail, and for e-commerce in particular. The number of users and their activity grow several-fold, so, as always, we prepared thoroughly for this busy period. Add to that the fact that the online stores connected to us are not only in Russia but also in Europe, where the hype is even bigger, and you get more drama than a Brazilian soap opera. What needs to be done to be fully prepared for increased loads?

Working with servers

First, we had to figure out exactly what we lacked to increase server capacity. As early as August we started ordering new servers specifically for Black Friday, adding 10 machines in total. By November they were all fully in production.

At the same time, some of the build machines were reinstalled to serve as Application servers. We prepared them for both roles right away, serving recommendations and running the ImageResizer service, so that each one could take on either role depending on the type of load. Normally, Application and ImageResizer servers have clearly separated functions: the former serve recommendations, while the latter deliver images for emails and for recommendation blocks on online stores' websites. In preparation for Black Friday, we decided to make all of these servers dual-purpose so that traffic could be balanced between them depending on the type of load.
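Balancing a shared pool of dual-purpose servers between the two roles could look like the sketch below. It assumes the pool is split in proportion to each role's current request rate, with at least one server always kept per role; `assign_roles`, the role names and the load figures are illustrative, not the platform's actual balancer.

```python
from typing import Dict, List

ROLES = ("recommendations", "image_resizer")


def assign_roles(servers: List[str], load: Dict[str, float]) -> Dict[str, List[str]]:
    """Split dual-purpose servers between roles in proportion to their load.

    `load` maps a role name to its current request rate (hypothetical metric).
    Each role keeps at least one server so neither service goes dark.
    """
    if len(servers) < len(ROLES):
        raise ValueError("need at least one server per role")
    total = sum(load.get(role, 0.0) for role in ROLES)
    # With no load data at all, fall back to an even split.
    share = load.get("recommendations", 0.0) / total if total else 0.5
    n_rec = round(share * len(servers))
    n_rec = min(max(n_rec, 1), len(servers) - 1)
    return {"recommendations": servers[:n_rec], "image_resizer": servers[n_rec:]}
```

Recomputing the split periodically from live metrics would let a server move from resizing images to serving recommendations (or back) as the traffic mix shifts.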

Then we added two large servers for Apache Kafka, bringing the cluster to 5 powerful machines. Unfortunately, things did not go as smoothly as we would have liked: while syncing data, the two new machines consumed the entire network bandwidth, and we had to urgently work out how to add them quickly and without putting the rest of the infrastructure at risk. Our administrators valiantly sacrificed a weekend to resolve this.
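The article does not say how the bandwidth problem was solved. A common remedy is to throttle the sync so it cannot saturate the link; Kafka itself supports replication throttling during partition reassignment (the `--throttle` option of `kafka-reassign-partitions.sh`). The sketch below only illustrates the general token-bucket idea in Python; it is not Kafka's implementation, and `TokenBucket` is a hypothetical helper.

```python
import time
from typing import Callable


class TokenBucket:
    """Caps average throughput at `rate` bytes/s, allowing bursts up to `capacity` bytes."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start with a full bucket
        self.clock = clock
        self.last = clock()

    def _refill(self) -> None:
        # Add tokens for the time elapsed since the last call, up to capacity.
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def try_consume(self, n: float) -> bool:
        """Take n tokens if available; return False if the caller should wait."""
        self._refill()
        if self.tokens >= n:
            self.tokens -= n
            return True
        return False
```

A sync job would call `try_consume(len(chunk))` before sending each chunk and sleep briefly whenever it returns False, so the copy never uses more than `rate` bytes per second of the link on average.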

Working with data

Besides adding servers, we decided to optimize how files are served to ease the load, and a big step for us was moving the static files. All static files previously hosted on our own servers were moved to Amazon S3 + CloudFront. We had wanted to do this for a long time, since the load on the servers was close to its limit, and now we had an excellent reason.
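Once static files live in S3 behind CloudFront, the pages and widgets that reference them have to point at the CDN instead of the old origin. A minimal sketch of such a rewrite, assuming a single origin host; both hostnames below are hypothetical placeholders, since the article gives neither.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical hostnames: the article does not name the real ones.
ORIGIN_HOST = "static.example.com"
CDN_HOST = "d1234example.cloudfront.net"


def to_cdn(url: str) -> str:
    """Point a static-asset URL at the CDN instead of our own servers.

    Path, query string and fragment are preserved; URLs for any other
    host are returned unchanged.
    """
    parts = urlsplit(url)
    if parts.netloc != ORIGIN_HOST:
        return url
    return urlunsplit(("https", CDN_HOST, parts.path, parts.query, parts.fragment))
```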

A week before Black Friday, we increased the image cache lifetime to 3 days, so that if ImageResizer went down, previously cached images would still be served from the CDN. This also reduced the load on our servers: the longer an image stays cached, the less often we have to spend resources on resizing it.
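A 3-day lifetime works out to 3 × 24 × 3600 = 259 200 seconds. The article does not say whether the TTL was set through response headers or in the CDN configuration; the sketch below assumes standard HTTP `Cache-Control` headers, where `s-maxage` governs shared caches such as CloudFront (within the distribution's TTL limits) and `max-age` governs browsers. `image_cache_headers` is a hypothetical helper.

```python
from datetime import timedelta
from typing import Dict

# 3 days, as in the article: 3 * 24 * 3600 = 259200 seconds.
IMAGE_TTL = timedelta(days=3)


def image_cache_headers(ttl: timedelta = IMAGE_TTL) -> Dict[str, str]:
    """HTTP caching headers for a resized image.

    max-age applies to browsers; s-maxage applies to shared caches (the CDN),
    which can serve the image for the whole TTL without contacting the origin.
    """
    seconds = int(ttl.total_seconds())
    return {"Cache-Control": f"public, max-age={seconds}, s-maxage={seconds}"}
```

While an image is still within its TTL, the CDN answers from its cache, so an ImageResizer outage stays invisible for images that were already requested.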

Last but not least: 5 days before Black Friday we declared a moratorium on deploying any new functionality and on any infrastructure work, so that all attention was focused on coping with the increased load.
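A moratorium like this can also be enforced mechanically, for example by a check in the deployment pipeline. The sketch assumes Black Friday is the day after US Thanksgiving (the fourth Thursday of November) and that the freeze runs from 5 days before through Black Friday itself; the article does not say when the moratorium was lifted, and `deploys_frozen` is a hypothetical helper.

```python
from datetime import date, timedelta


def black_friday(year: int) -> date:
    """The day after US Thanksgiving, which is the fourth Thursday of November."""
    nov1 = date(year, 11, 1)
    # weekday(): Monday == 0, so Thursday == 3.
    first_thursday = nov1 + timedelta(days=(3 - nov1.weekday()) % 7)
    return first_thursday + timedelta(weeks=3, days=1)


def deploys_frozen(today: date, freeze_days: int = 5) -> bool:
    """True inside the (assumed) freeze window: freeze_days before Black Friday through Black Friday."""
    bf = black_friday(today.year)
    return bf - timedelta(days=freeze_days) <= today <= bf
```

A CI job could call `deploys_frozen(date.today())` and refuse to deploy when it returns True.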

Emergency response plans

No matter how good the preparation, failures are always possible, so we developed 3 response plans for critical situations:

- load reduction;

- disabling some services;

- complete shutdown of the service.

Plan A: load reduction. To be triggered if a surge in load pushed our servers' response times beyond acceptable limits. For this case we prepared mechanisms to reduce the load gradually by switching part of the traffic to Amazon servers that would simply answer every request with “200 OK” and an empty body. We understood that this degrades the quality of the service, but when the choice is between the service not working at all and not showing recommendations to about 10% of traffic, the answer is obvious.
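The article says traffic would be moved to the stub gradually but not how it would be split. One option, shown here as an assumption, is to hash a visitor identifier into 100 buckets and send the lowest `shed_percent` buckets to the stub. Because a visitor always lands in the same bucket, raising the percentage only adds visitors to the stub. `shed_to_stub` and `stub_response` are hypothetical names; in practice such a split would more likely live in a load balancer or in DNS weights than in application code.

```python
import hashlib
from typing import Dict, Tuple


def shed_to_stub(visitor_id: str, shed_percent: int) -> bool:
    """Decide deterministically whether this visitor is served by the stub."""
    digest = hashlib.sha256(visitor_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket 0..99
    return bucket < shed_percent


def stub_response() -> Tuple[int, Dict[str, str], bytes]:
    """What the stub servers return: 200 OK with an empty body."""
    return 200, {"Content-Length": "0"}, b""
```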

Plan B: disabling services. This implied partial degradation of the service, for example, slowing down the calculation of personal recommendations to offload some databases and network links. Normally, recommendations are calculated in real time, creating a personalized version of the online store for each visitor; under increased load, slowing this down lets the other core services keep working.
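Slowing down recommendation updates under load can be expressed as a delay that grows with utilization. The threshold, the linear ramp and the 300-second ceiling below are illustrative assumptions; the article only says the calculation speed would be reduced. `recalc_delay_seconds` and the load-ratio metric are hypothetical.

```python
def recalc_delay_seconds(load_ratio: float, threshold: float = 0.8,
                         max_delay: float = 300.0) -> float:
    """How long to postpone recomputing a visitor's personal recommendations.

    load_ratio is current load divided by capacity. At or below the threshold,
    recommendations stay real-time (delay 0); above it, the delay grows
    linearly and reaches max_delay at full capacity.
    """
    if not 0.0 <= threshold < 1.0:
        raise ValueError("threshold must be in [0, 1)")
    if load_ratio <= threshold:
        return 0.0
    fraction = min((load_ratio - threshold) / (1.0 - threshold), 1.0)
    return fraction * max_delay
```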

Plan C: for Armageddon. In case of a complete system failure, we prepared a plan that would safely disconnect us from our clients: shoppers would simply stop seeing recommendations, while the online stores themselves would keep working normally. To do this, we would reset our integration file so that new users stopped interacting with the service. In other words, we would disable our main tracking code, the service would stop collecting data and calculating recommendations, and the user would simply see a page without recommendation blocks. For users who had already received the integration file, we provided the option of switching DNS records to Amazon, where a 200 OK stub would answer.
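Resetting the integration file could work like the sketch below: the same URL keeps answering 200 OK, but with an empty script, so store pages load normally and simply never contact the service. `integration_file` and the `kill_switch` flag are hypothetical; the article does not describe how the file is actually served.

```python
from typing import Dict, Tuple

Response = Tuple[int, Dict[str, str], bytes]


def integration_file(kill_switch: bool, full_script: bytes) -> Response:
    """Serve the store integration (tracking) script, or an empty one in an emergency."""
    headers = {"Content-Type": "application/javascript"}
    if kill_switch:
        # An empty script is valid JavaScript that does nothing: no tracking,
        # no recommendation requests, and no errors on the store's page.
        # no-cache makes browsers revalidate, so the real script is picked up
        # as soon as it is restored.
        headers["Cache-Control"] = "no-cache"
        return 200, headers, b""
    return 200, headers, full_script
```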

Summary

We handled the entire load without even needing the additional build machines, and thanks to the advance preparation we did not need any of the response plans either. Still, all this work has given us invaluable experience that will help us cope with even the most unexpected and massive traffic spikes.

As in 2017, the load on the service grew by 40%, and the number of users in online stores during Black Friday grew by 60%. All the difficulties and mistakes surfaced during the preparation period, which spared us and our clients from unpleasant surprises.

How do you feel about Black Friday? How do you prepare for peak loads?