Where do photos come from for testing face recognition systems?

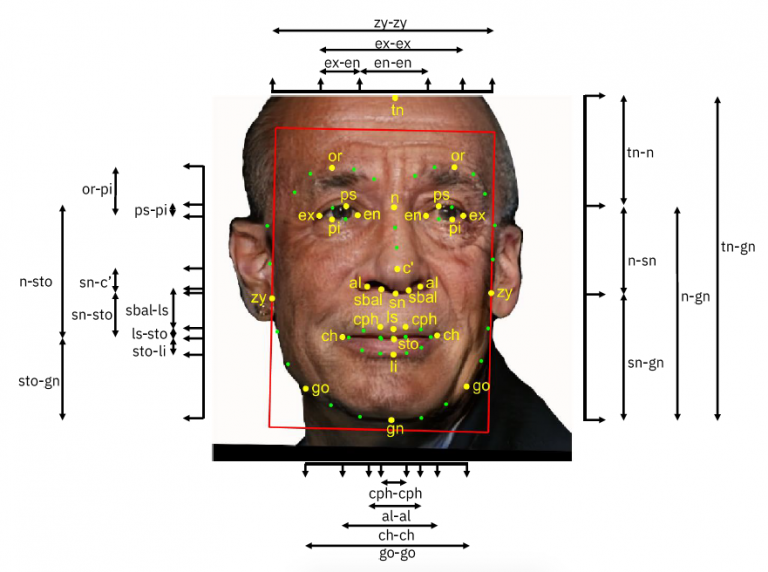

Annotated photo from IBM's Diversity in Faces dataset

Recently, IBM was criticized for using publicly available photos from the photo-hosting site Flickr, and from other sites where users post their pictures, to train neural networks without asking permission. Formally, everything is legal, since all the photos are published under a Creative Commons license, but people feel uncomfortable that AI learns from their faces. Some did not even know that they had been photographed. As you know, photographing a person in a public place does not require their permission.

According to media reports, IBM used approximately 1 million private photos from Flickr to train its face recognition system. It later turned out that IBM did not actually copy the photos from Flickr itself: the images are part of YFCC100M, a dataset of 99.2 million photos available for training neural networks. That dataset was compiled by Yahoo, the former owner of Flickr.

It turns out that the IBM story is just the tip of the iceberg. The company merely happened to be the one that took the heat; in fact, user photos have long been used to train a variety of systems, and this has become common practice. “Our study showed that the US government, researchers and corporations used images of immigrants, abused children, and dead people to test their facial recognition systems,” writes Slate. The publication emphasizes that even government agencies such as the National Institute of Standards and Technology (NIST) engage in this practice.

In particular, NIST runs the Face Recognition Vendor Test (FRVT) program, a standardized test of face recognition systems developed by third-party companies. The program evaluates all systems in the same way, so they can be compared objectively. In some cases, cash prizes of up to $25,000 are awarded for winning the competition. But even without a monetary reward, a high score in the NIST test is a powerful driver of commercial success for the developer: potential customers immediately take notice of the system, and the A+ rating can be mentioned in press releases and promotional materials.

For its evaluations, NIST uses large datasets of face photographs taken at different angles and in different lighting conditions.

Slate's investigation revealed that the NIST datasets include the following photographs:

- photographs of children used in child pornography;

- photographs of US visa applicants, especially from Mexico;

- photographs of people who were arrested and have since died;

- photographs of citizens detained on suspicion of committing crimes.

Many pictures were taken in public places by employees of the Department of Homeland Security (DHS). While photographing passers-by, the DHS employees posed as tourists taking pictures of their surroundings.

NIST datasets contain millions of images of people. Since the data was collected in public places, literally anyone could be in this database. NIST actively distributes its datasets, allowing anyone to download, store, and use these photos to develop face recognition systems (images of child exploitation are not published). It is impossible to say how many commercial systems use this data, but numerous scientific projects certainly do, writes Slate.

In a comment for the publication, a NIST representative said that the FRVT datasets are assembled by other government organizations for their own purposes, and that this also applies to the dataset with photos of children. NIST uses this data in strict accordance with the law and existing regulations. He confirmed that the dataset of child pornography images is in fact used to test commercial products, but said the children in it are anonymous: their names and places of residence are not given. NIST employees do not view these photos, which are stored on DHS servers.

The dataset with photos of children has been in use since at least 2016. According to the documentation for developers, it includes “photographs of children from an infant to a teenager,” where most photographs show “coercion, violence, and sexual activity”. Faces in these images are considered particularly difficult to recognize because of the greater variability in pose, context, and so on.

This dataset is probably used to train and test systems for automatically filtering obscene content.

Journalists also note the “bias” of the Multiple Encounter Dataset. Although Black people make up only 12.6% of the US population, they account for 47.5% of this database of photographs of criminals, which is why an AI trained on it can also learn that bias and become racist.
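To put the two percentages in perspective, here is a minimal sketch that computes how over-represented a group is in a dataset relative to its share of the population. The function name `representation_ratio` and the constants are illustrative assumptions; only the two percentages come from the article, and the calculation is plain arithmetic, not a method used by Slate or NIST.

```python
def representation_ratio(dataset_share: float, population_share: float) -> float:
    """Return how many times larger a group's share of a dataset is
    than its share of the general population (1.0 means parity)."""
    if population_share <= 0:
        raise ValueError("population_share must be positive")
    return dataset_share / population_share


# Figures quoted in the article (hypothetical constant names).
POPULATION_SHARE = 0.126  # share of the US population
DATASET_SHARE = 0.475     # share of the Multiple Encounter Dataset

ratio = representation_ratio(DATASET_SHARE, POPULATION_SHARE)
print(f"Over-representation factor: {ratio:.2f}")
```

A factor of about 3.8 means the group appears in the dataset almost four times as often as parity with the population would suggest, which is the kind of skew a model can absorb during training.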