Typical errors when working with PostgreSQL. Part 2

We continue to publish videos and transcripts of the best reports from the PGConf.Russia 2019 conference . In the first part of the report Ivan Frolkova it was about inconsistent naming of constraints, as to where best to focus the logic - in the database or application. In this part, you will find parsing error handling, concurrent access, non-cancellable operations, CTE and JSON.

I will tell such a story. Our client says: “The database is working slowly, and our application is engaged in serving the population. We are afraid that they will raise us here for forks. ” It turned out that they had a lot of processes in idle in transaction state. The application started the transaction, does nothing, but the transaction does not complete. If you interact with some external services, then, in principle, this is a normal situation. Another thing is that if your idle in transaction state lasts a long time (it’s already suspicious for more than a minute), then this is bad because PostgreSQL really does not like long transactions: VACUUM will not be able to clear all the lines that it could see, and hanging for a long time transaction effectively blocks VACUUM. Tables begin to swell, indexes are becoming less and less effective.

In this case, people did not write requests correctly and received Cartesian products - such requests were completed for several days. Well, the user, he will press the button, wait for the result and, if there is no result, press the button again.

But this did not explain why they have so many processes in idle in transaction . And they appeared in the following situation: the application crawls into the database, starts the transaction, crawls onto some external service, gets an error there, and then everything just crumbles, we print to the stack trace log , and we calm down on this. The connection remains abandoned, hanging and interfering.

What to do about it? First, you must always handle errors. If an error arrives to you, please do not ignore it. It’s good if PostgreSQL lost the connection: it rolls back the transaction, we survive. On this I will stop. Well, if there is a code that you don’t have time to edit at all, then we still have max idle in transaction- it can be delivered, and it will simply kick out inactive transactions.

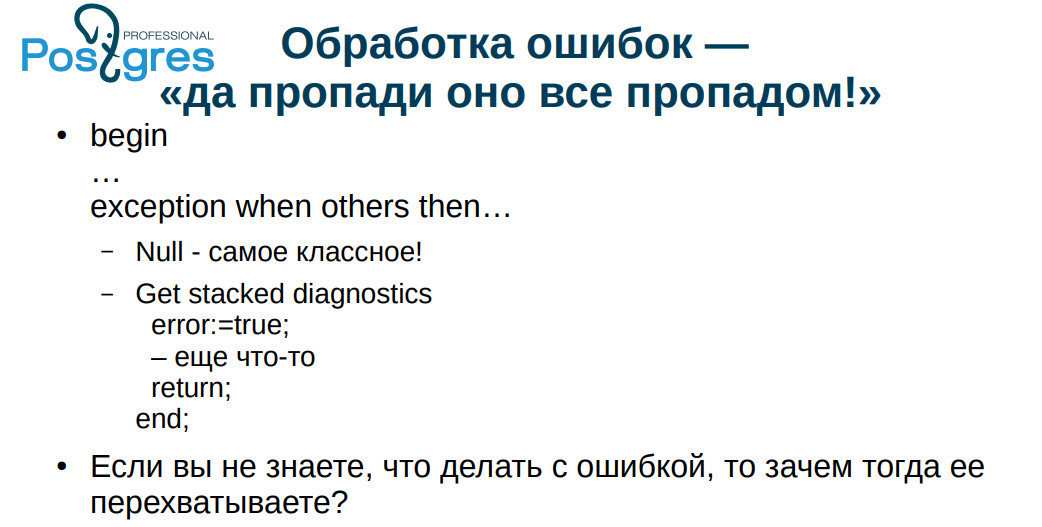

A typical case of error handling is: EXCEPTION WHEN OTHERS THAN NULL. Once we were arguing with a colleague about terminology. I said that it translates as "burn it all with a blue flame," and he means "it’s all gone to waste." If something bad happened with us, then, even if everything scolded the log, it is still better than complete silence - like here.

If you do not know what to do with the error, then do not intercept it. A very common practice: they caught an error, logged it and ran on as if nothing had happened. If, again, you engage in money transactions and you have an error that you ignored, the results may be unpredictable. In the 90s they could, for example, be taken out into the forest in the trunk. Now times have become softer, but also not very pleasant.

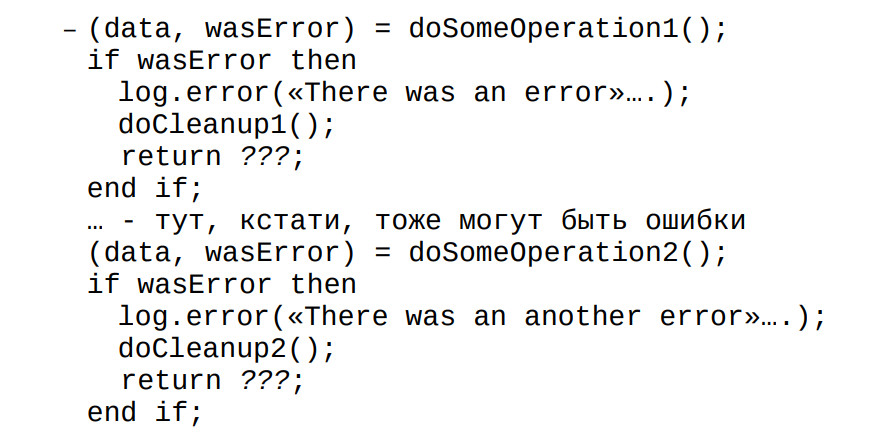

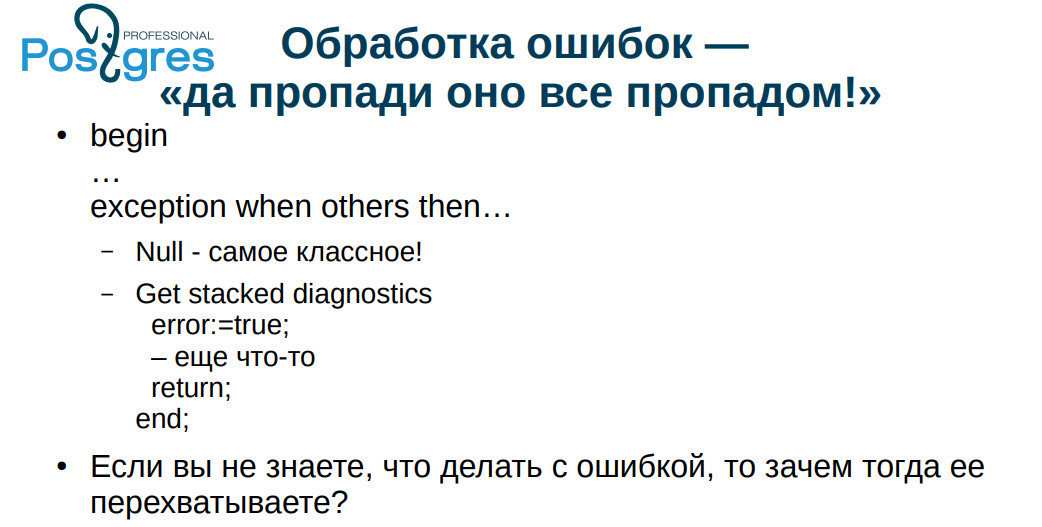

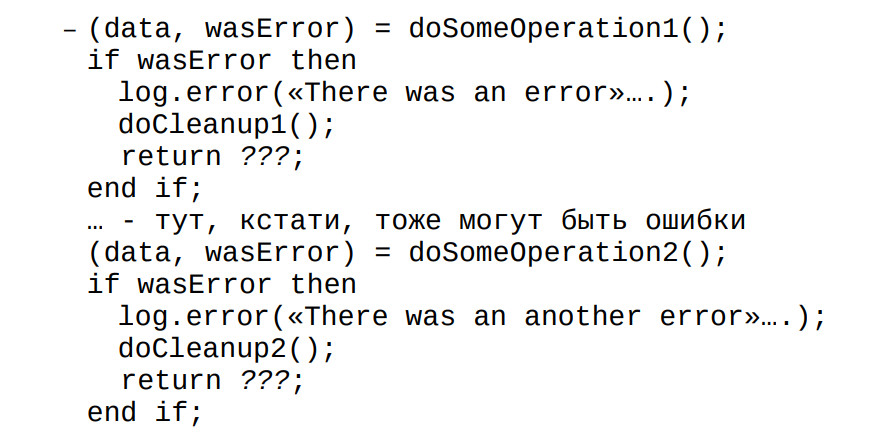

If we do the operation on the client, then, usually, we return the value: everything went either successfully or unsuccessfully. And we process each error. I saw people specifically writing plpgsql code, where they intercepted the error, wrote to the log that, they say, yes, there was an error and rather rude, they inserted their message text. But SQLSTATE did not return. This is always done, so if they forgot to check something, then they started having problems.

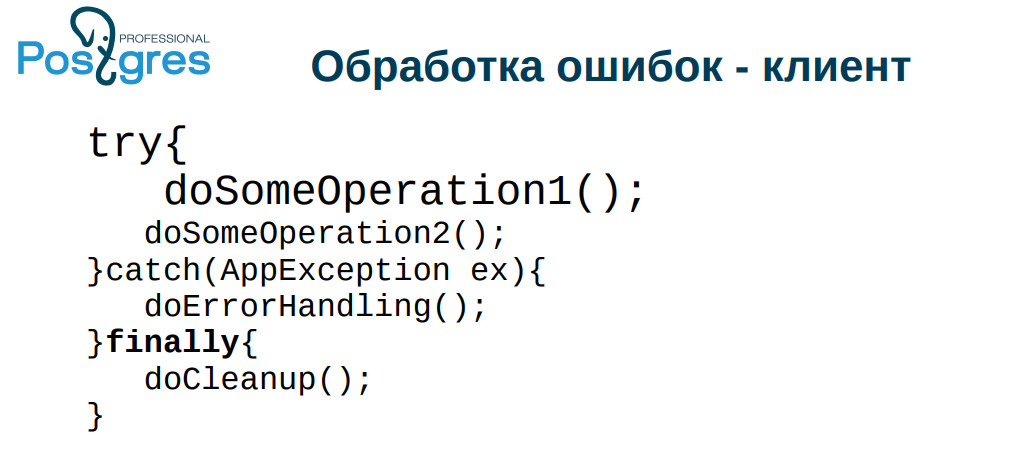

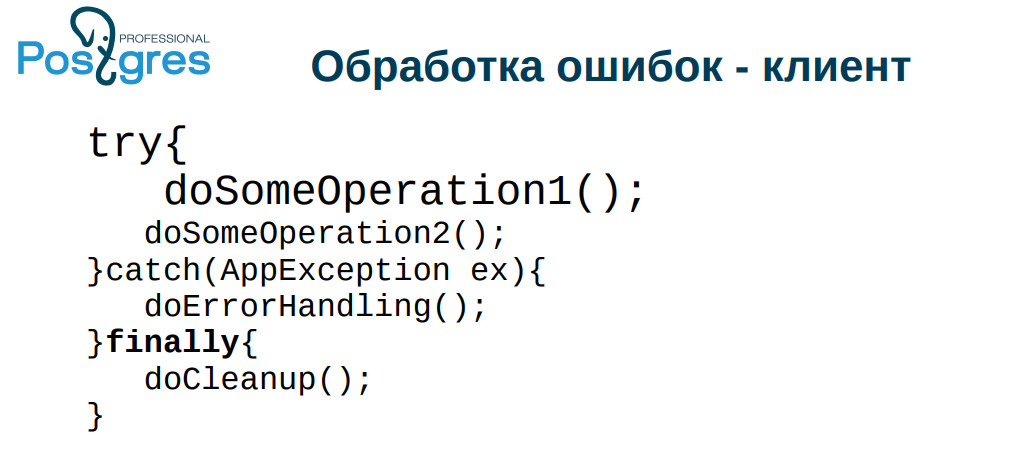

Everyone, for some reason, is afraid of exceptions - both in plpgsql and in other languages. And if you do not invent something of your own, but use the standard features of the language, everything usually works out well. Especially this problem often occurs when the connection falls. It has fallen, the process is idle in transaction , the database is filling up, performance is falling. By the way, such a transaction may still leave locks, but for some reason this is not so common. Therefore, add finally errors to the processing code.and there, clean the connection, give it back to the server.

Moreover, if you have well-defined constraints, you can throw an exception not from the database, but from the application while processing the error. In spring there is an exception translation , in php , respectively, set_exception_handler . Pay attention to the tools that your framework provides you with, they appeared there for a reason.

So: do not catch the error with which you do not know what to do; name errors carefully and accurately; classify errors.

Personally, I classify by such criteria: the operation can be repeated (for example, we had deadlock); the operation cannot be repeated, it has already been completed; the operation cannot be performed in principle.

Paradoxically, from the point of view of the application, the situations when deadlock occurs, when the connection is lost and when we have run out of money to pay are the same situations: the error handler will try to perform the operation again after a while.

On the other hand, what they write in the application, in general, is not my business: I am engaged in the base. I just urge you to handle errors carefully, otherwise: idle in transaction, locked lines, swollen databases and so on.

Most developers believe that they work with the database alone, and their application performs operations strictly sequentially. And this is a plus for all relational DBMSs because, oddly enough, everything works, as a rule, very well, even with the standard isolation level READ COMMITTED, and not SERIALIZABLE. At the same time, situations happen when updates are lost: one loads the form, the other loads the same form, one wrote and saved, the other saved the old one - the changes were erased. The first came to swear: "how so, I wrote so much, and everything is lost."

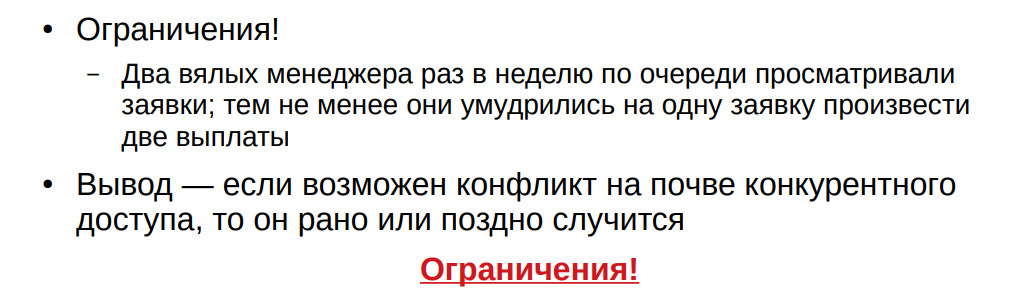

From my experience: once a week on Fridays, two managers made payments. They have to

were changing every other time, but, nevertheless, once climbed at the same time and made two payments per person. If you have at least some chance of a competitive access error, it will happen sooner or later. The question is when.

In addition, I draw your attention to the limitations. I have repeatedly seen how they tried to provide uniqueness with triggers. You will not provide uniqueness in the table with triggers. Either you will need to block the entire table, or do some other complex gestures. You will stumble on this sooner or later.

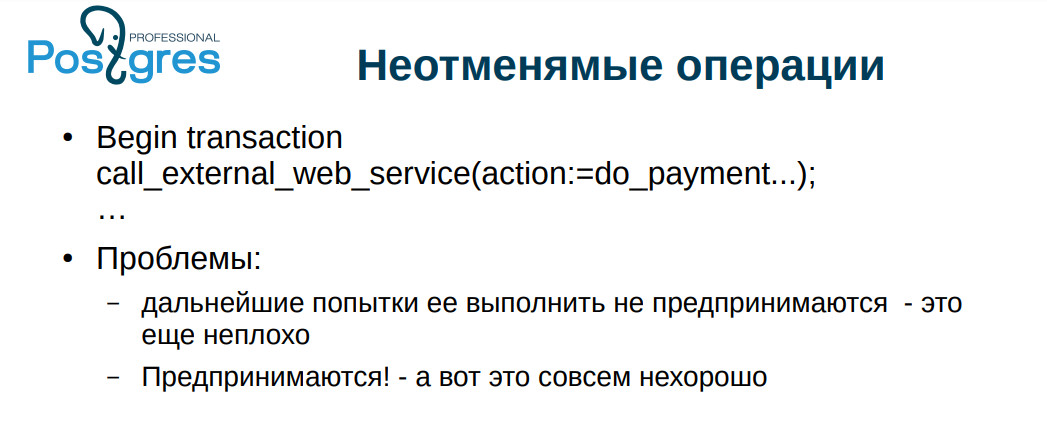

A couple of times I came across a completely nightmarish thing: an external web service is called from the database. There were some operations that change the external entities. This is bad because a transaction can be rolled back in the database, but operations on the remote service will not be rejected.

An even more subtle point is deadlock. Let's imagine: we process a transaction, call an external web service, change something, after that we get deadlock, and we roll back, then we try to perform the operation again, call again, in good circumstances, deadlock occurs again, again roll back - this can

happen many times (I came across a couple of hundred repetitions). And now you process these deadlocks more or less correctly, repeat the operations and suddenly discover that you have been paying a double amount to someone within two months.

I met with payment services that had a poor API: “pay such and such an amount to such and such a user”; the function returns the result - paid / not paid. Firstly, there is a problem in the case of a repeat, and secondly, it is not clear what to do if the connection is interrupted. For some reason, very few people bother on this subject either.

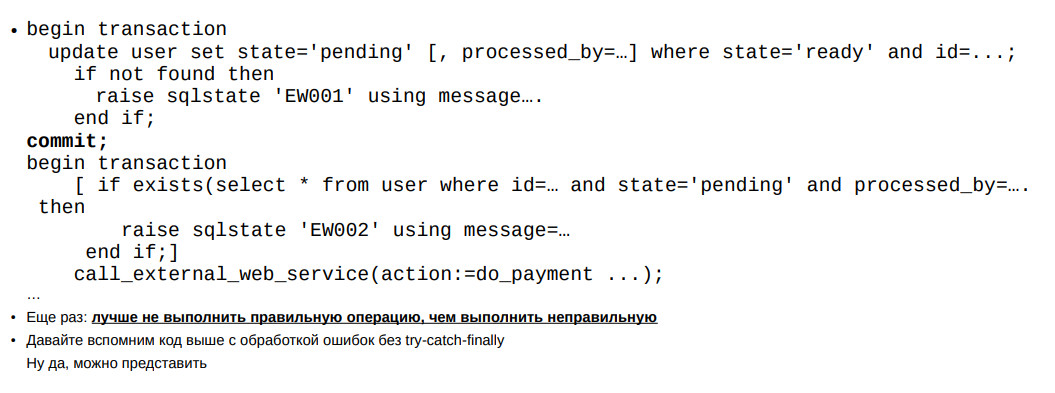

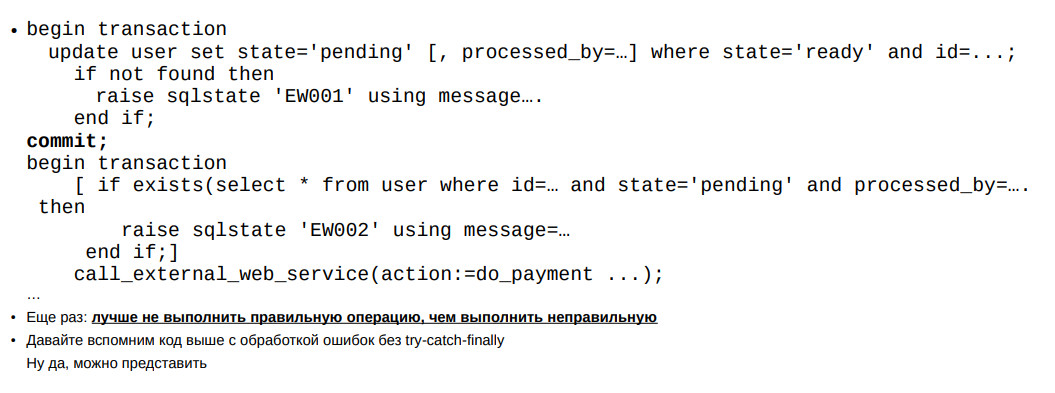

An example is on the slide: such an operation should be carried out in two stages: as if a warning - “we will do something now”; the operation itself.

If we suddenly interrupt - you never know, turned off the power - we can re-perform the operation. If we died in the second stage, then, in the whole world, the second time we will not do it, and this can be disassembled manually. In fact, the vast majority of such operations normally work out for the first time, but these measures are not theoretical fabrications. Everything can work normally for months, and suddenly the admin starts to get wiser with the network, the service starts to flash actively - and the problems started.

There are 4 types of non-cancelable operations on the slide. The latter is non-idempotent operations. This is a very sad case. At the beginning I talked about a comrade who did everything on triggers precisely to ensure the idempotency of his operations.

At the conference, people will talk about Common Table Expressions, about how good it is. Unfortunately, PostgreSQL CTEs are not free: they require work_mem for themselves. If you have a small sample, then, in general, it's okay. And if you suddenly have it big, then your problems begin. People very often use CTE as a kind of mini-views - so that you can somehow structure the application. CTE is very much in demand.

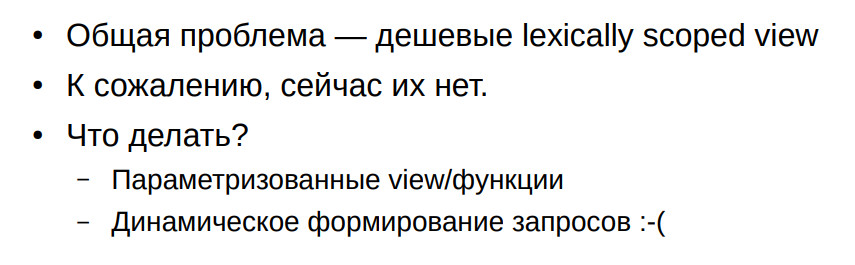

You can make temporary views, but, unfortunately, each one takes a line in pg_class, and if this is very actively used, then there may be problems with directory swelling.

In this case, you can advise to make a parameterized view, or dynamically form a query, but, unfortunately, in PostgreSQL from the inside, this is not very cool.

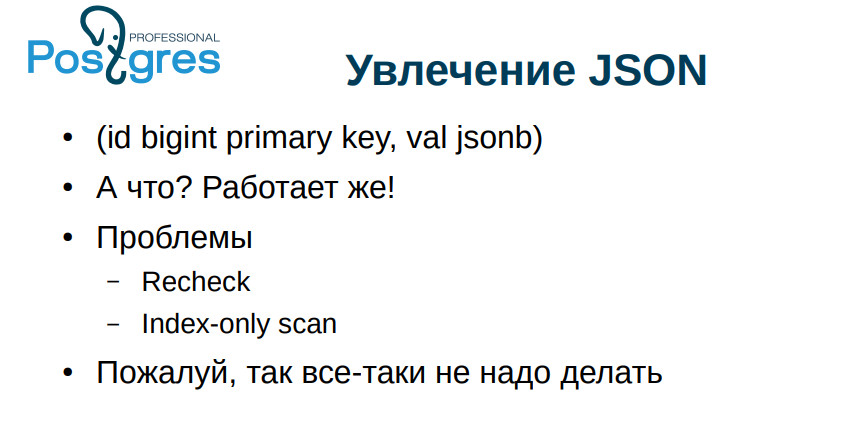

JSON is usually talked about in excellent tones, but there is a tendency in the application in JSON to push anything at all. In principle, everything works well. On the other hand, data is retrieved from JSON, albeit quickly, but not as fast as from columns. Even worse, if you have a large JSON, and it is issued in TOAST. To get JSON from there, you need to pick it up from TOAST.

If all the columns are in JSON, a functional index is even built on them, then you still need to get it out of there. It gets even worse with a large volume, when the database is large, when you have a bitmap index scan . Then we have links not to lines, but to the whole page, and in order to understand what to take from the page, PostgreSQL will make Recheck, that is, he lifts a line from TOAST and checks if this value is there or not, and accordingly already skips or does not skip. If with small columns this works well, then with JSON this is a big problem. There is no need to get too carried away with JSONs.

- How to check when several users work with a string? What options are there?

- Firstly, you can subtract the values of all columns and make sure that they have not changed before showing the line in the form. The second option is more convenient: calculate the hash on all

columns, especially since the columns there can be large and thick. And the hash is not so big.

- You say that constraints should be called good names so that the user can understand what is happening. But there is a 60 character limit per constraint name. This is often not enough. How to deal with it?

- I think to fight by self-restraint. In PostgreSQL, this is a special type of length 64. In principle, you can recompile to a longer length, but this is not very good.

- In the report, you intrigued us with the fact that we need to do something with the archives. What mechanism is considered to be the most correct for outdated archiving?

- As I said at the very beginning, with due diligence everything works. Which method is most convenient for you, so use it.

Timing: The second part of the report starts at 25:16

- There is a certain procedure that several users invoke in parallel. How to limit the parallel execution of this procedure, that is, to line up all

users in a queue so that until one finishes the procedure, the next one can not start using it?

- Precisely the procedure? Or is it enough transaction?

- It is the procedure that is called in some transaction.

- You can put a lock on the object. It would be difficult if you had a condition, say, no more than 3 at the same time. But this is realizable. I usually use transactional locks, but non-transactional ones are also possible.

- I would still like to once again return to archival data. You talked about

archive storage options so that data from the application is also available. It occurred to me to simply create a separate archive database. What other options are there?

- Yes, you can make an archive database. You can write a function and wrap it in a view. In a function, you can do whatever it takes: you can go to the archive database, you can pick up some files from the disk, you can go to an external web service, you can combine all this, you can generate some random data yourself - choice limited only by imagination.

- To the question about archive data: you can use partitions - new chips of the 11th version, when we make the whole table partitioned, and then we just detach the partition and leave it as an archive. It can also be accessed.

“Of course, why not.” I give way to the next speaker.

I will tell such a story. Our client says: “The database is working slowly, and our application is engaged in serving the population. We are afraid that they will raise us here for forks. ” It turned out that they had a lot of processes in idle in transaction state. The application started the transaction, does nothing, but the transaction does not complete. If you interact with some external services, then, in principle, this is a normal situation. Another thing is that if your idle in transaction state lasts a long time (it’s already suspicious for more than a minute), then this is bad because PostgreSQL really does not like long transactions: VACUUM will not be able to clear all the lines that it could see, and hanging for a long time transaction effectively blocks VACUUM. Tables begin to swell, indexes are becoming less and less effective.

In this case, people did not write requests correctly and received Cartesian products - such requests were completed for several days. Well, the user, he will press the button, wait for the result and, if there is no result, press the button again.

But this did not explain why they have so many processes in idle in transaction . And they appeared in the following situation: the application crawls into the database, starts the transaction, crawls onto some external service, gets an error there, and then everything just crumbles, we print to the stack trace log , and we calm down on this. The connection remains abandoned, hanging and interfering.

What to do about it? First, you must always handle errors. If an error arrives to you, please do not ignore it. It’s good if PostgreSQL lost the connection: it rolls back the transaction, we survive. On this I will stop. Well, if there is a code that you don’t have time to edit at all, then we still have max idle in transaction- it can be delivered, and it will simply kick out inactive transactions.

A typical case of error handling is: EXCEPTION WHEN OTHERS THAN NULL. Once we were arguing with a colleague about terminology. I said that it translates as "burn it all with a blue flame," and he means "it’s all gone to waste." If something bad happened with us, then, even if everything scolded the log, it is still better than complete silence - like here.

If you do not know what to do with the error, then do not intercept it. A very common practice: they caught an error, logged it and ran on as if nothing had happened. If, again, you engage in money transactions and you have an error that you ignored, the results may be unpredictable. In the 90s they could, for example, be taken out into the forest in the trunk. Now times have become softer, but also not very pleasant.

If we do the operation on the client, then, usually, we return the value: everything went either successfully or unsuccessfully. And we process each error. I saw people specifically writing plpgsql code, where they intercepted the error, wrote to the log that, they say, yes, there was an error and rather rude, they inserted their message text. But SQLSTATE did not return. This is always done, so if they forgot to check something, then they started having problems.

Everyone, for some reason, is afraid of exceptions - both in plpgsql and in other languages. And if you do not invent something of your own, but use the standard features of the language, everything usually works out well. Especially this problem often occurs when the connection falls. It has fallen, the process is idle in transaction , the database is filling up, performance is falling. By the way, such a transaction may still leave locks, but for some reason this is not so common. Therefore, add finally errors to the processing code.and there, clean the connection, give it back to the server.

Moreover, if you have well-defined constraints, you can throw an exception not from the database, but from the application while processing the error. In spring there is an exception translation , in php , respectively, set_exception_handler . Pay attention to the tools that your framework provides you with, they appeared there for a reason.

So: do not catch the error with which you do not know what to do; name errors carefully and accurately; classify errors.

Personally, I classify by such criteria: the operation can be repeated (for example, we had deadlock); the operation cannot be repeated, it has already been completed; the operation cannot be performed in principle.

Paradoxically, from the point of view of the application, the situations when deadlock occurs, when the connection is lost and when we have run out of money to pay are the same situations: the error handler will try to perform the operation again after a while.

On the other hand, what they write in the application, in general, is not my business: I am engaged in the base. I just urge you to handle errors carefully, otherwise: idle in transaction, locked lines, swollen databases and so on.

Most developers believe that they work with the database alone, and their application performs operations strictly sequentially. And this is a plus for all relational DBMSs because, oddly enough, everything works, as a rule, very well, even with the standard isolation level READ COMMITTED, and not SERIALIZABLE. At the same time, situations happen when updates are lost: one loads the form, the other loads the same form, one wrote and saved, the other saved the old one - the changes were erased. The first came to swear: "how so, I wrote so much, and everything is lost."

From my experience: once a week on Fridays, two managers made payments. They have to

were changing every other time, but, nevertheless, once climbed at the same time and made two payments per person. If you have at least some chance of a competitive access error, it will happen sooner or later. The question is when.

In addition, I draw your attention to the limitations. I have repeatedly seen how they tried to provide uniqueness with triggers. You will not provide uniqueness in the table with triggers. Either you will need to block the entire table, or do some other complex gestures. You will stumble on this sooner or later.

A couple of times I came across a completely nightmarish thing: an external web service is called from the database. There were some operations that change the external entities. This is bad because a transaction can be rolled back in the database, but operations on the remote service will not be rejected.

An even more subtle point is deadlock. Let's imagine: we process a transaction, call an external web service, change something, after that we get deadlock, and we roll back, then we try to perform the operation again, call again, in good circumstances, deadlock occurs again, again roll back - this can

happen many times (I came across a couple of hundred repetitions). And now you process these deadlocks more or less correctly, repeat the operations and suddenly discover that you have been paying a double amount to someone within two months.

I met with payment services that had a poor API: “pay such and such an amount to such and such a user”; the function returns the result - paid / not paid. Firstly, there is a problem in the case of a repeat, and secondly, it is not clear what to do if the connection is interrupted. For some reason, very few people bother on this subject either.

An example is on the slide: such an operation should be carried out in two stages: as if a warning - “we will do something now”; the operation itself.

If we suddenly interrupt - you never know, turned off the power - we can re-perform the operation. If we died in the second stage, then, in the whole world, the second time we will not do it, and this can be disassembled manually. In fact, the vast majority of such operations normally work out for the first time, but these measures are not theoretical fabrications. Everything can work normally for months, and suddenly the admin starts to get wiser with the network, the service starts to flash actively - and the problems started.

There are 4 types of non-cancelable operations on the slide. The latter is non-idempotent operations. This is a very sad case. At the beginning I talked about a comrade who did everything on triggers precisely to ensure the idempotency of his operations.

At the conference, people will talk about Common Table Expressions, about how good it is. Unfortunately, PostgreSQL CTEs are not free: they require work_mem for themselves. If you have a small sample, then, in general, it's okay. And if you suddenly have it big, then your problems begin. People very often use CTE as a kind of mini-views - so that you can somehow structure the application. CTE is very much in demand.

You can make temporary views, but, unfortunately, each one takes a line in pg_class, and if this is very actively used, then there may be problems with directory swelling.

In this case, you can advise to make a parameterized view, or dynamically form a query, but, unfortunately, in PostgreSQL from the inside, this is not very cool.

JSON is usually talked about in excellent tones, but there is a tendency in the application in JSON to push anything at all. In principle, everything works well. On the other hand, data is retrieved from JSON, albeit quickly, but not as fast as from columns. Even worse, if you have a large JSON, and it is issued in TOAST. To get JSON from there, you need to pick it up from TOAST.

If all the columns are in JSON, a functional index is even built on them, then you still need to get it out of there. It gets even worse with a large volume, when the database is large, when you have a bitmap index scan . Then we have links not to lines, but to the whole page, and in order to understand what to take from the page, PostgreSQL will make Recheck, that is, he lifts a line from TOAST and checks if this value is there or not, and accordingly already skips or does not skip. If with small columns this works well, then with JSON this is a big problem. There is no need to get too carried away with JSONs.

- How to check when several users work with a string? What options are there?

- Firstly, you can subtract the values of all columns and make sure that they have not changed before showing the line in the form. The second option is more convenient: calculate the hash on all

columns, especially since the columns there can be large and thick. And the hash is not so big.

- You say that constraints should be called good names so that the user can understand what is happening. But there is a 60 character limit per constraint name. This is often not enough. How to deal with it?

- I think to fight by self-restraint. In PostgreSQL, this is a special type of length 64. In principle, you can recompile to a longer length, but this is not very good.

- In the report, you intrigued us with the fact that we need to do something with the archives. What mechanism is considered to be the most correct for outdated archiving?

- As I said at the very beginning, with due diligence everything works. Which method is most convenient for you, so use it.

Timing: The second part of the report starts at 25:16

- There is a certain procedure that several users invoke in parallel. How to limit the parallel execution of this procedure, that is, to line up all

users in a queue so that until one finishes the procedure, the next one can not start using it?

- Precisely the procedure? Or is it enough transaction?

- It is the procedure that is called in some transaction.

- You can put a lock on the object. It would be difficult if you had a condition, say, no more than 3 at the same time. But this is realizable. I usually use transactional locks, but non-transactional ones are also possible.

- I would still like to once again return to archival data. You talked about

archive storage options so that data from the application is also available. It occurred to me to simply create a separate archive database. What other options are there?

- Yes, you can make an archive database. You can write a function and wrap it in a view. In a function, you can do whatever it takes: you can go to the archive database, you can pick up some files from the disk, you can go to an external web service, you can combine all this, you can generate some random data yourself - choice limited only by imagination.

- To the question about archive data: you can use partitions - new chips of the 11th version, when we make the whole table partitioned, and then we just detach the partition and leave it as an archive. It can also be accessed.

“Of course, why not.” I give way to the next speaker.