Multiple pitfalls of static typing in Python

I think we are slowly getting used to the fact that Python has type annotations: they arrived two releases ago (3.5) for functions and methods (PEP 484), and in the latest release (3.6) for variables (PEP 526).

Since both of these PEPs were inspired by MyPy, I'll describe the everyday joys and cognitive dissonances I ran into while using this static analyzer, and the typing system as a whole.

Disclaimer: I am not raising the question of whether static typing in Python is necessary or harmful. I am just describing the pitfalls I came across while working in a statically typed context.

Generics (typing.Generic)

Annotations such as List[int] or Callable[[int, str], None] are pleasant to use.

It is very nice when the analyzer flags code like this:

import typing as ty

T = ty.TypeVar('T')


class A(ty.Generic[T]):
    value: T


A[int]().value = 'str'  # error: Incompatible types in assignment
                        # (expression has type "str", variable has type "int")

However, what should we do if we are writing a library and the programmer using it does not run a static analyzer?

Should we force the user to initialize the class with a value, and then store its type?

from typing import Generic, Type, TypeVar

T = TypeVar('T')


class Gen(Generic[T]):
    value: T
    ref: Type[T]

    def __init__(self, value: T) -> None:
        self.value = value
        self.ref = type(value)

That is not very user-friendly.
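One concrete limitation of storing type(value): the recorded type is the runtime class of the argument, so passing a subclass instance silently replaces the declared type parameter. A minimal sketch (A and B are the same trivial classes used further down; assumes Python 3.7+):

```python
from typing import Generic, Type, TypeVar

T = TypeVar('T')


class Gen(Generic[T]):
    value: T
    ref: Type[T]

    def __init__(self, value: T) -> None:
        self.value = value
        self.ref = type(value)  # runtime class of the argument, not T


class A: pass
class B(A): pass


g = Gen[A](B())
print(g.ref)  # B, not the declared parameter A
```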

And what if you want to write this?

b = Gen[A](B())

In search of an answer, I dug around in the typing module and plunged into the world of factories.

The point is that once an instance of a Generic class has been created, it has an attribute __orig_class__, which in turn has an attribute __args__ holding a tuple of the type arguments. However, this attribute is not accessible from __init__, nor from __new__, nor from the metaclass's __call__. The catch is that subscripting a Generic subclass turns it into a different object, a _GenericAlias, which holds the final type arguments from the moment __getitem__ is called on the class, but attaches them to the instance only after the object has been fully initialized, including all methods of its metaclass. Thus, there is no way to get the generic type arguments while constructing an object.
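The timing can be checked directly. This sketch (the class name Probe is hypothetical) assumes Python 3.7 or later, where the alias sets __orig_class__ only after __init__ has returned:

```python
from typing import Generic, TypeVar

T = TypeVar('T')


class Probe(Generic[T]):
    def __init__(self) -> None:
        # Record whether the alias has attached __orig_class__ yet.
        self.seen_in_init = hasattr(self, '__orig_class__')


p = Probe[int]()
print(p.seen_in_init)             # False: not available inside __init__
print(p.__orig_class__.__args__)  # (<class 'int'>,): set after construction
```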

So I wrote myself a small descriptor that solves this problem:

from typing import Generic, Type, TypeVar, Union

T = TypeVar('T')


def _init_obj_ref(obj: 'Gen[T]') -> None:
    """Set the object's ref attribute to the generic argument, if not set yet."""
    if not hasattr(obj, 'ref'):
        # __orig_class__ exists only after construction has finished,
        # which is why this runs lazily on first attribute access.
        obj.ref = obj.__orig_class__.__args__[0]  # type: ignore


class ValueHandler(Generic[T]):
    """Handle the object's _value attribute, asserting its type."""

    def __get__(self,
                obj: 'Gen[T]',
                cls: Type['Gen[T]']
                ) -> Union[T, 'ValueHandler[T]']:
        if obj is None:  # accessed on the class itself
            return self
        _init_obj_ref(obj)
        if obj._value is None:
            obj._value = obj.ref()
        return obj._value

    def __set__(self, obj: 'Gen[T]', val: T) -> None:
        _init_obj_ref(obj)
        if not isinstance(val, obj.ref):
            raise TypeError(f'has to be of type {obj.ref}, passed {val}')
        obj._value = val


class Gen(Generic[T]):
    _value: T
    ref: Type[T]
    value = ValueHandler[T]()

    def __init__(self, value: T) -> None:
        self._value = value


class A: pass
class B(A): pass


b = Gen[A](B())
b.value = A()
b.value = int()  # TypeError: has to be of type <class '__main__.A'>, passed 0

Of course, it will have to be rewritten later for more general use, but the idea is clear.

[UPD]: In the morning I decided to try doing the same thing the typing module itself does, but more simply:

import typing as ty

T = ty.TypeVar('T')

classA(ty.Generic[T]):# __args are unique every instantiation

__args: ty.Optional[ty.Tuple[ty.Type[T]]] = None

value: T

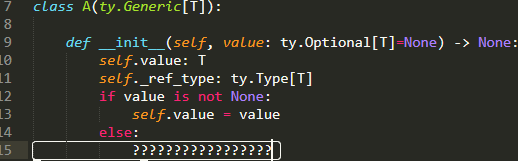

def__init__(self, value: ty.Optional[T]=None) -> None:"""Get actual type of generic and initizalize it's value."""

cls = ty.cast(A, self.__class__)

if cls.__args:

self.ref = cls.__args[0]

else:

self.ref = type(value)

if value:

self.value = value

else:

self.value = self.ref()

cls.__args = Nonedef__class_getitem__(cls, *args: ty.Union[ty.Type[int], ty.Type[str]]

) -> ty.Type['A']:"""Recive type args, if passed any before initialization."""

cls.__args = ty.cast(ty.Tuple[ty.Type[T]], args)

return super().__class_getitem__(*args, **kwargs) # type: ignore

a = A[int]()

b = A(int())

c = A[str]()

print([a.value, b.value, c.value]) # [0, 0, ''][UPD]: Developer typingIvan Levinsky said that both options may break unpredictably.

Anyway, you can use either approach. Perhaps __class_getitem__ is even slightly better: at least __class_getitem__ is documented.
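One plausible way for the __class_getitem__ approach to misbehave (my own assumption; the typing developer did not say which failure he had in mind): the type argument is parked on the class itself, so a subscription that is never called leaks into the next plain construction. A reduced sketch of the [UPD] class, assuming Python 3.7+:

```python
import typing as ty

T = ty.TypeVar('T')


class A(ty.Generic[T]):
    # Same idea as the [UPD] version: the type argument is stored on the class.
    __args: ty.Optional[ty.Tuple[type, ...]] = None

    def __init__(self, value: ty.Optional[T] = None) -> None:
        cls = type(self)
        self.ref = cls.__args[0] if cls.__args else type(value)
        cls.__args = None  # the reset only happens when an instance is created

    def __class_getitem__(cls, params):
        cls.__args = params if isinstance(params, tuple) else (params,)
        return super().__class_getitem__(params)


alias = A[str]  # subscripted (say, for an annotation) but never called
x = A(5)        # plain construction with an int
print(x.ref)    # str leaks in from the earlier subscription
```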

Functions and aliases

Yes, generics are not simple at all.

For example, if a function is passed somewhere as an argument, its annotation automatically flips from covariant to contravariant:

from typing import Callable


class A: pass
class B(A): pass


def foo(arg: 'A') -> None:  # accepts instances of A and B
    ...


def bar(f: Callable[['A'], None]):  # accepts functions annotated with A or a supertype of A
    ...

In principle I have no complaints about the logic; it just has to be solved with a generic alias, i.e. a bounded TypeVar:

from typing import Callable, TypeVar

TA = TypeVar('TA', bound='A')


def foo(arg: 'B') -> None:  # accepts instances of B and its subclasses
    ...


def bar(f: Callable[['TA'], None]):  # accepts functions annotated with A or B
    ...

In general, the documentation section on variance should be read carefully and without haste.
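To see why the bound TypeVar helps, here is a runnable sketch. The names handle_a, handle_b, bar_strict and bar_generic are hypothetical, and I added an explicit arg parameter to bar_generic (an assumption, not in the original) so that the TypeVar has something to bind to. The commented-out call is the one a checker such as mypy rejects, because Callable is contravariant in its argument types; at runtime nothing is enforced.

```python
from typing import Callable, List, TypeVar


class A: pass
class B(A): pass


TA = TypeVar('TA', bound=A)
calls: List[str] = []  # records which handler ran, for illustration only


def handle_a(arg: A) -> None:  # accepts any A (and therefore any B)
    calls.append('a')


def handle_b(arg: B) -> None:  # accepts only B and its subclasses
    calls.append('b')


def bar_strict(f: Callable[[A], None]) -> None:
    # f must accept *any* A, so calling it with a plain A() is safe.
    f(A())


def bar_generic(f: Callable[[TA], None], arg: TA) -> None:
    # TA is solved per call site: with handle_b it binds to B,
    # so the checker also requires arg to be a B.
    f(arg)


bar_strict(handle_a)
# bar_strict(handle_b)  # rejected by mypy: handle_b cannot accept an arbitrary A
bar_generic(handle_a, A())
bar_generic(handle_b, B())
```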

Backward compatibility

This is not so great: since version 3.7, Generic is no longer built on a metaclass derived from ABCMeta, which is very convenient and good. The bad part is that code relying on this breaks when it runs on 3.6.
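A concrete case of that incompatibility, assuming a hypothetical custom metaclass Meta (the kind an ORM or plugin registry might use). On 3.6, Generic's metaclass GenericMeta derives from ABCMeta, so mixing Generic with an unrelated metaclass fails; on 3.7+ Generic has no custom metaclass and the same code works:

```python
from typing import Generic, TypeVar

T = TypeVar('T')


class Meta(type):
    """Hypothetical custom metaclass, unrelated to ABCMeta."""


class Base(metaclass=Meta):
    pass


# On 3.6 this class statement raises a metaclass-conflict TypeError,
# because Meta and GenericMeta are unrelated. On 3.7+ Meta simply wins.
class Box(Base, Generic[T]):
    pass


print(type(Box) is Meta)
```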

Structural Inheritance (Structural Subtyping)

At first I was very happy: interfaces had arrived! The role of interfaces is played by the Protocol class from the typing_extensions module, which, combined with the @runtime decorator, lets you check whether a class implements the interface without inheriting from it directly. MyPy also checks protocols, and at a deeper level.

However, at runtime I did not see much practical benefit compared to multiple inheritance.
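To make the comparison with plain inheritance concrete, here is a sketch using the standard-library typing.Protocol and typing.runtime_checkable (available since Python 3.8 and equivalent in spirit to typing_extensions.Protocol with @runtime); all class names are hypothetical. An abstract base class refuses to instantiate an incomplete implementation, while a runtime protocol only answers isinstance, and only by looking at member names:

```python
import abc
from typing import Protocol, runtime_checkable


class PusherABC(abc.ABC):
    @abc.abstractmethod
    def push(self, val: int) -> None: ...


@runtime_checkable
class PusherP(Protocol):
    def push(self, val: int) -> None: ...


class ExplicitStack(PusherABC):  # nominal: inherits the interface
    def push(self, val: int) -> None:
        pass


class DuckStack:  # structural: no inheritance, and the wrong arity
    def push(self) -> None:
        pass


class Incomplete(PusherABC):  # forgot to implement push
    pass


def can_instantiate(cls: type) -> bool:
    """Return False if creating an instance raises TypeError."""
    try:
        cls()
    except TypeError:
        return False
    return True


print(isinstance(DuckStack(), PusherP))    # True: only the name "push" is checked
print(isinstance(DuckStack(), PusherABC))  # False: no inheritance
print(can_instantiate(Incomplete))         # False: the ABC catches it up front
```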

It seems the decorator only checks that a method with the required name exists, without checking even the number of arguments, let alone the types:

import typing as ty

import typing_extensions as te


@te.runtime  # later renamed te.runtime_checkable
class IntStackP(te.Protocol):
    _list: ty.List[int]

    def push(self, val: int) -> None:
        ...


class IntStack:
    def __init__(self) -> None:
        self._list: ty.List[int] = list()

    def push(self, val: int) -> None:
        if not isinstance(val, int):
            raise TypeError('wrong pushed val type')
        self._list.append(val)


class StrStack:
    def __init__(self) -> None:
        self._list: ty.List[str] = list()

    def push(self, val: str, weather: ty.Any = None) -> None:
        if not isinstance(val, str):
            raise TypeError('wrong pushed val type')
        self._list.append(val)


def push_func(stack: IntStackP, value: int):
    if not isinstance(stack, IntStackP):
        raise TypeError('is not IntStackP')
    stack.push(value)


a = IntStack()
b = StrStack()
c: ty.List[int] = list()

push_func(a, 1)
push_func(b, 1)  # TypeError: wrong pushed val type
push_func(c, 1)  # TypeError: is not IntStackP

MyPy, on the other hand, behaves more intelligently and reports the type incompatibility:

push_func(a, 1)
push_func(b, 1)  # Argument 1 to "push_func" has incompatible type "StrStack";
                 # expected "IntStackP"
                 # Following member(s) of "StrStack" have conflicts:
                 #     _list: expected "List[int]", got "List[str]"
                 #     Expected:
                 #         def push(self, val: int) -> None
                 #     Got:
                 #         def push(self, val: str, weather: Optional[Any] = ...) -> None

Operator Overloading

A very fresh topic, because when operators are overloaded with full type safety, all the fun disappears. This issue has come up more than once in the MyPy bug tracker, but MyPy still complains in some places, and those checks can safely be turned off.

Here is the situation:

class A:
    def __add__(self, other) -> int:
        return 3

    def __iadd__(self, other) -> 'A':
        if isinstance(other, int):
            return NotImplemented
        return A()


var = A()
var += 3  # Inferred type is 'A', but runtime type is 'int'?

If the augmented assignment method returns NotImplemented, Python falls back to the regular __add__ (and, failing that, to the right operand's __radd__), and voila.
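A runnable trace of that fallback (the calls list is only for illustration): __iadd__ declines with NotImplemented, so Python calls __add__, and var ends up an int even though __iadd__ is annotated to return A.

```python
calls = []  # records which dunder methods ran


class A:
    def __add__(self, other: object) -> int:
        calls.append('A.__add__')
        return 3

    def __iadd__(self, other: object) -> 'A':
        calls.append('A.__iadd__')
        if isinstance(other, int):
            return NotImplemented  # tells Python to try the fallback
        return A()


var = A()
var += 3
print(calls)      # ['A.__iadd__', 'A.__add__']
print(type(var))  # <class 'int'>
```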

The same applies to overloading operator methods in subclasses, like this:

from typing import overload


class A:
    def __add__(self, x: 'A') -> 'A': ...


class B(A):
    @overload
    def __add__(self, x: 'A') -> 'A': ...

    @overload
    def __add__(self, x: 'B') -> 'B': ...

In some cases the warnings have already made it into the documentation; in others they are still live. But the contributors' general conclusion is that such overloads should remain allowed.
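For completeness: outside stub files, mypy expects an overloaded method to be followed by an implementation. Here is a runnable sketch of the subclass above with a hypothetical implementation, and with the variants listed narrowest first, since overloads are matched in order. It shows only runtime behavior and does not settle which warnings a given mypy version emits.

```python
from typing import overload


class A:
    def __add__(self, x: 'A') -> 'A':
        return A()


class B(A):
    @overload
    def __add__(self, x: 'B') -> 'B': ...

    @overload
    def __add__(self, x: 'A') -> 'A': ...

    def __add__(self, x: 'A') -> 'A':
        # Single runtime implementation behind both overload variants.
        return B() if isinstance(x, B) else A()


print(type(B() + B()).__name__)  # B
print(type(B() + A()).__name__)  # A
```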