About static analysis in all honesty

Recently, they are increasingly talking about static analysis as one of the important means of ensuring the quality of software products being developed, especially from a security point of view. Static analysis allows you to find vulnerabilities and other errors, it can be used in the development process, integrating into customized processes. However, in connection with its application, many questions arise. What is the difference between paid and free tools? Why is it not enough to use the linter? In the end, what does the statistic have to do with it? Let's try to figure it out.

We’ll answer the last question right away - statistics have nothing to do with it, although static analysis is often mistakenly called statistical. The analysis is static, because the application does not start during scanning.

First, let's figure out what we want to look for in the program code. Static analysis is most often used to search for vulnerabilities - sections of code, the presence of which can lead to a violation of the confidentiality, integrity or availability of the information system. However, the same technologies can be used to search for other errors or code features.

We make a reservation that in general the problem of static analysis is algorithmically unsolvable (for example, by the Rice theorem). Therefore, you have to either limit the conditions of the task, or allow inaccuracy in the results (skip vulnerabilities, give false positives). It turns out that on real programs, the second option turns out to be working.

There are many paid and free tools that claim to search for vulnerabilities in applications written in different programming languages. Let's consider how the static analyzer is usually arranged. Further we will focus on the analyzer core and algorithms. Of course, the tools can differ in the friendliness of the interface, in the set of functionality, in the set of plugins for different systems and in the ease of use of the API. This is probably a topic for a separate article.

In the scheme of operation of a static analyzer, three main steps can be distinguished.

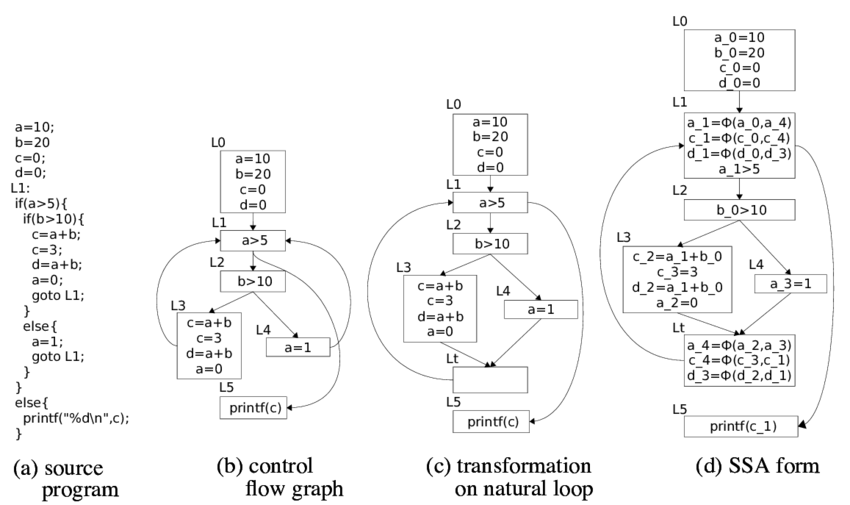

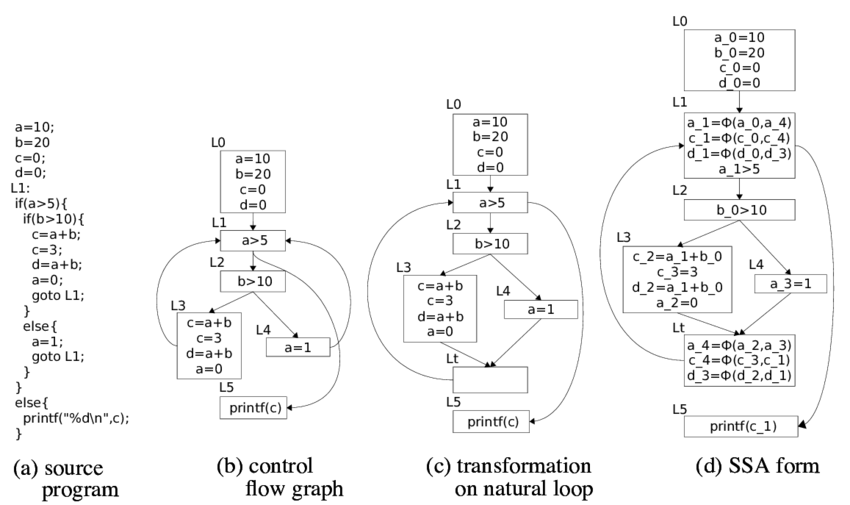

Different static analyzers can use different code models, for example, the program source code, token stream, parse tree, three-address code, control flow graph, bytecode - standard or native - and so on.

Like compilers, lexical and syntactic analysis is used to build an internal representation, most often an analysis tree (AST, Abstract Syntax Tree). The lexical analysis breaks the program text into minimal semantic elements, at the output receiving a stream of tokens. Parsing verifies that the token stream matches the grammar of the programming language, that is, the resulting token stream is correct from a language point of view. As a result of parsing, a parsing tree is built - a structure that models the source code of the program. Next, semantic analysis is applied; it checks the fulfillment of more complex conditions, for example, the correspondence of data types in assignment instructions.

The parse tree can be used as an internal representation. You can also get other models from the parse tree. For example, you can translate it into a three-address code, which, in turn, builds a control flow graph (CFG). Typically, CFG is the primary model for static analysis algorithms.

In binary analysis (static analysis of binary or executable code), a model is also built, but the reverse development practices are already used here: decompilation, deobfuscation, reverse translation. As a result, you can get the same models as from the source code, including the source code (using full decompilation). Sometimes the binary code itself can serve as an intermediate representation.

Theoretically, the closer the model to the source code, the worse the quality of the analysis. On the source code itself, you can only search for regular expressions, which will not allow you to find at least any complex vulnerability.

One of the main static analysis algorithms is data flow analysis. The task of this analysis is to determine at each point in the program some information about the data that the code operates on. The information may be different, for example, data type or value. Depending on what information needs to be determined, the task of analyzing the data flow can be formulated.

For example, if it is necessary to determine whether an expression is a constant, as well as the value of this constant, then the problem of propagating constants (constant propagation) is solved. If it is necessary to determine the type of a variable, then we can talk about the problem of type propagation. If you need to understand which variables can point to a specific memory area (store the same data), then we are talking about the task of synonyms analysis (alias analysis). There are many other data flow analysis tasks that can be used in a static analyzer. Like the stages of building a code model, these tasks are also used in compilers.

In the theory of constructing compilers, solutions to the problem of intra-procedural analysis of a data stream are described (it is necessary to track data in a single procedure / function / method). Decisions are based on the theory of algebraic lattices and other elements of mathematical theories. The problem of analyzing the data flow can be solved in polynomial time, that is, for a time acceptable for computers, if the conditions of the problem satisfy the conditions of the solvability theorem, which in practice does not always happen.

We will tell you more about solving the problem of intra-procedural analysis of the data flow. To set a specific task, in addition to determining the information you need, you need to determine the rules for changing this information when passing data according to instructions in CFG. Recall that the nodes in the CFG are the basic blocks - sets of instructions, the execution of which is always sequential, and the arcs indicate the possible transfer of control between the basic blocks.

For each instruction sets are defined:

sets are defined:

The goal of data flow analysis is to define sets and

and  for each instruction

for each instruction  programs. The basic system of equations, with which the tasks of analyzing the data flow are solved, is determined by the following relationship (data flow equations):

programs. The basic system of equations, with which the tasks of analyzing the data flow are solved, is determined by the following relationship (data flow equations):

The second relation formulates the rules according to which information is “combined” at the points of confluence of CFG arcs ( - predecessors

- predecessors  in CFG). The operation of union, intersection and some others can be used.

in CFG). The operation of union, intersection and some others can be used.

The desired information (the set of values of the functions introduced above) is formalized as an algebraic lattice. Functions and

and  are considered as monotone mappings on lattices (flow functions). For data flow equations, the solution is the fixed point of these mappings.

are considered as monotone mappings on lattices (flow functions). For data flow equations, the solution is the fixed point of these mappings.

Algorithms for solving data flow analysis problems look for maximum fixed points. There are several approaches to the solution: iterative algorithms, analysis of strongly connected components, T1-T2 analysis, interval analysis, structural analysis, and so on. There are theorems on the correctness of these algorithms; they determine the scope of their applicability to real problems. I repeat, the conditions of the theorems may not be fulfilled, which leads to more complicated algorithms and inaccurate results.

In practice, it is necessary to solve the problems of interprocedural analysis of the data flow, since rarely the vulnerability will be completely localized in one function. There are several common algorithms.

Inline functions . At the function call point, we embed the called function, thereby reducing the task of interprocedural analysis to the task of intraprocedural analysis. This method is easily implemented, but in practice, when it is applied, a combinatorial explosion is quickly achieved.

Building a general graph of the program control flow, in which function calls are replaced by transitions to the start address of the called function, and return instructions are replaced by transitions to all instructions following all instructions for calling this function. This approach adds a large number of unrealizable execution paths, which greatly reduces the accuracy of the analysis.

An algorithm similar to the previous one, but when switching to a function, the context is saved - for example, a stack frame. Thus, the problem of creating unrealizable paths is solved. However, the algorithm is applicable with limited call depth.

Building information about functions (function summary).The most applicable interprocedural analysis algorithm. It is based on the construction of a summary for each function: the rules by which information about data is converted when applying this function, depending on the various values of the input arguments. Ready-made summaries are used for intraprocedural analysis of functions. A separate complication here is the determination of the order of traversal of functions, since in case-by-case analysis, a summary should already be built for all called functions. Usually, special iterative algorithms for traversing a call graph are created.

Interprocedural analysis of the data stream is an exponentially difficult task, which is why the analyzer needs to carry out a number of optimizations and assumptions (it is impossible to find the exact solution in adequate time for computing power). Usually, when developing an analyzer, it is necessary to find a compromise between the amount of resources consumed, the analysis time, the number of false positives and vulnerabilities found. Therefore, a static analyzer can work for a long time, consume a lot of resources and give false positives. However, without this it is impossible to find the most important vulnerabilities.

It is at this point that serious static analyzers differ from many open tools, which, in particular, can position themselves in the search for vulnerabilities. Quick checks in linear time are good when you need to get the result quickly, for example, during compilation. However, this approach cannot find the most critical vulnerabilities - for example, related to the implementation of data.

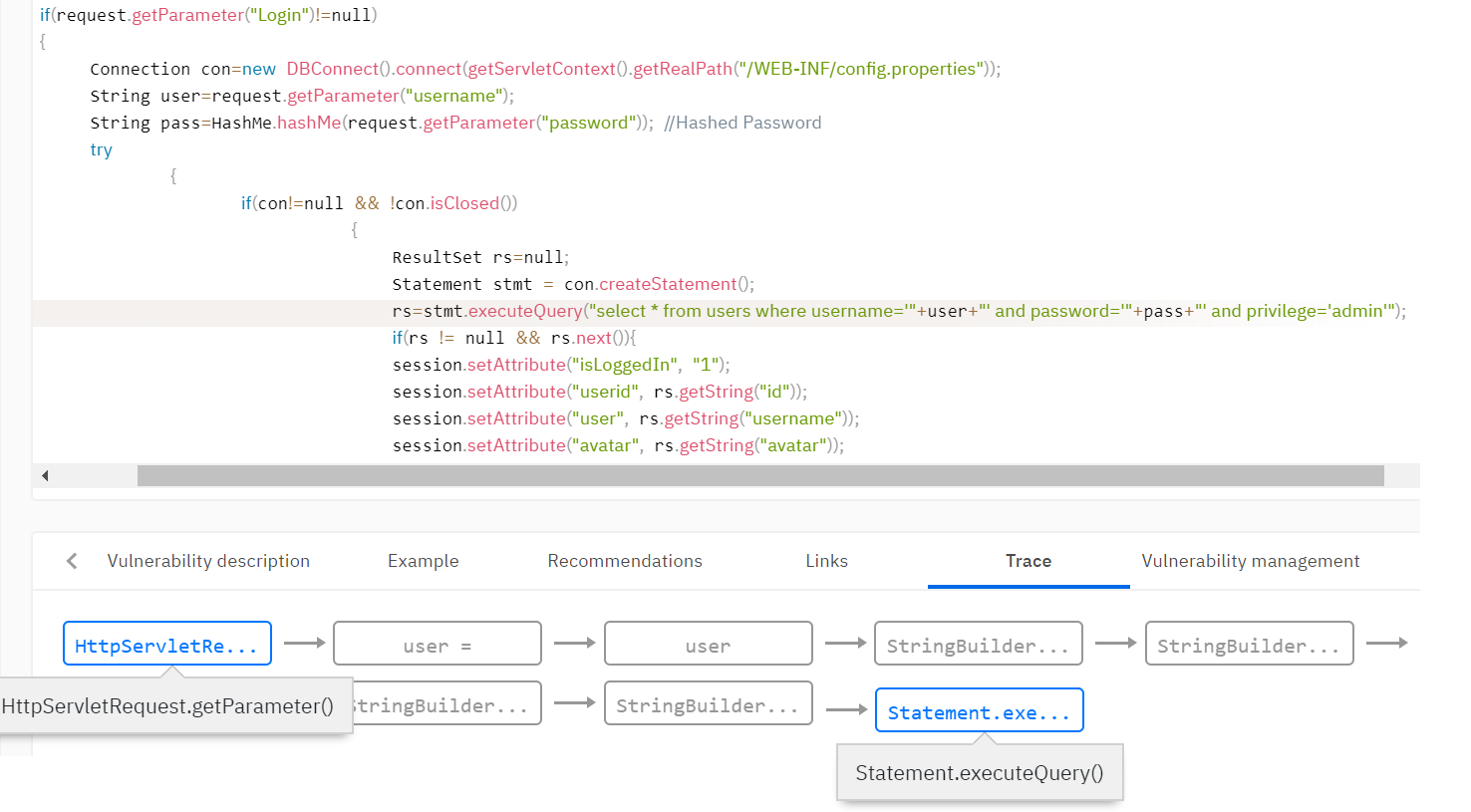

Separately, it is worth dwelling on one of the tasks of data flow analysis - taint analysis. Taint analysis allows you to distribute flags throughout the program. This task is key to information security, because it is with the help of it that vulnerabilities are discovered related to the implementation of data (SQL injection, crossite scripting, open redirects, falsification of the file path, and so on), as well as leakage of confidential data (password entry in event logs, insecure data transfer).

Let's try to model the task. Suppose we want to track n flags - . A lot of information here will be a lot of subsets

. A lot of information here will be a lot of subsets , since for each variable at each point in the program we want to define its flags.

, since for each variable at each point in the program we want to define its flags.

Next we need to define the flow functions. In this case, the flow functions can be determined by the following considerations.

Finally, you need to define the rules for merging information at the junction points of CFG arcs. Merging is determined by the union rule, that is, if different sets of flags for one variable came from different base blocks, then they are merged when merging. Including false positives come from here: the algorithm does not guarantee that the path to the CFG on which the flag appeared can be executed.

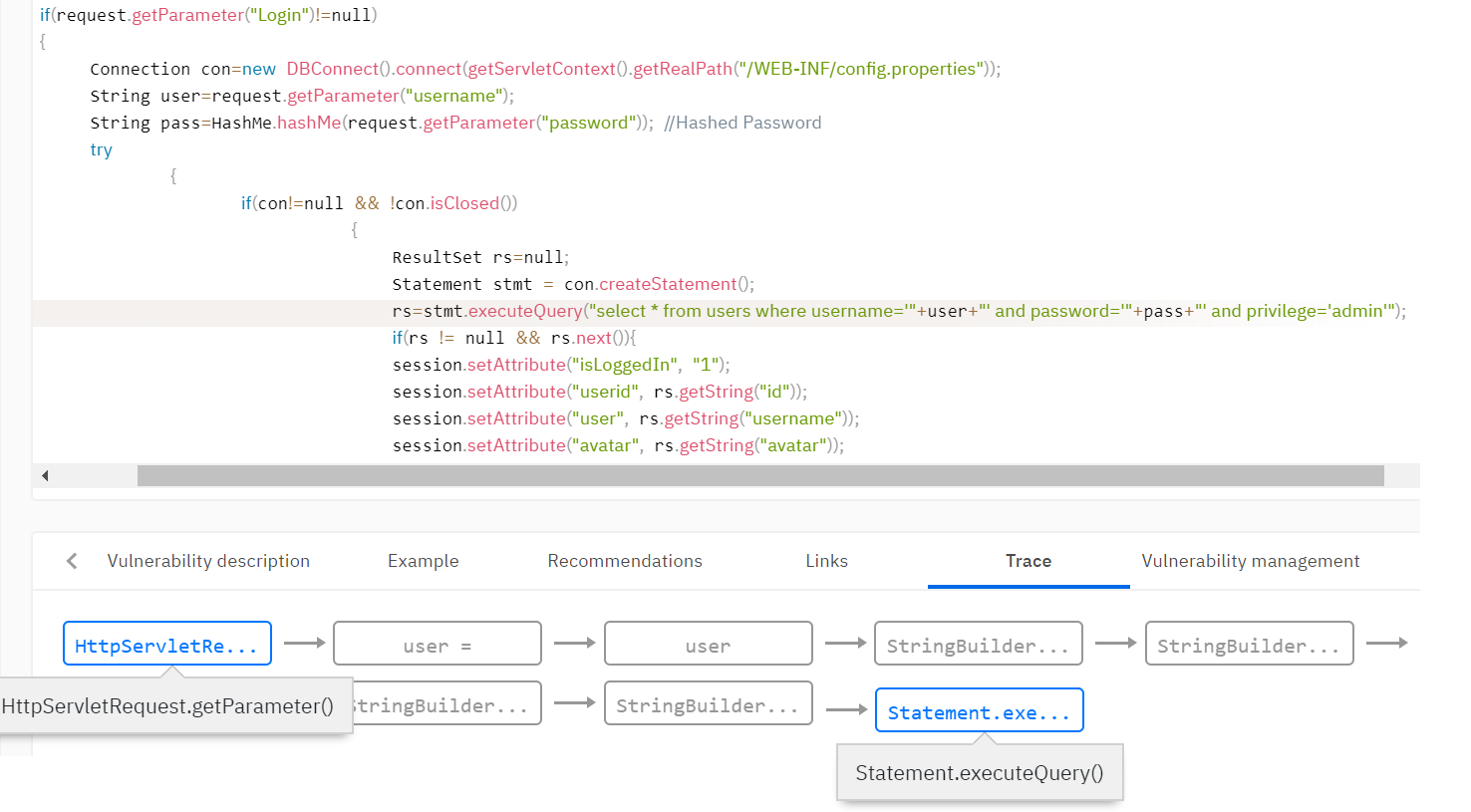

For example, you need to detect vulnerabilities such as SQL Injection. This vulnerability occurs when unverified data from the user falls into the methods of working with the database. It is necessary to determine that the data came from the user and add the taint flag to that data. Typically, the analyzer’s rule base sets the rules for setting the taint flag. For example, set a flag to the return value of the getParameter () method of the Request class.

Next, you need to distribute the flag throughout the analyzed program using taint analysis, given that the data can be validated and the flag may disappear on one of the execution paths. The analyzer sets many functions that remove flags. For example, the function of validating data from html tags can clear the flag for cross-site scripting (XSS) vulnerabilities. Or, the function to bind a variable to an SQL expression removes the flag for embedding in SQL.

As a result of applying the above algorithms, the intermediate representation is supplemented with the information necessary to search for vulnerabilities. For example, in the code model, information appears about which variables belong to certain flags, which data is constant. Vulnerability search rules are formulated in terms of a code model. The rules describe what features in the final interim view may indicate a vulnerability.

For example, you can apply a vulnerability search rule that defines a method call with a parameter that has the taint flag. Returning to the example of SQL injection, we verify that the variables with the taint flag do not fall into the database query function.

It turns out that an important part of the static analyzer, in addition to the quality of the algorithms, is the configuration and the rule base: a description of which constructs in the code generate flags or other information, which constructs validate such data, and for which constructs the use of such data is critical.

In addition to data flow analysis, there are other approaches. One of the famous is symbolic execution or abstract interpretation. In these approaches, the program runs on abstract domains, the calculation and distribution of data restrictions in the program. Using this approach, one can not only find the vulnerability, but also calculate the conditions on the input data under which the vulnerability is exploitable. However, this approach has serious drawbacks - with standard solutions on real programs, algorithms explode exponentially, and optimizations lead to serious losses in the quality of analysis.

In the end, I think it’s worth summing up, speaking about the pros and cons of static analysis. It is logical that we will compare with dynamic analysis, in which the vulnerability search occurs during program execution.

The undoubted advantage of static analysis is the complete coverage of the analyzed code. Also, the advantages of static analysis include the fact that to run it there is no need to run the application in a combat environment. Static analysis can be implemented at the very early stages of development, minimizing the cost of vulnerabilities found.

The disadvantages of static analysis are the inevitable presence of false positives, resource consumption and a long scan time on large amounts of code. However, these disadvantages are inevitable, based on the specifics of the algorithms. As we saw, a quick analyzer will never find a real vulnerability such as SQL injection and the like.

We wrote in another article about the remaining difficulties of using static analysis tools, which, as it turns out, can be overcome quite well .

We’ll answer the last question right away - statistics have nothing to do with it, although static analysis is often mistakenly called statistical. The analysis is static, because the application does not start during scanning.

First, let's figure out what we want to look for in the program code. Static analysis is most often used to search for vulnerabilities - sections of code, the presence of which can lead to a violation of the confidentiality, integrity or availability of the information system. However, the same technologies can be used to search for other errors or code features.

We make a reservation that in general the problem of static analysis is algorithmically unsolvable (for example, by the Rice theorem). Therefore, you have to either limit the conditions of the task, or allow inaccuracy in the results (skip vulnerabilities, give false positives). It turns out that on real programs, the second option turns out to be working.

There are many paid and free tools that claim to search for vulnerabilities in applications written in different programming languages. Let's consider how the static analyzer is usually arranged. Further we will focus on the analyzer core and algorithms. Of course, the tools can differ in the friendliness of the interface, in the set of functionality, in the set of plugins for different systems and in the ease of use of the API. This is probably a topic for a separate article.

Intermediate presentation

In the scheme of operation of a static analyzer, three main steps can be distinguished.

- Building an intermediate representation (an intermediate representation is also called an internal representation or code model).

- The use of static analysis algorithms, as a result of which the code model is supplemented with new information.

- Applying vulnerability search rules to an augmented code model.

Different static analyzers can use different code models, for example, the program source code, token stream, parse tree, three-address code, control flow graph, bytecode - standard or native - and so on.

Like compilers, lexical and syntactic analysis is used to build an internal representation, most often an analysis tree (AST, Abstract Syntax Tree). The lexical analysis breaks the program text into minimal semantic elements, at the output receiving a stream of tokens. Parsing verifies that the token stream matches the grammar of the programming language, that is, the resulting token stream is correct from a language point of view. As a result of parsing, a parsing tree is built - a structure that models the source code of the program. Next, semantic analysis is applied; it checks the fulfillment of more complex conditions, for example, the correspondence of data types in assignment instructions.

The parse tree can be used as an internal representation. You can also get other models from the parse tree. For example, you can translate it into a three-address code, which, in turn, builds a control flow graph (CFG). Typically, CFG is the primary model for static analysis algorithms.

In binary analysis (static analysis of binary or executable code), a model is also built, but the reverse development practices are already used here: decompilation, deobfuscation, reverse translation. As a result, you can get the same models as from the source code, including the source code (using full decompilation). Sometimes the binary code itself can serve as an intermediate representation.

Theoretically, the closer the model to the source code, the worse the quality of the analysis. On the source code itself, you can only search for regular expressions, which will not allow you to find at least any complex vulnerability.

Data flow analysis

One of the main static analysis algorithms is data flow analysis. The task of this analysis is to determine at each point in the program some information about the data that the code operates on. The information may be different, for example, data type or value. Depending on what information needs to be determined, the task of analyzing the data flow can be formulated.

For example, if it is necessary to determine whether an expression is a constant, as well as the value of this constant, then the problem of propagating constants (constant propagation) is solved. If it is necessary to determine the type of a variable, then we can talk about the problem of type propagation. If you need to understand which variables can point to a specific memory area (store the same data), then we are talking about the task of synonyms analysis (alias analysis). There are many other data flow analysis tasks that can be used in a static analyzer. Like the stages of building a code model, these tasks are also used in compilers.

In the theory of constructing compilers, solutions to the problem of intra-procedural analysis of a data stream are described (it is necessary to track data in a single procedure / function / method). Decisions are based on the theory of algebraic lattices and other elements of mathematical theories. The problem of analyzing the data flow can be solved in polynomial time, that is, for a time acceptable for computers, if the conditions of the problem satisfy the conditions of the solvability theorem, which in practice does not always happen.

We will tell you more about solving the problem of intra-procedural analysis of the data flow. To set a specific task, in addition to determining the information you need, you need to determine the rules for changing this information when passing data according to instructions in CFG. Recall that the nodes in the CFG are the basic blocks - sets of instructions, the execution of which is always sequential, and the arcs indicate the possible transfer of control between the basic blocks.

For each instruction

(information generated by the instruction

),

(information destroyed by the instruction

),

(information at the point before the instruction

),

(information at the point after the instruction

)

The goal of data flow analysis is to define sets

The second relation formulates the rules according to which information is “combined” at the points of confluence of CFG arcs (

The desired information (the set of values of the functions introduced above) is formalized as an algebraic lattice. Functions

Algorithms for solving data flow analysis problems look for maximum fixed points. There are several approaches to the solution: iterative algorithms, analysis of strongly connected components, T1-T2 analysis, interval analysis, structural analysis, and so on. There are theorems on the correctness of these algorithms; they determine the scope of their applicability to real problems. I repeat, the conditions of the theorems may not be fulfilled, which leads to more complicated algorithms and inaccurate results.

Interprocedural analysis

In practice, it is necessary to solve the problems of interprocedural analysis of the data flow, since rarely the vulnerability will be completely localized in one function. There are several common algorithms.

Inline functions . At the function call point, we embed the called function, thereby reducing the task of interprocedural analysis to the task of intraprocedural analysis. This method is easily implemented, but in practice, when it is applied, a combinatorial explosion is quickly achieved.

Building a general graph of the program control flow, in which function calls are replaced by transitions to the start address of the called function, and return instructions are replaced by transitions to all instructions following all instructions for calling this function. This approach adds a large number of unrealizable execution paths, which greatly reduces the accuracy of the analysis.

An algorithm similar to the previous one, but when switching to a function, the context is saved - for example, a stack frame. Thus, the problem of creating unrealizable paths is solved. However, the algorithm is applicable with limited call depth.

Building information about functions (function summary).The most applicable interprocedural analysis algorithm. It is based on the construction of a summary for each function: the rules by which information about data is converted when applying this function, depending on the various values of the input arguments. Ready-made summaries are used for intraprocedural analysis of functions. A separate complication here is the determination of the order of traversal of functions, since in case-by-case analysis, a summary should already be built for all called functions. Usually, special iterative algorithms for traversing a call graph are created.

Interprocedural analysis of the data stream is an exponentially difficult task, which is why the analyzer needs to carry out a number of optimizations and assumptions (it is impossible to find the exact solution in adequate time for computing power). Usually, when developing an analyzer, it is necessary to find a compromise between the amount of resources consumed, the analysis time, the number of false positives and vulnerabilities found. Therefore, a static analyzer can work for a long time, consume a lot of resources and give false positives. However, without this it is impossible to find the most important vulnerabilities.

It is at this point that serious static analyzers differ from many open tools, which, in particular, can position themselves in the search for vulnerabilities. Quick checks in linear time are good when you need to get the result quickly, for example, during compilation. However, this approach cannot find the most critical vulnerabilities - for example, related to the implementation of data.

Taint analysis

Separately, it is worth dwelling on one of the tasks of data flow analysis - taint analysis. Taint analysis allows you to distribute flags throughout the program. This task is key to information security, because it is with the help of it that vulnerabilities are discovered related to the implementation of data (SQL injection, crossite scripting, open redirects, falsification of the file path, and so on), as well as leakage of confidential data (password entry in event logs, insecure data transfer).

Let's try to model the task. Suppose we want to track n flags -

Next we need to define the flow functions. In this case, the flow functions can be determined by the following considerations.

- A lot of rules are specified in which constructions are defined that lead to the appearance or change of a set of flags.

- The assignment operation flips flags from the right to the left.

- Any operation unknown for rule sets combines flags from all operands and the final set of flags is added to the operation results.

Finally, you need to define the rules for merging information at the junction points of CFG arcs. Merging is determined by the union rule, that is, if different sets of flags for one variable came from different base blocks, then they are merged when merging. Including false positives come from here: the algorithm does not guarantee that the path to the CFG on which the flag appeared can be executed.

For example, you need to detect vulnerabilities such as SQL Injection. This vulnerability occurs when unverified data from the user falls into the methods of working with the database. It is necessary to determine that the data came from the user and add the taint flag to that data. Typically, the analyzer’s rule base sets the rules for setting the taint flag. For example, set a flag to the return value of the getParameter () method of the Request class.

Next, you need to distribute the flag throughout the analyzed program using taint analysis, given that the data can be validated and the flag may disappear on one of the execution paths. The analyzer sets many functions that remove flags. For example, the function of validating data from html tags can clear the flag for cross-site scripting (XSS) vulnerabilities. Or, the function to bind a variable to an SQL expression removes the flag for embedding in SQL.

Vulnerability Search Rules

As a result of applying the above algorithms, the intermediate representation is supplemented with the information necessary to search for vulnerabilities. For example, in the code model, information appears about which variables belong to certain flags, which data is constant. Vulnerability search rules are formulated in terms of a code model. The rules describe what features in the final interim view may indicate a vulnerability.

For example, you can apply a vulnerability search rule that defines a method call with a parameter that has the taint flag. Returning to the example of SQL injection, we verify that the variables with the taint flag do not fall into the database query function.

It turns out that an important part of the static analyzer, in addition to the quality of the algorithms, is the configuration and the rule base: a description of which constructs in the code generate flags or other information, which constructs validate such data, and for which constructs the use of such data is critical.

Other approaches

In addition to data flow analysis, there are other approaches. One of the famous is symbolic execution or abstract interpretation. In these approaches, the program runs on abstract domains, the calculation and distribution of data restrictions in the program. Using this approach, one can not only find the vulnerability, but also calculate the conditions on the input data under which the vulnerability is exploitable. However, this approach has serious drawbacks - with standard solutions on real programs, algorithms explode exponentially, and optimizations lead to serious losses in the quality of analysis.

conclusions

In the end, I think it’s worth summing up, speaking about the pros and cons of static analysis. It is logical that we will compare with dynamic analysis, in which the vulnerability search occurs during program execution.

The undoubted advantage of static analysis is the complete coverage of the analyzed code. Also, the advantages of static analysis include the fact that to run it there is no need to run the application in a combat environment. Static analysis can be implemented at the very early stages of development, minimizing the cost of vulnerabilities found.

The disadvantages of static analysis are the inevitable presence of false positives, resource consumption and a long scan time on large amounts of code. However, these disadvantages are inevitable, based on the specifics of the algorithms. As we saw, a quick analyzer will never find a real vulnerability such as SQL injection and the like.

We wrote in another article about the remaining difficulties of using static analysis tools, which, as it turns out, can be overcome quite well .