When you need speed and scale: a server of distributed iOS devices

Many developers who write iOS UI tests are probably familiar with the problem of long test runs. Badoo runs over 1,400 end-to-end tests of its iOS applications in each regression run. That is more than 40 machine-hours of tests, which complete in 30 minutes of wall-clock time.

Nikolai Abalov from Badoo explained how he cut test execution time from 1.5 hours to 30 minutes; how the team untangled tightly coupled tests and iOS infrastructure by moving to a device server; and how this made it easier to run tests in parallel and made both the tests and the infrastructure easier to maintain and scale.

You'll learn how easy it is to run tests in parallel with tools like fbsimctl, and how separating tests and infrastructure can make it easier to host, support, and scale your tests.

Nikolai originally gave this as a talk at the Heisenbug conference (you can watch the video); for Habr, we have prepared a text version. What follows is told in the first person:

Hello everyone, today I will talk about scaling testing for iOS. My name is Nikolai, and I mainly work on iOS infrastructure at Badoo. Before that, I worked at 2GIS for three years on development and automation; in particular, I wrote Winium.Mobile, an implementation of WebDriver for Windows Phone. Badoo hired me to work on Windows Phone automation, but after a while the business decided to suspend development for that platform, and I was offered interesting work on iOS automation, which is what I will talk about today.

What are we going to talk about? The plan is as follows:

- An informal problem statement and an introduction to the tools we use: how and why.

- Parallel testing on iOS and how it has evolved (in particular, at our company, where we started working on it back in 2015).

- The device server, the main part of the talk: our new test parallelization model.

- The results we achieved with the server.

- If you do not have 1,500 tests, you may not really need a device server, but you can still borrow some useful tricks from it, and we will talk about them. They apply even if you have 10-25 tests and will still give you either a speed-up or extra stability.

- And finally, a wrap-up.

Tools

First, a little about what we use. Our stack is somewhat non-standard, because we use both Calabash and WebDriverAgent at the same time. This gives us speed and Calabash backdoors for automating our own application, plus full access to the system and other applications through WebDriverAgent. WebDriverAgent is Facebook's implementation of WebDriver for iOS, and Appium uses it internally. Calabash is an embedded server for automation. We write the tests themselves in human-readable form using Cucumber, so our company practices a kind of pseudo-BDD. And because we used Cucumber and Calabash, we inherited Ruby: all our code is written in it. There is a lot of that code, so we have to keep writing in Ruby. To run tests in parallel, we use parallel_cucumber, a tool written by one of my colleagues at Badoo.

Let's start with what we had. When I began preparing this talk, there were 1,200 tests. By the time I finished preparing it, there were 1,300. By the time I got here, there were already 1,400. These are end-to-end tests, not unit or integration tests. They add up to 35-40 hours of machine time on a single simulator. Previously they ran in an hour and a half; I'll tell you how we got them to run in 30 minutes.

Our workflow involves branches, reviews, and running tests on those branches. Developers open about 10 pull requests to the main repository of our application. But the repository also contains components shared with other applications, so sometimes there are more than ten. As a result, there are at least 30 test runs per day: developers push, realize they pushed with bugs, push again, and each push triggers a full regression simply because we can run one. On the same infrastructure, we run additional projects such as Liveshot, which takes screenshots of the application in the main user scenarios in all languages so that translators can check that translations are correct, fit on the screen, and so on. Altogether, this currently comes to about one and a half thousand hours of machine time.

First of all, we want developers and testers to trust automation and rely on it to reduce manual regression testing. For this, automation has to be fast and, most importantly, stable and reliable. If the tests take an hour and a half, the developer gets tired of waiting for the results, starts another task, and switches focus. And when some tests fail, he will hardly be thrilled to come back, switch his attention again, and fix something. If the tests are unreliable, over time people start to see them only as an obstacle: they fail constantly even though there are no bugs in the code. These are flaky tests, and they just get in the way. These two points translated into the following requirements:

- Tests should take 30 minutes or less.

- They must be stable.

- They must be scalable, so that we can add another hundred tests and still fit into half an hour.

- The infrastructure should be easy to maintain and develop.

- Tests should be launched the same way on simulators and on physical devices.

We mainly run tests on simulators rather than physical devices, because it is faster, more stable, and easier. Physical devices are used only for tests that really require them, for example, tests of the camera, push notifications, and the like.

How do we satisfy these requirements and do everything well? The answer is very simple: delete two-thirds of the tests! This solution fits into 30 minutes (because only a third of the tests remain), scales easily (you can delete more tests), and improves reliability (because the first tests we delete are the least reliable ones). That's all from me. Questions?

But seriously, there is some truth in every joke. If you have a lot of tests, you should review them and figure out which ones bring real value. Our task was different, though, so we decided to see what else could be done.

The first approach is to filter tests based on coverage or components, that is, to select the relevant tests based on which files changed in the application. I will not talk about it here, but it is one of the problems we are working on right now.

Another option is to speed up and stabilize the tests themselves. You take a specific test, see which steps take the most time, and check whether they can be optimized. If some steps fail often, you fix them, because that reduces test restarts, and everything runs faster.

And finally, a completely different task: parallelizing tests, distributing them over a large number of simulators, and providing a scalable and stable infrastructure with enough capacity to parallelize across.

In this article we will mostly talk about the last two approaches: parallelization in the main part, and speeding up and stabilizing tests at the end, in tips & tricks.

Parallel testing for iOS

Let's start with the history of parallel testing for iOS in general and at Badoo in particular. To begin with, some simple arithmetic (the formula on the slide has an error, by the way, if you check the units):

On one simulator, 1,300 tests take about 40 hours. Then Satish, my manager, comes and says he needs them to run in half an hour. We have to come up with something. An X appears in the formula: how many simulators must we run for everything to finish in half an hour? The answer is 80 simulators (40 hours divided by 0.5 hours). And the question immediately arises of where to put these 80 simulators, because they do not fit anywhere.
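The sizing arithmetic is a single division. A tiny Ruby helper makes its hidden assumption explicit: perfect parallelism with no boot overhead and evenly sized tests, which real runs never quite achieve.

```ruby
# Back-of-the-envelope sizing: how many simulators are needed so that a
# suite taking `serial_hours` on one simulator finishes in `target_hours`?
# Assumes perfect parallelism (no boot overhead, evenly sized tests).
def simulators_needed(serial_hours, target_hours)
  # Rational avoids floating-point surprises before rounding up.
  (Rational(serial_hours) / Rational(target_hours)).ceil
end

# 1,300 tests take about 40 hours on a single simulator; the goal is 30 minutes.
puts simulators_needed(40, 0.5) # => 80
```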

There are several options: you can go to clouds like SauceLabs, Xamarin, or AWS Device Farm. Or you can build everything in-house and do it well. Given that this article exists, we built everything in-house. We chose this because a cloud at that scale would be quite expensive, and also because when iOS 10 came out, it took Appium almost a month to release support for it. That meant that for a month we could not run automated tests on iOS 10 in SauceLabs, which did not suit us at all. In addition, all clouds are closed, and you cannot influence them.

So, we decided to do everything in-house. We started around 2015, when Xcode could not run more than one simulator. More precisely, as it turned out, it could not run more than one simulator per user on a single machine. With many users, you could run as many simulators as you liked. My colleague Tim Boverstock came up with a model that we lived with for a long time.

There is an agent (TeamCity, a Jenkins node, or the like) that runs parallel_cucumber, which simply connects to remote machines via ssh. The picture shows two machines and two users. All necessary files, such as the tests, are copied to the remote machines and run there via ssh, and the tests then launch the simulator locally on the current desktop. To make this work, you first had to go to each machine and create, for example, 5 users if you wanted 5 simulators, set up automatic login for one user, and open screen sharing for the rest so that they always had a desktop. You also had to configure the ssh daemon so that it had access to processes on the desktop. In this simple way, we started running tests in parallel. But this picture has several problems. First, the tests control the simulator and run in the same place as the simulator itself. That is, they always have to run on Macs, and they consume resources that could otherwise be spent on running simulators. As a result, each machine runs fewer simulators, and each simulator costs more. Second, you have to go to each machine and configure the users. And then you simply run into the global ulimit: if there are five users and they spawn a lot of processes, at some point the system runs out of file descriptors. Once the limit is reached, tests start failing when they try to open new files or start new processes.

In 2016-2017, we decided to switch to a slightly different model. We watched a talk by Lawrence Lomax of Facebook, where they presented fbsimctl and described part of Facebook's infrastructure. There was also a talk by Viktor Koronevich about this model. The picture is not very different from the previous one: we simply got rid of the extra users. But this is a big step forward, since now there is only one desktop, fewer processes are launched, and simulators have become cheaper. There are three simulators in this picture, not two, because the freed-up resources let us launch an additional one. We lived with this model for a very long time, until mid-October 2017, when we began switching to our remote device server.

This is what the hardware looked like. On the left is a box of MacBooks. Why we ran all the tests on them is a separate long story. Running the tests on MacBooks packed into a metal box was not a good idea, because by the afternoon they started to overheat: heat does not dissipate well when they lie flat like that. Tests became unstable; in particular, simulators started crashing while booting.

We solved this simply: we stood the laptops up "in tents", which increased the airflow area, and the stability of the infrastructure suddenly improved.

So sometimes you don't need to touch the software; you just need to go around flipping laptops.

But if you look at this picture, you see a mess of wires, adapters, and sheet metal. That was the hardware side, and it was still fine. On the software side, tests and infrastructure were completely intertwined, and we could not go on living like that.

We identified the following issues:

- Tests were tightly coupled to the infrastructure: they launched simulators and managed their entire life cycle.

- This made scaling difficult, because adding a new node meant setting it up both for the tests and for running simulators. For example, if you wanted to update Xcode, you had to add workarounds directly to the tests, because they ran on different versions of Xcode. Heaps of ifs appeared just to launch the simulator.

- Tests were tied to the machine where the simulator was located, and this cost a pretty penny, since they had to run on Macs instead of cheaper *nix machines.

- And it was always very easy to dig into the simulator. In some tests, we went into the simulator's file system and deleted or changed some files there. Everything was fine until this was done in three different ways in three different tests, and then a fourth test unexpectedly started failing if it was unlucky enough to run after those three.

- And finally, resources were not shared in any way. For example, there were four TeamCity agents with five machines connected to each, and tests could run only on their own five machines. There was no centralized resource management, so when only one task came in, it ran on five machines while the other 15 sat idle. Because of this, builds took a very long time.

New model

We decided to switch to a beautiful new model.

We moved all the tests to a single machine, the one running the TeamCity agent. This machine can now run *nix, or even Windows if you so wish. The tests communicate over HTTP with something we call the device server. All simulators and physical devices live somewhere behind it; the tests run here, request a device via HTTP, and continue working with it. The scheme is very simple: there are only two elements in the diagram.

In reality, of course, ssh and more remained behind the server. But now it doesn't bother anyone, because the people who write tests are at the top of this diagram, and they have a specific interface for working with a local or remote simulator, so they are fine. I now work at the bottom, where everything is as it was, and we have to live with that.

What does it give?

- First, separation of responsibilities. At some point, test automation has to be treated as normal development that follows the same principles and approaches developers use.

- The result is a strictly defined interface: you cannot act on the simulator directly; instead, you open a ticket for the device server, and we figure out how to do it optimally without breaking other tests.

- The test environment became cheaper, because we moved it to *nix machines, which are much cheaper than server Macs.

- And resource sharing appeared: because there is a single layer that everyone talks to, it can plan how the machines behind it are distributed, that is, share resources between agents.

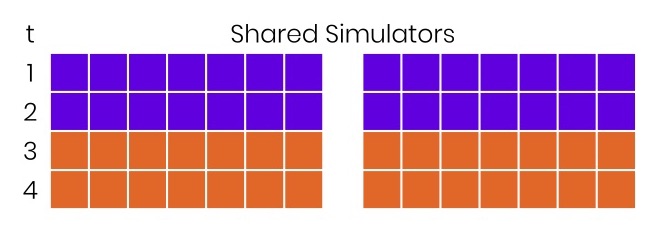

The picture above shows how things were before. On the left are arbitrary units of time, say, tens of minutes. There are two agents with 7 simulators connected to each. At time 0 a build comes in and takes 40 minutes. After 20 minutes, another one arrives and takes the same time. Everything seems great. But in both places there are gray squares, which mean we lost money because we did not use the available resources.

Instead, you can do this: the first build comes in, sees all the free simulators, spreads across them, and runs twice as fast. It took no effort at all. In reality, this situation is common, because developers rarely push their branches at the same moment. Sometimes they do, and then "checkerboard" and "pyramid" patterns and the like appear. However, in most cases you get a free 2x speed-up simply by introducing centralized management of all resources.
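To make the 2x claim concrete, here is a toy model of the two schedules in the picture, where each build needs 280 simulator-minutes (40 minutes on 7 simulators). The first-come, first-served "whole pool" policy is a deliberate simplification for illustration, not a description of how the device server actually allocates devices.

```ruby
# Toy model of the two scheduling strategies. Each build needs `work`
# simulator-minutes. Assumptions (not from the talk): tests split perfectly
# across simulators, and the shared pool serves builds first-come, first-served.
Build = Struct.new(:arrival, :work)

# Static split: each build runs only on its own agent's simulators.
def finish_static(builds, sims_per_agent)
  builds.map { |b| b.arrival + b.work.to_f / sims_per_agent }
end

# Shared pool (crude FIFO): a build takes the whole pool; a build that
# arrives while the pool is busy waits until it frees up.
def finish_shared(builds, pool_size)
  pool_free_at = 0.0
  builds.sort_by(&:arrival).map do |b|
    start = [pool_free_at, b.arrival].max
    pool_free_at = start + b.work.to_f / pool_size
  end
end

builds = [Build.new(0, 280), Build.new(20, 280)] # 40 minutes each on 7 simulators
p finish_static(builds, 7)  # => [40.0, 60.0]
p finish_shared(builds, 14) # => [20.0, 40.0]
```

Both builds finish twice as fast from their own point of view, and the last one finishes 20 minutes earlier, without adding a single machine.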

Other reasons to move on to this:

- Black-boxing: the device server is now a black box. When you write tests, you think only about tests and assume the black box will always work. If it doesn't, you just go and knock on the door of whoever is responsible, that is, me, and I have to fix it. Actually, not only me: several people work on the infrastructure as a whole.

- You cannot mess up the internals of the simulator.

- You don't need to install a million utilities on the machine to get everything running; you install one utility that hides all the work behind the device server.

- Updating the infrastructure has become easier, which we will talk about toward the end.

A reasonable question: why not Selenium Grid? First, we had a lot of legacy code: 1,500 tests and 130,000 lines of code for different platforms. All of it was driven by parallel_cucumber, which managed the simulator life cycle outside the test. That is, a dedicated system booted the simulator, waited for it to be ready, and handed it to the test. To avoid rewriting everything, we decided not to use Selenium Grid.

We also have many non-standard actions, and we rarely use WebDriver. The bulk of the tests use Calabash, and WebDriver plays only a supporting role. That is, in most cases we do not use Selenium at all.

And, of course, we wanted everything to be flexible and easy to prototype, because the whole project started simply as an idea that we decided to try out: we implemented it in a month, it worked, and it became the main solution in our company. By the way, we first wrote the device server in Ruby and then rewrote it in Kotlin. So the tests are in Ruby and the server is in Kotlin.

Device server

Now more about the device server itself and how it works. When we first started researching this problem, we used the following tools:

- xcrun simctl and fbsimctl: command-line utilities for managing simulators (the first is Apple's official tool; the second, from Facebook, is a little more convenient to use)

- WebDriverAgent, also from Facebook, to drive applications outside our app's process, for example when a push notification arrives

- ideviceinstaller, to install the application on physical devices and then automate it there.

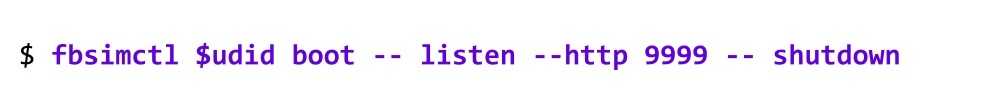

By the time we started writing the device server, we had looked into this more closely. It turned out that fbsimctl could already do everything that xcrun simctl and ideviceinstaller could, so we simply dropped those two and kept only fbsimctl and WebDriverAgent. That alone was a simplification. Then we thought: why write anything ourselves? Surely Facebook already has it all. Indeed, fbsimctl can act as a server. You can start it like this:

This boots the simulator and starts a server that listens for commands.

When you stop the server, it will automatically shut down the simulator.

What commands can you send? For example, send list using curl, and it returns complete information about the device:

It is all JSON, so it is easy to parse and work with from code. Facebook implemented a huge number of commands that let you do almost anything with the simulator.
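To show how little code the JSON needs, here is a short Ruby sketch. fbsimctl's own output schema is not reproduced in this article, so as a stand-in the sketch parses the JSON printed by Apple's `xcrun simctl list --json devices`; the field names (`devices`, `state`, `name`, `udid`) follow recent Xcode versions and are worth double-checking on yours.

```ruby
require "json"

# Return the booted simulators from `xcrun simctl list --json devices` output.
# Shape assumed: {"devices": {"<runtime id>": [{"state", "name", "udid", ...}]}}
def booted_devices(json_text)
  JSON.parse(json_text).fetch("devices").flat_map do |runtime, devices|
    devices.select { |d| d["state"] == "Booted" }
           .map { |d| { runtime: runtime, name: d["name"], udid: d["udid"] } }
  end
end

sample = <<~JSON
  {"devices": {
    "com.apple.CoreSimulator.SimRuntime.iOS-11-2": [
      {"state": "Booted",   "name": "iPhone 6s", "udid": "AAAA-1111"},
      {"state": "Shutdown", "name": "iPhone X",  "udid": "BBBB-2222"}
    ]
  }}
JSON

p booted_devices(sample)
```

On a Mac, you would pass it the live output instead, for example `booted_devices(IO.popen(%w[xcrun simctl list --json devices], &:read))`.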

For example, approve grants permission to use the camera, location, and notifications. The open command opens deep links in the application. It would seem that you don't need to write anything and can just use fbsimctl. But it turned out that some commands were missing:

It is easy to guess that without them you cannot launch a new simulator. That is, someone has to go to the machine in advance and boot the simulator. And most importantly, you cannot run tests on the simulator. Without these commands, the server cannot be used remotely, so we drew up a complete list of the additional requirements we needed.

- First, creating and booting simulators on demand. Liveshot can ask for an iPhone X at any moment and then for an iPhone 5S, while most tests run on an iPhone 6s. We must be able to create the required number of simulators of each type on demand.

- We also need to be able to run WebDriverAgent or other XCUITests on simulators or physical devices to drive the automation itself.

- And we wanted to completely hide how requests are satisfied. If your tests need to check something on iOS 8 for backward compatibility, they should not need to know which machine to go to for that device. They simply ask the device server for iOS 8, and if such a machine exists, the server finds it, prepares it, and returns a device from it. fbsimctl had nothing like this.

- Next, various additional steps, such as deleting cookies in tests, which saves a whole minute per test, and other tricks that we will cover at the very end.

- And finally, simulator pooling. Our idea was that since the device server now lives separately from the tests, it could boot all the simulators in advance, so that when tests arrive, a simulator is ready to work instantly, saving us time. In the end we didn't do it, because booting simulators was already very fast. More on that at the very end too; consider it a spoiler.
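The requirement to hide where a device comes from boils down to a matching step on the server side. Here is a hypothetical sketch: `Host`, its fields, and the least-loaded policy are illustrative assumptions, not the device server's real data model.

```ruby
# Hypothetical host inventory: which runtimes a machine has installed,
# how many simulators it can run, and how many are currently in use.
Host = Struct.new(:name, :runtimes, :capacity, :in_use) do
  def free_slots
    capacity - in_use
  end
end

# Return the least-loaded host that supports the requested runtime and still
# has room for another simulator, or nil if none qualifies.
def pick_host(hosts, runtime)
  hosts.select { |h| h.runtimes.include?(runtime) && h.free_slots.positive? }
       .max_by(&:free_slots)
end

hosts = [
  Host.new("mac-1", ["iOS 11.2"], 6, 5),
  Host.new("mac-2", ["iOS 11.2", "iOS 8.4"], 6, 2),
  Host.new("mac-3", ["iOS 11.2"], 6, 6)
]

p pick_host(hosts, "iOS 8.4")&.name  # => "mac-2"
p pick_host(hosts, "iOS 11.2")&.name # => "mac-2"
p pick_host(hosts, "iOS 9.3")        # => nil
```

The caller only ever states a capability; which machine satisfies it stays an implementation detail of the server.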

The picture shows an example of the interface: we wrote a wrapper, a client for working with a remote device. The dots stand for various methods from Facebook's tooling that we simply duplicated. Everything else is our own methods, such as quick device reset, cookie cleanup, and collecting various diagnostics.

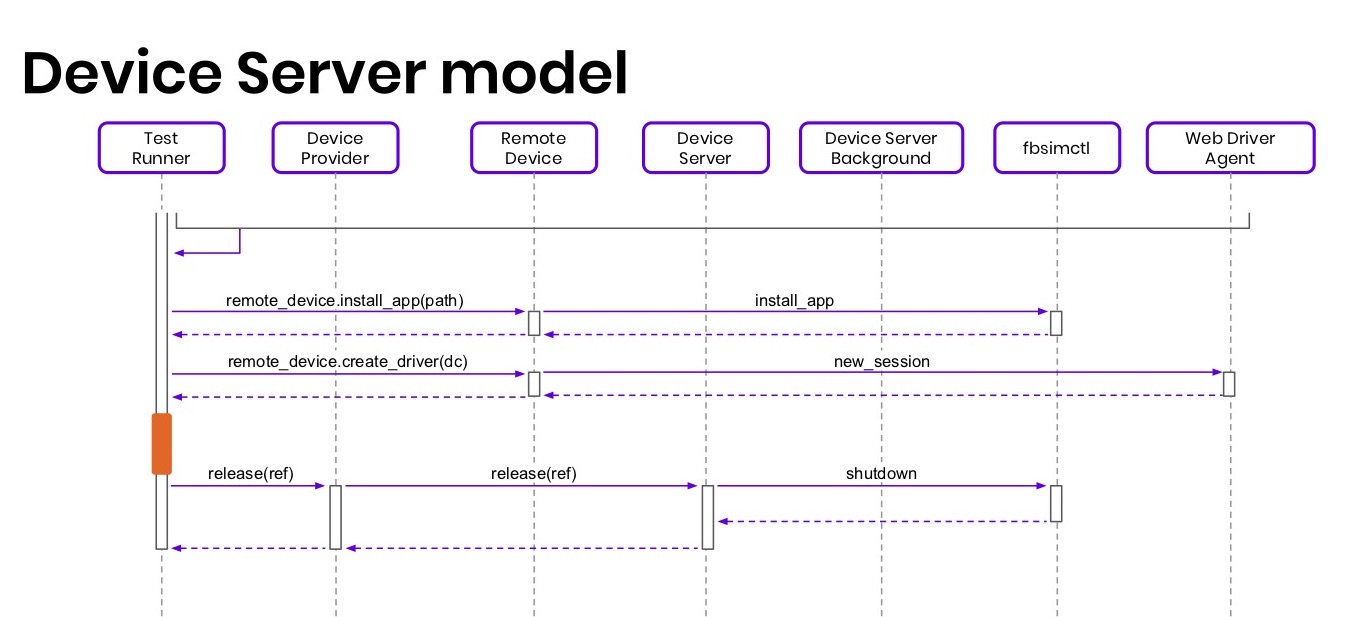

The whole scheme looks roughly like this: a Test Runner runs the tests and prepares the environment; a Device Provider is the Device Server client used to request devices; Remote Device is a wrapper over a remote device; and Device Server is the device server itself. Everything behind it is hidden from the tests: background threads that clean up the disk and perform other important tasks, plus fbsimctl with WebDriverAgent.

How does it all work? From the tests or the Test Runner, we request a device with a certain capability, for example, iPhone 6. The request goes to the Device Provider, which forwards it to the device server. The server finds a suitable machine, starts a background thread there to prepare the simulator, and immediately returns a reference to the tests: a token, a promise that the device will be created and booted. With this token, you can go to the Device Server and ask for the device. We turn the token into an instance of the RemoteDevice class, which the tests can then work with.
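The token flow is essentially a future: the server answers immediately and does the slow work in the background. Here is a minimal Ruby sketch using one thread per request. `TokenDeviceServer`, `request`, and `device` are hypothetical names, the `prepare` block stands in for booting the simulator and starting WebDriverAgent, and the `RemoteDevice` Struct is only a placeholder for the real class.

```ruby
require "securerandom"

# Hypothetical sketch of the token flow: `request` returns a token right away
# while a background thread prepares the device; `device` blocks until the
# preparation finishes and returns the result.
class TokenDeviceServer
  def initialize(&prepare)
    @prepare = prepare
    @jobs = {}
    @lock = Mutex.new
  end

  # Start preparing a device for `capability`; return a token immediately.
  def request(capability)
    token = SecureRandom.uuid
    job = Thread.new { @prepare.call(capability) }
    @lock.synchronize { @jobs[token] = job }
    token
  end

  # Redeem a token: wait for the background job and return its result.
  # An exception raised while preparing is re-raised here by Thread#value.
  def device(token)
    job = @lock.synchronize { @jobs.fetch(token) }
    job.value
  end
end

RemoteDevice = Struct.new(:udid, :model) # placeholder for the real class

server = TokenDeviceServer.new do |capability|
  sleep 0.1 # pretend to boot a simulator
  RemoteDevice.new(SecureRandom.uuid, capability[:model])
end

token = server.request(model: "iPhone 6") # returns immediately
device = server.device(token)             # blocks until the device is ready
puts device.model                         # => iPhone 6
```

Because the token is returned before the device exists, the Test Runner can request many devices at once and let them boot in parallel.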

All this happens almost instantly, while fbsimctl starts booting the simulator in parallel in the background. We now boot simulators in headless mode. If you remember the first picture of the hardware, it showed many simulator windows: back then we did not boot them headless. Now they boot out of sight, and you won't see anything. We simply wait until the simulator is fully booted, for example until a SpringBoard entry appears in syslog, along with other heuristics for determining readiness.

As soon as it boots, we run XCTest, which actually brings up WebDriverAgent, and start polling it with healthCheck, because WebDriverAgent sometimes fails to come up, especially when the system is heavily loaded. In parallel, a loop starts that waits for the device to report "ready"; it is essentially the same healthCheck. Once the device is fully booted and ready for testing, the loop exits.
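Both waits above (syslog heuristics, then the WebDriverAgent health check) are instances of one pattern: poll a check until it passes or a deadline expires. A minimal sketch, where the check is any block and the timeout and interval values are placeholders rather than the device server's real settings:

```ruby
# Raised when a readiness check never passes before the deadline.
class ReadinessTimeout < StandardError; end

# Poll the given block until it returns truthy or `timeout` seconds elapse.
# A monotonic clock keeps the deadline correct even if wall time jumps.
def wait_until(timeout:, interval: 0.5)
  deadline = Process.clock_gettime(Process::CLOCK_MONOTONIC) + timeout
  loop do
    return true if yield
    if Process.clock_gettime(Process::CLOCK_MONOTONIC) >= deadline
      raise ReadinessTimeout, "not ready after #{timeout}s"
    end
    sleep interval
  end
end

# Example: a fake health check that succeeds on the third attempt.
attempts = 0
wait_until(timeout: 5, interval: 0.01) { (attempts += 1) >= 3 }
puts attempts # => 3
```

In a real setup, the block might grep the simulator's syslog for SpringBoard or make an HTTP request to WebDriverAgent; on timeout, the server can restart WebDriverAgent instead of handing a broken device to the tests.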

Now you can install the application on it simply by sending a request to fbsimctl. Everything here is elementary. You can also create a driver: the request is proxied to WebDriverAgent, which creates a session. After that, you can run the tests.

The tests are a small part of this whole scheme. In them, you can keep talking to the device server to perform actions such as deleting cookies, starting video recording, retrieving the video, and so on. At the end, you release the device, which cleans up all resources, flushes caches, and the like. Releasing the device explicitly is actually optional: the device server does it itself, because sometimes tests crash along with the Test Runner and never release their devices. This scheme is greatly simplified; it omits many steps and background jobs that let the server run for a month without problems or reboots.
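One way to make explicit release optional is to lease each device with a time-to-live and let a background reaper reclaim expired leases. The talk does not describe the actual mechanism, so this is a hypothetical sketch under that assumption; the TTL, the class name, and the injectable clock (used here to keep the example deterministic) are all illustrative.

```ruby
# Hypothetical lease table: tests lease a device and renew the lease while
# they use it; a background reaper reclaims leases whose owners vanished.
class LeaseTable
  def initialize(ttl:, clock: -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) })
    @ttl = ttl
    @clock = clock
    @expires = {}
    @lock = Mutex.new
  end

  def lease(udid)
    @lock.synchronize { @expires[udid] = @clock.call + @ttl }
  end
  alias renew lease

  def release(udid)
    @lock.synchronize { @expires.delete(udid) }
  end

  # Called periodically by a background thread; returns the reclaimed UDIDs so
  # the caller can clean them up (reset the simulator, flush caches, ...).
  def reap
    now = @clock.call
    @lock.synchronize do
      expired = @expires.select { |_, t| t <= now }.keys
      expired.each { |udid| @expires.delete(udid) }
      expired
    end
  end
end

now = 0
table = LeaseTable.new(ttl: 60, clock: -> { now })
table.lease("sim-a")
table.lease("sim-b")
table.release("sim-a") # a well-behaved test releases its device
now = 61               # the runner holding "sim-b" crashed and never came back
p table.reap           # => ["sim-b"]
```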

Results and next steps

The most interesting part is the results, and they are simple. We went from 30 machines to 60; these are virtual machines, not physical ones. Most importantly, we reduced the run time from an hour and a half to 30 minutes. Which raises the question: if the number of machines only doubled, why did the time drop threefold?

It's actually simple. The first reason is the resource sharing I showed earlier: it gave an additional speed-up in most cases, since developers start their jobs at different times.

The second reason is the separation of tests and infrastructure. After that, we finally understood how everything worked and could optimize each part individually, squeezing a bit more speed out of the system. Separation of concerns is a very important idea, because when everything is intertwined, it becomes impossible to grasp the system as a whole.

Updates have also become easier. For example, when I first joined the company, we upgraded to Xcode 9, which took more than a week even with the few machines we had then. The last time, we upgraded to Xcode 9.2, and it took literally a day and a half, most of it spent copying files. We didn't even need to be involved; it just ran on its own.

We greatly simplified the Test Runner, which used to include rsync, ssh, and other logic. All of that has been thrown out, and the Test Runner now runs somewhere on *nix, in Docker containers.

Next steps: we are preparing the device server for open source (after the talk, it was published on GitHub), and we are thinking about removing ssh, because it requires additional configuration on the machines and generally complicates the logic and maintenance of the whole system. But already today you can take the device server, give it ssh access to all your machines, and the tests will run on them without problems.

Tips & tricks

Now the most important part: all sorts of tricks and useful things we discovered while building the device server and this infrastructure.

The first is the simplest. As you remember, we had MacBook Pros: all tests ran on laptops. Now we run them on Mac Pros.

Here are the two configurations; they are the top models of each machine. On the MacBook, we could reliably run 6 simulators in parallel. If you try to boot more at the same time, the simulators start failing because they load the CPU heavily, lock each other, and so on. On the Mac Pro you can run 18, which is easy to calculate: it has 12 cores instead of 4, so three times the cores gives three times 6, about 18 simulators. You can actually try to run a few more, but their boots must be spread out in time; you cannot, for example, boot them all within one minute. There is a catch with those 18 simulators, though: it is not that simple.
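The rule of thumb above scales a known-good baseline (6 simulators on a 4-core MacBook Pro) by core count, and boots should be staggered. A small sketch of both; the 10-second default gap is a hypothetical value to tune per machine, not a figure from the talk.

```ruby
# Rule of thumb from the talk: capacity scales with core count relative to a
# known-good baseline of 6 simulators on a 4-core MacBook Pro.
def simulator_capacity(cores, baseline_cores: 4, baseline_sims: 6)
  (baseline_sims * cores) / baseline_cores # integer division rounds down
end

# Staggered boot offsets in seconds. The default gap is a hypothetical value
# to tune per machine; booting everything at once overloads the host.
def boot_schedule(count, gap_seconds: 10)
  Array.new(count) { |i| i * gap_seconds }
end

puts simulator_capacity(12)         # => 18
p boot_schedule(4, gap_seconds: 15) # => [0, 15, 30, 45]
```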

And here are their prices. I don't remember what that is in rubles, but clearly they cost a lot. Per simulator, the MacBook Pro costs almost £400 and the Mac Pro almost £330, which already saves about £70 per simulator.

In addition, the MacBooks had to be mounted in a particular way: their magnetic chargers had to be taped in place because they sometimes fell off. You also had to buy an Ethernet adapter, because with so many devices packed into one metal box, Wi-Fi does not work reliably. The adapter costs about £30; divided by 6, that adds another £5 per simulator. But if you don't need this kind of massive parallelization, and you have only 20 tests and 5 simulators, it is actually easier to buy a MacBook: you can find one in any store, whereas a top-end Mac Pro has to be ordered and waited for. By the way, ours cost a little less because we bought them in bulk and got a discount. You can also buy a Mac Pro with less memory,

But there is a catch with the Mac Pro. We had to split each one into three virtual machines by installing ESXi on it. ESXi is bare-metal virtualization: a hypervisor installed directly on the hardware rather than on top of a host operating system. It is the host itself, so we can run three virtual machines. If you install ordinary virtualization on macOS, for example Parallels, you can run only 2 virtual machines because of Apple's licensing restrictions. We had to split the machines because CoreSimulator, the main service that manages simulators, has internal locks: no more than 6 simulators would boot at the same time, the rest waited in some queue, and the total boot time for 18 simulators became unacceptable. By the way, ESXi costs £0; it is always nice when something costs nothing and still works well.

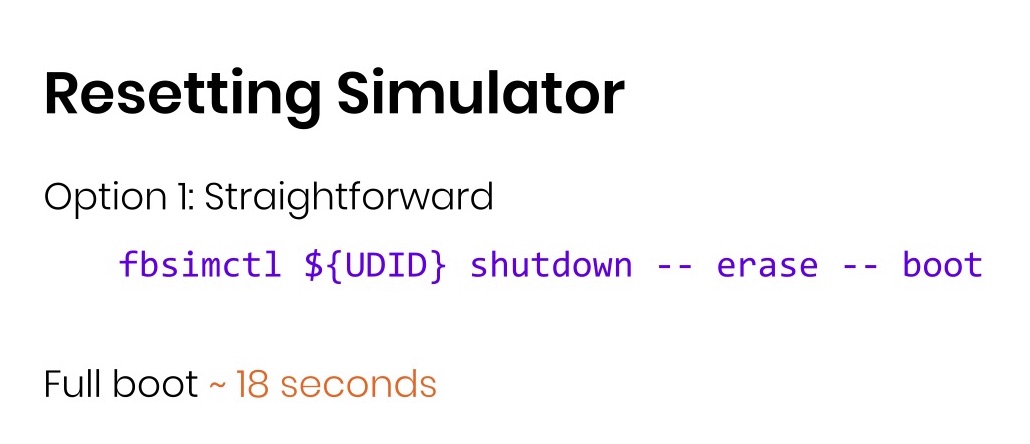

Why didn't we implement pooling? Partly because we sped up simulator reset. Suppose a test fails and you want to completely clean the simulator so that the next test does not fail because of obscure files left in the file system. The simplest solution is to shut down the simulator, explicitly erase it, and boot it again.

It's very simple, one line, but it takes 18 seconds; six months to a year ago it took almost a minute. Thanks to Apple for optimizing this, but you can be smarter about it. Boot the simulator and copy its working directories to a backup folder. Then, to reset, shut down the simulator, delete the working directory, copy the backup in its place, and boot the simulator.

That takes 8 seconds: the reset became more than twice as fast. And nothing complicated was needed; in Ruby it takes literally two lines. The picture shows an example in bash so that it can be easily translated into other languages.
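Here is a Ruby sketch of both reset paths. Assumptions: the simulator's working directory is CoreSimulator's per-device `data` folder (`~/Library/Developer/CoreSimulator/Devices/<UDID>/data`), the backup lives next to it in a hypothetical `data.backup` folder, and shutdown/erase/boot go through `xcrun simctl`. The command runner is injectable so the logic can be exercised without a Mac.

```ruby
require "fileutils"

class SimulatorReset
  def initialize(udid,
                 devices_root: File.expand_path("~/Library/Developer/CoreSimulator/Devices"),
                 runner: ->(*cmd) { system(*cmd, exception: true) })
    @udid = udid
    @data = File.join(devices_root, udid, "data")          # assumed working dir
    @backup = File.join(devices_root, udid, "data.backup") # hypothetical location
    @run = runner
  end

  # Simple path: shut down, erase, boot (about 18 s in the talk).
  def erase_reset
    @run.call("xcrun", "simctl", "shutdown", @udid)
    @run.call("xcrun", "simctl", "erase", @udid)
    @run.call("xcrun", "simctl", "boot", @udid)
  end

  # Take the snapshot once, after the simulator has booted cleanly.
  def snapshot
    FileUtils.rm_rf(@backup)
    FileUtils.cp_r(@data, @backup)
  end

  # Fast path: swap the working directory for the snapshot (about 8 s in the talk).
  def restore_reset
    @run.call("xcrun", "simctl", "shutdown", @udid)
    FileUtils.rm_rf(@data)
    FileUtils.cp_r(@backup, @data) # @data no longer exists, so cp_r recreates it
    @run.call("xcrun", "simctl", "boot", @udid)
  end
end
```

To try it without a Mac, pass a temporary `devices_root` and a runner lambda that records commands instead of executing them.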

The next trick. There is the Bumble application, which is similar to Badoo but with a slightly different, much more interesting concept. In it, you log in via Facebook. Since all our tests take a new user from the pool each time, we had to log out the previous one. To do this, we used WebDriverAgent to open Safari, go to Facebook, and tap Sign out. It seems fine, but it takes almost a minute in every test. A hundred tests means a hundred extra minutes.

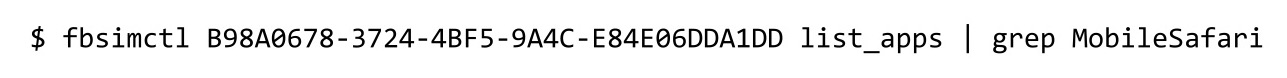

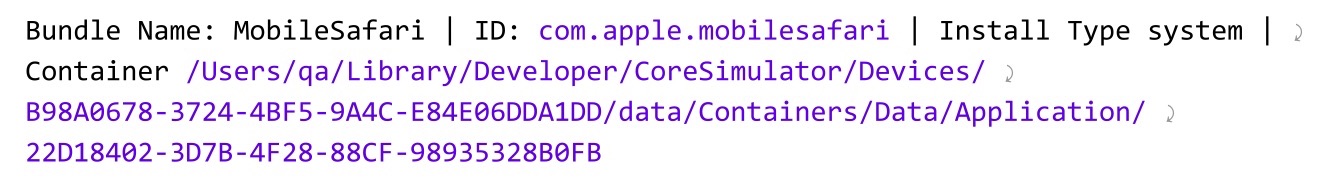

In addition, Facebook likes to run A/B tests, so they may change locators or button text. Suddenly a bunch of tests fail, and everyone is extremely unhappy. So instead, we call list_apps through fbsimctl, which lists all installed applications.

We find MobileSafari:

Its entry contains the path to its DataContainer, which holds a binary cookies file:

We simply delete it, which takes 20 ms. The tests became 100 minutes faster overall and more stable, because they can no longer fail because of Facebook. So sometimes you don't need parallelization at all: you can find a spot to optimize and easily shave off 100 minutes with almost no effort. In code, it is two lines.
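Here is a Ruby sketch of the cookie trick. Assumptions: `data_container` is MobileSafari's DataContainer path as reported by fbsimctl's list_apps (that JSON schema is not reproduced here), and the cookie jar lives at `Library/Cookies/Cookies.binarycookies` inside it; check the location on your Xcode and iOS versions.

```ruby
require "fileutils"

# Assumed location of Safari's cookie jar inside its data container.
COOKIE_JAR = File.join("Library", "Cookies", "Cookies.binarycookies")

# Delete Safari's cookies for one simulator; returns true once the file is gone.
def clear_safari_cookies(data_container)
  path = File.join(data_container, COOKIE_JAR)
  FileUtils.rm_f(path) # no error if the file is already absent
  !File.exist?(path)
end
```

Because `rm_f` tolerates a missing file, the call is idempotent and can safely run before every test.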

Next: how we prepare host machines to run simulators.

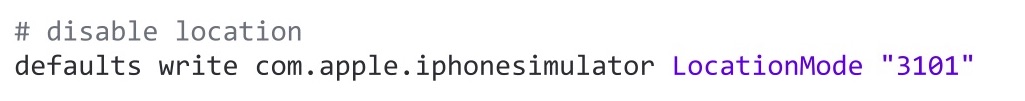

The first example, disabling the hardware keyboard, will be familiar to many who have used Appium. When you type into the simulator, it tends to connect the computer's hardware keyboard and hide the virtual one completely. But Appium uses the virtual keyboard to enter text. So after someone debugs input tests locally, other tests may start failing because the keyboard is missing. This command disables the hardware keyboard, and we run it before bringing up each test node.

The next item is more relevant to us, because our application is location-based, and very often tests need to start with location services disabled. To do that, you can set LocationMode to 3101. Why that value? There used to be an article about it in Apple's documentation, but it was later removed for some reason. Now it is just a magic constant in our code that we pray over and hope won't break. If it ever breaks, all users will end up in San Francisco, because that is the location fbsimctl sets when it boots a simulator. On the bright side, we will notice immediately, because everyone will be in San Francisco.

Next is disabling Chrome, the frame with various buttons around the simulator window. It is not needed when running autotests. Previously, turning it off let us fit more simulators side by side on the screen to watch everything run in parallel. We no longer do that, because everything is headless: no matter how you connect to the machine, you will not see the simulators themselves. If you need to, you can stream from the simulator you want.

There is also a set of other options that can be switched on or off. Of these, I will mention only SlowMotionAnimation, because it made my second or third day at work very interesting. I ran the tests, and they all started failing on timeouts: they could not find elements, even though the inspector showed them. It turned out that while the tests were running I had opened Google Chrome and pressed Cmd+T to open a new tab. At that moment the simulator was the active window and intercepted the shortcut, and for the simulator Cmd+T slows down all animations 10 times, for debugging animations. So if you run tests on machines that people have access to, always turn this option off automatically, because someone can accidentally break the tests by slowing down animations.
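The host preparation steps above boil down to writing a few keys into the Simulator's preferences domain, `com.apple.iphonesimulator`, with `defaults write`. Here is a minimal Python sketch that builds those commands. `LocationMode`, `3101` and `SlowMotionAnimation` come from the talk; the key names `ConnectHardwareKeyboard` and `ShowChrome` and all value types (`-bool`, `-int`) are assumptions based on common usage, so check them against your Xcode version with `defaults read com.apple.iphonesimulator`.

```python
import subprocess
from typing import Dict, List, Union

DOMAIN = "com.apple.iphonesimulator"

# Assumed keys and value types; verify with `defaults read` on your host.
SIMULATOR_PREFS: Dict[str, Union[bool, int]] = {
    "ConnectHardwareKeyboard": False,  # keep the software keyboard Appium types on
    "LocationMode": 3101,              # magic constant from the talk: location disabled
    "ShowChrome": False,               # no device frame around the simulator window
    "SlowMotionAnimation": False,      # undo an accidental Cmd+T
}


def defaults_write_command(key: str, value: Union[bool, int]) -> List[str]:
    """Build a `defaults write` command with an explicit type flag."""
    # Check bool before int: in Python, bool is a subclass of int.
    if isinstance(value, bool):
        return ["defaults", "write", DOMAIN, key, "-bool", "true" if value else "false"]
    return ["defaults", "write", DOMAIN, key, "-int", str(value)]


def prepare_host(dry_run: bool = True) -> List[List[str]]:
    """Return the commands; also execute them unless dry_run is set."""
    commands = [defaults_write_command(k, v) for k, v in SIMULATOR_PREFS.items()]
    if not dry_run:
        for command in commands:
            subprocess.run(command, check=True)
    return commands
```

On a real host you would call `prepare_host(dry_run=False)` before bringing up each test node; the default dry run only returns the command list, which is handy for reviewing what will change.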

Probably the most interesting part for me, since I did it quite recently, is managing all this infrastructure. Nobody wants to set up 60 virtual hosts (actually 64, plus 6 TeamCity agents) by hand. We found the xcversion utility, which is now part of fastlane: a Ruby gem that can be used as a command-line tool and partially automates installing Xcode. Then we took Ansible and wrote playbooks to roll out the required versions of fbsimctl and Xcode everywhere and to deploy the configs for the device server itself. We also use Ansible to remove and update simulators. When we move to iOS 11, we keep iOS 10 around. But once the testing team says it is completely dropping automated testing on iOS 10, we simply run Ansible and clean up the old simulators; otherwise they take up a lot of disk space.
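We run this cleanup through Ansible. To show the underlying logic, here is a hypothetical Python helper that such a task could call. It assumes the JSON printed by `xcrun simctl list devices --json`: a `devices` object keyed by runtime (for example `com.apple.CoreSimulator.SimRuntime.iOS-10-3` in recent Xcode versions, `iOS 10.3` in older ones), each mapping to a list of devices with a `udid`. The function names are illustrative, not part of any tool.

```python
import json
import re
import subprocess
from typing import List


def udids_for_ios_major(devices_json: dict, major: int) -> List[str]:
    """Collect UDIDs of simulators whose runtime is iOS `major`.x.

    Matches both "com.apple.CoreSimulator.SimRuntime.iOS-10-3" and "iOS 10.3".
    """
    pattern = re.compile(rf"iOS[- ]{major}[-.]")
    udids: List[str] = []
    for runtime, devices in devices_json.get("devices", {}).items():
        if pattern.search(runtime):
            udids.extend(device["udid"] for device in devices)
    return udids


def delete_commands(udids: List[str]) -> List[List[str]]:
    """One `xcrun simctl delete` command per simulator."""
    return [["xcrun", "simctl", "delete", udid] for udid in udids]


def delete_old_simulators(major: int, dry_run: bool = True) -> List[List[str]]:
    """List simulators via simctl and delete (or just report) iOS `major` ones."""
    listing = subprocess.run(
        ["xcrun", "simctl", "list", "devices", "--json"],
        check=True, capture_output=True, text=True,
    ).stdout
    commands = delete_commands(udids_for_ios_major(json.loads(listing), major))
    if not dry_run:
        for command in commands:
            subprocess.run(command, check=True)
    return commands
```

With `dry_run=True` the helper only reports what it would delete, which is a reasonable first pass before cleaning a whole fleet.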

How does it work? If you simply run xcversion on each of the 60 machines, it takes a long time, because each machine goes to the Apple website and downloads all the images itself. To update the machines in the fleet, pick one working machine and run xcversion install on it for the required Xcode version, but without installing or deleting anything. The installation package is then simply downloaded to the cache, ~/Library/Caches/XcodeInstall. The same works for any simulator version. Then you upload everything to Ceph or, if you don't have it, start some web server in that directory. I'm used to Python, so I run a simple Python HTTP server on the machines.
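A minimal sketch of that "simple Python HTTP server": it exposes the xcversion cache directory over plain HTTP using only the standard library (Python 3.7+). The function name `make_cache_server` and the default port 8000 are illustrative assumptions; the cache path is the one mentioned above.

```python
import functools
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer
from pathlib import Path

# Directory where xcversion caches downloaded installers (path from the talk).
CACHE_DIR = Path.home() / "Library" / "Caches" / "XcodeInstall"


def make_cache_server(directory: Path = CACHE_DIR, host: str = "0.0.0.0",
                      port: int = 8000) -> ThreadingHTTPServer:
    """Create (but do not start) a threaded HTTP server that serves `directory`."""
    # Bind the served directory into the handler instead of chdir-ing the process.
    handler = functools.partial(SimpleHTTPRequestHandler, directory=str(directory))
    return ThreadingHTTPServer((host, port), handler)
```

On the cache host you would call `make_cache_server().serve_forever()`, or run the equivalent one-liner `python3 -m http.server 8000 --directory ~/Library/Caches/XcodeInstall`. A threaded server lets several machines pull multi-gigabyte packages at the same time.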

Now, on any other developer's or tester's machine, you can run xcversion install and point it to the server you started. It downloads the xip from that machine (almost instantly on a fast local network), unpacks the package and accepts the license; in short, it does everything for you. The result is a fully working Xcode that can run simulators and tests. Unfortunately, simulators are less convenient: you have to use curl or wget to download the package from that server into the same directory on the local machine, and then run xcversion simulators --install. We put these calls into Ansible scripts and updated 60 machines in a day. Most of that time went into copying files over the network. Besides, we were in the middle of a move at that moment, so some of the machines were turned off. We reran Ansible two or three times.
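On the client side, the curl/wget step just means "fetch one file from the mirror into the xcversion cache". A minimal Python sketch, assuming the mirror serves its cache directory over plain HTTP as described above. `fetch_to_cache`, `simulator_install_command`, the package file name and the `--install=<name>` syntax are illustrative assumptions; check `xcversion simulators --help` for the exact form your version accepts.

```python
import shutil
import urllib.parse
import urllib.request
from pathlib import Path
from typing import List

# Local xcversion cache; the package must land here before installing.
CACHE_DIR = Path.home() / "Library" / "Caches" / "XcodeInstall"


def fetch_to_cache(mirror_url: str, package_name: str,
                   cache_dir: Path = CACHE_DIR) -> Path:
    """Download `package_name` from the mirror into the xcversion cache directory."""
    cache_dir.mkdir(parents=True, exist_ok=True)
    target = cache_dir / package_name
    # Package names may contain spaces, so quote them for the URL.
    url = f"{mirror_url.rstrip('/')}/{urllib.parse.quote(package_name)}"
    with urllib.request.urlopen(url) as response, open(target, "wb") as out:
        shutil.copyfileobj(response, out)  # stream instead of loading gigabytes into memory
    return target


def simulator_install_command(simulator_name: str) -> List[str]:
    """Command that installs the now-cached simulator (assumed flag syntax)."""
    return ["xcversion", "simulators", f"--install={simulator_name}"]
```

After the download, run the command returned by `simulator_install_command` on the same machine; in an Ansible setup both steps would simply be tasks in the playbook.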

A brief recap. First: I think priorities matter. Stability and reliability of tests come first, speed second. If you chase speed alone and parallelize everything, the tests will run fast, but nobody will ever look at the results; people will just rerun everything until it happens to pass. Or they will ignore the tests altogether and push to master.

Next: automation is development too, so you can take the patterns others have already come up with and use them. If your infrastructure is currently tightly coupled to your tests and you plan to scale, this is a good moment to separate them first and then scale.

And the last point: when the task is to speed up tests, the first idea is usually to add more simulators and get a speedup by some factor. In fact, very often you don't need to add anything; instead, carefully analyze the code and optimize it in a couple of lines, as in the cookie example. That beats parallelization: we saved 100 minutes with two lines of code, whereas parallelization means writing a lot of code and then maintaining the hardware side of the infrastructure, which costs much more in money and resources.

If you liked this talk from the Heisenbug conference, you may also be interested in the next Heisenbug: it will be held in Moscow on December 6-7, and the conference website already has descriptions of a number of talks (and, by the way, the call for papers is still open).