Overview and comparative testing of the "Elbrus 401-PC" computer. Part Three: Development Tools

We continue to review the new domestic computer. After a brief acquaintance with the features of the Elbrus architecture, we will consider the software development tools offered to us.

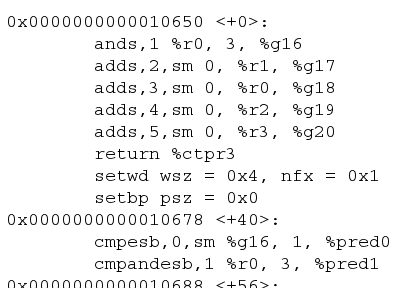

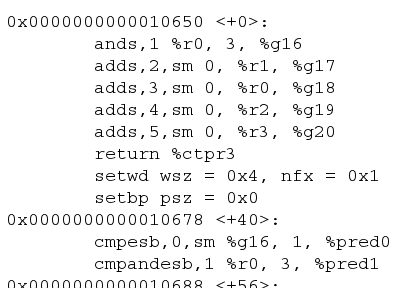

Recall the structure of the article:

- hardware review:
  - acquisition process;
  - hardware;
- software review:
  - operating system launch;
  - regular software;
- development tools overview;
- benchmarking performance:
  - description of competing computers;
  - benchmark results;
  - summarizing.

Enjoy reading!

Architecture features

The essence of the E2K architecture in one phrase can be formulated as follows: 64-bit registers, explicit parallelism of instruction execution and strictly controlled access to memory.

For example, x86 or SPARC processors that can execute more than one instruction per cycle (superscalar), sometimes also out of order, rely on implicit parallelism: the processor analyzes the dependencies between instructions in a small window of code in real time and, when it considers it possible, loads several execution units at once. Sometimes it acts too optimistically, that is, speculatively, discarding the result or rolling the operation back after a misprediction. Sometimes, on the contrary, it is too pessimistic and assumes dependencies between registers or parts of registers that do not actually exist from the point of view of the executing program.

With explicit parallelism (explicitly parallel instruction computing, EPIC), the same analysis takes place at compile time, and all machine instructions selected for parallel execution are packed into one very long instruction word (VLIW). On Elbrus the length of this “word” is not fixed and can range from 1 to 8 double words (in this context a single word is 32 bits wide).
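To make the idea of compile-time packing more tangible, here is a minimal sketch of a greedy bundler. It is a toy model under loud assumptions: every operation has a latency of one wide word, there is a single kind of execution slot, and the names `Op`, `pack_into_wide_words` and the `width` parameter are invented for this illustration. It does not describe how LCC actually schedules code.

```c
#include <stddef.h>

#define MAX_OPS 32

/* One operation of a straight-line block. dep1 and dep2 are indices of
 * earlier operations whose results it consumes, or -1 when the input
 * comes straight from a function argument. (Hypothetical model.) */
typedef struct {
    int dep1;
    int dep2;
} Op;

/* Greedy packing with unit latency: each operation goes into the earliest
 * wide word that follows the words of its dependencies and still has a
 * free slot. Stores the chosen word index of every operation in
 * bundle_of[] and returns the number of wide words used, or -1 when the
 * input is invalid. */
int pack_into_wide_words(const Op *ops, size_t count, int width, int *bundle_of)
{
    int used[MAX_OPS] = {0};   /* slots already occupied in each wide word */
    int words = 0;

    if (count > MAX_OPS || width < 1)
        return -1;

    for (size_t i = 0; i < count; i++) {
        const int deps[2] = { ops[i].dep1, ops[i].dep2 };
        int earliest = 0;

        for (int k = 0; k < 2; k++) {
            if (deps[k] < 0)
                continue;              /* input is a function argument */
            if ((size_t)deps[k] >= i)
                return -1;             /* dependencies must point backwards */
            if (bundle_of[deps[k]] + 1 > earliest)
                earliest = bundle_of[deps[k]] + 1;
        }
        while (used[earliest] >= width)
            earliest++;                /* this word is full, try the next one */

        used[earliest]++;
        bundle_of[i] = earliest;
        if (earliest + 1 > words)
            words = earliest + 1;
    }
    return words;
}
```

For an expression of the shape (a * b) + (c * d) - (e * f) + (g / h), the four independent operations land in the first word and the three dependent additions and subtractions each need a word of their own, so the model yields four words when six slots are available and seven words when only one slot is available. Real code needs more words than this model predicts, because the model ignores instruction latencies and type conversions.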

Undoubtedly, the compiler has far greater resources in terms of the amount of code it can analyze and the time and memory it can spend, and a programmer writing machine code by hand can optimize even more intelligently. But that is the theory; in practice you are unlikely to write assembler, so everything depends on how good the optimizing compiler is, and writing one is no easy task, to put it mildly. In addition, whereas with implicit parallelism “slow” instructions can keep running without blocking the issue of subsequent instructions to other execution units, with explicit parallelism the entire wide instruction waits until all of its parts have completed. Finally, an optimizing compiler is of little help when interpreting dynamic languages.

All this is well understood at MCST, of course, which is why Elbrus also implements speculative execution, prefetching of code and data, and combined computational operations. So instead of theorizing about how many hypothetical gigaflops one platform or another can deliver under favorable circumstances, in the fourth part of the article we will simply measure the actual performance of real programs, both applied and synthetic.

VLIW: a breakthrough or a dead end?

There is an opinion that the VLIW concept is poorly suited to general-purpose processors: the Transmeta Crusoe was once on the market, the argument goes, and it never “took off”. The author finds such statements strange, since ten years ago he tested a laptop based on the Efficeon (the next generation of the same line) and found it very promising. Unless you knew that x86 code was being translated into native instructions under the hood, you would never have guessed. Yes, it could not compete with the Pentium M, but it performed at the level of a Pentium 4 while consuming far less power. And it was certainly a cut above the VIA C3, which is a genuine x86.

No less interesting, because of its exoticism, is the technology for secure execution of programs written in C/C++, where pointers provide an extensive range of opportunities to shoot yourself in the foot. The concept of contextual protection, implemented jointly by the compiler at build time, the processor at run time and the operating system in its memory management, does not allow the scope of variables to be violated, whether by accessing a private class member, the private data of another module or the local variables of the calling function. Access levels may be changed only in the direction of fewer rights. Storing references to short-lived objects in long-lived structures is blocked. Attempts to use dangling references are prevented as well: if the object to which a reference was once obtained has already been deleted, even the placement of another, new object at the same address is not considered grounds for access to its contents. Attempts to use data as code and to transfer control to arbitrary locations are suppressed.
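The rule about dangling references can be imitated in software, which may help to picture what the hardware is said to enforce. The sketch below is only an analogy under stated assumptions: the `Slot` and `Handle` types, the generation counter and all function names are invented for illustration and say nothing about how the Elbrus descriptors are really laid out.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

enum { SLOT_COUNT = 4, SLOT_SIZE = 16 };

typedef struct {
    uint8_t  data[SLOT_SIZE];
    uint32_t generation;   /* incremented every time the slot is released */
    bool     in_use;
} Slot;

/* A "fat" reference: it remembers which incarnation of the slot it saw. */
typedef struct {
    size_t   index;
    uint32_t generation;
} Handle;

static Slot pool[SLOT_COUNT];

static bool handle_is_live(Handle h)
{
    return h.index < SLOT_COUNT
        && pool[h.index].in_use
        && pool[h.index].generation == h.generation;
}

bool pool_alloc(Handle *out)
{
    for (size_t i = 0; i < SLOT_COUNT; i++) {
        if (!pool[i].in_use) {
            pool[i].in_use = true;
            memset(pool[i].data, 0, SLOT_SIZE);
            out->index = i;
            out->generation = pool[i].generation;
            return true;
        }
    }
    return false;   /* pool exhausted */
}

void pool_free(Handle h)
{
    if (handle_is_live(h)) {
        pool[h.index].in_use = false;
        pool[h.index].generation++;   /* invalidates every old handle */
    }
}

/* Both accessors refuse stale handles and out-of-bounds offsets. */
bool pool_write(Handle h, size_t offset, uint8_t value)
{
    if (!handle_is_live(h) || offset >= SLOT_SIZE)
        return false;
    pool[h.index].data[offset] = value;
    return true;
}

bool pool_read(Handle h, size_t offset, uint8_t *out)
{
    if (!handle_is_live(h) || offset >= SLOT_SIZE)
        return false;
    *out = pool[h.index].data[offset];
    return true;
}
```

After a slot is freed and handed out again, the old handle points at the same storage but carries an outdated generation, so reads and writes through it are rejected, while the new handle works normally. On a conventional platform with raw pointers, nothing stops the equivalent access.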

Indeed, as soon as we move from high-level idioms to low-level pointers, all these scopes turn out to be nothing more than syntactic salt. Static source code analyzers can sometimes catch some of the simplest cases of pointer misuse. But once the program has been translated into x86 or SPARC machine instructions, nothing prevents it from reading or writing the wrong memory cell, or a value of the wrong size, which leads to a crash in a completely different place. And there you sit, staring at a corrupted stack with no idea where to start debugging, because on another machine the same code runs successfully. Stack overflows and the resulting vulnerabilities are simply the scourge of popular platforms. It is gratifying that our developers approach these problems systematically rather than merely piling up more and more crutches whose effect still looks more like stepping on a rake. After all, no one cares how fast your program runs if it does not run correctly. In addition, tighter control by the compiler forces you to rewrite “foul-smelling” and non-portable code, which indirectly raises the culture of programming.

Numbers are stored in Elbrus memory in little-endian byte order (low byte first), as on x86 and unlike SPARC. Likewise, since the platform aims to support x86 code, it imposes no restrictions on the alignment of data in memory.

Order, Alignment, and Portability

For programmers spoiled by the cozy world of Intel, it may come as a revelation that outside this world an unaligned memory access (for example, writing a 32-bit value at address 0x04000005) is not just an undesirable operation that runs slower than usual, but a prohibited action that raises a hardware exception. As a result, porting a nominally cross-platform project, which at first seemed to require only minimal changes, can grind to a halt after the first launch, when it turns out that all serialization and deserialization of data (integers and floating-point numbers, UTF-16 text), scattered throughout a multi-megabyte code base, is done directly, without a dedicated platform abstraction layer, and is written in its own way in each place. Certainly, if every programmer had the opportunity to test their immortal masterpieces on alternative platforms such as SPARC, the overall quality of code would improve.
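A dedicated abstraction layer of the kind missed above does not have to be large. As a minimal sketch (the function names `load_u32_le` and `store_u32_le` are made up for this example), a 32-bit little-endian value can be read and written one byte at a time, so that neither the byte order of the host nor the alignment of the buffer matters:

```c
#include <stdint.h>

/* Reads a 32-bit little-endian value byte by byte. No 32-bit load is ever
 * issued, so the pointer may be unaligned and the host may be big-endian. */
uint32_t load_u32_le(const unsigned char *p)
{
    return  (uint32_t)p[0]
         | ((uint32_t)p[1] << 8)
         | ((uint32_t)p[2] << 16)
         | ((uint32_t)p[3] << 24);
}

/* Writes a 32-bit value in little-endian order, low byte first. */
void store_u32_le(unsigned char *p, uint32_t v)
{
    p[0] = (unsigned char)(v & 0xFF);
    p[1] = (unsigned char)((v >> 8) & 0xFF);
    p[2] = (unsigned char)((v >> 16) & 0xFF);
    p[3] = (unsigned char)((v >> 24) & 0xFF);
}
```

Calling `store_u32_le(buf + 1, 0x11223344u)` on a byte buffer places 0x44 at `buf[1]` and 0x11 at `buf[4]` on any platform, whereas casting `buf + 1` to `uint32_t *` and dereferencing it is exactly the kind of access that traps on strict-alignment machines.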

You can read more about the design of MCST computers based on the SPARC and E2K architectures in the book “Microprocessors and Computing Complexes of the Elbrus Family”, which was published by the Piter publishing house in a minimal print run and has long been sold out, but is available for free as a PDF (6 MB) and for a small fee on Google Play. Given the lack of other detailed information in the public domain, this publication is a real storehouse of knowledge. However, the text concentrates mainly on the hardware: the operating algorithms of buffers and pipelines, caches and arithmetic logic units. The topic of writing [efficient] programs is not addressed at all, and even mere mentions of machine instructions can be counted on the fingers.

Machine language

Besides compiling the high-level languages C, C++ and Fortran, the documentation never misses an opportunity to mention that programs can be written directly in assembler, but nowhere does it say how exactly one can take up this filigree art, or at least where to find a reference for the machine instructions. Fortunately, the system includes the GDB debugger, which can disassemble the code of previously compiled programs. To stay within the scope of the article, we will write a simple arithmetic function with good potential for parallelization.

    uint64_t CalcParallel(
        uint64_t a,
        uint64_t b,
        uint64_t c,
        uint32_t d,
        uint32_t e,
        uint16_t f,
        uint16_t g,
        uint8_t h
    ) {
        return (a * b) + (c * d) - (e * f) + (g / h);
    }

Here is what it translates to when compiled with -O3:

    0x0000000000010490 <+0>:
        muld,1 %dr0, %dr1, %dg20
        sxt,2 6, %r3, %dg19
        getfs,3 %r6, _f32,_lts2 0x2400, %g17
        getfs,4 %r5, _lit32_ref, _lts2 0x00002400, %g18
        getfs,5 %r7, _f32,_lts3 0x200, %g16
        return %ctpr3
        setwd wsz = 0x5, nfx = 0x1
        setbp psz = 0x0
    0x00000000000104c8 <+56>:
        nop 5
        muld,0 %dr2, %dg19, %dg18
        muls,3 %r4, %g18, %g17
        sdivs,5 %g17, %g16, %g16
    0x00000000000104e0 <+80>:
        sxt,0 6, %g17, %dg17
        addd,1 %dg20, %dg18, %dg18
    0x00000000000104f0 <+96>:
        nop 5
        subd,0 %dg18, %dg17, %dg17
    0x00000000000104f8 <+104>:
        sxt,0 2, %g16, %dg16
    0x0000000000010500 <+112>:
        ct %ctpr3
        ipd 3
        addd,0 %dg17, %dg16, %dr0

The first thing that catches the eye is that each command word decodes at once into several instructions executed in parallel. The instruction mnemonics are generally intuitive, although some names seem unusual after Intel: for example, the unsigned extension instruction here is called sxt, not movzx. In addition to the operands themselves, many computational instructions take the number of the execution unit as a parameter. It is not without reason that ELBRUS stands for “explicit basic resources utilization scheduling”.
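The addresses printed by the disassembler are enough to see how long each command word is. The small helper below is an illustrative sketch (the name `wide_word_dwords` is invented here); it only assumes that a command word occupies a whole number of 64-bit double words:

```c
#include <stdint.h>

/* Length of a command word in 64-bit double words, computed from its own
 * address and the address of the command word that follows it.
 * Returns 0 when the addresses are out of order or the distance is not
 * a multiple of 8 bytes. */
unsigned wide_word_dwords(uint64_t addr, uint64_t next_addr)
{
    uint64_t bytes;

    if (next_addr <= addr)
        return 0;
    bytes = next_addr - addr;
    if (bytes % 8 != 0)
        return 0;
    return (unsigned)(bytes / 8);
}
```

Applied to the listing, the first command word (0x10490 to 0x104c8) takes 7 double words, the second 3, the third 2, and the next two 1 each, which fits the stated range of 1 to 8 double words. The length of the last command word cannot be derived from the listing, because the address that follows it is not shown.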

To access the full 64-bit value of a register, the prefix “d” is used; in theory, the lower 16 and 8 bits of the value can also be accessed. General-purpose global registers, of which there are 32, are designated with the prefix “g” before the number, and local procedure registers with the prefix “r”. The window of local registers requested by the setwd instruction can be as large as 224 registers, and registers are spilled to the stack automatically as needed.
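Which parts of the expression are computed in 64 bits and which in 32 is decided by the usual arithmetic conversions of C, not by the processor. The sketch below spells those conversions out in a second function, `CalcParallelExplicit`, a name invented for this illustration; it assumes a platform where int is 32 bits wide and, like the original, a nonzero h.

```c
#include <stdint.h>

/* The function from the article, unchanged. */
uint64_t CalcParallel(uint64_t a, uint64_t b, uint64_t c, uint32_t d,
                      uint32_t e, uint16_t f, uint16_t g, uint8_t h)
{
    return (a * b) + (c * d) - (e * f) + (g / h);
}

/* The same computation with the implicit conversions written out
 * (assuming a 32-bit int). */
uint64_t CalcParallelExplicit(uint64_t a, uint64_t b, uint64_t c, uint32_t d,
                              uint32_t e, uint16_t f, uint16_t g, uint8_t h)
{
    uint64_t ab = a * b;              /* 64-bit multiplication */
    uint64_t cd = c * (uint64_t)d;    /* d is widened to 64 bits first */
    uint32_t ef = e * (uint32_t)f;    /* 32-bit multiplication, wraps modulo 2^32 */
    int      gh = (int)g / (int)h;    /* both operands promoted to int: signed division */

    return ab + cd - (uint64_t)ef + (uint64_t)gh;
}
```

This appears to line up with the listing: two muld instructions on “d” registers for the 64-bit products, one muls on plain registers for e * f, and a signed sdivs for g / h, each followed by an extension to 64 bits before the final additions. One consequence worth remembering is that e * f silently wraps: with e = 0x80000000 and f = 2 the product is 0, not 2^32.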

The way some instructions are used is puzzling. For example, return, as you might guess, serves to return control to the calling procedure; however, in all the code samples studied this instruction occurs long before the last command word (which also contains some kind of context manipulation), sometimes even in the very first command word, as here. Although the aforementioned book devotes a whole paragraph to this question, it has not become clear to us yet. Update as of February 9, 2016: the comments suggest that the return instruction only prepares the return from the subroutine and lets the processor start fetching the next instructions of the calling procedure, while control is actually transferred when execution reaches the ct instruction.

However, “easy to read code” and “efficient code” are far from the same thing when it comes to machine instructions. If you compile without optimization, the code is more sequential and resembles a straightforward, head-on calculation, but at the cost of length: instead of 6 densely packed command words, 8 sparse ones are generated.

With that, let us end this session of reading tea leaves before our fantasies lead us to completely ridiculous assumptions. Let's hope that one day the instruction reference and the programming and optimization guide will be made public.

Development tools

The standard C/C++ compiler in the Elbrus operating system is LCC, a proprietary development of MCST that is compatible with GCC. Detailed information about the design and operating principles of this compiler has not been published, but according to an interview with a former developer of one of its several variants, a frontend from Edison Design Group is used for high-level analysis of the source code, while low-level translation into machine instructions can be done in different ways, with or without optimization. It is the optimizing compiler that is delivered to end users, not only on the E2K platform, for which there simply are no alternative machine code generators, but also on the SPARC family of platforms, where ordinary GCC is also available as part of the MSVS operating system.
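Because LCC presents itself as GCC-compatible, portable code that needs to tell the two apart has to rely on compiler-specific predefined macros. The sketch below is written under an explicit assumption: the macro names `__LCC__` and `__e2k__` are taken to be what LCC defines, and this should be verified against the compiler's own list of predefined macros. The function names are invented for the example.

```c
/* Returns a short name of the compiler that built this translation unit.
 * ASSUMPTION: __LCC__ is defined by MCST LCC. It is tested before
 * __GNUC__, because a GCC-compatible compiler usually defines __GNUC__
 * as well. */
const char *compiler_name(void)
{
#if defined(__LCC__)
    return "lcc";
#elif defined(__clang__)
    return "clang";
#elif defined(__GNUC__)
    return "gcc";
#else
    return "unknown";
#endif
}

/* Returns a short name of the target architecture.
 * ASSUMPTION: __e2k__ is defined when compiling for E2K. */
const char *target_name(void)
{
#if defined(__e2k__)
    return "e2k";
#elif defined(__sparc__)
    return "sparc";
#elif defined(__x86_64__)
    return "x86_64";
#elif defined(__i386__)
    return "i386";
#else
    return "unknown";
#endif
}
```

The order of the checks is the point of the example: testing `__GNUC__` first would classify every GCC-compatible compiler as GCC.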

Considering the architectural features listed above (explicit parallelism, secure execution of programs), the LCC compiler evidently implements many unique solutions that deserve the most rigorous study and practical testing. Unfortunately, at the time of writing the author has neither the qualifications nor the time for such research; I hope that sooner or later a much wider circle of the IT community, including more competent people, will take up this question.

From what we managed to notice with the naked eye while building programs for the performance tests, LCC on E2K warns about possible errors, sloppy constructs or simply suspicious places in the code more often than other compilers. True, the author is not familiar enough with GCC to reliably tell unique LCC messages in Russian from merely translated ones (and the translation is selective at that), and I am not sure that the more intense stream of warnings is not simply the result of the automatically generated build configuration. Also, without knowing the semantics of a particular piece of code, it is sometimes hard to tell whether the compiler has cleverly found a hidden bug or is raising a false alarm. For example, in the PostgreSQL code the same construct occurs four times in one file with slight variations:

    for (i = 0, ptr = cont->cells; *ptr; i++, ptr++) {
        //....//
        /* is string only whitespace? */
        if ((*ptr)[strspn(*ptr, " \t")] == '\0')
            fputs(" ", fout);
        else
            html_escaped_print(*ptr, fout);
        //....//
    }

The compiler predicts a possible out-of-bounds access to a one-dimensional array on the line that calls the strspn function. The author does not understand under what circumstances this could happen (and there was no such warning on other platforms, although the -Warray-bounds check is standard in GCC). What is telling, however, is that the same non-trivial construct (non-trivial because its purpose had to be explained in a comment) is replicated several times instead of being moved into a separate function with a self-explanatory name. Even if the alarm turns out to be false, detecting foul-smelling code is a useful side effect; at this rate the authors of the PVS-Studio static analyzer will be left without work. But seriously, it would be both amusing and useful to compare which additional errors LCC is really able to detect thanks to the unique features of the E2K architecture, and the free software world could get another batch of bug reports in the process.
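The refactoring hinted at above is tiny. As a sketch (the name `is_blank_string` is our own and does not come from PostgreSQL), the check could live in one helper:

```c
#include <stdbool.h>
#include <string.h>

/* True when s is empty or consists only of spaces and tabs.
 * strspn returns the length of the leading run of characters from the
 * given set, so the character at that index is the first non-blank one,
 * or the terminating '\0' when there is none. */
bool is_blank_string(const char *s)
{
    return s[strspn(s, " \t")] == '\0';
}
```

The loop body would then read `if (is_blank_string(*ptr))`, and the explanatory comment is no longer needed at each of the four call sites. Since strspn never counts past the terminating null character, the index stays within the string for any properly terminated argument.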

Another curious result of getting acquainted with the talkative LCC was the enlightenment of the author, and then of his more experienced colleagues, on what trigraphs are in C/C++ and why, fortunately, they are not supported by default. You live your life never suspecting that a seemingly harmless combination of punctuation marks in string literals or comments can turn out to be a time bomb, or excellent material for a software backdoor, depending on which side of the barricades you are on.
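For those meeting trigraphs for the first time: when trigraph replacement is enabled, the three-character sequence `??!` in source code is replaced by `|`, and `??/` by a backslash, even inside string literals and comments. A defensive habit that works whether or not the compiler performs the replacement is to escape one of the question marks. The function below is an illustrative sketch with an invented name:

```c
#include <stddef.h>
#include <string.h>

/* The escape sequence \? denotes an ordinary question mark, but it breaks
 * up the pair of adjacent question marks in the source text, so the
 * literal can never be taken for the start of a trigraph. */
size_t safe_literal_length(void)
{
    return strlen("Really?\?!");
}
```

The literal always consists of the nine characters R, e, a, l, l, y, ?, ?, !. Written without the escape, the same text would shrink by two characters on a compiler that replaces trigraphs, because its last three characters would collapse into a single `|`.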

An unpleasant consequence of LCC's self-sufficiency is that its message format differs from that of GCC, so when you compile from a development environment (for example, Qt Creator), these messages end up only in the general build log and not in the list of recognized problems. Perhaps this can be adjusted somehow, either on the compiler side or in the development environment, but out of the box, at least, the two do not understand each other.
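To show what a development environment typically looks for, here is a minimal sketch of a parser for the common GCC message layout `file:line:column: severity: message`. The `Diagnostic` structure and the function name are invented for this example; it is not the parser Qt Creator uses, and the LCC format is not reproduced here because the article does not show it.

```c
#include <stdio.h>

typedef struct {
    char file[256];
    int  line;
    int  column;
    char severity[16];
    char message[256];
} Diagnostic;

/* Parses one line of the form "file:line:column: severity: message".
 * Returns 1 when all five fields were recognized and 0 otherwise; on
 * failure the contents of *out are unspecified. File names that contain
 * a colon are not handled by this sketch. */
int parse_gcc_style_diagnostic(const char *text, Diagnostic *out)
{
    int fields = sscanf(text, "%255[^:]:%d:%d: %15[^:]: %255[^\n]",
                        out->file, &out->line, &out->column,
                        out->severity, out->message);
    return fields == 5;
}
```

A line such as `main.c:12:5: warning: unused variable 'x'` is split into its five fields, while any line that does not follow this layout is rejected, which is presumably what happens to messages in a different format: they stay in the raw log and never reach the problem list.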

Cross-compilation is a traditionally pressing issue for domestic platforms, given their relatively low performance: that is, building programs for the target architecture and a specific set of system libraries using the resources of more powerful computers with a different architecture and different software. Judging by the identification strings in the kernel of the Elbrus system and in the LCC compiler itself, they are built on Linux i386, but this toolchain for x86 is, of course, not included in the distribution of the system itself. It would be interesting to know whether the opposite is possible: building programs on Elbrus for other platforms. (The author got no further than the first stage of building GCC for i386.)

Versions of the most significant packages for the developer:

- compilers: lcc 1.19.18 (gcc 4.4.0 compatible);

- interpreters: erlang 15.b.1, gawk 4.0.2, lua 5.1.4, openjdk 1.6.0_27 (jvm 20.0-b12), perl 5.16.3, php 5.4.11, python 2.7.3, slang 2.2.4, tcl 8.6.1;

- build tools: autoconf 2.69, automake 1.13.1, cmake 2.8.10.2, distcc 3.1, m4 1.4.16, make 3.81, makedepend 1.0.4, pkgtools 13.1, pmake 1.45;

- binary utilities: binutils 2.23.1, elfutils 0.153, patchelf 0.6;

- frameworks: boost 1.53.0, qt 4.8.4, qt 5.2.1;

- libraries: expat 2.1.0, ffi 3.0.10, gettext 0.18.2, glib 2.36.3, glibc 2.16.0, gmp 4.3.1, gtk+ 2.24.17, mesa 10.0.4, ncurses 5.9, opencv 2.4.8, pcap 1.3.0, popt 1.7, protobuf 2.4.1, sdl 1.2.13, sqlite 3.6.13, tk 8.6.0, usb 1.0.9, wxgtk 2.8.12, xml-parser 2.41, zlib 1.2.7;

- testing and debugging tools: cppunit 1.12.1, dprof 1.3, gdb 7.2, perf 3.5.7;

- development environments: anjuta 2.32.1.1, glade 2.12.0, glade 3.5.1, qt-creator 2.7.1;

- version control systems: bzr 2.2.4, cvs 1.11.22, git 1.8.0, patch 2.7, subversion 1.7.7.

Again, if you were expecting GCC 5, PHP 7 and Java 9, then those are your problems, as one famous footballer put it. Here we should be thankful that it is at least not GCC 3.4.6 (LCC 1.16.12), as in previous versions of the Elbrus system, or GCC 3.3.6, as in MSVS 3.0; by the way, the main compiler in MSVS 3.0 is still GCC 2.95.4 (and why be surprised, when the kernel comes from the 2.4 branch?). Compared with the previous situation, when you could stumble upon a GCC bug fixed upstream ten years ago, conditions in the new system are almost heavenly: you can even take a swing at C++11 if you do not need to maintain backward compatibility.

The appearance of OpenJDK, in at least some form, can already be called a big breakthrough, because the dislike for Java and Mono in such systems has long been known, and this dislike is understandable when even native programs barely move. Since the author has many colleagues who are Java developers and who, because of the circumstances above, are forced to hold back their souls' beautiful impulses, it was decided to devote a separate series of performance tests to Java. Looking ahead, we note that the results were discouraging even in relative terms: you could probably write interpreted scripts in PHP or Python with the same success.

Compatibility with the GNU Compiler Collection is not limited to support for C and C++: the system also has a Fortran translator. Since the author's familiarity with Fortran is limited to Professor Fortran, those interested can be referred to the December thread on “Made with Us”, where the comments touch on using this language as a benchmark.

We have saved the tastiest for dessert: the last part of the article is devoted to studying the performance of Elbrus in comparison with a variety of hardware and software platforms, including domestic ones.