Microsoft plans to host data centers on the ocean floor

Welcome, readers of the iCover blog! According to The New York Times, Microsoft Research, Microsoft's research division, which employs more than 1,100 scientists worldwide, is exploring whether data centers can be placed at the bottom of seas and oceans. A three-month sea trial of the first working prototype of an underwater data center, built under a new project called Project Natick, has been completed successfully.

The high-performance servers in data centers handle everything from keeping social networks and email services running to streaming video and serving the fast-growing Internet of Things, and they generate enormous amounts of heat. Removing that heat requires expensive cooling systems that run around the clock. This is why industry leaders such as Facebook and Google build data centers in cold northern European countries. “When you use your smartphone, you think everything is happening inside that wonderful little computer, but in fact you are using more than 100 computers in something called the cloud,” says Peter Lee, corporate vice president of Microsoft Research. “Multiply that by billions of people, and you can only begin to imagine how enormous the amount of computing work is.”

The idea of putting a data center on the ocean floor came from Microsoft employees in 2014, during research work at the company's data centers. Interestingly, one of the team members, Sean James, had previously served on a U.S. Navy submarine. The project's premise is that deep ocean water can cool server equipment efficiently and at no cost, while a system driven by tidal energy or a turbine could generate enough electricity to run the equipment fully. To keep the hardware isolated from the water, Microsoft proposes placing the servers in sealed, watertight steel containers.

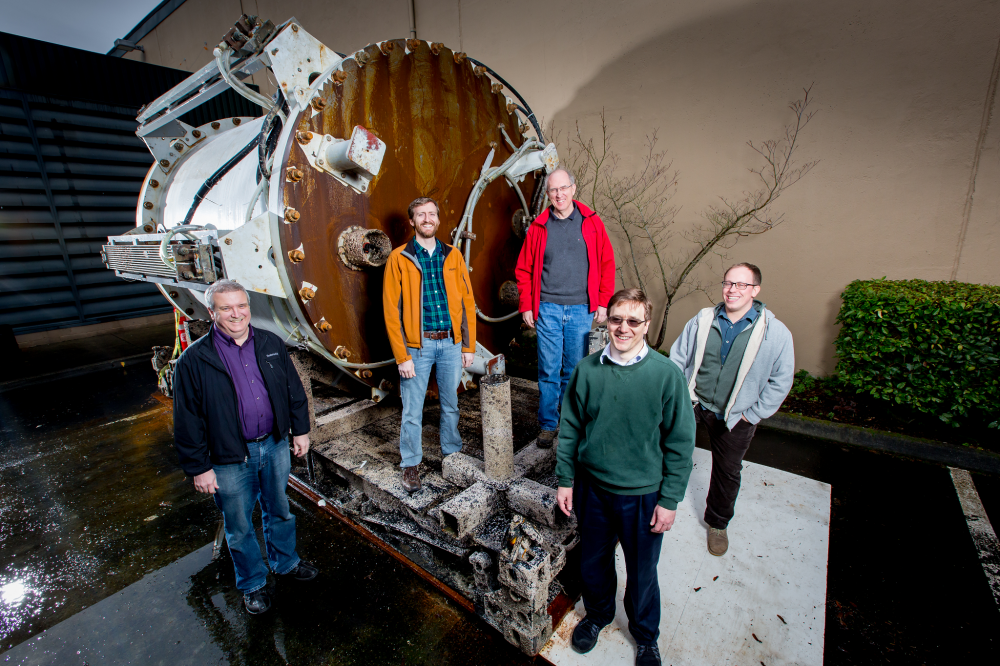

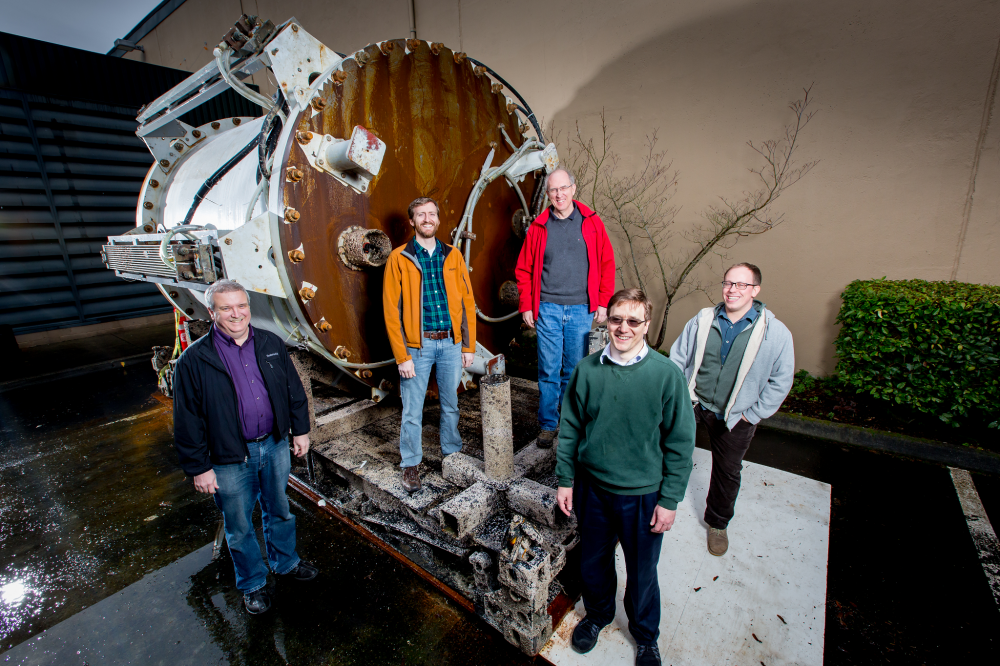

The Natick team, left to right: Eric Peterson, Spencer Fowers, Norm Whitaker, Ben Cutler, Jeff Kramer.

According to the newspaper, the project has passed its first stage of testing. The tests were carried out one kilometer off the coast of central California, near San Luis Obispo, using a steel capsule 2.4 meters in diameter submerged to a depth of 9 meters. The test runs were controlled from the company's campus.

To collect data for modeling conditions as close as possible to real operation, where “sending a repairman to fix the problem in the middle of the night is physically impossible”, the container was fitted with hundreds of sensors measuring pressure, humidity, motion, and other key parameters. The specialists' main concern was what they considered a high probability of hardware failures and data loss. Fortunately, those fears did not materialize, and the system passed all the pilot tests. This allowed the team to extend the experiment beyond the originally planned period, to 105 days, and even to run some commercial data-processing projects in the Microsoft Azure cloud during the extended testing.

Importantly, the results showed that the capsule warmed up “extremely little” under load: “at a distance of a few inches from its outer walls, no change in water temperature was recorded,” said Dr. Lee. When the experiments ended, the prototype of the first underwater data center, named Leona Philpot after a character in the Halo video game series, was successfully raised from the ocean floor and brought back to the company's campus, lightly overgrown with small shells.

According to The New York Times, Microsoft has so far invested $15 billion in building its global network of more than 100 data centers, which delivers more than 200 online services. In the near term, the company plans to deploy a whole network of data centers, primarily in European countries with government programs supporting alternative energy.

With the first stage of the experiment completed successfully, the research team can now plan the next version: a capsule three times larger. For this stage of the design, the group plans to bring in a team of alternative-energy specialists.

It is clearly too early to draw conclusions about whether this bold project is technically feasible, let alone when the first facilities might go into operation. But if it succeeds, the company could significantly reduce not only operating costs but also the time needed to launch a new data center.

A less obvious advantage is that data centers could be placed much closer to users living near the coast than is typical for today's land-based data centers.

Briefly:

Project Natick promises to improve service quality for customers in coastal regions. According to Microsoft, “half of the world's population lives within 200 km of the ocean,” that is, within reach of coastline where Natick data centers could be installed. Placing data centers on the coastal shelf would sharply reduce latency and improve connection quality.
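To put the latency argument into rough numbers, here is a back-of-the-envelope sketch of idealized round-trip propagation delay over optical fiber. It assumes light in fiber travels at about 200,000 km/s (roughly 5 µs per kilometer) and ignores routing detours, switching, and queuing, all of which add to real-world latency. The function name and the 2,000 km comparison distance are hypothetical, chosen only for illustration.

```python
# Back-of-the-envelope propagation delay over optical fiber.
# Assumption: light in glass fiber travels at about 200,000 km/s
# (refractive index ~1.5), i.e. roughly 5 microseconds per kilometer.
# Routing detours, switching and queuing delays are deliberately ignored.

SPEED_IN_FIBER_KM_PER_S = 200_000.0


def round_trip_ms(distance_km: float) -> float:
    """Return the idealized round-trip propagation delay in milliseconds."""
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    one_way_s = distance_km / SPEED_IN_FIBER_KM_PER_S
    return 2 * one_way_s * 1000.0


if __name__ == "__main__":
    # 200 km is the coastal distance quoted by Microsoft; 2,000 km is a
    # hypothetical distance to a remote inland data center, for comparison.
    for km in (200, 2_000):
        print(f"{km:>5} km -> ~{round_trip_ms(km):.1f} ms round trip")
```

Under these assumptions, 200 km of fiber adds about 2 ms of round-trip delay, versus about 20 ms for 2,000 km. Propagation is only one component of latency, so the sketch shows the trend rather than real measurements.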

Another advantage of underwater data centers is fast deployment: within 90 days instead of two years. Such systems can also respond quickly to market demand and be deployed rapidly in the event of natural disasters or special events.

The company's specialists are studying whether highly reliable server equipment could be deployed that would need servicing no more than once a decade. For now, however, the engineers plan to deploy each data center for five years, after which its hardware and configuration will be replaced with whatever is current at that time. The target lifespan of a single data center is at least 20 years, after which it will be recycled.
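The lifecycle arithmetic above (five-year deployments within a vessel life of at least 20 years) can be sketched as a simple schedule. This is an illustrative model only: the `Deployment` type and `deployment_schedule` function are hypothetical names, and the sketch assumes retrieval and reloading take no time, which a real refresh would not.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deployment:
    index: int       # 1-based deployment number
    start_year: int  # years since the vessel was first placed on the seabed
    end_year: int    # year the vessel is retrieved for a hardware refresh


def deployment_schedule(vessel_life_years: int = 20,
                        cycle_years: int = 5) -> list[Deployment]:
    """Split the vessel's service life into back-to-back hardware deployments.

    Assumption: retrieval, reloading and redeployment are instantaneous.
    """
    if vessel_life_years <= 0 or cycle_years <= 0:
        raise ValueError("durations must be positive")
    schedule: list[Deployment] = []
    start = 0
    # Only count full cycles that fit inside the vessel's service life.
    while start + cycle_years <= vessel_life_years:
        schedule.append(Deployment(len(schedule) + 1, start, start + cycle_years))
        start += cycle_years
    return schedule


if __name__ == "__main__":
    for d in deployment_schedule():
        print(f"deployment {d.index}: years {d.start_year}-{d.end_year}")
```

With the figures from the text, a 20-year vessel supports four five-year hardware generations; a longer vessel life simply adds more cycles.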

Despite the successful start and first results, the team itself has not named any dates for putting underwater data centers into service. The project page answers the question of timing this way: “Project Natick is currently at the research stage. It's still early days in evaluating whether this concept could be adopted by Microsoft and other cloud service providers.”

Sources

Project Natick, natick.research.microsoft.com

The New York Times

Business Insider, businessinsider.com

Dear readers, we are always glad to see you on our blog. We will keep sharing the latest news, reviews, and other publications with you, and will do our best to make sure the time you spend with us is well spent. And, of course, don't forget to subscribe to our sections.
