OpenCL in everyday tasks

Recently we wrote about HSA, and while discussing the advantages of this new approach to building PCs we touched on an interesting topic: GPGPU, general-purpose computing on graphics processors. Today AMD graphics accelerators expose their resources through OpenCL, a framework that offers a relatively simple and intuitive way to program highly parallel systems.

Today OpenCL is supported by all the major players on the market. Giving programs access to hardware acceleration, and for free (OpenCL involves no licensing fees or royalties), is clearly worthwhile, and every company that implements OpenCL support in its products benefits from the versatility of such an API.

Below we look at where OpenCL can be found in everyday life today, how it speeds up ordinary office software, and what opportunities it opens up for developers.

GPGPU, OpenCL and a bit of history

Of course, OpenCL is not the only way to do general-purpose computing on a GPU. Besides OpenCL, the market offers CUDA, OpenACC and C++ AMP, but only the first two, OpenCL and CUDA, are truly popular and widely known.

The OpenCL standard was developed by the Khronos Group, the same consortium that stands behind OpenGL. The OpenCL trademark itself belongs to Apple, but, fortunately for programmers and users around the world, the technology is neither closed nor tied to Apple's products. Besides Apple, the Khronos Group includes market giants such as Activision Blizzard, AMD, IBM, Intel and NVidia, along with a dozen other companies (mainly makers of ARM-based solutions) that joined the consortium later.

To a certain extent, OpenCL and CUDA are similar in both philosophy and syntax, and the community has only benefited from this. Because many methods and approaches carry over, it is easier for programmers to use both technologies and to move from the closed, hardware-bound NVidia CUDA to OpenCL, which is universal and runs almost everywhere (including ordinary multi-core CPUs and supercomputers based on the Cell architecture).

OpenCL in everyday use

You are probably thinking: “Right, now they'll talk about games and Photoshop.” No: OpenCL can speed up much more than graphics. One of the most popular applications that use GPGPU is... the cross-platform office suite LibreOffice. OpenCL support appeared in it in 2014 and is used to speed up calculations in the Calc spreadsheet.

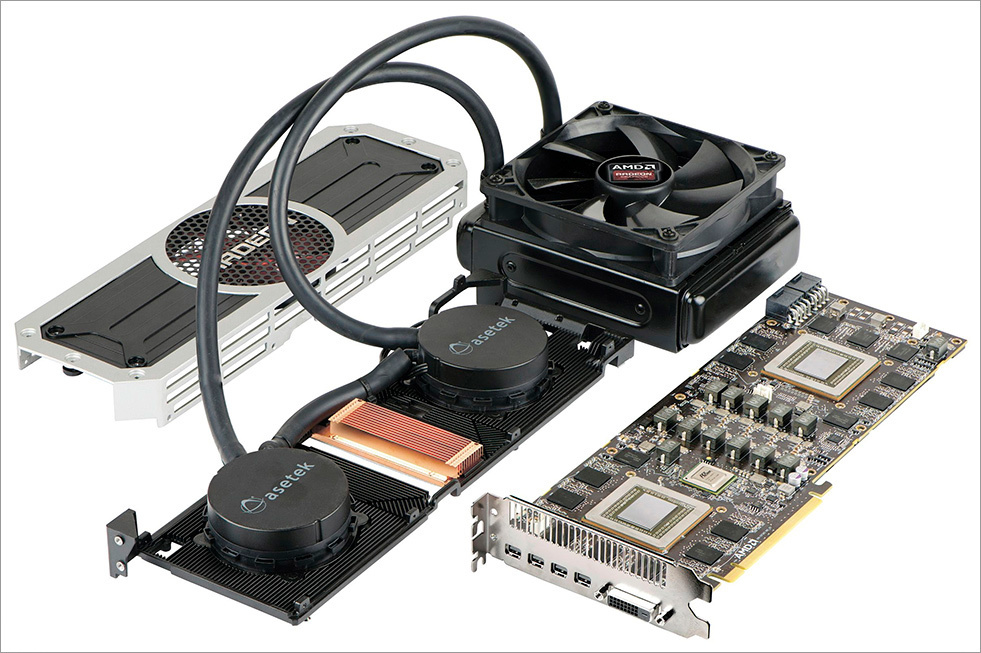

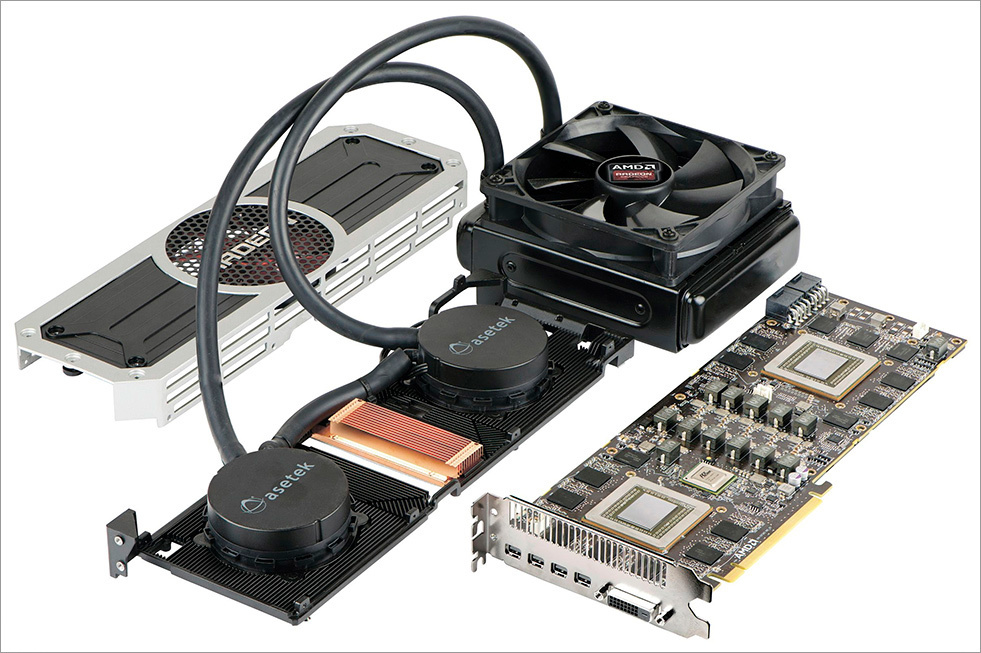
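
To make the idea more concrete, here is a minimal Python sketch that emulates on the CPU how a GPU can process a whole spreadsheet column at once. It assumes a hypothetical per-row formula `=A*B+1`: each loop iteration stands in for one OpenCL work-item (a real kernel would obtain its row index with `get_global_id(0)`), and the column total is computed as a pairwise tree reduction, the usual way GPUs compute sums in parallel. The function names are illustrative, and this is not LibreOffice's actual implementation.

```python
def formula_kernel(gid, col_a, col_b, out):
    # One work-item per row: evaluates the hypothetical formula =A*B+1 for row gid.
    out[gid] = col_a[gid] * col_b[gid] + 1.0


def tree_sum(values):
    # Pairwise (tree) reduction: in each pass, element i absorbs element i + stride.
    # On a GPU all additions of one pass run in parallel; here a loop emulates them.
    data = list(values)
    n = len(data)
    stride = 1
    while stride < n:
        for i in range(0, n - stride, 2 * stride):
            data[i] += data[i + stride]
        stride *= 2
    return data[0] if data else 0.0


column_a = [1.0, 2.0, 3.0, 4.0, 5.0]
column_b = [10.0, 20.0, 30.0, 40.0, 50.0]
results = [0.0] * len(column_a)
for gid in range(len(column_a)):  # emulates an NDRange with one work-item per row
    formula_kernel(gid, column_a, column_b, results)
total = tree_sum(results)
print(results, total)  # [11.0, 41.0, 91.0, 161.0, 251.0] 555.0
```

On a real device all additions within one pass of `tree_sum` run simultaneously, so a column of n cells is summed in about log2(n) passes instead of n sequential steps.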

Here is a visual comparison of system performance with an AMD A10-7850K (Radeon R7 graphics) and an Intel Core i5 with integrated HD Graphics 4600:

In synthetic heterogeneous-computing tests using OpenCL, the same AMD A10-7850K outperforms the Core i5-4670K/4690, which costs almost twice as much:

Beyond office software, science and its applied fields have many tasks that map well onto the vector processors of graphics accelerators, where calculations can run tens or even hundreds of times faster than on a CPU.

Take, for example, many areas of linear algebra. Multiplying vectors and matrices is what GPUs do every day when rendering graphics, and in these tasks they have practically no equal, because their architecture has been honed for exactly this for years.
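
A sketch of the standard mapping, again emulated in plain Python under the assumption that one work-item computes one element of the result matrix. In OpenCL C the `row` and `col` arguments would come from `get_global_id(0)` and `get_global_id(1)` of a two-dimensional NDRange. The names `matmul_kernel` and `matmul` are hypothetical, and optimized implementations usually add tiling through local memory on top of this basic scheme.

```python
def matmul_kernel(row, col, a, b, c, k):
    # One work-item computes one element of C as a dot product of a row and a column.
    acc = 0.0
    for i in range(k):
        acc += a[row][i] * b[i][col]
    c[row][col] = acc


def matmul(a, b):
    m, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions do not match")
    n = len(b[0])
    c = [[0.0] * n for _ in range(m)]
    # Emulates a 2-D NDRange of m x n work-items; each (row, col) pair is independent.
    for row in range(m):
        for col in range(n):
            matmul_kernel(row, col, a, b, c, k)
    return c


A = [[1.0, 2.0], [3.0, 4.0]]
B = [[5.0, 6.0], [7.0, 8.0]]
print(matmul(A, B))  # [[19.0, 22.0], [43.0, 50.0]]
```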

This also includes the fast Fourier transform and everything connected with it, such as solving complex differential equations by various methods. N-body problems deserve a separate mention: they are used in gravitational simulations, aerodynamics and hydrodynamics, and the modeling of liquids and plasma. The difficulty is that each particle interacts with all the others, the laws of interaction are quite complicated, and the calculations must be carried out in parallel. OpenCL and AMD GPUs are well suited to such tasks, since processors of this type already handle parallel computation over many objects every day: in pixel shaders.
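
The all-pairs structure described above can be sketched as follows. This is a minimal 2-D Python emulation, assuming direct O(n²) summation with a small softening length `eps` and a semi-implicit Euler time step; each iteration over `i` plays the role of one work-item computing the acceleration of one body. Production codes often replace the all-pairs sum with tree or FFT-based methods, and all names here are hypothetical.

```python
import math


def accel_kernel(i, pos, mass, acc, g=1.0, eps=1e-3):
    # One work-item per body: sums the pull of every other body on body i.
    # eps is a softening length that keeps the force finite at tiny distances.
    xi, yi = pos[i]
    ax = ay = 0.0
    for j, (xj, yj) in enumerate(pos):
        if j == i:
            continue
        dx, dy = xj - xi, yj - yi
        r2 = dx * dx + dy * dy + eps * eps
        inv_r3 = 1.0 / (r2 * math.sqrt(r2))
        ax += g * mass[j] * dx * inv_r3
        ay += g * mass[j] * dy * inv_r3
    acc[i] = (ax, ay)


def step(pos, vel, mass, dt):
    acc = [(0.0, 0.0)] * len(pos)
    for i in range(len(pos)):  # emulates an NDRange with one work-item per body
        accel_kernel(i, pos, mass, acc)
    # Semi-implicit Euler: update velocities first, then move with the new velocities.
    new_vel = [(vx + ax * dt, vy + ay * dt) for (vx, vy), (ax, ay) in zip(vel, acc)]
    new_pos = [(x + vx * dt, y + vy * dt) for (x, y), (vx, vy) in zip(pos, new_vel)]
    return new_pos, new_vel, acc


# Two equal masses at rest, two units apart, attract each other.
pos = [(-1.0, 0.0), (1.0, 0.0)]
vel = [(0.0, 0.0), (0.0, 0.0)]
mass = [1.0, 1.0]
pos, vel, acc = step(pos, vel, mass, dt=0.01)
```

Because every work-item only reads the shared positions and writes its own `acc[i]`, the work-items need no synchronization within a step.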

Structured grids are common in raster graphics; unstructured grids appear in hydrodynamics and in other computations over elements whose graph edges carry different weights. The difference between them lies in the number of “neighbors” each element has: in a structured grid every element has the same number of neighbors, while in an unstructured grid the number varies. Both map well onto OpenCL. The difficulties in porting such computations are mainly mathematical: the programmer's main task is not just to code the algorithm but to develop a mathematical formulation that maps the data onto the hardware's capabilities through OpenCL.
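
The difference in neighbor counts can be illustrated with two hypothetical kernels, emulated in Python. In the structured case the neighbors of cell `gid` follow from index arithmetic alone; in the unstructured case they are looked up in a compressed sparse row (CSR) layout, a common but by no means the only way to store such graphs, and each node averages its neighbors using per-edge weights.

```python
def structured_kernel(gid, u, out):
    # Structured grid: every interior cell has exactly two neighbours, gid-1 and gid+1,
    # so they are found by index arithmetic alone. Boundary cells are kept fixed.
    if 0 < gid < len(u) - 1:
        out[gid] = (u[gid - 1] + u[gid] + u[gid + 1]) / 3.0
    else:
        out[gid] = u[gid]


def unstructured_kernel(gid, u, row_ptr, col_idx, weights, out):
    # Unstructured grid in CSR form: node gid's neighbours are
    # col_idx[row_ptr[gid]:row_ptr[gid + 1]], and their number varies per node.
    acc = 0.0
    total_w = 0.0
    for k in range(row_ptr[gid], row_ptr[gid + 1]):
        acc += weights[k] * u[col_idx[k]]
        total_w += weights[k]
    out[gid] = acc / total_w if total_w > 0 else u[gid]


# Structured: a 1-D grid smoothed with a three-point stencil.
grid = [0.0, 3.0, 0.0, 3.0]
smoothed = [0.0] * len(grid)
for gid in range(len(grid)):  # one work-item per cell
    structured_kernel(gid, grid, smoothed)

# Unstructured: node 0 has two neighbours, node 1 has one, node 2 has two.
values = [1.0, 2.0, 4.0]
row_ptr = [0, 2, 3, 5]
col_idx = [1, 2, 0, 0, 1]
weights = [1.0, 3.0, 1.0, 2.0, 2.0]
averaged = [0.0] * len(values)
for gid in range(len(values)):  # one work-item per node
    unstructured_kernel(gid, values, row_ptr, col_idx, weights, averaged)
```

The structured kernel does the same amount of work for every cell, whereas the unstructured one loops a different number of times per node, which is why load balancing matters more on unstructured grids.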

Combinatorial logic (including hash computation) and Monte Carlo methods also port well to the GPU: a large number of compute units and high parallel throughput are exactly what accelerate these algorithms.
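
A classic Monte Carlo example is estimating π by sampling random points in the unit square. In the Python sketch below, which emulates the work-items with a loop, each work-item draws its own random numbers by hashing the pair (work-item index, sample index) with the SplitMix64 mixing function, so no random-number state has to be shared between work-items; the per-item hit counts are then summed. The choice of SplitMix64 and all function names are assumptions made for illustration.

```python
MASK64 = (1 << 64) - 1


def splitmix64(x):
    # SplitMix64 mixing function: turns a 64-bit counter into a well-scrambled 64-bit hash.
    z = (x + 0x9E3779B97F4A7C15) & MASK64
    z = ((z ^ (z >> 30)) * 0xBF58476D1CE4E5B9) & MASK64
    z = ((z ^ (z >> 27)) * 0x94D049BB133111EB) & MASK64
    return z ^ (z >> 31)


def uniform(gid, k):
    # Counter-based random number in [0, 1): hash (work-item id, sample index),
    # keep the top 53 bits so the result is an exactly representable double.
    return (splitmix64((gid << 32) | k) >> 11) / float(1 << 53)


def pi_kernel(gid, samples, hits):
    # One work-item: count random points of the unit square inside the quarter circle.
    inside = 0
    for s in range(samples):
        x = uniform(gid, 2 * s)
        y = uniform(gid, 2 * s + 1)
        if x * x + y * y <= 1.0:
            inside += 1
    hits[gid] = inside


def estimate_pi(work_items=64, samples=500):
    hits = [0] * work_items
    for gid in range(work_items):  # emulates an NDRange of work_items work-items
        pi_kernel(gid, samples, hits)
    # Summing the per-item counts is a reduction; the hit ratio approximates pi/4.
    return 4.0 * sum(hits) / (work_items * samples)


estimate = estimate_pi()
```

Since the random numbers depend only on the indices, the result is reproducible regardless of the order in which work-items run.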

What else can be accelerated with OpenCL and powerful GPUs?

Backtracking, graph algorithms and dynamic programming also belong here: sorting, collision detection (contact, intersection), generation of regular structures, and various selection and search algorithms. With some limitations, neural networks and related structures can be optimized and accelerated as well, although the problem there is rather that neural structures are simply “expensive” to emulate, and FPGA solutions are often more cost-effective. Finite state machines, which already run on GPUs (for example, in video compression and decompression or when searching for duplicate elements), are also an excellent fit.
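
Sorting is a good example of an algorithm that has to be restructured for the GPU. Bitonic sort, sketched below, is one common choice (not the only one): it runs a fixed sequence of passes, and within each pass every element is compared with a partner at index `i ^ j` independently of all the others, so each pass could be a single kernel launch. This Python version emulates the work-items with a loop, pads the input to a power of two with `inf`, and uses hypothetical names.

```python
def bitonic_kernel(i, data, j, k):
    # One work-item per index: compare with the partner i ^ j and swap if out of order.
    # Only the lower index of each pair acts, so work-items never touch the same pair.
    partner = i ^ j
    if partner > i:
        ascending = (i & k) == 0
        if (ascending and data[i] > data[partner]) or (
            not ascending and data[i] < data[partner]
        ):
            data[i], data[partner] = data[partner], data[i]


def bitonic_sort(values):
    n = 1
    while n < len(values):
        n *= 2
    # Bitonic sort needs a power-of-two length; pad with +inf, which sorts to the end.
    data = list(values) + [float("inf")] * (n - len(values))
    k = 2
    while k <= n:
        j = k // 2
        while j > 0:
            for i in range(n):  # one pass = one kernel launch over n work-items
                bitonic_kernel(i, data, j, k)
            j //= 2
        k *= 2
    return data[: len(values)]


print(bitonic_sort([5, 1, 4, 2, 3]))  # [1, 2, 3, 4, 5]
```

The number of passes depends only on n, not on the data, which suits GPUs well even though bitonic sort does more comparisons than a good sequential sort.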

OpenCL vs CUDA

A direct performance comparison of OpenCL and CUDA makes little sense. First, if we compare them on AMD and NVidia cards, AMD adapters will win a raw race of computing power: modern NVidia accelerators have FP64 performance deliberately limited by NVidia itself in order to sell “professional” compute cards (the Tesla series and Titan Z). These cost incomparably more than AMD-based cards with similar FLOPS and than their “sibling” cards in NVidia's own lineup, which makes the comparison rather complicated. You can look at performance per watt or performance per dollar, but that has practically nothing to do with a pure comparison of computing power, and “FLOPS at any cost” fits poorly with today's financial realities.
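
The effect of an FP64 limit is easy to see with the usual peak-throughput arithmetic, sketched below in Python. It assumes each shader core completes one fused multiply-add (two floating-point operations) per clock; the core count, clock and double-precision ratios are hypothetical and do not describe any particular card.

```python
def peak_gflops(shader_cores, clock_ghz, fp64_ratio=1.0):
    # Peak = cores x clock x 2, since one fused multiply-add counts as two operations.
    # fp64_ratio scales single-precision peak to double precision (1.0 = no FP64 penalty).
    return shader_cores * clock_ghz * 2 * fp64_ratio


# Hypothetical card: 2048 cores at 1.0 GHz, evaluated with two different FP64 rates.
fp32 = peak_gflops(2048, 1.0)
fp64_half = peak_gflops(2048, 1.0, fp64_ratio=1 / 2)
fp64_limited = peak_gflops(2048, 1.0, fp64_ratio=1 / 32)
print(fp32, fp64_half, fp64_limited)  # 4096.0 2048.0 128.0
```

With identical single-precision throughput, a 1/2 FP64 rate leaves 2048 GFLOPS in double precision, while a 1/32 rate leaves only 128 GFLOPS, a sixteenfold difference on exactly the workloads scientific computing cares about.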

Second, you could compare OpenCL and CUDA on an NVidia card, but NVidia implements OpenCL on top of CUDA at the driver level, so such a comparison would not be entirely fair.

If we look beyond raw performance, however, some comparison is still possible.

OpenCL runs on a far wider range of hardware than NVidia CUDA: almost all CPUs that support the SSE3 instruction set, graphics accelerators from the Radeon HD 5xxx and NVidia GeForce 8600 GT up to the latest Fury/Fury X and GeForce GTX 980 Ti/Titan X, AMD APUs, and Intel integrated graphics. In short, almost any modern multi-core hardware can take advantage of this technology.

The implementation differences between CUDA and OpenCL (as well as their rather complicated documentation, since parallel programming as a whole is far from the easiest area of development) show up mainly in specific development capabilities and tools rather than in performance.

For example, memory allocation in OpenCL is somewhat problematic, because “the OpenCL documentation is very unclear here”.

At the same time, CUDA lags behind OpenCL in synchronization of all kinds of streams: data, instructions, memory operations and so on. In addition, OpenCL supports out-of-order command queues, whereas commands within a CUDA stream still execute strictly in order. In practice, out-of-order execution avoids processor idle time while waiting for data, and the effect is more noticeable the longer the processor pipeline and the larger the gap between memory speed and the speed of the compute units. In a nutshell: the more compute power you devote to OpenCL, the wider the performance gap becomes, and CUDA requires considerably more complex code to achieve comparable results.
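
The benefit of out-of-order execution can be illustrated with a small Python emulation. In real OpenCL, a queue created with the `CL_QUEUE_OUT_OF_ORDER_EXEC_MODE_ENABLE` property may run commands in any order permitted by the events in their wait lists (the `event_wait_list` argument of calls such as `clEnqueueNDRangeKernel`). The sketch below models only that idea: the hypothetical `schedule` function groups commands into waves whose dependencies are already satisfied, whereas `in_order` executes them one at a time. How a real runtime actually overlaps work depends on the driver and the device.

```python
def schedule(commands):
    # commands maps a command name to its event wait list (names it depends on).
    # Returns "waves": every command in a wave has all dependencies finished,
    # so an out-of-order queue is free to run the whole wave concurrently.
    done = set()
    remaining = dict(commands)
    waves = []
    while remaining:
        ready = sorted(n for n, deps in remaining.items() if all(d in done for d in deps))
        if not ready:
            raise ValueError("unsatisfiable or cyclic dependencies")
        waves.append(ready)
        done.update(ready)
        for name in ready:
            del remaining[name]
    return waves


def in_order(commands):
    # An in-order queue executes exactly one command at a time, in submission order.
    return [[name] for name in commands]


pipeline = {
    "write_A": [],
    "write_B": [],
    "kernel_A": ["write_A"],
    "kernel_B": ["write_B"],
    "read_result": ["kernel_A", "kernel_B"],
}
print(len(in_order(pipeline)), len(schedule(pipeline)))  # 5 3
```

Here the two independent buffer writes and the two independent kernels can overlap, so the five commands complete in three waves instead of five sequential steps.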

CUDA's development tools (debugger, profiler, compiler) are somewhat better than their OpenCL counterparts. On the other hand, OpenCL complements its C host API with an official C++ wrapper, which simplifies object-oriented programming. Both frameworks abound in their own tricks, restrictions and quirks.

NVidia's approach here closely resembles Apple's: a closed solution with many restrictions and strict rules, but finely tuned for specific hardware.

OpenCL offers more flexible tools and capabilities but demands more expertise from developers. Generic code in pure OpenCL should run on any hardware that supports it, but code optimized for a specific platform (say, AMD graphics accelerators or Cell processors) will run much faster.