Using polarized light with Kinect increases the resolution of 3D scanning 1,000-fold

Algorithms that exploit the polarization of light can raise the resolution of mass-produced depth sensors 1,000-fold.

Researchers at the Massachusetts Institute of Technology (MIT) have shown that the polarization of light, the physical phenomenon behind polarized sunglasses and most 3D films, can increase the resolution of conventional 3D imaging devices as much as 1,000 times.

The technology could lead to high-quality 3D cameras built into mobile phones, and perhaps to the ability to photograph an object and send it straight to a 3D printer.

One of the developers of the new system, Achuta Kadambi, a graduate student at the MIT Media Lab, said: “Already today, a 3D camera can be shrunk to fit into a mobile phone. But that compromises the sensitivity of the 3D sensor, which leads to very coarse reproduction of geometric shapes. We use natural polarization mechanisms. With polarizing filters, even a low-quality sensor gives results that are significantly better than images of objects from the laser scanners used in mechanical engineering.”

The new system, which the researchers call Polarized 3D, is described in detail in a paper that they will present at an international computer vision conference in late December. Kadambi is the first author; his co-authors are his thesis advisor, Ramesh Raskar, associate professor of media arts and sciences at the MIT Media Lab; Boxin Shi, formerly a postdoc in Raskar's group and now a research fellow at the Rapid-Rich Object Search Lab; and Vahe Taamazyan, a graduate student at the Skolkovo Institute of Science and Technology, which was founded in 2011 with the support of MIT.

Reflection of polarized light

If an electromagnetic wave is pictured as an undulating squiggle, polarization describes the orientation of that squiggle. It may undulate up and down, from side to side, or at some angle in between.

Polarization also affects how light bounces off physical objects. If light strikes an object head-on, much of it is absorbed, but whatever is reflected has the same mix of polarizations as the incoming beam. At wider angles of reflection, however, light within a certain range of polarizations is more likely to be reflected.

That is why polarized sunglasses are so good at cutting glare: sunlight bouncing off asphalt or water at a low angle is, as a rule, heavily concentrated in one polarization. The polarization of reflected light therefore carries information about the geometry of the objects it has struck.
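The article gives no formulas for this effect. As a rough illustration, the textbook Fresnel equations for a smooth, non-metallic surface show how the two polarization components are reflected equally at head-on incidence and very unequally at oblique angles. The sketch below assumes light travelling from air into water (refractive index 1.33); the function names are invented for this example and are not taken from the researchers' work.

```python
import math

def fresnel_reflectance(angle_deg, n1=1.0, n2=1.33):
    """Return (R_s, R_p): the fraction of power reflected for light polarized
    perpendicular (s) and parallel (p) to the plane of incidence.

    Assumes a smooth dielectric surface and n1 < n2 (e.g. air to water).
    """
    theta_i = math.radians(angle_deg)
    sin_t = n1 / n2 * math.sin(theta_i)      # Snell's law
    cos_i = math.cos(theta_i)
    cos_t = math.sqrt(1.0 - sin_t ** 2)
    r_s = (n1 * cos_i - n2 * cos_t) / (n1 * cos_i + n2 * cos_t)
    r_p = (n2 * cos_i - n1 * cos_t) / (n2 * cos_i + n1 * cos_t)
    return r_s ** 2, r_p ** 2

def brewster_angle_deg(n1=1.0, n2=1.33):
    """Angle of incidence at which the p component is not reflected at all."""
    return math.degrees(math.atan2(n2, n1))

if __name__ == "__main__":
    for angle in (0, 30, brewster_angle_deg(), 75):
        r_s, r_p = fresnel_reflectance(angle)
        print(f"{angle:6.2f} deg  R_s={r_s:.4f}  R_p={r_p:.4f}")
```

At 0 degrees the two reflectances are identical, so the reflected light keeps the mix of polarizations it arrived with. Near Brewster's angle, about 53 degrees for water, the p component all but vanishes and the glare is almost purely s-polarized, which is the component polarized sunglasses are oriented to block.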

This relationship has been known for a long time, but it could not be put to practical use because of a fundamental ambiguity in polarization measurements. Light with a particular polarization, reflected from a surface with a particular orientation and passed through a polarizing lens, cannot be distinguished from light with the opposite polarization reflected from a surface with the opposite orientation.

This means that for any surface in a visual scene, measurements based on polarized light offer two equally valid hypotheses about its orientation. Testing every combination of the two possible orientations of every surface to find the one that fits the geometry of the scene is prohibitively time-consuming.

Polarization plus depth analysis

To resolve this ambiguity, the Media Lab researchers use coarse depth estimates based on the time it takes a light signal to bounce off an object and return to its source. Even with this additional information, computing surface orientation from polarization measurements is demanding, but it can be done in real time on a graphics processing unit, the kind of special-purpose graphics chip found in most game consoles.
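The idea of letting a coarse depth map choose between the two orientation hypotheses can be sketched in a few lines. This is a deliberately simplified illustration, not the researchers' algorithm: it assumes the polarization measurement yields a surface azimuth angle that is known only up to a half-turn (180 degrees), and that a rough azimuth can be estimated from neighboring depth values. The function names and the grid layout of `depth` are hypothetical.

```python
import math

TWO_PI = 2.0 * math.pi

def coarse_azimuth(depth, x, y):
    """Rough azimuth of the surface normal at (x, y), from central differences
    on a coarse depth grid indexed as depth[row][column]."""
    dz_dx = (depth[y][x + 1] - depth[y][x - 1]) / 2.0
    dz_dy = (depth[y + 1][x] - depth[y - 1][x]) / 2.0
    # The normal of a surface z = f(x, y) is proportional to (-dz/dx, -dz/dy, 1).
    return math.atan2(-dz_dy, -dz_dx)

def angular_gap(a, b):
    """Smallest absolute difference between two angles, in radians."""
    d = abs(a - b) % TWO_PI
    return min(d, TWO_PI - d)

def resolve_azimuth(phi_polarization, phi_coarse):
    """Pick whichever of the two polarization hypotheses (phi or phi + pi)
    lies closer to the coarse, depth-based azimuth."""
    candidates = (phi_polarization % TWO_PI,
                  (phi_polarization + math.pi) % TWO_PI)
    return min(candidates, key=lambda c: angular_gap(c, phi_coarse))

if __name__ == "__main__":
    # A plane that gets deeper toward +x: its normal leans toward -x.
    depth = [[0.1 * col for col in range(3)] for _ in range(3)]
    rough = coarse_azimuth(depth, 1, 1)
    print(math.degrees(resolve_azimuth(0.0, rough)))
```

The coarse estimate only has to be good enough to tell the two candidates apart; the fine detail still comes from the polarization measurement. Because each pixel is handled independently, this kind of per-pixel choice maps naturally onto a graphics processor.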

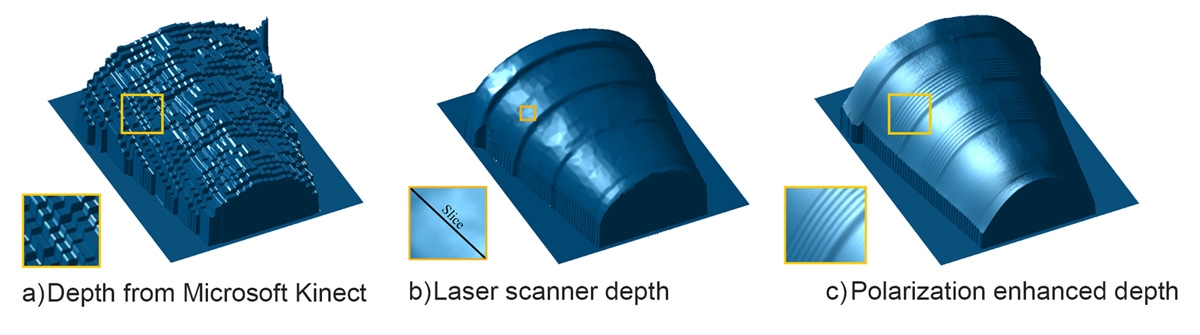

The experimental setup consisted of a Microsoft Kinect, which measures depth using reflection time, and an ordinary polarizing photographic lens mounted in front of its camera. In each experiment, the researchers took three photos of an object, rotating the polarizing filter each time, and their algorithms compared the light intensities of the resulting images.
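Three shots are enough because the brightness of a pixel seen through a rotating polarizer follows a sinusoid with three unknowns: its mean, its amplitude and its phase. The sketch below shows one standard way to recover them for a single pixel. It assumes filter angles of 0, 45 and 90 degrees, which the article does not specify, and the function names are hypothetical.

```python
import math

def simulate_intensity(filter_angle, mean, degree, phase):
    """Brightness behind a polarizer at filter_angle (radians) for light with
    the given mean intensity, degree of polarization and polarization phase."""
    return mean * (1.0 + degree * math.cos(2.0 * (filter_angle - phase)))

def polarization_from_three_shots(i0, i45, i90):
    """Recover (phase, degree) from shots at 0, 45 and 90 degrees.

    The phase is only defined modulo pi: a surface turned by half a turn
    produces exactly the same three brightness values.
    """
    s0 = i0 + i90              # total intensity
    s1 = i0 - i90              # 0 vs 90 degree contrast
    s2 = 2.0 * i45 - s0        # 45 vs 135 degree contrast
    degree = math.hypot(s1, s2) / s0 if s0 > 0 else 0.0
    phase = (0.5 * math.atan2(s2, s1)) % math.pi
    return phase, degree

if __name__ == "__main__":
    angles = [math.radians(a) for a in (0, 45, 90)]
    shots = [simulate_intensity(a, 100.0, 0.3, 0.6) for a in angles]
    print(polarization_from_three_shots(*shots))
```

The recovered phase constrains the direction in which the surface tilts, and the degree of polarization constrains how steeply. Because the phase repeats every 180 degrees, the two-way ambiguity described earlier is visible directly in the arithmetic.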

On its own, at a distance of several meters, the Kinect can resolve features as small as about a centimeter across. With the addition of polarization information, the experimental system could resolve features tens of micrometers in size, that is, a thousand times smaller.

For comparison, the researchers also imaged several objects with a high-precision laser scanner, which requires the object to be placed on the scanner bed. Even so, Polarized 3D delivered the higher resolution.

A mechanically rotated polarizing filter is hardly practical for a mobile phone camera, but grids of tiny polarizing filters that overlay individual pixels in a light sensor are an affordable alternative. Capturing three pixels' worth of light for each image pixel would reduce the resolution of a phone camera, but no more than the color filters that existing cameras already use.

According to the researchers' paper, polarization systems could also help in the development of self-driving cars. Today's experimental self-driving cars work reliably under normal lighting conditions, but their vision systems fail as soon as it starts to rain or snow, or fog sets in. This is because water droplets in the air scatter light in unpredictable ways, which makes automated analysis much more difficult.

In simple test cases, which have nonetheless proved difficult for conventional computer vision systems, the MIT researchers showed that their system can exploit information contained in interfering light waves to correct errors caused by scattering. According to Kadambi: “Mitigating the scattering effect in experimental scenes is just a small step forward. But I think that it will soon lead to a real breakthrough.”

Yoav Schechner, associate professor of electrical engineering at the Technion - Israel Institute of Technology in Haifa, Israel, explains: “The concept combines two principles of 3D scanning, each of which has its pros and cons. One of them determines the range of each pixel in the scene: this is the standard approach in most 3D imaging systems. The second principle does not provide a range but instead determines the slope of the object locally. That is, for a specific pixel in the scene, it tells us how flat or slanted the object is.”

Schechner adds: “In this system, each principle compensates for the inaccuracies of the other. Because this approach has, in practice, overcome the ambiguity that hampered polarization-based shape scanning, polarization can become a widely used engineering tool.”