Development or slowdown of IT?

The progress of mankind over the past three to four hundred years has been exponential. By human progress I mean the combined contribution of all sciences and technologies: mathematics and physics, biology and chemistry, engineering disciplines, architecture and industry. Many new fields have appeared, such as astronautics and microelectronics, genetic engineering and computer science. But each individual field develops unevenly, and its growth is not exponential.

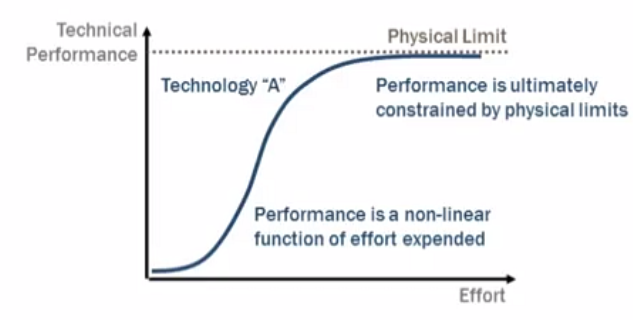

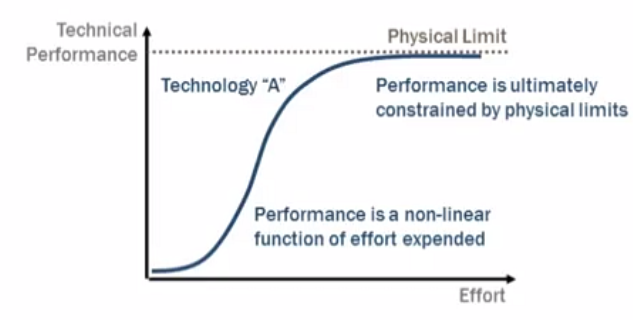

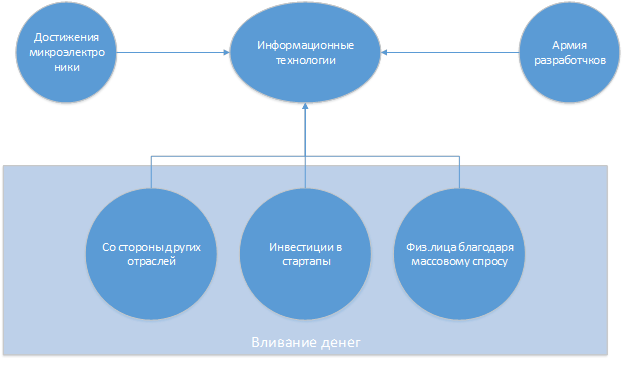

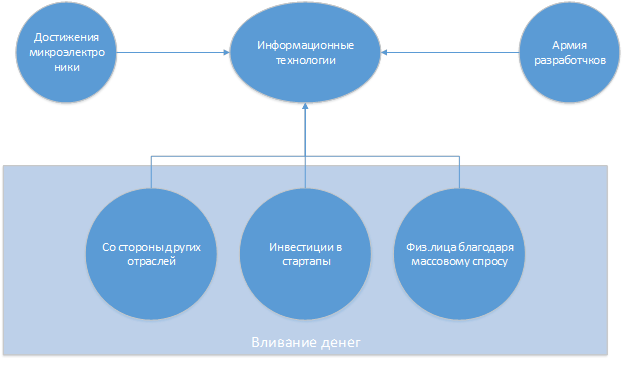

Some industries may experience slowdowns and even stagnation. In this article, I reflect on whether IT is approaching such a developmental plateau.

Consider, for example, the automotive industry. Go to a car show and you will see that, in principle, cars have not changed significantly. Yes, top speeds have increased, and yes, cars have become more comfortable: there is ABS (anti-lock braking system), climate control, heated seats, surround-view cameras and more. But all of this is largely a matter of extra accessories and design. These are not revolutionary changes. Cars remain roughly the same as they were 30, 50, or 70 years ago. There are no flying cars, no walking machines, and no cars built on different principles (not counting Tesla and Google’s self-driving car, of course).

The situation is similar in the space industry. Our country is a historical leader in space technology: the first to launch a satellite, the first to send a man into space, and our space station is full of astronauts from other countries. But lately there have been no revolutionary changes here either: rockets are based on the same principles as 50 years ago. Mars is not settled, and even on the Moon there are still no apple orchards to be seen. People in the 1960s thought that by now we would have settled the entire solar system and gone beyond it. But we still have rockets that fall into the ocean along with their satellites.

It seems logical that the sciences and engineering disciplines develop in leaps. A new revolutionary theory appears (Newton's, for example), the worldview is revised, and science reaches a new level, at which it then develops slowly until the next revolutionary leap (Einstein's theory, for example). Each paradigm shift is followed by exponential growth, which inevitably flattens into a saturation plateau.
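Growth that starts out exponential and then flattens into a plateau is often modeled with a logistic (S-shaped) curve. The sketch below is only an illustration under that assumption: the function names and the parameters `ceiling`, `rate` and `midpoint` are hypothetical and are not fitted to any real data.

```python
import math


def logistic(t, ceiling=1.0, rate=1.0, midpoint=0.0):
    """Value of a logistic (S-shaped) curve at time t.

    ceiling  - the saturation level the curve approaches (assumed)
    rate     - how steep the growth phase is (assumed)
    midpoint - the time at which half of the ceiling is reached (assumed)
    """
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))


def growth_ratio(t, step=1.0):
    """How many times the value grows over one step starting at time t."""
    return logistic(t + step) / logistic(t)


# Long before the midpoint the curve grows almost exponentially:
# each unit step multiplies the value by nearly e.
early = growth_ratio(-8.0)

# Long after the midpoint the curve is on the plateau:
# each unit step multiplies the value by almost exactly 1.
late = growth_ratio(8.0)

print(f"early growth per step: {early:.3f}")
print(f"late growth per step:  {late:.5f}")
```

In this picture a "revolution" would correspond to starting a new curve with a higher ceiling, rather than squeezing more out of the old one.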

Development slows down after the leap because it then consists mainly of optimizations and improvements. Over time, once all the simple improvements have been made, the remaining optimizations become numerous and very complex, often far more complex than the original idea itself. In cars, for example, the original idea has not changed for more than a hundred years, yet new models come out every year with new automatic systems: automatic transmissions, fuel economy systems and more. And every complex system can now run self-diagnostics and report errors.

The same applies to processors. Three levels of cache, pipelining, superscalar execution, branch prediction and more have been added to the original idea.

In the end, the complexity and cost of introducing new optimizations exceed the potential benefits (all the low-hanging fruit has already been picked), and the technology reaches a plateau until the next leap. Such leaps are called scientific and technological revolutions. They can be caused by the ingenious ideas of a particular person (for example, von Neumann or Alan Turing), by the mutual influence of systems, or by social, economic, or other factors.

In addition, the older a science becomes, the more discoveries it has made and the more experimental results and knowledge it has accumulated, the more there is to study, and the harder it is for young scientists to start inventing something new (because to create something new, you must first become familiar with and understand everything created before you). This explains the steady and significant increase in the age of Nobel laureates.

Obviously, information technology is currently in a stage of exponential growth. How much longer will it last? Why are we seeing such a pace of IT development? What caused this growth?

The reasons, in my opinion, are as follows:

First, other engineering fields have contributed to the development of IT, above all microelectronics, which drove down the cost of hardware and increased its power. Why have processors become more powerful? Semiconductor transistors were invented, then photolithography and ultra-large-scale integrated circuits appeared. Whether Moore's law will stop working in the coming years is a topic for a separate article.

The second reason is the injection of money:

- Money from individuals. Computers are a mass product: everyone has one, and now everyone has a smartphone too. People pay for hardware and software and spend money on various Internet services.

- Money from commercial companies. This covers custom software development for them, website creation, and spending on contextual advertising. Of course, any program needs support. Commercial companies are forced to buy updates, and many use the SaaS (software as a service) model, which charges a monthly fee. So the flow of money from companies will certainly continue. The same goes for advertising on the Internet and in mobile applications.

- Investments in startups. Both large funds and simply rich people, who have begun to grandly call themselves “business angels”, invest large sums earned in other industries in startups.

All this money pays for a huge army of developers who create millions of applications. I think saturation will come soon here: computers are now replaced less often than before, corporations have already written their software, and the decline of startups can also be discussed separately.

An interesting and paradoxical observation: despite such a colossal increase in processor power, I do not see equally significant changes in execution speed or in the tasks I solve. For example, there is not much difference between the Word and Excel of 1996 and their modern versions. I watch films now just as I did 10 years ago; only the resolution is somewhat better and downloads are faster (several times faster, not thousands of times). There is no truly revolutionary difference between the Duke Nukem of that era and today's games, and many people still play Heroes III.

Here are some specific examples:

- A great many computers (at least in Russia) still run Windows XP, which came out 15 years ago.

- Where is the revolutionary difference between Gingerbread and Marshmallow? Yes, the design got better, the GC was tweaked a bit, and Dalvik was replaced by ART. But there is no fundamental, revolutionary difference. There are no significant changes in the core. It is still the same activities, services, and broadcast receivers as eight years ago.

- I once worked with Lotus Notes, which is already 20 years old. New versions are released and follow the latest technologies, but the database properties window is still the same as in the version from 10 years ago. The database structure is the same too.

I think this is because it is impossible to rewrite such a huge amount of code from scratch. Backward compatibility alone is a major obstacle, and there are others. Despite all the techniques such as agile and modularity, changing existing software is much harder, perhaps ten times harder, than developing it in the first place. But throwing everything away and writing it from scratch is impossible. And why would you? So the core of a system stays almost unchanged, while frills and external features are added and the GUI is changed, which is easy.

The IT industry is young in two senses, as Uncle Bob has said: the industry itself is not many years old, and the average age of programmers is very low, around 30. This is because the number of programmers is growing rapidly as young people enter the field: it almost doubles every 5 years. There is also a tendency for older programmers to move into managerial positions.
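The doubling claim has a simple arithmetic consequence. Here is a minimal sketch, assuming the headcount grows smoothly by a factor of two every five years and that nobody leaves the profession (both are simplifications, and the function name is hypothetical):

```python
def share_with_less_experience(years, doubling_period=5.0):
    """Fraction of today's programmers who started within the last `years`.

    Assumes the headcount doubles every `doubling_period` years and that
    nobody leaves, so the headcount `years` ago was 2 ** (-years / period)
    of today's, and everyone else has joined since then.
    """
    return 1.0 - 2.0 ** (-years / doubling_period)


# Share of programmers with less than 5 and less than 10 years of experience.
under_five = share_with_less_experience(5)
under_ten = share_with_less_experience(10)

print(f"less than 5 years:  {under_five:.0%}")
print(f"less than 10 years: {under_ten:.0%}")
```

Under these assumptions, half of all programmers at any moment have less than five years of experience, whatever the absolute size of the industry.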

It is paradoxical that, although mainstream software solves the same problems it did many years ago, the tools are changing rapidly: languages are being improved, and new frameworks, libraries, and approaches keep emerging. When I started, I wrote in plain Visual Basic, Pascal, and Delphi. By now the star of these languages has practically set. At that time there were no languages like C# or Scala. No one talked about functional programming; it was not a trend. And a little earlier still, people probably did not use Delphi or OOP and wrote in Fortran. I remember the time when people used DOS and there was no Internet, no Google and no Facebook. That was 20 years ago.

What conclusion can be drawn from these two facts: more and more newcomers arrive, and new fashionable languages, frameworks, and technologies appear all the time? Software will contain many bugs and will be overcomplicated. Of course, there are many techniques designed to reduce the complexity of code: continuous and agile development, TDD and unit testing, version control systems and code reviews. It is hard to do without a version control system when many people work on the same code.

The code keeps getting bigger. Decades ago, a complex program had tens of thousands of lines of code; now it has tens of millions. And new software builds on, uses, and pulls in hundreds of thousands of lines of code from third-party libraries. Very often no one on a project understands how a particular module works. Sometimes no one understands how the whole system works. There is a lot of code, written by different teams at different times. Their goals may have differed. There may have been assumptions that were simply forgotten. Some things were built for future use, and some turned out to be unnecessary. The code has become very complicated.

That is, the objective reality is this: systems keep growing, more and more people work on them, and the code becomes ever larger and more complex.

Thus, in the future, most programmers will maintain huge monsters rather than write something from scratch. And this work will be governed more and more by rules, because the software will somehow have to be kept in working order. Everything will turn into well-defined bureaucratic procedures. Unauthorized deviation from the established order, such as changing a procedure, will be unacceptable. And this is a factor that slows IT down.

Summary

| Development factors | Slowdown factors |
|---|---|
| Increased hardware performance (temporary) | Increasing complexity of systems |
| Injection of money (temporary) | |
| Growing number of programmers (temporary) | Backward compatibility requirements |
| Specialization (frontend, backend) | Half of developers have less than 5 years of experience, hence many errors and poor code (temporary) |
| Spread of ready-made frameworks and libraries | A lot of third-party code has to be pulled in |
| Improved languages, IDEs, and version control systems | Continuous technology change (temporary) |
| Improving development techniques: TDD, DDD, Agile, patterns and refactoring | Management and organizational overhead due to the growing number of developers on a project |

Thanks for your attention!