Why almost everything reported about the Facebook “hacking” is wrong

- Translation

If you follow the news, you may have noticed that a company called Cambridge Analytica has been turning up frequently in the headlines. The media tells the following story:

Some dubious British data analytics company, using a 24-year-old genius, developed an innovative technology to hack Facebook and steal 50 million user profiles. They then used this data to help Trump and Brexit campaigns psychologically manipulate voters through targeted advertising. As a result, in a referendum in Britain, people voted to leave the European Union, and Trump was elected US President.

Unfortunately, almost all of the statements described are misleading or simply incorrect.

Firstly, there was no hacking.

The collected data was taken from user profiles after users gave permission to access data from a third-party application. Remember those small confirmation windows that appear when you want to play Candy Crush or log in via FB so you don't have to create a new password for a random site? Yeah, these very ones:

A Cambridge scientist, Alexander Kogan - not affiliated with Cambridge Analytica - made the Test Your Personality application, advertised it, paying $ 1 per install to people through the Amazon Mechanical Turk crowdsourcing site, and used the permissions to collect data from profile. The application installed 270,000 people, so you could expect that it collected information from 270,000 profiles - but in fact, it processed 50 million profiles.

50 million profiles ???

Yes. In that reckless year 2014, Facebook had an opportunity called “permission for friends,” which allowed access to the profiles of not only the person who installed the application, but also the profiles of all his friends. To prevent this, it was necessary to enable a certain setting in the privacy section, which few people knew about at all (here is an article from the 2012 blog that explains how to do this). It was with the help of “permission for friends” that Kogan multiplied 270,000 permissions to the number of 50 million profiles.

The fact that the data of FB users was handed out by their friends without notice or permission was a cause for serious concern, about which privacy advocates spoke back then. Therefore, in 2015, in the face of growing criticism and pressure, FB removed this opportunity, explaining this by the desire to give users “more control” over their data. This decision caused shock among application developers, since the ability to have access to friends' profiles was extremely popular (see comments under the announcement of disabling the feature from 2014). Sandy Parakilas, a former FB manager, told Bloomberg that “tens or even hundreds of thousands of developers” used it before disabling this feature.

To summarize the preliminary result; At this point, we have two key points:

The importance of the second point becomes apparent if you read texts like this:

This text was offered as evidence that the FB was lying to policy makers about their relationship with CA. But if you understand the difference between the internal data of the FB and the data collected on the FB by third-party developers, it becomes clear that what the FB policy director says is most likely true.

So how does CA fit in with this whole story?

They paid Kogan to collect 50 million profiles. Whose idea it was originally, it is already difficult to find out. Kogan says that CA came to him with a proposal, and CA says that Kogan came to her. Be that as it may, the data leak was just that; it was not FB internal data, but data dissemination rules. Developers were allowed to collect all user data that they needed for their applications, but they were not allowed (even in 2014) to collect this data for sale to their third parties.

And yet, regardless of the official rules of the FB, apparently, the company did not try too hard to monitor how its developers use the collected data. Perhaps because of this, when the FB first discoveredthat Kogan sold CA data in 2015, she was only satisfied with receiving written confirmation from both parties that the data collected was deleted.

The fact that there were at least tens of thousands of developers who had access to such information meant that the data collected on the FB would inevitably be sold or somehow transferred to third parties. And the former manager from FB, dissatisfied with the situation, confirmed this:

The thing is how journalists, especially Carol Cadwalladre from Observer, designed this story. Most publications have advanced two views on this issue. Firstly, the CA informant revealed a “big leak” of data from the FB, and we have already described this problem. Secondly, this “leak” was associated with the success of Trump’s presidential campaign.

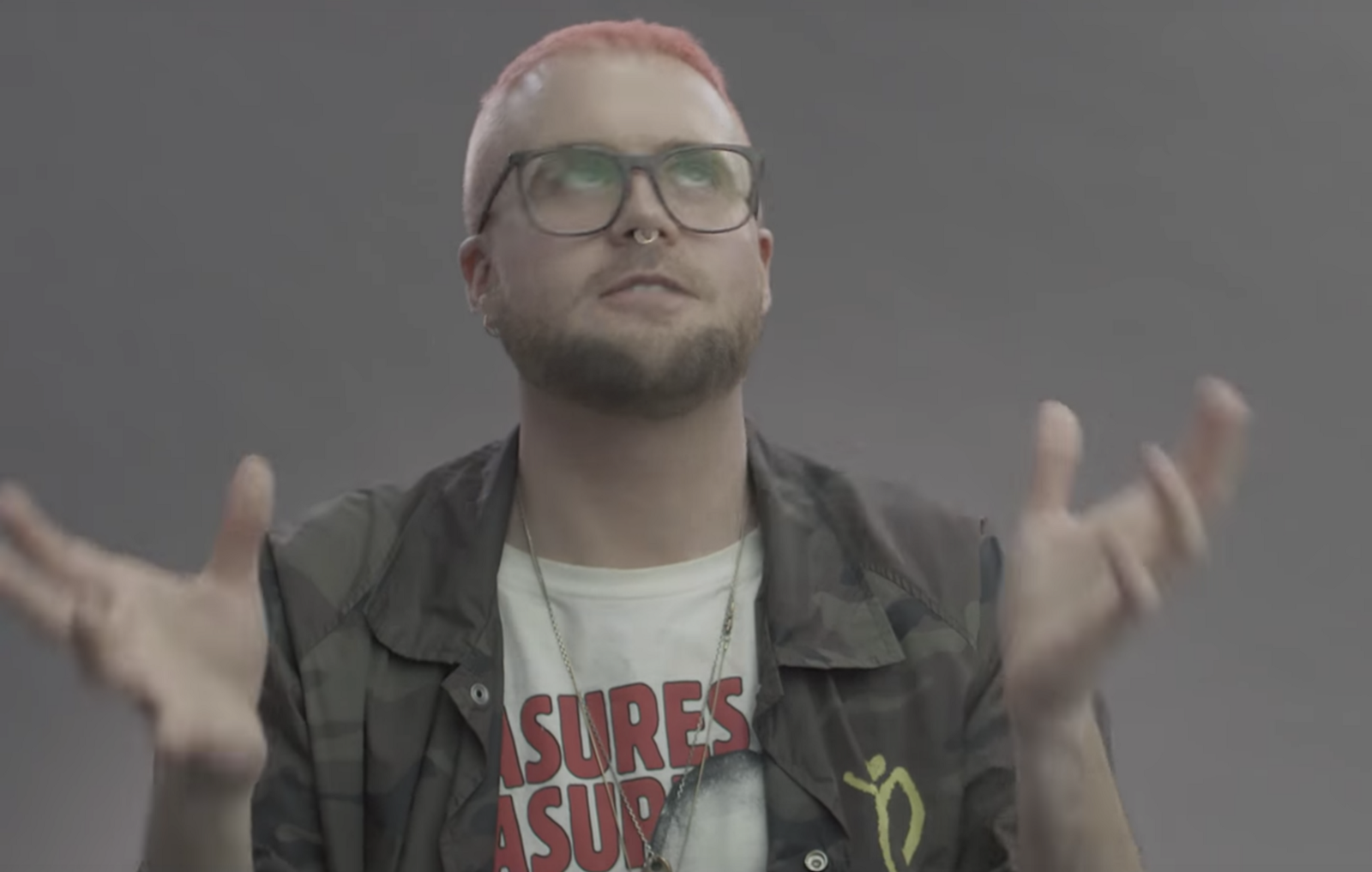

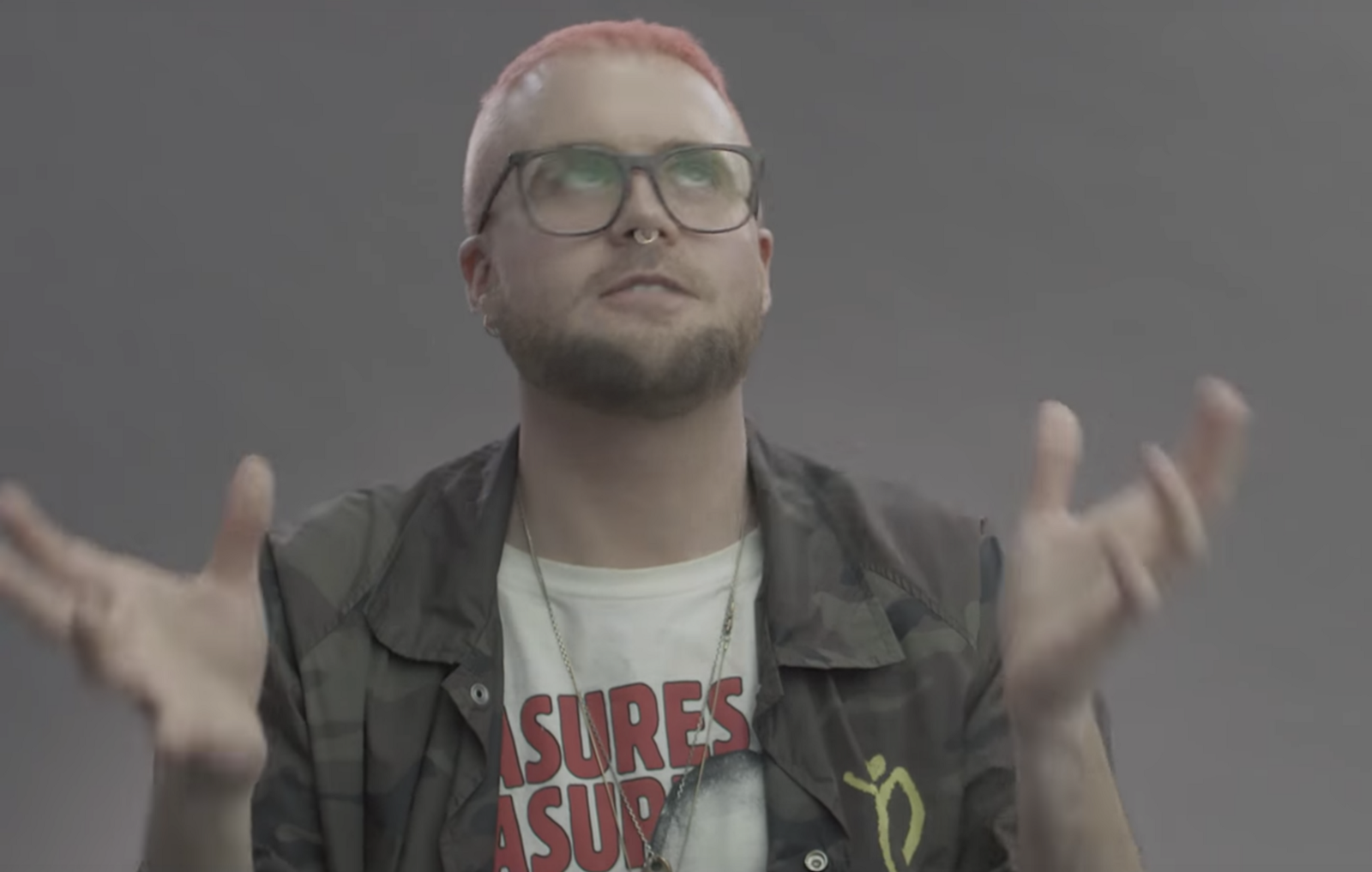

Christopher Wiley - an outstanding mind, “hacked” FB

The second point of view is as dubious as the first, and is based mainly on the pompous statements of Christopher Wiley- Former CA employee with pink hair. Carol Cadwalladre, who has worked with this story for years, told in various interviews that she approached her not as a journalist-researcher, but as an author of essays. This means that she paid more attention to the “human side of history,” or, more simply, to Chris Wiley. This approach has its pros and cons, but the biggest drawback is how much her articles depended on Wiley’s stories, in which he portrayed himself as a young talent, at the center of global political conspiracies.

Cadwalladre fully endorses Wylie’s self-presentation and obsequiously describes him.as "smart, funny, daring, wise, greedy for knowledge, intriguing, impossibly young." "The trajectory of his career, like most of the aspects of his life, was outstanding, incongruous, incredible." “Wiley lives for ideas. He speaks incessantly for hours on end. ” “When Wiley pays all his attention to something, his strategic brain, his attention to detail, his ability to plan 12 steps forward become something that is scary to watch.” “His set of outstanding talents includes political skills of such a high level that, in comparison with them, the House of Cards looks like a culinary show.”

Wow. Here is the guy.

Cadwalladre’s personality-focused approach makes articles easier and helps hide essential technical details, instead delivering sensational quotes and personal stories from the lives of Wiley, his friends and colleagues. Such information can provide food for thought, if you approach it critically - but this is rare. Instead, Cadwalladre simply believed in the story told by Wiley: “By the time we first met in person , I had a few hours of conversation every day.”

So let's turn to the oversight and look at Wylie’s statements a little more critically:

The last point is the most important, and the least evidence is given.

It may be tempting to point out Trump's unexpected victory, but there are many confusing factors in this matter. Trump really won. But he won against the most unpopular Democratic candidate in modern history, who was trying to hold the Democratic Party for a third term in a row (and this has not happened since the 1940s). Moreover, he won with very little advantage and lost the vote for popularity.

Alexander Knicks, Director of CA, stands amid a large number of impressive schedules

Could all this be evidence of the accuracy of the psychological positioning of CA? Perhaps, but then we are faced with the danger of working with an irrefutable hypothesis. It would be better to study the ratio of the number of victories and defeats of CA. Unfortunately, we do not have access to the list of her clients, but we know that for the first time she gained fame while working on the presidential campaign of Ted Cruise. Yes, yes - Ted Cruise, a Republican senator whom Trump crushed in the Republican internal party elections, despite all the “power” of the CA that the first possessed. Not the first I notice this obvious contradiction - Martin Robbins noted the same thing in an article from last year:

The FB conducted an experiment for 689,000 users, tweaking the algorithm for delivering news to show them slightly more or slightly less status updates of their friends containing positively or negatively colored words. As any researcher knows, with such a large sample you are guaranteed to get statistically significant differences between the groups. A more important parameter will be the strength of the detected effect. In the FB study, the difference turned out to be truly frightening: people who saw fewer negative updates used 0.05 positively colored words for every hundred in their status updates, and those who saw less positive updates used 1 positively colored word less for every hundred. Exactly. FB could manipulate people so so that they use 1 positively colored word less for every hundred. Based on this, it cannot be said that FB is helpless, because more intervention would lead to stronger results, but it is important to see things in perspective.

Note that the y axis does not start at 0

It turns out that the real story is not that Kogan, Wiley and CA developed the incredibly high-tech “hack” FB. The thing is that, with the exception of the sale of data by Kogan, they used common methods that were allowed on FB until 2015. From the moment this story became public, CA was branded as reprehensible and unethical - at least that's how it advertises to potential customers. But most of the statements repeated by the media are simply a thoughtless repetition of what CA and Chris Wiley themselves tell about themselves, without a critical look at the facts. The problem is the lack of sufficient evidence that the company is capable of what it claims to be, and full of evidence that it is not as effective as it likes to pretend; for example, remember

No one is completely protected from marketing or politics, but there is practically no evidence that CA will be better than any other PR company or specialists in political campaigning and voter positioning. Campaigns for political positioning and misinformation, including advertising from Russia [the United States accused Russia of interfering in the last presidential election; Russia officially rejected these allegations / approx. perev. ], of course, influenced the outcome of recent elections, but have they become a critical factor? Was this factor more influential than Komi’s statement [ former FBI director / approx. perev. ] on reopening the investigation into the e-mail caseHillary Clinton the week before the election? Or a statement by Brexit supporters that every week the European Union stole £ 250 million from the health fund [ £ 350 million / approx. perev. ]? I am somehow skeptical about this.

I will clarify that I am not claiming that CA and Kogan are innocent. At the very least, it’s clear that they did things that go against the rules for distributing data to FB. Likewise, FB obviously allowed its developers too much in terms of access to private data. I argue that CA is not the evil puppeteers they are trying to imagine. She is more like Trump - she makes extremely exaggerated statements about her capabilities, which attracts increased attention to her.

A shady British data analytics firm, with the help of a 24-year-old genius, developed an innovative technology to hack Facebook and steal 50 million user profiles. It then used this data to help the Trump and Brexit campaigns psychologically manipulate voters through targeted advertising. As a result, Britain voted in a referendum to leave the European Union, and Trump was elected US President.

Unfortunately, almost all of the statements described are misleading or simply incorrect.

Firstly, there was no hacking.

The data was taken from user profiles after those users gave a third-party application permission to access it. Remember the little confirmation windows that pop up when you want to play Candy Crush, or when you log in with FB so you don't have to create a new password for some random site? Yes, those ones:

A Cambridge academic, Alexander Kogan (not affiliated with Cambridge Analytica), created an app called Test Your Personality, promoted it by paying people $1 per install through the Amazon Mechanical Turk crowdsourcing site, and used the permissions it obtained to collect profile data. 270,000 people installed the app, so you might expect it to have collected data from 270,000 profiles. In fact, it harvested 50 million.

50 million profiles???

Yes. Back in the reckless days of 2014, Facebook had a feature called “friends permissions”, which let an app access not only the profile of the person who installed it but also the profiles of all their friends. To prevent this, you had to change a particular privacy setting that hardly anyone knew about (here is a 2012 blog post explaining how to do it). It was through “friends permissions” that Kogan turned 270,000 installs into 50 million profiles.
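To give a rough sense of that multiplication, here is a back-of-the-envelope sketch that uses only the two figures quoted above. It assumes both figures are accurate and is illustrative arithmetic, not a reconstruction of how the app actually worked.

```python
# Back-of-the-envelope check using only the two figures quoted in the text.
installs = 270_000               # people who installed the app
profiles_harvested = 50_000_000  # profiles reportedly collected via friends permissions

# Average number of profiles harvested per install (the installer plus their friends).
profiles_per_install = profiles_harvested / installs

print(f"about {profiles_per_install:.0f} profiles per install")
```

This prints "about 185 profiles per install". Friend lists overlap, so many friends were counted by more than one installer. The average installer must therefore have had at least about 184 friends, and probably more. The ratio is a floor, not an estimate of typical friend counts.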

That FB users' data was being handed over by their friends without their knowledge or consent was a serious concern, and privacy advocates said so at the time. So in 2015, under growing criticism and pressure, FB removed the feature, citing a desire to give users “more control” over their data. The decision shocked app developers, because access to friends' profiles was extremely popular (see the comments under the 2014 announcement that the feature would be switched off). Sandy Parakilas, a former FB manager, told Bloomberg that “tens or even hundreds of thousands of developers” had used it before it was disabled.

To sum up so far, there are two key points:

- None of this involved “hacking” FB or exploiting any bugs. It involved a feature that FB offered to all developers and that at least tens of thousands of them used.

- The data collected was not internal FB data. Developers collected it from the profiles of the people who installed their app (and of those people's friends). FB holds far more data on its users than what appears on their profiles, and that data is not available to developers or to other users of the platform. Only FB has access to it. The journalists covering this story apparently failed to grasp this point. They repeatedly equated “internal FB data” with “data collected from user profiles by a third-party app”, but there is a big difference between the two.

The importance of the second point becomes clear when you read passages like this:

Simon Milner, FB's UK policy director, was asked whether Cambridge Analytica (CA) had FB data. He replied: “No. They may have lots of data, but it is not FB user data. It may be data about people who are on FB that they have gathered themselves, but it is not data we have provided.”

This passage was offered as evidence that FB had lied to lawmakers about its relationship with CA. But once you understand the difference between FB's internal data and data collected on FB by third-party developers, it becomes clear that what FB's policy director said was most likely true.

So where does CA fit into all this?

CA paid Kogan to collect the 50 million profiles. Whose idea this originally was is now hard to establish: Kogan says CA approached him, and CA says Kogan approached it. Either way, the “leak” was not a leak of internal FB data but a violation of FB's data-sharing rules. Developers were allowed to collect whatever user data their apps needed, but they were not allowed (even in 2014) to collect that data in order to sell it to third parties.

And yet, whatever its official rules, FB apparently made little effort to monitor how developers used the data they collected. Perhaps that is why, when FB first discovered in 2015 that Kogan had sold data to CA, it settled for written confirmation from both parties that the data had been deleted.

With at least tens of thousands of developers having access to this kind of information, it was inevitable that data collected on FB would be sold or otherwise passed on to third parties. A former FB manager, troubled by the situation, confirmed this:

Asked how exactly FB monitored the data that external developers received, he replied: “It didn't. Not at all. Once the data left FB's servers, there was no control and no idea what happened to it next.” Parakilas said he had “always assumed there was a black market” in data collected from FB and passed to third-party developers.

Given how common this kind of data collection was, and that many developers had access to far more than 270,000 users, why did CA end up in the headlines?

The thing is how journalists, especially Carol Cadwalladre from Observer, designed this story. Most publications have advanced two views on this issue. Firstly, the CA informant revealed a “big leak” of data from the FB, and we have already described this problem. Secondly, this “leak” was associated with the success of Trump’s presidential campaign.

Christopher Wiley - an outstanding mind, “hacked” FB

The second narrative is as dubious as the first. It rests mainly on the grandiose claims of Christopher Wylie, a pink-haired former CA employee. Carole Cadwalladr, who worked on the story for years, has said in various interviews that she approached it not as an investigative journalist but as a feature writer. In other words, she focused on the “human side of the story” or, more simply, on Chris Wylie. This approach has its pros and cons. Its biggest drawback is how heavily her articles depended on Wylie's own accounts, in which he cast himself as a young prodigy at the center of global political conspiracies.

Cadwalladr fully buys into Wylie's self-presentation and describes him fawningly as “smart, funny, daring, wise, intellectually ravenous, intriguing, impossibly young.” “The trajectory of his career, like most aspects of his life, has been extraordinary, incongruous, improbable.” “Wylie lives for ideas. He speaks incessantly for hours on end.” “When Wylie turns the full force of his attention to something, his strategic brain, his attention to detail, his ability to plan 12 moves ahead become something scary to watch.” “His set of outstanding talents includes political skills of such a high order that they make House of Cards look like a cookery show.”

Wow. Quite the guy.

Cadwalladr's personality-focused approach makes for easier reading, but it glosses over essential technical details. In their place it offers sensational quotes and personal stories from the lives of Wylie, his friends and his colleagues. Material like this can be food for thought if you approach it critically, but that rarely happens. Instead, Cadwalladr simply accepted the story Wylie told her: “By the time we first met in person, we had been talking for hours every day.”

So let's step back and look at Wylie's claims a little more critically:

- Steve Bannon wanted to weaponize big data: easy to believe.

- CA claims it can provide effective tools for psychological targeting and manipulation: true.

- Chris Wylie was involved in shady business and considers himself partly responsible for what happened: naturally.

- CA's self-promotion accurately reflects the effectiveness of the services it offers: hmmmmm...

The last point is the most important, and it has the least evidence behind it.

It may be tempting to point to Trump's unexpected victory, but there are many confounding factors here. Trump did win. But he won against the most unpopular Democratic candidate in modern history, who was trying to keep the Democratic Party in the White House for a third consecutive term (something the Democrats had not managed since the 1940s). Moreover, he won by a very narrow margin and lost the popular vote.

Alexander Nix, CEO of CA, surrounded by impressive-looking charts

Could all this be evidence of how accurate CA's psychological targeting is? Perhaps, but then we risk working with an unfalsifiable hypothesis. It would be better to look at CA's track record of wins and losses. Unfortunately, we don't have access to its client list. We do know that it first rose to prominence working on Ted Cruz's presidential campaign. Yes, that Ted Cruz: the Republican senator whom Trump crushed in the Republican primaries, despite all the “power” of CA at Cruz's disposal. I am not the first to notice this obvious contradiction. Martin Robbins pointed out the same thing in an article last year:

The story of the Republican primaries is that CA's fancy data lost to a guy with a website built for a thousand bucks. Turning that into a thrilling saga of invincible scientific voodoo inexorably dragging Trump to victory is a big stretch. Did they even work for anyone else? Without a client list, it's very easy to cherry-pick the winners.

The idea behind CA's technology is to build algorithms that use social network data to predict how effective a given message will be on a person, based on their personality and psychological profile. This is exactly what articles about using psychographics to microtarget voters are describing. But most claims about the effectiveness of such technology are wildly exaggerated. Even Kogan, the Cambridge academic at the center of the controversy, has said something along these lines. He stated that he had been made a scapegoat and argued that the personality profiles he collected were not especially useful for microtargeting predictions:

“In the course of our further work on this,” he wrote, “we found that the predictions SCL ended up with were six times more likely to get all 5 personality traits wrong than to get them all right. In short, even if this data was used for microtargeting, in reality it could only have hurt the effort.”

Kogan can hardly be called an impartial source, but his claims are consistent with various studies that have found unimpressive results for attempts at manipulation through social networks. Take, for example, FB's controversial “mind control” study, which several journalists have cited recently. Not one of those references mentions what a flop it was.

FB ran an experiment on 689,000 users. It tweaked the News Feed algorithm to change how many of their friends' status updates containing positive or negative words they saw. As any researcher knows, with a sample that large you are virtually guaranteed to find statistically significant differences between groups. The more important parameter is the size of the effect. In the FB study, that effect was truly terrifying. People who saw fewer negative updates used about 0.05 more positive words per hundred words in their status updates. People who saw fewer positive updates used about one fewer positive word per thousand. That's right: FB could manipulate people into using one fewer positive word per thousand. This does not mean FB is powerless, since a stronger intervention could produce stronger effects, but it is important to keep things in perspective.

Note that the y axis does not start at 0
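The point about sample size and effect size can be made concrete with a small calculation. The sketch below is a normal-approximation two-sample test on a standardized mean difference (Cohen's d). The effect size d = 0.02 and the group sizes are hypothetical values chosen for illustration, not figures taken from the FB study. The calculation shows a tiny, unchanging effect going from nowhere near significant to overwhelmingly “significant” purely because the groups get larger.

```python
import math


def two_sample_z(d: float, n_per_group: int) -> tuple[float, float]:
    """Approximate z statistic and two-sided p-value for a standardized
    mean difference d between two groups of equal size."""
    # For small d, the standard error of d with two equal groups is about sqrt(2 / n).
    z = d / math.sqrt(2 / n_per_group)
    # Two-sided p-value from the standard normal distribution.
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


# Hypothetical tiny effect (illustrative only, not from the study).
EFFECT_SIZE = 0.02

for n in (100, 10_000, 150_000):
    z, p = two_sample_z(EFFECT_SIZE, n)
    print(f"n per group = {n:>7}: z = {z:5.2f}, p = {p:.2g}")
```

The effect itself never changes; only the p-value does. In a sample of hundreds of thousands, the size of the effect is the number worth looking at, not whether it is statistically significant.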

So the real story is not that Kogan, Wylie and CA developed an incredibly high-tech “hack” of FB. It is that, apart from Kogan's sale of the data, they used common methods that FB permitted until 2015. Since the story broke, CA has been cast as sinister and unscrupulous, which is, at least, how it pitches itself to potential clients. But most of the claims the media repeats are simply an uncritical echo of what CA and Chris Wylie say about themselves. The problem is that there is little evidence the company can do what it claims, and plenty of evidence that it is not as effective as it likes to pretend; for example, remember the Ted Cruz campaign.

No one is completely immune to marketing or politics, but there is practically no evidence that CA is any better than other PR firms or specialists in political campaigning and voter targeting. Political targeting and disinformation campaigns, including Russian advertising [the United States has accused Russia of interfering in the last presidential election; Russia officially denies the allegations / translator's note], certainly had some influence on the outcome of recent elections. But were they a decisive factor? Were they more influential than Comey's [former FBI director / translator's note] announcement, less than two weeks before the election, that the investigation into Hillary Clinton's emails was being reopened? Or than the Brexit campaigners' claim that the UK was sending the EU £350 million a week that could have gone to the NHS? I have my doubts.

To be clear, I am not claiming that CA and Kogan are innocent. At the very least, they clearly did things that violated FB's data-sharing rules. Likewise, FB clearly gave its developers far too much access to private data. My argument is that CA is not the evil puppet master it is made out to be. It is more like Trump: it makes wildly exaggerated claims about its own capabilities, and that draws a great deal of attention to it.