Pornhub has started removing fake celebrity videos generated by a neural network

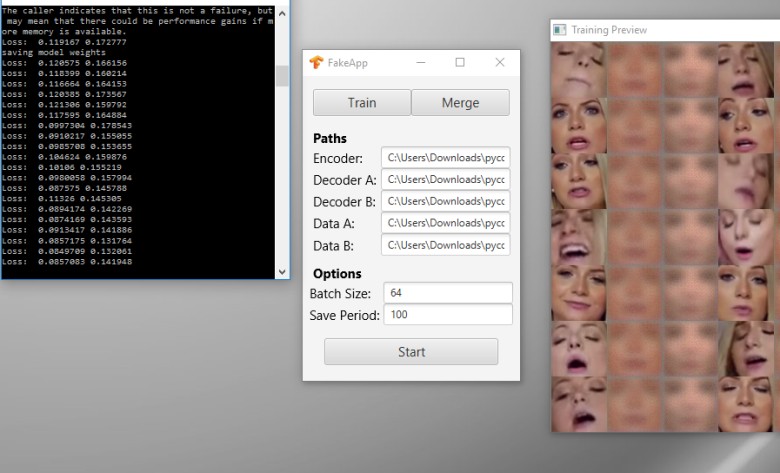

FakeApp program

On January 12, 2018, a Reddit user with the nickname Deepfakes released FakeApp, an application for replacing faces in videos. Several new versions have been released since then (the current version, FakeApp 2.1, came out on February 4), and the program has become hugely popular. The number of downloads passed 100,000 long ago. It proved especially popular with fans of adult content who wanted to see a favorite actress or some other celebrity with a chaste public image in a compromising position.

Fake videos with celebrity faces became so widespread that popular video hosting sites had to take action. The first to ban fake videos was Pornhub, a giant of the porn industry with 75 million visitors a day.

“Users have started tagging such content, and we will remove it as soon as complaints appear,” said Corey Price, vice president of Pornhub, in a comment to Mashable. “We encourage everyone who faces this problem to visit our content removal page to formally submit a request.”

Representatives of Pornhub later clarified their position. In a comment to Motherboard, they said they regard such videos as a form of nonconsensual porn. The site has always blocked any video in which a participant clearly does not consent to what is happening. That category now also includes AI-generated videos featuring people who were not actually involved.

Although Pornhub has formally banned fake celebrity porn, in reality dozens of such videos are uploaded daily. And soon their quality will improve so much that it will become very difficult to tell whether a celebrity video is real or fake.

Deepfakes first showed the results of his program in December 2017, before making it publicly available. The author said that faces are superimposed by a neural-network-based system built with various free libraries and frameworks. He built the program mainly with the Keras library on a TensorFlow backend. To compile a collection of celebrity faces and train the neural network, Google image search, stock photos and YouTube videos are enough.

The structure of the neural network is similar to the one described in a paper by Nvidia researchers. Their algorithm can, for example, change the weather in a video in real time.

To fake the face of a particular celebrity, the neural network has to be trained specifically on videos of her and on porn videos. After training, the program is able to replace the face on the fly. Thousands of such videos have already been published in /r/deepfakes/.

On a consumer-grade graphics card, training takes several hours; on a CPU, it can take several days. The program requires CUDA 8.0 to be installed. Training neural networks has long since become an accessible technology that even a teenager can experiment with.

Notably, someone could use this program to compromise an acquaintance, whether a woman or a man. The program requires two sets of several hundred frames, one for each of the two faces to be swapped. Frames are extracted from videos with the command

ffmpeg -i scene.mp4 -vf fps=[FPS OF VIDEO]

Then you need to specify the paths to the folders with the “models” in the program and continue training until the preview window starts to show a satisfactory result. Many people voluntarily post hundreds of photos and videos of their faces on social networks. That is enough to train the neural network.

Well-known porn actresses have spoken out against the use of this program and the distribution of fake videos. For example, actress Grace Evangeline said that the industry has always had one important rule: consent. In this case, videos with celebrities are made without their consent, which is wrong. Not to mention that the body of another actress is also used without her consent.

Specialists in machine vision and artificial intelligence believe that faking videos and photographs raises serious ethical issues, and that a broad public discussion of the topic is therefore needed. Everyone should know how easy it is to fake a video of someone. Previously this was possible only for professionals with powerful resources. Now any lone programmer can do it.

Perhaps in the future legislation will need to be amended to protect the integrity not only of a person’s physical body but also of their digital identity and virtual image. Liability may also be introduced for violent acts against a person’s virtual image committed without that person’s consent.

FakeApp 2.1 (torrent), 1.6 GB

Should a person be legally responsible for violence against another person’s avatar?

- Yes, if they posted the video: 38.9% (331 votes)

- Yes, in any case: 6.5% (56 votes)

- No: 54.4% (463 votes)