Forward to the past. TNT - Explosive disclosure

... As long as the operating room is busy again four days out of five, with pieces of hardware, swearing and screenshots flying out of it in large numbers every day, I can say there is already some material, which I will try to assemble below in a digestible form.

Let's start with what I would like to start. With an explanation of the reasons and objectives of this post. The post, as it were, reports three very important points:

Now about the goals.

Verily, let’s start by recalling (without quotes and proofs in the name of peace) what were the reviews of early hardware-3D on PC in the late 90s and early 2000s ...

And don't tell me about our economy and 15-inch CRT monitors. By that time, if not LCDs, then certainly flat-screen CRTs with support for decent resolutions were already on sale! The potential was high. And potency, they say, is an important parameter. So here we measure it.

Back in the month of March, all this finally did not allow me to live in peace, because "how is it that I was 15 years late ???". Well, I decided to hand over a couple of reviews for your unpopular analysis, on a chip for each.

But what is here?

What there will not be is tinkering with the PC itself: memory upgrades, CPU overclocking and similar moves. I built the top configuration of its generation, so there is no point in overclocking or upgrading this machine.

What there will be is plenty of detailed stops at each board and its features: monitoring, comparisons across APIs, with different tweaks and overclocking. Analysis of input data, modeling a discrete random variable over twenty-six iterations, calculating the variance, plotting an integral function that accurately describes the resulting picture, subjective and analytical assessments. In short, a thoroughly pedantic approach that reviewers have for some reason always neglected.

ATTENTION, Config:

MB: Gigabyte 6vtxe (Apollo Pro133T chipset)

CPU: Pentium 3-S 1.4Ghz / 512Kb / 133FSB

RAM: 3x256Mb PC133

HDD: healthy UDMA-5 WD with a decent 2MB cache and 5400RPM

WinME

Video:

Why WinME? Well, flash drives work right away, and it does not blue-screen with 768 MB of memory ... Not enough? Here is what else made me fall in love with WinME:

After that I chose more carefully. And even then, monitoring in D3D did not really work ...

Now. To test on a good game, I chose Deus Ex. There are several reasons for this.

Firstly, it is an unfulfilled childhood game, out of reach back then because of its system requirements. My brother and I played it on and off for a month. I have very vague memories of sleep lost to "another 5 minutes" that lasted until 2 am. So vague that during a recent playthrough it turned out I had gotten quite far back then ... I have long wanted to play through Deus Ex without slowdowns at maximum settings (!!! so that the level of detail is downright shocking !!!), so why not?

Secondly, and seriously: the game runs on Unreal Engine 1. The power this engine offered back in 1998:

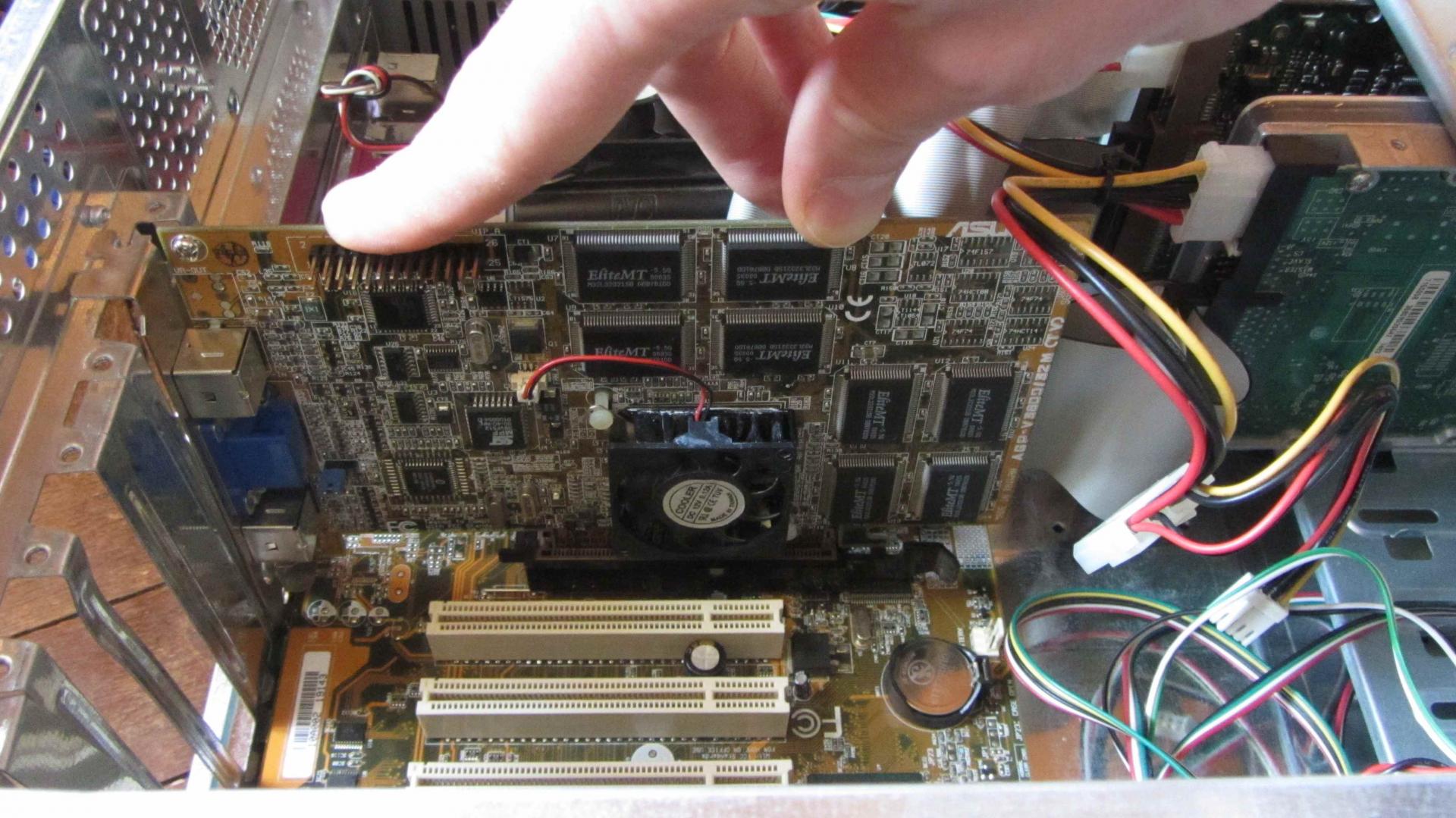

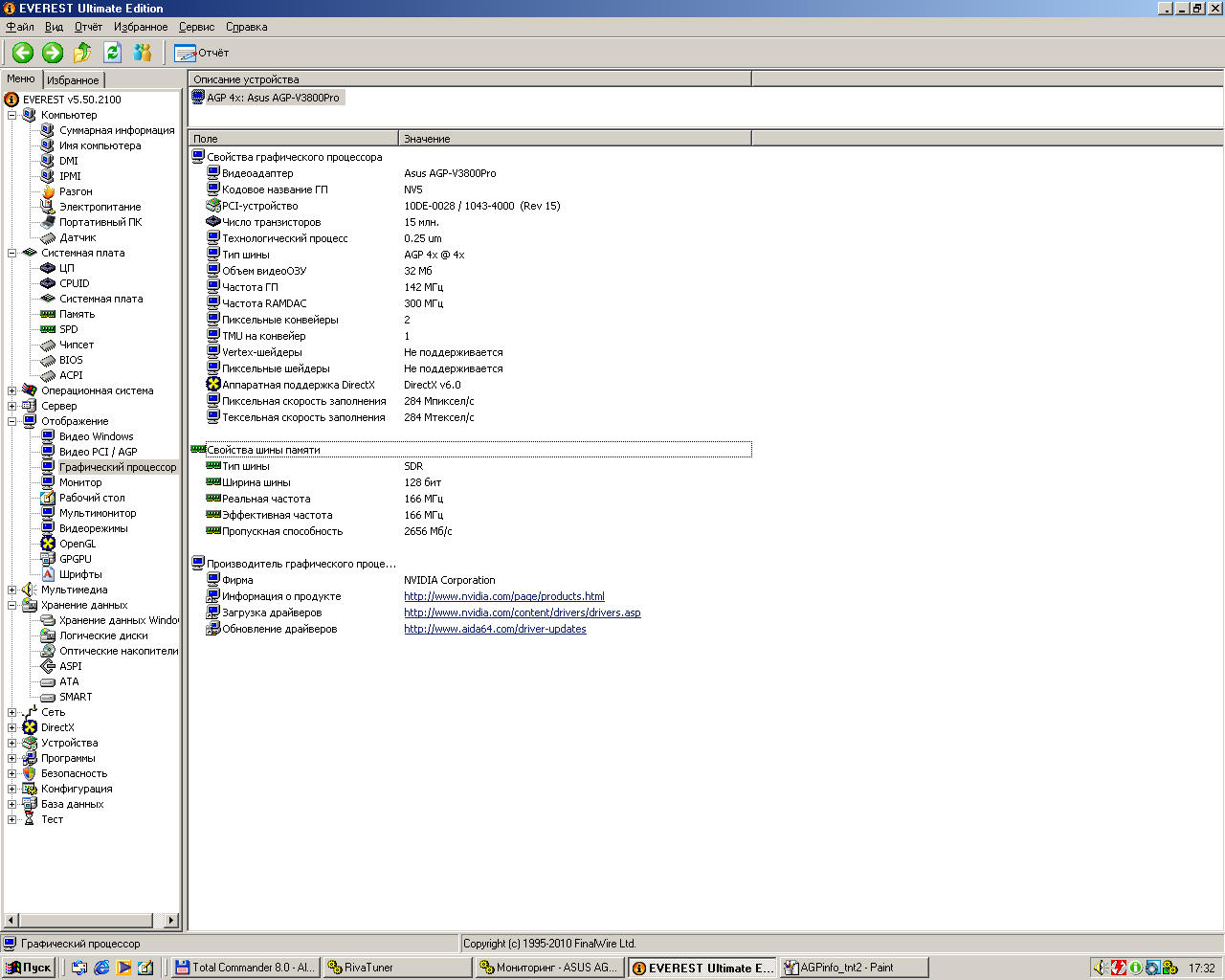

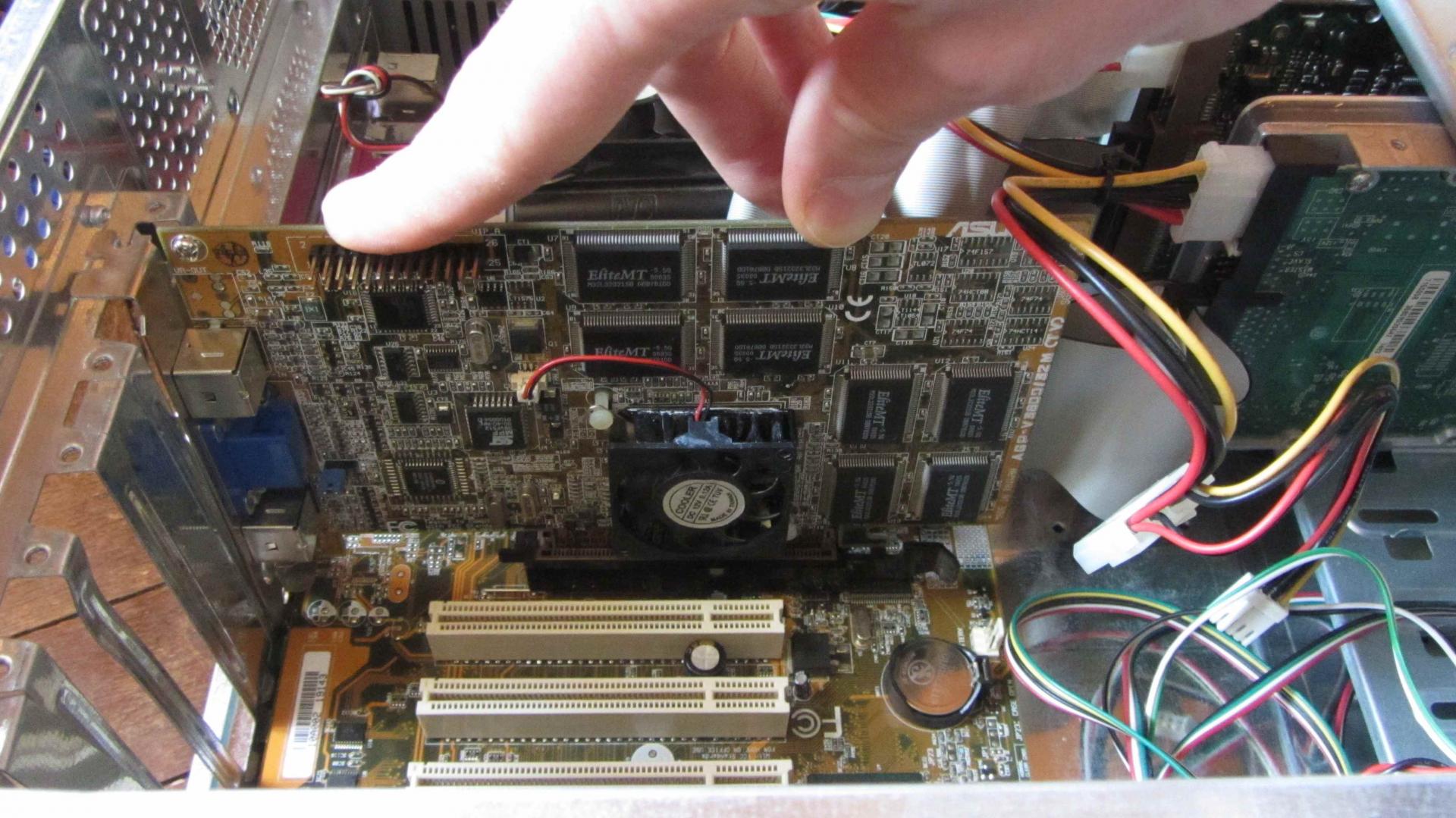

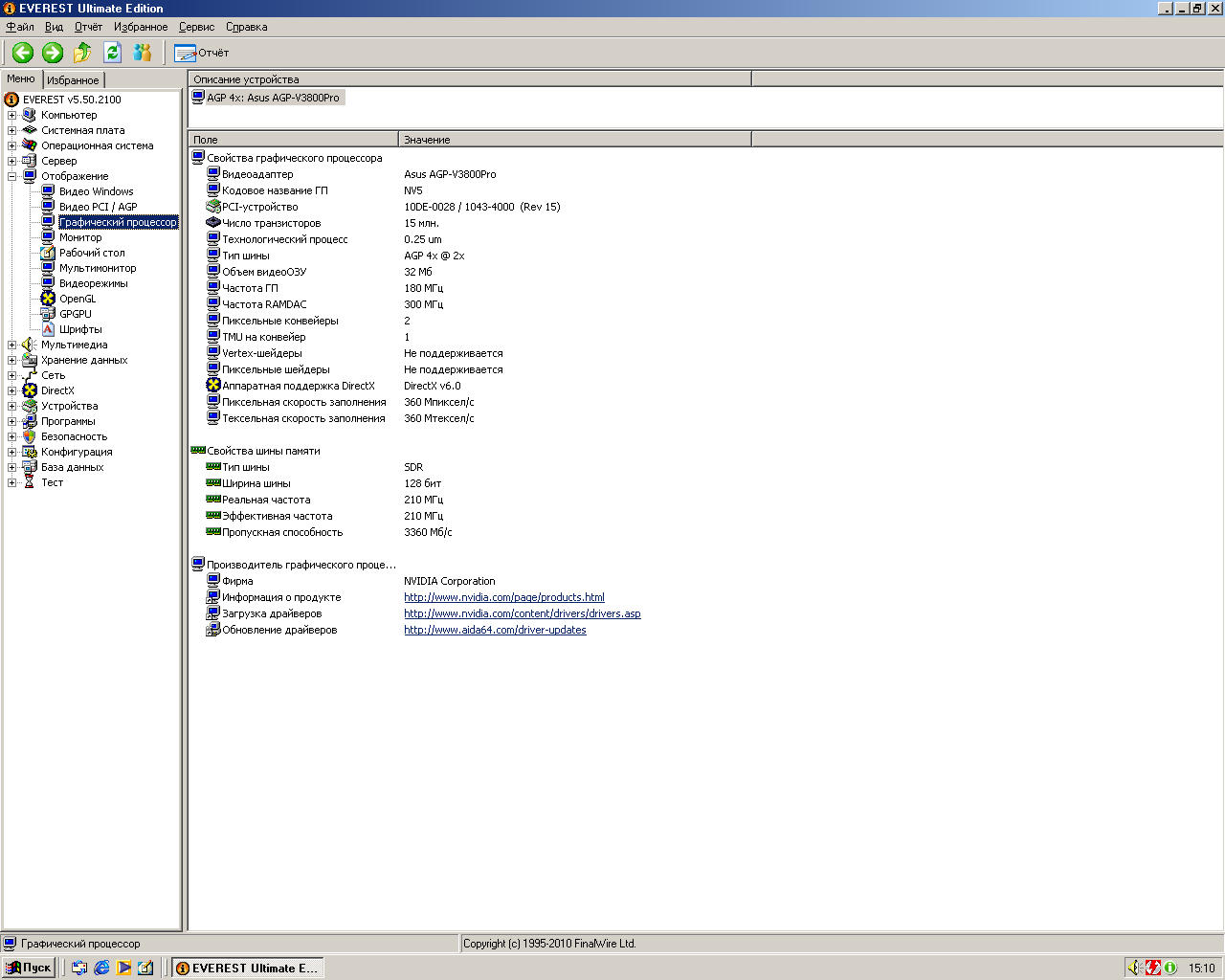

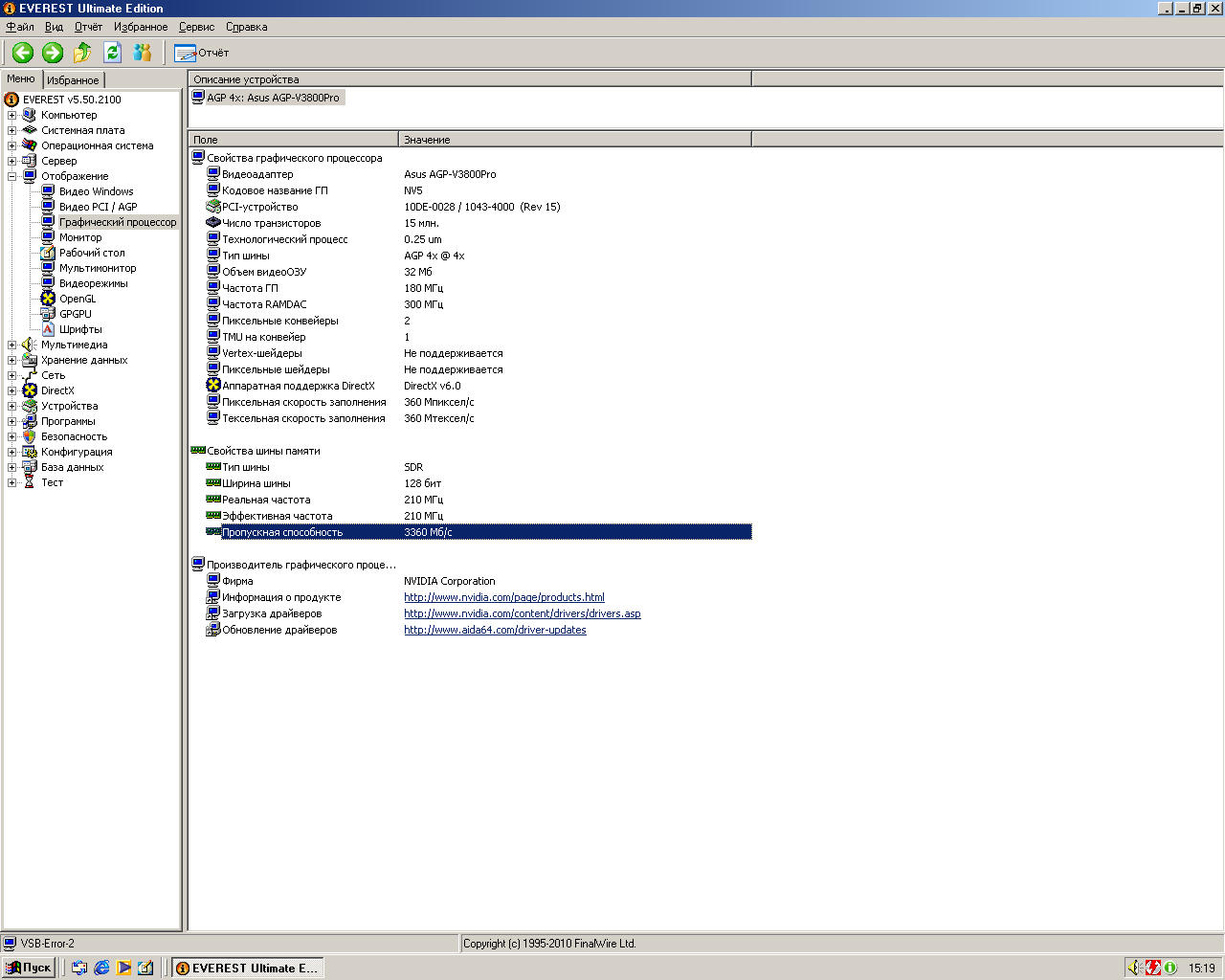

Here is the same legendary Kriva, the 3dfx killer (represented by ASUS V3800PRO). This statement seemed doubtful, therefore, we will begin with it.

It supports AGP4x but, by the way, not sideband addressing. Well, now is the time to check whether that matters or not ;)

... Let's put the rest of the chips like the feature connector and the TV tuner ...

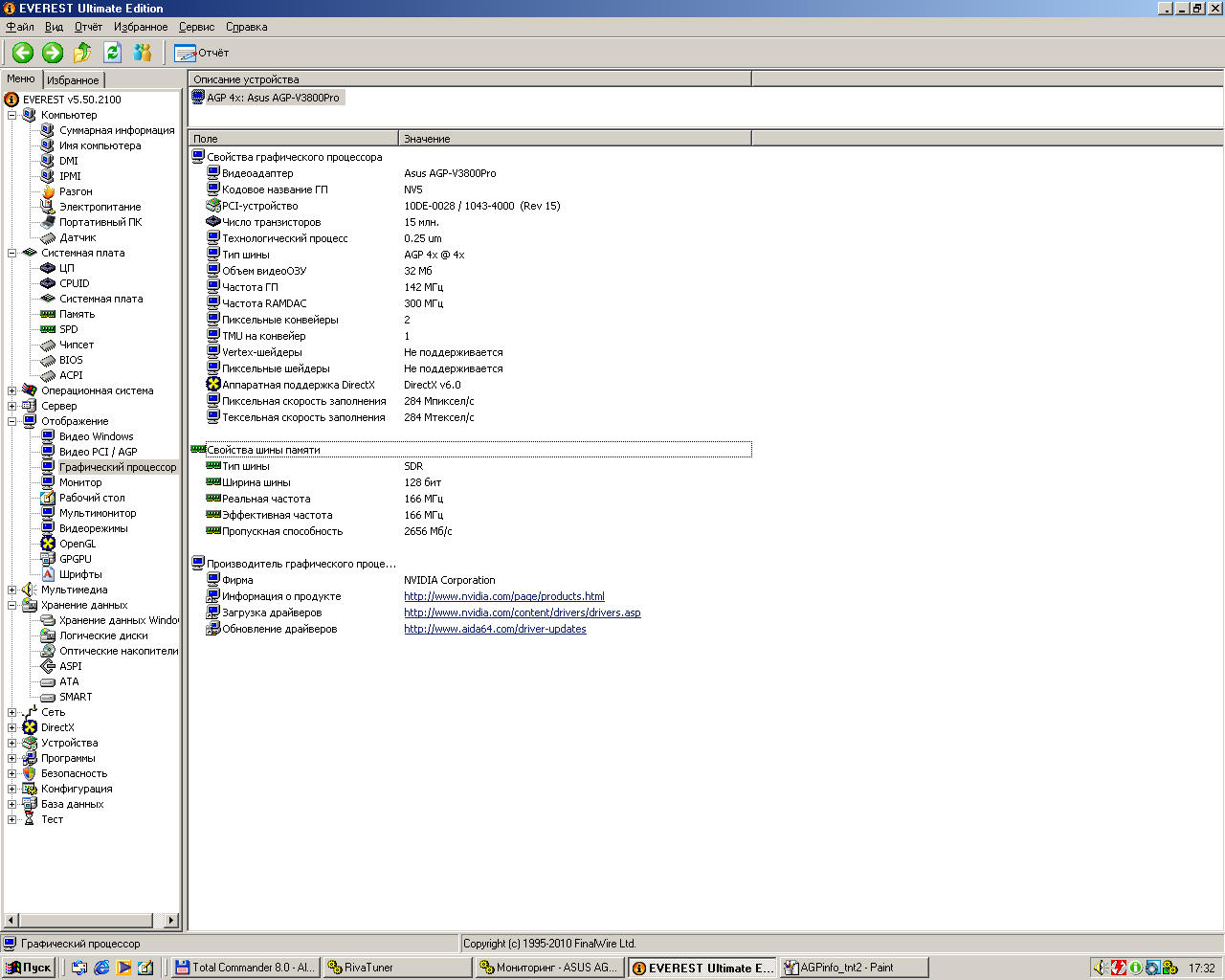

The card is built on the RIVA TNT2 PRO chip, born in 1999.

TNT stands for TwiN Texel. It was the first single (!) desktop chip able to apply two textures per clock. And this one is already the TNT2.

This card (PRO) is essentially a factory-overclocked version of the standard TNT2 (there was also an ULTRA in the lineup, essentially a factory-overclocked PRO). It runs at 142/166 (core/memory).

By the way, this is that very card on which you had to choose between honest trilinear filtering and multitexturing. Scary already ...

However, what does all this really mean and how to look at it in practice? We’ll deal with this.

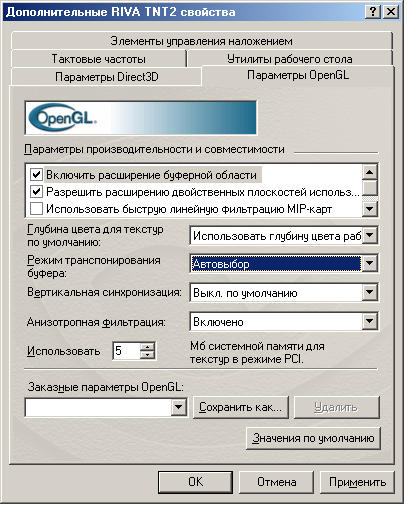

The drivers built into WinME are very (!) old: even Quake3 will not start with them, complaining that your OpenGL is outdated or simply missing.

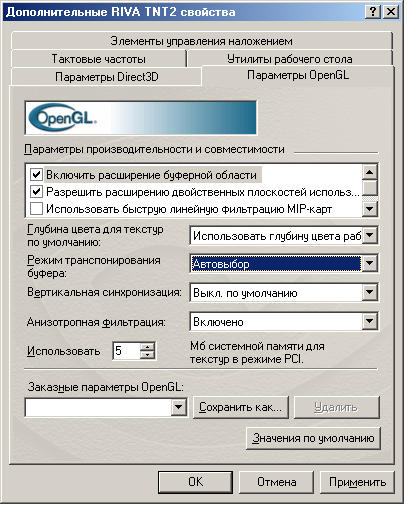

The Asus drivers for the TNT2 are no good. Just look at the abundance of options that any schoolkid will surely understand, such as Buffer extension or Transon ... trapson ... damn ...

OMFG, poor people! But there are also ForceWare drivers from 2005 (!), already with D3D9 support. With them, Deus Ex is one wonderful artifact. Now it becomes clear why owners of the card were so eager to swap it for a Voodoo: it seems nobody wrote decent drivers for it in its entire history.

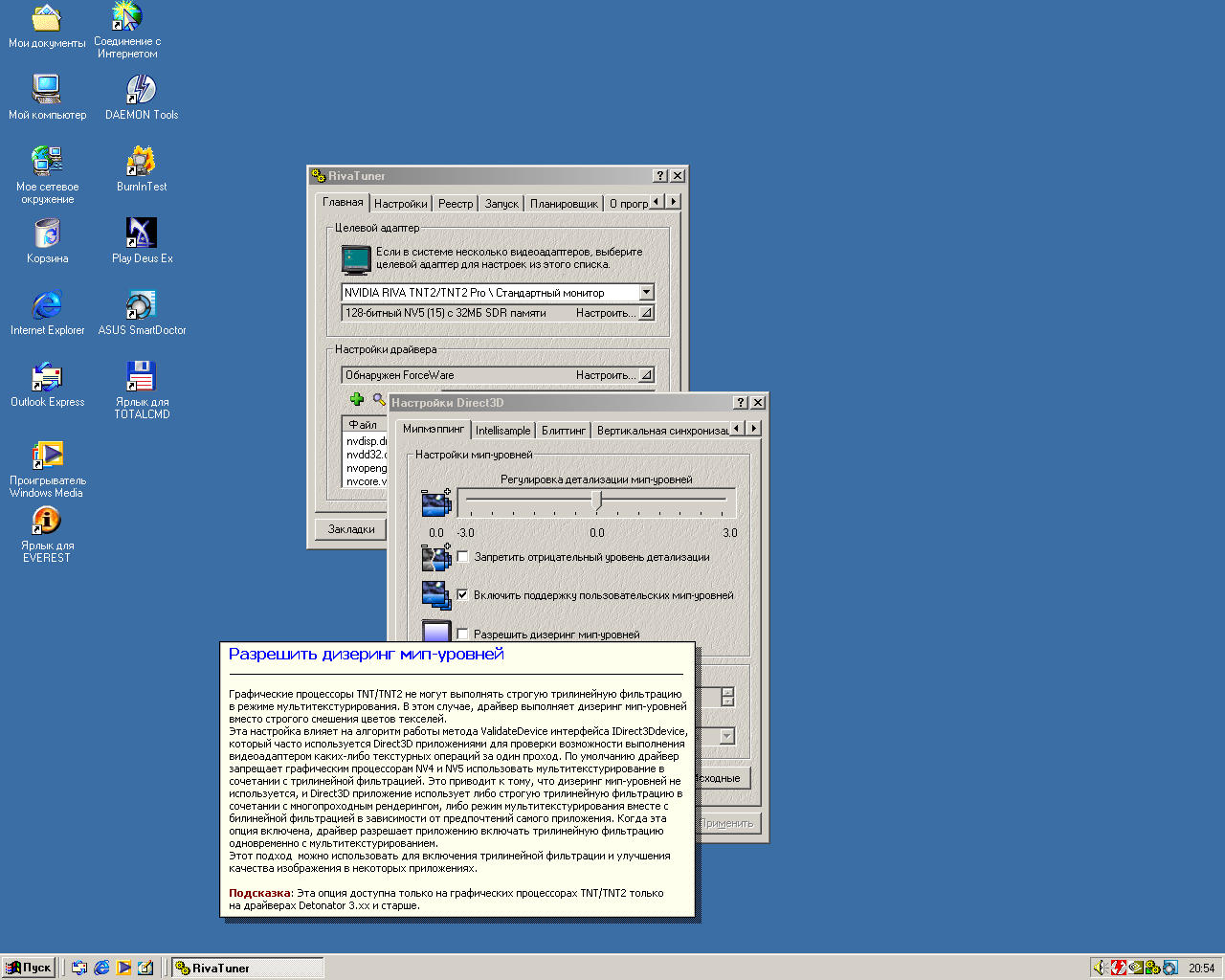

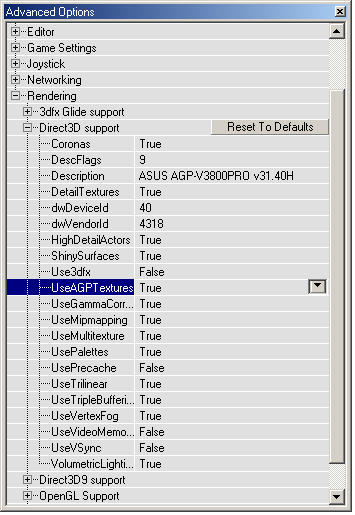

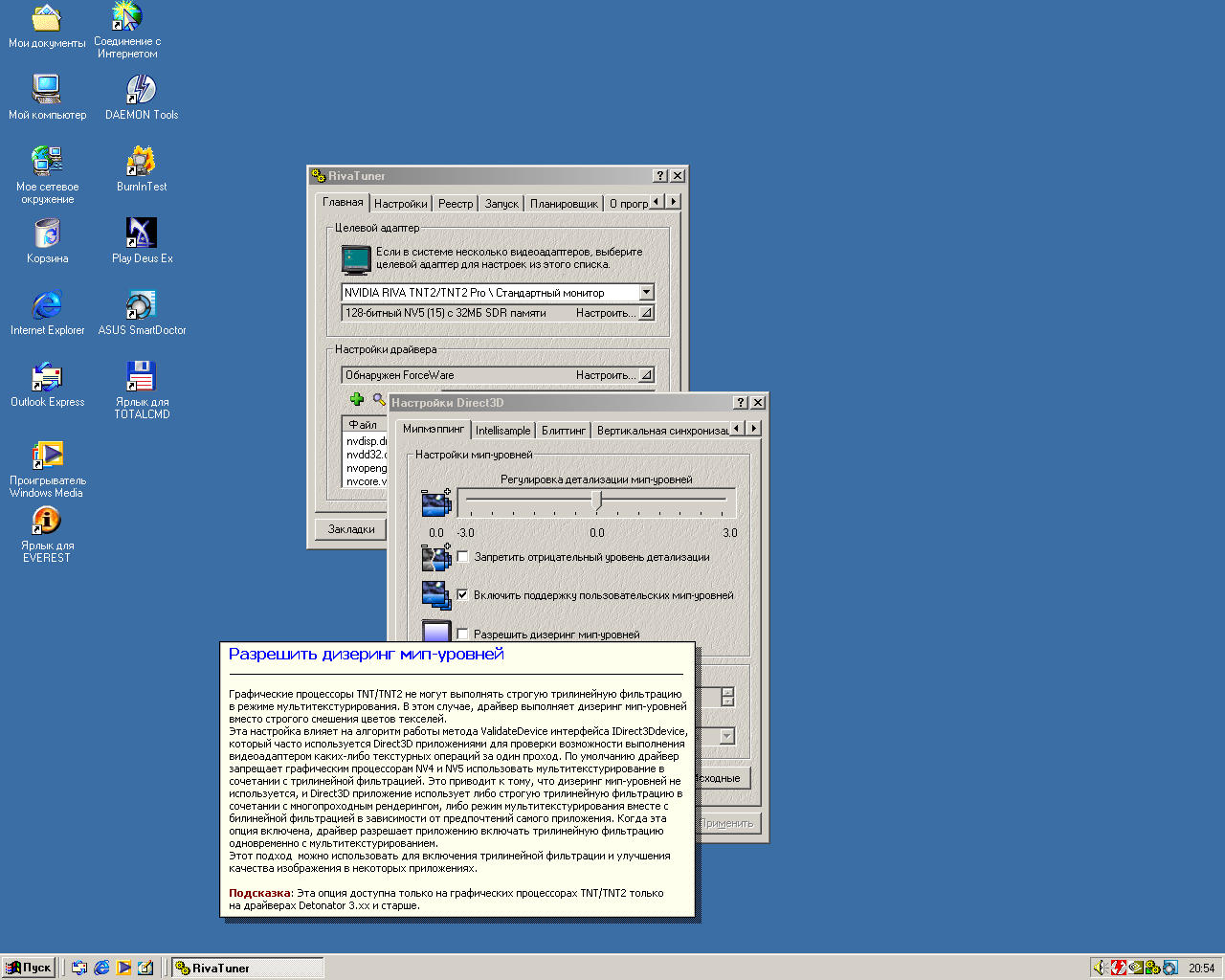

And there is also the famous utility written by our compatriot Unwinder: RivaTuner.

I must say, a worthy addition to the lineup. It took me probably a couple of days to finally understand how to use the utility. Even so, the abundance of tabs and hidden registry features is still a mystery to me ... In any case, I got the main thing: low-level overclocking of the card.

NOTE Config:

MB: Gigabyte 6vtxe (Apollo Pro133T chipset)

CPU: Pentium 3-S 1.4Ghz / 512Kb / 133FSB

RAM: 3x256Mb PC133

HDD: healthy UDMA-5 WD with decent 2MB cache and 5400RPM

WinME

Video: Riva TNT2 PRO

IMPORTANT :

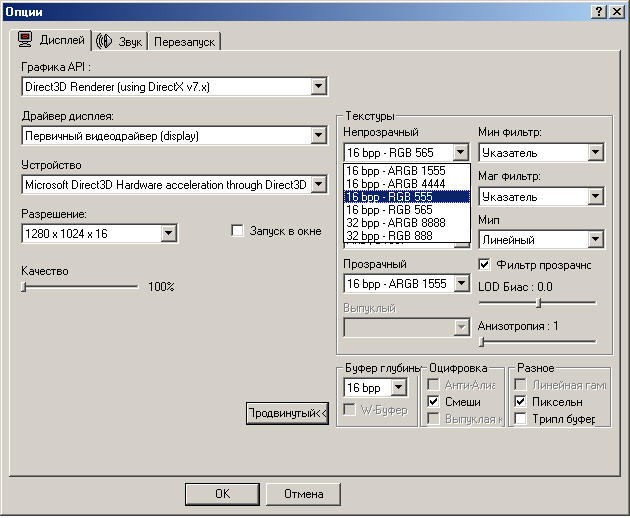

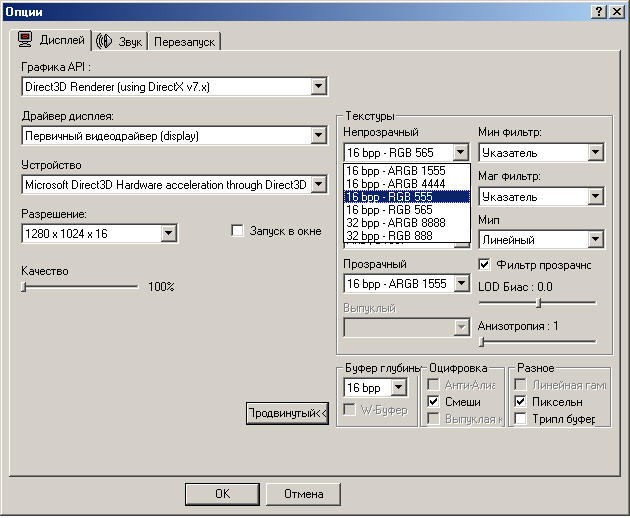

All graphics settings in the game go to the maximum !!! Roughly speaking: if something beautiful and/or useful can be enabled, we enable it.

We immediately overclock the card from the stock 142/166 almost to the Ultra level (180/210). Why not? In practice, the overclock gives 5-7 extra frames. Well, that is not bad either. I did not try pushing it higher; I am sure artifacts would start appearing.

The in-game resolution for this card was 1280x1024. Anything lower is simply not interesting to me (or was not at the time of writing this paragraph). Anything higher is beyond its reach: even at this resolution it shows fewer than 30 frames almost everywhere.

So, let's go on technology.

The card is capable of Direct3D and OpenGL as standard.

Also let me remind you that at that time in every second game a 32-bit color was slowly introduced and offered. So there was a choice between 16-fast and 32-slow.

Nvidia positioned the card as a powerful 32-bit pipeline. By the way, a competitor named 3dfx had only 16-bit color, and it fell in this battle. Went blind.

Well, let's look at the picture in different APIs, and at the same time try to find the difference between the 16-bit and 32-bit colors;)

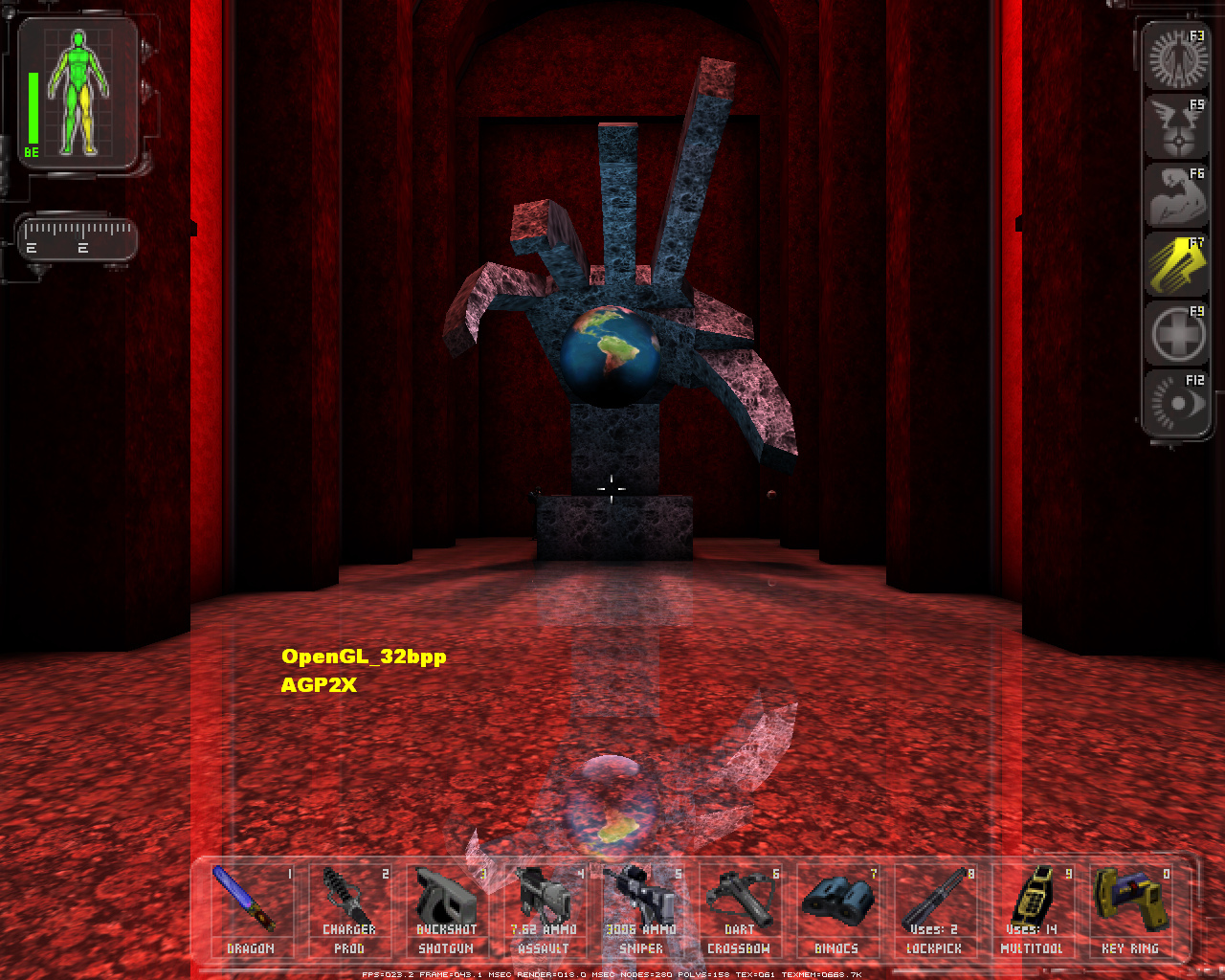

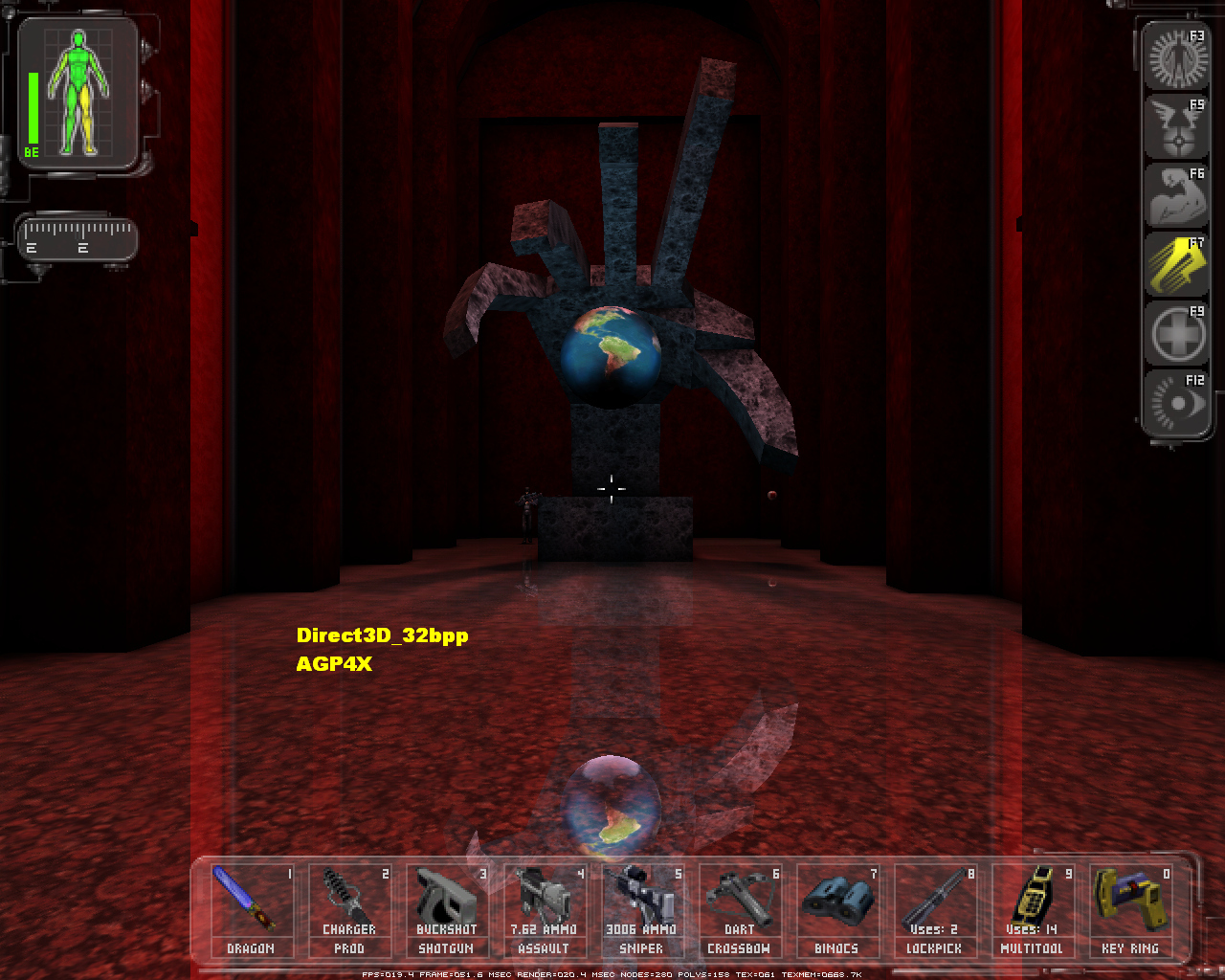

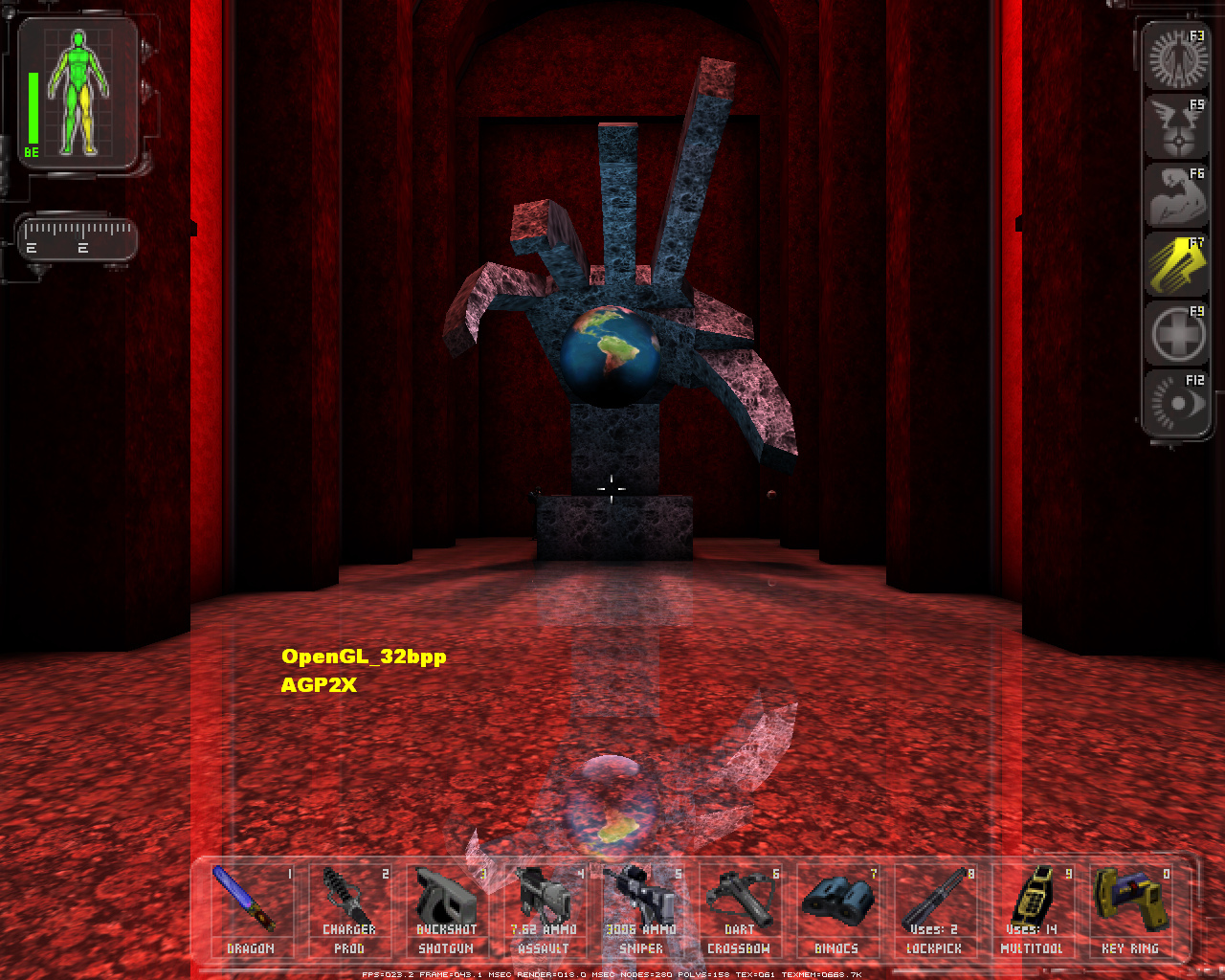

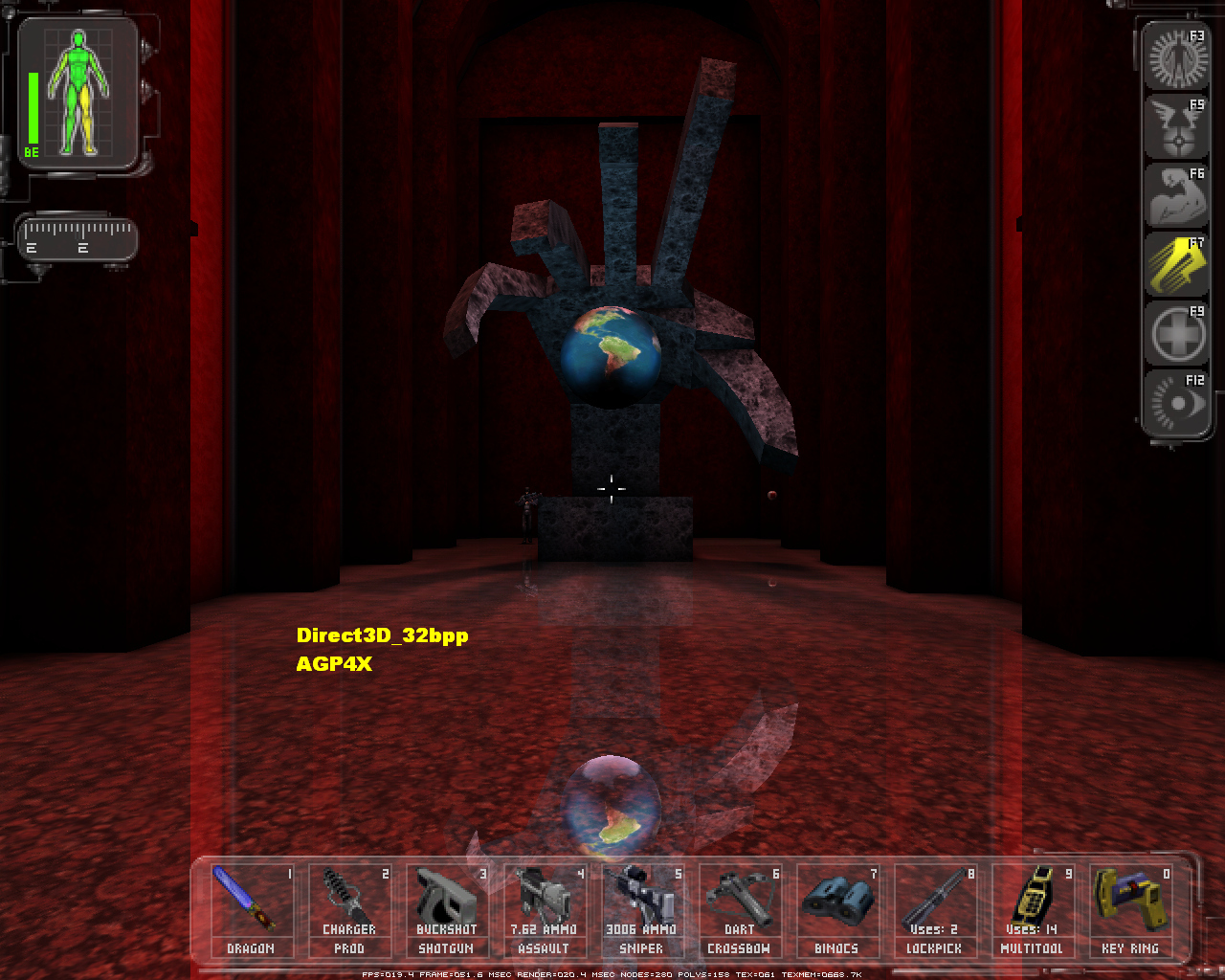

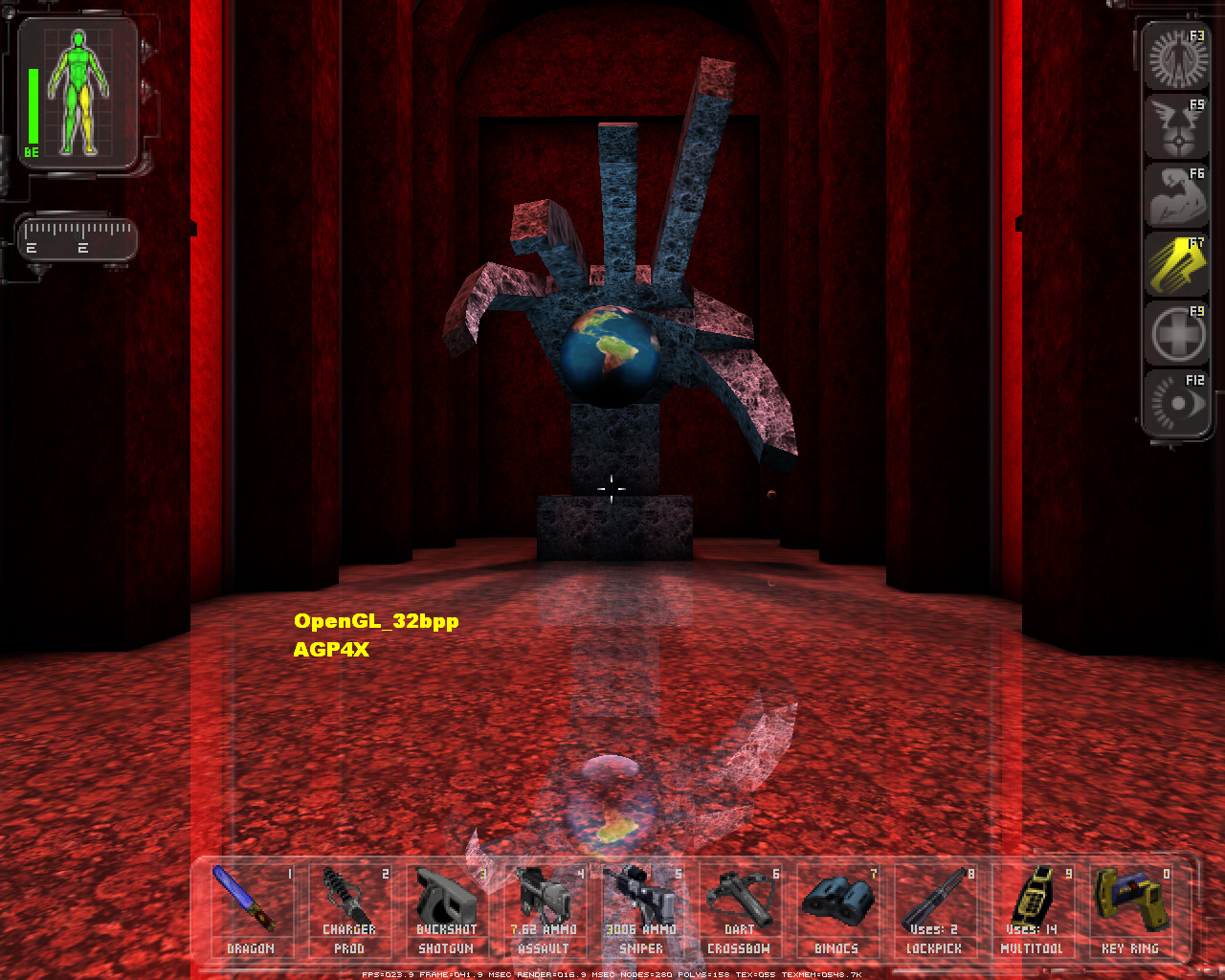

As promised, further there will be shots everywhere with monitoring and all things, where possible ; the sizes are original (the signatures on the bottom left are yellow - mine, I confess ...)

Well, what can I say ...

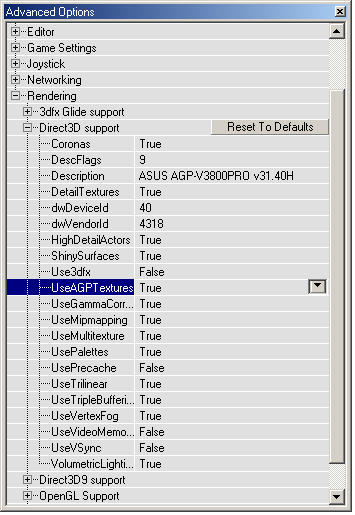

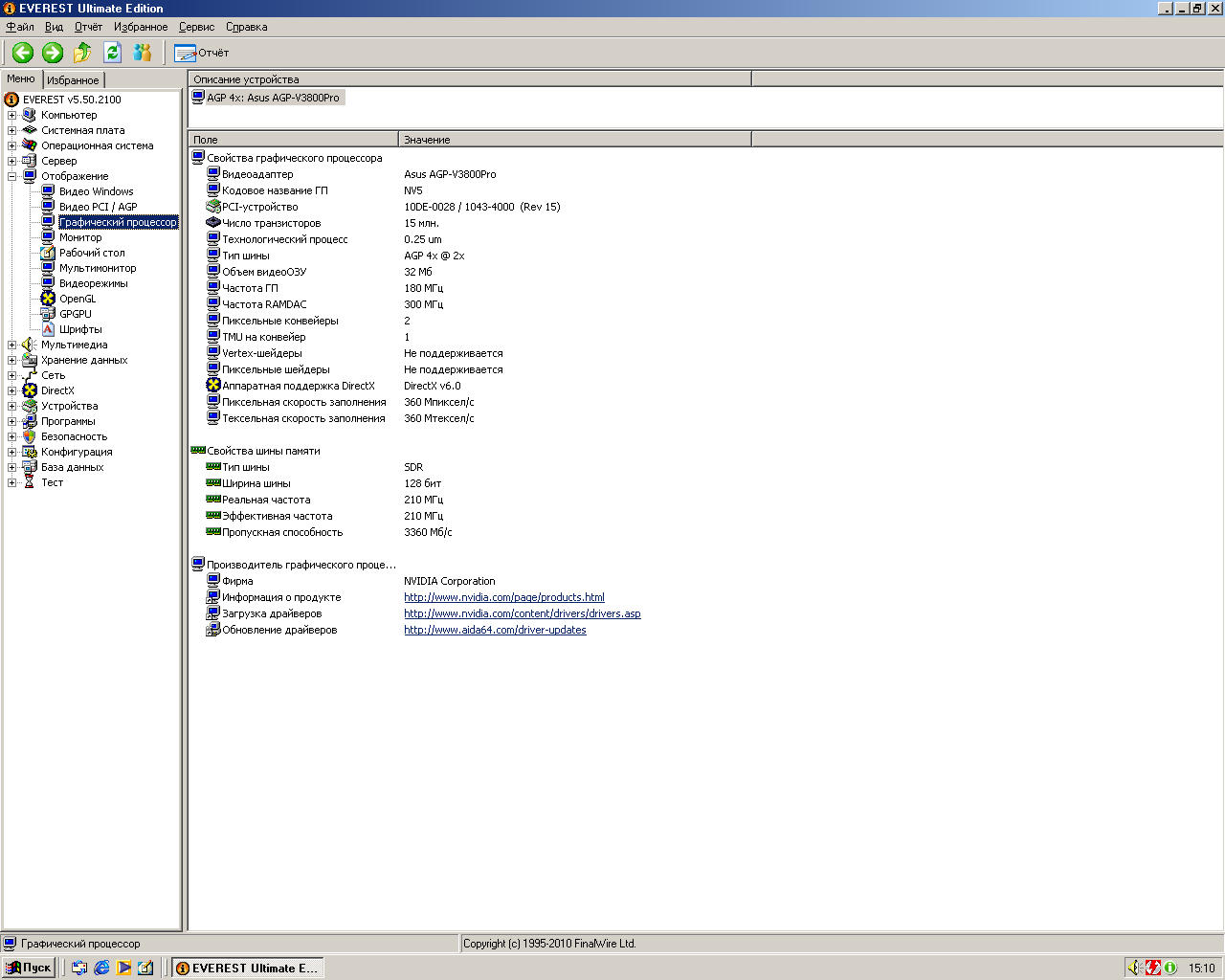

As already mentioned somewhere above, the card can do AGP4x. It was an innovation at the time. Does it give anything? We will find out right now, but first I will specifically show the Unreal engine settings for D3D (for OGL there is no option of interest to us at all).

As you can see, I turned on DiME. In our case this option will not affect anything: the crooked TNT2 did not yet use AGP texturing. According to rumors, the AGP aperture was used only as intermediate storage before textures were sent to local memory. This is a known flaw of the chip and is easy to google.

Well, fine, now you can begin to measure potency:

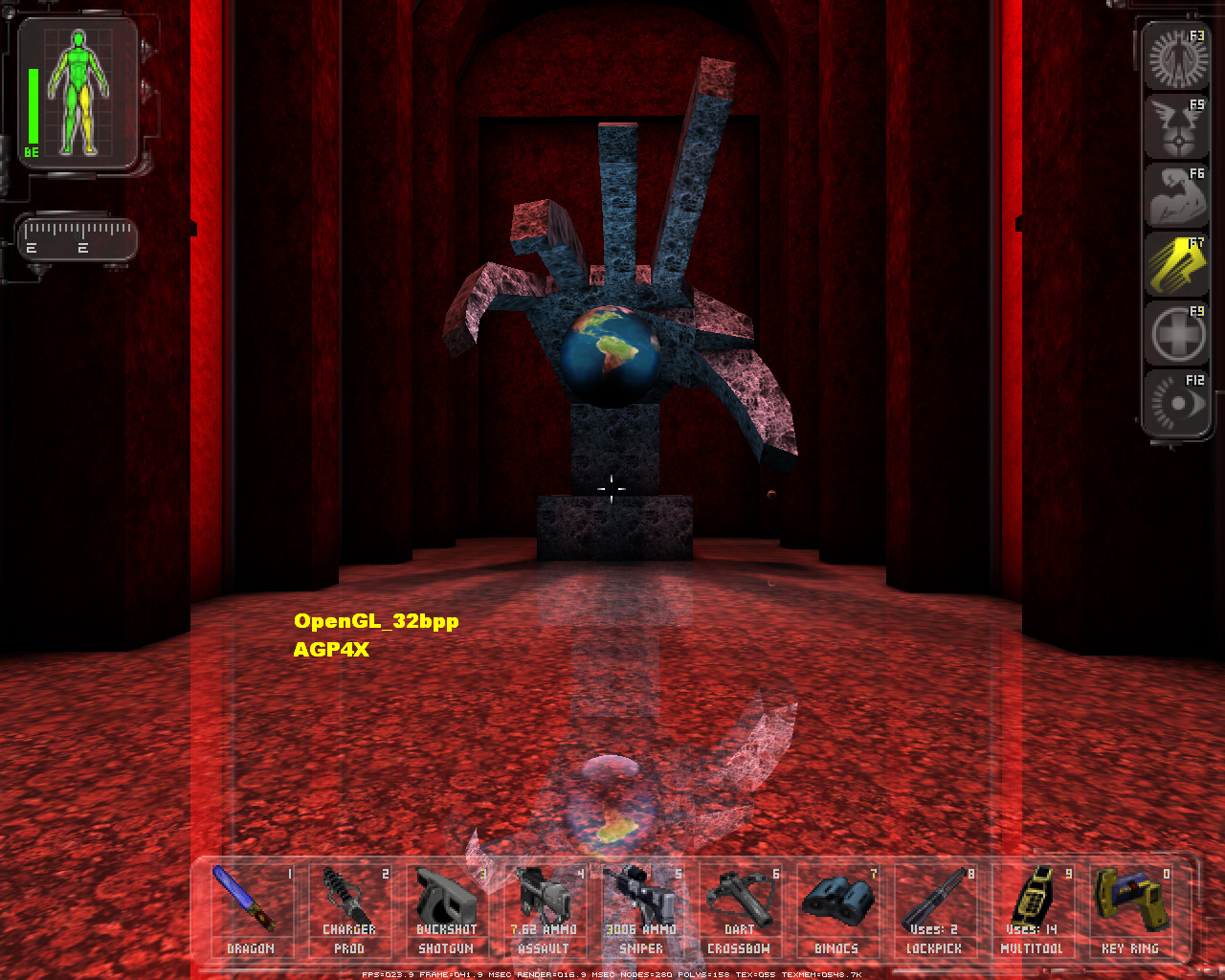

AGP2X

Let me remind you: !!! FPS is counted by the engine and shown at the bottom of the shot !!!

By the way, here you can see the difference between the APIs. Look at the reflection of the ball.

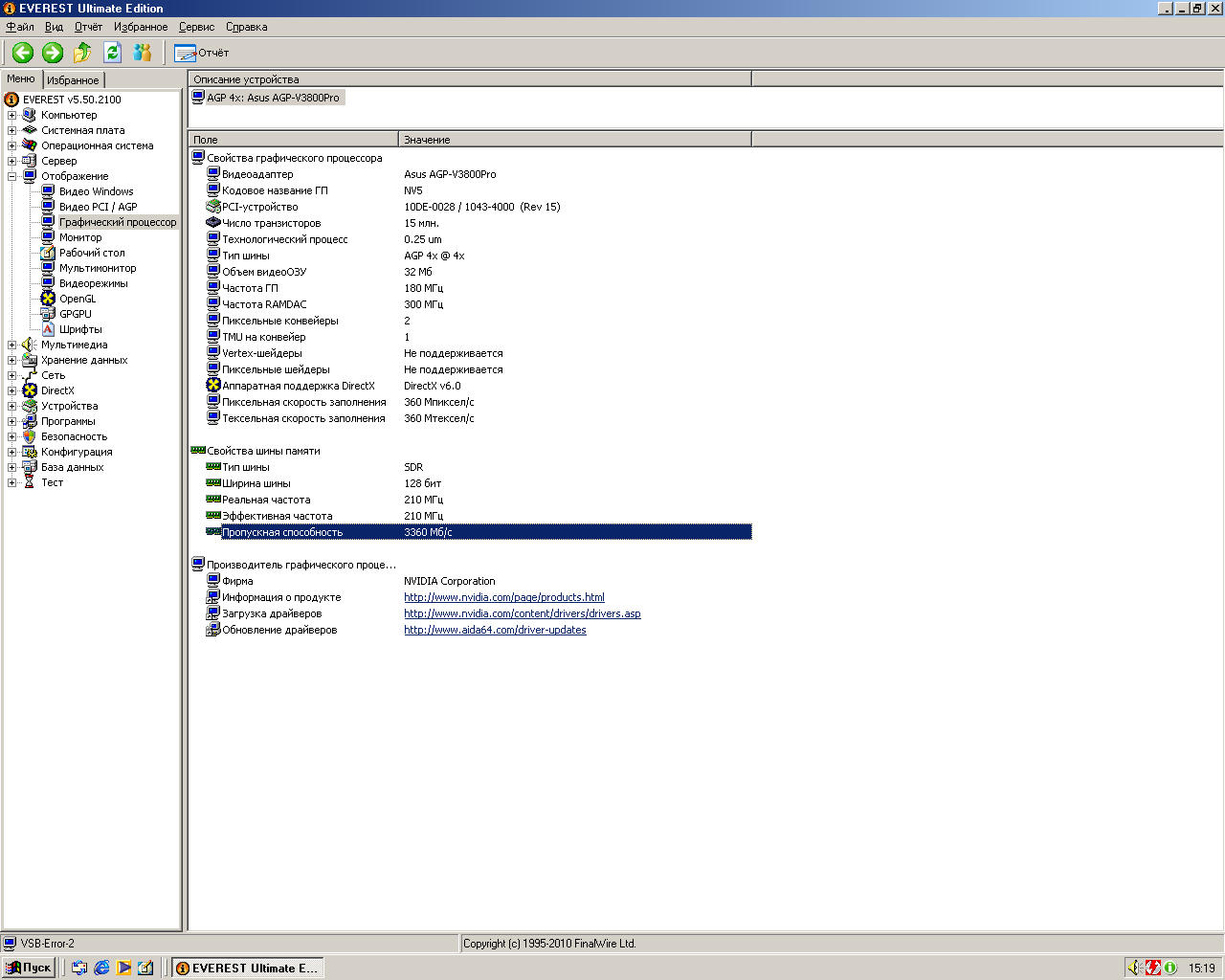

AGP4X

Note that AGP speed did not affect a single parameter !!! In practice:

Well, is it clear that the card does not deliver even 35 frames at AGP2x, and that moving to AGP4x changes nothing at all? I really doubt that a 1.4GHz server CPU, which, sorry, came out three years later, is the bottleneck. There is definitely enough memory. The FSB:DRAM ratio is 1:1, and I am sure the hard drive keeps up too.

I think the chip is simply choking and nothing can be done about it. Marketing will be marketing.

Now for the fun part. Here I can quote myself.

So, the wonderful UE1 engine lets you check all this right on the spot. But only in D3Dx: in OGL, multitexturing cannot be configured through the game options.

Check what? Well ... Trilinear filtering was first introduced in the TNT2. But the chip could not use it together with multitexturing. And it had to be there, because of the competitors! So it was nominally present, but worked in one of two modes: real trilinear filtering without multitexturing, or the cheaper mip-map dithering ("approximation", as it was incorrectly called then) with multitexturing.

To begin with, I will try to explain what trilinear filtering is in general (perhaps not too successfully) ...

Trilinear filtering is a development of bilinear filtering. It matters only for flat surfaces that recede from the player and sit next to each other. A good example is a floor or wall made of identical tiles laid side by side. As they recede from the player, the tiles should shrink (this is called "mipmapping", by the way), and they do, but the reduction produces a sharp visible transition between tiles, and the uniformity of the overall picture is lost. Trilinear filtering is meant to fix this. Roughly speaking, it averages the pixels at the boundary to a common color value ...

So. This is real. And what does mip-map dithering do then?

Explanation of mip-map dithering with an example of voodoo5:

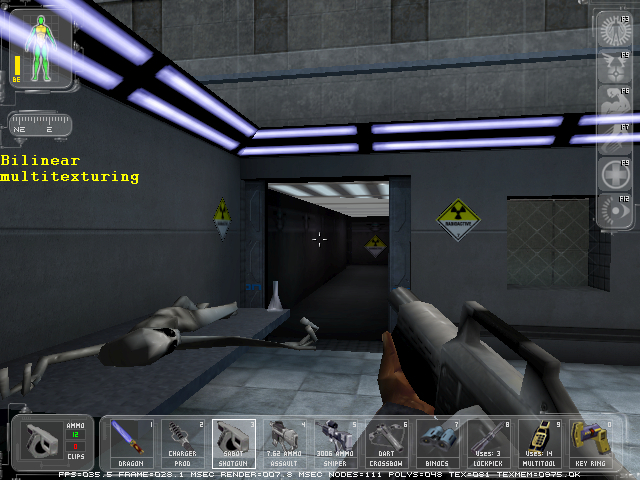

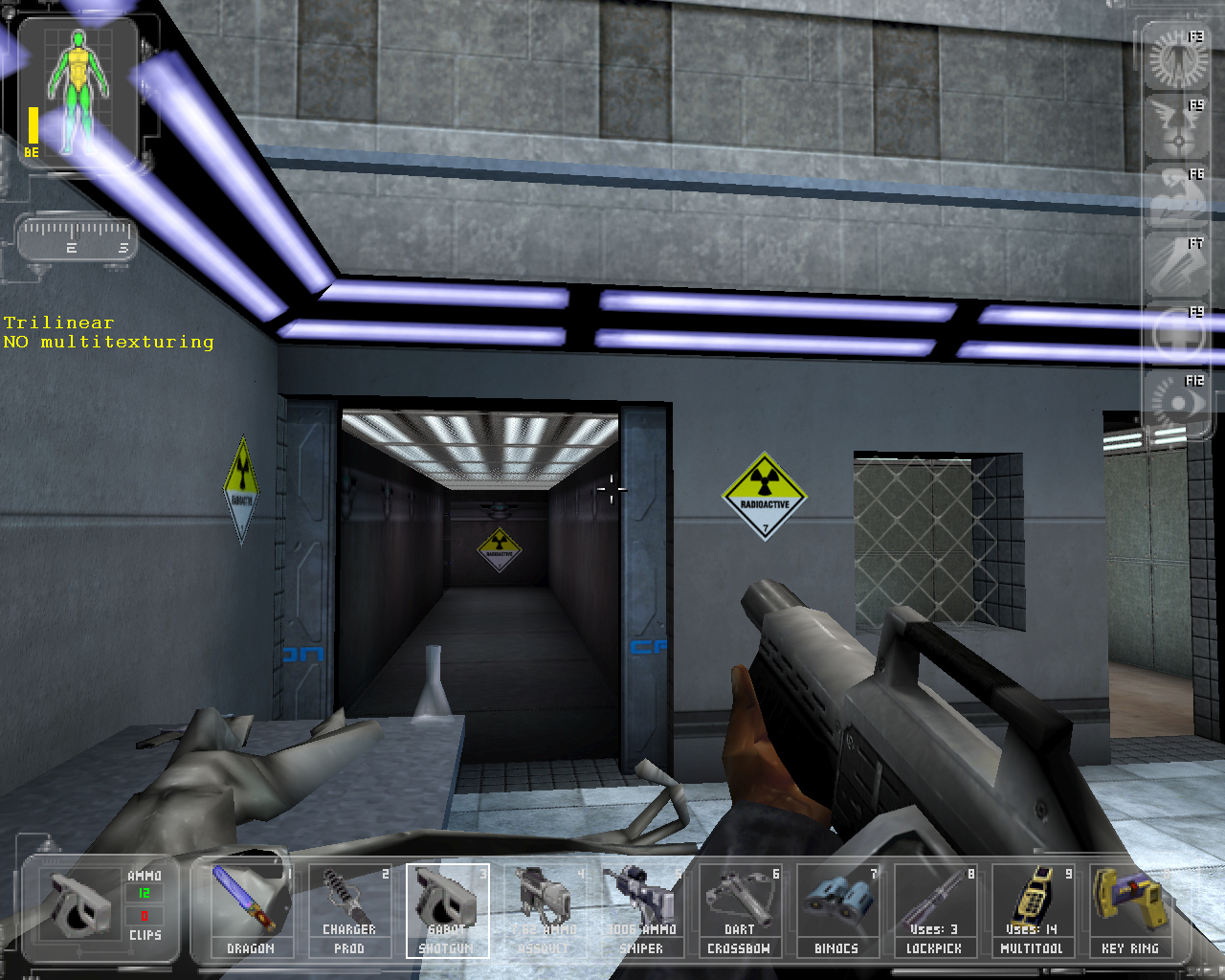

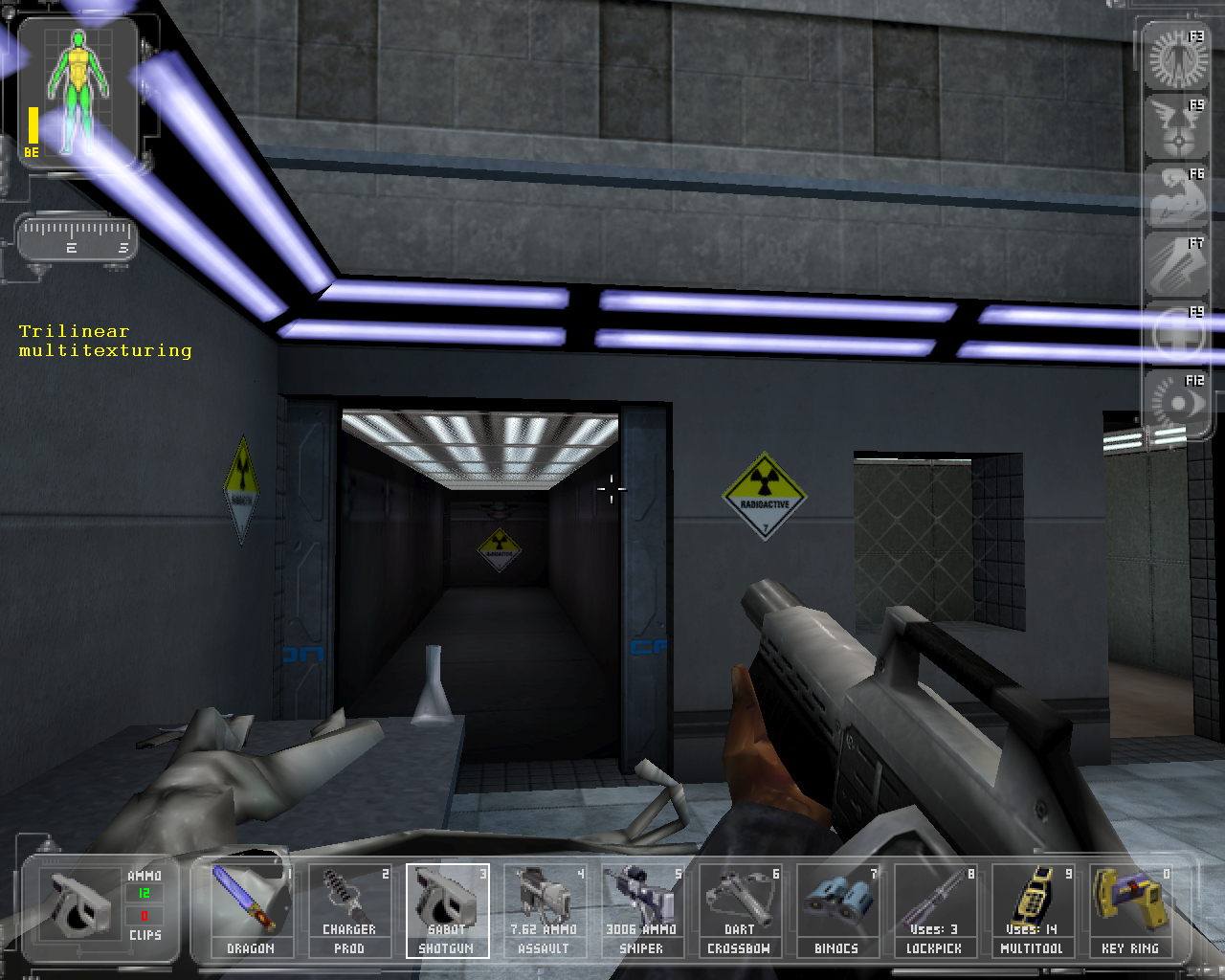

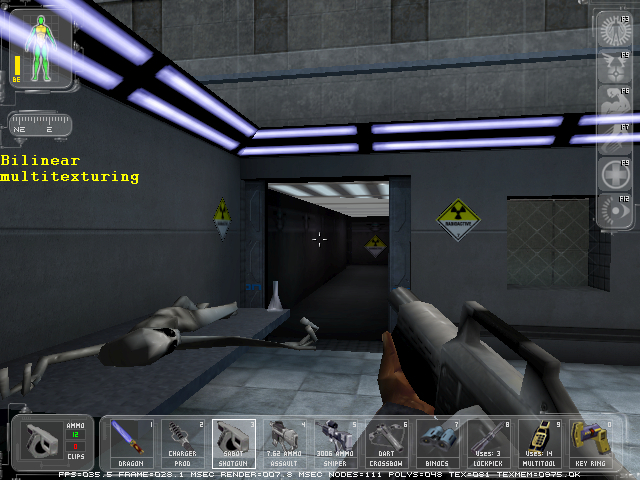

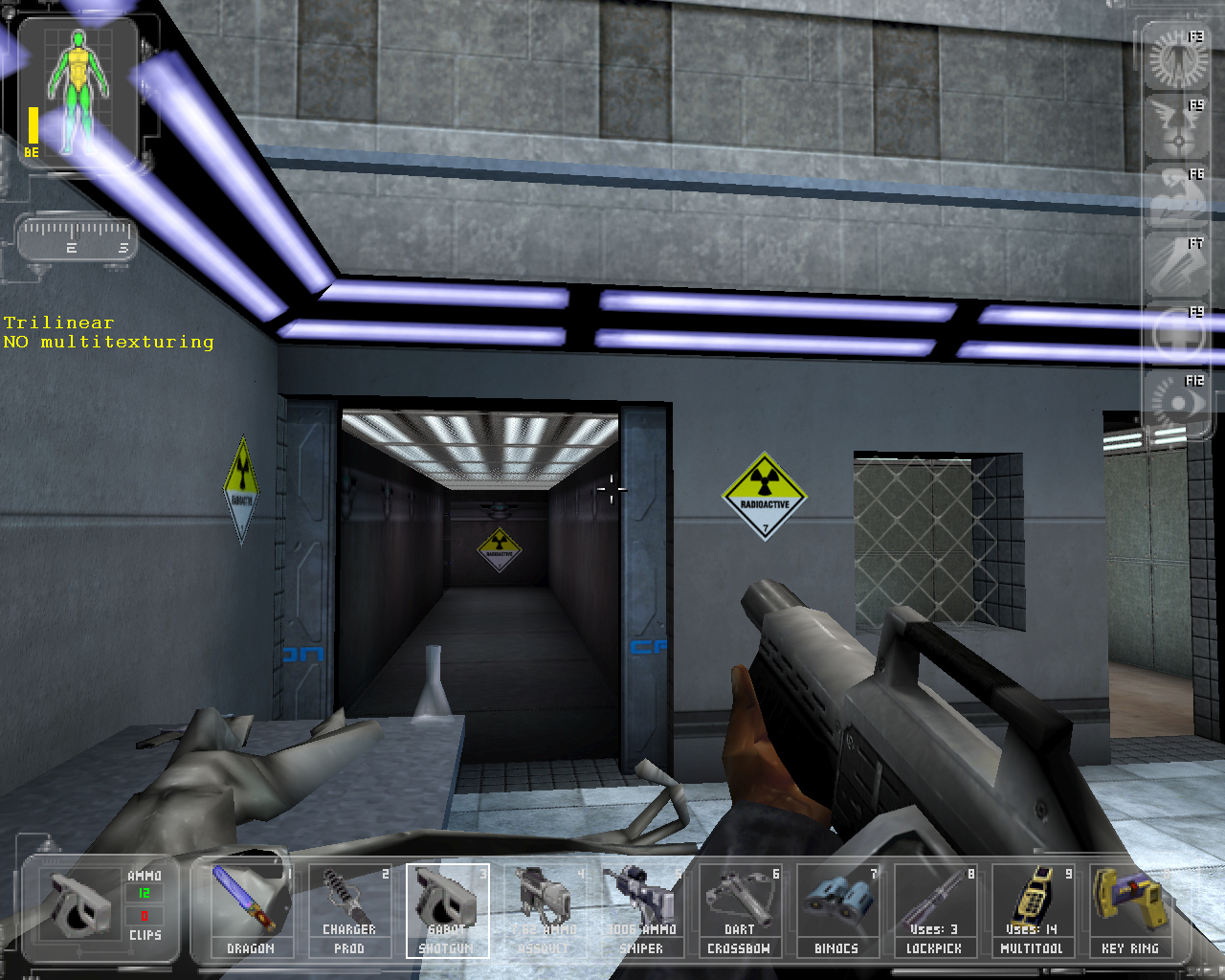

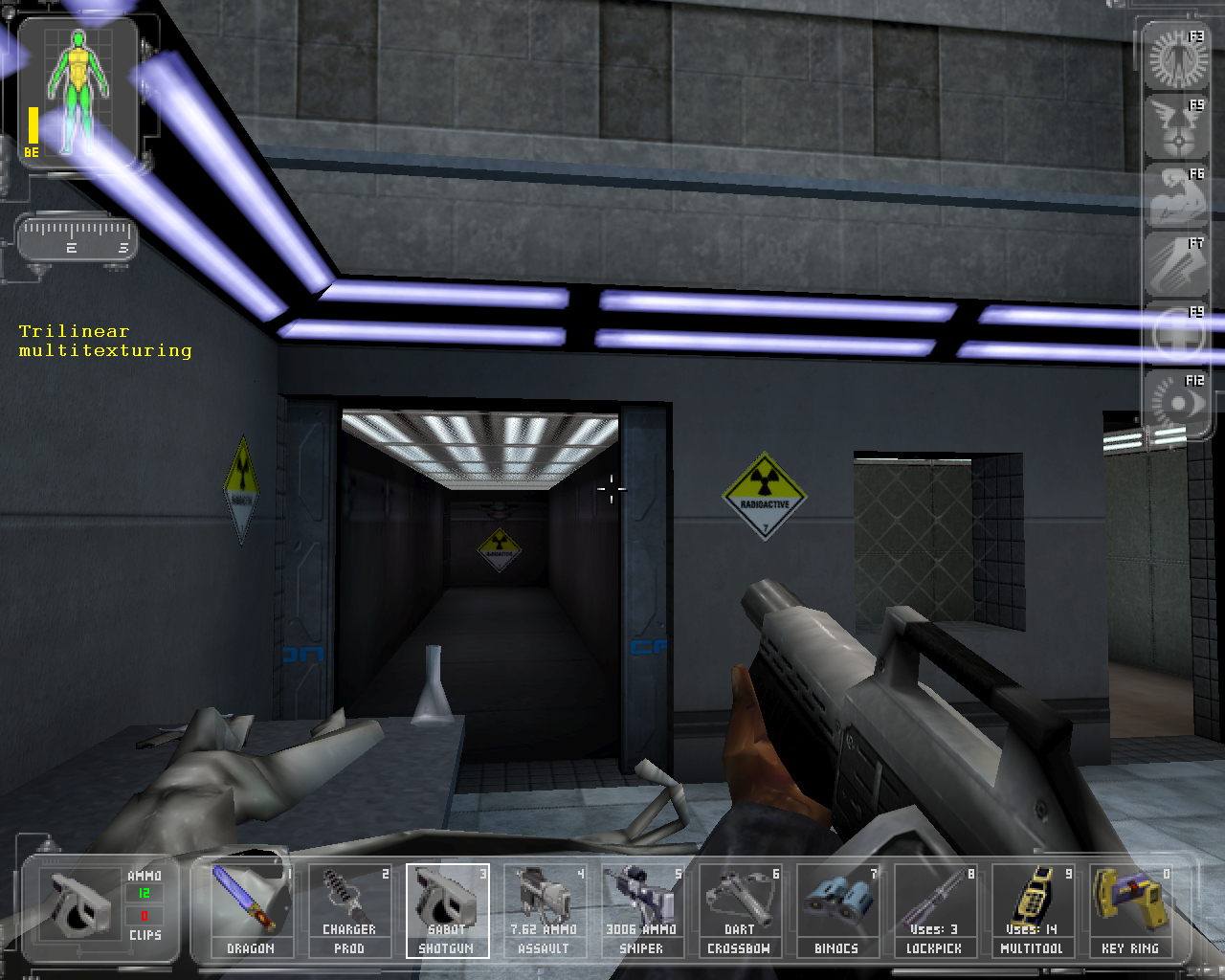

Okay, fine, I will show you better shots, which means following in the footsteps of the reviewers of the mid-90s. Set 640x480. Now everyone look at the smoothing of the lamps on the ceiling in the corridor and of the Radioactive sign to the left of the passage !!!

Still do not see the difference? It is there; you just need to stretch these 640x480 shots across your 20+ inch monitor, and justice will prevail (every browser today can zoom). Naturally, on a high-resolution screen the small pictures all look the same ...

If anyone did stretch them, they noticed that with multitexturing turned on:

Back to 1280x1024.

Well, here you can see better how noisy mip-map dithering is. Horror, right? Honestly, had I not been told, I would never have noticed. In my opinion, the chip got its "crooked" nickname undeservedly. It is not its fault that you were playing at 640x480 in 1998, right?

Although hunting for mip-map dithering flaws with a magnifying glass among the squares of 640x480 seems idiotic to me, for those special cases where the damn checkering has to go, our compatriot Unwinder offers a special solution:

No further comments ...

Very respectable. 1280x1024x32bpp. 20-30fps overclocked. As for me, if the mouse starts to lag in the menu and the inventory in combat is drawn with a half-second delay, that can be waited out. What could not be waited out was, for example, 5-7 minutes on a Pentium 150 with a 2D video card in Heroes3, or Quake 2 in a copy room, where the rockets appeared late and the sound lagged by about a second. So 20-30 frames is already quite playable.

Do not like it and do not want to overclock? Well, play at 1024x768 or lower. Or in 16-bit color.

But still, of course, the Voodoo3 3000 was faster, albeit with only 22 bits. Pity about 3dfx, but it still left us something ...

During testing I ran into a monitoring problem. On the forum I came here from, I recently gave a brief outline of how to do monitoring on Win9x/ME. I invite everyone who understands and shares the idea to share their experience, because I have never found such information anywhere (not surprisingly).

... TBC ...

Let's start where I would like to start: with an explanation of the reasons and objectives of this post. The post, as it were, conveys three very important points:

Do not trust, but verify!

While you are young, you need to hit the hard place!

Take everything the hardware has (and do not give it back)!

Now about the goals.

Verily, let's start by recalling (without quotes and proofs, for the sake of peace) what reviews of early hardware 3D on the PC were like in the late 90s and early 2000s ...

- Exhaustive attention to the dearly beloved resolutions 800x600 and 1024x768. Does it not bother you that measuring, in '99, which card with all the newfangled features turned up will show 60 fps at 800x600 is a little strange? After all, these cards already supported high resolutions in 3D, and that is what should have been tested!

- A detailed review of the "2D component" (only the oscilloscope graphs were missing). So important when you have a CRT monitor!

- Coverage of the whole theory at the very least, even forecasts for the future, but no specifics!

- A bunch of beautiful Excel graphs with some outrageous numbers. Have you ever wondered what those numbers meant? Dollar amounts, I suppose, nothing else.

... Now it is all simple: you insert the video card, the drivers download themselves, you launch the game, it patches itself, you set up the graphics, turn on Fraps and off you go. What is there to say: only two APIs are still alive, and even then OGL is seen less and less in top games.

What was it like before? There were more vendors and more APIs, so comparing performance was rather more problematic: much harder and much longer. And back then nobody even thought of monitoring as such. Honestly, show me at least one review from those years where video cards are compared for performance and that also provides shots of some heavyweight game (for example, on the Unreal engine) with the results of live monitoring, at least basic fps and CPU usage.

Since November '95. There were none. Not a single review containing such shots. Shock, heart attack.

Back then hardly anybody seriously used even FRAPS. So everything was done by eye (well, almost).

- Well, and the sweet configs everything was tested on, do you remember them? Turning a blind eye to the motherboard (chipset), it was good if at least the CPU met the needs of the video card. But really, something should have been monitored in the background (free resources?).

Okay, hardware aside, where could you get an acceptable comparison of two cards from different vendors at all, if most often both cards were compared in ... well, offhand: 3DMark, Quake3, another_one_mass_ average_game_game.

For some reason nobody even remembered such giants as Unreal or Hitman. Yet these were top engines (which no card of the day could run properly) with a lot of settings, support for several APIs, and support for decent resolutions (above 1024x768).

And don't tell me about our economy and 15-inch CRT monitors. By that time, if not LCDs, then certainly flat-screen CRTs with support for decent resolutions were already on sale! The potential was high. And potency, they say, is an important parameter. So here we measure it.

Back in the month of March, all this finally did not allow me to live in peace, because "how is it that I was 15 years late ???". Well, I decided to hand over a couple of reviews for your unpopular analysis, on a chip for each.

But what is here?

What there will not be is tinkering with the PC itself: memory upgrades, CPU overclocking and similar moves. I built the top configuration of its generation, so there is no point in overclocking or upgrading this machine.

CPUs and motherboards are the most uninteresting components of an old PC, unless you want something unusual. What is the point of keeping nearly identical computers? They are not works of art but faceless, soulless boxes. Early 3D video and sound, now that is interesting. CPUs and motherboards are all the same and boring.

And there will be a lot of detailed stops on each board and its features: there will be monitoring, comparison in different api, with different tweaks and overclocking. Analysis of input data,

ATTENTION, Config:

MB: Gigabyte 6vtxe (Apollo Pro133T chipset)

CPU: Pentium 3-S 1.4Ghz / 512Kb / 133FSB

RAM: 3x256Mb PC133

HDD: healthy UDMA-5 WD with a decent 2MB cache and 5400RPM

WinME

Video:

Why WinME? Well, flash drives work right away, and it does not blue-screen with 768 MB of memory ... Not enough? Here is what else made me fall in love with WinME:

- Autorefresh is absent almost everywhere;

- Complete OS instability. If a full-screen process hangs, good luck killing it;

- But, by tradition, you can exit a blue screen by pressing any key.

- A conservative OS. Better not go online with it anymore: even the latest third-party browser will hardly understand JavaScript, let alone HTML5. But it boots like Windows 7 on an SSD. In general, the OS is made for productive work.

- The hardest quest is not the drivers at all. Try finding monitoring software that works in 3D. There is Everest, and it works in the background. But what do you use to display it in 3D? Yes, I had to study the question for a month or two. The best part was the first time I tried installing something:

- The application installed. I launch it. Please update Windows to a newer version!

After that I chose more carefully. And even then, monitoring in D3D did not really work ...

Now. To test on a good game, I chose Deus Ex. There are several reasons for this.

Firstly, it is an unfulfilled childhood game, out of reach back then because of its system requirements. My brother and I played it on and off for a month. I have very vague memories of sleep lost to "another 5 minutes" that lasted until 2 am. So vague that during a recent playthrough it turned out I had gotten quite far back then ... I have long wanted to play through Deus Ex without slowdowns at maximum settings (!!! so that the level of detail is downright shocking !!!), so why not?

Secondly, and seriously: the game runs on Unreal Engine 1. The power this engine offered back in 1998:

- support for the vast majority of 3D features such as trilinear, anisotropic, multitexturing and all kinds of lighting;

- support for 16-channel audio, overlay effects and sound positioning, plus support for hardware audio processing (yes, that was relevant once too ;))

- support for 5 3D rendering APIs !!! You had no idea: besides DirectX there were at least 4 more;

- for owners of 2D-only cards (those without hardware 3D acceleration): a full-fledged software renderer !!!

- all of the above had to be crammed in somehow and made to work;

- from all of the above follows: the engine was successfully sold and adopted.

Out of the corner of my eye

Here is the same legendary Kriva, the 3dfx killer (represented by ASUS V3800PRO). This statement seemed doubtful, therefore, we will begin with it.

It supports AGP4x but, by the way, not sideband addressing. Well, now is the time to check whether that matters or not ;)

... Let's put the rest of the chips like the feature connector and the TV tuner ...

Closer to heart

The card is built on the RIVA TNT2 PRO chip, born in 1999.

TNT stands for TwiN Texel. It was the first single (!) desktop chip able to apply two textures per clock. And this one is already the TNT2.

This card (PRO) is essentially a factory-overclocked version of the standard TNT2 (there was also an ULTRA in the lineup, essentially a factory-overclocked PRO). It runs at 142/166 (core/memory).

By the way, this is that very card on which you had to choose between honest trilinear filtering and multitexturing. Scary already ...

However, what does all this really mean and how to look at it in practice? We’ll deal with this.

Implementation

The drivers built into WinME are very (!) old: even Quake3 will not start with them, complaining that your OpenGL is outdated or simply missing.

The Asus drivers for the TNT2 are no good. Just look at the abundance of options that any schoolkid will surely understand, such as Buffer extension or Transon ... trapson ... damn ...

OMFG, poor people! But there are also ForceWare drivers from 2005 (!), already with D3D9 support. With them, Deus Ex is one wonderful artifact. Now it becomes clear why owners of the card were so eager to swap it for a Voodoo: it seems nobody wrote decent drivers for it in its entire history.

And there is also the famous utility written by our compatriot Unwinder: RivaTuner.

I have only two words to say to the developers: user interface !!!

I must say, a worthy addition to the lineup. It took me probably a couple of days to finally understand how to use the utility. Even so, the abundance of tabs and hidden registry features is still a mystery to me ... In any case, I got the main thing: low-level overclocking of the card.

Application level

NOTE Config:

MB: Gigabyte 6vtxe (Apollo Pro133T chipset)

CPU: Pentium 3-S 1.4Ghz / 512Kb / 133FSB

RAM: 3x256Mb PC133

HDD: healthy UDMA-5 WD with decent 2MB cache and 5400RPM

WinME

Video: Riva TNT2 PRO

IMPORTANT :

All graphics settings in the game go to the maximum !!! Roughly speaking: if something beautiful and/or useful can be enabled, we enable it.

We immediately overclock the card from the stock 142/166 almost to the Ultra level (180/210). Why not? In practice, the overclock gives 5-7 extra frames. Well, that is not bad either. I did not try pushing it higher; I am sure artifacts would start appearing.
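To put the overclock into proportion, here is a minimal sketch of the arithmetic. The clock figures are the ones quoted above; the function name and the baseline frame rate are hypothetical and used only for illustration, and the assumption that fps scales linearly with clocks is an idealization that real games rarely meet.

```python
def overclock_gain(stock_mhz: float, oc_mhz: float) -> float:
    """Return the relative clock increase in percent."""
    return (oc_mhz - stock_mhz) / stock_mhz * 100.0


core_gain = overclock_gain(142, 180)   # core clock, MHz
mem_gain = overclock_gain(166, 210)    # memory clock, MHz
print(f"core: +{core_gain:.1f}%  memory: +{mem_gain:.1f}%")

# Idealized upper bound: fps grows no faster than the smaller clock gain.
baseline_fps = 24.0  # hypothetical stock frame rate, not a measurement
ideal_fps = baseline_fps * (1 + min(core_gain, mem_gain) / 100.0)
print(f"ideal fps after overclock: {ideal_fps:.1f}")
```

Both clocks rise by roughly a quarter, which is in the same ballpark as a gain of 5-7 frames on top of a frame rate in the twenties.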

The in-game resolution for this card was 1280x1024. Anything lower is simply not interesting to me (or was not at the time of writing this paragraph). Anything higher is beyond its reach: even at this resolution it shows fewer than 30 frames almost everywhere.

So, let's go on technology.

API and colorfulness

The card is capable of Direct3D and OpenGL as standard.

What?

... Today, probably, not everyone understands what a graphics API is. Let's put it this way: everyone knows about DirectX. It consists of DirectInput (today XInput), DirectDraw, Direct3D, DirectSound and, these days, something else. All of these are separate APIs, and what each one is for is clear from its name. Direct3D, for example, is something like a software toolkit for drawing 3D using the hardware capabilities of the card itself. This is an important point. Whether a card supports a particular API tells you whether it can draw using the specific features of that API. Performance, of course, differs from API to API, because the implementation differs ...

Also let me remind you that at that time in every second game a 32-bit color was slowly introduced and offered. So there was a choice between 16-fast and 32-slow.

In case anyone is interested in what the difference is.

So, a few points. Let's start with the theory. Take a picture with 16 bits per pixel. So how many bits does each of the three colors get? It tells you nothing of the sort, because a typical game picture can most often be encoded in one of the following ways:

For those who did not follow: a 16-bit picture can be encoded as RGB565 (red, green, blue), or it can be encoded as ARGB1555. A is the alpha channel, which specifies the transparency of the texture. I will leave you to reflect on the purpose of the remaining options.

In practice this means the following: not only does the "thousands of colors" palette (aka High Color, i.e. 16-bit) obviously fail to cover what the human eye can see (2^5 x 2^6 x 2^5 = 65536 color values), you also had to choose which channel to favor depending on the situation.
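The two 16-bit layouts mentioned above can be sketched in a few lines. This is only an illustration of the bit packing, assuming 8-bit input channels; the function names are mine and do not come from any driver or API.

```python
def pack_rgb565(r: int, g: int, b: int) -> int:
    """Pack 8-bit channels into RGB565: 5 bits red, 6 green, 5 blue."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)


def pack_argb1555(a: int, r: int, g: int, b: int) -> int:
    """Pack into ARGB1555: 1 bit of alpha (on/off) and 5 bits per color."""
    alpha_bit = 1 if a >= 128 else 0
    return (alpha_bit << 15) | ((r >> 3) << 10) | ((g >> 3) << 5) | (b >> 3)


def unpack_rgb565(value: int) -> tuple:
    """Expand RGB565 back to 8 bits per channel by replicating the top bits."""
    r = (value >> 11) & 0x1F
    g = (value >> 5) & 0x3F
    b = value & 0x1F
    return ((r << 3) | (r >> 2), (g << 2) | (g >> 4), (b << 3) | (b >> 2))


# Number of distinct colors in RGB565, as computed in the text.
print(2**5 * 2**6 * 2**5)
# A mid-gray loses its low bits on the way through 16-bit storage.
print(unpack_rgb565(pack_rgb565(100, 100, 100)))
```

The round trip through `pack_rgb565` is where banding comes from: neighboring 8-bit shades collapse into the same 5- or 6-bit value.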

Both problems were solved by True Color (32-bit). It is almost like Marx: from each according to ability, to each according to need. By the way, the color itself is actually 24-bit; the other 8 bits go to transparency.

The flip side of the coin? Guess. Right: size. Such textures (speaking of games) weigh twice as much. Just do not think that games offering a choice between 16- and 32-bit textures stored both kinds. Of course, the 32-bit ones were simply converted.
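The size penalty is easy to estimate. Below is a minimal sketch, assuming uncompressed square textures and a full mip chain down to 1x1; the function name and the 256x256 example size are my own choices for illustration.

```python
def texture_bytes(width: int, height: int, bits_per_pixel: int,
                  mipmaps: bool = False) -> int:
    """Return the size in bytes of an uncompressed texture.

    With mipmaps=True, every level down to 1x1 is included.
    """
    total = 0
    w, h = width, height
    while True:
        total += w * h * bits_per_pixel // 8
        if not mipmaps or (w == 1 and h == 1):
            break
        w, h = max(1, w // 2), max(1, h // 2)
    return total


for bpp in (16, 32):
    plain = texture_bytes(256, 256, bpp)
    chained = texture_bytes(256, 256, bpp, mipmaps=True)
    print(f"{bpp}bpp: {plain} bytes, {chained} bytes with mipmaps")
```

Whatever the texture size, the 32-bit version comes out at exactly twice the 16-bit one, and a full mip chain adds about a third on top.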

Speaking of games. The shots are amateurish, but look at the water and the sky.

And here is a bonus for you: the consequences of incorrect alpha channel encoding. Quite a few transparent details are missing, for example the HUD at the top and the soft shadow of the car.

... Okay, the herd will run away ...


Nvidia positioned the card as a powerful 32-bit pipeline. By the way, a competitor named 3dfx had only 16-bit color, and it fell in this battle. Went blind.

Well, let's look at the picture in different APIs, and at the same time try to find the difference between the 16-bit and 32-bit colors;)

As promised, from here on the shots come with monitoring and everything else wherever possible; sizes are original (the yellow captions at the bottom left are mine, I confess ...)

Well, what can I say ...

- Firstly, I want to note that everything in the game actually looks "somewhat" brighter ... These screenshots are honest and were taken by the engine itself. Honestly, this spot was very picturesque; anyway, I tried :D

In general, the game has big problems with brightness. What you see here is brightness turned up to 100%. The default is 50%. Get it? I heard about a fix, but it was somehow too late to install it. And it suits the atmosphere ... In the end, who needs these screenshots anyway? They will do ... Okay, do not worry, there will be more screenshots.

- Secondly, you can notice the difference between 16 and 32bpp in Direct3D: go back to the shots and you will see terrible ripple in the center at d3d_16bpp. That is the rendering quality of the TNT2. Other cards usually do not do this.

In OGL there is no color difference at all. This suggests that the game cheats and always hands over textures in some fixed format. Which one is not important. What is interesting is that the color depth setting plays no role in OGL.

In general, if you download the shots and crank up the brightness in Photoshop, you will see the difference between the APIs. It is there in color and in rendering, but it is rather insignificant. So 3dfx hardly went blind back then ... I really do not think so.

- Thirdly, you saw the monitoring (yellow, top right) only in OGL. Why? Because, from those distant times, only programs unknown to me could monitor in D3Dx, which is what Unreal Engine 1 uses.

Plus, the CPU in WinME is always 100% loaded. Apparently that is its credo. All it takes is to boot Windows and press START!

The memory readout shows who knows what. In general, further monitoring is clearly pointless, but Unreal Engine itself can count frames for us (below, under the inventory, in white at the center).

AGP

As already mentioned somewhere above, the card can do AGP4x. It was an innovation at the time. Does it give anything? We will find out right now, but first I will specifically show the Unreal engine settings for D3D (for OGL there is no option of interest to us at all).

As you can see, I turned on DiME. In our case this option will not affect anything: the crooked TNT2 did not yet use AGP texturing. According to rumors, the AGP aperture was used only as intermediate storage before textures were sent to local memory. This is a known flaw of the chip and is easy to google.

Well, fine, now you can begin to measure potency:

AGP2X

Let me remind you: !!! FPS is counted by the engine and shown at the bottom of the shot !!!

By the way, here you can see the difference between the APIs. Look at the reflection of the ball.

AGP4X

Note that AGP speed did not affect a single parameter !!! In practice:

Well, is it clear that the card does not deliver even 35 frames at AGP2x, and that moving to AGP4x changes nothing at all? I really doubt that a 1.4GHz server CPU, which, sorry, came out three years later, is the bottleneck. There is definitely enough memory. The FSB:DRAM ratio is 1:1, and I am sure the hard drive keeps up too.

I think the chip is simply choking and nothing can be done about it. Marketing will be marketing.
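For reference, the theoretical ceilings behind the AGP2x and AGP4x labels are easy to compute. A minimal sketch, assuming the standard 32-bit AGP bus with a base clock of about 66.67 MHz; the constant and function names are mine.

```python
AGP_BASE_CLOCK_HZ = 66_666_667   # ~66.67 MHz base clock (standard AGP)
AGP_BUS_WIDTH_BYTES = 4          # 32-bit data bus


def agp_peak_mb_per_s(multiplier: int) -> float:
    """Theoretical peak AGP transfer rate in MB/s for 1x, 2x or 4x mode."""
    return AGP_BASE_CLOCK_HZ * AGP_BUS_WIDTH_BYTES * multiplier / 1e6


for mode in (1, 2, 4):
    print(f"AGP{mode}x: about {agp_peak_mb_per_s(mode):.0f} MB/s peak")
```

Doubling the ceiling only helps when the bus is the bottleneck. Identical results in both modes fit the picture drawn above, where the chip itself is the limit and the bus is nowhere near saturated.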

Trilinear

Now for the fun part. Here I can quote myself.

By the way, this is that very card on which you had to choose between honest trilinear filtering and multitexturing. Scary already ...

So, the wonderful UE1 engine lets you check all this right on the spot. But only in D3Dx: in OGL, multitexturing cannot be configured through the game options.

Check what? Well ... Trilinear filtering was first introduced in the TNT2. But the chip could not use it together with multitexturing. And it had to be there, because of the competitors! So it was nominally present, but worked in one of two modes: real trilinear filtering without multitexturing, or the cheaper mip-map dithering ("approximation", as it was incorrectly called then) with multitexturing.

To begin with, I will try to explain what trilinear filtering is in general (perhaps not too successfully) ...

Trilinear filtering is a development of bilinear filtering. It matters only for flat surfaces that recede from the player and sit next to each other. A good example is a floor or wall made of identical tiles laid side by side. As they recede from the player, the tiles should shrink (this is called "mipmapping", by the way), and they do, but the reduction produces a sharp visible transition between tiles, and the uniformity of the overall picture is lost. Trilinear filtering is meant to fix this. Roughly speaking, it averages the pixels at the boundary to a common color value ...
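As a rough illustration of the idea (the textbook definition, not how the TNT2 does it in silicon), trilinear filtering can be sketched as two bilinear samples taken from neighboring mip levels and blended by the fractional level of detail. Square grayscale textures stored as nested lists, and all function names, are assumptions made for this example.

```python
def bilinear(tex, u: float, v: float) -> float:
    """Bilinearly sample a square grayscale texture at (u, v) in [0, 1]."""
    n = len(tex)
    x, y = u * (n - 1), v * (n - 1)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, n - 1), min(y0 + 1, n - 1)
    fx, fy = x - x0, y - y0
    top = tex[y0][x0] * (1 - fx) + tex[y0][x1] * fx
    bottom = tex[y1][x0] * (1 - fx) + tex[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def trilinear(mips, u: float, v: float, lod: float) -> float:
    """Blend bilinear samples from the two mip levels around `lod`."""
    lod = max(0.0, min(lod, len(mips) - 1))
    lower = int(lod)
    upper = min(lower + 1, len(mips) - 1)
    frac = lod - lower
    return bilinear(mips[lower], u, v) * (1 - frac) + bilinear(mips[upper], u, v) * frac


# Two-level mip chain: a dark 2x2 level 0 and a bright 1x1 level 1.
mips = [[[0, 0], [0, 0]], [[100]]]
for lod in (0.0, 0.5, 1.0):
    print(lod, trilinear(mips, 0.5, 0.5, lod))
```

The cost is visible right in the code: every pixel needs two bilinear lookups instead of one, which is why a chip with two texture units has to spend both of them on a single texture.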

So. This is real. And what does mip-map dithering do then?

Explanation of mip-map dithering with an example of voodoo5:

Mip Mapping introduces a new annoying artifact: Where the hardware swaps from one level to another, you usually notice a discontinuity in the texture. You can notice the swap from a sharp to a more blurry level. This swap discontinuity can be hidden using two techniques: trilinear filtering and Mip Map Dithering. Tri-Linear filtering is the best method, but is rather expensive since more texture information has to be fetched (8 texels to be correct also trilinear filtering would require 2X multi texturing if application does single texturing, and 4X multi texturing if application does 2X multi texturing). Mip Map Dithering, on the other hand, has roughly the same cost as normal Mip Mapped rendering but it uses a dither trick to hide the sudden swap from sharp to blur. The basic idea behind Mip Map Dithering is that instead of changing from the high level to the lower level in one go you do it gradually. This is done by adding a random value to the Mip Level selector, as a result you will swap between the level for a while (jumping over the limit and back) rather than one move over the change value. While Mip Map dithering successfully hides the sudden change it also results in more blurry graphics. Some people consider Mip Map dithering cheating, but remember — it does not require you to perform double texture passes for each pixel.

...

www.beyond3d.com/content/articles/57/4
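The dithering trick from the quote fits in a few lines of Python. This is a toy model under my own assumptions: the random value is uniform in [0, 1) and is added to a fractional level of detail before truncation. Real hardware may well use a fixed per-pixel dither pattern instead, and the function names are hypothetical.

```python
import math
import random


def plain_mip_level(lod: float) -> int:
    """Ordinary mip mapping: round to the nearest level (hard switch at x.5)."""
    return int(math.floor(lod + 0.5))


def dithered_mip_level(lod: float, rng: random.Random) -> int:
    """Toy mip-map dithering: add a random value in [0, 1), then truncate."""
    return int(math.floor(lod + rng.random()))


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so repeated runs match
    for lod in (2.0, 2.25, 2.5, 2.75, 3.0):
        picks = [dithered_mip_level(lod, rng) for _ in range(10000)]
        upper_share = picks.count(int(lod) + 1) / len(picks)
        print(f"lod={lod:.2f} plain={plain_mip_level(lod)} "
              f"share of next level={upper_share:.2f}")
```

In this model only one level is sampled per pixel, so the cost stays that of plain mip mapping. Neighbouring pixels near a level boundary pick different levels, and the share of the blurrier level grows gradually as the surface recedes. That mix of sharp and blurry pixels is the grain people complain about.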

Okay, fine, I’ll show you better shots, which means following the path of the mid-90s reviewers. Set 640x480. Now everyone look at the smoothing of the lamps on the corridor ceiling and of the Radioactive sign to the left of the passage!!!

You don't see the difference either? It is there, in fact: you just need to stretch these 640x480 shots across your 20+ inch monitor, and then justice will prevail (any browser can do this today). It is clear that at high resolution small pictures all look the same ...

If anyone did stretch them, they will have noticed that with multitexturing turned on:

- The overall brightness level in the game drops. This is a known bug in the engine.

- The multi-textured trilinear looks a little worse than the bilinear on the screenshots. But in the game, the larger your monitor, the greater the difference. That is a fact.

Back to 1280x1024.

Well, here you can see better how noisy mip-map dithering is. Horror, right? Honestly, if nobody had told me, I would never have noticed. In my opinion, the chip got its "crooked" nickname undeservedly. It’s not the chip’s fault that you were playing at 640x480 in 1998, right?

Although hunting for mip-map dithering flaws with a magnifying glass among the squares at 640x480 seems idiotic to me, for those special occasions when the damned checkerboard has to go, our compatriot Unwinder offers an equally special solution:

No more comments ...

Well, you know ...

Very respectable. 1280x1024x32bpp. 20-30 fps when overclocked. As for me, if the mouse starts to lag in the menu and the inventory in battle is drawn with a half-second delay, that can be waited out. What could not be waited out was, for example, 5-7 minutes in Heroes 3 on a Pentium 150 with a 2D video card, or Quake 2 in a copy room, where the rockets appeared late and the sound lagged by about a second. So 20-30 frames is already quite playable.

Don't like it and don't want to overclock? Then play at 1024x768 and below. Or in 16-bit color.

But still, of course, the Voodoo3 3000 was faster, albeit with only 22 bits. It’s a pity about 3dfx, but it still left us something ...

Acknowledgments

- To the openers of horizons and tearers-off of veils, the VOGONS community. For a heap of unique research and findings!

- A low bow to Christian Ghisler for TC. I have used it since childhood. The number of times I used folder synchronization while writing this does not seem to be finite... Bought it.

- To the old-games.ru administration, for the chance to quickly and freely download 20 games in one go just to check out the file structure and launch each one once. Sorry for the traffic ;)

P.S.

During testing I ran into a monitoring problem. On the forum I come from, I recently outlined briefly how to do monitoring on Win9x/ME. I invite everyone who understands and shares the idea to share their experience, because I have never found such information anywhere (not surprisingly).

... TBC ...

References and greetings

- In such matters everything begins, as a rule, with a wiki.

- Then ixbt always follows ...

- And hwp.

- And for dessert ... for dessert: gamegpu and vogonswiki.